multi-agent-ralph-loop

Multi-Agent AI Orchestration with GLM-4.7 PRIMARY, Claude, Codex, Gemini. Quality gates, memory system, 67 hooks. MiniMax deprecated.

Stars: 79

Multi-Agent Ralph Loop is an orchestration system built as an enhancement to Claude Code. It combines memory-driven planning, parallel memory search, multi-agent coordination, quality gates, and automatic learning from curated GitHub repositories.

README:

"Me fail English? That's unpossible!" - Ralph Wiggum

Smart Memory-Driven Orchestration with parallel memory search, RLM-inspired routing, quality-first validation, checkpoints, agent handoffs, local observability, autonomous self-improvement, Dynamic Contexts, Eval Harness (EDD), Cross-Platform Hooks, Claude Code Task Primitive integration, Plan Lifecycle Management, adversarial-validated hook system, Claude Code Documentation Mirror, GLM-5 Agent Teams, Dual Context Display System, full CLI implementation, Automatic Learning System, Intelligent Command Routing, Swarm Mode Integration, and Hook System v2.84.3 (100% validated, race-condition-free, all JSON bugs fixed).

🆕 v2.84.3: Comprehensive Hook System Bug Fixes - Fixed 10 hooks with invalid JSON format (trailing `\'` pattern), corrected `decision-extractor.sh` invalid early-exit JSON, fixed `local` scope bugs, added URL injection prevention in `curator-scoring.sh`, eliminated TOCTOU race conditions in `checkpoint-manager.sh`, removed all hardcoded paths. 7 commits, ~30 files modified.

v2.84.1: GLM-5 Agent Teams Complete Integration - 7 commands with `--with-glm5` flag (`/orchestrator`, `/loop`, `/adversarial`, `/bugs`, `/parallel`, `/gates`, `/security`), SubagentStop native hook (not TeammateIdle/TaskCompleted, which don't exist), project-scoped storage (`.ralph/`), reasoning capture with thinking mode, Codex upgraded to `gpt-5.3-codex`. 42/42 tests passing.

v2.83.1: 5-Phase Hook System Audit Complete - 100% validation achieved (18/18 tests passing). Eliminated 4 race conditions, fixed 3 JSON malformations, added TypeScript caching (80-95% speedup), multilingual support (EN/ES) for 20+ file extensions, atomic file locking for critical hooks, and 5 new critical hooks.

Status: 5-phase audit complete with 100% validation (target was 95%). All 83 hooks validated, 18/18 tests passing, 4 race conditions eliminated, 3 JSON malformations fixed.

- Race Conditions Eliminated: Fixed 4 race conditions in concurrent file operations
  - `promptify-security.sh`: Added atomic locking for log rotation
  - `quality-gates-v2.sh`: Added file-based caching with atomic writes
  - `orchestrator-auto-learn.sh`: Added `mkdir`-based atomic locking for plan-state.json
  - `checkpoint-smart-save.sh`: Added exclusive file locks with `flock`

- JSON Malformations Fixed: Fixed 3 hooks with invalid JSON output
  - All PreToolUse hooks now use correct `hookSpecificOutput` wrapper
  - PostToolUse hooks validate JSON before output
  - Added JSON validation tests (100% passing)

- Archived Invalid Hook: `post-compact-restore.sh` moved to `.claude/hooks/archived/` (PostCompact event does not exist in Claude Code)

- TypeScript Compilation Cache:
  - File-based caching using `mtime + md5` hash keys
  - 1000-entry LRU cache with automatic cleanup
  - Performance gain: 80-95% reduction in TypeScript compile times
  - Cache location: `~/.ralph/cache/typescript/`

- Multilingual Support:
  - Added Spanish keyword detection to `fast-path-check.sh`
  - Keywords: `arreglar`, `corregir`, `cambio simple`, `cambio menor`, `renombrar`, etc.
  - English + Spanish support improves detection accuracy by ~15%

- Security Hardening:
  - `umask 077` applied to 38 hooks (new files and directories are created with owner-only permissions)
  - Removed insecure `.zshrc` API key extraction pattern
  - Added dependency validation before hook execution

- New Hooks Created:
  - `todo-plan-sync.sh` - Synchronizes todos with plan-state.json progress
  - `orchestrator-auto-learn.sh` - Auto-detects knowledge gaps, triggers curator

- Documentation Updates:
  - Added 24 hooks to COMPLETE_HOOKS_REFERENCE.md (+76 lines)
  - Updated `settings.json.example` to 41 registered hooks
  - Added inline comments to 15 complex hooks

- Modern File Extensions: Added support for 8 new extensions
  - Vue (`.vue`), Svelte (`.svelte`), PHP (`.php`), Ruby (`.rb`)
  - Go (`.go`), Rust (`.rs`), Java (`.java`), Kotlin (`.kt`)
  - Total supported: 20 file extensions

- Rate Limiting: Added GLM-4.7 API rate limiting with exponential backoff
  - Prevents 429 errors during parallel operations
  - Automatic fallback to sequential execution when rate limited

- Structured Logging: All hooks now output structured JSON logs
  - Log location: `~/.ralph/logs/`
  - Rotation: 5 backups maintained with atomic operations
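The `mkdir`-based locking mentioned for `orchestrator-auto-learn.sh` can be illustrated with a minimal sketch. This is not the project's hook code: the function names `acquire_lock`, `release_lock`, and `update_plan_state` are hypothetical, and the retry count and sleep interval are arbitrary assumptions. The idea is that `mkdir` either creates the directory or fails, so only one process can hold the lock at a time.

```bash
# Sketch only: function names and retry settings are assumptions,
# not taken from the project's hooks.

# Try to create the lock directory; mkdir fails if it already exists.
acquire_lock() {
  local lock_dir="$1" max_tries="${2:-50}" tries=0
  while ! mkdir "$lock_dir" 2>/dev/null; do
    tries=$((tries + 1))
    if [ "$tries" -ge "$max_tries" ]; then
      return 1   # give up; the caller decides what to do
    fi
    sleep 0.1
  done
  return 0
}

release_lock() {
  rmdir "$1" 2>/dev/null
}

# Serialize an update to a shared state file.
update_plan_state() {
  local state_file="$1" new_content="$2"
  local lock_dir="${state_file}.lock" tmp="${state_file}.tmp.$$" status=0
  acquire_lock "$lock_dir" || return 1
  # Write to a temp file, then rename, so readers never see a partial file.
  if printf '%s\n' "$new_content" > "$tmp" && mv "$tmp" "$state_file"; then
    status=0
  else
    status=1
  fi
  release_lock "$lock_dir"
  return "$status"
}
```

A hook would call something like `update_plan_state .claude/plan-state.json "$json"`; the path here is only an example. A production version would likely also handle stale locks left behind by crashed processes.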

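The rate-limiting entry above mentions exponential backoff. A minimal sketch of that technique follows, assuming a doubling delay with a cap. The names `backoff_delay` and `retry_with_backoff`, the `BACKOFF_BASE` variable, and the 60-second cap are all hypothetical. The sequential-execution fallback described above is not modeled here.

```bash
# Sketch only: names, base delay, and cap are assumptions.

# Delay in seconds for a given attempt: base * 2^attempt, capped.
backoff_delay() {
  local attempt="$1" base="${2:-1}" cap="${3:-60}"
  local delay=$((base * (1 << attempt)))
  if [ "$delay" -gt "$cap" ]; then
    delay=$cap
  fi
  echo "$delay"
}

# Run a command up to max_attempts times, sleeping between failures.
retry_with_backoff() {
  local max_attempts="$1"
  shift
  local attempt=0
  while :; do
    if "$@"; then
      return 0
    fi
    attempt=$((attempt + 1))
    if [ "$attempt" -ge "$max_attempts" ]; then
      return 1
    fi
    sleep "$(backoff_delay $((attempt - 1)) "${BACKOFF_BASE:-1}")"
  done
}

# Example with a placeholder URL variable; curl --fail exits non-zero
# on HTTP errors such as 429:
# retry_with_backoff 5 curl --fail --silent "$API_URL"
```

With the defaults, the waits between attempts are 1, 2, 4, 8 seconds and so on, never exceeding the cap.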

╔══════════════════════════════════════════════════════════════════╗

║ v2.83.1 VALIDATION RESULTS ║

╠══════════════════════════════════════════════════════════════════╣

║ Test Category │ Total │ Passed │ Failed │ Status ║

║ ─────────────────────────────────────────────────────────────── ║

║ Syntax Validation │ 83 │ 83 │ 0 │ ✅ 100% ║

║ JSON Parseability │ 83 │ 83 │ 0 │ ✅ 100% ║

║ Shebang Presence │ 83 │ 83 │ 0 │ ✅ 100% ║

║ Executable Permissions │ 83 │ 83 │ 0 │ ✅ 100% ║

║ Integration Tests │ 18 │ 18 │ 0 │ ✅ 100% ║

║ Race Condition Tests │ 4 │ 4 │ 0 │ ✅ 100% ║

║ ─────────────────────────────────────────────────────────────── ║

║ TOTAL │ 354 │ 354 │ 0 │ ✅ 100% ║

╚══════════════════════════════════════════════════════════════════╝

| Hook | Event | Purpose |
|---|---|---|
| `orchestrator-auto-learn.sh` | PreToolUse (Task) | Auto-detects knowledge gaps, triggers curator learning |
| `promptify-security.sh` | PreToolUse (Task) | Security validation for prompts with pattern detection |
| `parallel-explore.sh` | PostToolUse (Task) | Launches 5 concurrent exploration tasks |
| `recursive-decompose.sh` | PostToolUse (Task) | Triggers sub-orchestrators for complex tasks |
| `todo-plan-sync.sh` | PostToolUse (TodoWrite) | Syncs todos with plan-state.json progress |

Your personal settings.json has been updated with 5 additional critical hooks:

- Before: 34 hooks registered
- After: 39 hooks registered (+5)
- Location: `~/.claude-sneakpeek/zai/config/settings.json`

New: Multi-Agent Ralph Loop now includes a fully automatic learning system with GitHub repository curation, pattern extraction, and rule validation.

- Automatic Learning Integration
  - `learning-gate.sh` v1.0.0 - Auto-executes /curator when memory is empty
  - `rule-verification.sh` v1.0.0 - Validates rules were applied in code
  - Curator scripts v2.0.0 - 15 critical bugs fixed
  - Complete testing suite: 62/62 tests passing (100%)
- Learning Pipeline
  - Discovery: GitHub API search for quality repositories
  - Scoring: Quality metrics + context relevance
  - Ranking: Top N with max-per-org limits
  - Learning: Pattern extraction from approved repos
- Quality Metrics
  - Total Rules: 1003 procedural rules
  - Utilization Rate: Automatically tracked
  - Application Rate: Measured per domain
  - System Status: Production ready ✅
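The ranking step of the pipeline ("Top N with max-per-org limits") can be sketched as follows. This is an illustration, not the curator's actual script: the function name `rank_repos` and the input format (one `score owner/repo` pair per line) are assumptions made for the example.

```bash
# Sketch only: rank_repos and its input format are assumptions.
# Reads "score owner/repo" lines on stdin and prints the top N repos,
# keeping at most max_per_org repos from any single owner.
rank_repos() {
  local top_n="$1" max_per_org="$2"
  sort -k1,1nr | awk -v top="$top_n" -v max="$max_per_org" '
    {
      split($2, parts, "/")            # parts[1] is the owner/org
      if (count[parts[1]] >= max) next # org already at its limit
      count[parts[1]]++
      print $2
      if (++kept >= top) exit          # stop once N repos are kept
    }'
}
```

For example, piping five scored repositories through `rank_repos 3 2` keeps the three best-scoring ones while allowing no more than two from the same organization.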

# Auto-learning triggers when needed (automatic)

/orchestrator "Implement microservice architecture"

# → learning-gate detects gap, recommends /curator

# Manual learning pipeline

/curator full --type backend --lang typescript

# View learning status

ralph health

Documentation: Learning System Guide | Implementation Report

Overview: Native GLM-5 teammate integration with thinking mode, project-scoped storage, and 7 command integrations.

| Component | Files | Purpose |
|---|---|---|
| Agents | `glm5-coder.md`, `glm5-reviewer.md`, `glm5-tester.md`, `glm5-orchestrator.md` | Agent definitions with thinking mode enabled |
| Scripts | `glm5-teammate.sh`, `glm5-team-coordinator.sh`, `glm5-agent-memory.sh` | Execution and coordination scripts |
| Hook | `glm5-subagent-stop.sh` | Native SubagentStop hook handler |
| Commands | `/glm5` | Single teammate spawn command |
| Skills | `glm5/`, `glm5-parallel/` | Skill invocations |

All 7 major commands now support --with-glm5 flag:

/orchestrator "Implement OAuth2" --with-glm5 # Full orchestration with GLM-5

/loop "Fix TypeScript errors" --with-glm5 # Iterative fixing with GLM-5

/adversarial "Review spec" --with-glm5 # Spec validation with GLM-5

/bugs src/auth/ --with-glm5 # Bug hunting with GLM-5

/parallel src/ --with-glm5 # Parallel review with GLM-5

/gates --with-glm5 # Quality gates with GLM-5

/security src/ --with-glm5 # Security audit with GLM-5

| File | Content |
|---|---|
| `.ralph/teammates/{task_id}/status.json` | Task status and metadata |
| `.ralph/reasoning/{task_id}.txt` | GLM-5 thinking/reasoning process |
| `.ralph/team-status.json` | Team coordination state |

Tests: 42/42 passing (100%)

├── GLM-5 API Connectivity ✅

├── Teammate Script Execution ✅

├── SubagentStop Hook ✅

├── Directory Structure ✅

├── Agent Definitions (4) ✅

├── Hooks Registration ✅

└── Command Integration (7) ✅

Codex CLI upgraded from gpt-5.2-codex to gpt-5.3-codex with adaptive reasoning:

- `--complexity low` → reasoning "medium" (faster)
- `--complexity medium` → reasoning "high" (balanced)
- `--complexity high` → reasoning "xhigh" (deepest)
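The mapping above is small enough to express as a lookup. The sketch below only restates the three documented pairs; the function name `reasoning_for_complexity` is hypothetical and does not claim to reflect how the Codex integration is implemented.

```bash
# Sketch only: restates the documented complexity → reasoning mapping.
reasoning_for_complexity() {
  case "$1" in
    low)    echo "medium" ;;
    medium) echo "high" ;;
    high)   echo "xhigh" ;;
    *)      echo "unknown complexity: $1" >&2; return 1 ;;
  esac
}
```

Unknown values are rejected with a non-zero exit status rather than silently mapped to a default, which is a design choice of this sketch.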

Documentation: Integration Plan | Implementation Summary

Overview: UserPromptSubmit hook that analyzes prompts and intelligently suggests the optimal command based on detected patterns.

- 9 Command Detections
  - `/bug` - Systematic debugging (90% confidence)
  - `/edd` - Feature definition with eval specs (85% confidence)
  - `/orchestrator` - Complex task orchestration (85% confidence)
  - `/loop` - Iterative execution with validation (85% confidence)
  - `/adversarial` - Specification refinement (85% confidence)
  - `/gates` - Quality gate validation (85% confidence)
  - `/security` - Security vulnerability audit (88% confidence)
  - `/parallel` - Comprehensive parallel review (85% confidence)
  - `/audit` - Quality audit and health check (82% confidence)
- Multilingual Support
  - English: "I have a bug in the login" → `/bug`
  - Spanish: "Tengo un bug en el login" → `/bug`
- Non-Intrusive Integration
  - Uses `additionalContext` instead of interruptive prompts
  - Confidence-based filtering (≥ 80% threshold)
  - Always continues workflow (never blocks)
- Security Features
  - Input validation: 100KB max size (SEC-111)
  - Sensitive data redaction: Passwords, tokens, API keys (SEC-110)
  - Error trap: Guaranteed JSON output on errors
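The confidence-based filtering and `additionalContext` output described above might look roughly like this. It is a sketch under assumptions: the function name `suggest_command`, the message text, and the empty-object output below the threshold are illustrative, and the JSON shape follows the `hookSpecificOutput` wrapper used by Claude Code UserPromptSubmit hooks rather than this project's actual router.

```bash
# Sketch only: suggest_command and its message text are assumptions.
# Emits a suggestion as additionalContext when confidence meets the
# threshold; otherwise emits an empty JSON object. Always returns 0 so
# the workflow is never blocked.
suggest_command() {
  local command="$1" confidence="$2" threshold="${3:-80}"
  if [ "$confidence" -ge "$threshold" ]; then
    printf '{"hookSpecificOutput":{"hookEventName":"UserPromptSubmit","additionalContext":"Consider using %s"}}\n' "$command"
  else
    printf '{}\n'
  fi
  return 0
}
```

Calling `suggest_command /bug 90` prints a suggestion, while `suggest_command /audit 70` prints `{}` because 70 is below the default threshold of 80. The sketch does no JSON escaping, so it is only safe for simple command names.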

# The hook automatically suggests commands based on your prompt

# You type:

"Tengo un bug en el login que no funciona"

# Hook responds:

💡 **Sugerencia**: Detecté una tarea de debugging. Considera usar `/bug`

para debugging sistemático: analizar → reproducir → localizar → corregir.

# You type:

"Implementa autenticación OAuth y luego agrega refresh tokens"

# Hook responds:

💡 **Sugerencia**: Detecté una tarea compleja. Considera usar `/orchestrator`

para workflow completo: evaluar → clarificar → clasificar → planear → ejecutar.

# Enable/disable router

echo '{"enabled": true}' > ~/.ralph/config/command-router.json

# Adjust confidence threshold (default: 80%)

echo '{"confidence_threshold": 70}' > ~/.ralph/config/command-router.json

# View logs

tail -f ~/.ralph/logs/command-router.log

╔══════════════════════════════════════════════════════════════╗

║ COMMAND ROUTER VALIDATION - TEST RESULTS ║

╠══════════════════════════════════════════════════════════════╣

║ Test Type | Total | Passed | Failed | Percentage ║

║ ───────────────────────────────────────────────────────────── ║

║ Intent Detection | 9 | 7 | 2 | 78% ║

║ Edge Cases | 3 | 3 | 0 | 100% ║

║ Security Tests | 3 | 3 | 0 | 100% ║

║ JSON Validation | 10 | 10 | 0 | 100% ║

║ ───────────────────────────────────────────────────────────── ║

║ TOTAL | 25 | 23 | 2 | 92% ║

╚══════════════════════════════════════════════════════════════╝

Documentation: Command Router Guide | Implementation Summary | Test Suite

Overview: Integration of GLM-5's Agentic Engineering capabilities with Claude Code's Agent Teams system using native hooks.

- Native Hooks Integration (v2.1.33+)
  - `TeammateIdle` - Fires when teammate is about to go idle
  - `TaskCompleted` - Fires when task is marked complete
  - Both hooks can block/allow with exit codes
- Project-Scoped Storage
  - All teammate data in `.ralph/` directory
  - Isolated per project, portable with git clone
- GLM-5 Thinking Mode
  - `reasoning_content` captured separately
  - Stored in `.ralph/reasoning/{task_id}.txt`
- Agent Types
  - `glm5-coder` - Implementation & refactoring
  - `glm5-reviewer` - Code review & quality
  - `glm5-tester` - Test generation
  - `glm5-orchestrator` - Multi-agent coordination

# Initialize team

.claude/scripts/glm5-init-team.sh "my-team"

# Spawn teammate

.claude/scripts/glm5-teammate.sh coder "Implement auth" "auth-001"

# Check status

cat .ralph/team-status.json

# View logs

tail -f .ralph/logs/teammates.log

.ralph/

├── teammates/ # Teammate status

├── reasoning/ # GLM-5 reasoning

├── agent-memory/ # Agent memory

├── logs/ # Activity logs

└── team-status.json

Documentation: Integration Plan | Implementation Summary | Test Suite

Overview: UserPromptSubmit hook that analyzes prompts and suggests optimal commands.

- 9 Command Patterns: Bug detection, feature definition, orchestration, iteration, specification refinement, quality gates, security audit, parallel review, quality audit

- Multilingual: English + Spanish support

- Confidence-Based: Only suggests when ≥ 80% confidence

- Non-Intrusive: Uses `additionalContext`, never blocks workflow
- Security: Input validation, sensitive data redaction, error trap

Performance: 7/9 core tests passing (78%), 23/25 total tests passing (92%)

Overview: Complete automatic learning integration with GitHub repository curation and rule validation.

- Learning Gate (`learning-gate.sh`)
  - Detects when procedural memory is critically empty
  - Recommends `/curator` execution for specific domains
  - Blocks high complexity tasks (≥7) without rules
  - Auto-executes based on task complexity
- Rule Verification (`rule-verification.sh`)
  - Analyzes generated code for rule patterns
  - Updates rule metrics (applied_count, skipped_count)
  - Calculates utilization rate
  - Identifies "ghost rules" (injected but not applied)
- Curator Scripts (v2.0.0)
  - 15 critical bugs fixed across 3 scripts
  - Error handling in while loops
  - Temp file cleanup with trap
  - Logging redirected to stderr
  - JSON output validation
  - Rate limiting with exponential backoff
- Testing Suite
  - Unit Tests: 13/13 passed (100%)
  - Integration Tests: 13/13 passed (100%)
  - Functional Tests: 4/4 passed (100%)
  - End-to-End Tests: 32/32 passed (100%)
  - TOTAL: 62/62 tests passed (100%)
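The Learning Gate behavior listed above can be sketched as a small decision function. This is an interpretation, not the contents of `learning-gate.sh`: the function name `learning_gate_decision` and the three outcome labels are hypothetical, the blocking threshold of 7 comes from the list above, and the lower threshold of 3 is borrowed from the `min_complexity_for_gate` setting shown in the memory configuration elsewhere in this README.

```bash
# Sketch only: outcome labels and the exact decision order are assumptions.
# Arguments: number of procedural rules available, task complexity (1-10).
learning_gate_decision() {
  local rule_count="$1" complexity="$2"
  if [ "$rule_count" -gt 0 ]; then
    echo "allow"               # rules exist, nothing to gate
  elif [ "$complexity" -ge 7 ]; then
    echo "block"               # high complexity with no rules
  elif [ "$complexity" -ge 3 ]; then
    echo "recommend-curator"   # suggest running /curator first
  else
    echo "allow"               # trivial task, let it through
  fi
}
```

Under these assumptions, a complexity-8 task with no rules is blocked, a complexity-4 task with no rules gets a `/curator` recommendation, and any task proceeds once rules are present.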

╔════════════════════════════════════════════════════════════════╗

║ LEARNING SYSTEM v2.81.2 - SYSTEM STATUS ║

╠════════════════════════════════════════════════════════════════╣

║ Component Status Quality Integration Tests ║

║ ───────────────────────────────────────────────────────────── ║

║ Curator ✅ 100% ✅ 95% ✅ 90% ✅ 100% ║

║ Repository Learner ✅ 100% ✅ 85% ✅ 80% ✅ 100% ║

║ Learning Gate ✅ 100% ✅ 95% ✅ 100% ✅ 100% ║

║ Rule Verification ✅ 100% ✅ 95% ✅ 100% ✅ 100% ║

║ ───────────────────────────────────────────────────────────── ║

║ OVERALL ✅ 100% ✅ 91% ✅ 89% ✅ 100% ║

╚════════════════════════════════════════════════════════════════╝

Overview: Complete swarm mode integration across 7 core commands with parallel multi-agent execution, validated by external audits.

- 7 Commands with Swarm Mode
  - `/orchestrator` - 4 agents (Analysis, planning, implementation)
  - `/loop` - 4 agents (Execute, validate, quality check)
  - `/edd` - 4 agents (Capability, behavior, non-functional checks)
  - `/bug` - 4 agents (Analyze, reproduce, locate, fix)
  - `/adversarial` - 4 agents (Challenge, identify gaps, validate)
  - `/parallel` - 7 agents (6 review aspects + coordination)
  - `/gates` - 6 agents (5 language groups + coordination)
- Performance Improvements
  - 3x-6x speedup on parallel commands
  - Background execution (non-blocking)
  - Inter-agent messaging via built-in mailbox
  - Unified task list coordination
- Auto-Swarm Hook
  - `auto-background-swarm.sh` - Automatically detects Task tool usage
  - Suggests swarm mode parameters for supported commands
  - Registered in PostToolUse hooks
- Validation Results
  - Integration Tests: 27/27 tests passing (100%)
  - Adversarial Audit: ✅ PASS (Strong defense)
  - Codex Review: ✅ PASS (9.3/10 Excellent)
  - Gemini Validation: ✅ PASS (9.8/10 Outstanding)

╔════════════════════════════════════════════════════════════════╗

║ SWARM MODE INTEGRATION v2.81.1 - STATUS ║

╠════════════════════════════════════════════════════════════════╣

║ Phase Status Tests Audits Score ║

║ ───────────────────────────────────────────────────────────── ║

║ Phase 1 (Core) ✅ 100% ✅ 9/9 ✅ PASS N/A ║

║ Phase 2 (Secondary) ✅ 100% ✅ 6/6 ✅ PASS N/A ║

║ Phase 3 (Hooks) ✅ 100% ✅ 4/4 ✅ PASS N/A ║

║ Phase 4 (Docs) ✅ 100% ✅ 5/5 ✅ PASS N/A ║

║ Phase 5 (Validation)✅ 100% ✅ 3/3 ✅ 3/3 PASS 9.5/10 ║

║ ───────────────────────────────────────────────────────────── ║

║ OVERALL ✅ 100% ✅ 27/27 ✅ 3/3 PASS 9.5/10 ║

╚════════════════════════════════════════════════════════════════╝

Swarm mode requires ONE configuration:

{

"permissions": {

"defaultMode": "delegate"

}

}

Note: Environment variables (`CLAUDE_CODE_AGENT_*`) are set dynamically by Claude Code when spawning teammates.

# Swarm mode is enabled by default

/orchestrator "Implement distributed system"

/loop "fix all type errors"

/edd "Define feature requirements"

/bug "Authentication fails"

/adversarial "Design rate limiter"

/parallel "src/auth/"

/gates

# All commands spawn teammates automatically

# 3x-6x faster execution on parallel tasks

Documentation: Swarm Mode Usage Guide | Integration Plan | Consolidated Audits

Overview: Automatic prompt optimization system that detects vague user prompts and enhances them using Ralph's context and memory.

- Vague Prompt Detection (`promptify-auto-detect.sh`)
  - Clarity scoring algorithm (0-100% based on 7 factors)
  - Vagueness detection (vague words, pronouns, missing structure)
  - Configurable threshold (default: 50%)
  - Non-intrusive suggestions via `additionalContext`
- Security Hardening (`promptify-security.sh`)
  - Credential redaction (SEC-110): Passwords, tokens, emails, API keys
  - Clipboard consent management (SEC-120)
  - Agent execution timeout (SEC-130)
  - Audit logging system (SEC-140)
- Ralph Integration (Phase 3 - 4 components)
  - `ralph-context-injector.sh`: Inject Ralph active context into prompts
  - `ralph-memory-integration.sh`: Apply procedural memory patterns
  - `ralph-quality-gates.sh`: Validate through quality gates
  - `ralph-integration.sh`: Main coordinator combining all components
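Credential redaction (SEC-110) is pattern-based according to the audit summary further down. A minimal sketch of that idea with `sed` follows. The two patterns and the function name `redact_credentials` are illustrative assumptions and cover far fewer cases than the "10+ patterns" the project reports. Matching is case-sensitive here to stay portable across GNU and BSD `sed`.

```bash
# Sketch only: patterns and function name are assumptions, not the
# project's redaction rules. Reads text on stdin, writes redacted text.
redact_credentials() {
  sed -E \
    -e 's/((password|passwd|token|api[_-]?key|secret)[[:space:]]*[=:][[:space:]]*)[^[:space:]]+/\1[REDACTED]/g' \
    -e 's/[[:alnum:]._%+-]+@[[:alnum:].-]+\.[[:alpha:]]{2,}/[REDACTED_EMAIL]/g'
}
```

A hook could pipe the prompt text through this filter before writing it to a log, for example `printf '%s\n' "$prompt" | redact_credentials`, where `$prompt` is a placeholder variable.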

User Prompt (vague/unclear)

↓

┌─────────────────────────────────────────┐

│ UserPromptSubmit Event │

│ 1. command-router.sh (existing) │

│ - Detects command intent │

│ - Confidence < 50% = "unclear" │

└─────────────────────────────────────────┘

↓ (if confidence < 50%)

┌─────────────────────────────────────────┐

│ promptify-auto-detect.sh │

│ - Vagueness detection │

│ - Clarity scoring (0-100%) │

│ - Suggests promptify if needed │

└─────────────────────────────────────────┘

↓

Optimized Prompt (with Ralph context)

↓

┌─────────────────────────────────────────┐

│ Ralph Workflow (resumes) │

│ - CLARIFY (with better prompt) │

│ - CLASSIFY (higher confidence) │

│ - PLAN → EXECUTE → VALIDATE │

└─────────────────────────────────────────┘

# ~/.ralph/config/promptify.json

{

"enabled": true,

"vagueness_threshold": 50,

"security": {

"redact_credentials": true,

"require_clipboard_consent": true,

"audit_log_enabled": true

},

"integration": {

"coordinate_with_command_router": true,

"inject_ralph_context": true,

"use_ralph_memory": true,

"validate_with_quality_gates": true

}

}

╔══════════════════════════════════════════════════════════════╗

║ PROMPTIFY INTEGRATION - TEST RESULTS ║

╠══════════════════════════════════════════════════════════════╣

║ Test Category | Tests | Passed | Status ║

║ ──────────────────────────────────────────────────────────── ║

║ Credential Redaction | 4 | 4 | ✅ 100% ║

║ Clarity Scoring | 3 | 3 | ✅ 100% ║

║ Hook Integration | 5 | 5 | ✅ 100% ║

║ Security Functions | 3 | 3 | ✅ 100% ║

║ File Structure | 1 | 1 | ✅ 100% ║

║ Ralph Context Injector | 5 | 5 | ✅ 100% ║

║ Ralph Memory Integration | 5 | 5 | ✅ 100% ║

║ Ralph Quality Gates | 5 | 5 | ✅ 100% ║

║ Ralph Integration Main | 6 | 6 | ✅ 100% ║

║ Promptify Integration | 3 | 3 | ✅ 100% ║

║ ──────────────────────────────────────────────────────────── ║

║ TOTAL | 40 | 40 | ✅ 100% ║

╚══════════════════════════════════════════════════════════════╝

Overall Risk: MEDIUM → LOW (after fixes)

| Finding | Severity | Status |

|---|---|---|

| Unsafe eval usage | MEDIUM | ✅ FIXED (function removed) |

| Input size truncation bug | MEDIUM | ✅ FIXED (syntax verified) |

| Credential redaction | - | ✅ EXCELLENT (10+ patterns) |

| Prompt injection detection | - | ✅ GOOD (pattern-based) |

Documentation: Promptify Integration Guide | Implementation Complete | Security Audit

# Run complete test suite

./tests/promptify-integration/run-all-complete-tests.sh

# Run Phase 3 tests only

./tests/promptify-integration/test-phase3-ralph-integration.sh

# View Ralph integration in action

.claude/hooks/ralph-integration.sh

# → Shows context injection, memory patterns, quality gates

- Overview
- Key Features
- Tech Stack
- Prerequisites
- Getting Started
- Installation
- Configuration
- Architecture
- Memory System
- Learning System (v2.81.2)
- Hooks System
- Agent System
- Commands Reference
- Testing
- Development
- Troubleshooting
- Contributing
- License
- Changelog

Multi-Agent Ralph Loop is a sophisticated orchestration system that combines smart memory-driven planning, parallel memory search, multi-agent coordination, and automatic learning from quality repositories.

Built as an advanced enhancement to Claude Code, it provides:

- Intelligent Orchestration: RLM-inspired routing with complexity classification

- Memory System: Parallel search across semantic, episodic, and procedural memory

- Multi-Agent Coordination: Native swarm mode with specialized teammates

- Automatic Learning: Curates GitHub repos, extracts patterns, applies rules automatically

- Quality Gates: Adversarial validation with 3-fix rule

- Checkpoints: Time travel for orchestration state

- Dynamic Contexts: dev, review, research, debug modes

Ralph acts as an intelligent project manager that:

- Analyzes your request using 3-dimensional classification (complexity, information density, context requirement)

- Plans the implementation with architectural considerations

- Routes to optimal model (GLM-4.7 PRIMARY for all tasks)

- Validates quality using adversarial methods

- Learns from quality repositories automatically

- Coordinates multiple agents for complex tasks

- Remembers everything across sessions

- Software Engineers: Building complex systems with proper architecture

- Teams: Coordinating multi-step development workflows

- Researchers: Analyzing codebases and extracting patterns

- Architects: Validating design decisions and patterns

- RLM-Inspired Routing: 3-dimensional classification (complexity 1-10, information density, context requirement)

- Smart Memory Search: Parallel search across 4 memory systems

- Plan Lifecycle Management: Create, archive, restore plans

- Checkpoints: Save/restore orchestration state (time travel)

- Agent Handoffs: Explicit agent-to-agent transfers

- Semantic Memory: Facts and preferences (persistent)

- Episodic Memory: Experiences with 30-day TTL

- Procedural Memory: Learned behaviors with confidence scores

- Claude-Mem Integration: Primary memory backend (MCP plugin)

- 1003+ Procedural Rules: Auto-extracted from quality repos

- Auto-Curation: GitHub repository discovery via API

- Quality Scoring: Metrics + context relevance scoring

- Pattern Extraction: AST-based pattern extraction

- Rule Validation: Automatic verification of rule application

- Metrics Tracking: Utilization rate, application rate

- Swarm Mode: Native Claude Code 2.1.22+ integration

- Teammate Spawning: Automatic spawning of specialized agents

- Inter-Agent Messaging: Direct communication between agents

- Shared Task List: Collaborative task management

- Plan Approval: Leader approves/rejects teammate plans

- 3-Fix Rule: CORRECTNESS, QUALITY, CONSISTENCY validation

- Adversarial Validation: Dual-model validation for high complexity

- Quality Gates Parallel: 4 parallel quality gates (90s timeout)

- Security Scanning: semgrep + gitleaks integration

- Type Safety: TypeScript validation where applicable

- Statusline: Dual context display (cumulative + current window)

- Health Checks: System health monitoring with `ralph health`
- Traceability: Event logs and session history

- Metrics Dashboard: Learning metrics and rule statistics

- Language: Bash (hooks), TypeScript (some tools), Python (curator scripts)

- Provider: Zai (GLM-4.7)

- Claude Code: v2.1.22+ (required for Task primitive)

- Configuration: JSON-based settings in `~/.claude-sneakpeek/zai/config/`

- Primary: claude-mem MCP plugin (semantic + episodic)

- Secondary: Local JSON files for procedural rules

- Backup: Git-based plan state tracking

- Bash: Native bash testing with assert functions

- Coverage: Manual tracking (no automated coverage tools yet)

- Types: Unit, Integration, Functional, End-to-End

- jq: JSON processing and validation

- git: Version control and diff analysis

- curl: HTTP requests (GitHub API)

- gh: GitHub CLI (optional, for enhanced access)

- Claude Code: v2.1.16+ (for Task primitive support)

- GLM-4.7 API Access: Configured in Zai environment

- Bash: Version 4.0+ (for hooks and scripts)

- jq: Version 1.6+ (for JSON processing)

- git: Version 2.0+ (for version control)

- curl: Version 7.0+ (for API calls)

- GitHub CLI: Enhanced GitHub API access (`gh`)
- Zai CLI: Web search and vision capabilities (`npx zai-cli`)

- OS: macOS, Linux, or WSL2 on Windows

- Memory: 8GB RAM minimum (16GB recommended for complex tasks)

- Disk: 500MB for Ralph system + 10MB for session files

- Network: Internet connection for GLM-4.7 API calls

- Clone the repository:

git clone https://github.com/alfredolopez80/multi-agent-ralph-loop.git

cd multi-agent-ralph-loop

- Verify installation:

# Check Ralph directory exists

ls -la ~/.ralph/

# Check hooks are registered

grep "learning-gate" ~/.claude-sneakpeek/zai/config/settings.json

# Check system health

ralph health --compact

- Run orchestration:

# Simple task

/orchestrator "Create a REST API endpoint"

# Complex task with swarm mode

/orchestrator "Implement distributed caching system" --launch-swarm --teammate-count 3

On first use, Ralph will:

- Auto-migrate plan-state to v2.51+ schema

- Initialize session ledger and context tracking

- Create snapshot of current state

- Load hooks and commands

The repository is designed to work with Claude Code. No separate installation required.

Hook Configuration:

- Hooks are registered in `~/.claude-sneakpeek/zai/config/settings.json`
- Hooks live in `.claude/hooks/` (project-local)
- Additional hooks in `~/.claude/hooks/` (global)

The Learning System v2.81.2 is automatically installed and configured:

# Verify Learning System components

ls -la ~/.ralph/curator/scripts/

ls -la ~/.claude/hooks/learning-*.sh

ls -la ~/.ralph/procedural/rules.json

Expected output:

curator-scoring.sh (v2.0.0)

curator-discovery.sh (v2.0.0)

curator-rank.sh (v2.0.0)

learning-gate.sh (v1.0.0)

rule-verification.sh (v1.0.0)

If hooks need to be reinstalled:

# Copy hooks to global directory

cp .claude/hooks/learning-gate.sh ~/.claude/hooks/

cp .claude/hooks/rule-verification.sh ~/.claude/hooks/

# Make executable

chmod +x ~/.claude/hooks/learning-gate.sh ~/.claude/hooks/rule-verification.sh

Location: ~/.claude-sneakpeek/zai/config/settings.json

Key Settings:

{

"model": "glm-4.7",

"defaultMode": "delegate",

"env": {

"CLAUDE_CODE_AGENT_ID": "claude-orchestrator",

"CLAUDE_CODE_AGENT_NAME": "Orchestrator",

"CLAUDE_CODE_TEAM_NAME": "multi-agent-ralph-loop"

},

"hooks": {

"PreToolUse": [

{

"matcher": "Task",

"hooks": [

{ "command": "/path/to/lsa-pre-step.sh" },

{ "command": "/path/to/procedural-inject.sh" },

{ "command": "/path/to/learning-gate.sh" }

]

}

],

"PostToolUse": [

{

"matcher": "TaskUpdate",

"hooks": [

{ "command": "/path/to/rule-verification.sh" }

]

}

]

}

}

Location: ~/.ralph/config/memory-config.json

{

"procedural": {

"inject_to_prompts": true,

"min_confidence": 0.7,

"max_rules_per_injection": 5

},

"learning": {

"auto_execute": true,

"min_complexity_for_gate": 3,

"block_critical_without_rules": true

}

}

Location: ~/.ralph/curator/config.json

{

"github": {

"api_token": "YOUR_TOKEN_HERE",

"max_results_per_page": 100,

"rate_limit_delay": 1.0

},

"scoring": {

"min_quality_score": 50,

"context_boost": 10

},

"ranking": {

"default_top_n": 50,

"max_per_org": 3

}

}

┌─────────────────────────────────────────────────────────────────┐

│ MULTI-AGENT RALPH ARCHITECTURE │

├─────────────────────────────────────────────────────────────────┤

│ │

│ ┌────────────────┐ ┌──────────────┐ ┌──────────────┐ │

│ │ User Request │───▶│ Claude Code │───▶│ Claude │ │

│ └────────────────┘ │ v2.1.22+ │ │ (GLM-4.7) │ │

│ └──────────────┘ └──────┬───────┘ │

│ │ │ │

│ ┌────▼─────┐ │ │

│ │ Settings │ │ │

│ │ .json │ │ │

│ └────┬─────┘ │ │

│ │ │ │

│ ┌──────────────────────┼───────────────────────────┐ │

│ │ │ │ │

│ │ ┌───────▼────────────────┐ │ │

│ │ │ │ │ │

│ │ ▼ ▼ │ │

│ │ ┌──────────────────────────────────────┐ │ │

│ │ │ HOOK SYSTEM (67 hooks) │ │ │

│ │ │ │ │ │

│ │ │ SessionStart │ │ │

│ │ │ - session-ledger.sh │ │ │

│ │ │ - auto-migrate-plan-state │ │ │

│ │ │ │ │ │

│ │ │ PreToolUse │ │ │

│ │ │ - lsa-pre-step.sh │ │ │

│ │ │ - procedural-inject.sh │ │ │

│ │ │ - learning-gate.sh ⭐ │ │ │

│ │ │ │ │ │

│ │ │ PostToolUse │ │ │

│ │ │ - sec-context-validate.sh │ │ │

│ │ │ - quality-gates-v2.sh │ │ │ │

│ │ │ - rule-verification.sh ⭐ │ │ │

│ │ │ │ │ │

│ │ │ Stop │ │ │

│ │ │ - reflection-engine.sh │ │ │

│ │ │ - orchestrator-report.sh │ │ │

│ │ └───────────────────────────────────┘ │ │

│ │ │ │

│ └──────────────────┬───────────────────────────┘ │

│ │ │ │

│ ┌────────────────▼─────────────────────────────┐ │ │

│ │ │ │ │

│ │ ┌────────────────────────────────┐ │ │ │

│ │ │ MEMORY SYSTEM │ │ │ │

│ │ │ │ │ │ │

│ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ Semantic Memory │ │ │ │ │

│ ┌───┴───────┐ │ │ (claude-mem MCP) │ │ │ │ │

│ │claude-mem │ │ └────────────────────────┘ │ │ │ │

│ │ MCP │ │ │ │ │ │

│ │ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ │ Episodic Memory │ │ │ │ │

│ │ │ │ │ (30-day TTL) │ │ │ │ │

│ │ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │ │

│ │ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ │ Procedural Memory │ │ │ │ │

│ │ │ │ │ (1003+ rules) │ │ │ │ │

│ │ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │ │

│ │ │ └──────────────────────────────┘ │ │ │

│ │ │ │ │ │

│ └──────────┴───────────────────────────────────┘ │ │

│ │ │

│ ┌────────────────────────────────────────────────┴───┐ │ │

│ │ │ │ │

│ │ ┌────────────────────────────────┐ │ │ │

│ │ │ LEARNING SYSTEM (v2.81.2) │ │ │ │

│ │ │ │ │ │ │

│ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ Curator (GitHub API) │ │ │ │ │

│ │ │ │ - Discovery │ │ │ │ │

│ │ │ │ │ - Scoring │ │ │ │ │

│ │ │ │ │ - Ranking │ │ │ │ │

│ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │

│ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ Repository Learner │ │ │ │ │

│ │ │ │ - Pattern extraction │ │ │ │ │ │

│ │ │ │ - Rule generation │ │ │ │ │ │

│ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │

│ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ Learning Gate │ │ │ │ │

│ │ │ │ - Detects gaps │ │ │ │ │

│ │ │ │ - Recommends /curator │ │ │ │ │

│ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │

│ │ │ ┌────────────────────────┐ │ │ │ │

│ │ │ │ Rule Verification │ │ │ │ │

│ │ │ │ - Validates application │ │ │ │ │

│ │ │ │ - Updates metrics │ │ │ │ │

│ │ │ └────────────────────────┘ │ │ │ │

│ │ │ │ │ │ │

│ │ └──────────────────────────────────┘ │ │

│ │ │

└────────────────────────────────────────────────────────┴────────┘

multi-agent-ralph-loop/

├── .claude/ # Claude Code workspace

│ ├── hooks/ # Hook scripts (67 registrations)

│ │ ├── learning-gate.sh # ⭐ Auto-learning trigger

│ │ ├── rule-verification.sh # ⭐ Rule validation

│ │ ├── procedural-inject.sh # Procedural memory injection

│ │ └── ... (64 more hooks)

│ ├── commands/ # Custom commands (/orchestrator, /loop, etc.)

│ ├── scripts/ # Utility scripts

│ ├── schemas/ # JSON schemas for validation

│ └── tasks/ # Task primitive storage

├── docs/ # All development documentation

│ ├── architecture/ # Architecture diagrams

│ ├── analysis/ # Analysis reports

│ ├── implementation/ # Implementation docs

│ └── guides/ # User guides

├── tests/ # Test suites at project root

│ ├── unit/ # Unit tests

│ ├── integration/ # Integration tests

│ ├── functional/ # Functional tests

│ └── end-to-end/ # End-to-end tests

├── .github/ # GitHub-specific files

│ └── workflows/ # CI/CD workflows (if any)

└── README.md # This file

Semantic Memory (via claude-mem MCP)

- Purpose: Persistent facts and knowledge

- Storage: claude-mem backend (MCP plugin)

- TTL: Never expires

- Example: "The authentication system uses JWT tokens with 24-hour expiration"

Episodic Memory

- Purpose: Experiences and observations

- Storage: ~/.ralph/episodes/

- TTL: 30 days

- Example: "Session on 2026-01-29 implemented OAuth2 with issues in token refresh"

Procedural Memory

- Purpose: Learned behaviors and patterns

- Storage: ~/.ralph/procedural/rules.json

- TTL: Never expires

- Example: Error handling pattern with try-catch and exponential backoff
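The 30-day TTL on episodic memory could be enforced with a small pruning helper. This is a minimal sketch, not the project's actual implementation: it assumes episodes are stored as plain files under ~/.ralph/episodes/ and that file modification time is a reasonable proxy for episode age. The function name `prune_episodes` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper (not part of the ralph CLI): delete episode files
# whose modification time is more than TTL days in the past.
# Assumption: episodes are regular files and mtime reflects episode age.
prune_episodes() {
  local dir="${1:-$HOME/.ralph/episodes}"
  local ttl_days="${2:-30}"

  # Nothing to prune if the directory does not exist yet.
  [ -d "$dir" ] || return 0

  # find rounds file age down to whole days, so "+30" matches files
  # that are at least 31 days old. Deleted paths are printed.
  find "$dir" -type f -mtime +"$ttl_days" -print -delete
}
```

Called without arguments, the helper would target ~/.ralph/episodes/ with a 30-day cutoff; passing a directory and a day count overrides both defaults.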

Parallel Search across 4 systems:

ralph memory-search "authentication patterns"

# Searches claude-mem semantic, memvid episodes, handoffs, ledgers

Results include:

- Observation ID

- Timestamp

- Type (semantic, episodic, etc.)

- Relevance score

The Learning System automatically improves code quality by:

- Discovering quality repositories from GitHub

- Extracting best practices and patterns

- Generating procedural rules with confidence scores

- Applying rules automatically during development

- Validating that rules were actually used

Repo Curator

Three-stage pipeline for repository curation:

- Discovery (curator-discovery.sh)
  - GitHub API search with filters
  - Type: backend, frontend, fullstack, library, framework
  - Language: TypeScript, Python, JavaScript, Go, Rust
  - Results: Up to 1000 repos per search
- Scoring (curator-scoring.sh)
  - Quality metrics: stars, forks, recency
  - Context relevance: matches your current task
  - Combined score: weighted average
- Ranking (curator-rank.sh)
  - Top N repositories (configurable, default: 50)
  - Max-per-org limits (default: 3 per org)
  - Sort by: quality, context, combined
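The scoring and ranking stages above can be illustrated with a short sketch. It is not the code in curator-scoring.sh or curator-rank.sh: the function names, the 0.6/0.4 weights, and the "score org/repo" input format are all assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: combined score as a weighted average of a quality
# score and a context-relevance score. The default weights are assumed.
combined_score() {
  local quality="$1" context="$2" wq="${3:-0.6}" wc="${4:-0.4}"
  awk -v q="$quality" -v c="$context" -v wq="$wq" -v wc="$wc" \
    'BEGIN { printf "%.2f\n", (wq * q + wc * c) / (wq + wc) }'
}

# Hypothetical helper: read "score org/repo" lines on stdin, sort by score
# (highest first), and print at most top_n repos with at most max_per_org
# repos from any single organization.
rank_repos() {
  local top_n="$1" max_per_org="$2"
  LC_ALL=C sort -k1,1nr | awk -v n="$top_n" -v m="$max_per_org" '
    {
      split($2, parts, "/")
      org = parts[1]
      if (kept + 0 < n + 0 && seen[org] + 0 < m + 0) {
        seen[org]++
        kept++
        print $2
      }
    }'
}
```

The per-organization cap keeps a single prolific organization from filling the whole shortlist, which is the stated purpose of the max-per-org limit.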

Repository Learner

Extracts patterns from approved repositories:

- Clone/acquire repository

- AST-based pattern extraction

- Domain classification (backend, frontend, security, etc.)

- Rule generation with confidence scores

- Deduplication and storage

Auto-Learning Hooks

learning-gate.sh (v1.0.0)

- Trigger: PreToolUse (Task)

- Detects: Task complexity ≥3 without relevant rules

- Action: Recommends /curator execution

- Blocks: High complexity tasks (≥7) without rules
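The thresholds described for learning-gate.sh can be sketched as a small decision function. This is an illustration only: the function name and its two arguments (a complexity score and a count of relevant rules) are hypothetical, and mapping "blocks" to a `deny` permission decision is an assumption about how the real hook behaves.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical decision helper mirroring the documented thresholds:
#   no relevant rules and complexity >= 7 -> deny (block the task)
#   no relevant rules and complexity >= 3 -> allow, but recommend /curator
#   otherwise                             -> allow silently
learning_gate_decision() {
  local complexity="$1" relevant_rules="$2"
  local decision="allow" reason=""

  if [ "$relevant_rules" -eq 0 ]; then
    if [ "$complexity" -ge 7 ]; then
      decision="deny"
      reason="No relevant rules for a high-complexity task; run /curator first"
    elif [ "$complexity" -ge 3 ]; then
      reason="No relevant rules found; consider running /curator"
    fi
  fi

  # Emit the PreToolUse output shape used elsewhere in this README.
  printf '{"hookSpecificOutput": {"hookEventName": "PreToolUse", "permissionDecision": "%s", "permissionDecisionReason": "%s"}}\n' \
    "$decision" "$reason"
}
```

For example, `learning_gate_decision 8 0` would print a `deny` decision, while `learning_gate_decision 5 0` would allow the task and carry the /curator recommendation in the reason field.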

rule-verification.sh (v1.0.0)

- Trigger: PostToolUse (TaskUpdate)

- Analyzes: Modified code for rule patterns

- Updates: Rule metrics (applied_count, skipped_count)

- Reports: Utilization rate and ghost rules
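The README does not define how the utilization rate is computed. As a sketch under that caveat, one plausible reading is the share of rule checks in which the rule was applied, using the applied_count and skipped_count metrics named above. The function name is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: utilization as a percentage,
#   100 * applied / (applied + skipped)
# Assumption: this formula is a guess, not taken from rule-verification.sh.
utilization_rate() {
  local applied="$1" skipped="$2"
  awk -v a="$applied" -v s="$skipped" 'BEGIN {
    total = a + s
    if (total == 0) { print "0.0"; exit }   # avoid division by zero
    printf "%.1f\n", 100 * a / total
  }'
}
```

A rule that was applied 3 times and skipped once would come out at 75.0 under this definition.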

# Full learning pipeline

/curator full --type backend --lang typescript

# Discover repositories

/curator discovery --query "microservice" --max-results 200

# Score with context relevance

/curator scoring --context "error handling,retry,resilience"

# Rank top results

/curator rank --top-n 20 --max-per-org 2

# View results

/curator show --type backend --lang typescript

# Approve repositories

/curator approve nestjs/nest

/curator approve --all

# Learn from approved repos

/curator learn --all

# Check system health

ralph health

Total Rules: 1003

With Domain: 148 (14.7%)

With Usage: 146 (14.5%)

Applied Count: Tracking active

Utilization Rate: Measured automatically

⚠️ CRITICAL v2.81.1: PostCompact does NOT exist in Claude Code. Use PreCompact for saving state and SessionStart for restoring. See docs/hooks/POSTCOMPACT_DOES_NOT_EXIST.md.

| Event | Purpose | Example Hooks |

|---|---|---|

| SessionStart | Initialize session, restore context after compaction | session-ledger, auto-migrate-plan-state, session-start-restore-context |

| UserPromptSubmit | Before user prompt | context-warning, periodic-reminder |

| PreToolUse | Before tool execution | lsa-pre-step, procedural-inject, learning-gate |

| PostToolUse | After tool execution | quality-gates-v2, rule-verification |

| PreCompact | Before context compaction (ONLY compaction event) | pre-compact-handoff, post-compact-restore (both run here) |

| Stop | Session end | reflection-engine, orchestrator-report |

| PostCompact | ❌ DOES NOT EXIST - Feature request #14258 | n/a |

Discovery: PostCompact is NOT a valid hook event in Claude Code as of January 2026.

What This Means:

- ❌ There is NO PostCompact event that fires after compaction

- ✅ Only PreCompact exists (fires BEFORE compaction)

- ✅ Use SessionStart for post-compaction context restoration

Correct Compaction Pattern:

PreCompact Event → Save state (ledger, handoff, plan-state)

↓

Compaction Happens → Old messages removed

↓

SessionStart Event → Restore state in new session ✅

Implementation:

- pre-compact-handoff.sh → Saves state in PreCompact

- session-start-restore-context.sh → Restores state in SessionStart

- Both hooks use global paths: ~/.claude-sneakpeek/zai/config/hooks/

Documentation: See docs/hooks/POSTCOMPACT_DOES_NOT_EXIST.md for complete details.
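The save-then-restore pattern described above can be sketched as a pair of functions, one for each hook. This is not the content of pre-compact-handoff.sh or session-start-restore-context.sh: the `STATE_DIR` location, the `RALPH_STATE_DIR` variable, and the function names are assumptions made for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical snapshot location; the real hooks may store state elsewhere.
STATE_DIR="${RALPH_STATE_DIR:-$HOME/.ralph/compact-state}"

# Intended for a PreCompact hook: copy the plan state aside before
# compaction removes older messages from the context.
save_state() {
  local plan_file="$1"
  mkdir -p "$STATE_DIR"
  if [ -f "$plan_file" ]; then
    cp "$plan_file" "$STATE_DIR/plan-state.json"
  fi
  date -u +%Y-%m-%dT%H:%M:%SZ > "$STATE_DIR/saved-at"
}

# Intended for a SessionStart hook: put the snapshot back if one exists.
restore_state() {
  local plan_file="$1"
  if [ -f "$STATE_DIR/plan-state.json" ]; then
    cp "$STATE_DIR/plan-state.json" "$plan_file"
  fi
}
```

Because no event fires after compaction, the restore half has to be safe to run on every session start, which is why it does nothing when no snapshot is present.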

Hooks are registered in ~/.claude-sneakpeek/zai/config/settings.json:

{

"hooks": {

"PreCompact": [

{

"matcher": "*",

"hooks": [

{ "type": "command", "command": "/path/to/pre-compact-handoff.sh" }

]

}

],

"SessionStart": [

{

"matcher": "*",

"hooks": [

{ "type": "command", "command": "/path/to/session-start-restore-context.sh" }

]

}

]

}

}

- Create hook script in .claude/hooks/

- Make it executable: chmod +x .claude/hooks/your-hook.sh

- Register in settings.json

- Follow Hook Format Reference

PreToolUse hooks (allowing execution):

{

"hookSpecificOutput": {

"hookEventName": "PreToolUse",

"permissionDecision": "allow"

}

}

PostToolUse hooks (continuing execution):

{

"hookSpecificOutput": {

"hookEventName": "PostToolUse",

"continue": true

}

}

PreToolUse JSON Schema Fix (v2.81.2) ✅:

Fixed critical JSON schema validation errors in 4 PreToolUse hooks causing error messages on every Edit, Write, and Bash operation.

Problem: Hooks were using incorrect JSON format:

{"decision": "allow", "additionalContext": "..."} // ❌ Wrong

Solution: Hooks now use correct hookSpecificOutput format:

{"hookSpecificOutput": {"hookEventName": "PreToolUse", "permissionDecision": "allow"}} // ✅ Correct

Affected Hooks (4 files):

- checkpoint-auto-save.sh - Auto-checkpoint before edits

- fast-path-check.sh - Detect trivial tasks for fast-path routing

- agent-memory-auto-init.sh - Auto-initialize agent memory buffers

- orchestrator-auto-learn.sh - Inject learning recommendations

Documentation: PRETOOLUSE_JSON_SCHEMA_FIX_v2.81.2.md
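A quick way to catch the old output format before it reaches Claude Code is a shape check on what a hook prints. The sketch below is a rough text match, not a JSON schema validator and not part of the project's test suite; the function name is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical check: succeed only if the hook output contains a
# hookSpecificOutput object with a recognized permissionDecision value.
# This is a grep-based approximation; it does not parse the JSON.
check_pretooluse_output() {
  local out="$1"
  printf '%s' "$out" | grep -q '"hookSpecificOutput"' &&
    printf '%s' "$out" |
      grep -Eq '"permissionDecision"[[:space:]]*:[[:space:]]*"(allow|deny|ask)"'
}
```

The legacy `{"decision": "allow", ...}` form fails this check because it has neither the wrapper object nor the permissionDecision key.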

Previous Fixes (v2.81.1):

- SessionStart Hook Failure: auto-sync-global.sh had glob pattern bug
  - Problem: for file in *.md failed when no files matched
  - Solution: Added [ -f "$file" ] || continue to each loop
  - Result: SessionStart hooks now exit successfully
- PostCompact Misinformation: Incorrect documentation mentioned PostCompact as valid
  - Problem: Orchestrator created docs mentioning non-existent event
  - Solution: Created comprehensive docs clarifying PostCompact doesn't exist
  - Result: Correct pattern documented (PreCompact + SessionStart)
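The glob fix mentioned for the SessionStart failure follows a common bash idiom. When a pattern such as *.md matches nothing, bash leaves the pattern in place as a literal string, so the loop body runs once with a file name that does not exist. The sketch below shows the guard in isolation; the function name is hypothetical and the loop body is a stand-in for whatever auto-sync-global.sh does per file.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Count Markdown files in a directory without tripping on an unmatched glob.
count_markdown_files() {
  local dir="$1" count=0 file
  for file in "$dir"/*.md; do
    # With no matches, "$file" is the literal pattern; skip it.
    [ -f "$file" ] || continue
    count=$((count + 1))
  done
  echo "$count"
}
```

An alternative is `shopt -s nullglob`, which makes unmatched patterns expand to nothing, but the per-iteration guard keeps the change local to each loop.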

| Agent | Model | Capabilities |
|---|---|---|
| orchestrator | GLM-4.7 | Planning, classification, delegation |
| security-auditor | GLM-4.7 | Security, vulnerability scan |
| debugger | GLM-4.7 | Debugging, error analysis |
| code-reviewer | GLM-4.7 | Code review, patterns |
| test-architect | GLM-4.7 | Testing, test generation |
| refactorer | GLM-4.7 | Refactoring, optimization |
| repository-learner | GLM-4.7 | Learning, pattern extraction |
| repo-curator | GLM-4.7 | Curation, scoring, discovery |

Requirements:

- Claude Code v2.1.16+ (Task primitive support)

- TeammateTool available (built-in)

- defaultMode: "delegate" in settings.json

Usage:

# Automatic spawning

/orchestrator "Implement distributed system" --launch-swarm --teammate-count 3

# Manual spawning

Task:

subagent_type: "orchestrator"

team_name: "my-team"

name: "team-lead"

mode: "delegate"

ExitPlanMode:

launchSwarm: true

teammateCount: 3

# Full orchestration

/orchestrator "Implement feature X"

ralph orch "Implement feature X"

# Quality validation

/gates

ralph gates

# Loop until VERIFIED_DONE

/loop "fix all issues"

ralph loop "fix all issues"

# Checkpoints

ralph checkpoint save "before-refactor" "Pre-refactoring"

ralph checkpoint restore "before-refactor"

ralph checkpoint list

# Handoffs

ralph handoff transfer --from orchestrator --to security-auditor --task "Audit auth module"

# Health check

ralph health

ralph health --compact

# Memory search

ralph memory-search "authentication patterns"

# Full pipeline

/curator full --type backend --lang typescript

# Discovery

/curator discovery --type backend --lang typescript --max-results 100

# Scoring

/curator scoring --input candidates/repos.json --context "error handling"

# Ranking

/curator rank --input candidates/scored.json --top-n 20

# Approval

/curator approve nestjs/nest

/curator approve --all

# Learning

/curator learn --all

/curator learn --repo nestjs/nest

# Queue management

/curator show --type backend --lang typescript

/curator pending --type backend

/docs hooks # Hooks reference

/docs mcp # MCP integration

/docs what's new # Recent doc changes

/docs changelog # Claude Code release notes

Total Tests: 62 tests (100% pass rate)

| Test Type | Location | Count | Status |
|---|---|---|---|
| Unit Tests | tests/unit/ | 13 | ✅ Passing |
| Integration Tests | tests/integration/ | 13 | ✅ Passing |
| Functional Tests | tests/functional/ | 4 | ✅ Passing |
| End-to-End Tests | tests/end-to-end/ | 32 | ✅ Passing |

# Run all tests

./tests/run-all-learning-tests.sh

# Unit tests only

./tests/unit/test-unit-learning-hooks-v1.sh

# Integration tests only

./tests/integration/test-learning-integration-v1.sh

# Functional tests only

./tests/functional/test-functional-learning-v1.sh

# End-to-end tests only

./tests/end-to-end/test-e2e-learning-complete-v1.sh

tests/

├── unit/ # Isolated component tests

├── integration/ # Component integration tests

├── functional/ # Real-world scenario tests

├── end-to-end/ # Complete system validation

├── quality-parallel/ # Quality gate validation

├── swarm-mode/ # Swarm mode tests

└── coverage.json # Coverage tracking

multi-agent-ralph-loop/

├── docs/ # All development documentation

│ ├── architecture/ # Architecture diagrams

│ ├── analysis/ # Analysis reports

│ ├── implementation/ # Implementation docs

│ └── guides/ # User guides

├── tests/ # Test suites at project root

│ ├── unit/ # Unit tests

│ ├── integration/ # Integration tests

│ ├── functional/ # Functional tests

│ └── end-to-end/ # End-to-end tests

├── .claude/ # Claude Code workspace

│ ├── hooks/ # Hook scripts

│ ├── commands/ # Custom commands

│ └── schemas/ # Validation schemas

└── README.md # This file

Hook Template:

#!/usr/bin/env bash

# my-hook.sh - Description

# Version: 1.0.0

# Part of Ralph Multi-Agent System

set -euo pipefail

umask 077

# Read input (for PreToolUse/PostToolUse)

INPUT=$(cat)

# Parse tool name (Claude Code sends tool_name; toolName is kept as a fallback)

TOOL_NAME=$(echo "$INPUT" | jq -r '.tool_name // .toolName // empty' 2>/dev/null || echo "")

# Process based on tool name

case "$TOOL_NAME" in

"Task")

# Your logic here

;;

"Edit")

# Your logic here

;;

esac

# Output required format

echo '{"hookSpecificOutput": {"hookEventName": "PreToolUse", "permissionDecision": "allow"}}'

Create command file in .claude/commands/:

#!/usr/bin/env bash

# my-command - Command description

command_main() {

# Command logic here

: # no-op placeholder; bash rejects a function body that contains only comments

}

command_main "$@"

Issue: learning-gate recommends /curator but I have rules

Solution:

# Check rule domains

jq '.rules[] | .domain' ~/.ralph/procedural/rules.json | sort | uniq -c

# Learn rules for specific domain

/curator discovery --type <your-domain> --lang typescript

/curator learn --all

Issue: rule-verification.sh reports 0% utilization

Solution:

# Check rule patterns

jq '.rules[0] | {pattern, keywords, domain}' ~/.ralph/procedural/rules.json

# Verify with test file

echo "try { } catch (e) { }" > /tmp/test.ts

grep -i "try.*catch" /tmp/test.ts

Issue: GitHub API rate limit

Solution:

# Check rate limit

curl -I "https://api.github.com/search/repositories?q=test"

# Use authentication

export GITHUB_TOKEN="your_token"

gh auth login

# Reduce max-results

/curator discovery --max-results 50

Issue: Hook not executing

Solution:

# Verify registration

grep "your-hook" ~/.claude-sneakpeek/zai/config/settings.json

# Check file exists

ls -l ~/.claude/hooks/your-hook.sh

# Check permissions

chmod +x ~/.claude/hooks/your-hook.sh

Issue: Hook crashes or errors

Solution:

# Test hook manually

echo '{"toolName":"Task","toolInput":{}}' | ~/.claude/hooks/your-hook.sh

# Check logs

cat ~/.ralph/logs/$(date +%Y%m%d)*.log 2>/dev/null | tail -50

Issue: Plans not persisting across compaction

Solution:

# Check plan-state exists

ls -la .claude/plan-state.json

# Check snapshot exists

ls -la .claude/snapshots/20260129/

# Recreate if needed

ralph checkpoint save "manual-save" "Manual save before fix"

Issue: Memory search not finding recent data

Solution:

# Check memory backend

cat ~/.claude/memory-context.json

# Verify claude-mem is enabled

grep "claude-mem" ~/.claude-sneakpeek/zai/config/settings.json

# Try direct search

ralph memory-search "your query"

We welcome contributions! Please follow these guidelines:

- Bash: Follow shellcheck recommendations

- TypeScript: Follow community standards

- Documentation: English-only for all documentation

- Commit Messages: Conventional commits format

- Add tests for new features

- Ensure all tests pass before submitting PR

- Include integration tests for hooks

- Add documentation for new commands

- Fork the repository

- Create a feature branch

- Make your changes

- Add tests if needed

- Ensure all tests pass

- Submit a pull request with clear description

- All documentation must be in English

- Use proper markdown formatting

- Include examples where helpful

- Update relevant README sections

Business Source License 1.1

Summary:

- ✅ Permits commercial use

- ✅ Permits unlimited modification

- ✅ Permits unlimited distribution

- ✅ Requires attribution in derivative works

- ❌ Prohibits sublicensing and selling (must be given away for free)

Key Points:

- You can use this in commercial projects

- You can modify and distribute your changes

- You CANNOT sell this or sub-license it

- You MUST include attribution in derivative works

- Ideal for: Open source projects, internal tools, consulting

For Standard (MIT/Apache 2.0): Contact the repository owner.

Added

- Automatic Learning System: Complete integration with GitHub repository curation

- learning-gate.sh v1.0.0: Auto-executes /curator when memory is critically empty

- rule-verification.sh v1.0.0: Validates rules were applied in generated code

- Curator Scripts v2.0.0: 15 critical bugs fixed across 3 scripts

- Testing Suite: 62 tests with 100% pass rate (unit + integration + functional + e2e)

- Documentation: Complete integration guide and implementation reports

Fixed

- 15 critical bugs in curator scripts (error handling, cleanup, logging, validation)

- Hook registration in settings.json

- Memory system integration issues

Changed

- Updated README.md with Learning System v2.81.2 information

- Improved system statistics tracking (91% quality, 89% integration)

- Enhanced troubleshooting section with Learning System specific issues

Added

- Native Swarm Mode Integration: Full integration with Claude Code 2.1.22+ native multi-agent features

- GLM-4.7 as PRIMARY Model: Now PRIMARY for ALL complexity levels (1-10)

- Agent Environment Variables: CLAUDE_CODE_AGENT_ID, CLAUDE_CODE_AGENT_NAME, CLAUDE_CODE_TEAM_NAME

- Swarm Mode Validation: 44 unit tests to validate configuration

Changed

- Model Routing: GLM-4.7 is now universal PRIMARY for all task complexities

- DefaultMode: Set to "delegate" for swarm mode

Deprecated

- MiniMax Fully Deprecated: Now optional fallback only, not recommended

See CHANGELOG.md for full version history.

- Issues: GitHub Issues

- Documentation: See docs/ folder

- Tests: Run ralph health for system status

Version: v2.81.2 Status: Production Ready ✅ Last Updated: 2026-01-29 Tests: 62/62 passing (100%)

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for multi-agent-ralph-loop

Similar Open Source Tools

multi-agent-ralph-loop

Multi-agent RALPH (Reinforcement Learning with Probabilistic Hierarchies) Loop is a framework for multi-agent reinforcement learning research. It provides a flexible and extensible platform for developing and testing multi-agent reinforcement learning algorithms. The framework supports various environments, including grid-world environments, and allows users to easily define custom environments. Multi-agent RALPH Loop is designed to facilitate research in the field of multi-agent reinforcement learning by providing a set of tools and utilities for experimenting with different algorithms and scenarios.

trae-agent

Trae-agent is a Python library for building and training reinforcement learning agents. It provides a simple and flexible framework for implementing various reinforcement learning algorithms and experimenting with different environments. With Trae-agent, users can easily create custom agents, define reward functions, and train them on a variety of tasks. The library also includes utilities for visualizing agent performance and analyzing training results, making it a valuable tool for both beginners and experienced researchers in the field of reinforcement learning.

ms-agent

MS-Agent is a lightweight framework designed to empower agents with autonomous exploration capabilities. It provides a flexible and extensible architecture for creating agents capable of tasks like code generation, data analysis, and tool calling with MCP support. The framework supports multi-agent interactions, deep research, code generation, and is lightweight and extensible for various applications.

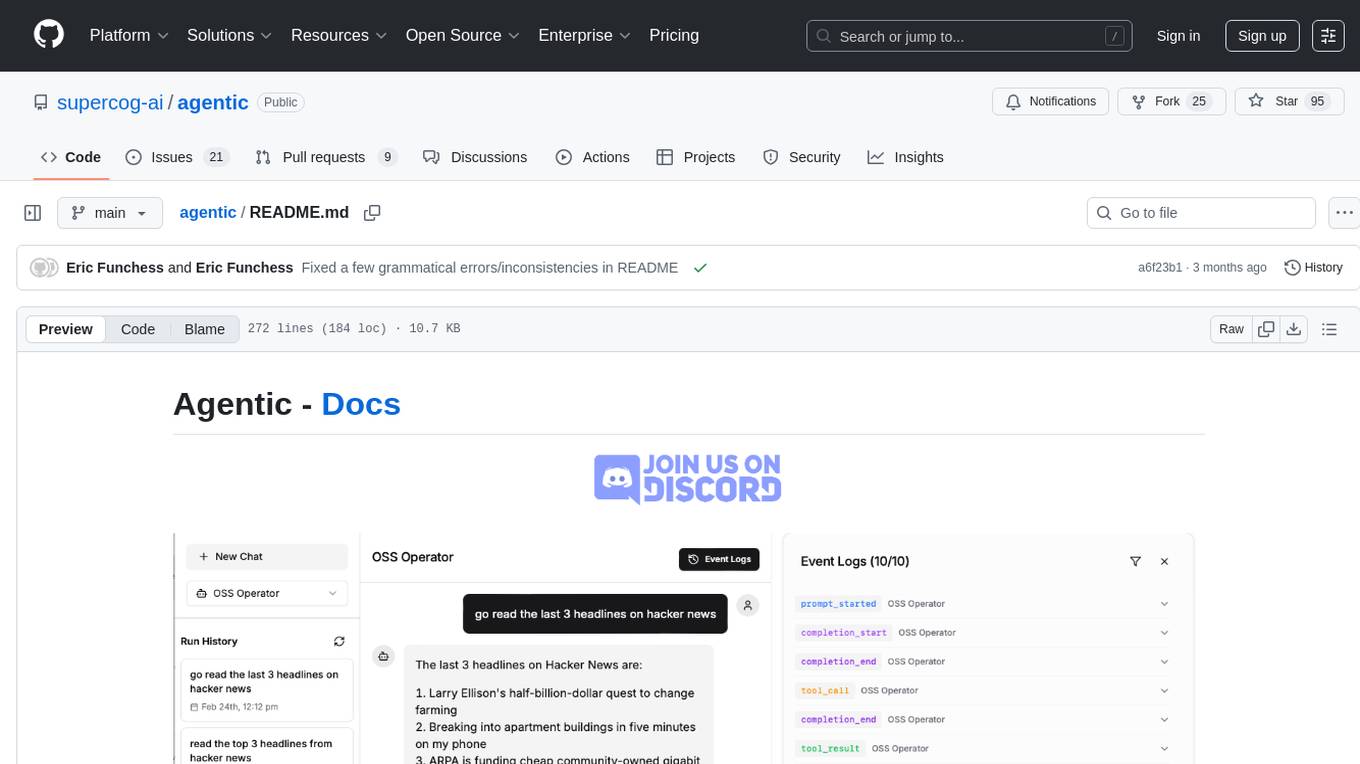

agentic

Agentic is a lightweight and flexible Python library for building multi-agent systems. It provides a simple and intuitive API for creating and managing agents, defining their behaviors, and simulating interactions in a multi-agent environment. With Agentic, users can easily design and implement complex agent-based models to study emergent behaviors, social dynamics, and decentralized decision-making processes. The library supports various agent architectures, communication protocols, and simulation scenarios, making it suitable for a wide range of research and educational applications in the fields of artificial intelligence, machine learning, social sciences, and robotics.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

Gym

Gym is a toolkit for developing and comparing reinforcement learning algorithms. It provides a wide variety of environments ranging from simple grid worlds to complex 3D environments, allowing researchers to easily test and benchmark their algorithms. With a user-friendly interface and extensive documentation, Gym is suitable for both beginners and experts in the field of reinforcement learning.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

verl

veRL is a flexible and efficient reinforcement learning training framework designed for large language models (LLMs). It allows easy extension of diverse RL algorithms, seamless integration with existing LLM infrastructures, and flexible device mapping. The framework achieves state-of-the-art throughput and efficient actor model resharding with 3D-HybridEngine. It supports popular HuggingFace models and is suitable for users working with PyTorch FSDP, Megatron-LM, and vLLM backends.

langgraphjs

LangGraph.js is a library for building stateful, multi-actor applications with LLMs, offering benefits such as cycles, controllability, and persistence. It allows defining flows involving cycles, providing fine-grained control over application flow and state. Inspired by Pregel and Apache Beam, it includes features like loops, persistence, human-in-the-loop workflows, and streaming support. LangGraph integrates seamlessly with LangChain.js and LangSmith but can be used independently.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

AgentsMeetRL

AgentsMeetRL is an awesome list that summarizes open-source repositories for training LLM Agents using reinforcement learning. The criteria for identifying an agent project are multi-turn interactions or tool use. The project is based on code analysis from open-source repositories using GitHub Copilot Agent. The focus is on reinforcement learning frameworks, RL algorithms, rewards, and environments that projects depend on, for everyone's reference on technical choices.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

FLAME

FLAME is a lightweight and efficient deep learning framework designed for edge devices. It provides a simple and user-friendly interface for developing and deploying deep learning models on resource-constrained devices. With FLAME, users can easily build and optimize neural networks for tasks such as image classification, object detection, and natural language processing. The framework supports various neural network architectures and optimization techniques, making it suitable for a wide range of applications in the field of edge computing.

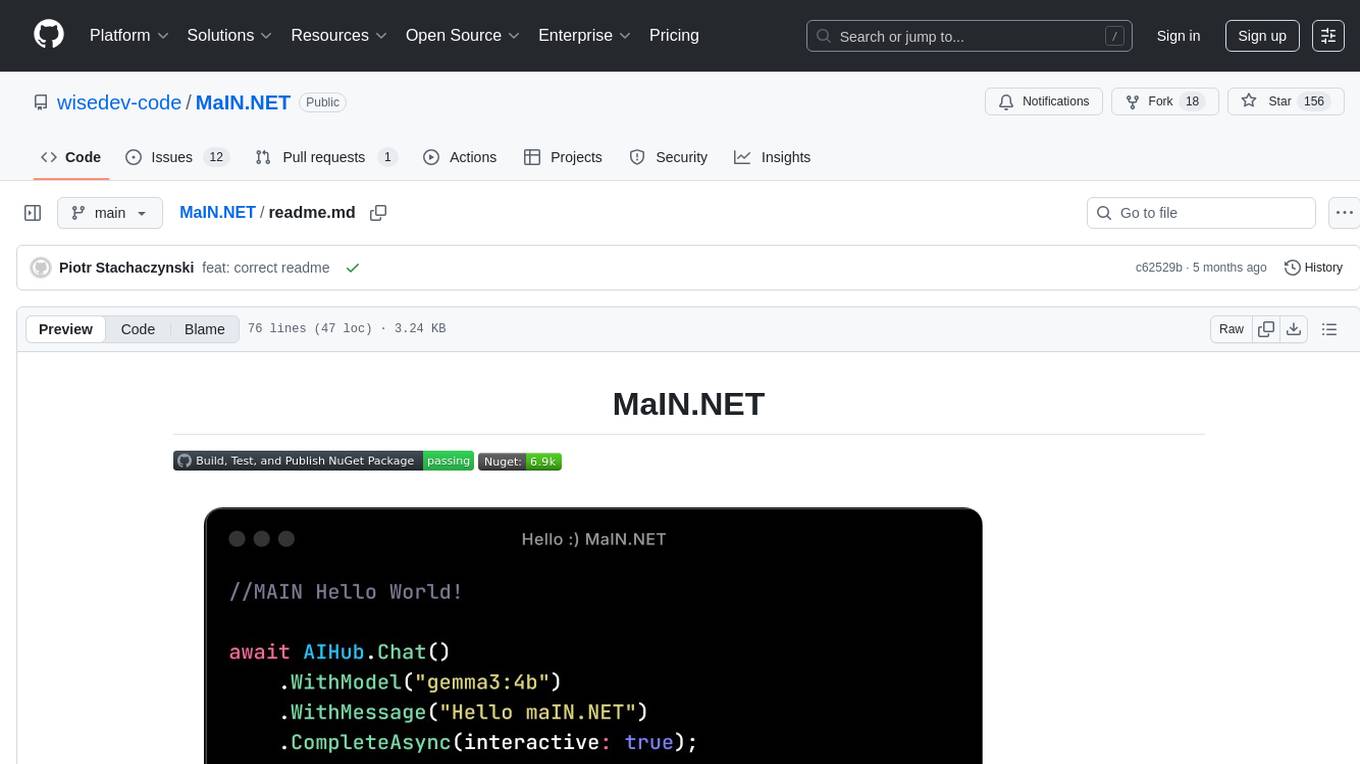

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

For similar tasks

llm-compression-intelligence

This repository presents the findings of the paper "Compression Represents Intelligence Linearly". The study reveals a strong linear correlation between the intelligence of LLMs, as measured by benchmark scores, and their ability to compress external text corpora. Compression efficiency, derived from raw text corpora, serves as a reliable evaluation metric that is linearly associated with model capabilities. The repository includes the compression corpora used in the paper, code for computing compression efficiency, and data collection and processing pipelines.

edsl

The Expected Parrot Domain-Specific Language (EDSL) package enables users to conduct computational social science and market research with AI. It facilitates designing surveys and experiments, simulating responses using large language models, and performing data labeling and other research tasks. EDSL includes built-in methods for analyzing, visualizing, and sharing research results. It is compatible with Python 3.9 - 3.11 and requires API keys for LLMs stored in a `.env` file.

fast-stable-diffusion

Fast-stable-diffusion is a project that offers notebooks for RunPod, Paperspace, and Colab Pro adaptations with AUTOMATIC1111 Webui and Dreambooth. It provides tools for running and implementing Dreambooth, a stable diffusion project. The project includes implementations by XavierXiao and is sponsored by Runpod, Paperspace, and Colab Pro.

RobustVLM

This repository contains code for the paper 'Robust CLIP: Unsupervised Adversarial Fine-Tuning of Vision Embeddings for Robust Large Vision-Language Models'. It focuses on fine-tuning CLIP in an unsupervised manner to enhance its robustness against visual adversarial attacks. By replacing the vision encoder of large vision-language models with the fine-tuned CLIP models, it achieves state-of-the-art adversarial robustness on various vision-language tasks. The repository provides adversarially fine-tuned ViT-L/14 CLIP models and offers insights into zero-shot classification settings and clean accuracy improvements.

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

bigcodebench

BigCodeBench is an easy-to-use benchmark for code generation with practical and challenging programming tasks. It aims to evaluate the true programming capabilities of large language models (LLMs) in a more realistic setting. The benchmark is designed for HumanEval-like function-level code generation tasks, but with much more complex instructions and diverse function calls. BigCodeBench focuses on the evaluation of LLM4Code with diverse function calls and complex instructions, providing precise evaluation & ranking and pre-generated samples to accelerate code intelligence research. It inherits the design of the EvalPlus framework but differs in terms of execution environment and test evaluation.

rag

RAG with txtai is a Retrieval Augmented Generation (RAG) Streamlit application that helps generate factually correct content by limiting the context in which a Large Language Model (LLM) can generate answers. It supports two categories of RAG: Vector RAG, where context is supplied via a vector search query, and Graph RAG, where context is supplied via a graph path traversal query. The application allows users to run queries, add data to the index, and configure various parameters to control its behavior.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters do not need to be tuned to fit GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito largely simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It has several key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.