AgentsMeetRL

An Awesome List of Agentic Models Trained with Reinforcement Learning

Stars: 472

AgentsMeetRL is an awesome list that summarizes open-source repositories for training LLM Agents using reinforcement learning. A project counts as an agent project if it involves multi-turn interactions or tool use. The list is compiled from code analysis of open-source repositories using GitHub Copilot Agent. It focuses on the reinforcement learning frameworks, RL algorithms, rewards, and environments that projects depend on, as a reference for technical choices.

README:

AgentsMeetRL is an awesome list that summarizes open-source repositories for training LLM Agents using reinforcement learning:

- 🤖 To count as an agent project, a repository must have at least one of the following: multi-turn interactions or tool use. Tool-Integrated Reasoning (TIR) projects are therefore included in this repo.

- ⚠️ This project is based on code analysis of open-source repositories using GitHub Copilot Agent, which may contain unfaithful cases. Although the results have been manually reviewed, there may still be omissions. If you find any errors, please let us know through issues or PRs; we warmly welcome them!

- 🚀 We particularly focus on the reinforcement learning frameworks, RL algorithms, rewards, and environments that projects depend on, as a reference for how these excellent open-source projects make their technical choices. See "📋 Click to view technical details" under each table.

- 🤗 Feel free to submit your own projects anytime - we welcome contributions!

Some enumerations:

- Reward Type:
  - External Verifier: e.g., a compiler or math solver
  - Rule-Based: e.g., a LaTeX parser with exact-match scoring
  - Model-Based: e.g., a trained verifier LLM or reward LLM
  - Custom
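The sketch below gives a rough illustration of the Rule-Based and External Verifier reward types. It scores a math completion by exact match on its last `\boxed{...}` expression. It scores a code completion by running it, followed by assert-style tests, in a fresh Python interpreter.

Several things here are illustrative assumptions, not the implementation of any listed project:

- the `\boxed{}` answer convention;
- the function names;
- the minimal normalization;
- the binary 0/1 scores.

Each project defines its own reward functions, often adding partial credit or format rewards.

```python
import os
import re
import subprocess
import sys
import tempfile
from typing import Optional


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in `text`, respecting nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    depth, chars = 1, []
    for ch in text[start + len("\\boxed{"):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
    return None  # unbalanced braces: treat as "no answer"


def normalize(answer: str) -> str:
    # Deliberately minimal normalization (an assumption): drop whitespace and a trailing period.
    return re.sub(r"\s+", "", answer).rstrip(".")


def rule_based_reward(completion: str, reference: str) -> float:
    """Rule-Based: 1.0 if the boxed answer exactly matches the reference, else 0.0."""
    pred = extract_boxed(completion)
    if pred is None:
        return 0.0
    return 1.0 if normalize(pred) == normalize(reference) else 0.0


def external_verifier_reward(code: str, tests: str, timeout: float = 5.0) -> float:
    """External Verifier: 1.0 if `code` followed by `tests` runs without error, else 0.0.

    Warning: this executes untrusted code with no sandboxing; it is for illustration only.
    """
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as f:
        f.write(code + "\n\n" + tests + "\n")
        path = f.name
    try:
        result = subprocess.run([sys.executable, path], capture_output=True, timeout=timeout)
        return 1.0 if result.returncode == 0 else 0.0
    except subprocess.TimeoutExpired:
        return 0.0  # a hung program earns no reward
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(rule_based_reward(r"... so the answer is \boxed{\frac{1}{2}}.", r"\frac{1}{2}"))
    print(external_verifier_reward("def add(a, b):\n    return a + b", "assert add(2, 3) == 5"))
```

Both calls in the `__main__` block should print `1.0`. A Model-Based reward would replace these checks with a call to a trained verifier or reward LLM, which this sketch does not attempt.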

| Github Repo | 🌟 Stars | Date | Org | Paper Link |
|---|---|---|---|---|
| siiRL |  | 2025.7 | Shanghai Innovation Institute | Paper |
| slime |  | 2025.6 | Tsinghua University (THUDM) | Blog |
| agent-lightning |  | 2025.6 | Microsoft Research | Paper |
| AReaL |  | 2025.6 | AntGroup/Tsinghua | Paper |
| ROLL |  | 2025.6 | Alibaba | Paper |
| MARTI |  | 2025.5 | Tsinghua | -- |
| RL2 |  | 2025.4 | Accio | -- |
| verifiers |  | 2025.3 | Individual | -- |
| oat |  | 2024.11 | NUS/Sea AI | Paper |
| veRL |  | 2024.10 | ByteDance | Paper |
| OpenRLHF |  | 2023.7 | OpenRLHF | Paper |
| trl |  | 2019.11 | HuggingFace | -- |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| siiRL | PPO/GRPO/CPGD/MARFT | Multi | Both | Multi | LLM/VLM/LLM-MAS PostTraining | Model/Rule | Planned |
| slime | GRPO/GSPO/REINFORCE++ | Single | Both | Both | Math/Code | External Verifier | Yes |
| agent-lightning | PPO/Custom/Automatic Prompt Optimization | Multi | Outcome | Multi | Calculator/SQL | Model/External/Rule | Yes |
| AReaL | PPO | Both | Outcome | Both | Math/Code | External | Yes |
| ROLL | PPO/GRPO/REINFORCE++/TOPR/RAFT++ | Multi | Both | Multi | Math/QA/Code/Alignment | All | Yes |
| MARTI | PPO/GRPO/REINFORCE++/TTRL | Multi | Both | Multi | Math | All | Yes |
| RL2 | Dr. GRPO/PPO/DPO | Single | Both | Both | QA/Dialogue | Rule/Model/External | Yes |
| verifiers | GRPO | Multi | Outcome | Both | Reasoning/Math/Code | All | Code |
| oat | PPO/GRPO | Single | Outcome | Multi | Math/Alignment | External | No |
| veRL | PPO/GRPO | Single | Outcome | Both | Math/QA/Reasoning/Search | All | Yes |
| OpenRLHF | PPO/REINFORCE++/GRPO/DPO/IPO/KTO/RLOO | Multi | Both | Both | Dialogue/Chat/Completion | Rule/Model/External | Yes |
| trl | PPO/GRPO/DPO | Single | Both | Single | QA | Custom | No |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| AgentGym-RL |  | 2025.9 | Fudan University | Paper | veRL |
| Agent_Foundation_Models |  | 2025.8 | OPPO Personal AI Lab | Paper | veRL |
| SPA-RL-Agent |  | 2025.5 | PolyU | Paper | TRL |
| verl-agent |  | 2025.5 | NTU/Skywork | Paper | veRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| AgentGym-RL | PPO/GRPO/RLOO/REINFORCE++ | Single | Outcome | Multi | Web/Search/Game/Embodied/Science | Rule/Model/External | Yes (Web, Search, Env APIs) |
| Agent_Foundation_Models | DAPO/PPO | Single | Outcome | Single | QA/Code/Math | Rule/External | Yes |
| SPA-RL-Agent | PPO | Single | Process | Multi | Navigation/Web/TextGame | Model | No |
| verl-agent | PPO/GRPO/GiGPO/DAPO/RLOO/REINFORCE++ | Multi | Both | Multi | Phone Use/Math/Code/Web/TextGame | All | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| ASearcher |  | 2025.8 | Ant Research RL Lab, Tsinghua University & UW | Paper | RealHF/AReaL |
| Kimi-Researcher |  | 2025.6 | Moonshot AI | Blog | Custom |
| TTI |  | 2025.6 | CMU | Paper | Custom |
| R-Search |  | 2025.6 | Individual | -- | veRL |
| R1-Searcher-plus |  | 2025.5 | RUC | Paper | Custom |
| StepSearch |  | 2025.5 | SenseTime | Paper | veRL |
| AutoRefine |  | 2025.5 | USTC | Paper | veRL |
| ZeroSearch |  | 2025.5 | Alibaba | Paper | veRL |
| WebThinker |  | 2025.4 | RUC | Paper | Custom |
| DeepResearcher |  | 2025.4 | SJTU | Paper | veRL |
| Search-R1 |  | 2025.3 | UIUC/Google | paper1, paper2 | veRL |
| R1-Searcher |  | 2025.3 | RUC | Paper | OpenRLHF |
| C-3PO |  | 2025.2 | Alibaba | Paper | OpenRLHF |
| WebAgent |  | 2025.1 | Alibaba | paper1, paper2 | LLaMA-Factory |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| ASearcher | PPO/GRPO + Decoupled PPO | Single | Outcome | Multi | Math/Code/SearchQA | External/Rule | Yes |
| Kimi-Researcher | REINFORCE | Single | Outcome | Multi | Research | Outcome | Search, Browse, Coding |
| TTI | REINFORCE/BC | Single | Outcome | Multi | Web | External | Web Browsing |
| R-Search | PPO/GRPO | Single | Both | Multi | QA/Search | All | Yes |
| R1-Searcher-plus | Custom | Single | Outcome | Multi | Search | Model | Search |
| StepSearch | PPO | Single | Process | Multi | QA | Model | Search |
| AutoRefine | PPO/GRPO | Multi | Both | Multi | RAG QA | Rule | Search |
| ZeroSearch | PPO/GRPO/REINFORCE | Single | Outcome | Multi | QA/Search | Rule | Yes |
| WebThinker | DPO | Single | Outcome | Multi | Reasoning/QA/Research | Model/External | Web Browsing |
| DeepResearcher | PPO/GRPO | Multi | Outcome | Multi | Research | All | Yes |
| Search-R1 | PPO/GRPO | Single | Outcome | Multi | Search | All | Search |
| R1-Searcher | PPO/DPO | Single | Both | Multi | Search | All | Yes |
| C-3PO | PPO | Multi | Outcome | Multi | Search | Model | Yes |
| WebAgent | DAPO | Multi | Process | Multi | Web | Model | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| MobileAgent |  | 2025.9 | X-PLUG (TongyiQwen) | Paper | veRL |
| InfiGUI-G1 |  | 2025.8 | InfiX AI | Paper | veRL |
| Grounding-R1 |  | 2025.6 | Salesforce | Blog | TRL |
| AgentCPM-GUI |  | 2025.6 | OpenBMB/Tsinghua/RUC | Paper | HuggingFace |
| ARPO |  | 2025.5 | CUHK/HKUST | Paper | veRL |
| GUI-G1 |  | 2025.5 | RUC | Paper | TRL |
| GUI-R1 |  | 2025.4 | CAS/NUS | Paper | veRL |
| UI-R1 |  | 2025.3 | vivo/CUHK | Paper | TRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| MobileAgent | semi-online RL | Single | Both | Multi | MobileGUI/Automation | Rule | Yes |
| InfiGUI-G1 | AEPO | Single | Outcome | Single | GUI/Grounding | Rule | No |
| Grounding-R1 | GRPO | Single | Outcome | Multi | GUI Grounding | Model | Yes |
| AgentCPM-GUI | GRPO | Single | Outcome | Multi | Mobile GUI | Model | Yes |
| ARPO | GRPO | Single | Outcome | Multi | GUI | External | Computer Use |
| GUI-G1 | GRPO | Single | Outcome | Single | GUI | Rule/External | No |
| GUI-R1 | GRPO | Single | Outcome | Multi | GUI | Rule | No |
| UI-R1 | GRPO | Single | Process | Both | GUI | Rule | Computer/Phone Use |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| MiroRL |  | 2025.8 | MiroMindAI | HF Repo | veRL |
| verl-tool |  | 2025.6 | TIGER-Lab | X | veRL |
| Multi-Turn-RL-Agent |  | 2025.5 | University of Minnesota | Paper | Custom |
| Tool-N1 |  | 2025.5 | NVIDIA | Paper | veRL |
| Tool-Star |  | 2025.5 | RUC | Paper | LLaMA-Factory |
| RL-Factory |  | 2025.5 | Simple-Efficient | Model | veRL |
| ReTool |  | 2025.4 | ByteDance | Paper | veRL |
| AWorld |  | 2025.3 | Ant Group (inclusionAI) | Paper | veRL |
| Agent-R1 |  | 2025.3 | USTC | -- | veRL |
| ReCall |  | 2025.3 | BaiChuan | Paper | veRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| MiroRL | GRPO | Single | Both | Multi | Reasoning/Planning/ToolUse | Rule | MCP |
| verl-tool | PPO/GRPO | Single | Both | Both | Math/Code | Rule/External | Yes |
| Multi-Turn-RL-Agent | GRPO | Single | Both | Multi | Tool-use/Math | Rule/External | Yes |
| Tool-N1 | PPO | Single | Outcome | Multi | Math/Dialogue | All | Yes |
| Tool-Star | PPO/DPO/ORPO/SimPO/KTO | Single | Outcome | Multi | Multi-modal/Tool Use/Dialogue | Model/External | Yes |
| RL-Factory | GRPO | Multi | Both | Multi | Tool-use/NL2SQL | All | MCP |
| ReTool | PPO | Single | Outcome | Multi | Math | External | Code |
| AWorld | GRPO | Both | Outcome | Multi | Search/Web/Code | External/Rule | Yes |
| Agent-R1 | PPO/GRPO | Single | Both | Multi | Tool-use/QA | Model | Yes |
| ReCall | PPO/GRPO/RLOO/REINFORCE++/ReMax | Single | Outcome | Multi | Tool-use/Math/QA | All | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| ARIA |  | 2025.6 | Fudan University | Paper | Custom |
| AMPO |  | 2025.5 | Tongyi Lab, Alibaba | Paper | veRL |
| Trinity-RFT |  | 2025.5 | Alibaba | Paper | veRL |
| VAGEN |  | 2025.3 | RAGEN-AI | Paper | veRL |
| ART |  | 2025.3 | OpenPipe | Paper | TRL |
| OpenManus-RL |  | 2025.3 | UIUC/MetaGPT | -- | Custom |
| RAGEN |  | 2025.1 | RAGEN-AI | Paper | veRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| ARIA | REINFORCE | Both | Process | Multi | Negotiation/Bargaining | Other | No |
| AMPO | BC/AMPO (GRPO improvement) | Multi | Outcome | Multi | Social Interaction | Model | No |
| Trinity-RFT | PPO/GRPO | Single | Outcome | Both | Math/TextGame/Web | All | Yes |
| VAGEN | PPO/GRPO | Single | Both | Multi | TextGame/Navigation | All | Yes |
| ART | GRPO | Multi | Both | Multi | TextGame | All | Yes |
| OpenManus-RL | PPO/DPO/GRPO | Multi | Outcome | Multi | TextGame | All | Yes |
| RAGEN | PPO/GRPO | Single | Both | Multi | TextGame | All | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| RepoDeepSearch |  | 2025.8 | PKU, ByteDance, BIT | Paper | veRL |
| MedAgentGym |  | 2025.6 | Emory/Georgia Tech | Paper | HuggingFace |
| CURE |  | 2025.6 | University of Chicago, Princeton/ByteDance | Paper | HuggingFace |
| MASLab |  | 2025.5 | MASWorks | Paper | Custom |
| Time-R1 |  | 2025.5 | UIUC | Paper | veRL |
| ML-Agent |  | 2025.5 | MASWorks | Paper | Custom |
| SkyRL |  | 2025.4 | NovaSky | -- | veRL |
| digitalhuman |  | 2025.4 | Tencent | Paper | veRL |
| sweet_rl |  | 2025.3 | Meta/UCB | Paper | OpenRLHF |
| rllm |  | 2025.1 | Berkeley Sky Computing Lab, BAIR / Together AI | Notion Blog | veRL |
| open-r1 |  | 2025.1 | HuggingFace | -- | TRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| RepoDeepSearch | GRPO | Single | Both | Multi | Search/Repair | Rule/External | Yes |
| MedAgentGym | SFT/DPO/PPO/GRPO | Single | Outcome | Multi | Medical/Code | External | Yes |
| CURE | PPO | Single | Outcome | Single | Code | External | No |
| MASLab | No RL | Multi | Outcome | Multi | Code/Math/Reasoning | External | Yes |
| Time-R1 | PPO/GRPO/DPO | Multi | Outcome | Multi | Temporal | All | Code |
| ML-Agent | Custom | Single | Process | Multi | Code | All | Yes |
| SkyRL | PPO/GRPO | Single | Outcome | Multi | Math/Code | All | Code |
| digitalhuman | PPO/GRPO/ReMax/RLOO | Multi | Outcome | Multi | Empathy/Math/Code/MultimodalQA | Rule/Model/External | Yes |
| sweet_rl | DPO | Multi | Process | Multi | Design/Code | Model | Web Browsing |
| rllm | PPO/GRPO | Single | Outcome | Multi | Code Edit | External | Yes |
| open-r1 | GRPO | Single | Outcome | Single | Math/Code | All | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| ARPO |  | 2025.7 | RUC, Kuaishou | Paper | veRL |
| terminal-bench-rl |  | 2025.7 | Individual (Danau5tin) | -- | rLLM |
| MOTIF |  | 2025.6 | University of Maryland | Paper | TRL |
| cmriat/l0 |  | 2025.6 | CMRIAT | Paper | veRL |
| agent-distillation |  | 2025.5 | KAIST | Paper | Custom |
| VDeepEyes |  | 2025.5 | Xiaohongshu/XJTU | Paper | veRL |
| EasyR1 |  | 2025.4 | Individual | repo1/paper2 | veRL |
| AutoCoA |  | 2025.3 | BJTU | Paper | veRL |
| ToRL |  | 2025.3 | SJTU | Paper | veRL |
| ReMA |  | 2025.3 | SJTU, UCL | Paper | veRL |
| Agentic-Reasoning |  | 2025.2 | Oxford | Paper | Custom |
| SimpleTIR |  | 2025.2 | NTU, ByteDance | Notion Blog | veRL |
| openrlhf_async_pipline |  | 2024.5 | OpenRLHF | Paper | OpenRLHF |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| ARPO | GRPO | Single | Outcome | Multi | Math/Coding | Model/Rule | Yes |
| terminal-bench-rl | GRPO | Single | Outcome | Multi | Coding/Terminal | Model+External Verifier | Yes |
| MOTIF | GRPO | Single | Outcome | Multi | QA | Rule | No |
| cmriat/l0 | PPO | Multi | Process | Multi | QA | All | Yes |
| agent-distillation | PPO | Single | Process | Multi | QA/Math | External | Yes |
| VDeepEyes | PPO/GRPO | Multi | Process | Multi | VQA | All | Yes |
| EasyR1 | GRPO | Single | Process | Multi | Vision-Language | Model | Yes |
| AutoCoA | GRPO | Multi | Outcome | Multi | Reasoning/Math/QA | All | Yes |
| ToRL | GRPO | Single | Outcome | Single | Math | Rule/External | Yes |
| ReMA | PPO | Multi | Outcome | Multi | Math | Rule | No |
| Agentic-Reasoning | Custom | Single | Process | Multi | QA/Math | External | Web Browsing |
| SimpleTIR | PPO/GRPO (with extensions) | Single | Outcome | Multi | Math/Coding | All | Yes |
| openrlhf_async_pipline | PPO/REINFORCE++/DPO/RLOO | Single | Outcome | Multi | Dialogue/Reasoning/QA | All | No |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| MEM1 |  | 2025.7 | MIT | Paper | veRL (based on Search-R1) |
| Memento |  | 2025.6 | UCL, Huawei | Paper | Custom |
| MemAgent |  | 2025.6 | ByteDance, Tsinghua-SIA | Paper | veRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| MEM1 | PPO/GRPO | Single | Outcome | Multi | WebShop/GSM8K/QA | Rule/Model | Yes |
| Memento | Soft Q-Learning | Single | Outcome | Multi | Research/QA/Code/Web | External/Rule | Yes |
| MemAgent | PPO/GRPO/DPO | Multi | Outcome | Multi | Long-context QA | Rule/Model/External | Yes |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| Embodied-R1 |  | 2025.6 | Tianjin University | Paper | veRL |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| Embodied-R1 | GRPO | Single | Outcome | Single | Grounding/Waypoint | Rule | No |

| Github Repo | 🌟 Stars | Date | Org | Paper Link | RL Framework |
|---|---|---|---|---|---|
| MMedAgent-RL |  | 2025.8 | Unknown | Paper | Unknown |
| DoctorAgent-RL |  | 2025.5 | UCAS/CAS/USTC | Paper | RAGEN |
| Biomni |  | 2025.3 | Stanford University (SNAP) | Paper | Custom |

📋 Click to view technical details

| Github Repo | RL Algorithm | Single/Multi Agent | Outcome/Process Reward | Single/Multi Turn | Task | Reward Type | Tool usage |
|---|---|---|---|---|---|---|---|
| MMedAgent-RL | Unknown | Multi | Unknown | Unknown | Unknown | Unknown | Unknown |
| DoctorAgent-RL | GRPO | Multi | Both | Multi | Consultation/Diagnosis | Model/Rule | No |
| Biomni | TBD | Single | TBD | Single | scRNAseq/CRISPR/ADMET/Knowledge | TBD | Yes |

| Github Repo | 🌟 Stars | Date | Org | Task |
|---|---|---|---|---|
| CompassVerifier |  | 2025.7 | Shanghai AI Lab | Knowledge/Math/Science/GeneralReasoning |
| Mind2Web-2 |  | 2025.6 | Ohio State University | Web |
| gem |  | 2025.5 | Sea AI Lab | Math/Code/Game/QA |
| MLE-Dojo |  | 2025.5 | GIT, Stanford | MLE |
| atropos |  | 2025.4 | Nous Research | Game/Code/Tool |
| InternBootcamp |  | 2025.4 | InternBootcamp | Coding/QA/Game |
| loong |  | 2025.3 | CAMEL-AI.org | RLVR |
| reasoning-gym |  | 2025.1 | open-thought | Math/Game |
| llmgym |  | 2025.1 | tensorzero | TextGame/Tool |
| debug-gym |  | 2024.11 | Microsoft Research | Debugging/Game/Code |
| gym-llm |  | 2024.8 | Rodrigo Sánchez Molina | Control/Game |
| AgentGym |  | 2024.6 | Fudan | Web/Game |
| tau-bench |  | 2024.6 | Sierra | Tool |
| appworld |  | 2024.6 | Stony Brook University | Phone Use |
| android_world |  | 2024.5 | Google Research | Phone Use |
| TheAgentCompany |  | 2024.3 | CMU, Duke | Coding |
| LlamaGym |  | 2024.3 | Rohan Pandey | Game |
| visualwebarena |  | 2024.1 | CMU | Web |
| LMRL-Gym |  | 2023.12 | UC Berkeley | Game |
| OSWorld |  | 2023.10 | HKU, CMU, Salesforce, Waterloo | Computer Use |
| webarena |  | 2023.7 | CMU | Web |
| AgentBench |  | 2023.7 | Tsinghua University | Game/Web/QA/Tool |
| WebShop |  | 2022.7 | Princeton-NLP | Web |
| ScienceWorld |  | 2022.3 | AllenAI | TextGame/ScienceQA |
| alfworld |  | 2020.10 | Microsoft, CMU, UW | Embodied |
| factorio-learning-environment |  | 2021.6 | JackHopkins | Game |
| jericho |  | 2018.10 | Microsoft, GIT | TextGame |
| TextWorld |  | 2018.6 | Microsoft Research | TextGame |

- JoyAgents-R1: Joint Evolution Dynamics for Versatile Multi-LLM Agents with Reinforcement Learning

- Shop-R1: Rewarding LLMs to Simulate Human Behavior in Online Shopping via Reinforcement Learning

- Training Long-Context, Multi-Turn Software Engineering Agents with Reinforcement Learning

- Acting Less is Reasoning More! Teaching Model to Act Efficiently

- Agentic Reasoning and Tool Integration for LLMs via Reinforcement Learning

- ComputerRL: Scaling End-to-End Online Reinforcement Learning for Computer Use Agents

- Atom-Searcher: Enhancing Agentic Deep Research via Fine-Grained Atomic Thought Reward

- MUA-RL: Multi-Turn User-Interacting Agent Reinforcement Learning for Agentic Tool Use

- Understanding Tool-Integrated Reasoning

- Memory-R1: Enhancing Large Language Model Agents to Manage and Utilize Memories via Reinforcement Learning

- Encouraging Good Processes Without the Need for Good Answers: Reinforcement Learning for LLM Agent Planning

- SFR-DeepResearch: Towards Effective Reinforcement Learning for Autonomously Reasoning Single Agents

- WebExplorer: Explore and Evolve for Training Long-Horizon Web Agents

- EnvX: Agentize Everything with Agentic AI

- UI-TARS-2 Technical Report: Advancing GUI Agent with Multi-Turn Reinforcement Learning

- UI-Venus Technical Report: Building High-performance UI Agents with RFT

If you find this repository useful, please consider citing it:

@misc{agentsMeetRL,
  title={When LLM Agents Meet Reinforcement Learning: A Comprehensive Survey},
  author={AgentsMeetRL Contributors},
  year={2025},
  url={https://github.com/thinkwee/agentsMeetRL}
}

Made with ❤️ by the AgentsMeetRL community


Alternative AI tools for AgentsMeetRL

Similar Open Source Tools


trae-agent

Trae-agent is a Python library for building and training reinforcement learning agents. It provides a simple and flexible framework for implementing various reinforcement learning algorithms and experimenting with different environments. With Trae-agent, users can easily create custom agents, define reward functions, and train them on a variety of tasks. The library also includes utilities for visualizing agent performance and analyzing training results, making it a valuable tool for both beginners and experienced researchers in the field of reinforcement learning.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

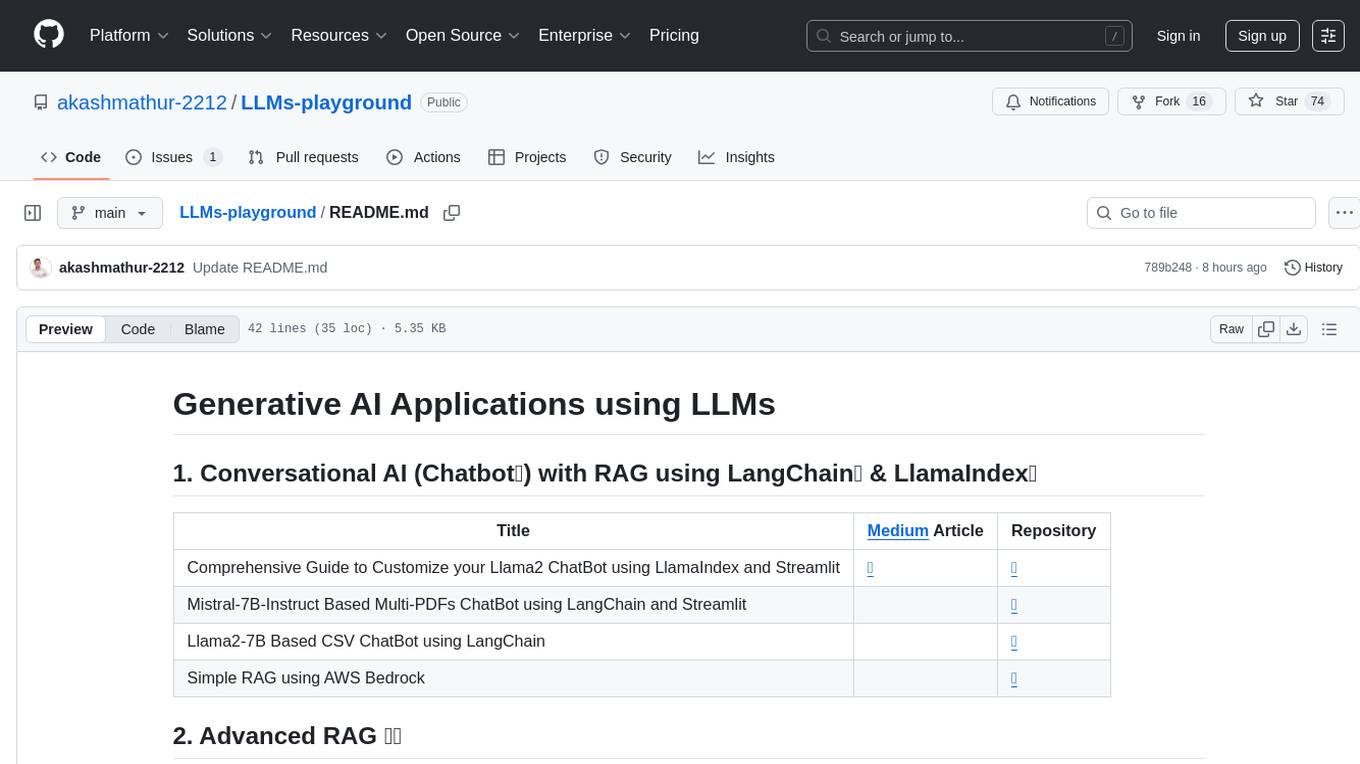

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

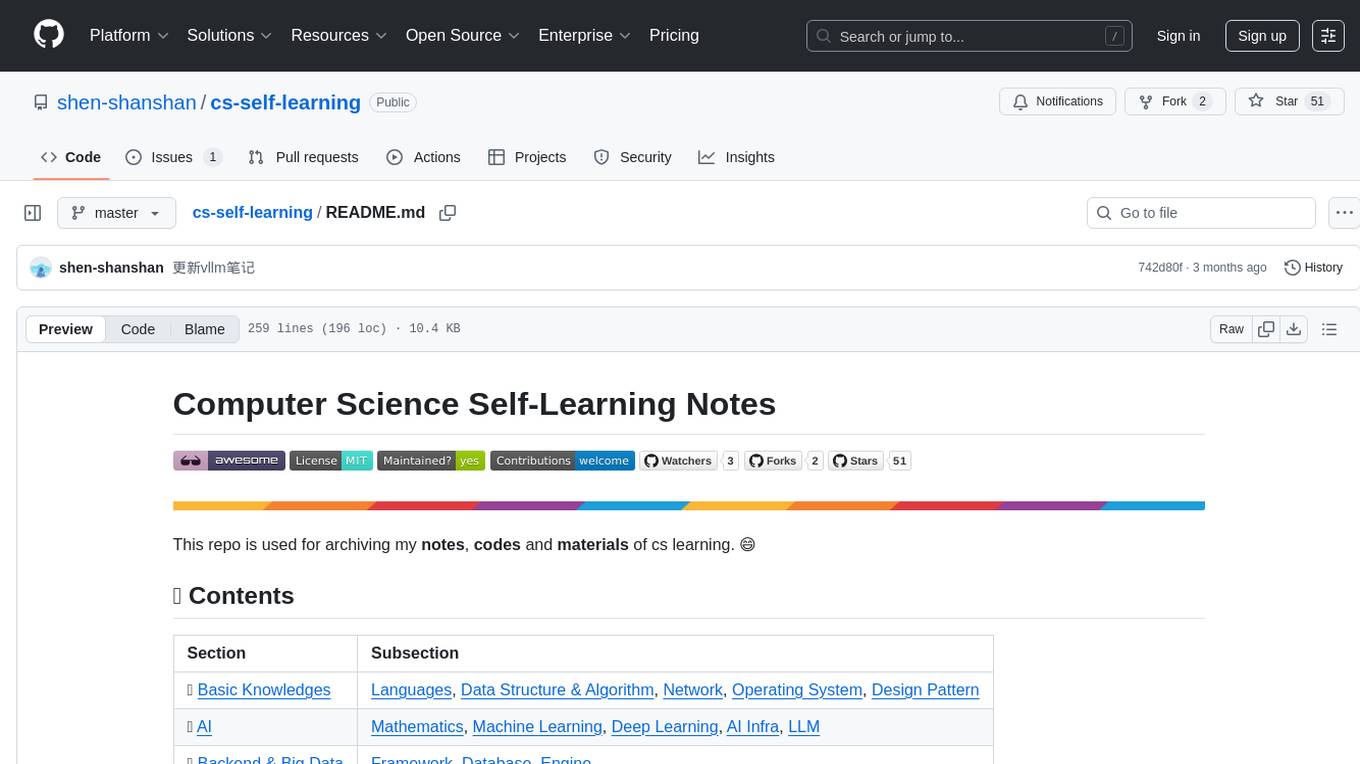

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

llm_rl

llm_rl is a repository that combines large language model (LLM) and reinforcement learning (RL) techniques. It likely focuses on using language models in reinforcement learning tasks, such as natural language understanding and generation. The repository may contain implementations of algorithms that leverage both LLMs and RL to improve performance in various tasks. Developers interested in exploring the intersection of language models and reinforcement learning may find this repository useful for research and experimentation.

bisheng

Bisheng is a leading open-source **large model application development platform** that empowers and accelerates the development and deployment of large model applications, helping users enter the next generation of application development with the best possible experience.

build-your-own-x-machine-learning

This repository provides a step-by-step guide for building your own machine learning models from scratch. It covers various machine learning algorithms and techniques, including linear regression, logistic regression, decision trees, and neural networks. The code examples are written in Python and include detailed explanations to help beginners understand the concepts behind machine learning. By following the tutorials in this repository, you can gain a deeper understanding of how machine learning works and develop your own models for different applications.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

ms-agent

MS-Agent is a lightweight, extensible framework designed to empower agents with autonomous exploration capabilities. It supports tasks such as code generation, data analysis, deep research, and tool calling with MCP support, as well as multi-agent interactions.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.