Gym

Build RL environments for LLM training

Stars: 645

Gym (NeMo Gym) is a library for building reinforcement learning (RL) training environments for large language models (LLMs). It provides scaffolding for environment development, tooling to scale rollout collection, and integration with RL training frameworks.

README:

NeMo Gym is a library for building reinforcement learning (RL) training environments for large language models (LLMs). It provides infrastructure to develop environments, scale rollout collection, and integrate seamlessly with your preferred training framework.

NeMo Gym is a component of the NVIDIA NeMo Framework, NVIDIA’s GPU-accelerated platform for building and training generative AI models.

- Scaffolding and patterns to accelerate environment development: multi-step, multi-turn, and user modeling scenarios

- Contribute environments without expert knowledge of the entire RL training loop

- Test environments and throughput end-to-end, independent of the RL training loop

- Interoperable with existing environments, systems, and RL training frameworks

- Growing collection of training environments and datasets for Reinforcement Learning from Verifiable Reward (RLVR)

> [!IMPORTANT]
> NeMo Gym is currently in early development. You should expect evolving APIs, incomplete documentation, and occasional bugs. We welcome contributions and feedback. For any changes, please open an issue first to kick off discussion!

NeMo Gym is designed to run on standard development machines:

- GPU: Not required for NeMo Gym library operation
  - GPU may be needed for specific resource servers or model inference (see individual server documentation)
- CPU: Any modern x86_64 or ARM64 processor (e.g., Intel, AMD, Apple Silicon)
- RAM: Minimum 8 GB (16 GB+ recommended for larger environments)
- Storage: Minimum 5 GB free disk space for installation and basic usage
- Operating System:
  - Linux (Ubuntu 20.04+, or equivalent)
  - macOS (11.0+ for x86_64, 12.0+ for Apple Silicon)
  - Windows (via WSL2)
- Python: 3.12 or higher
- Git: For cloning the repository
- Internet Connection: Required for downloading dependencies and API access
- API Keys: OpenAI API key with available credits (for the quickstart examples)
  - Other model providers supported (Azure OpenAI, self-hosted models via vLLM)
- Ray: Automatically installed as a dependency (no separate setup required)
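A few of the requirements above (Python version, free disk space, Git on the PATH) can be checked before installing. The following is a minimal sketch, not part of NeMo Gym; the function name `preflight` and its parameters are hypothetical, and the thresholds are copied from the list above.

```python
import shutil
import sys

MIN_PYTHON = (3, 12)  # "Python: 3.12 or higher" from the requirements list
MIN_FREE_GB = 5       # "Minimum 5 GB free disk space" from the requirements list


def preflight(path=".", python_version=None, free_bytes=None, has_git=None):
    """Return a list of problems; an empty list means every check passed.

    The keyword arguments exist only so the checks can be exercised with
    fixed values; by default the real system state is inspected.
    """
    python_version = tuple(python_version or sys.version_info[:2])
    if free_bytes is None:
        free_bytes = shutil.disk_usage(path).free
    if has_git is None:
        has_git = shutil.which("git") is not None

    problems = []
    if python_version < MIN_PYTHON:
        problems.append(
            f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ required, "
            f"found {python_version[0]}.{python_version[1]}"
        )
    if free_bytes < MIN_FREE_GB * 1024**3:
        problems.append(f"less than {MIN_FREE_GB} GB of free disk space")
    if not has_git:
        problems.append("git not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in preflight():
        print("WARNING:", problem)
```

RAM, operating system, and API key checks are left out on purpose, since they depend on the environments and model provider you choose.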

```bash
# Clone the repository
git clone git@github.com:NVIDIA-NeMo/Gym.git
cd Gym

# Install UV (Python package manager)
curl -LsSf https://astral.sh/uv/install.sh | sh
source $HOME/.local/bin/env

# Create virtual environment
uv venv --python 3.12
source .venv/bin/activate

# Install NeMo Gym
uv sync --extra dev --group docs
```

Create an env.yaml file that contains your OpenAI API key and the policy model you want to use. Replace your-openai-api-key with your actual key. This file helps keep your secrets out of version control while still making them available to NeMo Gym.

```bash
echo "policy_base_url: https://api.openai.com/v1
policy_api_key: your-openai-api-key
policy_model_name: gpt-4.1-2025-04-14" > env.yaml
```

> [!NOTE]
> We use GPT-4.1 in this quickstart because it provides low latency (no reasoning step) and works reliably out-of-the-box. NeMo Gym is not limited to OpenAI models: you can use self-hosted models via vLLM or any OpenAI-compatible inference server. See the documentation for details.
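Before starting the servers, it can help to confirm that env.yaml holds the three keys written above and that the placeholder key was replaced. The sketch below is an illustration only: `parse_flat_yaml` and `check_env` are hypothetical helpers, not NeMo Gym functions, and the parser handles only flat `key: value` lines rather than general YAML.

```python
from pathlib import Path

# Key names taken from the env.yaml example above.
REQUIRED_KEYS = ("policy_base_url", "policy_api_key", "policy_model_name")
PLACEHOLDER = "your-openai-api-key"


def parse_flat_yaml(text):
    """Parse flat 'key: value' lines. This is NOT a general YAML parser."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so URLs in the value stay intact.
        key, sep, value = line.partition(":")
        if sep:
            values[key.strip()] = value.strip()
    return values


def check_env(text):
    """Return a list of problems found in the env.yaml text."""
    values = parse_flat_yaml(text)
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if not values.get(key)]
    if values.get("policy_api_key") == PLACEHOLDER:
        problems.append("policy_api_key still holds the placeholder value")
    return problems


if __name__ == "__main__":
    env_path = Path("env.yaml")
    if env_path.exists():
        for problem in check_env(env_path.read_text(encoding="utf-8")):
            print("WARNING:", problem)
    else:
        print("WARNING: env.yaml not found in the current directory")
```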

Terminal 1 (start servers):

```bash
# Start servers (this will keep running)
config_paths="resources_servers/example_single_tool_call/configs/example_single_tool_call.yaml,\
responses_api_models/openai_model/configs/openai_model.yaml"
ng_run "+config_paths=[${config_paths}]"
```

Terminal 2 (interact with agent):

```bash
# In a NEW terminal, activate environment
source .venv/bin/activate

# Interact with your agent
python responses_api_agents/simple_agent/client.py
```

Terminal 2 (keep servers running in Terminal 1):

```bash
# Create a simple dataset with one query
echo '{"responses_create_params":{"input":[{"role":"developer","content":"You are a helpful assistant."},{"role":"user","content":"What is the weather in Seattle?"}]}}' > weather_query.jsonl

# Collect verified rollouts
ng_collect_rollouts \
    +agent_name=example_single_tool_call_simple_agent \
    +input_jsonl_fpath=weather_query.jsonl \
    +output_jsonl_fpath=weather_rollouts.jsonl

# View the result
cat weather_rollouts.jsonl | python -m json.tool
```

This generates training data with verification scores!
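For more than one query, the input file can be generated programmatically instead of with `echo`. The sketch below builds records in the same shape as the quickstart query and pretty-prints a JSONL file one line at a time. The helper names, the second question, and the file name `weather_queries.jsonl` are hypothetical; only the `responses_create_params` / `input` layout comes from the example above.

```python
import json


def make_record(user_text, developer_text="You are a helpful assistant."):
    """Build one input record shaped like the quickstart query."""
    return {
        "responses_create_params": {
            "input": [
                {"role": "developer", "content": developer_text},
                {"role": "user", "content": user_text},
            ]
        }
    }


def write_jsonl(path, records):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def read_jsonl(path):
    """Read a JSONL file into a list of objects, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    # Hypothetical questions for illustration.
    questions = ["What is the weather in Seattle?", "What is the weather in Boston?"]
    write_jsonl("weather_queries.jsonl", [make_record(q) for q in questions])
    for record in read_jsonl("weather_queries.jsonl"):
        print(json.dumps(record, indent=2))
```

Reading line by line also works for files with several records, where piping the whole file through `python -m json.tool` would fail because the file as a whole is not a single JSON document.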

In Terminal 1, where the servers are running, press Ctrl+C to stop the ng_run process.
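The `+config_paths` override passed to `ng_run` in Terminal 1 is a comma-separated list of YAML paths inside square brackets. If you launch servers from a script, a small helper can assemble that string. This is a sketch under that assumption; `config_paths_override` is a hypothetical name, not a NeMo Gym function.

```python
import shlex


def config_paths_override(paths):
    """Join config paths into a '+config_paths=[a,b]' override string."""
    cleaned = [p.strip() for p in paths if p.strip()]
    if not cleaned:
        raise ValueError("at least one config path is required")
    return "+config_paths=[" + ",".join(cleaned) + "]"


if __name__ == "__main__":
    override = config_paths_override(
        [
            "resources_servers/example_single_tool_call/configs/example_single_tool_call.yaml",
            "responses_api_models/openai_model/configs/openai_model.yaml",
        ]
    )
    # shlex.quote keeps the brackets from being interpreted by the shell.
    print("ng_run", shlex.quote(override))
```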

Now that you can generate rollouts, choose your path:

- Use an existing training environment: Browse the Available Resource Servers below to find a training-ready environment that matches your goals.
- Build a custom training environment: Implement or integrate existing tools and define task verification logic. Get started with the Creating a Resource Server tutorial.

NeMo Gym includes a curated collection of resource servers for training and evaluation across multiple domains:

Purpose: Demonstrate NeMo Gym patterns and concepts.

| Name | Demonstrates | Config | README |
|---|---|---|---|
| Multi Step | Multi-step tool calling | example_multi_step.yaml | README |
| Session State Mgmt | Session state management (in-memory) | example_session_state_mgmt.yaml | README |
| Single Tool Call | Basic single-step tool calling | example_single_tool_call.yaml | README |

Purpose: Training-ready environments with curated datasets.

> [!TIP]
> Each resource server includes example data, configuration files, and tests. See each server's README for details.

| Resource Server | Domain | Dataset | Description | Value | Config | Train | Validation | License |
|---|---|---|---|---|---|---|---|---|
| Calendar | agent | Nemotron-RL-agent-calendar_scheduling | - | - | config | ✓ | ✓ | Apache 2.0 |
| Google Search | agent | Nemotron-RL-knowledge-web_search-mcqa | Multi-choice question answering problems with search tools integrated | Improve knowledge-related benchmarks with search tools | config | ✓ | - | Apache 2.0 |
| Math Advanced Calculations | agent | Nemotron-RL-math-advanced_calculations | An instruction following math environment with counter-intuitive calculators | Improve instruction following capabilities in specific math environments | config | ✓ | - | Apache 2.0 |
| Workplace Assistant | agent | Nemotron-RL-agent-workplace_assistant | Workplace assistant multi-step tool-using environment | Improve multi-step tool use capability | config | ✓ | ✓ | Apache 2.0 |
| Code Gen | coding | nemotron-RL-coding-competitive_coding | - | - | config | ✓ | ✓ | Apache 2.0 |
| Mini Swe Agent | coding | SWE-Gym | A software development environment with mini-swe-agent orchestration | Improve software development capabilities, like SWE-bench | config | ✓ | ✓ | MIT |
| Instruction Following | instruction_following | Nemotron-RL-instruction_following | Instruction following datasets targeting IFEval and IFBench style instruction following capabilities | Improve IFEval and IFBench | config | ✓ | - | Apache 2.0 |
| Structured Outputs | instruction_following | Nemotron-RL-instruction_following-structured_outputs | Check if responses are following structured output requirements in prompts | Improve instruction following capabilities | config | ✓ | ✓ | Apache 2.0 |
| Mcqa | knowledge | Nemotron-RL-knowledge-mcqa | Multi-choice question answering problems | Improve benchmarks like MMLU / GPQA / HLE | config | ✓ | - | Apache 2.0 |
| Math With Judge | math | Nemotron-RL-math-OpenMathReasoning | Math dataset with math-verify and LLM-as-a-judge | Improve math capabilities including AIME 24 / 25 | config | ✓ | ✓ | Creative Commons Attribution 4.0 International |
| Math With Judge | math | Nemotron-RL-math-stack_overflow | - | - | config | ✓ | ✓ | Creative Commons Attribution-ShareAlike 4.0 International |

- Documentation - Technical reference docs

- Tutorials - Hands-on tutorials and practical examples

We'd love your contributions! Here's how to get involved:

- Report Issues - Bug reports and feature requests

- Contributing Guide - How to contribute code, docs, new environments, or training framework integrations

If you use NeMo Gym in your research, please cite it using the following BibTeX entry:

```bibtex
@misc{nemo-gym,
  title = {NeMo Gym: An Open Source Library for Scaling Reinforcement Learning Environments for LLM},
  howpublished = {\url{https://github.com/NVIDIA-NeMo/Gym}},
  author = {NVIDIA},
  year = {2025},
  note = {GitHub repository},
}
```

Alternative AI tools for Gym

Similar Open Source Tools


deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

trae-agent

Trae-agent is a Python library for building and training reinforcement learning agents. It provides a simple and flexible framework for implementing various reinforcement learning algorithms and experimenting with different environments. With Trae-agent, users can easily create custom agents, define reward functions, and train them on a variety of tasks. The library also includes utilities for visualizing agent performance and analyzing training results, making it a valuable tool for both beginners and experienced researchers in the field of reinforcement learning.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

multi-agent-ralph-loop

Multi-agent RALPH (Reinforcement Learning with Probabilistic Hierarchies) Loop is a framework for multi-agent reinforcement learning research. It provides a flexible and extensible platform for developing and testing multi-agent reinforcement learning algorithms. The framework supports various environments, including grid-world environments, and allows users to easily define custom environments. Multi-agent RALPH Loop is designed to facilitate research in the field of multi-agent reinforcement learning by providing a set of tools and utilities for experimenting with different algorithms and scenarios.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

verl

veRL is a flexible and efficient reinforcement learning training framework designed for large language models (LLMs). It allows easy extension of diverse RL algorithms, seamless integration with existing LLM infrastructures, and flexible device mapping. The framework achieves state-of-the-art throughput and efficient actor model resharding with 3D-HybridEngine. It supports popular HuggingFace models and is suitable for users working with PyTorch FSDP, Megatron-LM, and vLLM backends.

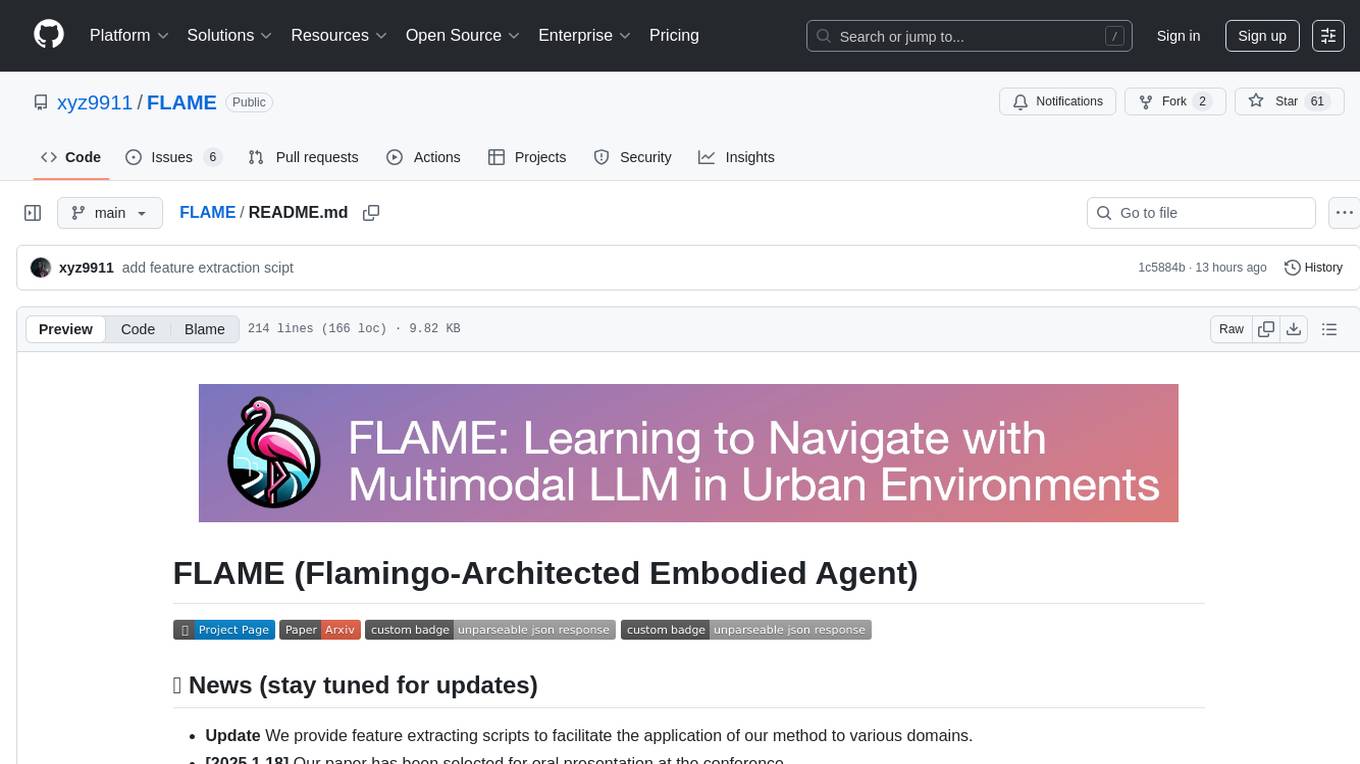

FLAME

FLAME is a lightweight and efficient deep learning framework designed for edge devices. It provides a simple and user-friendly interface for developing and deploying deep learning models on resource-constrained devices. With FLAME, users can easily build and optimize neural networks for tasks such as image classification, object detection, and natural language processing. The framework supports various neural network architectures and optimization techniques, making it suitable for a wide range of applications in the field of edge computing.

For similar tasks


lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

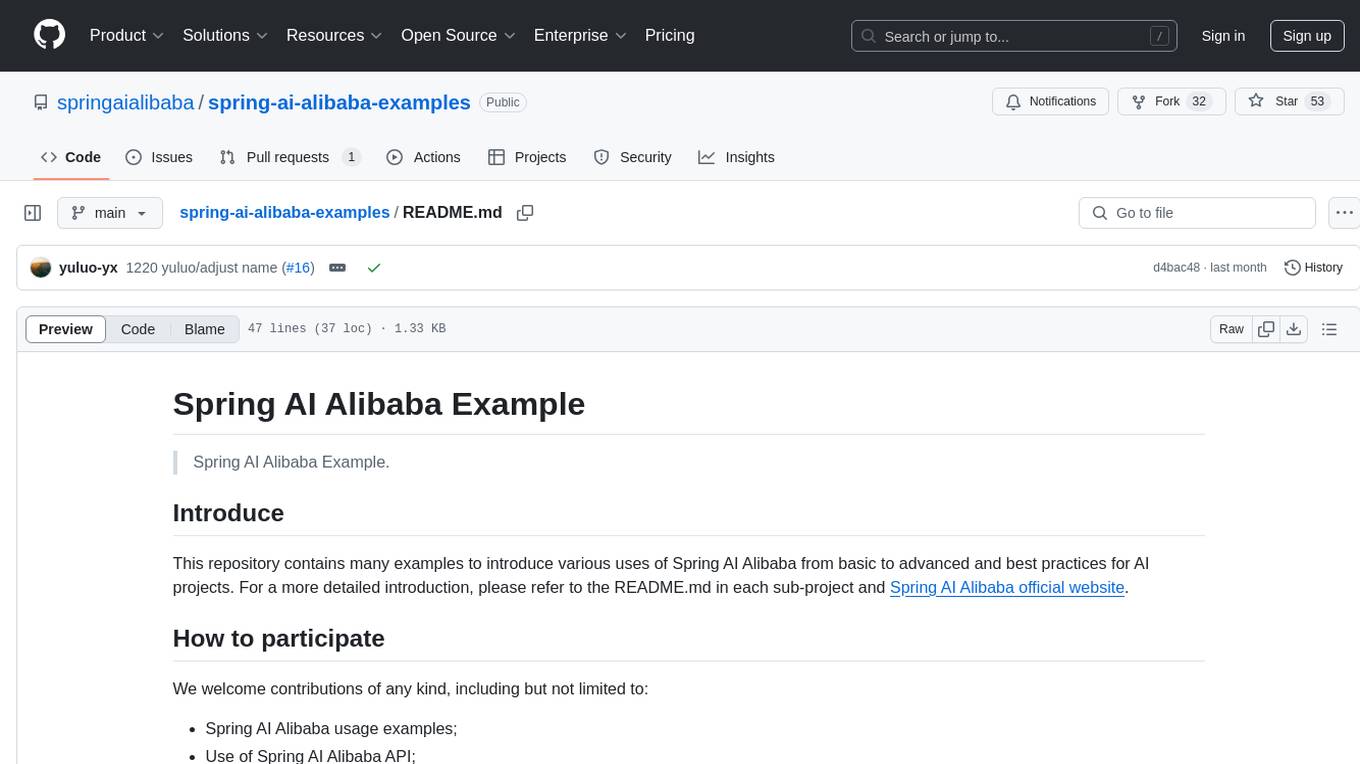

spring-ai-alibaba-examples

This repository contains examples showcasing various uses of Spring AI Alibaba, from basic to advanced, and best practices for AI projects. It welcomes contributions related to Spring AI Alibaba usage examples, API usage, Spring AI usage examples, and best practices for AI projects. The project structure is designed to modularize functions for easy access and use.

brain4j

Brain4J is a lightweight, performant, and open-source machine learning framework for Java. Designed with portability and speed in mind, it is optimized for high performance and ideal for those looking to implement machine learning solutions in pure Java. The framework provides tools and functionalities to facilitate the development of machine learning models within Java applications, offering ease of use and efficiency.

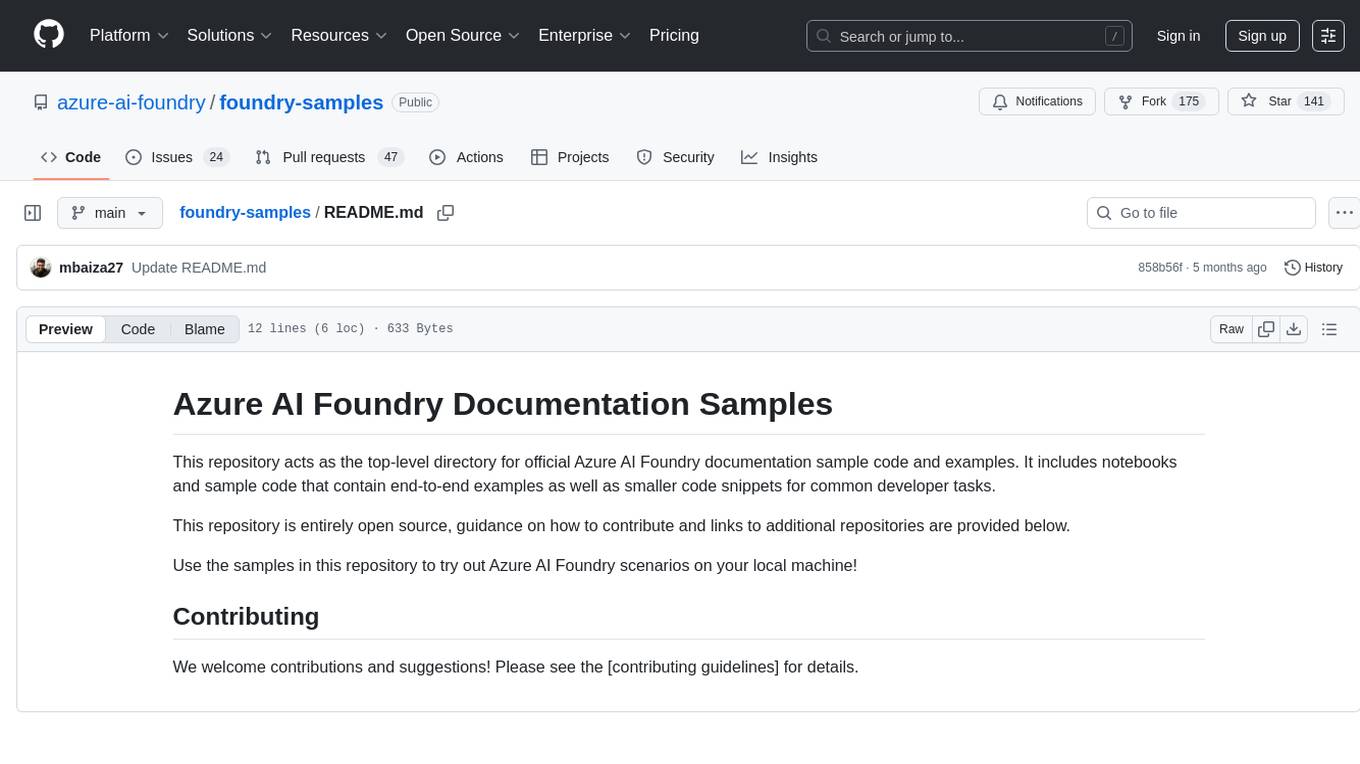

foundry-samples

The 'foundry-samples' repository serves as the main directory for official Azure AI Foundry documentation sample code and examples. It contains notebooks and code snippets for various developer tasks, offering both end-to-end examples and smaller snippets. The repository is open source, encouraging contributions and providing guidance on how to contribute.

Co-LLM-Agents

This repository contains code for building cooperative embodied agents modularly with large language models. The agents are trained to perform tasks in two different environments: ThreeDWorld Multi-Agent Transport (TDW-MAT) and Communicative Watch-And-Help (C-WAH). TDW-MAT is a multi-agent environment where agents must transport objects to a goal position using containers. C-WAH is an extension of the Watch-And-Help challenge, which enables agents to send messages to each other. The code in this repository can be used to train agents to perform tasks in both of these environments.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

asreview

The ASReview project implements active learning for systematic reviews, utilizing AI-aided pipelines to assist in finding relevant texts for search tasks. It accelerates the screening of textual data with minimal human input, saving time and increasing output quality. The software offers three modes: Oracle for interactive screening, Exploration for teaching purposes, and Simulation for evaluating active learning models. ASReview LAB is designed to support decision-making in any discipline or industry by improving efficiency and transparency in screening large amounts of textual data.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.