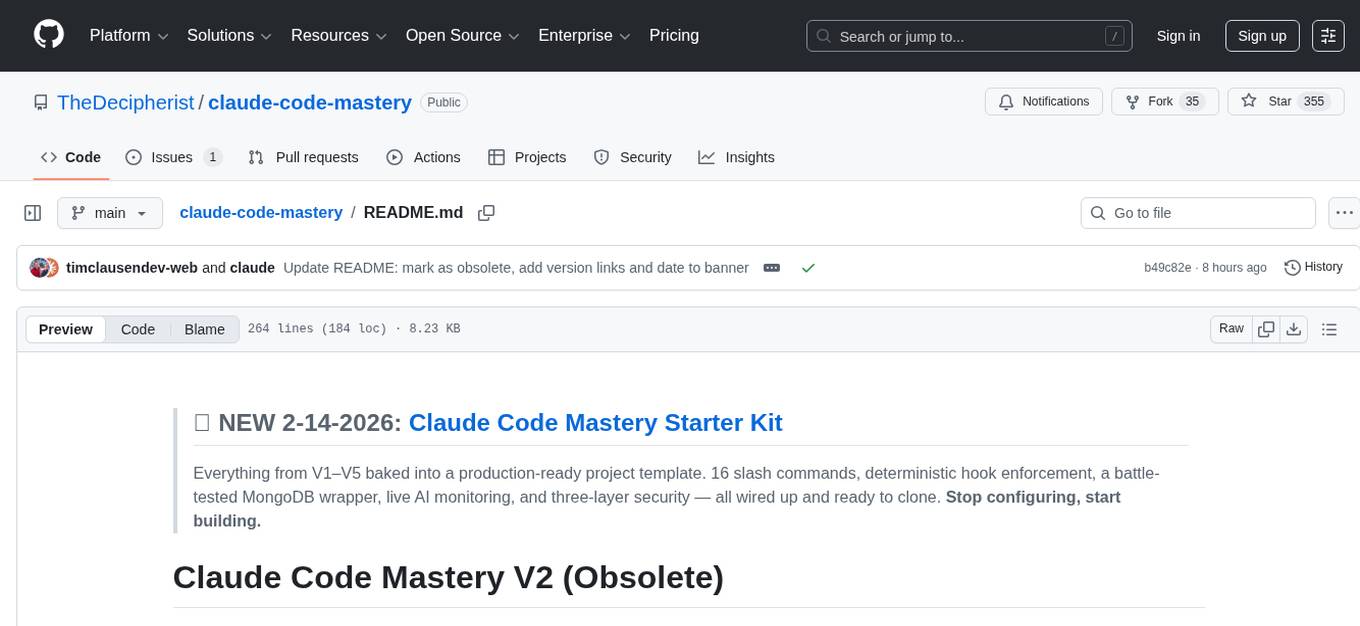

claude-code-mastery

The complete guide to Claude Code: CLAUDE.md, hooks, skills, MCP servers, and commands

Stars: 353

Claude Code Mastery is a comprehensive tool for maximizing Claude Code, offering a production-ready project template with 16 slash commands, deterministic hook enforcement, MongoDB wrapper, live AI monitoring, and three-layer security. It provides a security gatekeeper, project scaffolding blueprint, MCP server integration, workflow automation through custom commands, and emphasizes the importance of single-purpose chats to avoid performance degradation.

README:

🚀 NEW 2-14-2026: Claude Code Mastery Starter Kit

Everything from V1–V5 baked into a production-ready project template. 16 slash commands, deterministic hook enforcement, a battle-tested MongoDB wrapper, live AI monitoring, and three-layer security — all wired up and ready to clone. Stop configuring, start building.

The complete guide to maximizing Claude Code: Global CLAUDE.md, MCP Servers, Commands, Hooks, Skills, and Why Single-Purpose Chats Matter.

This version is now obsolete. Please use the new Claude Code Mastery Starter Kit instead.

Previous versions:

TL;DR: Your global `~/.claude/CLAUDE.md` is a security gatekeeper AND project scaffolding blueprint. MCP servers extend Claude's capabilities. Custom commands automate workflows. Hooks enforce rules deterministically (where CLAUDE.md can fail). Skills package reusable expertise. And research shows mixing topics in a single chat causes 39% performance degradation.

```bash
# Clone this repo
git clone https://github.com/TheDecipherist/claude-code-mastery.git
cd claude-code-mastery

# Copy hooks to your Claude config
mkdir -p ~/.claude/hooks
cp hooks/* ~/.claude/hooks/
chmod +x ~/.claude/hooks/*.sh

# Copy the settings template (review and customize first!)
cp templates/settings.json ~/.claude/settings.json

# Copy skills
mkdir -p ~/.claude/skills
cp -r skills/* ~/.claude/skills/
```

📱 Read V3 on GitHub Pages | 📄 View GUIDE.md (V3)

| Part | Topic | Key Takeaway |
|---|---|---|
| 1 | Global CLAUDE.md as Security Gatekeeper | Define once, inherit everywhere |
| 2 | Project Scaffolding Rules | Every project follows same structure |
| 3 | MCP Servers | External tool integrations |
| 4 | Context7 | Live documentation access |
| 5 | Custom Commands | Workflow automation |
| 6 | Single-Purpose Chats | 39% degradation from topic mixing |
| 7 | Skills & Hooks | Enforcement over suggestion |

```
claude-code-mastery/
├── GUIDE.md                          # The complete guide
├── templates/
│   ├── global-claude.md              # ~/.claude/CLAUDE.md template
│   ├── project-claude.md             # ./CLAUDE.md starter
│   ├── settings.json                 # Hook configuration template
│   └── .gitignore                    # Recommended .gitignore
├── hooks/
│   ├── block-secrets.py              # PreToolUse: Block .env access
│   ├── block-dangerous-commands.sh   # PreToolUse: Block rm -rf, etc.
│   ├── end-of-turn.sh                # Stop: Quality gates
│   ├── after-edit.sh                 # PostToolUse: Run formatters
│   └── notify.sh                     # Notification: Desktop alerts
├── skills/
│   ├── commit-messages/              # Generate conventional commits
│   │   └── SKILL.md
│   └── security-audit/               # Security vulnerability checks
│       └── SKILL.md
└── commands/
    ├── new-project.md                # /new-project scaffold
    ├── security-check.md             # /security-check audit
    └── pre-commit.md                 # /pre-commit quality gates
```

- Claude Code installed

- Python 3.8+ (for Python hooks)

- `jq` (for JSON parsing in shell hooks)

```bash
# Create hooks directory
mkdir -p ~/.claude/hooks

# Copy hook scripts
cp hooks/block-secrets.py ~/.claude/hooks/
cp hooks/block-dangerous-commands.sh ~/.claude/hooks/
cp hooks/end-of-turn.sh ~/.claude/hooks/

# Make shell scripts executable
chmod +x ~/.claude/hooks/*.sh
```

```bash
# If you don't have settings.json yet
cp templates/settings.json ~/.claude/settings.json

# If you already have settings.json, merge the hooks section manually
```
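The manual merge mentioned above can also be scripted. The following is a minimal sketch, not part of this repo: `merge_hooks` is a hypothetical helper, and it assumes both files keep their hooks under a top-level `"hooks"` key that maps an event name (such as `PreToolUse`) to a list of entries. Check that against your own `settings.json` before relying on it.

```python
import json
from copy import deepcopy
from typing import Any, Dict


def merge_hooks(existing: Dict[str, Any], template: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `existing` with the template's hook entries added.

    ASSUMPTION: settings look like {"hooks": {"<EventName>": [entry, ...]}}.
    Everything outside the "hooks" key is left untouched.
    """
    merged = deepcopy(existing)
    hooks = merged.setdefault("hooks", {})
    for event_name, entries in template.get("hooks", {}).items():
        current = hooks.setdefault(event_name, [])
        for entry in entries:
            if entry not in current:  # skip entries that are already present
                current.append(deepcopy(entry))
    return merged


# Illustrative data only; "someOtherSetting" is a made-up key.
existing_settings = {
    "someOtherSetting": True,
    "hooks": {"Stop": [{"hooks": [{"type": "command",
                                   "command": "~/.claude/hooks/end-of-turn.sh"}]}]},
}
template_settings = {
    "hooks": {"PreToolUse": [{"hooks": [{"type": "command",
                                         "command": "python3 ~/.claude/hooks/block-secrets.py"}]}]},
}

print(json.dumps(merge_hooks(existing_settings, template_settings), indent=2))
```

The function never modifies its inputs, so you can inspect the merged result before writing it back to `~/.claude/settings.json`.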

```bash
# Create skills directory
mkdir -p ~/.claude/skills

# Copy skills
cp -r skills/* ~/.claude/skills/
```

```bash
# Copy template
cp templates/global-claude.md ~/.claude/CLAUDE.md

# Customize with your details
$EDITOR ~/.claude/CLAUDE.md
```

```bash
# Start Claude Code
claude

# Check hooks are loaded
/hooks

# Check skills are loaded
/skills
```

CLAUDE.md rules are suggestions. Hooks are enforcement.

```
CLAUDE.md saying "don't edit .env"
  → Parsed by LLM
  → Weighed against other context
  → Maybe followed

PreToolUse hook blocking .env edits
  → Always runs
  → Returns exit code 2
  → Operation blocked. Period.
```

Real-world example from a community member:

"My PreToolUse hook blocks Claude from accessing secrets (.env files) a few times per week. Claude does not respect CLAUDE.md rules very rigorously."

| Code | Meaning |
|---|---|
| 0 | Success, allow operation |
| 1 | Error (shown to user only) |
| 2 | Block operation, feed stderr to Claude |
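To make the exit codes concrete, here is a minimal sketch of what a PreToolUse hook along the lines of `block-secrets.py` might look like. It is not the script shipped in this repo. It assumes the hook receives a JSON event on stdin and that file tools pass their target as `tool_input.file_path`. The names `decide` and `main` are hypothetical.

```python
"""Sketch of a PreToolUse hook that blocks access to .env files."""
import json
import sys
from pathlib import PurePosixPath
from typing import TextIO, Tuple

ALLOW, ERROR, BLOCK = 0, 1, 2  # exit codes from the table above


def decide(event: dict) -> Tuple[int, str]:
    """Return (exit_code, message) for one hook event."""
    # ASSUMPTION: the target path arrives as event["tool_input"]["file_path"].
    tool_input = event.get("tool_input") or {}
    file_path = str(tool_input.get("file_path", ""))
    name = PurePosixPath(file_path).name
    if name == ".env" or name.startswith(".env."):
        return BLOCK, f"Blocked: {file_path} may contain secrets."
    return ALLOW, ""


def main(stdin: TextIO = sys.stdin, stderr: TextIO = sys.stderr) -> int:
    """Read one event, print any message to stderr, return the exit code."""
    try:
        event = json.load(stdin)
    except json.JSONDecodeError:
        return ERROR  # malformed input: report an error, do not block
    if not isinstance(event, dict):
        return ERROR
    code, message = decide(event)
    if message:
        print(message, file=stderr)  # with exit code 2, stderr is fed to Claude
    return code

# As a standalone hook script, the file would end with: sys.exit(main())
```

This sketch only inspects file paths, so a shell command such as `cat .env` would need a separate check on the command string.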

Research consistently shows topic mixing destroys accuracy:

| Study | Finding |
|---|---|
| Multi-turn conversations | 39% performance drop when mixing topics |
| Context rot | Recall decreases as context grows |
| Context pollution | 2% early misalignment → 40% failure rate |

Golden Rule: One Task, One Chat

Contributions welcome! Please:

- Fork the repository

- Create a feature branch

- Add your hooks, skills, or improvements

- Submit a PR with description

- [ ] More language-specific hooks (Go, Rust, Ruby)

- [ ] Additional skills (code review, documentation, testing)

- [ ] Framework-specific scaffolding templates

- [ ] MCP server configuration examples

- Claude Code Best Practices — Anthropic

- Effective Context Engineering — Anthropic

- Agent Skills — Claude Code Docs

- Hooks Reference — Claude Code Docs

- LLMs Get Lost In Multi-Turn Conversation — arXiv

- Context Rot Research — Chroma

- Claude Loads Secrets Without Permission — Knostic

- Claude Code Hooks: Guardrails That Actually Work

- Claude Code Hooks Mastery

- Claude Code Security Best Practices

MIT License - See LICENSE

Built with ❤️ by TheDecipherist and the Claude Code community
