agent-service-toolkit

Full toolkit for running an AI agent service built with LangGraph, FastAPI and Streamlit

Stars: 3611

The AI Agent Service Toolkit is a comprehensive toolkit designed for running an AI agent service using LangGraph, FastAPI, and Streamlit. It includes a LangGraph agent, a FastAPI service, a client for interacting with the service, and a Streamlit app for providing a chat interface. The project offers a template for building and running agents with the LangGraph framework, showcasing a complete setup from agent definition to user interface. Key features include LangGraph Agent with latest features, FastAPI Service, Advanced Streaming support, Streamlit Interface, Multiple Agent Support, Asynchronous Design, Content Moderation, RAG Agent implementation, Feedback Mechanism, Docker Support, and Testing. The repository structure includes directories for defining agents, protocol schema, core modules, service, client, Streamlit app, and tests.

README:

A full toolkit for running an AI agent service built with LangGraph, FastAPI and Streamlit.

It includes a LangGraph agent, a FastAPI service to serve it, a client to interact with the service, and a Streamlit app that uses the client to provide a chat interface. Data structures and settings are built with Pydantic.

This project offers a template for you to easily build and run your own agents using the LangGraph framework. It demonstrates a complete setup from agent definition to user interface, making it easier to get started with LangGraph-based projects by providing a full, robust toolkit.

🎥 Watch a video walkthrough of the repo and app

Run directly in python

# At least one LLM API key is required

echo 'OPENAI_API_KEY=your_openai_api_key' >> .env

# uv is the recommended way to install agent-service-toolkit, but "pip install ." also works

# For uv installation options, see: https://docs.astral.sh/uv/getting-started/installation/

curl -LsSf https://astral.sh/uv/0.7.19/install.sh | sh

# Install dependencies. "uv sync" creates .venv automatically

uv sync --frozen

source .venv/bin/activate

python src/run_service.py

# In another shell

source .venv/bin/activate

streamlit run src/streamlit_app.py

Run with docker

echo 'OPENAI_API_KEY=your_openai_api_key' >> .env

docker compose watch

- LangGraph Agent and latest features: A customizable agent built using the LangGraph framework. Implements the latest LangGraph v0.3 features including human in the loop with interrupt(), flow control with Command, long-term memory with Store, and langgraph-supervisor.

- FastAPI Service: Serves the agent with both streaming and non-streaming endpoints.

- Advanced Streaming: A novel approach to support both token-based and message-based streaming.

- Streamlit Interface: Provides a user-friendly chat interface for interacting with the agent.

- Multiple Agent Support: Run multiple agents in the service and call by URL path. Available agents and models are described in /info.

- Asynchronous Design: Utilizes async/await for efficient handling of concurrent requests.

- Content Moderation: Implements LlamaGuard for content moderation (requires Groq API key).

- RAG Agent: A basic RAG agent implementation using ChromaDB - see docs.

- Feedback Mechanism: Includes a star-based feedback system integrated with LangSmith.

- Docker Support: Includes Dockerfiles and a docker compose file for easy development and deployment.

- Testing: Includes robust unit and integration tests for the full repo.
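To make the difference between token-based and message-based streaming concrete, here is a minimal sketch built on a plain asyncio generator. It is not the toolkit's actual protocol: the event shape (the `type` and `content` keys) and the `fake_agent_stream` function are assumptions made purely for illustration.

```python
import asyncio
from collections.abc import AsyncIterator


async def fake_agent_stream(reply: str) -> AsyncIterator[dict]:
    """Hypothetical stream: emit each token, then the complete message."""
    for token in reply.split():
        await asyncio.sleep(0)  # hand control back to the event loop
        yield {"type": "token", "content": token}
    yield {"type": "message", "content": reply}


async def collect(reply: str) -> tuple[list[str], list[str]]:
    """Separate token events from full-message events."""
    tokens: list[str] = []
    messages: list[str] = []
    async for event in fake_agent_stream(reply):
        if event["type"] == "token":
            tokens.append(event["content"])
        else:
            messages.append(event["content"])
    return tokens, messages


tokens, messages = asyncio.run(collect("It rings a bell"))
print(tokens)
print(messages)
```

A consumer that only wants incremental text can read the token events, while one that needs complete messages (for example, tool calls) can ignore them and wait for the message events.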

The repository is structured as follows:

- src/agents/: Defines several agents with different capabilities

- src/schema/: Defines the protocol schema

- src/core/: Core modules including LLM definition and settings

- src/service/service.py: FastAPI service to serve the agents

- src/client/client.py: Client to interact with the agent service

- src/streamlit_app.py: Streamlit app providing a chat interface

- tests/: Unit and integration tests

- Clone the repository:

  git clone https://github.com/JoshuaC215/agent-service-toolkit.git
  cd agent-service-toolkit

- Set up environment variables: Create a .env file in the root directory. At least one LLM API key or configuration is required. See the .env.example file for a full list of available environment variables, including a variety of model provider API keys, header-based authentication, LangSmith tracing, testing and development modes, and OpenWeatherMap API key.

- You can now run the agent service and the Streamlit app locally, either with Docker or just using Python. The Docker setup is recommended for simpler environment setup and immediate reloading of the services when you make changes to your code.
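Since at least one LLM API key is required, a quick pre-flight check of the .env contents can save a failed startup. The sketch below is a standalone illustration using only the standard library; it does not reproduce the toolkit's own Pydantic-based settings. Only OPENAI_API_KEY is named in this README, so the other key names in `LLM_KEY_NAMES` are assumptions, as are the helper function names.

```python
# Hypothetical list of provider keys; only OPENAI_API_KEY appears in the README.
LLM_KEY_NAMES = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY", "GROQ_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("'\"")
    return values


def configured_llm_keys(env: dict[str, str]) -> list[str]:
    """Return the provider key names that have a non-empty value."""
    return [name for name in LLM_KEY_NAMES if env.get(name)]


sample = "# example .env\nOPENAI_API_KEY=your_openai_api_key\nGROQ_API_KEY=\n"
env = parse_env(sample)
print(configured_llm_keys(env))
```

In practice you would read the text from the .env file in the project root and stop early if the returned list is empty.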

To customize the agent for your own use case:

- Add your new agent to the src/agents directory. You can copy research_assistant.py or chatbot.py and modify it to change the agent's behavior and tools.

- Import and add your new agent to the agents dictionary in src/agents/agents.py. Your agent can be called by /<your_agent_name>/invoke or /<your_agent_name>/stream.

- Adjust the Streamlit interface in src/streamlit_app.py to match your agent's capabilities.
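The registration step can be pictured as a dictionary keyed by the name that later appears in the URL path. The following is a simplified sketch, not the contents of src/agents/agents.py: the two functions stand in for compiled LangGraph agents, and `my_agent` and `routes_for` are hypothetical names chosen for the example.

```python
from typing import Callable


# Hypothetical stand-ins for compiled LangGraph agents.
def chatbot(message: str) -> str:
    return f"chatbot: {message}"


def my_agent(message: str) -> str:
    return f"my_agent: {message}"


# Registry keyed by the name used in the URL path.
agents: dict[str, Callable[[str], str]] = {
    "chatbot": chatbot,
    "my_agent": my_agent,
}


def routes_for(name: str) -> list[str]:
    """Return the invoke and stream paths for a registered agent."""
    if name not in agents:
        raise KeyError(f"unknown agent: {name}")
    return [f"/{name}/invoke", f"/{name}/stream"]


print(routes_for("my_agent"))
print(agents["my_agent"]("hello"))
```

Keeping the dictionary key and the URL segment identical means that adding one entry is enough to expose a new agent under both paths.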

If your agents or chosen LLM require file-based credentials or certificates, the privatecredentials/ directory is provided for your development convenience. All of its contents, excluding the .gitkeep files, are ignored by git and by Docker's build process. See Working with File-based Credentials for suggested use.

This project includes a Docker setup for easy development and deployment. The compose.yaml file defines three services: postgres, agent_service and streamlit_app. The Dockerfile for each service is in their respective directories.

For local development, we recommend using docker compose watch. This feature allows for a smoother development experience by automatically updating your containers when changes are detected in your source code.

- Make sure you have Docker and Docker Compose (>= v2.23.0) installed on your system.

- Create a .env file from the .env.example. At minimum, you need to provide an LLM API key (e.g., OPENAI_API_KEY).

  cp .env.example .env
  # Edit .env to add your API keys

- Build and launch the services in watch mode:

  docker compose watch

  This will automatically:

  - Start a PostgreSQL database service that the agent service connects to

  - Start the agent service with FastAPI

  - Start the Streamlit app for the user interface

- The services will now automatically update when you make changes to your code:

  - Changes in the relevant python files and directories will trigger updates for the relevant services.

  - NOTE: If you make changes to the pyproject.toml or uv.lock files, you will need to rebuild the services by running docker compose up --build.

- Access the Streamlit app by navigating to http://localhost:8501 in your web browser.

- The agent service API will be available at http://0.0.0.0:8080. You can also use the OpenAPI docs at http://0.0.0.0:8080/redoc.

- Use docker compose down to stop the services.

This setup allows you to develop and test your changes in real-time without manually restarting the services.
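Once the service is up, you can also reach it without the bundled client. The sketch below builds, but does not send, a POST request to the /<your_agent_name>/invoke path using only the standard library. The agent name `chatbot` and the `message` field in the JSON body are assumptions; check the OpenAPI docs of your running service for the actual request schema.

```python
import json
from urllib.request import Request

BASE_URL = "http://0.0.0.0:8080"  # service address from the Docker steps


def build_invoke_request(agent: str, message: str) -> Request:
    """Build (but do not send) a POST request for /<agent>/invoke."""
    # The "message" field name is an assumption about the request schema.
    body = json.dumps({"message": message}).encode("utf-8")
    return Request(
        url=f"{BASE_URL}/{agent}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_invoke_request("chatbot", "Tell me a brief joke?")
print(req.get_method(), req.full_url)

# To send it against a running service:
#   from urllib.request import urlopen
#   with urlopen(req) as resp:
#       print(json.load(resp))
```

Separating request construction from sending keeps the example runnable even when no service is listening.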

The repo includes a generic src/client/client.AgentClient that can be used to interact with the agent service. This client is designed to be flexible and can be used to build other apps on top of the agent. It supports both synchronous and asynchronous invocations, and streaming and non-streaming requests.

See the src/run_client.py file for full examples of how to use the AgentClient. A quick example:

from client import AgentClient

client = AgentClient()

response = client.invoke("Tell me a brief joke?")

response.pretty_print()

# ================================== Ai Message ==================================

#

# A man walked into a library and asked the librarian, "Do you have any books on Pavlov's dogs and Schrödinger's cat?"

# The librarian replied, "It rings a bell, but I'm not sure if it's here or not."

The agent supports LangGraph Studio, the IDE for developing agents in LangGraph.

langgraph-cli[inmem] is installed with uv sync. You can simply add your .env file to the root directory as described above, and then launch LangGraph Studio with langgraph dev. Customize langgraph.json as needed. See the local quickstart to learn more.

You can also run the agent service and the Streamlit app locally without Docker, just using a Python virtual environment.

- Create a virtual environment and install dependencies:

  uv sync --frozen
  source .venv/bin/activate

- Run the FastAPI server:

  python src/run_service.py

- In a separate terminal, run the Streamlit app:

  streamlit run src/streamlit_app.py

- Open your browser and navigate to the URL provided by Streamlit (usually http://localhost:8501).

The following are a few of the public projects that drew code or inspiration from this repo.

- PolyRAG - Extends agent-service-toolkit with RAG capabilities over both PostgreSQL databases and PDF documents.

- alexrisch/agent-web-kit - A Next.JS frontend for agent-service-toolkit

- raushan-in/dapa - Digital Arrest Protection App (DAPA) enables users to report financial scams and frauds efficiently via a user-friendly platform.

Please create a pull request editing the README or open a discussion with any new ones to be added! Would love to include more projects.

Contributions are welcome! Please feel free to submit a Pull Request. Currently, the tests must be run using the local development setup without Docker. To run the tests for the agent service:

- Ensure you're in the project root directory and have activated your virtual environment.

- Install the development dependencies and pre-commit hooks:

  uv sync --frozen
  pre-commit install

- Run the tests using pytest:

  pytest

This project is licensed under the MIT License - see the LICENSE file for details.


Alternative AI tools for agent-service-toolkit

Similar Open Source Tools


langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

opencode

Opencode is an AI coding agent designed for the terminal. It is a tool that allows users to interact with AI models for coding tasks in a terminal-based environment. Opencode is open source, provider-agnostic, and focuses on a terminal user interface (TUI) for coding. It offers features such as client/server architecture, support for various AI models, and a strong emphasis on community contributions and feedback.

fastagency

FastAgency is a powerful tool that leverages the AutoGen framework to quickly build applications with multi-agent workflows. It supports various interfaces like ConsoleUI and MesopUI, allowing users to create interactive applications. The tool enables defining workflows between agents, such as students and teachers, and summarizing conversations. FastAgency aims to expand its capabilities by integrating with additional agentic frameworks like CrewAI, providing more options for workflow definition and AI tool integration.

fastagency

FastAgency is an open-source framework designed to accelerate the transition from prototype to production for multi-agent AI workflows. It provides a unified programming interface for deploying agentic workflows written in the AG2 agentic framework in both development and production settings. With features like seamless external API integration, a Tester Class for continuous integration, and a Command-Line Interface (CLI) for orchestration, FastAgency streamlines the deployment process, saving time and effort while maintaining flexibility and performance. Whether orchestrating complex AI agents or integrating external APIs, FastAgency helps users quickly transition from concept to production, reducing development cycles and optimizing multi-agent systems.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

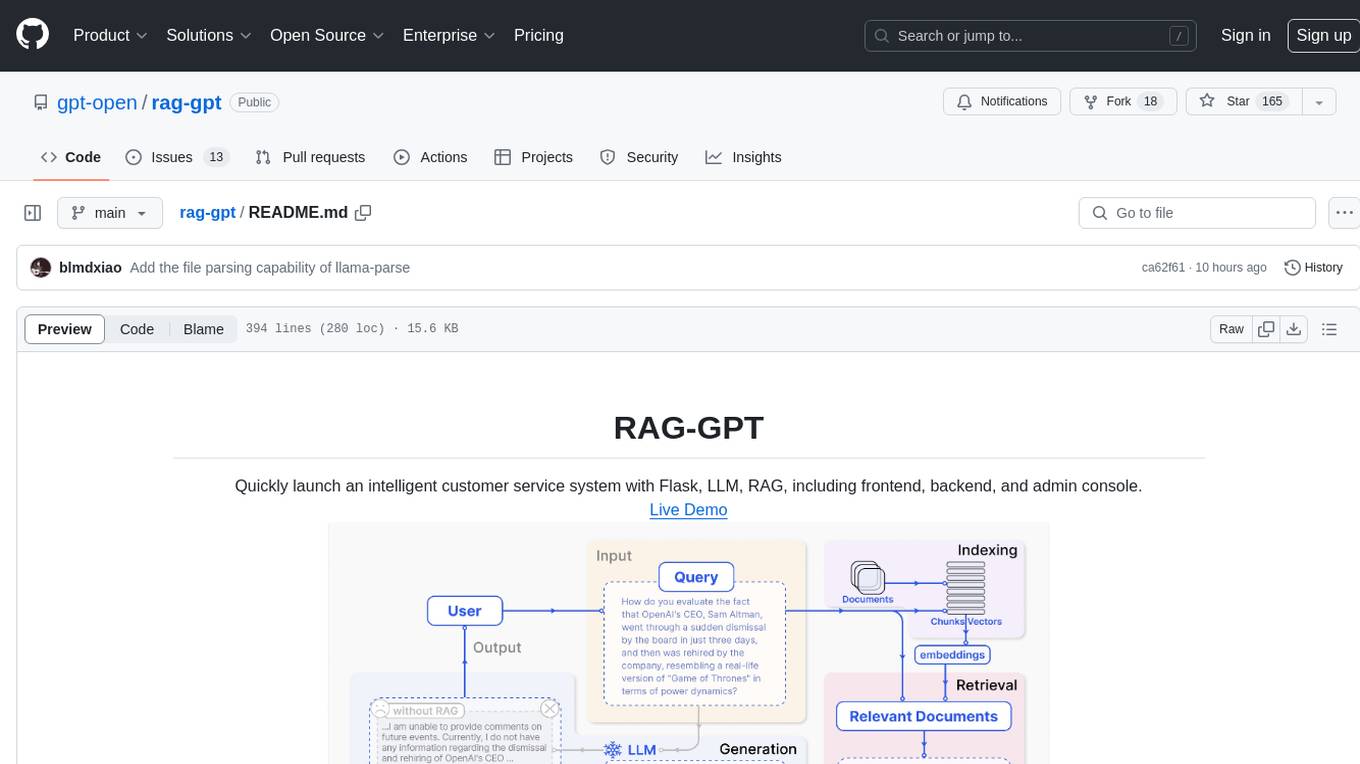

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

PentestGPT

PentestGPT is a penetration testing tool empowered by ChatGPT, designed to automate the penetration testing process. It operates interactively to guide penetration testers in overall progress and specific operations. The tool supports solving easy to medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to perform tasks like testing connections, using different reasoning models, discussing with the tool, searching on Google, and generating reports. It also supports local LLMs with custom parsers for advanced users.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

vision-agent

AskUI Vision Agent is a powerful automation framework that enables you and AI agents to control your desktop, mobile, and HMI devices and automate tasks. It supports multiple AI models, multi-platform compatibility, and enterprise-ready features. The tool provides support for Windows, Linux, MacOS, Android, and iOS device automation, single-step UI automation commands, in-background automation on Windows machines, flexible model use, and secure deployment of agents in enterprise environments.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, offers quick setup for conversational service robots, integrates diverse knowledge bases, provides flexible configuration options, and features an attractive user interface.

For similar tasks

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

GPTSwarm

GPTSwarm is a graph-based framework for LLM-based agents that enables the creation of LLM-based agents from graphs and facilitates the customized and automatic self-organization of agent swarms with self-improvement capabilities. The library includes components for domain-specific operations, graph-related functions, LLM backend selection, memory management, and optimization algorithms to enhance agent performance and swarm efficiency. Users can quickly run predefined swarms or utilize tools like the file analyzer. GPTSwarm supports local LM inference via LM Studio, allowing users to run with a local LLM model. The framework has been accepted by ICML2024 and offers advanced features for experimentation and customization.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

LongRoPE

LongRoPE is a method to extend the context window of large language models (LLMs) beyond 2 million tokens. It identifies and exploits non-uniformities in positional embeddings to enable 8x context extension without fine-tuning. The method utilizes a progressive extension strategy with 256k fine-tuning to reach a 2048k context. It adjusts embeddings for shorter contexts to maintain performance within the original window size. LongRoPE has been shown to be effective in maintaining performance across various tasks from 4k to 2048k context lengths.

ax

Ax is a Typescript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in Typescript, and has zero dependencies.

Awesome-AI-Agents

Awesome-AI-Agents is a curated list of projects, frameworks, benchmarks, platforms, and related resources focused on autonomous AI agents powered by Large Language Models (LLMs). The repository showcases a wide range of applications, multi-agent task solver projects, agent society simulations, and advanced components for building and customizing AI agents. It also includes frameworks for orchestrating role-playing, evaluating LLM-as-Agent performance, and connecting LLMs with real-world applications through platforms and APIs. Additionally, the repository features surveys, paper lists, and blogs related to LLM-based autonomous agents, making it a valuable resource for researchers, developers, and enthusiasts in the field of AI.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.