minions

Big & Small LLMs working together

Stars: 1160

Minions is a communication protocol that enables small on-device models to collaborate with frontier models in the cloud. By only reading long contexts locally, it reduces cloud costs with minimal or no quality degradation. The repository provides a demonstration of the protocol.

README:

What is this? Minions is a communication protocol that enables small on-device models to collaborate with frontier models in the cloud. By only reading long contexts locally, we can reduce cloud costs with minimal or no quality degradation. This repository provides a demonstration of the protocol. Get started below or see our paper and blogpost below for more information.

Paper: Minions: Cost-efficient Collaboration Between On-device and Cloud Language Models

Minions Blogpost: https://hazyresearch.stanford.edu/blog/2025-02-24-minions

Secure Minions Chat Blogpost: https://hazyresearch.stanford.edu/blog/2025-05-12-security

Looking for Secure Minions Chat? If you're interested in our end-to-end encrypted and chat system, please see the Secure Minions Chat README for detailed setup and usage instructions.

- Setup

- Minions Demo Application

- Minions WebGPU App

- Example Code

- Python Notebook

- Docker Support

- Command Line Interface

- Secure Minions Local-Remote Protocol

- Secure Minions Chat

- Apps

- Inference Estimator

- Miscellaneous Setup

- Maintainers

We have tested the following setup on Mac and Ubuntu with Python 3.10-3.11 (Note: Python 3.13 is not supported)

Optional: Create a virtual environment with your favorite package manager (e.g. conda, venv, uv)

conda create -n minions python=3.11Step 1: Clone the repository and install the Python package.

git clone https://github.com/HazyResearch/minions.git

cd minions

pip install -e . # installs the minions package in editable modenote: for optional MLX-LM install the package with the following command:

pip install -e ".[mlx]"note: for secure minions chat, install the package with the following command:

pip install -e ".[secure]"note: for optional Cartesia-MLX install, pip install the basic package and then follow the instructions below.

Step 2: Install a server for running the local model.

We support three servers for running local models: lemonade, ollama, and tokasaurus. You need to install at least one of these.

- You should use

ollamaif you do not have access to NVIDIA/AMD GPUs. Installollamafollowing the instructions here. To enable Flash Attention, runlaunchctl setenv OLLAMA_FLASH_ATTENTION 1and, if on a mac, restart the ollama app. - You should use

lemonadeif you have access to local AMD CPUs/GPUs/NPUs. Installlemonadefollowing the instructions here.- See the following for supported APU configurations: https://ryzenai.docs.amd.com/en/latest/llm/overview.html#supported-configurations

- After installing

lemonademake sure to launch the lemonade server. This can be done via the one-click Windows GUI installer which installs the Lemonade Server as a standalone tool. - Note: Lemonade does not support the Minion-CUA protocol at this time.

- You should use

tokasaurusif you have access to NVIDIA GPUs and you are running the Minions protocol, which benefits from the high-throughput oftokasaurus. Installtokasauruswith the following command:

pip install tokasaurus

Optional: Install Cartesia-MLX (only available on Apple Silicon)

- Download XCode

- Install the command line tools by running

xcode-select --install - Install the Nanobind🧮

pip install nanobind@git+https://github.com/wjakob/nanobind.git@2f04eac452a6d9142dedb957701bdb20125561e4

- Install the Cartesia Metal backend by running the following command:

pip install git+https://github.com/cartesia-ai/edge.git#subdirectory=cartesia-metal

- Install the Cartesia-MLX package by running the following command:

pip install git+https://github.com/cartesia-ai/edge.git#subdirectory=cartesia-mlx

Optional: Install llama-cpp-python

First, install the llama-cpp-python package:

# CPU-only installation

pip install llama-cpp-python

# For Metal on Mac (Apple Silicon/Intel)

CMAKE_ARGS="-DGGML_METAL=on" pip install llama-cpp-python

# For CUDA on NVIDIA GPUs

CMAKE_ARGS="-DGGML_CUDA=on" pip install llama-cpp-python

# For OpenBLAS CPU optimizations

CMAKE_ARGS="-DGGML_BLAS=ON -DGGML_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-pythonFor more installation options, see the llama-cpp-python documentation.

The client follows the basic pattern from the llama-cpp-python library:

from minions.clients import LlamaCppClient

# Initialize the client with a local model

client = LlamaCppClient(

model_path="/path/to/model.gguf",

chat_format="chatml", # Most modern models use "chatml" format

n_gpu_layers=35 # Set to 0 for CPU-only inference

)

# Run a chat completion

messages = [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "What's the capital of France?"}

]

responses, usage, done_reasons = client.chat(messages)

print(responses[0]) # The generated responseYou can easily load models directly from Hugging Face:

client = LlamaCppClient(

model_path="dummy", # Will be replaced by downloaded model

model_repo_id="TheBloke/Mistral-7B-Instruct-v0.2-GGUF",

model_file_pattern="*Q4_K_M.gguf", # Optional - specify quantization

chat_format="chatml",

n_gpu_layers=35 # Offload 35 layers to GPU

)Step 3: Set your API key for at least one of the following cloud LLM providers.

If needed, create an OpenAI API Key or TogetherAI API key or DeepSeek API key for the cloud model.

# OpenAI

export OPENAI_API_KEY=<your-openai-api-key>

export OPENAI_BASE_URL=<your-openai-base-url> # Optional: Use a different OpenAI API endpoint

# Together AI

export TOGETHER_API_KEY=<your-together-api-key>

# OpenRouter

export OPENROUTER_API_KEY=<your-openrouter-api-key>

export OPENROUTER_BASE_URL=<your-openrouter-base-url> # Optional: Use a different OpenRouter API endpoint

# Perplexity

export PERPLEXITY_API_KEY=<your-perplexity-api-key>

export PERPLEXITY_BASE_URL=<your-perplexity-base-url> # Optional: Use a different Perplexity API endpoint

# Tokasaurus

export TOKASAURUS_BASE_URL=<your-tokasaurus-base-url> # Optional: Use a different Tokasaurus API endpoint

# DeepSeek

export DEEPSEEK_API_KEY=<your-deepseek-api-key>

# Anthropic

export ANTHROPIC_API_KEY=<your-anthropic-api-key>

# Mistral AI

export MISTRAL_API_KEY=<your-mistral-api-key>To try the Minion or Minions protocol, run the following commands:

pip install torch transformers

streamlit run app.pyIf you are seeing an error about the ollama client,

An error occurred: Failed to connect to Ollama. Please check that Ollama is downloaded, running and accessible. https://ollama.com/download

try running the following command:

OLLAMA_FLASH_ATTENTION=1 ollama serveThe Minions WebGPU app demonstrates the Minions protocol running entirely in the browser using WebGPU for local model inference and cloud APIs for supervision. This approach eliminates the need for local server setup while providing a user-friendly web interface.

- Browser-based: Runs entirely in your web browser with no local server required

- WebGPU acceleration: Uses WebGPU for fast local model inference

- Model selection: Choose from multiple pre-optimized models from MLC AI

- Real-time progress: See model loading progress and conversation logs in real-time

- Privacy-focused: Your API key and data never leave your browser

-

Navigate to the WebGPU app directory:

cd apps/minions-webgpu -

Install dependencies:

npm install

-

Start the development server:

npm start

-

Open your browser and navigate to the URL shown in the terminal (typically

http://localhost:5173)

The following example is for an ollama local client and an openai remote client.

The protocol is minion.

from minions.clients.ollama import OllamaClient

from minions.clients.openai import OpenAIClient

from minions.minion import Minion

local_client = OllamaClient(

model_name="llama3.2",

)

remote_client = OpenAIClient(

model_name="gpt-4o",

)

# Instantiate the Minion object with both clients

minion = Minion(local_client, remote_client)

context = """

Patient John Doe is a 60-year-old male with a history of hypertension. In his latest checkup, his blood pressure was recorded at 160/100 mmHg, and he reported occasional chest discomfort during physical activity.

Recent laboratory results show that his LDL cholesterol level is elevated at 170 mg/dL, while his HDL remains within the normal range at 45 mg/dL. Other metabolic indicators, including fasting glucose and renal function, are unremarkable.

"""

task = "Based on the patient's blood pressure and LDL cholesterol readings in the context, evaluate whether these factors together suggest an increased risk for cardiovascular complications."

# Execute the minion protocol for up to two communication rounds

output = minion(

task=task,

context=[context],

max_rounds=2

)The following example is for an ollama local client and an openai remote client.

The protocol is minions.

from minions.clients.ollama import OllamaClient

from minions.clients.openai import OpenAIClient

from minions.minions import Minions

from pydantic import BaseModel

class StructuredLocalOutput(BaseModel):

explanation: str

citation: str | None

answer: str | None

local_client = OllamaClient(

model_name="llama3.2",

temperature=0.0,

structured_output_schema=StructuredLocalOutput

)

remote_client = OpenAIClient(

model_name="gpt-4o",

)

# Instantiate the Minion object with both clients

minion = Minions(local_client, remote_client)

context = """

Patient John Doe is a 60-year-old male with a history of hypertension. In his latest checkup, his blood pressure was recorded at 160/100 mmHg, and he reported occasional chest discomfort during physical activity.

Recent laboratory results show that his LDL cholesterol level is elevated at 170 mg/dL, while his HDL remains within the normal range at 45 mg/dL. Other metabolic indicators, including fasting glucose and renal function, are unremarkable.

"""

task = "Based on the patient's blood pressure and LDL cholesterol readings in the context, evaluate whether these factors together suggest an increased risk for cardiovascular complications."

# Execute the minion protocol for up to two communication rounds

output = minion(

task=task,

doc_metadata="Medical Report",

context=[context],

max_rounds=2

)To run Minion/Minions in a notebook, checkout minions.ipynb.

docker build -t minions-docker .Note: The container automatically starts an Ollama service in the background for local model inference. This allows you to use models like llama3.2:3b without additional setup.

# Basic usage (includes Ollama service)

docker run -i minions-docker

# With Docker socket mounted (for Docker Model Runner)

docker run -i -v /var/run/docker.sock:/var/run/docker.sock minions-docker

# With API keys for remote models

docker run -i -e OPENAI_API_KEY=your_key -e ANTHROPIC_API_KEY=your_key minions-docker

# With custom Ollama host

docker run -i -e OLLAMA_HOST=0.0.0.0:11434 minions-docker

# For Streamlit app (legacy usage)

docker run -p 8501:8501 --env OPENAI_API_KEY=<your-openai-api-key> --env DEEPSEEK_API_KEY=<your-deepseek-api-key> minions-dockerThe Docker container supports a stdin/stdout interface for running various minion protocols. It expects JSON input with the following structure:

{

"local_client": {

"type": "ollama",

"model_name": "llama3.2:3b",

"port": 11434,

"kwargs": {}

},

"remote_client": {

"type": "openai",

"model_name": "gpt-4o",

"kwargs": {

"api_key": "your_openai_key"

}

},

"protocol": {

"type": "minion",

"max_rounds": 3,

"log_dir": "minion_logs",

"kwargs": {}

},

"call_params": {

"task": "Your task here",

"context": ["Context string 1", "Context string 2"],

"max_rounds": 2

}

}Basic Minion Protocol:

echo '{

"local_client": {

"type": "ollama",

"model_name": "llama3.2:3b"

},

"remote_client": {

"type": "openai",

"model_name": "gpt-4o"

},

"protocol": {

"type": "minion",

"max_rounds": 3

},

"call_params": {

"task": "Analyze the patient data and provide a diagnosis",

"context": ["Patient John Doe is a 60-year-old male with hypertension. Blood pressure: 160/100 mmHg. LDL cholesterol: 170 mg/dL."]

}

}' | docker run -i -e OPENAI_API_KEY=$OPENAI_API_KEY minions-dockerMinions (Parallel) Protocol:

echo '{

"local_client": {

"type": "ollama",

"model_name": "llama3.2:3b"

},

"remote_client": {

"type": "openai",

"model_name": "gpt-4o"

},

"protocol": {

"type": "minions"

},

"call_params": {

"task": "Analyze the financial data and extract key insights",

"doc_metadata": "Financial report",

"context": ["Revenue increased by 15% year-over-year. Operating expenses rose by 8%. Net profit margin improved to 12%."]

}

}' | docker run -i -e OPENAI_API_KEY=$OPENAI_API_KEY minions-dockerLocal Clients:

-

ollama: Uses Ollama for local inference (included in container) -

docker_model_runner: Uses Docker Model Runner for local inference

Remote Clients:

-

openai: OpenAI API -

anthropic: Anthropic API

-

minion: Single conversation protocol -

minions: Parallel processing protocol

The container outputs JSON with the following structure:

{

"success": true,

"result": {

"final_answer": "The analysis result...",

"supervisor_messages": [...],

"worker_messages": [...],

"remote_usage": {...},

"local_usage": {...}

},

"error": null

}Or on error:

{

"success": false,

"result": null,

"error": "Error message"

}-

OPENAI_API_KEY: OpenAI API key -

ANTHROPIC_API_KEY: Anthropic API key -

OLLAMA_HOST: Ollama service host (set to 0.0.0.0:11434 by default) -

PYTHONPATH: Python path (set to /app by default) -

PYTHONUNBUFFERED: Unbuffered output (set to 1 by default)

-

Ollama Models: The container will automatically pull models on first use (e.g.,

llama3.2:3b) - Docker Model Runner: Ensure Docker is running and accessible from within the container

- API Keys: Pass API keys as environment variables for security

- Volumes: Mount volumes for persistent workspaces or logs

-

Networking: Use

--network hostif you need to access local services

With Custom Volumes:

docker run -i \

-v /var/run/docker.sock:/var/run/docker.sock \

-v $(pwd)/logs:/app/minion_logs \

-v $(pwd)/workspace:/app/workspace \

-e OPENAI_API_KEY=$OPENAI_API_KEY \

minions-dockerInteractive Mode:

docker run -it \

-e OPENAI_API_KEY=$OPENAI_API_KEY \

minions-docker bashThen you can run the interface manually:

python minion_stdin_interface.py For running multiple queries without restarting the container and Ollama service each time:

1. Start a persistent container:

docker run -d --name minions-container -e OPENAI_API_KEY="$OPENAI_API_KEY" minions-docker2. Send queries to the running container:

echo '{

"local_client": {"type": "ollama", "model_name": "llama3.2:3b"},

"remote_client": {"type": "openai", "model_name": "gpt-4o"},

"protocol": {"type": "minion", "max_rounds": 1},

"call_params": {"task": "How many times did Roger Federer end the year as No.1?"}

}' | docker exec -i minions-container /app/start_minion.sh3. Send additional queries (fast, no restart delay):

echo '{

"local_client": {"type": "ollama", "model_name": "llama3.2:3b"},

"remote_client": {"type": "openai", "model_name": "gpt-4o"},

"protocol": {"type": "minion", "max_rounds": 1},

"call_params": {"task": "What is the capital of France?"}

}' | docker exec -i minions-container /app/start_minion.sh4. Clean up when done:

docker stop minions-container

docker rm minions-containerAdvantages of persistent containers:

- ✅ Ollama stays running - no restart delays between queries

- ✅ Models stay loaded - faster subsequent queries

- ✅ Resource efficient - one container handles multiple queries

- ✅ Automatic model pulling - models are downloaded on first use

To run Minion/Minions in a CLI, checkout minions_cli.py.

Set your choice of local and remote models by running the following command. The format is <provider>/<model_name>. Choice of providers are ollama, openai, anthropic, together, perplexity, openrouter, groq, and mlx.

export MINIONS_LOCAL=ollama/llama3.2

export MINIONS_REMOTE=openai/gpt-4ominions --helpminions --context <path_to_context> --protocol <minion|minions>The Secure Minions Local-Remote Protocol (secure/minions_secure.py) provides an end-to-end encrypted implementation of the Minions protocol that enables secure communication between a local worker model and a remote supervisor server. This protocol includes attestation verification, perfect forward secrecy, and replay protection.

Install the secure dependencies:

pip install -e ".[secure]"from minions.clients import OllamaClient

from secure.minions_secure import SecureMinionProtocol

# Initialize local client

local_client = OllamaClient(model_name="llama3.2")

# Create secure protocol instance

protocol = SecureMinionProtocol(

supervisor_url="https://your-supervisor-server.com",

local_client=local_client,

max_rounds=3,

system_prompt="You are a helpful AI assistant."

)

# Run a secure task

result = protocol(

task="Analyze this document for key insights",

context=["Your document content here"],

max_rounds=2

)

print(f"Final Answer: {result['final_answer']}")

print(f"Session ID: {result['session_id']}")

print(f"Log saved to: {result['log_file']}")

# Clean up the session

protocol.end_session()python secure/minions_secure.py \

--supervisor_url https://your-supervisor-server.com \

--local_client_type ollama \

--local_model llama3.2 \

--max_rounds 3To install secure minions chat, install the package with the following command:

pip install -e ".[secure]"See the Secure Minions Chat README for additional details on how to setup clients and run the secure chat protocol.

The apps/ directory contains specialized applications demonstrating various use cases:

- 📊 A2A-Minions - Agent-to-Agent integration server

- 🎭 Character Chat - Role-playing with AI personas

- 🔍 Document Search - Multi-method document retrieval

- 📚 Story Teller - Creative storytelling with illustrations

- 🛠️ Tools Comparison - MCP tools performance comparison

- 🌐 WebGPU App - Browser-based Minions protocol

Minions provides a utility to estimate LLM inference speed on your hardware. The inference estimator helps you:

- Analyze your hardware capabilities (GPU, MPS, or CPU)

- Calculate peak performance for your models

- Estimate tokens per second and completion time

Run the estimator directly from the command line to check how fast a model will run:

python -m minions.utils.inference_estimator --model llama3.2 --tokens 1000 --describeArguments:

-

--model: Model name from the supported model list (e.g., llama3.2, mistral7b) -

--tokens: Number of tokens to estimate generation time for -

--describe: Show detailed hardware and model performance statistics -

--quantized: Specify that the model is quantized -

--quant-bits: Quantization bit-width (4, 8, or 16)

You can also use the inference estimator in your Python code:

from minions.utils.inference_estimator import InferenceEstimator

# Initialize the estimator for a specific model

estimator = InferenceEstimator(

model_name="llama3.2", # Model name

is_quant=True, # Is model quantized?

quant_bits=4 # Quantization level (4, 8, 16)

)

# Estimate performance for 1000 tokens

tokens_per_second, estimated_time = estimator.estimate(1000)

print(f"Estimated speed: {tokens_per_second:.1f} tokens/sec")

print(f"Estimated time: {estimated_time:.2f} seconds")

# Get detailed stats

detailed_info = estimator.describe(1000)

print(detailed_info)

# Calibrate with your actual model client for better accuracy

# (requires a model client that implements a chat() method)

estimator.calibrate(my_model_client, sample_tokens=32, prompt="Hello")The estimator uses a roofline model that considers both compute and memory bandwidth limitations and applies empirical calibration to improve accuracy. The calibration data is cached at ~/.cache/ie_calib.json for future use.

export AZURE_OPENAI_API_KEY=your-api-key

export AZURE_OPENAI_ENDPOINT=https://your-resource-name.openai.azure.com/

export AZURE_OPENAI_API_VERSION=2024-02-15-previewHere's an example of how to use Azure OpenAI with the Minions protocol in your own code:

from minions.clients.ollama import OllamaClient

from minions.clients.azure_openai import AzureOpenAIClient

from minions.minion import Minion

local_client = OllamaClient(

model_name="llama3.2",

)

remote_client = AzureOpenAIClient(

model_name="gpt-4o", # This should match your deployment name

api_key="your-api-key",

azure_endpoint="https://your-resource-name.openai.azure.com/",

api_version="2024-02-15-preview",

)

# Instantiate the Minion object with both clients

minion = Minion(local_client, remote_client)- Avanika Narayan (contact: [email protected])

- Dan Biderman (contact: [email protected])

- Sabri Eyuboglu (contact: [email protected])

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for minions

Similar Open Source Tools

minions

Minions is a communication protocol that enables small on-device models to collaborate with frontier models in the cloud. By only reading long contexts locally, it reduces cloud costs with minimal or no quality degradation. The repository provides a demonstration of the protocol.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

Awesome-Efficient-MoE

Awesome Efficient MoE is a GitHub repository that provides an implementation of Mixture of Experts (MoE) models for efficient deep learning. The repository includes code for training and using MoE models, which are neural network architectures that combine multiple expert networks to improve performance on complex tasks. MoE models are particularly useful for handling diverse data distributions and capturing complex patterns in data. The implementation in this repository is designed to be efficient and scalable, making it suitable for training large-scale MoE models on modern hardware. The code is well-documented and easy to use, making it accessible for researchers and practitioners interested in leveraging MoE models for their deep learning projects.

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

Main

This repository contains material related to the new book _Synthetic Data and Generative AI_ by the author, including code for NoGAN, DeepResampling, and NoGAN_Hellinger. NoGAN is a tabular data synthesizer that outperforms GenAI methods in terms of speed and results, utilizing state-of-the-art quality metrics. DeepResampling is a fast NoGAN based on resampling and Bayesian Models with hyperparameter auto-tuning. NoGAN_Hellinger combines NoGAN and DeepResampling with the Hellinger model evaluation metric.

deepflow

DeepFlow is an open-source project that provides deep observability for complex cloud-native and AI applications. It offers Zero Code data collection with eBPF for metrics, distributed tracing, request logs, and function profiling. DeepFlow is integrated with SmartEncoding to achieve Full Stack correlation and efficient access to all observability data. With DeepFlow, cloud-native and AI applications automatically gain deep observability, removing the burden of developers continually instrumenting code and providing monitoring and diagnostic capabilities covering everything from code to infrastructure for DevOps/SRE teams.

FLAME

FLAME is a lightweight and efficient deep learning framework designed for edge devices. It provides a simple and user-friendly interface for developing and deploying deep learning models on resource-constrained devices. With FLAME, users can easily build and optimize neural networks for tasks such as image classification, object detection, and natural language processing. The framework supports various neural network architectures and optimization techniques, making it suitable for a wide range of applications in the field of edge computing.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

context-portal

Context-portal is a versatile tool for managing and visualizing data in a collaborative environment. It provides a user-friendly interface for organizing and sharing information, making it easy for teams to work together on projects. With features such as customizable dashboards, real-time updates, and seamless integration with popular data sources, Context-portal streamlines the data management process and enhances productivity. Whether you are a data analyst, project manager, or team leader, Context-portal offers a comprehensive solution for optimizing workflows and driving better decision-making.

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

airllm

AirLLM is a tool that optimizes inference memory usage, enabling large language models to run on low-end GPUs without quantization, distillation, or pruning. It supports models like Llama3.1 on 8GB VRAM. The tool offers model compression for up to 3x inference speedup with minimal accuracy loss. Users can specify compression levels, profiling modes, and other configurations when initializing models. AirLLM also supports prefetching and disk space management. It provides examples and notebooks for easy implementation and usage.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

ktransformers

KTransformers is a flexible Python-centric framework designed to enhance the user's experience with advanced kernel optimizations and placement/parallelism strategies for Transformers. It provides a Transformers-compatible interface, RESTful APIs compliant with OpenAI and Ollama, and a simplified ChatGPT-like web UI. The framework aims to serve as a platform for experimenting with innovative LLM inference optimizations, focusing on local deployments constrained by limited resources and supporting heterogeneous computing opportunities like GPU/CPU offloading of quantized models.

For similar tasks

Detection-and-Classification-of-Alzheimers-Disease

This tool is designed to detect and classify Alzheimer's Disease using Deep Learning and Machine Learning algorithms on an early basis, which is further optimized using the Crow Search Algorithm (CSA). Alzheimer's is a fatal disease, and early detection is crucial for patients to predetermine their condition and prevent its progression. By analyzing MRI scanned images using Artificial Intelligence technology, this tool can classify patients who may or may not develop AD in the future. The CSA algorithm, combined with ML algorithms, has proven to be the most effective approach for this purpose.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

AMIE-pytorch

Implementation of the general framework for AMIE, from the paper Towards Conversational Diagnostic AI, out of Google Deepmind. This repository provides a Pytorch implementation of the AMIE framework, aimed at enabling conversational diagnostic AI. It is a work in progress and welcomes collaboration from individuals with a background in deep learning and an interest in medical applications.

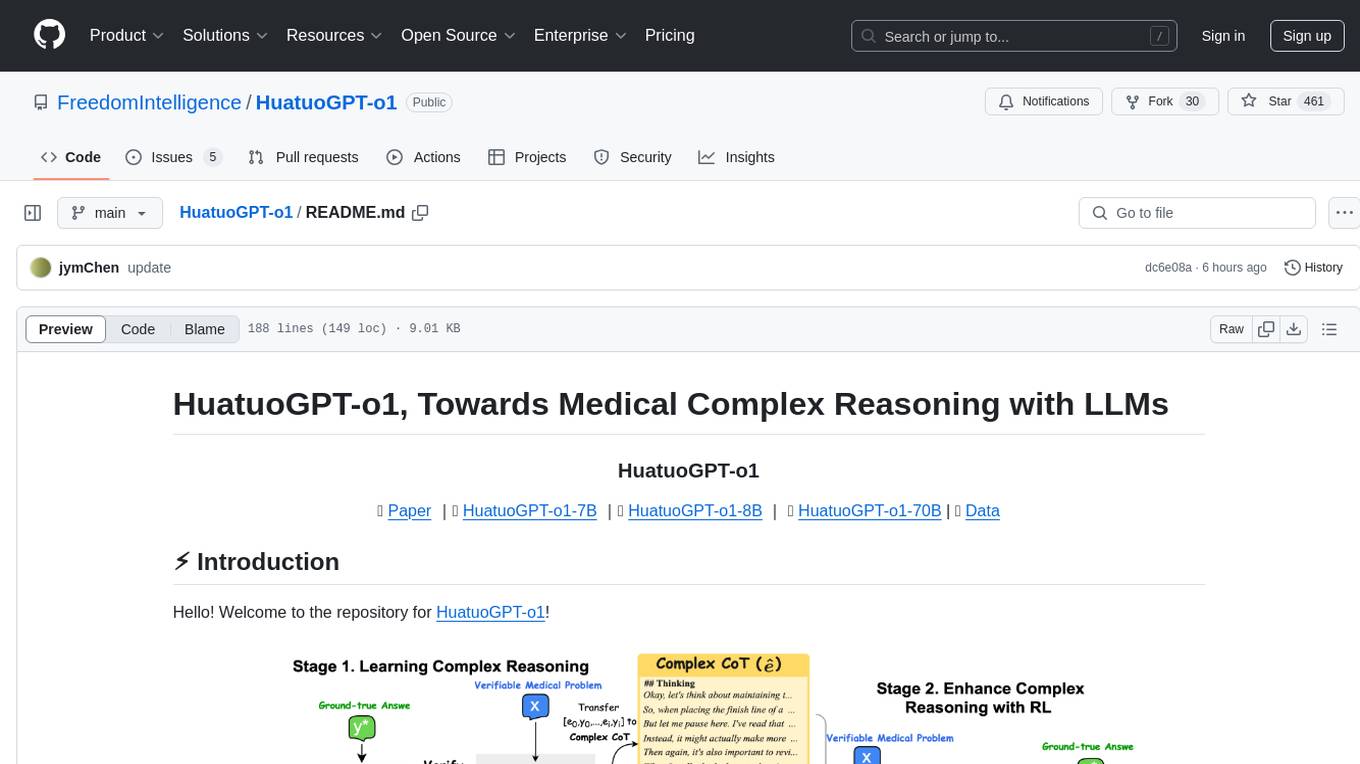

HuatuoGPT-o1

HuatuoGPT-o1 is a medical language model designed for advanced medical reasoning. It can identify mistakes, explore alternative strategies, and refine answers. The model leverages verifiable medical problems and a specialized medical verifier to guide complex reasoning trajectories and enhance reasoning through reinforcement learning. The repository provides access to models, data, and code for HuatuoGPT-o1, allowing users to deploy the model for medical reasoning tasks.

minions

Minions is a communication protocol that enables small on-device models to collaborate with frontier models in the cloud. By only reading long contexts locally, it reduces cloud costs with minimal or no quality degradation. The repository provides a demonstration of the protocol.

opencharacter

OpenCharacter is an open-source tool that allows users to create and run characters locally with local models or use the hosted version. The stack includes Next.js for frontend, TailwindCSS for styling, Drizzle ORM for database access, NextAuth for authentication, Cloudflare D1 for serverless databases, Cloudflare Pages for hosting, and ShadcnUI as the component library. Users can integrate OpenCharacter with OpenRouter by configuring the OpenRouter API key. The tool is fully scalable, composable, and cost-effective, with powerful tools like Wrangler for database management and migrations. No environment variables are needed, making it easy to use and deploy.

OneClickLLAMA

OneClickLLAMA is a tool designed to run local LLM models such as Qwen2.5 and SakuraLLM with ease. It can be used in conjunction with various OpenAI format translators and analyzers, including LinguaGacha and KeywordGacha. By following the setup guides provided on the page, users can optimize performance and achieve a 3-5 times speed improvement compared to default settings. The tool requires a minimum of 8GB dedicated graphics memory, preferably NVIDIA, and the latest version of graphics drivers installed. Users can download the tool from the release page, choose the appropriate model based on usage and memory size, and start the tool by selecting the corresponding launch script.

ComfyUI-IF_LLM

ComfyUI-IF_AI_LLM is a lighter version of ComfyUI-IF_AI_tools, providing custom nodes to run local and API LLMs and LMMs. It supports various models like Ollama, LlamaCPP, LMstudio, Koboldcpp, TextGen, Transformers, and APIs such as Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI. Users can create their own profiles (SystemPrompts) with custom presets. The tool offers features like xAI Grok Vision, Mistral, Google Gemini, Anthropic Haiku, OpenAI preview, auto prompts generation, image generation with IF_PROMPTImaGEN via Dalle3, and more. Installation involves searching for IF_LLM in the manager or manually installing ComfyUI-IF_AI_ImaGenPromptMaker by cloning the repository and installing requirements.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.