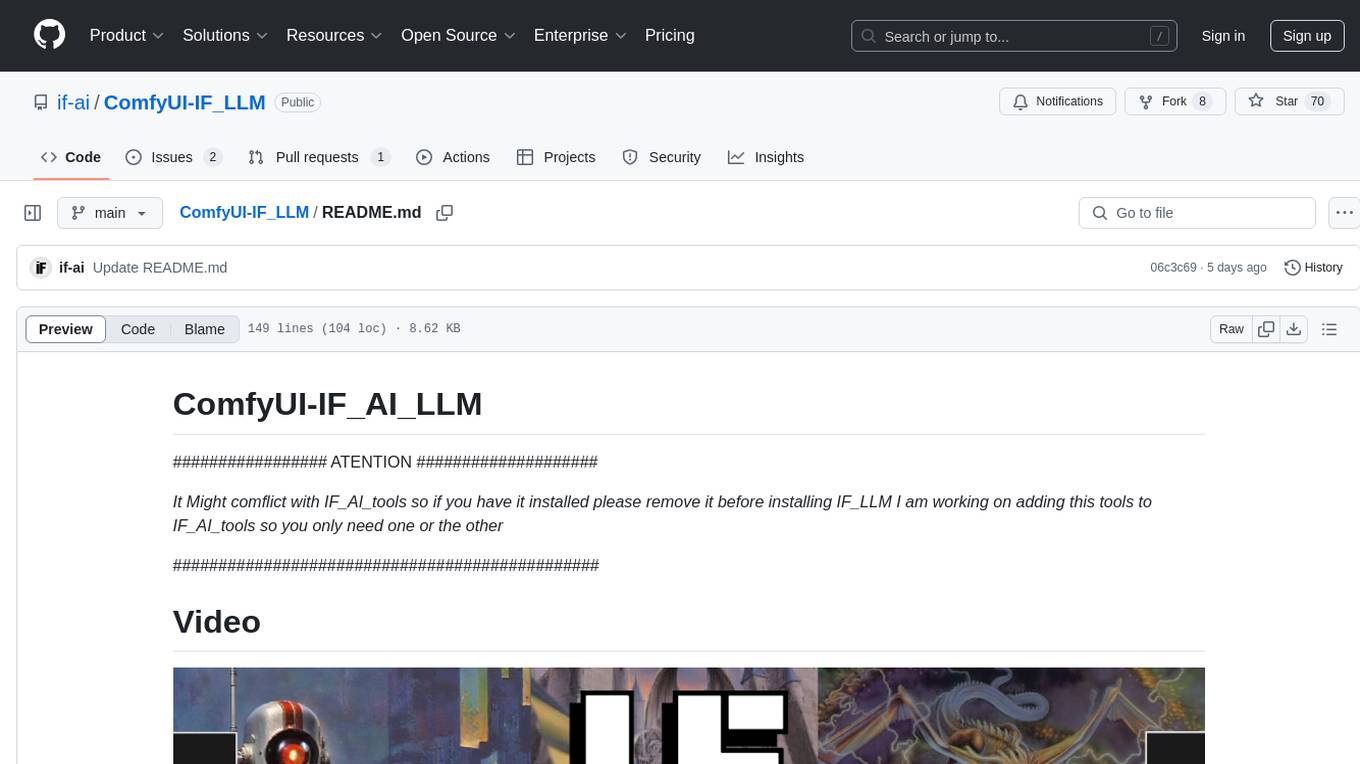

ComfyUI-IF_LLM

Run Local and API LLMs, Features Gemini2 image generation, DEEPSEEK R1, QwenVL2.5, QWQ32B, Ollama, LlamaCPP LMstudio, Koboldcpp, TextGen, Transformers or via APIs Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI and create your own charcters assistants (SystemPrompts) with custom presets

Stars: 99

ComfyUI-IF_AI_LLM is a lighter version of ComfyUI-IF_AI_tools, providing custom nodes to run local and API LLMs and LMMs. It supports various models like Ollama, LlamaCPP, LMstudio, Koboldcpp, TextGen, Transformers, and APIs such as Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI. Users can create their own profiles (SystemPrompts) with custom presets. The tool offers features like xAI Grok Vision, Mistral, Google Gemini, Anthropic Haiku, OpenAI preview, auto prompts generation, image generation with IF_PROMPTImaGEN via Dalle3, and more. Installation involves searching for IF_LLM in the manager or manually installing ComfyUI-IF_AI_ImaGenPromptMaker by cloning the repository and installing requirements.

README:

################# ATENTION ####################

It Might comflict with IF_AI_tools so if you have it installed please remove it before installing IF_LLM I am working on adding this tools to IF_AI_tools so you only need one or the other

###############################################

Lighter version of ComfyUI-IF_AI_tools is a set of custom nodes to Run Local and API LLMs and LMMs, supports Ollama, LlamaCPP LMstudio, Koboldcpp, TextGen, Transformers or via APIs Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI and create your own profiles (SystemPrompts) with custom presets and muchmore

You can technically use any LLM API that you want, but for the best expirience install Ollama and set it up.

- Visit ollama.com for more information.

To install Ollama models just open CMD or any terminal and type the run command follow by the model name such as

ollama run llama3.2-visionIf you want to use omost

ollama run impactframes/dolphin_llama3_omostif you need a good smol model

ollama run ollama run llama3.2Optionally Set enviromnet variables for any of your favourite LLM API keys "XAI_API_KEY", "GOOGLE_API_KEY", "ANTHROPIC_API_KEY", "MISTRAL_API_KEY", "OPENAI_API_KEY" or "GROQ_API_KEY" with those names or otherwise it won't pick it up you can also use .env file to store your keys

[NEW] xAI Grok Vision, Mistral, Google Gemini exp 114, Anthropic 3.5 Haiku, OpenAI 01 preview [NEW] Wildcard System [NEW] Local Models Koboldcpp, TextGen, LlamaCPP, LMstudio, Ollama [NEW] Auto prompts auto generation for Image Prompt Maker runs jobs on batches automatically [NEW] Image generation with IF_PROMPTImaGEN via Dalle3 [NEW] Endpoints xAI, Transformers, [NEW] IF_profiles System Prompts with Reasoning/Reflection/Reward Templates and custom presets [NEW] WF such as GGUF and FluxRedux

- Gemini, Groq, Mistral, OpenAI, Anthropic, Google, xAI, Transformers, Koboldcpp, TextGen, LlamaCPP, LMstudio, Ollama

- Omost_tool the first tool

- Vision Models Haiku/GPT4oMini?Geminiflash/Qwen2-VL

- [Ollama-Omost]https://ollama.com/impactframes/dolphin_llama3_omost can be 2x to 3x faster than other Omost Models

LLama3 and Phi3 IF_AI Prompt mkr models released

ollama run impactframes/llama3_ifai_sd_prompt_mkr_q4km:latest

ollama run impactframes/ifai_promptmkr_dolphin_phi3:latest

https://huggingface.co/impactframes/llama3_if_ai_sdpromptmkr_q4km

https://huggingface.co/impactframes/ifai_promptmkr_dolphin_phi3_gguf

- Open the manager search for IF_LLM and install

- Navigate to your ComfyUI

custom_nodesfolder, typeCMDon the address bar to open a command prompt, and run the following command to clone the repository:git clone https://github.com/if-ai/ComfyUI-IF_LLM.git

OR

-

In ComfyUI protable version just dounle click

embedded_install.bator typeCMDon the address bar on the newly createdcustom_nodes\ComfyUI-IF_LLMfolder typeH:\ComfyUI_windows_portable\python_embeded\python.exe -m pip install -r requirements.txt

replace

C:\for your Drive letter where you have the ComfyUI_windows_portable directory -

On custom environment activate the environment and move to the newly created ComfyUI-IF_LLM

cd ComfyUI-IF_LLM python -m pip install -r requirements.txtIf you want to use AWQ to save VRAM and up to 3x faster inference you need to install triton and autoawq

pip install triton

pip install --no-deps --no-build-isolation autoawq

I also have precompiled wheels for FA2 sageattention and trton for windows 10 for cu126 and pytorch 2.6.3 and python 12+ https://huggingface.co/impactframes/ComfyUI_desktop_wheels_win_cp12_cu126/tree/main

- IF_prompt_MKR

- A similar tool available for Stable Diffusion WebUI

None yet

ancient Megastructure, small lone figure

You can try out these workflow examples directly in ComfyDeploy!

| Workflow | Try It |

|---|

|CD_FLUX_LoRA||

|CD_HYVid_I2V_&_T2V_Native_IFLLM||

|CD_HYVid_I2V_&_T2V_i2VLora_Native|

|

|CD_HYVid_I2V_Lora_KjWrapper|

|

- [ ] IMPROVED PROFILES

- [ ] OMNIGEN

- [ ] QWENFLUX

- [ ] VIDEOGEN

- [ ] AUDIOGEN

If you find this tool useful, please consider supporting my work by:

- Starring the repository on GitHub: ComfyUI-IF_AI_tools

- Subscribing to my YouTube channel: Impact Frames

- Follow me on X: Impact Frames X Thank You!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ComfyUI-IF_LLM

Similar Open Source Tools

ComfyUI-IF_LLM

ComfyUI-IF_AI_LLM is a lighter version of ComfyUI-IF_AI_tools, providing custom nodes to run local and API LLMs and LMMs. It supports various models like Ollama, LlamaCPP, LMstudio, Koboldcpp, TextGen, Transformers, and APIs such as Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI. Users can create their own profiles (SystemPrompts) with custom presets. The tool offers features like xAI Grok Vision, Mistral, Google Gemini, Anthropic Haiku, OpenAI preview, auto prompts generation, image generation with IF_PROMPTImaGEN via Dalle3, and more. Installation involves searching for IF_LLM in the manager or manually installing ComfyUI-IF_AI_ImaGenPromptMaker by cloning the repository and installing requirements.

tgpt

tgpt is a cross-platform command-line interface (CLI) tool that allows users to interact with AI chatbots in the Terminal without needing API keys. It supports various AI providers such as KoboldAI, Phind, Llama2, Blackbox AI, and OpenAI. Users can generate text, code, and images using different flags and options. The tool can be installed on GNU/Linux, MacOS, FreeBSD, and Windows systems. It also supports proxy configurations and provides options for updating and uninstalling the tool.

Auto-Gmail-Creator

Auto-Gmail-Creator is an open-source automation script designed for Python enthusiasts to learn automation basics and for marketers to create multiple Google accounts efficiently. The script automates the process of creating Gmail accounts using sms-activate.org API for phone verification. It handles the download of Chromedriver or Geckodriver automatically and can be customized to prevent blocking. The tool is useful for projects related to automation, scraping, and machine learning.

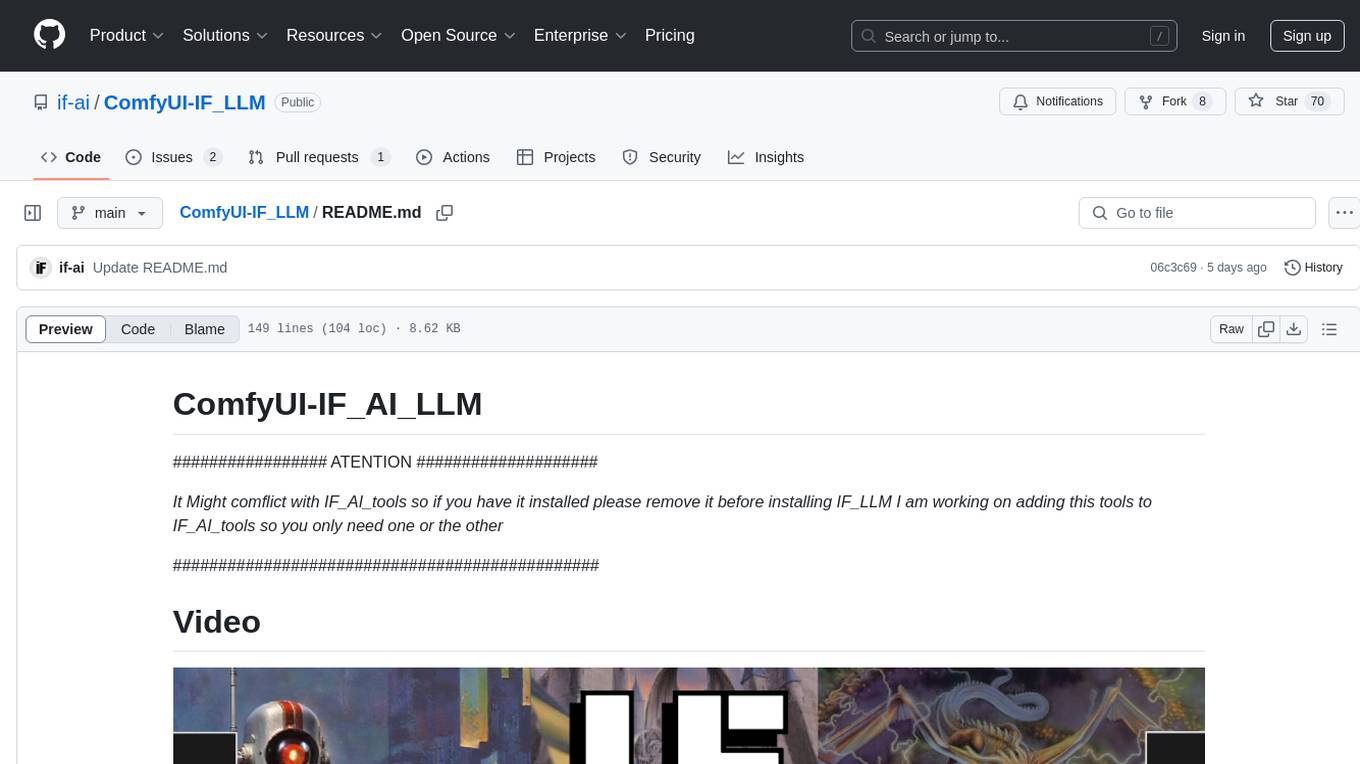

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

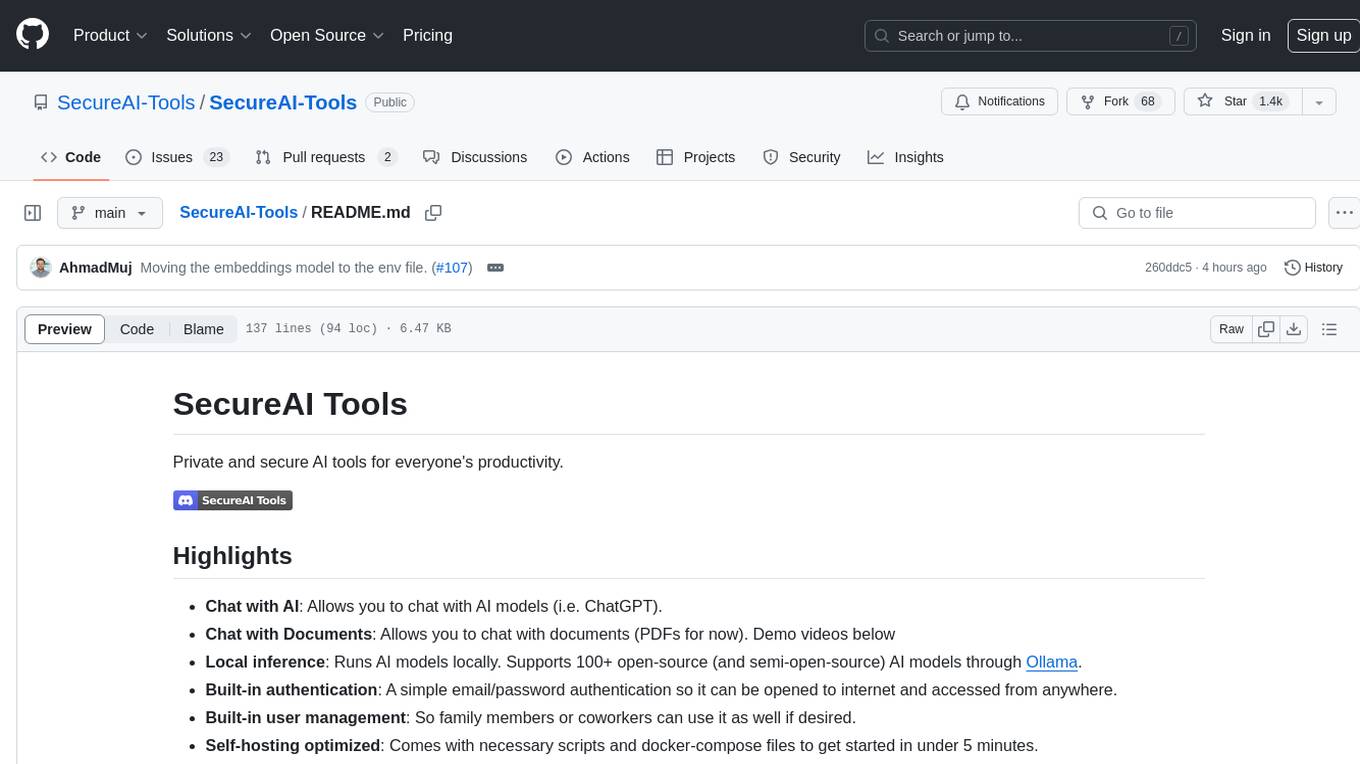

RirikoBot

RirikoBot is a powerful AI-powered Discord bot with features like Twitch Live Notifier, Giveaways, OpenAI, Stable Diffusion, Moderations, Anime / Manga Finder, and more. It is based on Discord.js v14 and can be hosted on a PC or a Server. Users can interact with the bot through various commands to access different functionalities.

LLMinator

LLMinator is a Gradio-based tool with an integrated chatbot designed to locally run and test Language Model Models (LLMs) directly from HuggingFace. It provides an easy-to-use interface made with Gradio, LangChain, and Torch, offering features such as context-aware streaming chatbot, inbuilt code syntax highlighting, loading any LLM repo from HuggingFace, support for both CPU and CUDA modes, enabling LLM inference with llama.cpp, and model conversion capabilities.

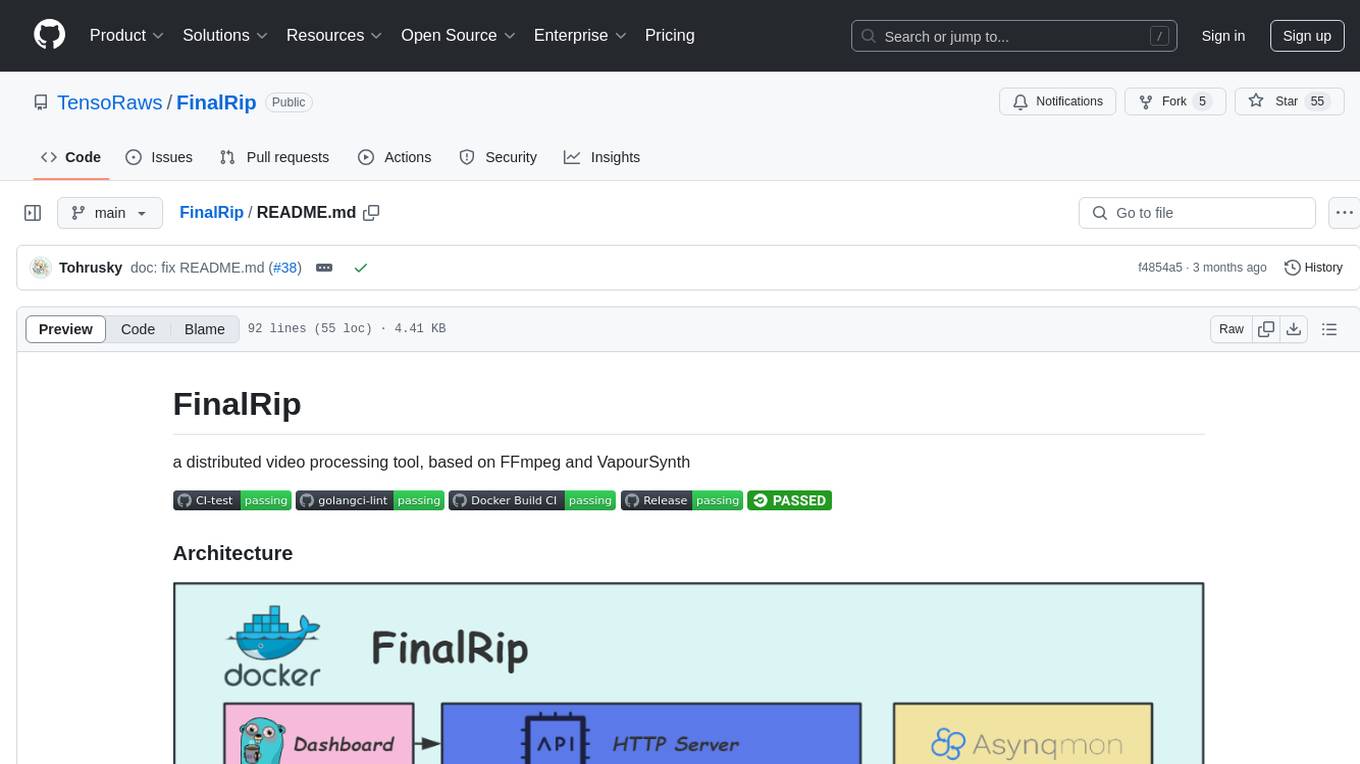

FinalRip

FinalRip is a distributed video processing tool based on FFmpeg and VapourSynth. It cuts the original video into multiple clips, processes each clip in parallel, and merges them into the final video. Users can deploy the system in a distributed way, configure settings via environment variables or remote config files, and develop/test scripts in the vs-playground environment. It supports Nvidia GPU, AMD GPU with ROCm support, and provides a dashboard for selecting compatible scripts to process videos.

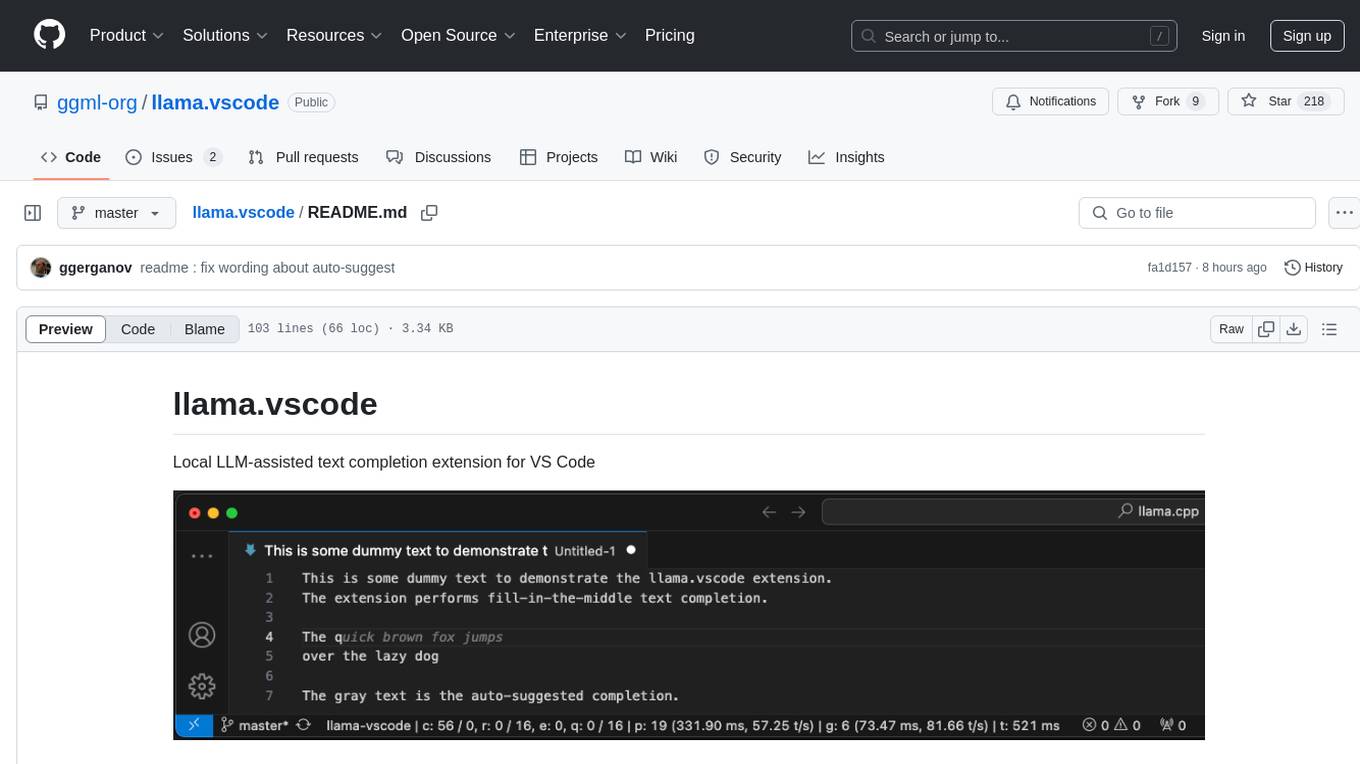

llama.vscode

llama.vscode is a local LLM-assisted text completion extension for Visual Studio Code. It provides auto-suggestions on input, allows accepting suggestions with shortcuts, and offers various features to enhance text completion. The extension is designed to be lightweight and efficient, enabling high-quality completions even on low-end hardware. Users can configure the scope of context around the cursor and control text generation time. It supports very large contexts and displays performance statistics for better user experience.

GeminiChatUp

Gemini ChatUp is a chat application utilizing the Google GeminiPro API Key. It supports responsive layout and can store multiple sets of conversations with customizable parameters for each set. Users can log in with a test account or provide their own API Key to deploy the feature. The application also offers user authentication through Edge config in Vercel, allowing users to add usernames and passwords in JSON format. Local deployment is possible by installing dependencies, setting up environment variables, and running the application locally.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

dstack

Dstack is an open-source orchestration engine for running AI workloads in any cloud. It supports a wide range of cloud providers (such as AWS, GCP, Azure, Lambda, TensorDock, Vast.ai, CUDO, RunPod, etc.) as well as on-premises infrastructure. With Dstack, you can easily set up and manage dev environments, tasks, services, and pools for your AI workloads.

open-cuak

Open CUAK (Computer Use Agent) is a platform for managing automation agents at scale, designed to run and manage thousands of automation agents with reliability. It allows for abundant productivity by ensuring scalability and profitability. The project aims to usher in a new era of work with equally distributed productivity, making it open-sourced for real businesses and real people. The core features include running operator-like automation workflows locally, vision-based automation, turning any browser into an operator-companion, utilizing a dedicated remote browser, and more.

tap4-ai-webui

Tap4 AI Web UI is an open source AI tools directory built by Tap4 AI Tools Directory. The project aims to help everyone build their own AI Tools Directory easily. Users can fork the project, deploy it to Vercel with one click, and update their own AI tools using the data list in the project. The web UI features internationalization, SEO friendliness, dynamic sitemap generation, fast shipping, NEXT 14 with app route, and integration with Supabase serverless database.

go-genai

The Google Gen AI Go SDK is a tool that allows developers to utilize Google's advanced generative AI models, such as Gemini, to create AI-powered features and applications. With this SDK, users can generate text from text-only input or text-and-images input (multimodal) with ease. The tool provides seamless integration with Google's AI models, enabling developers to harness the power of AI for various use cases.

ollama4j-web-ui

Ollama4j Web UI is a Java-based web interface built using Spring Boot and Vaadin framework for Ollama users with Java and Spring background. It allows users to interact with various models running on Ollama servers, providing a fully functional web UI experience. The project offers multiple ways to run the application, including via Docker, Docker Compose, or as a standalone JAR. Users can configure the environment variables and access the web UI through a browser. The project also includes features for error handling on the UI and settings pane for customizing default parameters.

For similar tasks

ComfyUI-IF_LLM

ComfyUI-IF_AI_LLM is a lighter version of ComfyUI-IF_AI_tools, providing custom nodes to run local and API LLMs and LMMs. It supports various models like Ollama, LlamaCPP, LMstudio, Koboldcpp, TextGen, Transformers, and APIs such as Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI. Users can create their own profiles (SystemPrompts) with custom presets. The tool offers features like xAI Grok Vision, Mistral, Google Gemini, Anthropic Haiku, OpenAI preview, auto prompts generation, image generation with IF_PROMPTImaGEN via Dalle3, and more. Installation involves searching for IF_LLM in the manager or manually installing ComfyUI-IF_AI_ImaGenPromptMaker by cloning the repository and installing requirements.

ComfyUI-IF_AI_tools

ComfyUI-IF_AI_tools is a set of custom nodes for ComfyUI that allows you to generate prompts using a local Large Language Model (LLM) via Ollama. This tool enables you to enhance your image generation workflow by leveraging the power of language models.

Awesome-AI-GPTs

Awesome AI GPTs is an open repository that collects resources and fun ways to use OpenAI GPTs. It includes databases, search tools, open-source projects, articles, attack and defense strategies, installation of custom plugins, knowledge bases, and community interactions related to GPTs. Users can find curated lists, leaked prompts, and various GPT applications in this repository. The project aims to empower users with AI capabilities and foster collaboration in the AI community.

kor

Kor is a prototype tool designed to help users extract structured data from text using Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool works with the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and schema is typed checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

Awesome-LLM-Survey

This repository, Awesome-LLM-Survey, serves as a comprehensive collection of surveys related to Large Language Models (LLM). It covers various aspects of LLM, including instruction tuning, human alignment, LLM agents, hallucination, multi-modal capabilities, and more. Researchers are encouraged to contribute by updating information on their papers to benefit the LLM survey community.

awesome-gpt-prompt-engineering

Awesome GPT Prompt Engineering is a curated list of resources, tools, and shiny things for GPT prompt engineering. It includes roadmaps, guides, techniques, prompt collections, papers, books, communities, prompt generators, Auto-GPT related tools, prompt injection information, ChatGPT plug-ins, prompt engineering job offers, and AI links directories. The repository aims to provide a comprehensive guide for prompt engineering enthusiasts, covering various aspects of working with GPT models and improving communication with AI tools.

ComfyUI_VLM_nodes

ComfyUI_VLM_nodes is a repository containing various nodes for utilizing Vision Language Models (VLMs) and Language Models (LLMs). The repository provides nodes for tasks such as structured output generation, image to music conversion, LLM prompt generation, automatic prompt generation, and more. Users can integrate different models like InternLM-XComposer2-VL, UForm-Gen2, Kosmos-2, moondream1, moondream2, JoyTag, and Chat Musician. The nodes support features like extracting keywords, generating prompts, suggesting prompts, and obtaining structured outputs. The repository includes examples and instructions for using the nodes effectively.

AI-Prompt-Genius

AI Prompt Genius is a Chrome extension that allows you to curate a custom library of AI prompts. It is built using React web app and Tailwind CSS with DaisyUI components. The extension enables users to create and manage AI prompts for various purposes. It provides a user-friendly interface for organizing and accessing AI prompts efficiently. AI Prompt Genius is designed to enhance productivity and creativity by offering a personalized collection of prompts tailored to individual needs. Users can easily install the extension from the Chrome Web Store and start using it to generate AI prompts for different tasks.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.