Code

AI Coding Agent Framework

Stars: 95

A3S Code is an embeddable AI coding agent framework in Rust for building agents that read, write, and execute code with tool access, planning, and safety controls. It is production-ready, with a permission system, HITL confirmation, skill-based tool restrictions, and error recovery. The framework is extensible through 19 trait-based extension points and uses a lane-based priority queue for scalable multi-machine task distribution.

README:

Embeddable AI coding agent framework in Rust — Build agents that read, write, and execute code with tool access, planning, and safety controls.

let agent = Agent::new("agent.hcl").await?;

let session = agent.session(".", None)?;

let result = session.send("Refactor auth to use JWT", None).await?;

- Embeddable: Rust library, not a service. Node.js and Python bindings included. CLI for terminal use.

- Production-Ready: Permission system, HITL confirmation, skill-based tool restrictions, and error recovery (parse retries, tool timeout, circuit breaker).

- Extensible: 19 trait-based extension points, all with working defaults. Slash commands, tool search, and multi-agent teams.

- Scalable: Lane-based priority queue with multi-machine task distribution.

# Rust

cargo add a3s-code-core

# Node.js

npm install @a3s-lab/code

# Python

pip install a3s-code

Create agent.hcl:

default_model = "anthropic/claude-sonnet-4-20250514"

providers {

name = "anthropic"

api_key = env("ANTHROPIC_API_KEY")

}

Rust

use a3s_code_core::{Agent, SessionOptions};

#[tokio::main]

async fn main() -> anyhow::Result<()> {

let agent = Agent::new("agent.hcl").await?;

let session = agent.session(".", None)?;

let result = session.send("What files handle authentication?", None).await?;

println!("{}", result.text);

// With options

let session = agent.session(".", Some(

SessionOptions::new()

.with_default_security()

.with_builtin_skills()

.with_planning(true)

))?;

let result = session.send("Refactor auth + update tests", None).await?;

Ok(())

}

TypeScript

import { Agent } from '@a3s-lab/code';

const agent = await Agent.create('agent.hcl');

const session = agent.session('.', {

defaultSecurity: true,

builtinSkills: true,

planning: true,

});

const result = await session.send('Refactor auth + update tests');

console.log(result.text);

Python

from a3s_code import Agent, SessionOptions

agent = Agent("agent.hcl")

session = agent.session(".", SessionOptions(

default_security=True,

builtin_skills=True,

planning=True,

))

result = session.send("Refactor auth + update tests")

print(result.text)

| Category | Tools | Description |

|---|---|---|

| File Operations | read, write, edit, patch | Read/write files, apply diffs |

| Search | grep, glob, ls | Search content, find files, list directories |

| Execution | bash | Execute shell commands |

| Sandbox | sandbox | MicroVM execution via A3S Box (sandbox feature) |

| Web | web_fetch, web_search | Fetch URLs, search the web |

| Git | git_worktree | Create/list/remove/status git worktrees for parallel work |

| Subagents | task | Delegate to specialized child agents |

| Parallel | batch | Execute multiple tools concurrently in one call |

Permission System — Allow/Deny/Ask rules per tool with wildcard matching:

use a3s_code_core::permissions::PermissionPolicy;

SessionOptions::new()

.with_permission_checker(Arc::new(

PermissionPolicy::new()

.allow("read(*)")

.deny("bash(*)")

.ask("write(*)")

))

Default Security Provider automatically redacts PII (SSN, API keys, emails, credit cards), detects prompt injection, and applies SHA256 hashing:

SessionOptions::new().with_default_security()

HITL Confirmation requires human approval for sensitive operations:

SessionOptions::new()

.with_confirmation_manager(Arc::new(

ConfirmationManager::new(ConfirmationPolicy::enabled(), event_tx)

))

Skill-Based Tool Restrictions: skills define their allowed tools via the allowed-tools field, which is enforced at execution time.
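The Allow/Deny/Ask rules with wildcard matching described under Permission System can be illustrated with a small standalone sketch. It does not use the a3s_code_core API: the `Rule` and `Decision` types, the `wildcard_match` and `evaluate` functions, the precedence (Deny over Ask over Allow), and the fallback to Ask when nothing matches are all assumptions made for illustration, not the behavior of PermissionPolicy.

```rust
/// Outcome of checking a tool call against the rule list.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Decision {
    Allow,
    Deny,
    Ask,
}

/// Hypothetical rule type: a pattern such as "bash(*)" and the decision it yields.
struct Rule {
    pattern: &'static str,
    decision: Decision,
}

/// Returns true if `text` matches `pattern`, where `*` matches any run of
/// characters (including none) and every other character matches literally.
fn wildcard_match(pattern: &str, text: &str) -> bool {
    let p: Vec<char> = pattern.chars().collect();
    let t: Vec<char> = text.chars().collect();
    let (mut pi, mut ti) = (0usize, 0usize);
    // Pattern index of the last `*` seen and the text index it was tried at.
    let mut star: Option<(usize, usize)> = None;
    while ti < t.len() {
        if pi < p.len() && p[pi] == '*' {
            star = Some((pi, ti));
            pi += 1;
        } else if pi < p.len() && p[pi] == t[ti] {
            pi += 1;
            ti += 1;
        } else if let Some((sp, st)) = star {
            // Mismatch: backtrack and let the last `*` absorb one more character.
            star = Some((sp, st + 1));
            pi = sp + 1;
            ti = st + 1;
        } else {
            return false;
        }
    }
    // Text is consumed; the rest of the pattern may only be `*`.
    p[pi..].iter().all(|&c| c == '*')
}

/// Assumed precedence: Deny beats Ask beats Allow; no matching rule means Ask.
fn evaluate(rules: &[Rule], call: &str) -> Decision {
    let matched: Vec<Decision> = rules
        .iter()
        .filter(|r| wildcard_match(r.pattern, call))
        .map(|r| r.decision)
        .collect();
    if matched.contains(&Decision::Deny) {
        Decision::Deny
    } else if matched.contains(&Decision::Allow) && !matched.contains(&Decision::Ask) {
        Decision::Allow
    } else {
        Decision::Ask
    }
}

fn main() {
    let rules = [
        Rule { pattern: "read(*)", decision: Decision::Allow },
        Rule { pattern: "bash(*)", decision: Decision::Deny },
        Rule { pattern: "write(*)", decision: Decision::Ask },
    ];
    for call in ["read(src/main.rs)", "bash(cargo test)", "write(notes.md)", "glob(**/*.rs)"] {
        println!("{} -> {:?}", call, evaluate(&rules, call));
    }
}
```

Falling back to Ask for unmatched calls is a conservative choice for this sketch; a real policy may default differently.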

7 built-in skills (4 code assistance + 3 tool documentation). Custom skills are Markdown files with YAML frontmatter:

---

name: api-design

description: Review API design for RESTful principles

allowed-tools: "read(*), grep(*)"

kind: instruction

tags: [api, design]

version: 1.0.0

---

# API Design Review

Check for RESTful principles, naming conventions, error handling.

SessionOptions::new()

.with_builtin_skills() // Enable all 7 built-in skills

.with_skills_from_dir("./skills") // Load custom skills

Decompose complex tasks into dependency-aware execution plans with wave-based parallel execution:

SessionOptions::new()

.with_planning(true)

.with_goal_tracking(true)

The planner creates steps with dependencies. Independent steps execute in parallel waves via tokio::JoinSet. Goal tracking monitors progress across multiple turns.
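To make the idea of waves concrete, here is a minimal sketch that groups dependency-aware steps into waves using only the standard library. The `Step` type and `plan_waves` function are hypothetical and are not part of a3s_code_core. The sketch only computes which steps could run together; it does not spawn tasks on a tokio::JoinSet.

```rust
use std::collections::HashSet;

/// Hypothetical plan step: an id plus the ids of the steps it depends on.
struct Step {
    id: &'static str,
    deps: Vec<&'static str>,
}

/// Groups steps into waves so that each step only depends on steps from
/// earlier waves. Returns None on a cycle or an unknown dependency.
fn plan_waves(steps: &[Step]) -> Option<Vec<Vec<&'static str>>> {
    let mut done: HashSet<&'static str> = HashSet::new();
    let mut waves = Vec::new();
    while done.len() < steps.len() {
        // Ready = not yet scheduled, and every dependency already scheduled.
        let ready: Vec<&'static str> = steps
            .iter()
            .filter(|s| !done.contains(s.id))
            .filter(|s| s.deps.iter().all(|d| done.contains(d)))
            .map(|s| s.id)
            .collect();
        if ready.is_empty() {
            return None; // nothing can make progress
        }
        done.extend(ready.iter().copied());
        waves.push(ready);
    }
    Some(waves)
}

fn main() {
    let steps = vec![
        Step { id: "read_auth", deps: vec![] },
        Step { id: "read_tests", deps: vec![] },
        Step { id: "refactor", deps: vec!["read_auth"] },
        Step { id: "update_tests", deps: vec!["refactor", "read_tests"] },
    ];
    match plan_waves(&steps) {
        Some(waves) => {
            for (i, wave) in waves.iter().enumerate() {
                println!("wave {}: {:?}", i, wave);
            }
        }
        None => println!("plan has a cycle or a missing dependency"),
    }
}
```

Steps within one wave have no dependencies on each other, which is what would make them safe to run concurrently.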

Send image attachments alongside text prompts. Requires a vision-capable model (Claude Sonnet, GPT-4o).

use a3s_code_core::Attachment;

// Send a prompt with an image

let image = Attachment::from_file("screenshot.png")?;

let result = session.send_with_attachments(

"What's in this screenshot?",

&[image],

None,

).await?;

// Tools can return images too

let output = ToolOutput::success("Screenshot captured")

.with_images(vec![Attachment::png(screenshot_bytes)]);

Supported formats: JPEG, PNG, GIF, WebP. Image data is base64-encoded for both Anthropic and OpenAI providers.

Interactive session commands dispatched before the LLM. Custom commands via the SlashCommand trait:

| Command | Description |

|---|---|

| /help | List available commands |

| /compact | Manually trigger context compaction |

| /cost | Show token usage and estimated cost |

| /model | Show or switch the current model |

| /clear | Clear conversation history |

| /history | Show conversation turn count and token stats |

| /tools | List registered tools |

use a3s_code_core::commands::{SlashCommand, CommandContext, CommandOutput};

struct PingCommand;

impl SlashCommand for PingCommand {

fn name(&self) -> &str { "ping" }

fn description(&self) -> &str { "Pong!" }

fn execute(&self, _args: &str, _ctx: &CommandContext) -> CommandOutput {

CommandOutput::text("pong")

}

}

session.register_command(Arc::new(PingCommand));

When the MCP ecosystem grows large (100+ tools), injecting all tool descriptions wastes context. Tool Search selects only the relevant tools per turn based on keyword matching:

use a3s_code_core::tool_search::{ToolIndex, ToolSearchConfig};

let mut index = ToolIndex::new(ToolSearchConfig::default());

index.add("mcp__github__create_issue", "Create a GitHub issue", &["github", "issue"]);

index.add("mcp__postgres__query", "Run a SQL query", &["sql", "database"]);

// Integrated into AgentLoop: filters tools automatically before each LLM call

When configured via AgentConfig::tool_index, the agent loop extracts the last user message, searches the index, and sends only matching tools to the LLM. Builtin tools are always included.
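The per-turn filtering described above can be sketched as a simple keyword lookup. This is a simplified stand-in, not the ToolIndex implementation: `KeywordIndex`, `ToolEntry`, the hit-count scoring, and the two-argument `add` (tool descriptions are omitted) are assumptions made for illustration.

```rust
/// Hypothetical index entry: a tool name and the keywords that select it.
struct ToolEntry {
    name: String,
    keywords: Vec<String>,
}

/// Simplified stand-in for a keyword-based tool index.
struct KeywordIndex {
    entries: Vec<ToolEntry>,
}

impl KeywordIndex {
    fn new() -> Self {
        KeywordIndex { entries: Vec::new() }
    }

    fn add(&mut self, name: &str, keywords: &[&str]) {
        self.entries.push(ToolEntry {
            name: name.to_string(),
            keywords: keywords.iter().map(|k| k.to_lowercase()).collect(),
        });
    }

    /// Returns up to `limit` tool names whose keywords occur in `query`,
    /// ordered by number of keyword hits (ties keep insertion order).
    fn search(&self, query: &str, limit: usize) -> Vec<&str> {
        let lowered = query.to_lowercase();
        let tokens: Vec<&str> = lowered
            .split(|c: char| !c.is_alphanumeric())
            .filter(|w| !w.is_empty())
            .collect();
        let mut scored: Vec<(usize, &str)> = self
            .entries
            .iter()
            .map(|e| {
                let hits = e
                    .keywords
                    .iter()
                    .filter(|k| tokens.contains(&k.as_str()))
                    .count();
                (hits, e.name.as_str())
            })
            .filter(|(hits, _)| *hits > 0)
            .collect();
        scored.sort_by(|a, b| b.0.cmp(&a.0)); // stable sort: best score first
        scored.into_iter().take(limit).map(|(_, name)| name).collect()
    }
}

fn main() {
    let mut index = KeywordIndex::new();
    index.add("mcp__github__create_issue", &["github", "issue"]);
    index.add("mcp__postgres__query", &["sql", "database"]);
    // Only tools matching the last user message would be sent to the LLM.
    println!("{:?}", index.search("Open a GitHub issue for the login bug", 5));
}
```

A query with no keyword hits returns an empty list, which in the described design would leave only the builtin tools in the request.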

Peer-to-peer multi-agent collaboration through a shared task board and message passing:

use a3s_code_core::agent_teams::{AgentTeam, TeamConfig, TeamRole};

let mut team = AgentTeam::new("refactor-auth", TeamConfig::default());

team.add_member("lead", TeamRole::Lead);

team.add_member("worker-1", TeamRole::Worker);

team.add_member("reviewer", TeamRole::Reviewer);

// Post a task to the board

team.task_board().post("Refactor auth module", "lead", None);

// Worker claims and works on it

let task = team.task_board().claim("worker-1");

// Complete → Review → Approve/Reject workflow

team.task_board().complete("task-1", "Refactored to JWT");

team.task_board().approve("task-1");

Supports Lead/Worker/Reviewer roles, mpsc peer messaging, broadcast, and a full task lifecycle (Open → InProgress → InReview → Done/Rejected).
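The task lifecycle above can be read as a small state machine. The sketch below encodes the transitions named in the text; the `TaskStatus` and `TaskEvent` enums and the `transition` function are hypothetical names, and treating Done and Rejected as terminal states is an assumption.

```rust
/// Lifecycle states named in the text.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum TaskStatus {
    Open,
    InProgress,
    InReview,
    Done,
    Rejected,
}

/// Hypothetical events that move a task between states.
#[derive(Debug, Clone, Copy)]
enum TaskEvent {
    Claim,
    Complete,
    Approve,
    Reject,
}

/// Applies one event to a status, rejecting transitions the lifecycle does not list.
fn transition(status: TaskStatus, event: TaskEvent) -> Result<TaskStatus, String> {
    match (status, event) {
        (TaskStatus::Open, TaskEvent::Claim) => Ok(TaskStatus::InProgress),
        (TaskStatus::InProgress, TaskEvent::Complete) => Ok(TaskStatus::InReview),
        (TaskStatus::InReview, TaskEvent::Approve) => Ok(TaskStatus::Done),
        (TaskStatus::InReview, TaskEvent::Reject) => Ok(TaskStatus::Rejected),
        (s, e) => Err(format!("cannot apply {:?} to a task that is {:?}", e, s)),
    }
}

fn main() {
    // Walk one task through claim, complete, approve.
    let mut status = TaskStatus::Open;
    for event in [TaskEvent::Claim, TaskEvent::Complete, TaskEvent::Approve] {
        match transition(status, event) {
            Ok(next) => {
                println!("{:?} --{:?}--> {:?}", status, event, next);
                status = next;
            }
            Err(msg) => {
                println!("{}", msg);
                break;
            }
        }
    }
}
```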

Customize the agent's behavior without overriding the core agentic capabilities. The default prompt (tool usage strategy, autonomous behavior, completion criteria) is always preserved:

use a3s_code_core::{SessionOptions, SystemPromptSlots};

SessionOptions::new()

.with_prompt_slots(SystemPromptSlots {

role: Some("You are a senior Rust developer".into()),

guidelines: Some("Use clippy. No unwrap(). Prefer Result.".into()),

response_style: Some("Be concise. Use bullet points.".into()),

extra: Some("This project uses tokio and axum.".into()),

})

| Slot | Position | Behavior |

|---|---|---|

| role | Before core | Replaces default "You are A3S Code..." identity |

| guidelines | After core | Appended as ## Guidelines section |

| response_style | Replaces section | Replaces default ## Response Format |

| extra | End | Freeform instructions (backward-compatible) |
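One way to picture how the four slots combine with the preserved core prompt is the sketch below. It is not the framework's prompt builder: the `Slots` struct, `build_prompt`, the placeholder constants, and the placement of the Response Format section after the guidelines are assumptions for illustration only.

```rust
/// Hypothetical mirror of the four slots in the table.
#[derive(Default)]
struct Slots {
    role: Option<String>,
    guidelines: Option<String>,
    response_style: Option<String>,
    extra: Option<String>,
}

// Placeholder texts; the real default prompt is not reproduced here.
const DEFAULT_ROLE: &str = "You are A3S Code...";
const CORE_PROMPT: &str = "[core agentic instructions]";
const DEFAULT_RESPONSE_FORMAT: &str = "[default response format]";

/// Assembles a prompt: role before the core, guidelines after it,
/// response style replacing the default section, extra at the end.
fn build_prompt(slots: &Slots) -> String {
    let mut parts: Vec<String> = Vec::new();
    // role replaces the default identity line.
    parts.push(slots.role.clone().unwrap_or_else(|| DEFAULT_ROLE.to_string()));
    // The core prompt is always kept.
    parts.push(CORE_PROMPT.to_string());
    if let Some(g) = &slots.guidelines {
        parts.push(format!("## Guidelines\n{}", g));
    }
    let style = slots.response_style.as_deref().unwrap_or(DEFAULT_RESPONSE_FORMAT);
    parts.push(format!("## Response Format\n{}", style));
    if let Some(e) = &slots.extra {
        parts.push(e.clone());
    }
    parts.join("\n\n")
}

fn main() {
    let slots = Slots {
        role: Some("You are a senior Rust developer".to_string()),
        guidelines: Some("Use clippy. No unwrap(). Prefer Result.".to_string()),
        ..Default::default()
    };
    println!("{}", build_prompt(&slots));
}
```

The point of the slot design, as the text describes it, is that no slot can remove the core section; in this sketch `CORE_PROMPT` is pushed unconditionally.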

TypeScript

const session = agent.session('.', {

role: 'You are a senior Rust developer',

guidelines: 'Use clippy. No unwrap(). Prefer Result.',

responseStyle: 'Be concise. Use bullet points.',

extra: 'This project uses tokio and axum.',

});

Python

opts = SessionOptions()

opts.role = "You are a senior Rust developer"

opts.guidelines = "Use clippy. No unwrap(). Prefer Result."

opts.response_style = "Be concise. Use bullet points."

opts.extra = "This project uses tokio and axum."

session = agent.session(".", opts)

Interactive AI coding agent in the terminal:

# Install

cargo install a3s-code-cli

# Interactive REPL

a3s-code

# One-shot mode

a3s-code "Explain the auth module"

# Custom config

a3s-code -c agent.hcl -m openai/gpt-4o "Fix the tests"

Config auto-discovery: -c flag → A3S_CONFIG env → ~/.a3s/config.hcl → ./agent.hcl
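The lookup order can be expressed as a first-match chain. The following sketch is an illustration, not the CLI's code: `resolve_config` and its parameters are hypothetical, and it assumes that an explicit -c flag or A3S_CONFIG value is used as given while the two default paths are used only if the file exists.

```rust
use std::path::{Path, PathBuf};

/// Returns the first config location that applies, in the documented order:
/// -c flag, A3S_CONFIG, ~/.a3s/config.hcl, ./agent.hcl.
/// `exists` is injected so the lookup can be exercised without touching disk.
fn resolve_config(
    cli_flag: Option<&str>,
    env_value: Option<&str>,
    home: Option<&Path>,
    exists: impl Fn(&Path) -> bool,
) -> Option<PathBuf> {
    if let Some(p) = cli_flag {
        return Some(PathBuf::from(p));
    }
    if let Some(p) = env_value {
        return Some(PathBuf::from(p));
    }
    if let Some(h) = home {
        let candidate = h.join(".a3s").join("config.hcl");
        if exists(&candidate) {
            return Some(candidate);
        }
    }
    let local = PathBuf::from("./agent.hcl");
    if exists(&local) {
        return Some(local);
    }
    None
}

fn main() {
    // Hypothetical wiring: no -c flag here, the rest comes from the environment.
    let env_value = std::env::var("A3S_CONFIG").ok();
    let home = std::env::var_os("HOME").map(PathBuf::from);
    let found = resolve_config(None, env_value.as_deref(), home.as_deref(), |p| p.exists());
    println!("{:?}", found);
}
```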

Tool execution is routed through a priority queue backed by a3s-lane:

| Lane | Priority | Tools | Behavior |

|---|---|---|---|

| Control | P0 (highest) | pause, resume, cancel | Sequential |

| Query | P1 | read, glob, grep, ls, web_fetch, web_search | Parallel |

| Execute | P2 | bash, write, edit, delete | Sequential |

| Generate | P3 (lowest) | LLM calls | Sequential |

Higher-priority tasks preempt queued lower-priority tasks. Configure per-lane concurrency:

let queue_config = SessionQueueConfig {

query_max_concurrency: 10,

execute_max_concurrency: 5,

enable_metrics: true,

..Default::default()

};

SessionOptions::new().with_queue_config(queue_config)

Advanced features: retry policies, rate limiting, priority boost, pressure monitoring, DLQ.
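As a rough picture of how lane priorities order queued work, the sketch below uses a standard-library BinaryHeap. It is not the a3s-lane implementation: `Lane`, `Task`, and `LaneQueue` are hypothetical names, and the sketch models only dequeue order (lane first, then FIFO within a lane), not per-lane concurrency limits, retries, or the DLQ.

```rust
use std::cmp::Ordering;
use std::collections::BinaryHeap;

/// Lanes from the table; a lower discriminant means a higher priority.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Lane {
    Control = 0,
    Query = 1,
    Execute = 2,
    Generate = 3,
}

#[derive(Debug, PartialEq, Eq)]
struct Task {
    lane: Lane,
    seq: u64, // insertion counter, used for FIFO order within a lane
    name: String,
}

impl Ord for Task {
    fn cmp(&self, other: &Self) -> Ordering {
        // BinaryHeap is a max-heap, so both keys are reversed:
        // the lowest lane number, then the lowest sequence number, pops first.
        other
            .lane
            .cmp(&self.lane)
            .then_with(|| other.seq.cmp(&self.seq))
    }
}

impl PartialOrd for Task {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

struct LaneQueue {
    heap: BinaryHeap<Task>,
    next_seq: u64,
}

impl LaneQueue {
    fn new() -> Self {
        LaneQueue { heap: BinaryHeap::new(), next_seq: 0 }
    }

    fn push(&mut self, lane: Lane, name: &str) {
        let task = Task { lane, seq: self.next_seq, name: name.to_string() };
        self.next_seq += 1;
        self.heap.push(task);
    }

    fn pop(&mut self) -> Option<Task> {
        self.heap.pop()
    }
}

fn main() {
    let mut queue = LaneQueue::new();
    queue.push(Lane::Generate, "llm call");
    queue.push(Lane::Execute, "bash");
    queue.push(Lane::Query, "grep");
    queue.push(Lane::Control, "cancel");
    queue.push(Lane::Query, "read");
    while let Some(task) = queue.pop() {
        println!("{:?}: {}", task.lane, task.name);
    }
}
```

Because the queue always pops the highest-priority lane first, a Control task enqueued last is still dequeued before earlier Query, Execute, or Generate tasks.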

Route bash commands through an A3S Box MicroVM for isolated execution. Requires the sandbox Cargo feature.

Transparent routing — configure once, bash tool uses sandbox automatically:

use a3s_code_core::{SessionOptions, SandboxConfig};

SessionOptions::new().with_sandbox(SandboxConfig {

image: "ubuntu:22.04".into(),

memory_mb: 512,

network: false,

..SandboxConfig::default()

})

Explicit sandbox tool: with the sandbox feature enabled, the LLM can call the sandbox tool directly. The workspace is mounted at /workspace inside the MicroVM.

Enable:

a3s-code-core = { version = "0.7", features = ["sandbox"] }

Offload tool execution to external workers via three handler modes:

| Mode | Behavior |

|---|---|

| Internal (default) | Execute within agent process |

| External | Send to external workers, wait for completion |

| Hybrid | Execute internally + notify external observers |

// Route Execute lane to external workers

session.set_lane_handler(SessionLane::Execute, LaneHandlerConfig {

mode: TaskHandlerMode::External,

timeout_ms: 120_000,

}).await;

// Worker loop

let tasks = session.pending_external_tasks().await;

for task in tasks {

let result = execute_task(&task).await;

session.complete_external_task(&task.task_id, result).await;

}

All policies are replaceable via traits with working defaults:

| Extension Point | Purpose | Default |

|---|---|---|

| SecurityProvider | Input taint, output sanitization | DefaultSecurityProvider |

| PermissionChecker | Tool access control | PermissionPolicy |

| ConfirmationProvider | Human confirmation | ConfirmationManager |

| ContextProvider | RAG retrieval | FileSystemContextProvider |

| EmbeddingProvider | Vector embeddings for semantic search | OpenAiEmbeddingProvider |

| VectorStore | Embedding storage and similarity search | InMemoryVectorStore |

| SessionStore | Session persistence | FileSessionStore |

| MemoryStore | Long-term memory backend (from a3s-memory) | InMemoryStore |

| Tool | Custom tools | 13 built-in tools |

| Planner | Task decomposition | LlmPlanner |

| HookHandler | Event handling | HookEngine |

| HookExecutor | Event execution | HookEngine |

| McpTransport | MCP protocol | StdioTransport |

| HttpClient | HTTP requests | ReqwestClient |

| SessionCommand | Queue tasks | ToolCommand |

| LlmClient | LLM interface | Anthropic/OpenAI |

| BashSandbox | Shell execution isolation | LocalBashExecutor |

| SkillValidator | Skill activation logic | DefaultSkillValidator |

| SkillScorer | Skill relevance ranking | DefaultSkillScorer |

// Example: custom security provider

impl SecurityProvider for MyProvider {

fn taint_input(&self, text: &str) { /* ... */ }

fn sanitize_output(&self, text: &str) -> String { /* ... */ }

}

SessionOptions::new().with_security_provider(Arc::new(MyProvider))

5 core components (stable, not replaceable) + 19 extension points (replaceable via traits):

Agent (config-driven)

├── CommandRegistry (slash commands: /help, /cost, /model, /clear, ...)

└── AgentSession (workspace-bound)

├── AgentLoop (core execution engine)

│ ├── ToolExecutor (13 built-in tools, batch parallel execution)

│ ├── ToolIndex (per-turn tool filtering for large MCP sets)

│ ├── SystemPromptSlots (role, guidelines, response_style, extra)

│ ├── Planning (task decomposition + wave execution)

│ └── HITL Confirmation

├── SessionLaneQueue (a3s-lane backed)

│ ├── Control (P0) → Query (P1) → Execute (P2) → Generate (P3)

│ └── External Task Distribution

├── HookEngine (8 lifecycle events)

├── Security (PII redaction, injection detection)

├── Skills (instruction injection + tool permissions)

├── Context (RAG providers: filesystem, vector)

└── Memory (AgentMemory: working/short-term/long-term via a3s-memory)

AgentTeam (multi-agent coordination)

├── TeamTaskBoard (post → claim → complete → review → approve/reject)

├── TeamMember[] (Lead, Worker, Reviewer roles)

└── mpsc channels (peer-to-peer messaging + broadcast)

A3S Code uses HCL configuration format exclusively.

default_model = "anthropic/claude-sonnet-4-20250514"

providers {

name = "anthropic"

api_key = env("ANTHROPIC_API_KEY")

}

default_model = "anthropic/claude-sonnet-4-20250514"

providers {

name = "anthropic"

api_key = env("ANTHROPIC_API_KEY")

}

providers {

name = "openai"

api_key = env("OPENAI_API_KEY")

}

queue {

query_max_concurrency = 10

execute_max_concurrency = 5

enable_metrics = true

enable_dlq = true

retry_policy {

strategy = "exponential"

max_retries = 3

initial_delay_ms = 100

}

rate_limit {

limit_type = "per_second"

max_operations = 100

}

priority_boost {

strategy = "standard"

deadline_ms = 300000

}

pressure_threshold = 50

}

search {

timeout = 30

engine {

google { enabled = true, weight = 1.5 }

bing { enabled = true, weight = 1.0 }

}

}

storage_backend = "file"

sessions_dir = "./sessions"

skill_dirs = ["./skills"]

agent_dirs = ["./agents"]

max_tool_rounds = 50

thinking_budget = 10000

let agent = Agent::new("agent.hcl").await?; // From file

let agent = Agent::new(hcl_string).await?; // From string

let agent = Agent::from_config(config).await?; // From struct

let session = agent.session(".", None)?; // Create session

let session = agent.session(".", Some(options))?; // With options

let session = agent.resume_session("id", options)?; // Resume saved session

// Prompt execution

let result = session.send("prompt", None).await?;

let (rx, handle) = session.stream("prompt", None).await?;

// Multi-modal (vision)

let result = session.send_with_attachments("Describe", &[image], None).await?;

let (rx, handle) = session.stream_with_attachments("Describe", &[image], None).await?;

// Session persistence

session.save().await?;

let id = session.session_id();

// Slash commands

session.register_command(Arc::new(MyCommand));

let registry = session.command_registry();

// Memory

session.remember_success("task", &["tool"], "result").await?;

session.recall_similar("query", 5).await?;

// Direct tool access

let content = session.read_file("src/main.rs").await?;

let output = session.bash("cargo test").await?;

let files = session.glob("**/*.rs").await?;

let matches = session.grep("TODO").await?;

let result = session.tool("edit", args).await?;

// Queue management

session.set_lane_handler(lane, config).await;

let tasks = session.pending_external_tasks().await;

session.complete_external_task(&task_id, result).await;

let stats = session.queue_stats().await;

let metrics = session.queue_metrics().await;

let dead = session.dead_letters().await;

SessionOptions::new()

// Security

.with_default_security()

.with_security_provider(Arc::new(MyProvider))

// Skills

.with_builtin_skills()

.with_skills_from_dir("./skills")

// Planning

.with_planning(true)

.with_goal_tracking(true)

// Context / RAG

.with_fs_context(".")

.with_context_provider(provider)

// Memory

.with_file_memory("./memory")

// Session persistence

.with_file_session_store("./sessions")

// Auto-compact

.with_auto_compact(true)

.with_auto_compact_threshold(0.80)

// Error recovery

.with_parse_retries(3)

.with_tool_timeout(30_000)

.with_circuit_breaker(5)

// Queue

.with_queue_config(queue_config)

// Prompt customization (slot-based, preserves core agentic behavior)

.with_prompt_slots(SystemPromptSlots {

role: Some("You are a Python expert".into()),

guidelines: Some("Follow PEP 8".into()),

..Default::default()

})

// Extensions

.with_permission_checker(policy)

.with_confirmation_manager(mgr)

.with_skill_registry(registry)

.with_hook_engine(hooks)

from a3s_code import Agent, SessionOptions, builtin_skills

# Create agent

agent = Agent("agent.hcl")

# Create session

opts = SessionOptions()

opts.model = "anthropic/claude-sonnet-4-20250514"

opts.builtin_skills = True

opts.role = "You are a Python expert"

opts.guidelines = "Follow PEP 8. Use type hints."

session = agent.session(".", opts)

# Send / Stream

result = session.send("Explain auth module")

for event in session.stream("Refactor auth"):

if event.event_type == "text_delta":

print(event.text, end="")

# Direct tools

content = session.read_file("src/main.py")

output = session.bash("pytest")

files = session.glob("**/*.py")

matches = session.grep("TODO")

result = session.tool("git_worktree", {"command": "list"})

# Memory

session.remember_success("task", ["tool"], "result")

items = session.recall_similar("auth", 5)

# Hooks

session.register_hook("audit", "pre_tool_use", handler_fn)

# Queue

stats = session.queue_stats()

dead = session.dead_letters()

# Persistence

session.save()

resumed = agent.resume_session(session.session_id, opts)

import { Agent } from '@a3s-lab/code';

// Create agent

const agent = await Agent.create('agent.hcl');

// Create session

const session = agent.session('.', {

model: 'anthropic/claude-sonnet-4-20250514',

builtinSkills: true,

role: 'You are a TypeScript expert',

guidelines: 'Use strict mode. Prefer interfaces over types.',

});

// Send / Stream

const result = await session.send('Explain auth module');

const stream = await session.stream('Refactor auth');

for await (const event of stream) {

if (event.type === 'text_delta') process.stdout.write(event.text);

}

// Direct tools

const content = await session.readFile('src/main.ts');

const output = await session.bash('npm test');

const files = await session.glob('**/*.ts');

const matches = await session.grep('TODO');

const toolResult = await session.tool('git_worktree', { command: 'list' });

// Memory

await session.rememberSuccess('task', ['tool'], 'result');

const items = await session.recallSimilar('auth', 5);

// Hooks

session.registerHook('audit', 'pre_tool_use', handlerFn);

// Queue

const stats = await session.queueStats();

const dead = await session.deadLetters();

// Persistence

await session.save();

const resumed = agent.resumeSession(session.sessionId, options);

All examples use real LLM configuration from ~/.a3s/config.hcl or $A3S_CONFIG.

| # | Example | Feature |

|---|---|---|

| 01 | 01_basic_send | Non-streaming prompt execution |

| 02 | 02_streaming | Real-time AgentEvent stream |

| 03 | 03_multi_turn | Context preservation across turns |

| 04 | 04_model_switching | Provider/model override + temperature |

| 05 | 05_planning | Task decomposition + goal tracking |

| 06 | 06_skills_security | Built-in skills + security provider |

| 07 | 07_direct_tools | Bypass LLM, call tools directly |

| 08 | 08_hooks | Lifecycle event interception |

| 09 | 09_queue_lanes | Priority-based tool scheduling |

| 10 | 10_resilience | Auto-compaction, circuit breaker, parse retries |

cargo run --example 01_basic_send

cargo run --example 02_streaming

# ... through 10_resilience

| Language | File | Coverage |

|---|---|---|

| Rust | core/examples/test_git_worktree.rs | Git worktree tool: direct calls + LLM-driven |

| Rust | core/examples/test_prompt_slots.rs | Prompt slots: role, guidelines, response style, extra |

| Python | sdk/python/examples/agentic_loop_demo.py | Basic send, streaming, multi-turn, planning, skills, security |

| Python | sdk/python/examples/advanced_features_demo.py | Direct tools, hooks, queue/lanes, security, resilience, memory |

| Python | sdk/python/examples/test_git_worktree.py | Git worktree tool: direct calls + LLM-driven |

| Python | sdk/python/examples/test_prompt_slots.py | Prompt slots: role, guidelines, response style, extra |

| Node.js | sdk/node/examples/agentic_loop_demo.js | Basic send, streaming, multi-turn, planning, skills, security |

| Node.js | sdk/node/examples/advanced_features_demo.js | Direct tools, hooks, queue/lanes, security, resilience, memory |

| Node.js | sdk/node/examples/test_git_worktree.js | Git worktree tool: direct calls + LLM-driven |

| Node.js | sdk/node/examples/test_prompt_slots.js | Prompt slots: role, guidelines, response style, extra |

- integration_tests: Complete feature test suite

- test_task_priority: Lane-based priority preemption with real LLM

- test_external_task_handler: Multi-machine coordinator/worker pattern

- test_lane_features: A3S Lane v0.4.0 advanced features

- test_builtin_skills: Built-in skills demonstration

- test_custom_skills_agents: Custom skills and agent definitions

- test_search_config: Web search configuration

- test_auto_compact: Context window auto-compaction

- test_security: Default and custom SecurityProvider

- test_batch_tool: Parallel tool execution via batch

- test_vector_rag: Semantic code search with filesystem context

- test_hooks: Lifecycle hook handlers (audit, block, transform)

- test_parallel_processing: Concurrent multi-session workloads

- test_git_worktree: Git worktree tool: create, list, remove, status + LLM-driven

- test_prompt_slots: System prompt slots: role, guidelines, response style, extra + tool verification

cargo test # All tests

cargo test --lib # Unit tests only

Test Coverage: 1402 tests, 100% pass rate

- Follow Rust API guidelines

- Write tests for all new code

- Use cargo fmt and cargo clippy

- Update documentation

- Use Conventional Commits

Join us on Discord for questions, discussions, and updates.

MIT License - see LICENSE

- A3S Lane — Priority-based task queue with DLQ

- A3S Search — Multi-engine web search aggregator

- A3S Box — Secure sandbox runtime with TEE support

- A3S Event — Event-driven architecture primitives

Built by A3S Lab | Documentation | Discord

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Code

Similar Open Source Tools

Code

A3S Code is an embeddable AI coding agent framework in Rust that allows users to build agents capable of reading, writing, and executing code with tool access, planning, and safety controls. It is production-ready with features like permission system, HITL confirmation, skill-based tool restrictions, and error recovery. The framework is extensible with 19 trait-based extension points and supports lane-based priority queue for scalable multi-machine task distribution.

Conduit

Conduit is a unified Swift 6.2 SDK for local and cloud LLM inference, providing a single Swift-native API that can target Anthropic, OpenRouter, Ollama, MLX, HuggingFace, and Apple’s Foundation Models without rewriting your prompt pipeline. It allows switching between local, cloud, and system providers with minimal code changes, supports downloading models from HuggingFace Hub for local MLX inference, generates Swift types directly from LLM responses, offers privacy-first options for on-device running, and is built with Swift 6.2 concurrency features like actors, Sendable types, and AsyncSequence.

oh-my-pi

oh-my-pi is an AI coding agent for the terminal, providing tools for interactive coding, AI-powered git commits, Python code execution, LSP integration, time-traveling streamed rules, interactive code review, task management, interactive questioning, custom TypeScript slash commands, universal config discovery, MCP & plugin system, web search & fetch, SSH tool, Cursor provider integration, multi-credential support, image generation, TUI overhaul, edit fuzzy matching, and more. It offers a modern terminal interface with smart session management, supports multiple AI providers, and includes various tools for coding, task management, code review, and interactive questioning.

adk-rust

ADK-Rust is a comprehensive and production-ready Rust framework for building AI agents. It features type-safe agent abstractions with async execution and event streaming, multiple agent types including LLM agents, workflow agents, and custom agents, realtime voice agents with bidirectional audio streaming, a tool ecosystem with function tools, Google Search, and MCP integration, production features like session management, artifact storage, memory systems, and REST/A2A APIs, and a developer-friendly experience with interactive CLI, working examples, and comprehensive documentation. The framework follows a clean layered architecture and is production-ready and actively maintained.

zeroclaw

ZeroClaw is a fast, small, and fully autonomous AI assistant infrastructure built with Rust. It features a lean runtime, cost-efficient deployment, fast cold starts, and a portable architecture. It is secure by design, fully swappable, and supports OpenAI-compatible provider support. The tool is designed for low-cost boards and small cloud instances, with a memory footprint of less than 5MB. It is suitable for tasks like deploying AI assistants, swapping providers/channels/tools, and pluggable everything.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

PraisonAI

Praison AI is a low-code, centralised framework that simplifies the creation and orchestration of multi-agent systems for various LLM applications. It emphasizes ease of use, customization, and human-agent interaction. The tool leverages AutoGen and CrewAI frameworks to facilitate the development of AI-generated scripts and movie concepts. Users can easily create, run, test, and deploy agents for scriptwriting and movie concept development. Praison AI also provides options for full automatic mode and integration with OpenAI models for enhanced AI capabilities.

shodh-memory

Shodh-Memory is a cognitive memory system designed for AI agents to persist memory across sessions, learn from experience, and run entirely offline. It features Hebbian learning, activation decay, and semantic consolidation, packed into a single ~17MB binary. Users can deploy it on cloud, edge devices, or air-gapped systems to enhance the memory capabilities of AI agents.

SwiftAgent

A type-safe, declarative framework for building AI agents in Swift, SwiftAgent is built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework ensures compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features like permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

celeste-python

Celeste AI is a type-safe, modality/provider-agnostic tool that offers unified interface for various providers like OpenAI, Anthropic, Gemini, Mistral, and more. It supports multiple modalities including text, image, audio, video, and embeddings, with full Pydantic validation and IDE autocomplete. Users can switch providers instantly, ensuring zero lock-in and a lightweight architecture. The tool provides primitives, not frameworks, for clean I/O operations.

skylos

Skylos is a privacy-first SAST tool for Python, TypeScript, and Go that bridges the gap between traditional static analysis and AI agents. It detects dead code, security vulnerabilities (SQLi, SSRF, Secrets), and code quality issues with high precision. Skylos uses a hybrid engine (AST + optional Local/Cloud LLM) to eliminate false positives, verify via runtime, find logic bugs, and provide context-aware audits. It offers automated fixes, end-to-end remediation, and 100% local privacy. The tool supports taint analysis, secrets detection, vulnerability checks, dead code detection and cleanup, agentic AI and hybrid analysis, codebase optimization, operational governance, and runtime verification.

roam-code

Roam is a tool that builds a semantic graph of your codebase and allows AI agents to query it with one shell command. It pre-indexes your codebase into a semantic graph stored in a local SQLite DB, providing architecture-level graph queries offline, cross-language, and compact. Roam understands functions, modules, tests coverage, and overall architecture structure. It is best suited for agent-assisted coding, large codebases, architecture governance, safe refactoring, and multi-repo projects. Roam is not suitable for real-time type checking, dynamic/runtime analysis, small scripts, or pure text search. It offers speed, dependency-awareness, LLM-optimized output, fully local operation, and CI readiness.

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

tokscale

Tokscale is a high-performance CLI tool and visualization dashboard for tracking token usage and costs across multiple AI coding agents. It helps monitor and analyze token consumption from various AI coding tools, providing real-time pricing calculations using LiteLLM's pricing data. Inspired by the Kardashev scale, Tokscale measures token consumption as users scale the ranks of AI-augmented development. It offers interactive TUI mode, multi-platform support, real-time pricing, detailed breakdowns, web visualization, flexible filtering, and social platform features.

nullclaw

NullClaw is the smallest fully autonomous AI assistant infrastructure, a static Zig binary that fits on any $5 board, boots in milliseconds, and requires nothing but libc. It features an impossibly small 678 KB static binary with no runtime or framework overhead, near-zero memory usage, instant startup, true portability across different CPU architectures, and a feature-complete stack with 22+ providers, 11 channels, and 18+ tools. The tool is lean by default, secure by design, and fully swappable, with core systems exposed as vtable interfaces. It avoids lock-in through OpenAI-compatible provider support and pluggable custom endpoints.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

For similar tasks

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

* **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.
* Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.
* Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.
* Automate tasks on your computer
* Improve accessibility
* ... and much more

letmedoit

LetMeDoIt AI is a virtual assistant designed to revolutionize the way you work. It goes beyond being a mere chatbot by offering a unique and powerful capability: the ability to execute commands and perform computing tasks on your behalf. With LetMeDoIt AI, you can access OpenAI ChatGPT-4, Google Gemini Pro, Microsoft AutoGen, and local LLMs, all in one place, to enhance your productivity.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

AIRAVAT

AIRAVAT is a multifunctional Android Remote Access Tool (RAT) with a GUI-based Web Panel that does not require port forwarding. It allows users to access various features on the victim's device, such as reading files, downloading media, retrieving system information, managing applications, SMS, call logs, contacts, notifications, keylogging, admin permissions, phishing, audio recording, music playback, device control (vibration, torch light, wallpaper), executing shell commands, clipboard text retrieval, URL launching, and background operation. The tool requires a Firebase account and tools like ApkEasy Tool or ApkTool M for building. Users can set up Firebase, host the web panel, modify Instagram.apk for RAT functionality, and connect the victim's device to the web panel. The tool is intended for educational purposes only, and users are solely responsible for its use.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

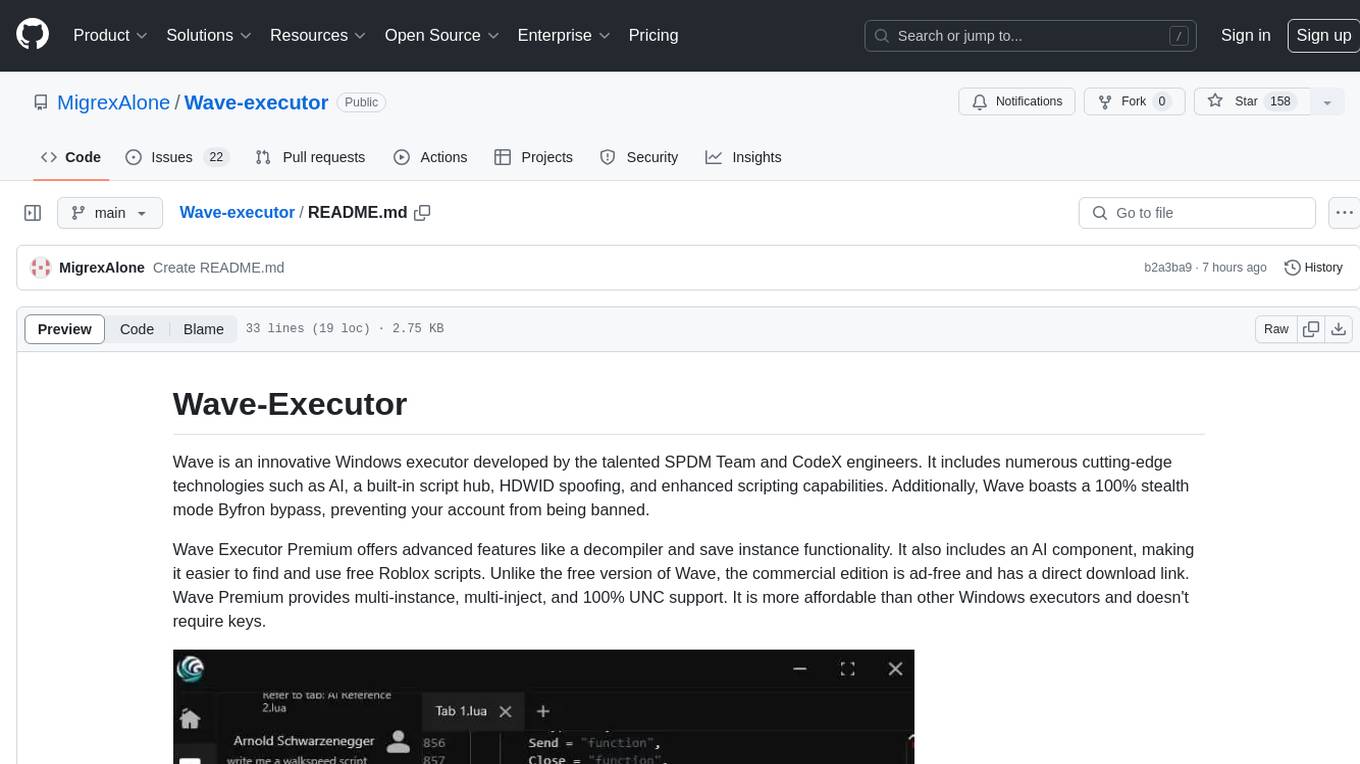

Wave-executor

Wave Executor is an innovative Windows executor developed by SPDM Team and CodeX engineers, featuring cutting-edge technologies like AI, built-in script hub, HDWID spoofing, and enhanced scripting capabilities. It offers a 100% stealth mode Byfron bypass, advanced features like decompiler and save instance functionality, and a commercial edition with ad-free experience and direct download link. Wave Premium provides multi-instance, multi-inject, and 100% UNC support, making it a cost-effective option for executing scripts in popular Roblox games.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.