Best AI tools for Optimize Cost-performance

20 - AI tool Sites

Weaviate

Weaviate is an AI-native database designed to bring intuitive AI-native applications to life with less hallucination, data leakage, and vendor lock-in. It offers features like Hybrid Search, Retrieval-Augmented Generation, Generative Feedback Loops, and Cost-performance optimization. Weaviate gives developers flexible, reliable, open-source foundations and tailored AI infrastructure patterns for building AI-native applications, and it reports over 1M monthly downloads. The platform is known for its hybrid search, integrations with major LLMs, and ease of deployment.

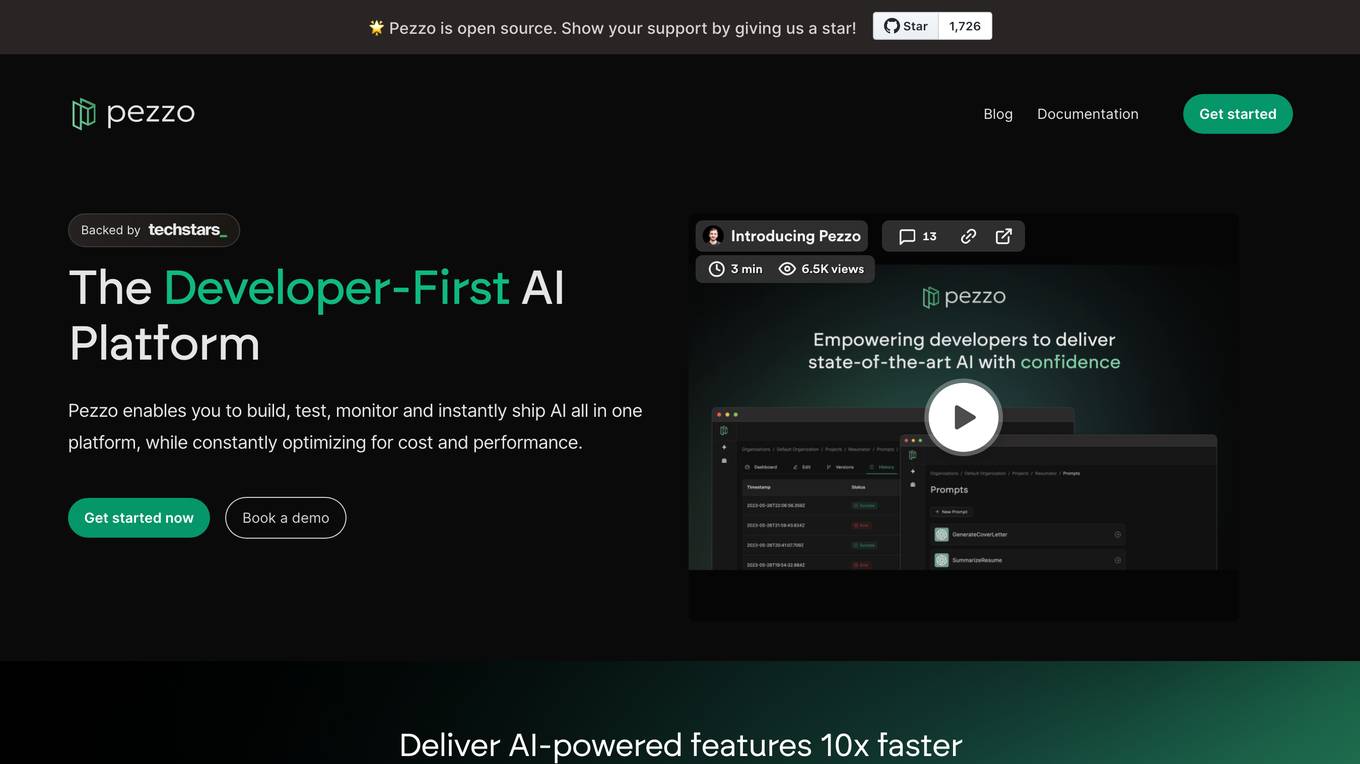

Pezzo

Pezzo is an open-source platform that enables developers to build, test, monitor, and ship AI features quickly and efficiently. It provides a range of powerful features to streamline the workflow, including prompt management, observability, troubleshooting, and collaboration tools. With Pezzo, teams can deliver impactful AI features in sync and optimize for cost and performance.

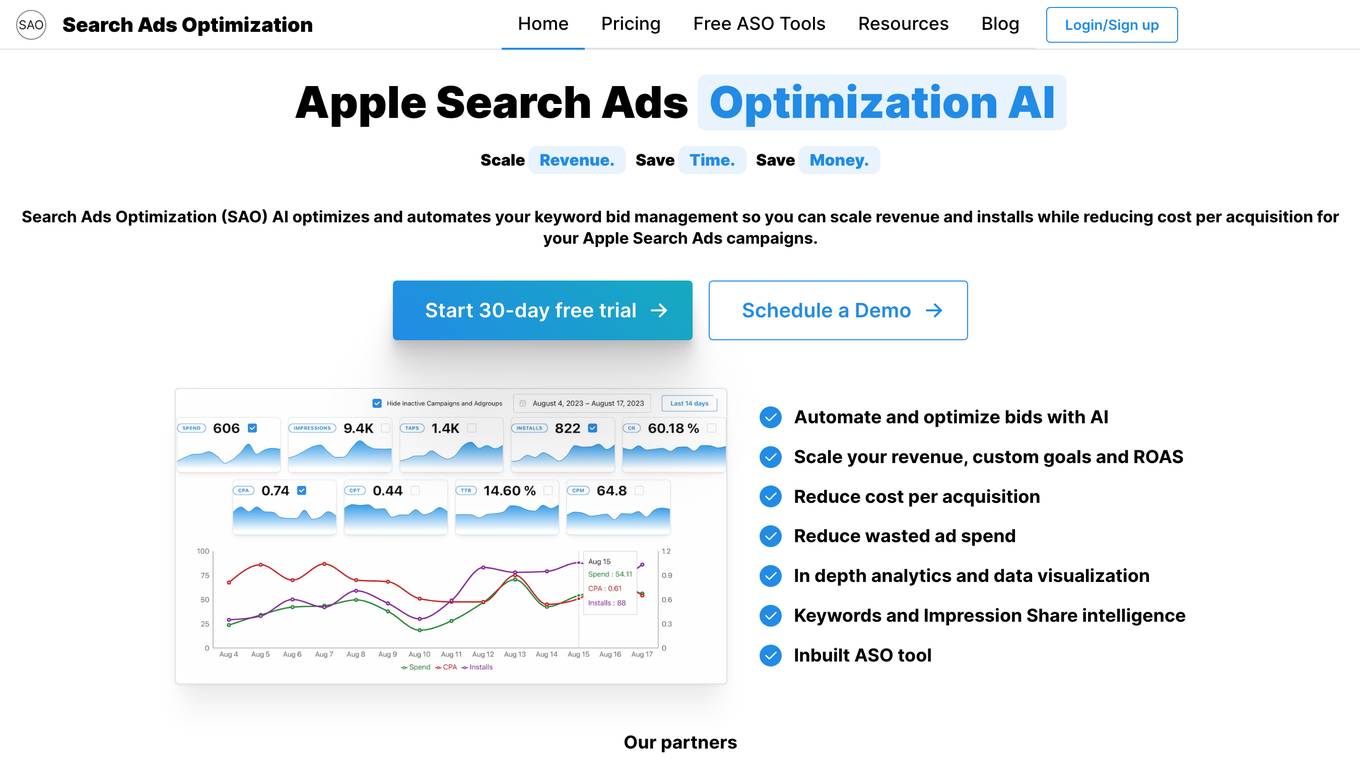

SAO Search Ads Optimization

SAO (Search Ads Optimization) is an AI optimization platform for Apple Search Ads that automates keyword bid management to help scale revenue, increase installs, and reduce cost per acquisition. It offers in-depth analytics, data visualization, and advanced ASO tools for optimizing and automating Apple Search Ads campaigns. Trusted by app companies worldwide, SAO uses AI automation to run 24/7 with real-time data, allowing users to focus on strategic tasks while the platform manages campaigns efficiently.

Cerebrium

Cerebrium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly and efficiently. With a focus on speed, performance, and cost optimization, Cerebrium offers a range of features and tools to simplify the development and deployment of AI projects. The platform ensures high reliability, security, and compliance while providing real-time logging, cost tracking, and observability tools. Cerebrium also offers GPU variety and effortless autoscaling to meet the diverse needs of developers and businesses.

Keebo

Keebo is an AI tool designed for Snowflake optimization, offering automated query, cost, and tuning optimization. It describes itself as the only fully automated Snowflake optimizer, dynamically adjusting to save customers 25% or more. Keebo's patented technology, based on cutting-edge research, optimizes warehouse size, clustering, and memory without impacting performance. It learns and adjusts to workload changes in real time, can be set up in about 30 minutes, and delivers savings within 24 hours. The tool uses telemetry metadata for optimizations, providing full visibility and adjustability for complex scenarios and schedules.

Granica AI

Granica AI is an AI data readiness platform that helps users build and manage high-quality data for AI projects at scale. The platform uses AI to continuously improve the AI-readiness of data, making projects faster and more impactful over time. Granica offers features such as data cost optimization, data privacy, data selection & curation, and more. The platform is trusted by category-defining companies for its efficiency in reducing storage costs and improving data security.

Inpulse.ai

Inpulse.ai is an AI platform that revolutionizes inventory management and supplier ordering for restaurant chains. It assists managers in making informed decisions by accurately forecasting sales, anticipating production needs, and optimizing food supplies. The platform provides real-time performance monitoring, automated production planning, and centralized data management to help restaurants improve their margins and reduce waste. Inpulse.ai is used by over 3,000 restaurants, food kiosks, and bakeries on a daily basis, offering a comprehensive solution to streamline operations and boost profitability.

Autron

Autron is an AI-powered advertising tool designed to help businesses boost sales and optimize their advertising cost of sales (ACoS) on Amazon. The tool offers fully automated campaigns for Sponsored Products, Sponsored Brands, and Sponsored Display, leveraging AI technology to make data-driven decisions and optimize ad performance. Autron's technology simulates, predicts, and delivers decisions for users, acting as a virtual data scientist and machine learning specialist to help businesses grow on autopilot. With Autron, users can set simple goals based on their business objectives and let the tool handle keyword and ASIN research, providing deeper insights into sales growth and advertising performance.
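For readers unfamiliar with the metric, ACoS is ad spend divided by ad-attributed sales, expressed as a percentage. The sketch below only illustrates that arithmetic; the function name and the sample figures are hypothetical and have nothing to do with Autron's own implementation.

```python
def acos_percent(ad_spend, ad_sales):
    """Advertising cost of sales: ad spend as a percentage of ad-attributed sales."""
    if ad_sales <= 0:
        raise ValueError("ad_sales must be positive to compute ACoS")
    return ad_spend / ad_sales * 100


# Hypothetical campaign: $250 spent on ads that produced $1,000 in sales.
campaign_acos = acos_percent(ad_spend=250.0, ad_sales=1000.0)
print(f"ACoS: {campaign_acos:.1f}%")
```

A lower ACoS means less ad spend per dollar of attributed sales, which is the quantity such tools try to push down while keeping sales volume up.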

cloudNito

cloudNito is an AI-driven platform that specializes in cloud cost optimization and management for businesses using AWS services. The platform offers automated cost optimization, comprehensive insights and analytics, unified cloud management, anomaly detection, cost and usage explorer, recommendations for waste reduction, and resource optimization. By leveraging advanced AI solutions, cloudNito aims to help businesses efficiently manage their AWS cloud resources, reduce costs, and enhance performance.

Hypergro

Hypergro is an AI-powered platform that specializes in UGC video ads for smart customer acquisition. Leveraging the 4th Generation of AI-powered growth marketing on Meta and YouTube, Hypergro helps businesses discover their audience, drive sales, and increase revenue through real-time AI insights. The platform offers end-to-end solutions for creating impactful short video ads that combine creator authenticity with AI-driven research for compelling storytelling. With a focus on precision targeting, competitor analysis, and in-depth research, Hypergro aims to maximize ROI for brands looking to elevate their growth strategies.

EverSQL

EverSQL is an AI-powered tool designed for SQL query optimization, database observability, and cost reduction for PostgreSQL and MySQL databases. It automatically optimizes SQL queries using smart AI-based algorithms, provides ongoing performance insights, and helps reduce monthly database costs by offering optimization recommendations. With over 100,000 professionals trusting EverSQL, it aims to save time, improve database performance, and enhance cost-efficiency without accessing sensitive data.

Unify

Unify is an AI tool that offers a unified platform for accessing and comparing large language models (LLMs) from different providers. It allows users to combine models for faster, cheaper, and better responses, optimizing for quality, speed, and cost-efficiency. Unify simplifies the complex task of selecting the best LLM by providing transparent benchmarks, personalized routing, and performance optimization tools.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers automated tuning, inference consultation, a zoo of optimized networks, and a platform for reducing AI model cost. Anycores focuses on faster execution, claiming inference-time reductions of more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs, Intel, ARM, and AMD CPUs, servers, and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

Portkey

Portkey is a control panel for production AI applications that offers an AI Gateway, Prompts, Guardrails, and Observability Suite. It enables teams to ship reliable, cost-efficient, and fast apps by providing tools for prompt engineering, enforcing reliable LLM behavior, integrating with major agent frameworks, and building AI agents with access to real-world tools. Portkey also offers seamless AI integrations for smarter decisions, with features like managed hosting, smart caching, and edge compute layers to optimize app performance.

Forma.ai

Forma.ai is an AI-powered sales performance management software designed to optimize and run sales compensation processes efficiently. The platform offers features such as AI-powered plan configuration, connected modeling for optimization, end-to-end automation of sales comp management, flexible data integrations, and next-gen automation. Forma.ai provides advantages such as faster decision-making, revenue capture, cost reduction, flexibility, and scalability. However, some disadvantages include the need for AI skills, potential data security concerns, and an initial learning curve. The application is suitable for jobs in sales operations, finance, human resources, sales compensation planning, and sales performance data management. Users can find Forma.ai using keywords like sales comp, AI-powered software, sales performance management, sales incentives, and sales compensation. The tool can be used for tasks like designing with AI, planning and modeling, deploying and managing, optimizing comp plans, and automating sales comp.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

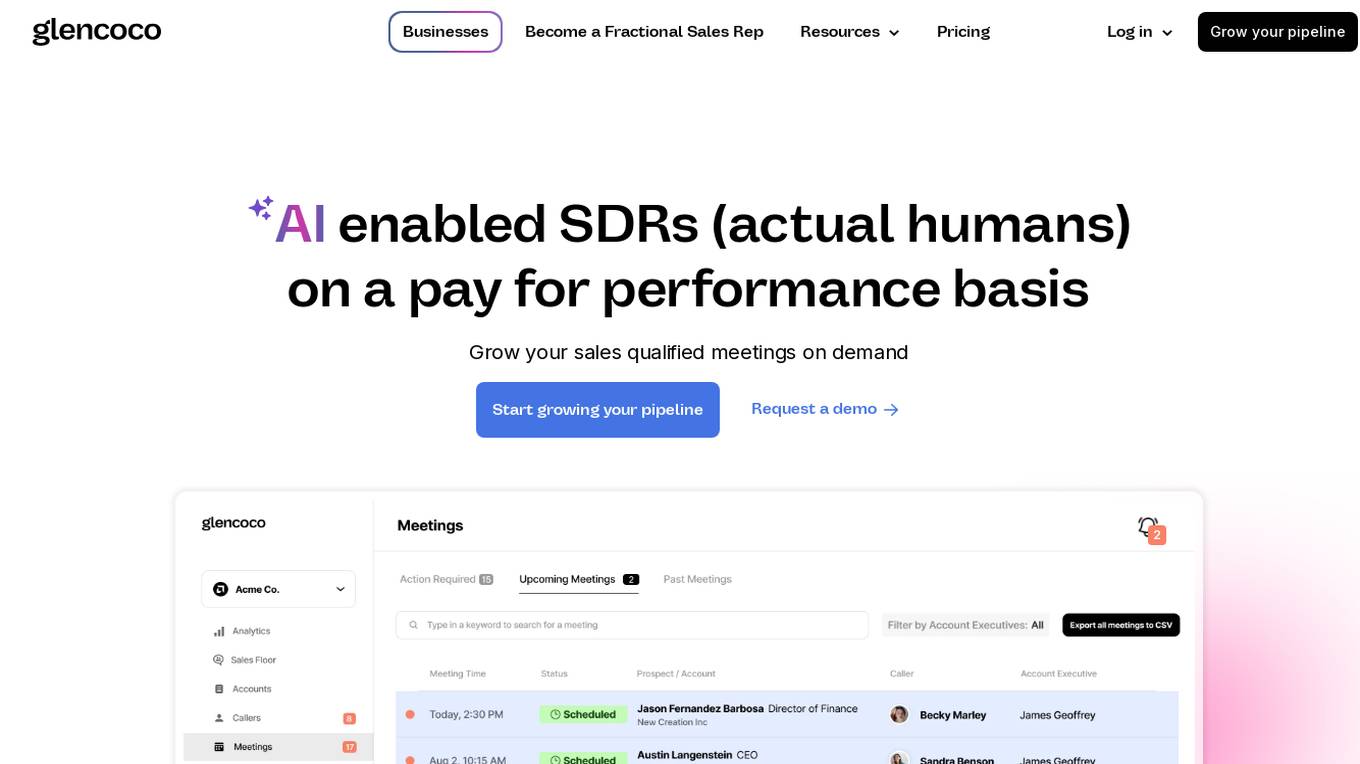

Glencoco

Glencoco is a tech-enabled sales marketplace that empowers businesses to become fractional sales representatives. The platform offers AI-enabled SDRs on a pay-for-performance basis, helping businesses grow their pipeline by finding the right prospects and maximizing ROI. Glencoco provides insights on prospect responses, integrates dialing and email solutions, and allows users to set up campaigns, select sales development reps, and optimize results. The platform combines human contractors with AI workflows to deliver successful outbound sales motions effortlessly.

Pixis

Pixis is a codeless AI infrastructure designed for growth marketing, offering purpose-built AI solutions to scale demand generation. The platform leverages transparent AI infrastructure to optimize campaign results across platforms, with features such as targeting AI, creative AI, and performance AI. Pixis helps reduce customer acquisition cost, generate creative assets quickly, refine audience targeting, and deliver contextual communication in real-time. The platform also provides an AI savings calculator to estimate the returns from leveraging its codeless AI infrastructure for marketing. With success stories showcasing significant improvements in various marketing metrics, Pixis aims to empower businesses to unlock the capabilities of AI for enhanced performance and results.

RankBot

RankBot is an AI-driven SEO tool for improving a website's search engine rankings. By leveraging artificial intelligence, RankBot automates the process of building backlinks and enhancing on-page SEO, reducing the need for manual labor and extensive keyword research. With personalized strategies, RankBot aims to help users outrank competitors and reach top search engine positions with minimal effort. The tool provides simple reporting, tracks keyword performance, and offers a user-friendly interface. RankBot aims to complement traditional SEO agencies rather than replace them, offering a cost-effective and efficient solution for website optimization.

Rawbot

Rawbot is an AI model comparison tool that simplifies the process of selecting the best AI models for projects and applications. It allows users to compare AI models side by side and understand their strengths and weaknesses. Rawbot supports a wide range of AI models and helps users optimize performance, identify customization opportunities, analyze cost and efficiency, and make informed decisions in research, development, and business applications.

20 - Open Source AI Tools

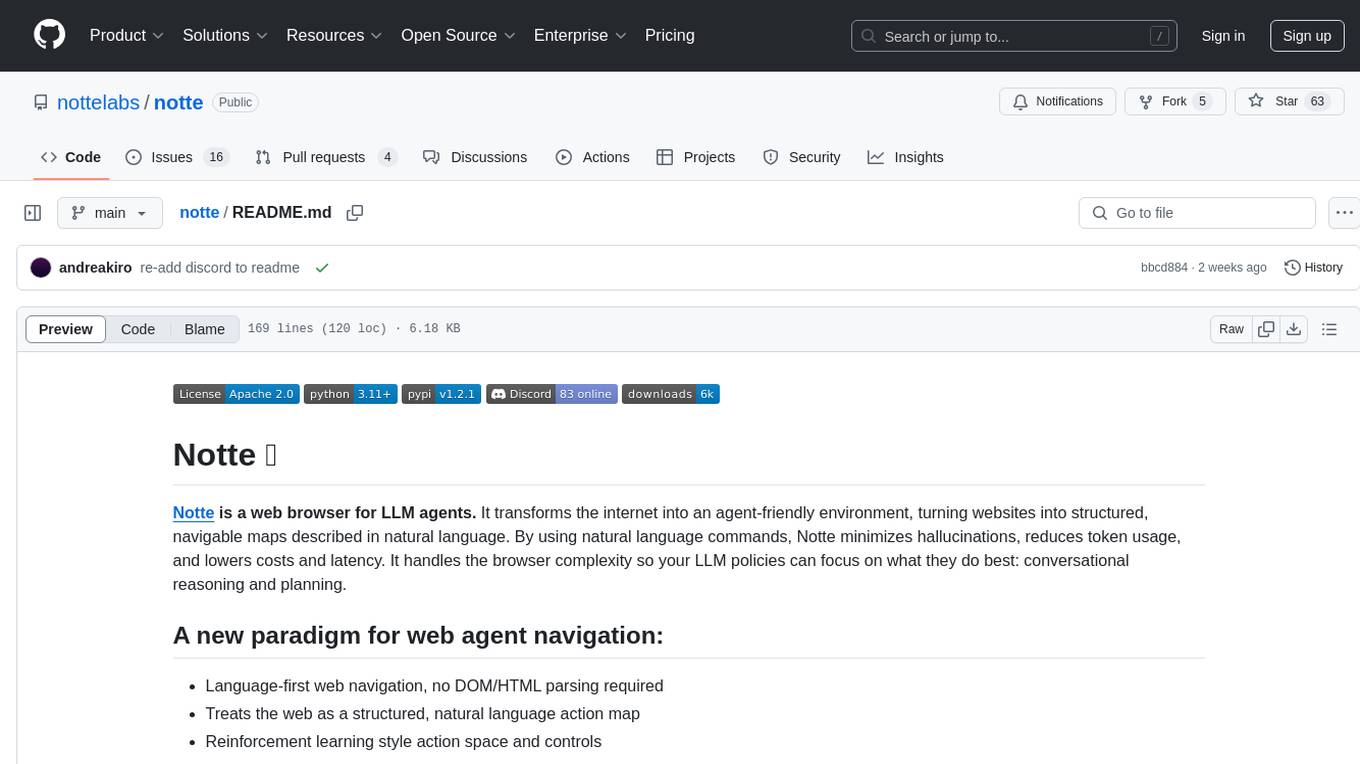

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

llmaz

llmaz is an easy, advanced inference platform for large language models on Kubernetes. It aims to provide a production-ready solution that integrates with state-of-the-art inference backends. The platform supports efficient model distribution, accelerator fungibility, SOTA inference, various model providers, multi-host support, and scaling efficiency. Users can quickly deploy LLM services with minimal configuration and benefit from a wide range of advanced inference backends. llmaz is designed to optimize cost and performance while supporting cutting-edge research such as Speculative Decoding or Splitwise on Kubernetes.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

swift-ocr-llm-powered-pdf-to-markdown

Swift OCR is a powerful tool for extracting text from PDF files using OpenAI's GPT-4 Turbo with Vision model. It offers flexible input options, advanced OCR processing, performance optimizations, structured output, robust error handling, and scalable architecture. The tool ensures accurate text extraction, resilience against failures, and efficient handling of multiple requests.

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations, along with MLOps capabilities such as experiment tracking, model versioning, and ML leaderboards.

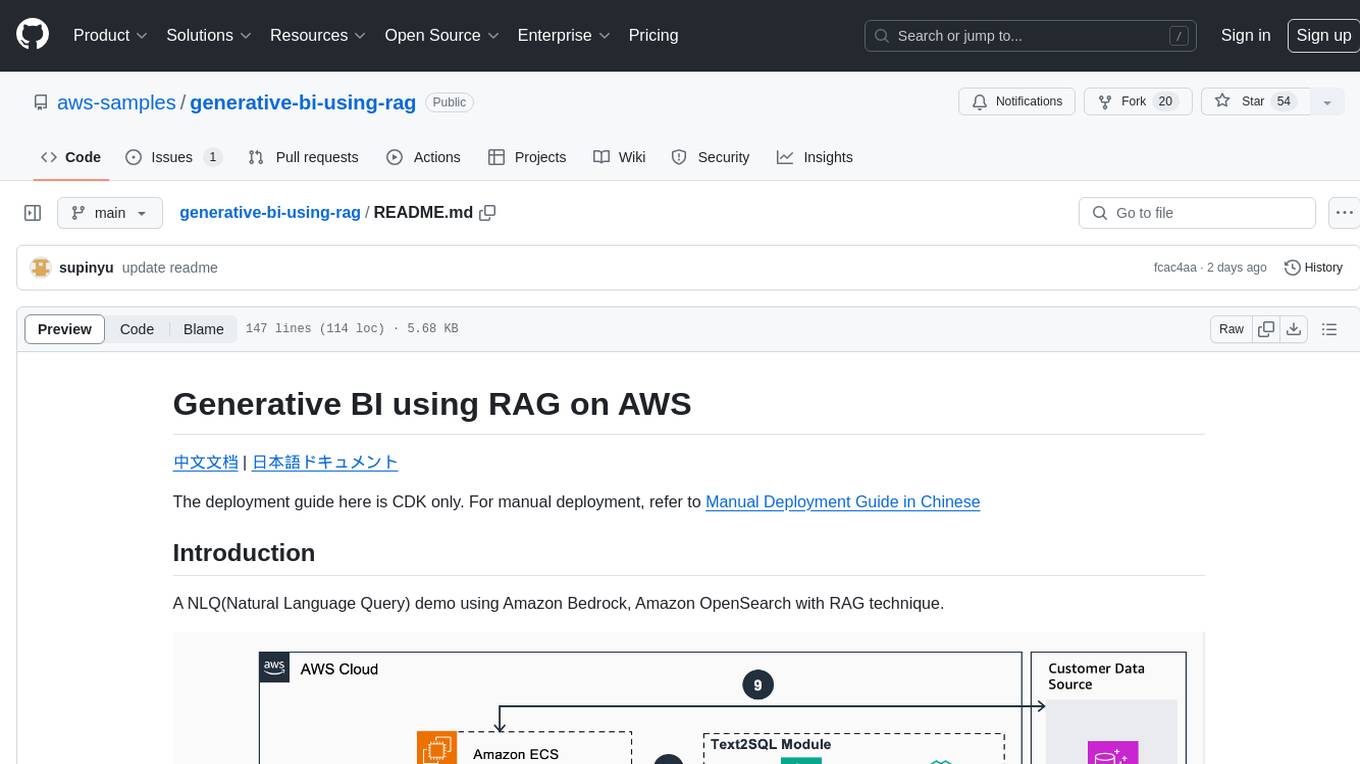

generative-bi-using-rag

Generative BI using RAG on AWS is a comprehensive framework designed to enable Generative BI capabilities on customized data sources hosted on AWS. It offers features such as Text-to-SQL functionality for querying data sources using natural language, a user-friendly interface for managing data sources, performance enhancement through historical question-answer ranking, and entity recognition. It also supports customization of business information, handles complex attribution analysis problems, and provides an intuitive question-answering UI with a conversational approach for complex queries.

spiceai

Spice is a portable runtime written in Rust that offers developers a unified SQL interface to materialize, accelerate, and query data from any database, data warehouse, or data lake. It connects, fuses, and delivers data to applications, machine-learning models, and AI-backends, functioning as an application-specific, tier-optimized Database CDN. It is built with industry-leading technologies such as Apache DataFusion, Apache Arrow, Apache Arrow Flight, SQLite, and DuckDB. Spice makes it fast and easy to query data from one or more sources using SQL, co-locating a managed dataset with applications or machine learning models, and accelerating it with Arrow in-memory, SQLite/DuckDB, or attached PostgreSQL for fast, high-concurrency, low-latency queries.

JetStream

JetStream is a throughput and memory optimized engine for LLM inference on XLA devices, starting with TPUs (and GPUs in future -- PRs welcome). It is designed to provide high performance and scalability for large language models, enabling efficient inference on cloud-based TPUs. JetStream leverages XLA to optimize the execution of LLM models, resulting in faster and more efficient inference. Additionally, JetStream supports quantization techniques to further enhance performance and reduce memory consumption. By utilizing JetStream, developers can deploy and run LLM models on TPUs with ease, achieving optimal performance and cost-effectiveness.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

incubator-kie-optaplanner

A fast, easy-to-use, open source AI constraint solver for software developers. OptaPlanner is a powerful tool that helps developers solve complex optimization problems by providing a constraint satisfaction solver. It allows users to model and solve planning and scheduling problems efficiently, improving decision-making processes and resource allocation. With OptaPlanner, developers can easily integrate optimization capabilities into their applications, leading to better performance and cost-effectiveness.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.
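As a rough illustration of the RMSNorm step mentioned above, the sketch below divides a vector by its root mean square and then applies a per-element gain. It uses plain Python lists rather than a tensor library, and the function name, the `eps` default, and the sample values are assumptions for illustration, not code taken from the repository.

```python
import math


def rms_norm(x, gain, eps=1e-8):
    """Scale x by the reciprocal of its root mean square, then apply a per-element gain."""
    if len(x) != len(gain):
        raise ValueError("x and gain must have the same length")
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)  # eps guards against division by zero
    return [v * inv_rms * g for v, g in zip(x, gain)]


# Hypothetical two-dimensional hidden state with a unit gain.
hidden = [3.0, 4.0]
normalized = rms_norm(hidden, gain=[1.0, 1.0])
print(normalized)
```

Unlike LayerNorm, this variant does not subtract the mean, so it needs only one reduction over the vector. In pre-normalization it is applied to the input of each sub-layer rather than to its output.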

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric**, **data-centric**, and **framework-centric** perspectives, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

LLMSys-PaperList

This repository provides a comprehensive list of academic papers, articles, tutorials, slides, and projects related to Large Language Model (LLM) systems. It covers various aspects of LLM research, including pre-training, serving, system efficiency optimization, multi-model systems, image generation systems, LLM applications in systems, ML systems, survey papers, LLM benchmarks and leaderboards, and other relevant resources. The repository is regularly updated to include the latest developments in this rapidly evolving field, making it a valuable resource for researchers, practitioners, and anyone interested in staying abreast of the advancements in LLM technology.

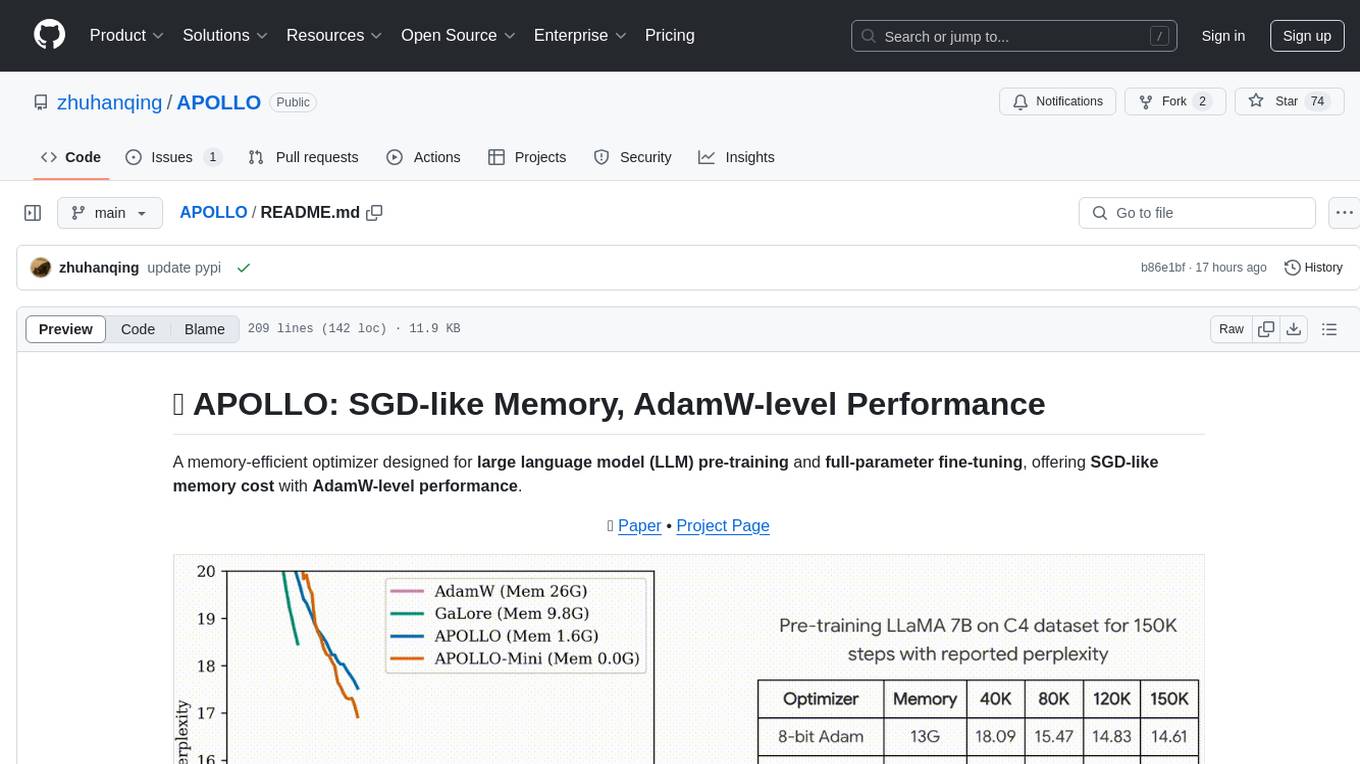

APOLLO

APOLLO is a memory-efficient optimizer designed for large language model (LLM) pre-training and full-parameter fine-tuning. It offers SGD-like memory cost with AdamW-level performance. The optimizer integrates low-rank approximation and optimizer state redundancy reduction to achieve significant memory savings while maintaining or surpassing the performance of Adam(W). Key contributions include structured learning rate updates for LLM training, approximated channel-wise gradient scaling in a low-rank auxiliary space, and minimal-rank tensor-wise gradient scaling. APOLLO aims to improve memory efficiency when training large language models.

CodeFuse-ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project caches pre-generated model results to reduce response time for similar requests and enhance user experience. It integrates various embedding frameworks and local storage options, offering functionalities like cache-writing, cache-querying, and cache-clearing through RESTful API. The tool supports multi-tenancy, system commands, and multi-turn dialogue, with features for data isolation, database management, and model loading schemes. Future developments include data isolation based on hyperparameters, enhanced system prompt partitioning storage, and more versatile embedding models and similarity evaluation algorithms.
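The idea of answering similar requests from cached results can be sketched as follows. This is a minimal in-memory illustration, not the project's API: the `SemanticCache` class, the fixed-vocabulary `toy_embed` function, and the 0.9 similarity threshold are all hypothetical stand-ins for a real embedding model and vector store.

```python
import math

# Hypothetical vocabulary for the toy embedding; a real cache would use a trained model.
VOCAB = ["reset", "password", "weather", "today", "forgot", "change"]


def toy_embed(text):
    """Count occurrences of each vocabulary word (stand-in for a real embedding)."""
    words = text.lower().replace("?", "").split()
    return [float(words.count(w)) for w in VOCAB]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    def __init__(self, embed, threshold=0.9):
        self.embed = embed
        self.threshold = threshold
        self.entries = []  # list of (embedding, cached response)

    def put(self, query, response):
        """Cache-writing: store the response under the query's embedding."""
        self.entries.append((self.embed(query), response))

    def get(self, query):
        """Cache-querying: return the closest cached response, or None on a miss."""
        if not self.entries:
            return None
        q = self.embed(query)
        score, response = max(
            ((cosine_similarity(q, emb), resp) for emb, resp in self.entries),
            key=lambda pair: pair[0],
        )
        return response if score >= self.threshold else None

    def clear(self):
        """Cache-clearing: drop every entry."""
        self.entries = []


cache = SemanticCache(toy_embed)
cache.put("How do I reset my password?", "Use the account settings page.")
hit = cache.get("Please reset my password")
miss = cache.get("What is the weather today?")
print(hit, miss)
```

On a hit the model call is skipped entirely, which is where the latency and cost savings come from; the threshold trades hit rate against the risk of returning an answer to a question that only looks similar.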

ModelCache

Codefuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project facilitates sharing and exchanging technologies related to large model semantic cache through open-source collaboration.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

guidellm

GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. Key features include performance evaluation, resource optimization, cost estimation, and scalability testing.
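To make the cost-estimation idea concrete, the sketch below converts a measured throughput and an hourly hardware price into a cost per million generated tokens. It is not GuideLLM's interface; the function name, configuration labels, prices, and throughput figures are all hypothetical.

```python
def cost_per_million_tokens(hourly_cost_usd, tokens_per_second):
    """Estimate serving cost per one million tokens from price and sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical benchmark results: (hourly price in USD, sustained tokens per second).
configs = {
    "config-a": (2.00, 900.0),
    "config-b": (4.50, 2500.0),
}

estimates = {
    name: cost_per_million_tokens(price, throughput)
    for name, (price, throughput) in configs.items()
}
cheapest = min(estimates, key=estimates.get)
print(estimates, cheapest)
```

The comparison shows why throughput matters as much as list price: a more expensive machine can still be the cheaper option per token if it sustains enough additional load.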

20 - OpenAI GPTs

Supplier Relationship Management Advisor

Streamlines supplier interactions to optimize organizational efficiency and cost-effectiveness.

Calorie Count & Cut Cost: Food Data

Apples vs. Oranges? Optimize your low-calorie diet. Compare food items. Get tailored advice on satiating, nutritious, cost-effective food choices based on 240 items.

Customer Acquisition Cost (CAC) Calculator

Professional analyst for CAC insights and summaries

Category Management Advisor

Advises on strategic sourcing and procurement to optimize category management.

Production Controlling Advisor

Guides financial planning and cost management in production.

ChefGPT

I'm a master chef with expertise in recipes, cost analysis, and kitchen optimization. First upload your inventory and cost list, so I can offer recipes from your inventory with cost analysis.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

EnggBott (Construction Work Package Assistant)

I organize my thoughts using ontology matrices, for detailed CWP advice.

AzurePilot | Steer & Streamline Your Cloud Costs🌐

Specialized advisor on Azure costs and optimizations

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡