Best AI tools for CLI Usage

20 - AI tool Sites

Vilosia

Vilosia is an AI-powered platform that helps medium and large enterprises with internal development teams visualize their software architecture, simplify migration, and improve system modularity. The platform uses generative AI to automatically add event triggers to the codebase, so users can trace data flow, system dependencies, domain boundaries, and external APIs. Vilosia also offers AI workflow analysis, which extracts workflows from function call chains and identifies database usage. Users can scan their codebase with the CLI client or through CI/CD integration, and can follow new features through the newsletter.

GPT CLI

GPT CLI is an all-in-one AI tool that allows users to build their own AI command-line interface tools using ChatGPT. It provides various plugins such as AI Commit, AI Command, AI Translate, and more, enabling users to streamline their workflow and automate tasks through natural language commands. With GPT CLI, users can easily generate Git commit messages, execute commands, translate text, and perform various other AI-powered tasks directly from the command line.

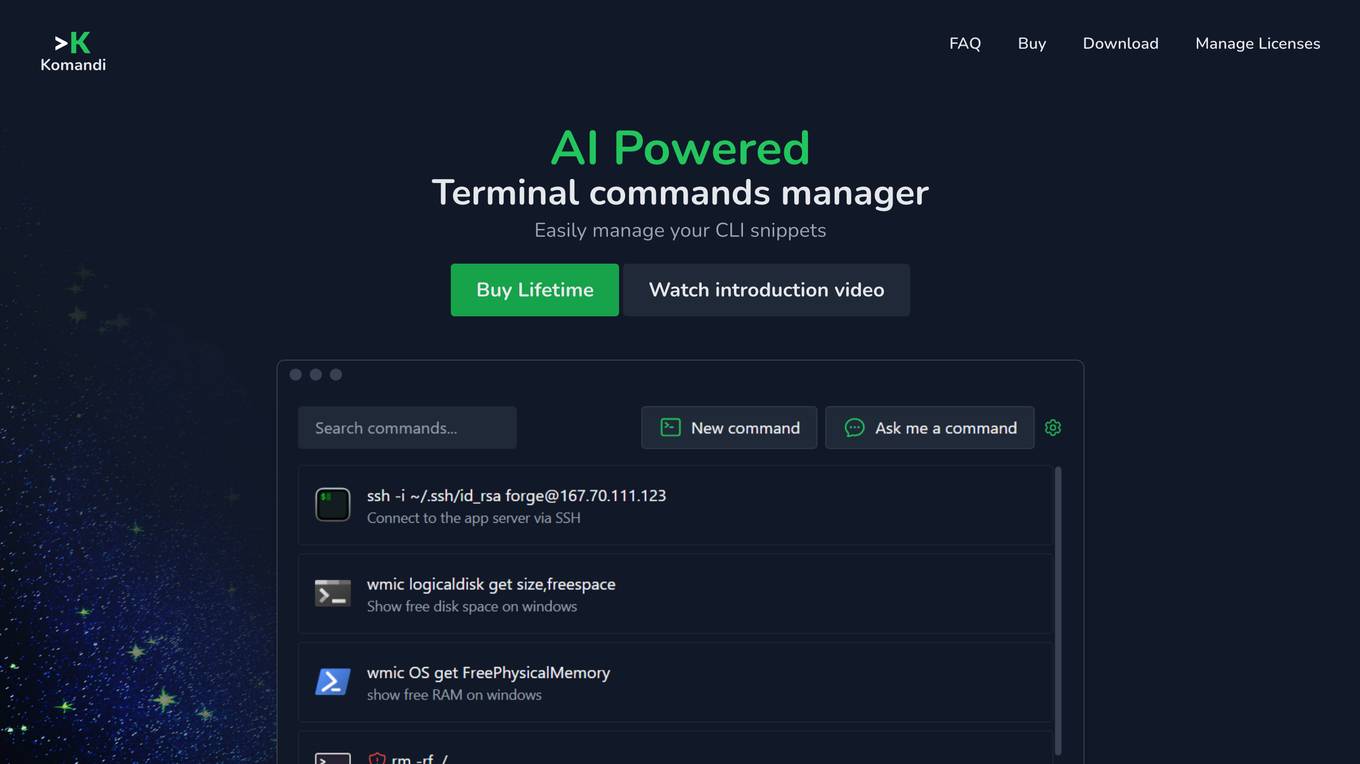

Komandi

Komandi is an AI-powered CLI/Terminal commands manager that allows users to easily manage their CLI snippets. It enables users to generate terminal commands from natural language prompts using AI, insert, favorite, copy, and execute commands, detect potentially dangerous commands, and more. Komandi is designed for developers and system administrators to streamline their command-line operations and enhance productivity.
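As a rough illustration of what detecting potentially dangerous commands can involve, here is a minimal Python sketch. It is not Komandi's implementation: the function name `find_risks`, the patterns, and the warning texts are all hypothetical, and a real detector would need a far larger rule set.

```python
import re
import shlex

# Hypothetical rules for illustration only; not Komandi's actual rule set.
PIPE_TO_SHELL = re.compile(r"\b(curl|wget)\b[^|]*\|\s*(sudo\s+)?(sh|bash)\b")
DISK_WRITE = re.compile(r"\bdd\b.*\bof=/dev/(sd|nvme|hd)")
RISKY_RM_TARGETS = {"/", "/*", "~"}


def find_risks(command: str) -> list[str]:
    """Return human-readable warnings for a shell command string."""
    risks = []
    if PIPE_TO_SHELL.search(command):
        risks.append("pipes a download straight into a shell")
    if DISK_WRITE.search(command):
        risks.append("writes directly to a block device")

    # Tokenize so that flags and targets of `rm` can be inspected separately.
    try:
        tokens = shlex.split(command)
    except ValueError:
        tokens = command.split()  # fall back on unbalanced quotes
    if tokens and tokens[0] == "sudo":
        tokens = tokens[1:]
    if tokens and tokens[0] == "rm":
        args = tokens[1:]
        short_flags = "".join(
            a[1:] for a in args if a.startswith("-") and not a.startswith("--")
        )
        recursive = "r" in short_flags.lower() or "--recursive" in args
        targets = [a for a in args if not a.startswith("-")]
        if recursive and any(t in RISKY_RM_TARGETS for t in targets):
            risks.append("recursively deletes a root or home directory")
    return risks
```

A caller might run `find_risks(cmd)` before executing a generated command and ask for confirmation whenever the returned list is non-empty.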

Kel

Kel is an AI assistant designed to operate within the command-line interface (CLI). It helps users automate repetitive tasks and work more productively in the terminal. Kel supports multiple large language model (LLM) providers, including OpenAI, Anthropic, and Ollama. Users can upload files to interact with their artifacts and bring their own API key for integration. The tool is free and open source, allowing for community contributions on GitHub. For support, users can reach out to the Kel team.

CommandAI

CommandAI is a powerful command line utility tool that leverages the capabilities of artificial intelligence to enhance user experience and productivity. It allows users to interact with the command line interface using natural language commands, making it easier for both beginners and experienced users to perform complex tasks efficiently. With CommandAI, users can streamline their workflow, automate repetitive tasks, and access advanced features through simple text-based interactions. The tool is designed to simplify the command line experience and provide intelligent assistance to users in executing commands and managing their system effectively.

Aurora Terminal Agent

Aurora Terminal Agent is an AI-powered terminal assistant designed to enhance productivity and efficiency in terminal operations. The tool leverages artificial intelligence to provide real-time insights, automate tasks, and streamline workflows for terminal operators. With its intuitive interface and advanced algorithms, Aurora Terminal Agent revolutionizes the way terminals manage their operations, leading to improved performance and cost savings.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

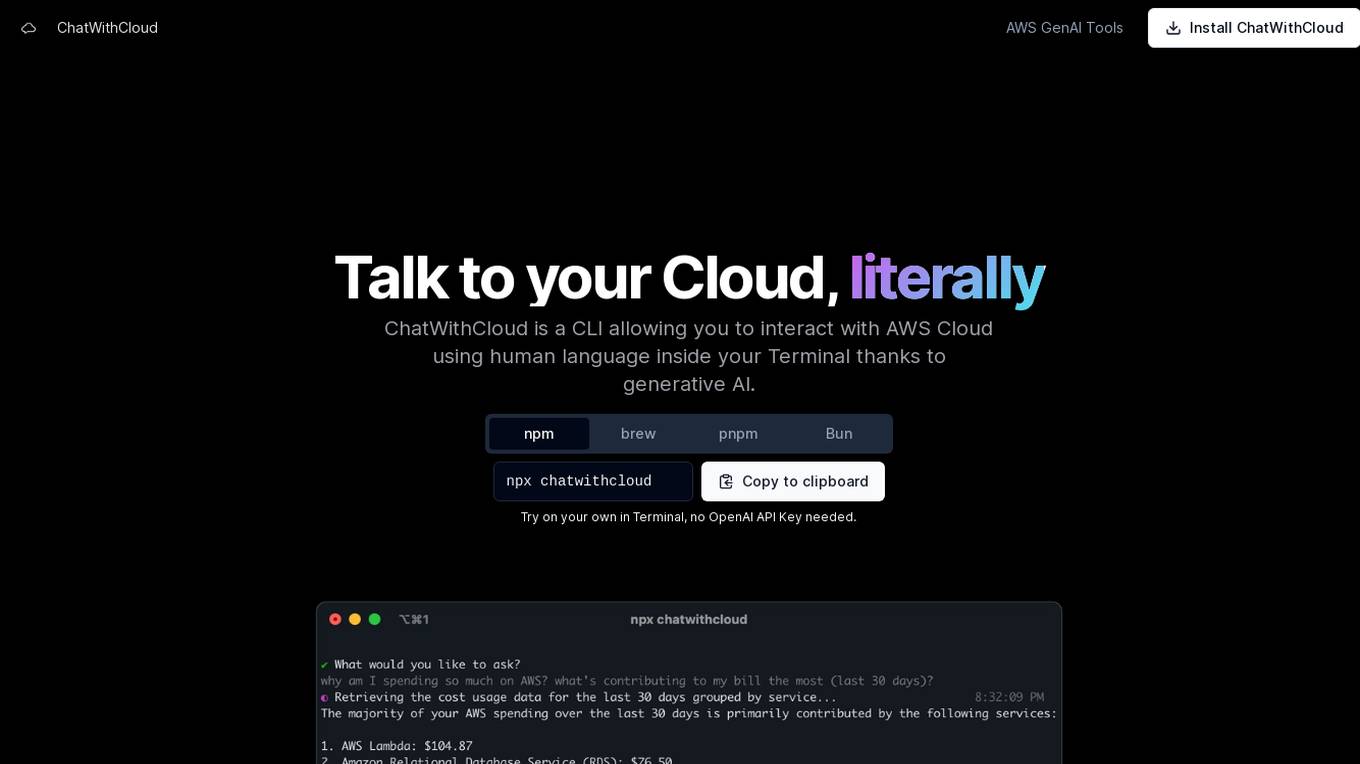

ChatWithCloud

ChatWithCloud is a command-line interface (CLI) tool that enables users to interact with AWS Cloud using natural language within the Terminal, powered by generative AI. It allows users to perform various tasks such as cost analysis, security analysis, troubleshooting, and fixing infrastructure issues without the need for an OpenAI API Key. The tool offers both a lifetime license option and a managed subscription model for users' convenience.

InitRunner

InitRunner is an open-source AI tool that allows users to create AI agents quickly by converting a simple config file into a functional AI agent. It offers features such as document search, long-term memory, and a live dashboard. Users can define agent roles, tools, and behaviors in YAML format without the need to learn a specific framework. The tool provides full auditability with every decision logged in an immutable SQLite log. It enables users to swap providers easily, schedule tasks, and integrate custom tools. InitRunner also supports autonomous planning, execution, and adaptation of multi-step tasks. The platform includes built-in capabilities like RAG, memory, reusable skills, and an OpenAI-compatible API server, all configurable in YAML without external services required.

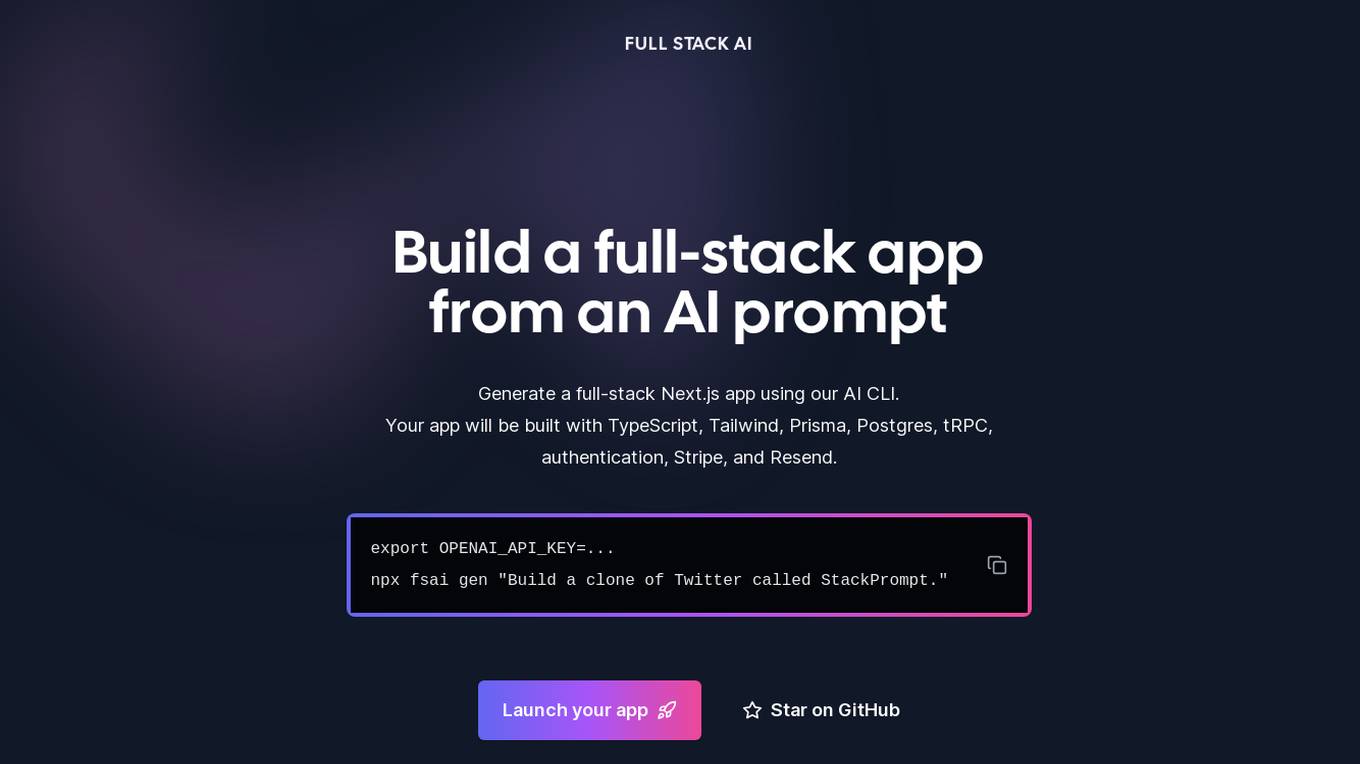

Full Stack AI

Full Stack AI is a tool that allows users to generate a full-stack Next.js app using an AI CLI. The app will be built with TypeScript, Tailwind, Prisma, Postgres, tRPC, authentication, Stripe, and Resend.

Comfy Org

Comfy Org is an open-source AI tooling platform dedicated to advancing and democratizing AI technology. The platform offers tools like node manager, node registry, CLI, automated testing, and public documentation to support the ComfyUI ecosystem. Comfy Org aims to make state-of-the-art AI models accessible to a wider audience by fostering an open-source and community-driven approach. The team behind Comfy Org consists of individuals passionate about developing and maintaining various components of the platform, ensuring a reliable and secure environment for users to explore and contribute to AI tooling.

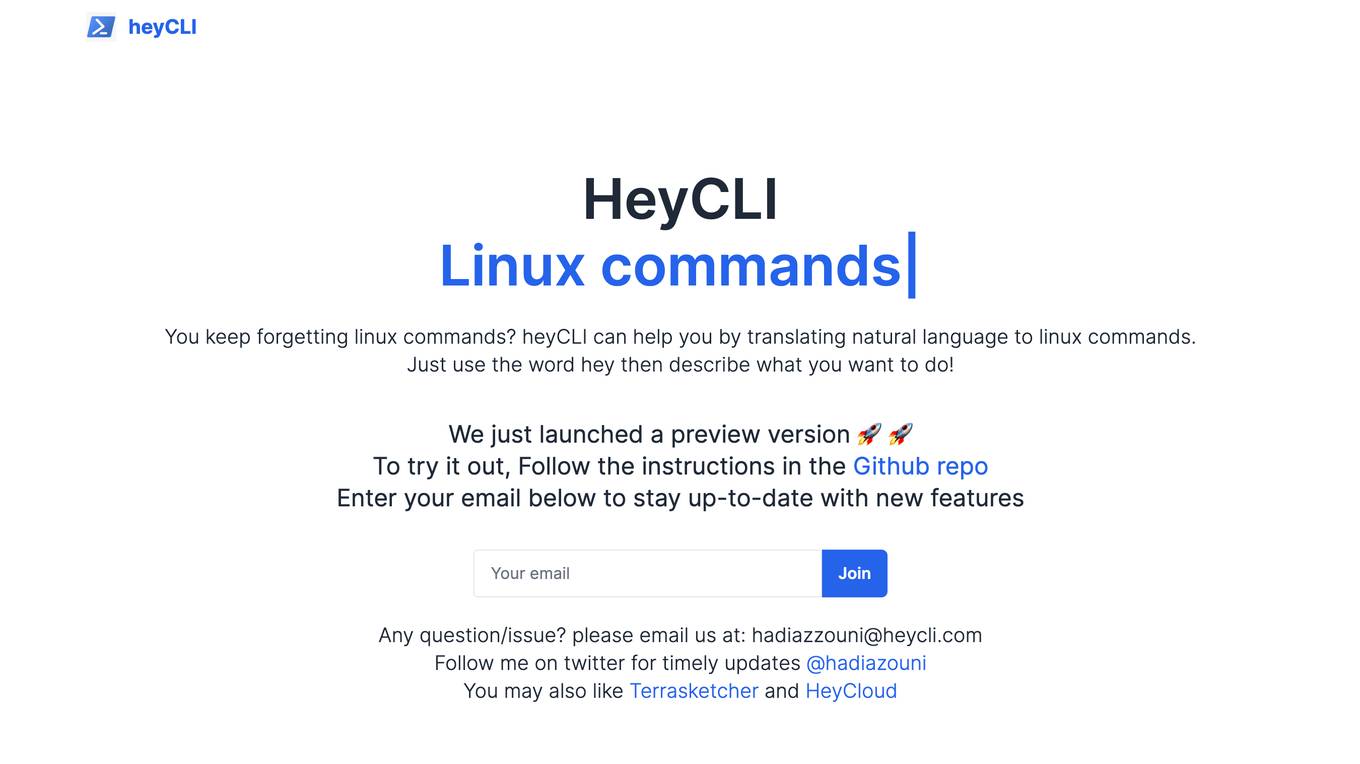

heyCLI

heyCLI is a command-line interface (CLI) tool that allows users to interact with their Linux systems using natural language. It is designed to make it easier for users to perform common tasks without having to memorize complex commands. heyCLI is still in its early stages of development, but it has the potential to be a valuable tool for both new and experienced Linux users.

BenchLLM

BenchLLM is an AI tool designed for AI engineers to evaluate LLM-powered apps by running and evaluating models with a powerful CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, Langchain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.
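The idea of a test suite paired with a choice of evaluation strategy can be sketched in a few lines of Python. This is a generic illustration and does not use BenchLLM's API: `EvalCase`, `exact_match`, `contains_any`, `run_suite`, and the stub `fake_model` are all hypothetical names, and the stub stands in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One test: a prompt plus the answers that count as acceptable."""
    prompt: str
    expected: list[str]


def exact_match(output: str, expected: list[str]) -> bool:
    # Strict strategy: the whole output must equal an expected answer.
    return output.strip() in expected


def contains_any(output: str, expected: list[str]) -> bool:
    # Lenient strategy: any expected answer appearing in the output passes.
    return any(e.lower() in output.lower() for e in expected)


def run_suite(
    model: Callable[[str], str],
    cases: list[EvalCase],
    strategy: Callable[[str, list[str]], bool],
) -> dict:
    """Run every case through the model and summarize passes and failures."""
    results = [(c.prompt, strategy(model(c.prompt), c.expected)) for c in cases]
    return {
        "total": len(results),
        "passed": sum(1 for _, ok in results if ok),
        "failed": [prompt for prompt, ok in results if not ok],
    }


def fake_model(prompt: str) -> str:
    # Stub standing in for a real LLM call.
    answers = {
        "What is 1 + 1?": "2",
        "Capital of France?": "The capital is Paris.",
    }
    return answers.get(prompt, "I don't know")


CASES = [
    EvalCase("What is 1 + 1?", ["2", "two"]),
    EvalCase("Capital of France?", ["Paris"]),
]
```

Running `run_suite(fake_model, CASES, exact_match)` fails the second case because the model answers in a full sentence, while `contains_any` accepts it. That difference is why the choice of evaluation strategy matters for LLM output.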

Cursor

Cursor is an AI-powered coding tool designed to make developers extraordinarily productive. It offers AI-powered coding assistance in the IDE and CLI. Cursor is trusted by millions of professional developers for its accuracy, speed, and efficiency in coding tasks. It integrates with popular platforms like GitHub and Slack, supporting team collaboration and code review.

Pixeebot

Pixeebot is an automated product security engineer that helps developers fix vulnerabilities, harden code, squash bugs, and improve code quality. It integrates with your existing workflow and can be used locally via CLI or through the GitHub app. Pixeebot is powered by the open source Codemodder framework, which allows you to build your own custom codemods.

Hanabi.rest

Hanabi.rest is an AI-based API building platform that allows users to create REST APIs from natural language and screenshots using AI technology. Users can deploy the APIs on Cloudflare Workers and roll them out globally. The platform offers a live editor for testing database access and API endpoints, generates code compatible with various runtimes, and provides features like sharing APIs via URL, npm package integration, and CLI dump functionality. Hanabi.rest simplifies API design and deployment by leveraging natural language processing, image recognition, and v0.dev components.

Vite

Vite is a fast front-end build tool and development server. Created by the author of Vue.js and widely used in place of Vue CLI, it serves source files over native ES modules during development and produces optimized bundles for production. Vite works with Vue.js as well as other frameworks such as React and Svelte.

GrapixAI

GrapixAI is a provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI advertises the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a GPU search console, customizable pricing options, several security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

Tolgee

Tolgee is an AI-powered localization tool that allows developers to easily translate their apps into any language. With features like in-context translation, AI translation, and collaboration tools, Tolgee streamlines the localization process and eliminates the need for manual translations. It offers a JavaScript SDK, CLI, and REST API for seamless integration. Tolgee is trusted by over 40,000 users worldwide and has been praised for its user-friendly interface and efficient localization capabilities.

ODIN

ODIN is a powerful internet scanning search engine designed for scanning and cataloging internet assets. It offers enhanced scanning capabilities, faster refresh rates, and comprehensive visibility into open ports. With over 45 modules covering various aspects like HTTP, Elasticsearch, and Redis, ODIN enriches data and provides accurate and up-to-date information. The application uses AI/ML algorithms to detect exposed buckets, files, and potential vulnerabilities. Users can perform granular searches, access exploit information, and integrate effortlessly with ODIN's API, SDKs, and CLI. ODIN allows users to search for hosts, exposed buckets, exposed files, and subdomains, providing detailed insights and supporting diverse threat intelligence applications.

1 - Open Source AI Tools

pianotrans

ByteDance's Piano Transcription is a PyTorch implementation for transcribing piano recordings into MIDI files with pedals. This repository provides a simple GUI and packaging for Windows and Nix on Linux/macOS. It supports using GPU for inference and includes CLI usage. Users can upgrade the tool and report issues to the upstream project. The tool focuses on providing MIDI files, and any other improvements to transcription results should be directed to the original project.

5 - OpenAI GPTs

Angular Versions Checker

This GPT helps you find compatible Node, Angular CLI, RxJS, and TypeScript versions for your Angular project.