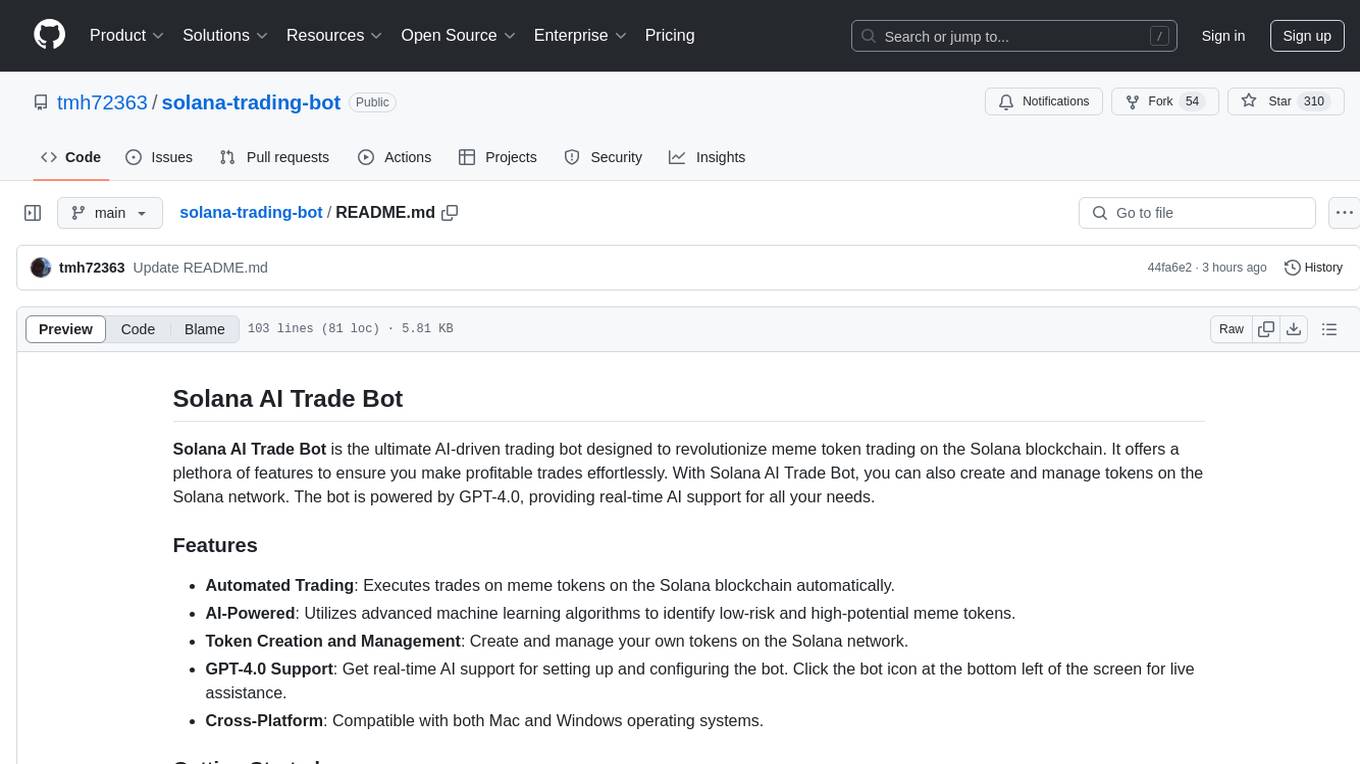

solana-trading-bot

Trade memecoins on Solana using AI algorithms

Stars: 53

Solana AI Trade Bot is an advanced trading tool specifically designed for meme token trading on the Solana blockchain. It leverages AI technology powered by GPT-4.0 to automate trades, identify low-risk/high-potential tokens, and assist in token creation and management. The bot offers cross-platform compatibility and a range of configurable settings for buying, selling, and filtering tokens. Users can benefit from real-time AI support and enhance their trading experience with features like automatic selling, slippage management, and profit/loss calculations. To optimize performance, it is recommended to connect the bot to a private light node for efficient trading execution.

README:

Solana AI Trade Bot is the ultimate AI-driven trading bot designed to revolutionize meme token trading on the Solana blockchain. It offers a plethora of features to ensure you make profitable trades effortlessly. With Solana AI Trade Bot, you can also create and manage tokens on the Solana network. The bot is powered by GPT-4.0, providing real-time AI support for all your needs.

- Automated Trading: Executes trades on meme tokens on the Solana blockchain automatically.

- AI-Powered: Utilizes advanced machine learning algorithms to identify low-risk and high-potential meme tokens.

- Token Creation and Management: Create and manage your own tokens on the Solana network.

- GPT-4.0 Support: Get real-time AI support for setting up and configuring the bot. Click the bot icon at the bottom left of the screen for live assistance.

- Cross-Platform: Compatible with both Mac and Windows operating systems.

- Download the packaged version from here.
- Extract the ZIP file with password `solanabot`.
- Fill in the `config.js` file with your settings.
- Double-click the `solanabot.exe` application to start the bot.

- `PRIVATE_KEY` - Your wallet's private key.
- `RPC_ENDPOINT` - HTTPS RPC endpoint for interacting with the Solana network.
- `RPC_WEBSOCKET_ENDPOINT` - WebSocket RPC endpoint for real-time updates from the Solana network.
- `COMMITMENT_LEVEL` - The commitment level of transactions (e.g., "finalized" for the highest level of security).

- `LOG_LEVEL` - Set the logging level, e.g., `info`, `debug`, `trace`, etc.
- `ONE_TOKEN_AT_A_TIME` - Set to `true` to process buying one token at a time.
- `COMPUTE_UNIT_LIMIT` - Compute limit used to calculate fees.
- `COMPUTE_UNIT_PRICE` - Compute price used to calculate fees.
- `PRE_LOAD_EXISTING_MARKETS` - Bot will load all existing markets in memory on start.
  - This option should not be used with public RPC.
- `CACHE_NEW_MARKETS` - Set to `true` to cache new markets.
  - This option should not be used with public RPC.
- `TRANSACTION_EXECUTOR` - Set to `warp` to use warp infrastructure for executing transactions, or set it to `jito` to use the JSON-RPC jito executor.
  - For more details, check out the warp section.
- `CUSTOM_FEE` - If using the warp or jito executors, this value will be used for transaction fees instead of `COMPUTE_UNIT_LIMIT` and `COMPUTE_UNIT_PRICE`.
  - Minimum value is 0.0001 SOL, but we recommend using 0.006 SOL or above.
  - On top of this fee, the minimal Solana network fee will be applied.

- `QUOTE_MINT` - Which pools to snipe, USDC or WSOL.
- `QUOTE_AMOUNT` - Amount used to buy each new token.
- `AUTO_BUY_DELAY` - Delay in milliseconds before buying a token.
- `MAX_BUY_RETRIES` - Maximum number of retries for buying a token.
- `BUY_SLIPPAGE` - Slippage %.

- `AUTO_SELL` - Set to `true` to enable automatic selling of tokens.
  - If you want to manually sell bought tokens, disable this option.
- `MAX_SELL_RETRIES` - Maximum number of retries for selling a token.
- `AUTO_SELL_DELAY` - Delay in milliseconds before auto-selling a token.
- `PRICE_CHECK_INTERVAL` - Interval in milliseconds for checking the take profit and stop loss conditions.
  - Set to zero to disable take profit and stop loss.
- `PRICE_CHECK_DURATION` - Time in milliseconds to wait for stop loss/take profit conditions.
  - If neither the take profit nor the stop loss level is reached, the bot will auto-sell after this time.
  - Set to zero to disable take profit and stop loss.
- `TAKE_PROFIT` - Percentage profit at which to take profit.
  - Take profit is calculated based on quote mint.
- `STOP_LOSS` - Percentage loss at which to stop the loss.
  - Stop loss is calculated based on quote mint.
- `SELL_SLIPPAGE` - Slippage %.

- `USE_SNIPE_LIST` - Set to `true` to enable buying only tokens listed in `snipe-list.txt`.
  - The pool must not exist before the bot starts.
  - If the token can already be traded before the bot starts, nothing will happen: the bot will not buy the token.
- `SNIPE_LIST_REFRESH_INTERVAL` - Interval in milliseconds to refresh the snipe list.
  - You can update the snipe list while the bot is running. It will pick up the new changes each time it refreshes.

Note: When the snipe list is in use, the filters below are disabled.
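The sell settings above (`TAKE_PROFIT`, `STOP_LOSS`, `PRICE_CHECK_INTERVAL`, `PRICE_CHECK_DURATION`) can be read as a simple decision rule evaluated on each price check. The sketch below is only an illustration in Python, not the bot's actual code: the `SellConfig` class, the `sell_decision` function, and the returned labels are hypothetical names, and the behaviour when an interval or duration is zero is an assumption based on the "set to zero to disable" wording.

```python
from dataclasses import dataclass


@dataclass
class SellConfig:
    take_profit_pct: float        # TAKE_PROFIT, e.g. 40 means +40%
    stop_loss_pct: float          # STOP_LOSS, e.g. 20 means -20%
    price_check_interval_ms: int  # PRICE_CHECK_INTERVAL
    price_check_duration_ms: int  # PRICE_CHECK_DURATION


def sell_decision(cfg: SellConfig, quote_spent: float,
                  quote_value_now: float, elapsed_ms: int) -> str:
    """Classify one price check; all amounts are in the quote mint."""
    # Assumption: a zero interval or duration means no TP/SL checks run.
    if cfg.price_check_interval_ms == 0 or cfg.price_check_duration_ms == 0:
        return "checks_disabled"

    # Thresholds are expressed relative to the quote amount that was spent.
    take_profit_at = quote_spent * (1 + cfg.take_profit_pct / 100)
    stop_loss_at = quote_spent * (1 - cfg.stop_loss_pct / 100)

    if quote_value_now >= take_profit_at:
        return "take_profit"
    if quote_value_now <= stop_loss_at:
        return "stop_loss"
    # Neither level reached within the allowed window: sell anyway.
    if elapsed_ms >= cfg.price_check_duration_ms:
        return "timeout_sell"
    return "hold"
```

For example, with `TAKE_PROFIT` at 40, `STOP_LOSS` at 20, and 1.0 of the quote token spent, a position currently worth 1.5 is classified as `take_profit`, one worth 0.75 as `stop_loss`, and one worth 1.1 as `hold` until the check duration has elapsed.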

- `FILTER_CHECK_INTERVAL` - Interval in milliseconds for checking if the pool matches the filters.
  - Set to zero to disable filters.
- `FILTER_CHECK_DURATION` - Time in milliseconds to wait for the pool to match the filters.
  - If the pool doesn't match the filters, the buy will not happen.
  - Set to zero to disable filters.
- `CONSECUTIVE_FILTER_MATCHES` - How many times in a row the pool needs to match the filters.
  - This is useful because when a pool is burned (and rugged), other filters may not report the same behavior, e.g., pool size may still have the old value.
- `CHECK_IF_MUTABLE` - Set to `true` to buy tokens only if their metadata are not mutable.
- `CHECK_IF_SOCIALS` - Set to `true` to buy tokens only if they have at least 1 social.
- `CHECK_IF_MINT_IS_RENOUNCED` - Set to `true` to buy tokens only if their mint is renounced.
- `CHECK_IF_FREEZABLE` - Set to `true` to buy tokens only if they are not freezable.
- `CHECK_IF_BURNED` - Set to `true` to buy tokens only if their liquidity pool is burned.
- `MIN_POOL_SIZE` - Bot will buy only if the pool size is greater than or equal to the specified amount.
  - Set `0` to disable.
- `MAX_POOL_SIZE` - Bot will buy only if the pool size is less than or equal to the specified amount.
  - Set `0` to disable.
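To make the interaction between `MIN_POOL_SIZE`, `MAX_POOL_SIZE`, and `CONSECUTIVE_FILTER_MATCHES` concrete, here is a minimal Python sketch. It is an illustration under stated assumptions rather than the bot's implementation: `FilterConfig`, `pool_size_ok`, and `should_buy` are hypothetical names, and the list of observed pool sizes stands in for one reading per `FILTER_CHECK_INTERVAL` tick within `FILTER_CHECK_DURATION`.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FilterConfig:
    min_pool_size: float             # MIN_POOL_SIZE, 0 disables the lower bound
    max_pool_size: float             # MAX_POOL_SIZE, 0 disables the upper bound
    consecutive_filter_matches: int  # CONSECUTIVE_FILTER_MATCHES


def pool_size_ok(cfg: FilterConfig, pool_size: float) -> bool:
    """Check the pool size against the configured inclusive bounds."""
    if cfg.min_pool_size != 0 and pool_size < cfg.min_pool_size:
        return False
    if cfg.max_pool_size != 0 and pool_size > cfg.max_pool_size:
        return False
    return True


def should_buy(cfg: FilterConfig, observed_pool_sizes: Iterable[float]) -> bool:
    """Return True once enough checks in a row have passed.

    Each element of observed_pool_sizes is assumed to be one periodic
    check; a failed check resets the streak to zero.
    """
    streak = 0
    for size in observed_pool_sizes:
        streak = streak + 1 if pool_size_ok(cfg, size) else 0
        if streak >= cfg.consecutive_filter_matches:
            return True
    # The check window ended without a long enough streak: no buy.
    return False
```

Requiring a streak rather than a single match means one stale or lagging reading that happens to pass cannot trigger a buy on its own.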

If you use a public RPC provider, you will likely be rate limited within seconds or minutes, or the connection will be too slow to be effective. This bot works best when connected to a private light node.

Alternative AI tools for solana-trading-bot

Similar Open Source Tools

SirChatalot

A Telegram bot that proves you don't need a body to have a personality. It can use various text and image generation APIs to generate responses to user messages.

For text generation, the bot can use:

- OpenAI's ChatGPT API (or another compatible API). Vision capabilities can be used with GPT-4 models, and function calling is supported.
- Anthropic's Claude API. Vision capabilities can be used with Claude 3 models, and function calling is available through tool use.
- YandexGPT API.

The bot can also generate images with:

- OpenAI's DALL-E
- Stability AI
- Yandex ART

The bot can also respond to voice messages: it converts the voice message to text and then generates a response. Speech recognition can be done using OpenAI's Whisper model; this feature requires the ffmpeg library to be installed. The bot also supports working with files (see the Files section for more details). If function calling is enabled, the bot can generate images and search the web (limited).

composer-trade-mcp

Composer Trade MCP is the official Composer Model Context Protocol (MCP) server designed for LLMs like Cursor and Claude to validate investment ideas through backtesting and trade multiple strategies in parallel. Users can create automated investing strategies using indicators like RSI, MA, and EMA, backtest their ideas, find tailored strategies, monitor performance, and control investments. The tool provides a fast feedback loop for AI to validate hypotheses and offers a diverse range of equity and crypto offerings for building portfolios.

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieval-Augmented Generation (RAG) on their own dataset easily. It consists of a small server and UIs for communication. It runs best on a GPU with 16GB of vRAM. Users can combine RAG with fine-tuning using the LLaMa2Lang repository. The tool allows configuration of the LLM, data, LLM parameters, prompt, and document splitting. Funding is sought to democratize AI and advance its applications.

RAGMeUp

RAG Me Up is a generic framework that enables users to perform Retrieval-Augmented Generation (RAG) on their own dataset easily. It consists of a small server and UIs for communication. The tool can run on CPU but is optimized for GPUs with at least 16GB of vRAM. Users can combine RAG with fine-tuning using the LLaMa2Lang repository. The tool provides a configurable RAG pipeline without the need for coding, utilizing indexing and inference steps to accurately answer user queries.

smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

cognita

Cognita is an open-source framework to organize your RAG codebase, along with a frontend to play around with different RAG customizations. It provides a simple way to organize your codebase so that it is easy to test locally while also being deployable in a production-ready environment. The key issues that arise while productionizing a RAG system from a Jupyter Notebook are:

1. **Chunking and Embedding Job**: The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be triggered via an event to keep the data updated.
2. **Query Service**: The code that generates the answer from the query needs to be wrapped in an API server like FastAPI and deployed as a service. This service should be able to handle multiple queries at the same time and autoscale with higher traffic.
3. **LLM / Embedding Model Deployment**: Often, when using open-source models, the model is loaded in the Jupyter notebook. In production it needs to be hosted as a separate service and called as an API.
4. **Vector DB deployment**: Most testing happens on vector DBs in memory or on disk. In production, however, the DBs need to be deployed in a more scalable and reliable way.

Cognita makes it easy to customize and experiment with everything about a RAG system and still deploy it in a good way. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally, with or without Truefoundry components. However, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app.

### Advantages of using Cognita are:

1. A central reusable repository of parsers, loaders, embedders and retrievers.
2. Ability for non-technical users to play with the UI: upload documents and perform QnA using modules built by the development team.
3. Fully API driven, which allows integration with other systems.

> If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and feedback mechanism for your user queries.

### Features:

1. Support for multiple document retrievers that use `Similarity Search`, `Query Decomposition`, `Document Reranking`, etc.
2. Support for SOTA open-source embeddings and reranking from `mixedbread-ai`.
3. Support for using LLMs using `Ollama`.
4. Support for incremental indexing that ingests entire documents in batches (reduces compute burden), keeps track of already indexed documents, and prevents re-indexing of those docs.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

For similar tasks

stockbot-on-groq

StockBot Powered by Groq is an AI-powered chatbot that provides lightning-fast responses with live interactive stock charts, financial data, news, screeners, and more. Leveraging Groq's speed and Vercel's AI SDK, StockBot offers real-time conversation with natural language processing, interactive TradingView charts, adaptive interfaces, and multi-asset market coverage. It is designed for entertainment and instructional use, not for investment advice.

FinVeda

FinVeda is a dynamic financial literacy app that aims to solve the problem of low financial literacy rates in India by providing a platform for financial education. It features an AI chatbot, finance blogs, market trends analysis, SIP calculator, and finance quiz to help users learn finance with finesse. The app is free and open-source, licensed under the GNU General Public License v3.0. FinVeda was developed at IIT Jammu's Udyamitsav'24 Hackathon, where it won first place in the GenAI track and third place overall.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

awesome-quant-ai

Awesome Quant AI is a curated list of resources focusing on quantitative investment and trading strategies using artificial intelligence and machine learning in finance. It covers key challenges in quantitative finance, AI/ML technical fit, predictive modeling, sequential decision-making, synthetic data generation, contextual reasoning, mathematical foundations, design approach, quantitative trading strategies, tools and platforms, learning resources, books, research papers, community, and conferences. The repository aims to provide a comprehensive resource for those interested in the intersection of AI, machine learning, and quantitative finance, with a focus on extracting alpha while managing risk in financial systems.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

Awesome-AI-Market-Maps

Awesome AI Market Maps is a curated list of Artificial Intelligence startup market maps from 2025 and 2024, featuring over 275 market maps by top VCs, industry analysts, and AI practitioners. The list is organized by quarter, showcasing hot AI topics and the industry's rapid evolution. The data collection workflow includes various tools like ChatGPT, Google Gemini, and human-in-the-loop curation. The repository is regularly updated with new market maps, providing a comprehensive resource for the AI community.

qlib

Qlib is an open-source, AI-oriented quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It covers the entire chain of quantitative investment, from alpha seeking to order execution. The platform empowers researchers to explore ideas and implement productions using AI technologies in quantitative investment. Qlib collaboratively solves key challenges in quantitative investment by releasing state-of-the-art research works in various paradigms. It provides a full ML pipeline for data processing, model training, and back-testing, enabling users to perform tasks such as forecasting market patterns, adapting to market dynamics, and modeling continuous investment decisions.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.