AI-Player

A minecraft mod which aims to add a "second player" into the game which will actually be intelligent.

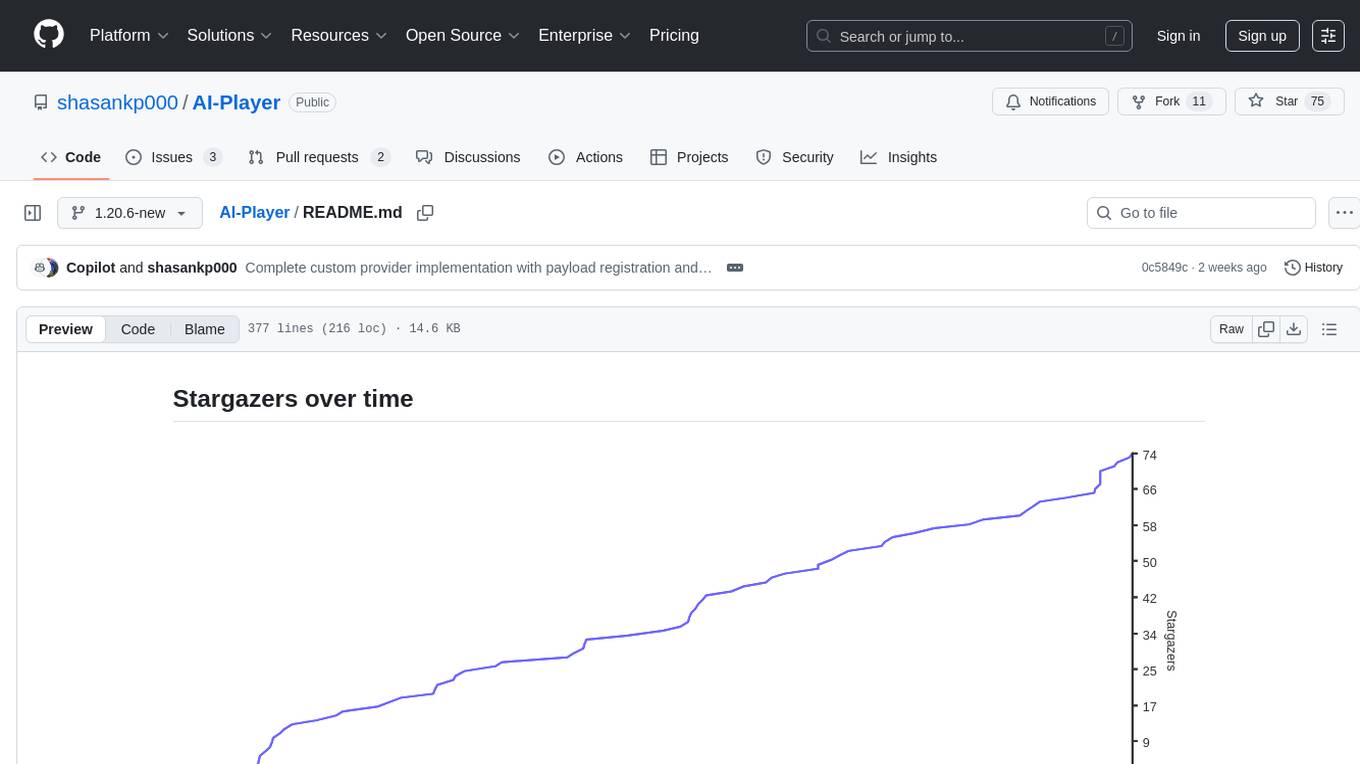

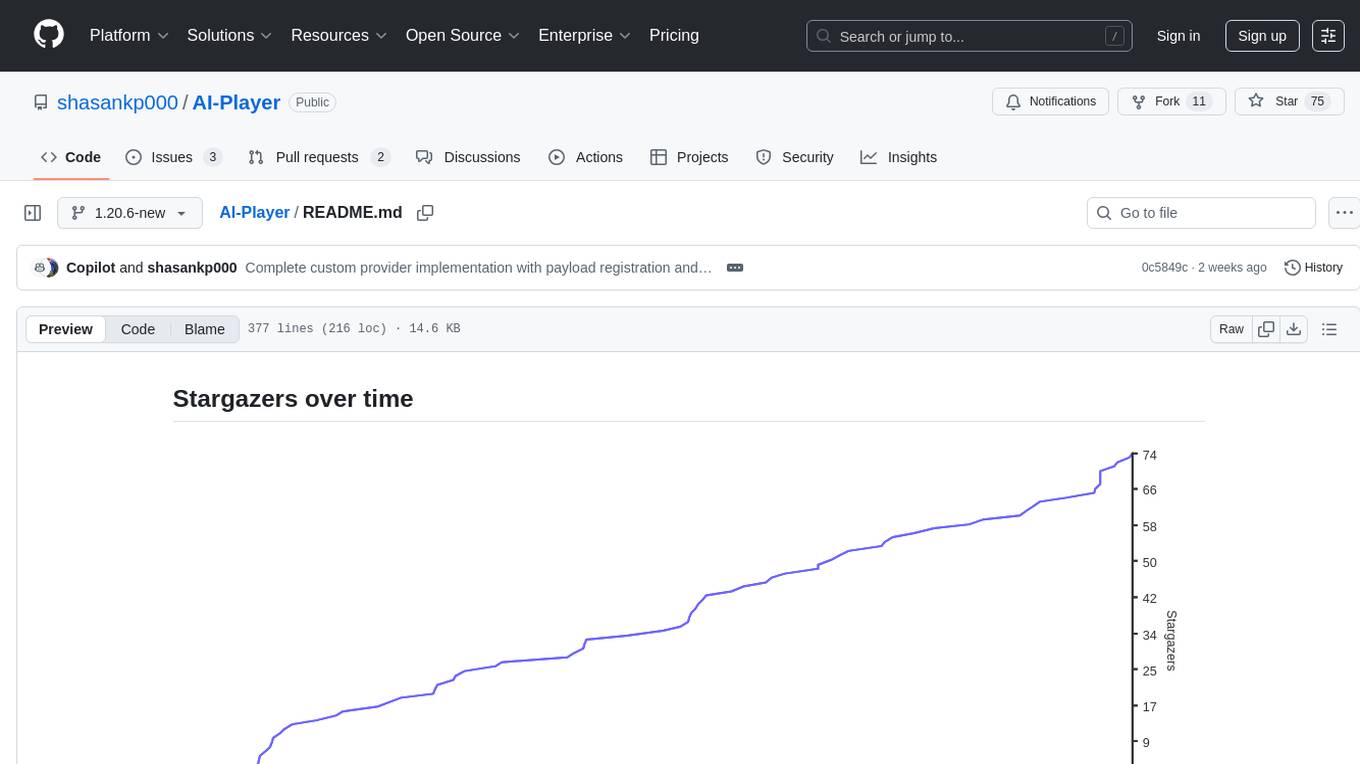

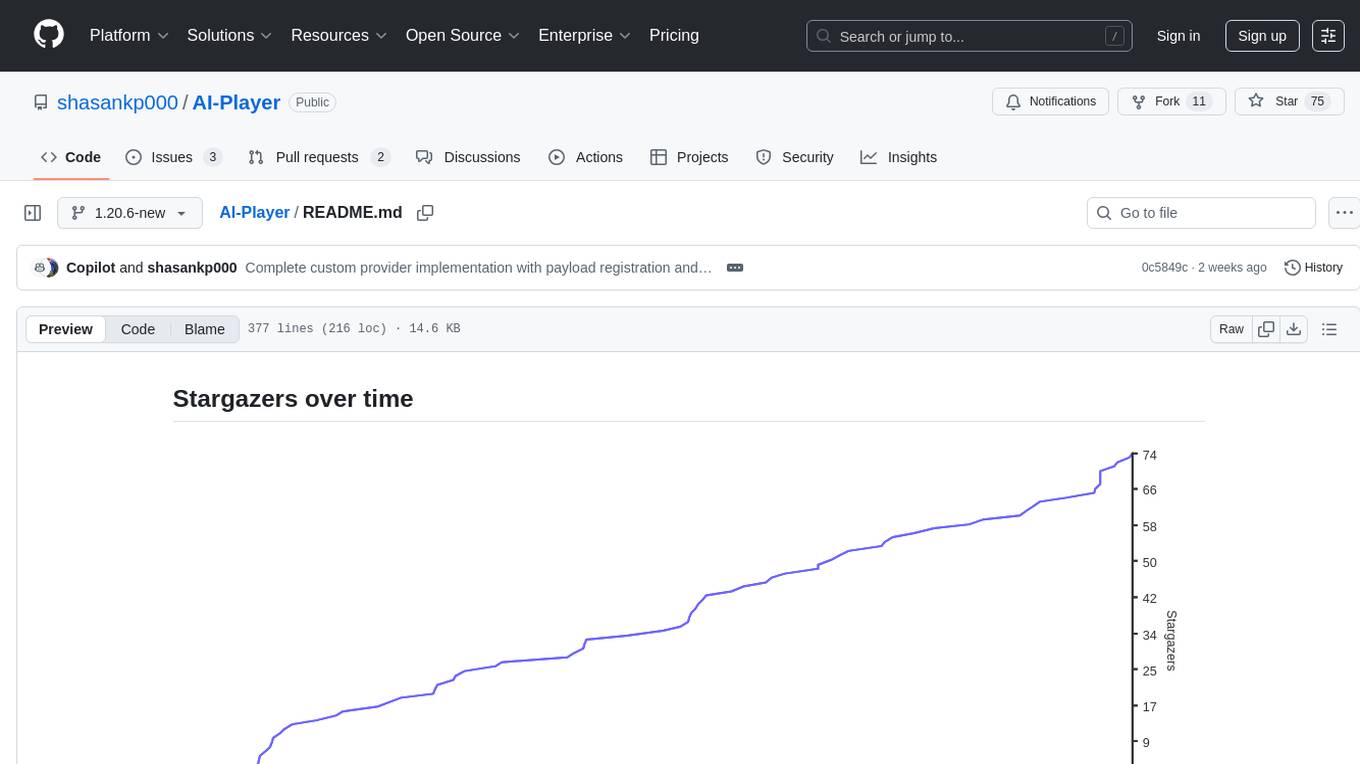

Stars: 75

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

README:

This project so far is the result of thousands of hours of endless research, trial and error, and the simple goal of eliminating loneliness from Minecraft as much as possible. If you like my work, please consider donating so that I can continue to work on this project in peace and can actually prove to my parents that my work is making a difference. (Also so that I don't have to ask mom for pocket money.)

Just know that I won't ever give up on this project.

If anyone is interested in the underlying algorithms I am working on to make the Minecraft bot more intelligent, feel free to check out this repository:

https://github.com/shasankp000/AI-Tricks

I am open to suggestions/improvements, if any. (Obviously there will be improvements from my own end).

The footage of the bot conversation and config manager on this GitHub page is a bit outdated. Check the Modrinth page and download the mod to stay updated.

Ever felt lonely while playing minecraft alone during that two-week phase? Well, this mod aims to solve that problem of loneliness, not just catering to this particular use case, but even (hopefully in the future) to play in multiplayer servers as well.

Please note that this is not some sort of a commercialised AI product. This project is just a solution to a problem many Minecraft players have faced and do continue to face.

I had to add that statement up there to prevent misunderstandings.

This mod relies on the internal code of the Carpet mod. Please star its repository: https://github.com/gnembon/fabric-carpet (giving credit where it's due).

This mod also relies on the ollama4j project. https://github.com/amithkoujalgi/ollama4j

- From this github page, just download from the releases section or follow the steps in usage section to build and test.

- Modrinth: https://modrinth.com/mod/ai-player/

- Curseforge: https://www.curseforge.com/minecraft/mc-mods/ai-player

Since the new semester in my college has started this month, I am gonna be under really heavy pressure, since I have to study 11 subjects in total (Machine learning, Linear Algebra, Physics, CyberSecurity, and a lot more lol). Don't worry though I won't stop working on this project, it's just that the updates will be quite slower.

It's understandable if y'all leave by then or give up on this project, so, I won't mind. :))

Thank you all from the core of my heart for the support so far. I never imagined we would come this far.

Latest Update: 21/8/2025 at 04:08 AM IST (Indian Standard Time)

Please have patience while waiting for updates. Since I am the only guy working on this project, it does take me time to address all the issues/add in new features.

- Fixed a lot of bugs that got overlooked in the previous testing phase.

I realized that many of the commitments made in the previous announcement were too ambitious to implement in a single update. To keep development smooth, this release is the first part of a two-part update.

This update focuses on core system rewrites and better AI decision-making, laying the foundation for the next wave of features.

Support for versions below 1.20.6 has also been dropped, because the codebase changes make migrating them more than I can handle by myself. However, others are free to port the mod to lower versions.

The upcoming second part will be the final update for 1.20.6 and will also include a direct jump to 1.21.6, from which future versions will continue.

- Fully redesigned Natural Language Processing (NLP) — no more "I couldn’t understand you."

- This is a new and experimental system I’ve been designing and rigorously testing over the last month.

- Results are promising, but not yet up to my personal standards — expect further refinements in future updates.

- New Retrieval-Augmented Generation (RAG) system integrated with a database and web search.

- The AI now provides accurate factual information about Minecraft, drastically reducing hallucinations.

- Supported search providers:

- Gemini API

- Serper API

- Brave Search API (in development, will push this to the next patch instead)

- Added a task chaining system:

- You give a high-level instruction → the bot automatically breaks it into smaller tasks → executes step by step.

- Go to a location

- Go to a location and mine resources

- Detect nearby blocks & entities

- Report stats (health, oxygen, hunger, etc.)
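The task chaining idea above can be pictured as a queue of small steps that are worked through in order. The sketch below is only an illustration, not the mod's actual code: the `TaskChainSketch` class, the keyword rule inside `decompose`, and the step names are all hypothetical stand-ins for whatever planner the mod really uses.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Locale;

// Hypothetical illustration of task chaining; none of these names come from the mod.
final class TaskChainSketch {

    // Break a high-level instruction into ordered sub-tasks.
    // Assumption: a naive keyword match stands in for the real planner.
    static Deque<String> decompose(String instruction) {
        Deque<String> steps = new ArrayDeque<>();
        String text = instruction.toLowerCase(Locale.ROOT);
        if (text.contains("go to")) {
            steps.add("GO_TO_LOCATION");
        }
        if (text.contains("mine")) {
            steps.add("MINE_RESOURCES");
        }
        return steps;
    }

    // Execute the queued steps one at a time, in order, and record what was done.
    static List<String> execute(Deque<String> steps) {
        List<String> log = new ArrayList<>();
        while (!steps.isEmpty()) {
            log.add("done: " + steps.poll());
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(execute(decompose("Go to the cave and mine resources")));
    }
}
```

A queue keeps the order explicit: the bot cannot start mining until the "go to" step has been taken off the front.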

AI Player now supports multiple LLM providers (configurable via API keys):

- OpenAI

- Anthropic Claude

- Google Gemini

- xAI Grok

- Custom OpenAI-Compatible Providers (e.g., OpenRouter, TogetherAI, Perplexity)

To use a custom OpenAI-compatible provider:

- Set the provider mode: Add `-Daiplayer.llmMode=custom` to your JVM arguments
- Configure in-game: Open the API Keys screen and set:
  - Custom API URL (e.g., `https://openrouter.ai/api/v1`)
  - Custom API Key (your provider's API key)
- Select model: Choose from the available models fetched from your provider

See CUSTOM_PROVIDERS.md for detailed instructions and supported providers.
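For context, a `-D` JVM argument becomes a Java system property that code can read with `System.getProperty`. The sketch below shows that mechanism only. The `LlmModeSketch` class and the caller-supplied fallback value are assumptions for illustration; how the mod itself reads `aiplayer.llmMode` may differ.

```java
import java.util.Locale;

// Hypothetical helper showing how a -D JVM argument can be read; not the mod's code.
final class LlmModeSketch {

    // Property name taken from the JVM argument -Daiplayer.llmMode=custom.
    static final String PROPERTY = "aiplayer.llmMode";

    // Return the configured mode, or the given fallback when the argument is absent or blank.
    static String resolveMode(String fallback) {
        String raw = System.getProperty(PROPERTY);
        if (raw == null || raw.isBlank()) {
            return fallback;
        }
        return raw.trim().toLowerCase(Locale.ROOT);
    }

    // True only when the JVM was started with the custom provider mode.
    static boolean isCustom(String fallback) {
        return "custom".equals(resolveMode(fallback));
    }

    public static void main(String[] args) {
        // Run with: java -Daiplayer.llmMode=custom LlmModeSketch
        System.out.println("LLM mode: " + resolveMode("unset"));
    }
}
```

If the mode never seems to take effect, check that the argument was added to the launcher's JVM arguments field rather than to the game arguments.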

If you select the Gemini Search as the web search tool for the LLM, it will use the API key you have set as your LLM provider in the settings.json5 file automatically.

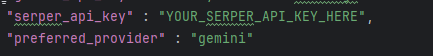

For https://serper.dev/ search, get an api key from serper.dev and then navigate to the config folder in game, open the ai_search_config.json and put the key:

(Note: I couldn’t test all of these myself except the Gemini API since API keys are costly, but the integrations are ready.)

- Fixed bug where JVM arguments were not being read.

- Removed owo-lib. AI-Player now uses an in-house config system.

- Fixed API keys saving issues.

- Added a new Launcher Detection System. The Modrinth launcher's own path variables were conflicting with the mod, so the QTable was not being loaded. Supports: Vanilla MC Launcher, Modrinth App, MultiMC, Prism Launcher, CurseForge launcher, ATLauncher, and even unknown launchers that would otherwise be unsupported, assuming they follow the vanilla MC launcher's path scheme.

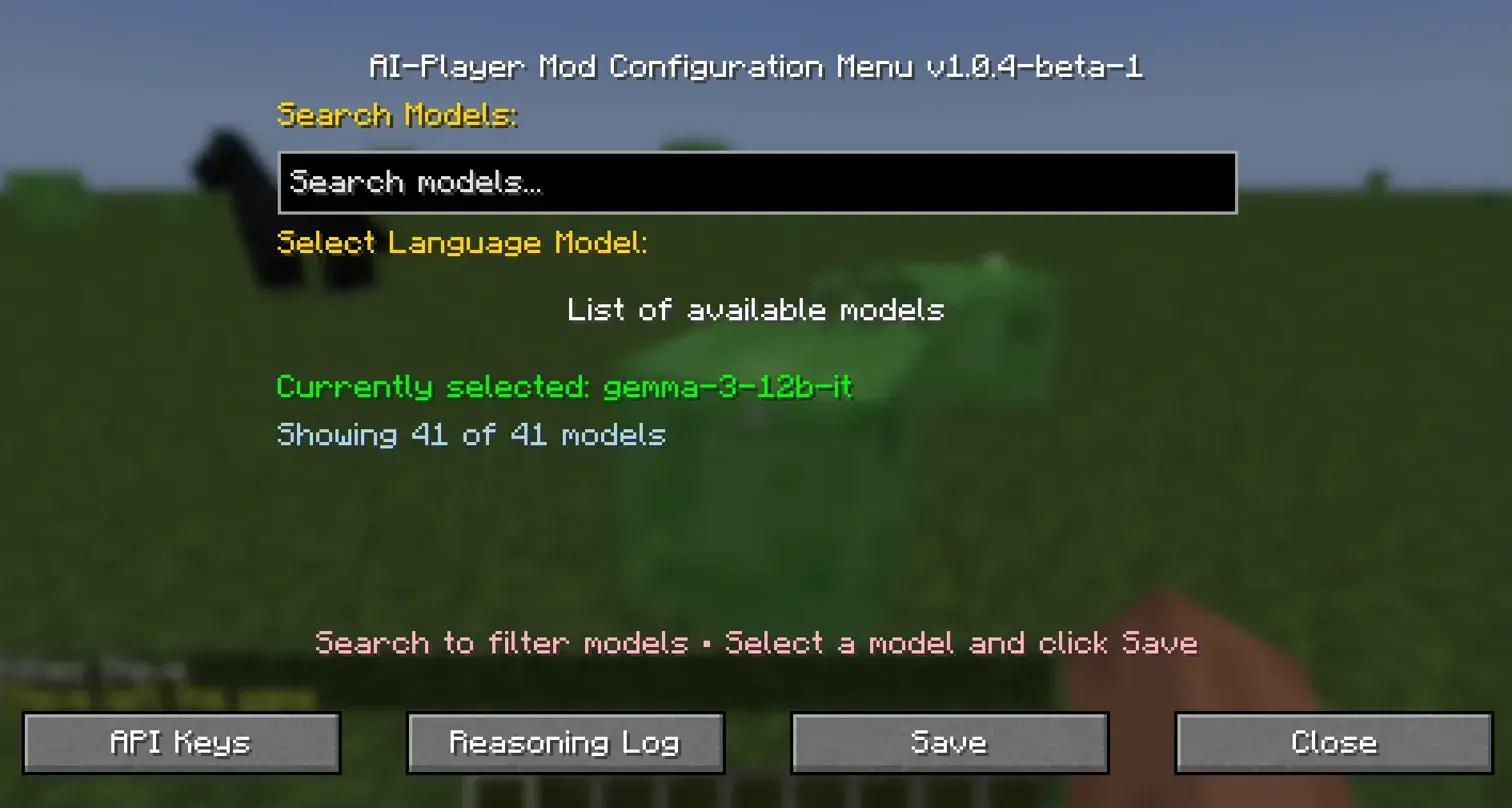

- Revamped the Config Manager UI with a responsive UI along with a search option for providers with a lot of models (like gemini).

- While this update may look small on the surface, designing the systems, writing the code, and debugging took a huge amount of time.

- On top of this, I’ve picked up more freelance contracts and need to focus on my final-year project.

- Updates will continue — just at a slower pace.

Here’s what’s planned for the next patch:

- Combat & Survival Enhancements
  - Bot uses weapons (including ranged) to fend off mobs.
  - Reflex module upgrades.
  - More natural world interactions (e.g., sleeping at night).
  - A more lightweight but more powerful logic engine that will replace the current LLM-based reasoning for the Meta-Decision Layer.
- Improved Path Tracer
  - Smarter navigation through water and complex terrain, with abilities such as bridging upwards with blocks.
- Self-Goal Assignment System
  - Bot assigns itself goals like a real player.
  - Will initiate conversations with players and move autonomously.
- Mood System (design phase)
  - Adds emotional context and varied behavior.
- Player2 Integration
  - Highly requested. This will be the first major feature of the second update.

- Switch to Deep Q-learning instead of traditional Q-learning (TLDR: use a neural network instead of a table)

- Create custom movement code for the bot for precise movement instead of carpet's server sided movement code.

- Implement human consciousness level reasoning??? (to some degree maybe) (BIG MAYBE)
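To make the "table versus neural network" remark concrete: tabular Q-learning stores one value per state-action pair and nudges it after every step towards `reward + gamma * (best value of the next state)`. The sketch below shows that standard update rule. The class name, the state strings, and the learning rate and discount values are illustrative assumptions and are not taken from the mod. Deep Q-learning would replace the map with a network that predicts these values.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative tabular Q-learning; names and numbers are assumptions, not the mod's code.
final class QTableSketch {
    private final Map<String, double[]> table = new HashMap<>();
    private final int actionCount;
    private final double alpha; // learning rate
    private final double gamma; // discount factor

    QTableSketch(int actionCount, double alpha, double gamma) {
        this.actionCount = actionCount;
        this.alpha = alpha;
        this.gamma = gamma;
    }

    // One row of action values per state, created with zeros on first use.
    private double[] row(String state) {
        return table.computeIfAbsent(state, s -> new double[actionCount]);
    }

    // Best value currently known for any action in the given state.
    double maxValue(String state) {
        double best = Double.NEGATIVE_INFINITY;
        for (double v : row(state)) {
            best = Math.max(best, v);
        }
        return best;
    }

    // Q(s,a) += alpha * (reward + gamma * max Q(s',.) - Q(s,a))
    void update(String state, int action, double reward, String nextState) {
        double target = reward + gamma * maxValue(nextState);
        double[] q = row(state);
        q[action] += alpha * (target - q[action]);
    }

    double value(String state, int action) {
        return row(state)[action];
    }
}
```

The table grows with every distinct state it sees, which is the usual motivation for moving to a network once the state space gets large.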

I can proudly say that all bugs in the current version have been squashed for good.

mob related reflex actions

https://github.com/user-attachments/assets/1700e1ff-234a-456f-ab37-6dac754b3a94

environment reaction

https://github.com/user-attachments/assets/786527d3-d400-4acd-94f0-3ad433557239

If you want to manually build and test this project, follow from step 1.

For playing the game, download the jar file either from modrinth or the releases section and go directly to step 6.

Step 1. Download Java 21.

This project is built on Java 21 to support Carpet mod's updated API.

Go to: https://bell-sw.com/pages/downloads/#jdk-21-lts

Click on Download MSI and finish the installation process. [Windows]

For linux users, depending on your system install openjdk-21-jdk package.

Step 2. Download IntelliJ idea community edition.

https://www.jetbrains.com/idea/download/?section=windows

Step 3. Download the project.

If you have git setup in your machine already you can just clone the project to your machine and then open it in intellij

Or alternatively download it as a zip file, extract it and then open it in intellij

Step 4. Configure the project SDK.

Click on the settings gear.

Go to Project Structure

Configure the SDK here, set it to liberica 21

Step 5. Once done, wait for IntelliJ to build the project sources. This will take a while, as it basically downloads Minecraft to run in a test version.

If you happen to see some errors, go to the right sidebar and click on the elephant icon (Gradle).

Then click on the refresh button beside the plus icon. Additionally, you can go to the terminal icon on the bottom left

and type `./gradlew build`

The instructions below are the same whether you build from IntelliJ or download the mod directly.

Step 6. Setup ollama.

Go to https://ollama.com/

Download based on your operating system.

After installation, run ollama from your desktop. This will launch the ollama server.

This can be accessed in your system tray

Now open a command line client, on windows, search CMD or terminal and then open it.

1. In cmd or terminal, type `ollama pull nomic-embed-text` (if not already done).

2. Type `ollama pull llama3.2`

3. Type `ollama rm gemma2` (if you still have it installed) (for previous users only)

4. Type `ollama rm llama2` (if you still have it installed) (for previous users only)

5. If you have run the mod before go to your .minecraft folder, navigate to a folder called config, and delete a file called settings.json5 (for previous users only)

Then make sure you have turned on ollama server.
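If you are unsure whether the Ollama server is actually running, one quick check is to request its list of installed models. The sketch below assumes Ollama's default address `http://localhost:11434` and its `/api/tags` endpoint. The class and method names are made up for illustration, and this is not how the mod itself connects (it relies on ollama4j).

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical connectivity check; assumes Ollama's default port 11434.
final class OllamaCheckSketch {

    // Build the URL of the model-list endpoint, tolerating a trailing slash.
    static URI tagsUri(String baseUrl) {
        String trimmed = baseUrl.endsWith("/")
                ? baseUrl.substring(0, baseUrl.length() - 1)
                : baseUrl;
        return URI.create(trimmed + "/api/tags");
    }

    // True when the server answers the model-list request with HTTP 200.
    static boolean isServerUp(String baseUrl) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(tagsUri(baseUrl))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false; // connection refused or timed out: server is not reachable
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isServerUp("http://localhost:11434")
                ? "Ollama is running"
                : "Ollama is not reachable");
    }
}
```

Running `ollama list` in the same terminal is an equivalent check if you prefer the command line.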

Step 7: Download the dependencies

Step 8: Launch the game.

Step 9: Type /configMan in chat and select llama3.2 as the language model, then hit save and exit.

Step 10: Then type /bot spawn <yourBotName> <training|play>. Use training for training mode (this mode won't connect to the language model) and play for normal usage.

This section is to describe the usage of the mod in-game

Main command

/bot

Sub commands:

spawn <bot> This command is used to spawn a bot with the desired name.

walk <bot> <till> This command will make the bot walk forward for a specific amount of seconds.

go_to <bot> <x> <y> <z> This command is supposed to make the bot go to the specified co-ordinates, by finding the shortest path to it. It is still a work in progress as of the moment.

send_message_to <bot> <message> This command will help you to talk to the bot.

teleport_forward <bot> This command will teleport the bot forward by 1 positive block

test_chat_message <bot> A test command to make sure that the bot can send messages.

detect_entities <bot> A command which is supposed to detect entities around the bot

use-key <W,S, A, D, LSHIFT, SPRINT, UNSNEAK, UNSPRINT> <bot>

release-all-keys <bot> <botName>

look <north, south, east, west>

detectDangerZone // Detects lava pools and cliffs nearby

getHotBarItems // returns a list of the items in its hotbar

getSelectedItem // gets the currently selected item

getHungerLevel // gets its hunger level

getOxygenLevel // gets the oxygen level of the bot

equipArmor // gets the bot to put on the best armor in its inventory

removeArmor // work in progress.

Example Usage:

/bot spawn Steve training

Credit for the changes to the above command goes to Mr. Álvaro Carvalho.

And yes, since this mod relies on Carpet mod, you can spawn a bot using Carpet mod's commands too and try the mod. But if you happen to be playing in offline mode, I recommend using the mod's built-in spawn command.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-Player

Similar Open Source Tools

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

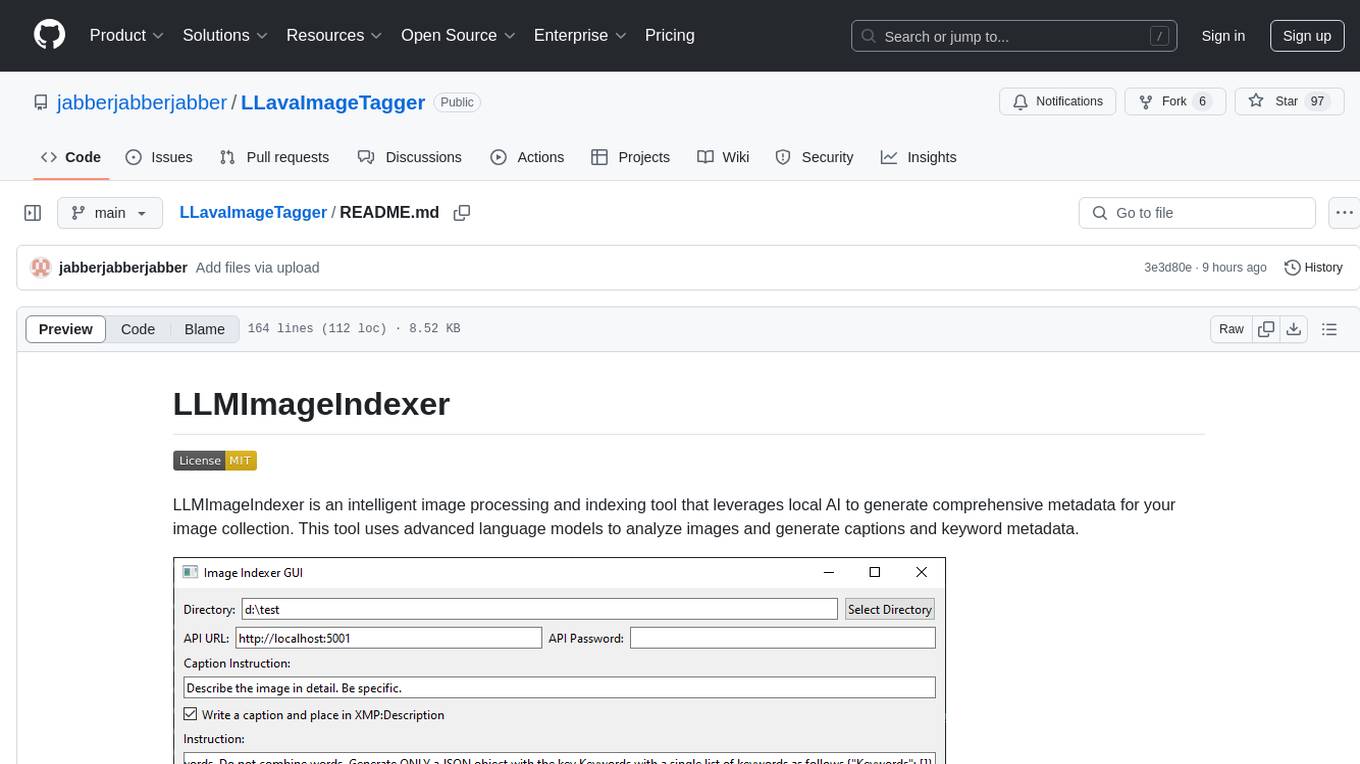

LLavaImageTagger

LLMImageIndexer is an intelligent image processing and indexing tool that leverages local AI to generate comprehensive metadata for your image collection. It uses advanced language models to analyze images and generate captions and keyword metadata. The tool offers features like intelligent image analysis, metadata enhancement, local processing, multi-format support, user-friendly GUI, GPU acceleration, cross-platform support, stop and start capability, and keyword post-processing. It operates directly on image file metadata, allowing users to manage files, add new files, and run the tool multiple times without reprocessing previously keyworded files. Installation instructions are provided for Windows, macOS, and Linux platforms, along with usage guidelines and configuration options.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

OSHW-SenseCAP-Watcher

SenseCAP Watcher is a monitoring device built on ESP32S3 with Himax WiseEye2 HX6538 AI chip, excelling in image and vector data processing. It features a camera, microphone, and speaker for visual, auditory, and interactive capabilities. With LLM-enabled SenseCraft suite, it understands commands, perceives surroundings, and triggers actions. The repository provides firmware, hardware documentation, and applications for the Watcher, along with detailed guides for setup, task assignment, and firmware flashing.

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

LLPlayer

LLPlayer is a specialized media player designed for language learning, offering unique features such as dual subtitles, AI-generated subtitles, real-time OCR, real-time translation, word lookup, and more. It supports multiple languages, online video playback, customizable settings, and integration with browser extensions. Written in C#/WPF, LLPlayer is free, open-source, and aims to enhance the language learning experience through innovative functionalities.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

chatty

Chatty is a private AI tool that runs large language models natively and privately in the browser, ensuring in-browser privacy and offline usability. It supports chat history management, open-source models like Gemma and Llama2, responsive design, intuitive UI, markdown & code highlight, chat with files locally, custom memory support, export chat messages, voice input support, response regeneration, and light & dark mode. It aims to bring popular AI interfaces like ChatGPT and Gemini into an in-browser experience.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

For similar tasks

rwkv.cpp

rwkv.cpp is a port of BlinkDL/RWKV-LM to ggerganov/ggml, supporting FP32, FP16, and quantized INT4, INT5, and INT8 inference. It focuses on CPU but also supports cuBLAS. The project provides a C library rwkv.h and a Python wrapper. RWKV is a large language model architecture with models like RWKV v5 and v6. It requires only state from the previous step for calculations, making it CPU-friendly on large context lengths. Users are advised to test all available formats for perplexity and latency on a representative dataset before serious use.

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in more than 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more not only to Python and R, but also to JVM ecosystem (Java, Scala, and Kotlin) at scale by extending Apache Spark natively.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.