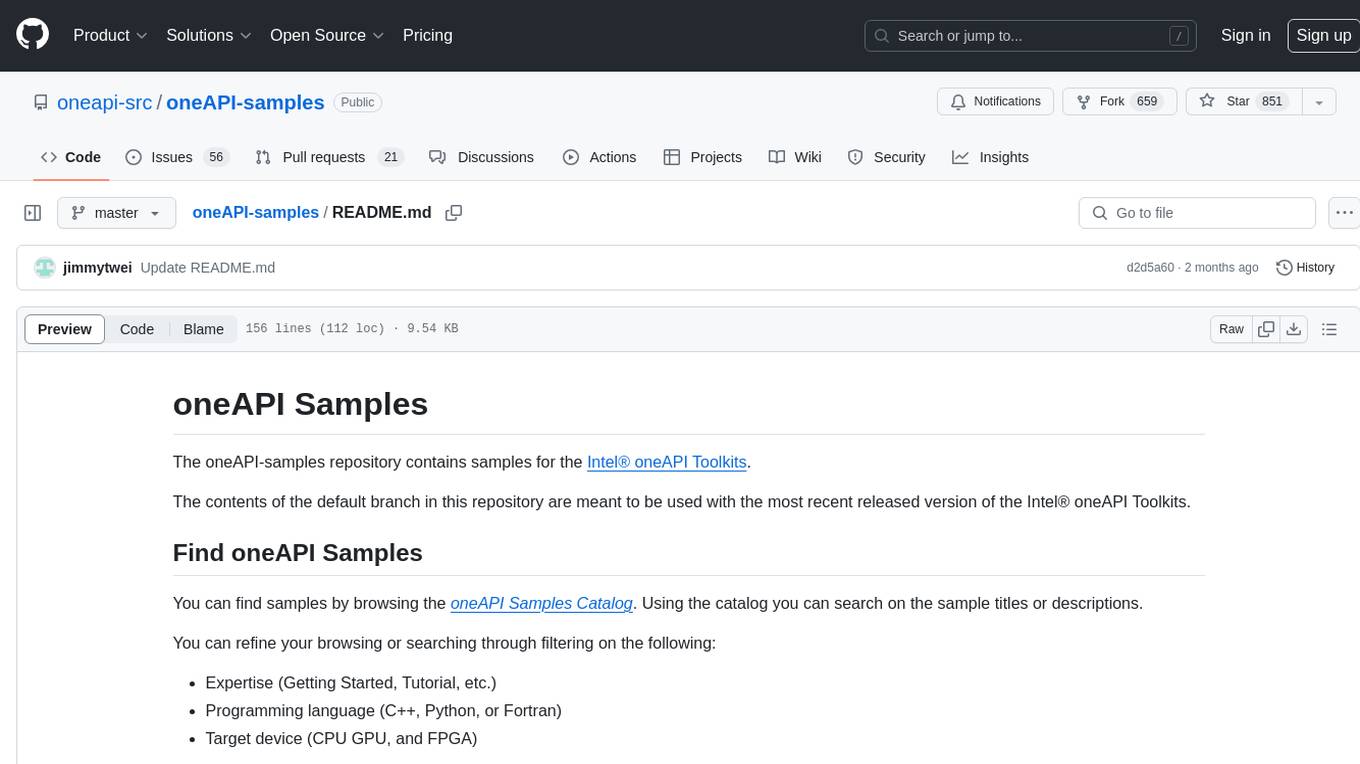

oneAPI-samples

Samples for Intel® oneAPI Toolkits

Stars: 1006

The oneAPI-samples repository contains a collection of samples for the Intel oneAPI Toolkits. These samples cover various topics such as AI and analytics, end-to-end workloads, features and functionality, getting started samples, Jupyter notebooks, direct programming, C++, Fortran, libraries, publications, rendering toolkit, and tools. Users can find samples based on expertise, programming language, and target device. The repository structure is organized by high-level categories, and platform validation includes Ubuntu 22.04, Windows 11, and macOS. The repository provides instructions for getting samples, including cloning the repository or downloading specific tagged versions. Users can also use integrated development environments (IDEs) like Visual Studio Code. The code samples are licensed under the MIT license.

README:

The oneAPI-samples repository contains samples for the Intel® oneAPI Toolkits.

The contents of the default branch in this repository are meant to be used with the most recent released version of the Intel® oneAPI Toolkits.

You can find samples by browsing the oneAPI Samples Catalog. In the catalog, you can search by sample title or description.

You can refine your browsing or searching by filtering on the following:

- Expertise (Getting Started, Tutorial, etc.)

- Programming language (C++, Python, or Fortran)

- Target device (CPU, GPU, and FPGA)

Clone the repository by entering the following command:

git clone https://github.com/oneapi-src/oneAPI-samples.git

Alternatively, you can download a zip file containing the default branch of the repository.

- Click the Code button.

- Select Download ZIP from the menu options.

- After downloading the file, unzip the repository contents.

If you need samples for an earlier version of any of the Intel® oneAPI Toolkits, then use a tagged version of the repository that corresponds with the toolkit version.

Clone an earlier version of the repository using Git by entering a command similar to the following:

git clone -b <tag> https://github.com/oneapi-src/oneAPI-samples.git

where <tag> is the GitHub tag corresponding to the toolkit version number, like 2024.2.0.
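The two clone commands above differ only in the optional `-b <tag>` argument. As a minimal sketch of scripting that choice, the Python helper below assembles the same `git clone` invocation. The function names `build_clone_command` and `clone_samples` and the `dest` parameter are hypothetical, introduced here for illustration; only the repository URL, the `-b` flag, and the example tag come from the instructions above.

```python
import subprocess

# Repository URL taken from the clone instructions above.
REPO_URL = "https://github.com/oneapi-src/oneAPI-samples.git"


def build_clone_command(tag=None, dest=None):
    """Return the git argument list for cloning the samples repository.

    tag:  optional tag matching a toolkit version (for example "2024.2.0");
          when omitted, git clones the default branch.
    dest: optional target directory (hypothetical convenience parameter).
    """
    cmd = ["git", "clone"]
    if tag:
        cmd += ["-b", tag]  # same flag as in "git clone -b <tag> ..."
    cmd.append(REPO_URL)
    if dest:
        cmd.append(dest)
    return cmd


def clone_samples(tag=None, dest=None):
    """Run the clone; raises CalledProcessError if git exits with an error."""
    subprocess.run(build_clone_command(tag, dest), check=True)


if __name__ == "__main__":
    # Show the command without running it.
    print(" ".join(build_clone_command("2024.2.0")))
```

Calling `clone_samples()` with no arguments corresponds to the plain `git clone` command, and `clone_samples("2024.2.0")` corresponds to the tagged form.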

Alternatively, you can download a zip file containing a specific tagged version of the repository.

- Select the appropriate tag.

- Click the Code button.

- Select Download ZIP from the menu options.

- After downloading the file, unzip the repository contents.
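The download steps above can also be scripted. The sketch below assumes GitHub's usual archive URL layout for tags (`/archive/refs/tags/<tag>.zip`), which is not stated in this README, and uses hypothetical helper names (`tag_zip_url`, `download_and_unzip`). Treat it as an illustration of the steps rather than a supported workflow.

```python
import urllib.request
import zipfile


def tag_zip_url(tag, owner="oneapi-src", repo="oneAPI-samples"):
    """Build the zip archive URL for a tag (assumed GitHub URL layout)."""
    return f"https://github.com/{owner}/{repo}/archive/refs/tags/{tag}.zip"


def download_and_unzip(tag, zip_path, dest_dir):
    """Download the tagged archive to zip_path, then extract it into dest_dir."""
    urllib.request.urlretrieve(tag_zip_url(tag), zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
```

For example, `download_and_unzip("2024.2.0", "samples.zip", "samples")` would fetch and unpack the samples for that toolkit version, provided the tag exists.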

The best oneAPI sample to start with depends on what you are trying to learn or the types of problems you are trying to solve.

| If you want to learn about... | Start with... |
|---|---|
| the basics of writing, compiling, and building programs for CPUs, GPUs, or FPGAs | Simple Add or Vector Add samples (You can use these samples as starter projects by removing unwanted elements and adding your code and build requirements.) |
| the basics of using artificial intelligence | Getting Started Samples for AI Tools |
| the basics of image rendering workloads and ray tracing | Getting Started Samples for Intel® oneAPI Rendering Toolkit (Render Kit) |
| how to modify or create build files for SYCL-compliant projects | Vector Add sample |

Note: The README.md included with each sample provides build instructions for all supported operating systems. For samples run in Jupyter Notebooks, you might need to install or configure additional frameworks or package managers if you do not already have them on your system.

If you prefer to use an Integrated Development Environment (IDE) with these samples, you can download Visual Studio Code for use on Windows*, Linux*, and macOS*.

The oneAPI-samples repository is organized by high-level categories.

Intel(R) Xeon(R) Platinum 8468V

Intel(R) Data Center GPU Max 1100

OpenCL Driver: Intel(R) OpenCL, Intel(R) Xeon(R) Platinum 8468V OpenCL 3.0 (Build 0) [2024.18.7.0.11_160000]

Level Zero Driver: Intel(R) Level-Zero, Intel(R) Data Center GPU Max 1100 1.3 [1.3.28202]

oneAPI package version:

- Intel oneAPI HPC Toolkit Build Version: 2025.0.0.825

11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz

Intel(R) Iris(R) Xe Graphics OpenCL 3.0 NEO

OpenCL Driver: Intel(R) OpenCL, 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz OpenCL 3.0 (Build 0) [2024.18.9.0.28_160000]

Level Zero Driver: Intel(R) oneAPI Unified Runtime over Level-Zero, Intel(R) Iris(R) Xe Graphics 12.0.0 [1.3.27193]

oneAPI package version:

- Intel oneAPI HPC Toolkit Build Version: 2025.0.0.822

- If you are using Microsoft Visual Studio* 2019, you must use Microsoft Visual Studio 2019 version 16.4.0 or newer.

- Windows support for the FPGA code samples is limited to the FPGA emulator and optimization reports. Only Linux supports FPGA hardware compilation. See any FPGA code sample README.md for more details.

- If you encounter `Error MSB6003 The specified task executable ... could not be run...` when building a sample program, it might be due to the length of the directory path. Move the `build` directory to a location with a shorter path. Build the sample in the new location.
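To check whether path length is a plausible cause before moving the build directory, a quick estimate can help. The sketch below assumes the classic Windows `MAX_PATH` limit of 260 characters as the threshold. The README does not state the exact limit that triggers MSB6003, and the helper name `path_headroom` is hypothetical.

```python
from pathlib import PureWindowsPath

# Classic Windows MAX_PATH value, used here as an assumed threshold.
WINDOWS_MAX_PATH = 260


def path_headroom(build_dir, longest_relative_file, limit=WINDOWS_MAX_PATH):
    """Return how many characters remain before the full path reaches limit.

    build_dir:             the build directory, e.g. r"C:\src\sample\build"
    longest_relative_file: the longest file path generated inside build_dir
    A negative result means the combined path exceeds the assumed limit.
    """
    full_path = PureWindowsPath(build_dir) / longest_relative_file
    return limit - len(str(full_path))
```

A small or negative headroom suggests moving the `build` directory closer to the drive root, as the note above recommends.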

A curated list of samples from oneAPI-based projects, libraries, and tools. It also includes notable samples from other AI projects that are not necessarily based on oneAPI, as additional up-to-date resources for improving your productivity.

- OpenVINO™ notebooks: A collection of ready-to-run Jupyter notebooks for learning and experimenting with the OpenVINO™ Toolkit, an open-source AI toolkit that makes it easier to write once, deploy anywhere. The notebooks introduce OpenVINO basics and teach developers how to leverage the API for optimized deep learning inference.

- Intel® Gaudi® Tutorials: Tutorials with step-by-step instructions for running PyTorch and PyTorch Lightning models on the Intel Gaudi AI Processor for training and inferencing, from beginner level to advanced users.

- Powered-by-Intel Leaderboard: This leaderboard celebrates and increases the discoverability of models developed on Intel hardware by the AI developer community. It provides developers with sample code and resources (developer programs) for deploying models for inference on AI PCs, Intel® Xeon® Scalable processors, Intel® Gaudi® processors, Intel® Arc™ GPUs, and Intel® Data Center GPUs.

- Intel® AI Reference Models: This repository contains links to pre-trained models, sample scripts, best practices, and step-by-step tutorials for many popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors and Intel® Data Center GPUs.

- awesome-oneapi: A community-sourced list of awesome oneAPI and SYCL projects for solutions across a wide range of industry segments.

- Generative AI Examples: A collection of GenAI examples, such as ChatQnA and Copilot, that illustrate the pipeline capabilities of the Open Platform for Enterprise AI (OPEA) project. OPEA is an ecosystem orchestration framework for integrating performant GenAI technologies and workflows, leading to quicker GenAI adoption and business value.

Code samples are licensed under the MIT license. See License.txt for details.

Third-party program licenses can be found here: third-party-programs.txt.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for oneAPI-samples

Similar Open Source Tools

oneAPI-samples

The oneAPI-samples repository contains a collection of samples for the Intel oneAPI Toolkits. These samples cover various topics such as AI and analytics, end-to-end workloads, features and functionality, getting started samples, Jupyter notebooks, direct programming, C++, Fortran, libraries, publications, rendering toolkit, and tools. Users can find samples based on expertise, programming language, and target device. The repository structure is organized by high-level categories, and platform validation includes Ubuntu 22.04, Windows 11, and macOS. The repository provides instructions for getting samples, including cloning the repository or downloading specific tagged versions. Users can also use integrated development environments (IDEs) like Visual Studio Code. The code samples are licensed under the MIT license.

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

holohub

Holohub is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials, and benchmarks. It includes example applications, community components, package configurations, and tutorials. Users and developers of the Holoscan platform are invited to reuse and contribute to this repository. The repository provides detailed instructions on prerequisites, building, running applications, contributing, and glossary terms. It also offers a searchable catalog of available components on the Holoscan SDK User Guide website.

NeMo-Agent-Toolkit

NVIDIA NeMo Agent toolkit is a flexible, lightweight, and unifying library that allows you to easily connect existing enterprise agents to data sources and tools across any framework. It is framework agnostic, promotes reusability, enables rapid development, provides profiling capabilities, offers observability features, includes an evaluation system, features a user interface for interaction, and supports the Model Context Protocol (MCP). With NeMo Agent toolkit, users can move quickly, experiment freely, and ensure reliability across all agent-driven projects.

peridyno

PeriDyno is a CUDA-based, highly parallel physics engine targeted at providing real-time simulation of physical environments for intelligent agents. It is designed to be easy to use and integrate into existing projects, and it provides a wide range of features for simulating a variety of physical phenomena. PeriDyno is open source and available under the Apache 2.0 license.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

csghub-server

CSGHub Server is a part of the open source and reliable large model assets management platform - CSGHub. It focuses on management of models, datasets, and other LLM assets through REST API. Key features include creation and management of users and organizations, auto-tagging of model and dataset labels, search functionality, online preview of dataset files, content moderation for text and image, download of individual files, tracking of model and dataset activity data. The tool is extensible and customizable, supporting different git servers, flexible LFS storage system configuration, and content moderation options. The roadmap includes support for more Git servers, Git LFS, dataset online viewer, model/dataset auto-tag, S3 protocol support, model format conversion, and model one-click deploy. The project is licensed under Apache 2.0 and welcomes contributions.

OM1

OpenMind's OM1 is a modular AI runtime empowering developers to create and deploy multimodal AI agents across digital environments and physical robots. OM1 agents process diverse inputs like web data, social media, camera feeds, and LIDAR, enabling actions including motion, autonomous navigation, and natural conversations. The goal is to create highly capable human-focused robots that are easy to upgrade and reconfigure for different physical form factors. OM1 features a modular architecture, supports new hardware via plugins, offers web-based debugging display, and pre-configured endpoints for various services.

bedrock-engineer

Bedrock Engineer is an autonomous software development agent application that utilizes Amazon Bedrock. It allows users to customize, create/edit files, execute commands, search the web, use a knowledge base, utilize multi-agents, generate images, and more. The tool provides an interactive chat interface with AI agents, file system operations, web search capabilities, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select and customize agents, choose from various tools like file system operations, web search, Amazon Bedrock integration, and system command execution. Additionally, the tool offers features for website generation, connecting to design system data sources, AWS Step Functions ASL definition generation, diagram creation using natural language descriptions, and multi-language support.

ai-chat-android

AI Chat Android demonstrates Google's Generative AI on Android with Firebase Realtime Database. It showcases Gemini API integration, Jetpack Compose UI elements, Android architecture components with Hilt, Kotlin Coroutines for background tasks, and Firebase Realtime Database integration for real-time events. The project follows Google's official architecture guidance with a modularized structure for reusability, parallel building, and decentralized focusing.

ctakes

Apache cTAKES is a clinical Text Analysis and Knowledge Extraction System that focuses on extracting knowledge from clinical text through Natural Language Processing (NLP) techniques. It is modular and employs rule-based and machine learning methods to extract concepts such as symptoms, procedures, diagnoses, medications, and anatomy with attributes and standard codes. cTAKES can identify temporal events, dates, and times, placing events in a patient timeline. It supports various biomedical text processing tasks and can handle different types of clinical and health-related narratives using multiple data standards. cTAKES is widely used in research initiatives and encourages contributions from professionals, researchers, doctors, and students from diverse backgrounds.

radicalbit-ai-monitoring

The Radicalbit AI Monitoring Platform provides a comprehensive solution for monitoring Machine Learning and Large Language models in production. It helps proactively identify and address potential performance issues by analyzing data quality, model quality, and model drift. The repository contains files and projects for running the platform, including UI, API, SDK, and Spark components. Installation using Docker compose is provided, allowing deployment with a K3s cluster and interaction with a k9s container. The platform documentation includes a step-by-step guide for installation and creating dashboards. Community engagement is encouraged through a Discord server. The roadmap includes adding functionalities for batch and real-time workloads, covering various model types and tasks.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

PrivateDocBot

PrivateDocBot is a local LLM-powered chatbot designed for secure document interactions. It seamlessly merges Chainlit user-friendly interface with localized language models, tailored for sensitive data. The project streamlines data access by deciphering intricate user guides and extracting vital insights from complex PDF reports. Equipped with advanced technology, it offers an engaging conversational experience, redefining data interaction and empowering users with control.

For similar tasks

oneAPI-samples

The oneAPI-samples repository contains a collection of samples for the Intel oneAPI Toolkits. These samples cover various topics such as AI and analytics, end-to-end workloads, features and functionality, getting started samples, Jupyter notebooks, direct programming, C++, Fortran, libraries, publications, rendering toolkit, and tools. Users can find samples based on expertise, programming language, and target device. The repository structure is organized by high-level categories, and platform validation includes Ubuntu 22.04, Windows 11, and macOS. The repository provides instructions for getting samples, including cloning the repository or downloading specific tagged versions. Users can also use integrated development environments (IDEs) like Visual Studio Code. The code samples are licensed under the MIT license.

azure-openai-service-proxy

The Azure OpenAI Proxy service aims to simplify access to an Azure OpenAI `Playground-like` experience by supporting Azure OpenAI SDKs, LangChain, and REST endpoints for developer events, workshops, and hackathons. Users can access the service using a timebound `event code`. The solution documentation is available for reference.

spring-ai-examples

This repository contains various examples of using Spring AI. Users can clone the entire project or use SpringCLI to select individual projects and create them locally. It includes a project-catalog.yml for adding as a project catalog to Spring CLI. Users can create projects locally using 'spring boot new' or mix a project's functionality into an existing project using 'spring boot add'. Be cautious about building against newer versions of Spring Boot than your project, as it may lead to build or test errors.

python-projects-2024

Welcome to `OPEN ODYSSEY 1.0` - an Open-source extravaganza for Python and AI/ML Projects. Collaborating with MLH (Major League Hacking), this repository welcomes contributions in the form of fixing outstanding issues, submitting bug reports or new feature requests, adding new projects, implementing new models, and encouraging creativity. Follow the instructions to contribute by forking the repository, cloning it to your PC, creating a new folder for your project, and making a pull request. The repository also features a special Leaderboard for top contributors and offers certificates for all participants and mentors. Follow `OPEN ODYSSEY 1.0` on social media for swift approval of your quest.

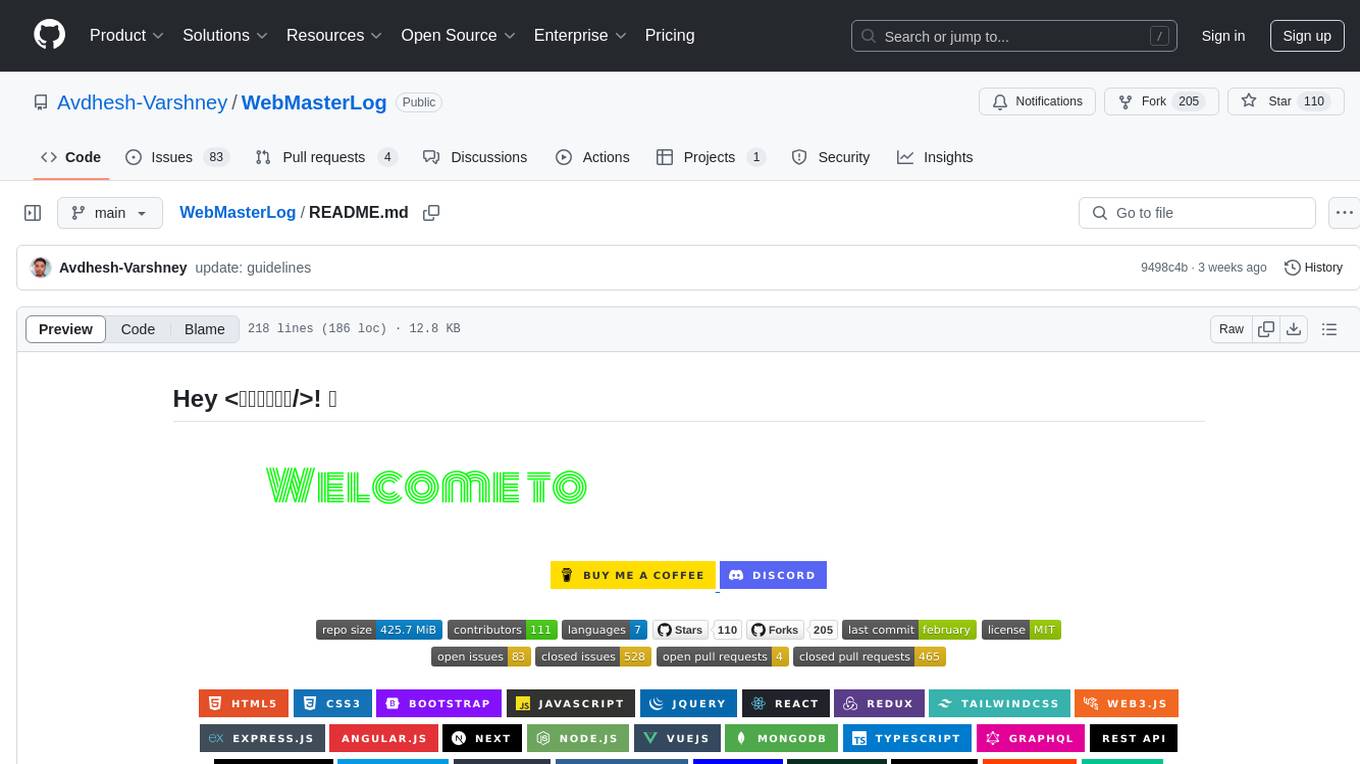

WebMasterLog

WebMasterLog is a comprehensive repository showcasing various web development projects built with front-end and back-end technologies. It highlights interactive user interfaces, dynamic web applications, and a spectrum of web development solutions. The repository encourages contributions in areas such as adding new projects, improving existing projects, updating documentation, fixing bugs, implementing responsive design, enhancing code readability, and optimizing project functionalities. Contributors are guided to follow specific guidelines for project submissions, including directory naming conventions, README file inclusion, project screenshots, and commit practices. Pull requests are reviewed based on criteria such as proper PR template completion, originality of work, code comments for clarity, and sharing screenshots for frontend updates. The repository also participates in various open-source programs like JWOC, GSSoC, Hacktoberfest, KWOC, 24 Pull Requests, IWOC, SWOC, and DWOC, welcoming valuable contributors.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

aily-blockly

Aily Blockly is a blockly IDE under the Aily Project, providing AI-assisted programming capabilities for non-professional users. It aims to integrate numerous AI capabilities to help hardware developers develop more smoothly, ultimately achieving natural language programming. The software offers features like Engineering Project Management, Library Manager, Serial Debug Tool, AI Project Generation, AI Code Generation, AI Library Conversion, Development Board Configuration Generation, and Lightning Compilation Tool. It is currently in the alpha stage, suitable for prototype verification and educational teaching.

vasttools

This repository contains a collection of tools that can be used with vastai. The tools are free to use, modify and distribute. If you find this useful and wish to donate your welcome to send your donations to the following wallets. BTC 15qkQSYXP2BvpqJkbj2qsNFb6nd7FyVcou XMR 897VkA8sG6gh7yvrKrtvWningikPteojfSgGff3JAUs3cu7jxPDjhiAZRdcQSYPE2VGFVHAdirHqRZEpZsWyPiNK6XPQKAg RVN RSgWs9Co8nQeyPqQAAqHkHhc5ykXyoMDUp USDT(ETH ERC20) 0xa5955cf9fe7af53bcaa1d2404e2b17a1f28aac4f Paypal PayPal.Me/cryptolabsZA

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.