neo

The Application Engine for the AI Era. A multi-threaded, AI-native runtime with a persistent Scene Graph, enabling AI agents to introspect and mutate the living application structure in real-time.

Stars: 3134

Neo.mjs is a revolutionary Application Engine for the web that offers true multithreading and context engineering, enabling desktop-class UI performance and AI-driven runtime mutation. It is not a framework but a complete runtime and toolchain for enterprise applications, excelling in single page apps and browser-based multi-window applications. With a pioneering Off-Main-Thread architecture, Neo.mjs ensures butter-smooth UI performance by keeping the main thread free for flawless user interactions. The latest version, v11, introduces AI-native capabilities, allowing developers to work with AI agents as first-class partners in the development process. The platform offers a suite of dedicated Model Context Protocol servers that give agents the context they need to understand, build, and reason about the code, enabling a new level of human-AI collaboration.

README:

🚀 True Multithreading Meets Context Engineering — Build Desktop-Class UIs with an AI Co-Developer.

⚡ The Mental Model: Neo.mjs is a multi-threaded application runtime where UI components are persistent objects—not ephemeral render results. This architectural shift enables desktop-class performance, multi-window orchestration, and AI-driven runtime mutation that traditional frameworks cannot achieve.

💻 Neo.mjs is not a framework; it is an Application Engine for the web. Just as Unreal Engine provides a complete runtime and toolchain for games, Neo.mjs provides a multi-threaded runtime and AI-native toolchain for enterprise applications.

Imagine web applications that never jank, no matter how complex the logic, how many real-time updates they handle, or how many browser windows they span. Neo.mjs is engineered from the ground up to deliver desktop-like fluidity and scalability. While it excels at Single Page Apps (SPAs), Neo.mjs is simply the best option for browser-based multi-window applications, operating in a fundamentally different way from traditional frameworks.

By leveraging a pioneering Off-Main-Thread (OMT) architecture, Neo.mjs ensures your UI remains butter-smooth. The main thread is kept free for one purpose: flawless user interactions and seamless DOM updates.

But performance is only half the story. With v11, Neo.mjs becomes the world's first AI-native frontend platform, designed to be developed with AI agents as first-class partners in your workflow.

Neo.mjs is an Application Engine, not a website builder. It is a mature, enterprise-grade platform with over 130,000 lines of production-ready code.

✅ Perfect For:

- Financial & Trading Platforms: processing 10k+ ticks/sec without UI freeze.

- Multi-Window Workspaces: IDEs, Control Rooms, and Dashboards that span multiple screens.

- AI-Native Interfaces: Apps where the UI structure must be mutable by AI agents at runtime.

- Enterprise SPAs: Complex logic that demands the stability of a desktop application.

❌ Not Designed For:

- Static content sites or simple blogs (Use Astro/Next.js).

- Teams looking for "React with a different syntax."

- Developers unwilling to embrace the Actor Model (Workers).

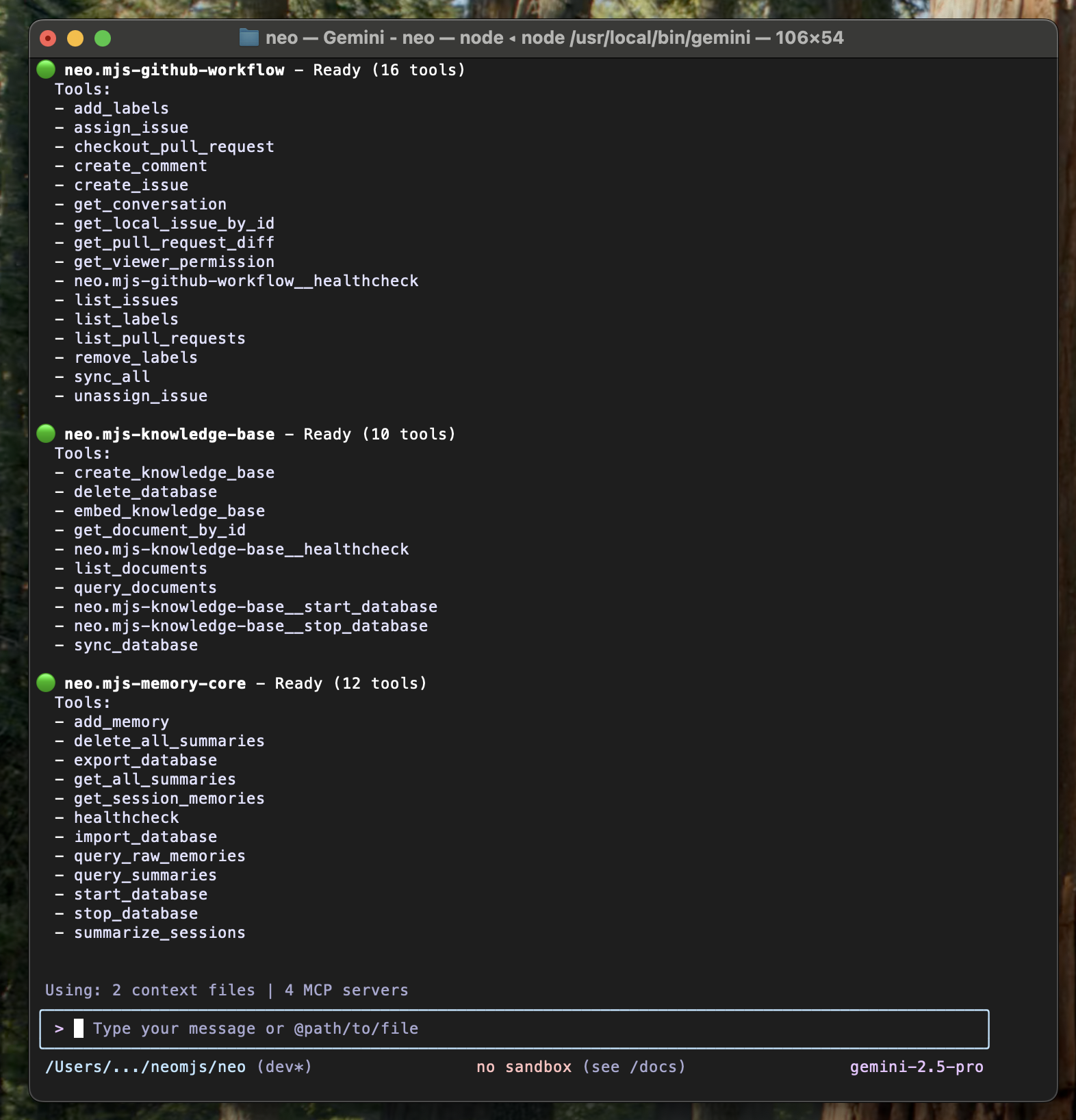

Neo.mjs v11 introduces a revolutionary approach to software development: Context Engineering. We've moved beyond simple "AI-assisted" coding to create a truly AI-native platform where AI agents are deeply integrated partners in the development process. This is made possible by a suite of dedicated Model Context Protocol (MCP) servers that give agents the context they need to understand, build, and reason about your code.

This isn't just about generating code; it's about creating a self-aware development environment that accelerates velocity, improves quality, and enables a new level of human-AI collaboration.

- 🧠 The Knowledge Base Server: Gives agents a deep, semantic understanding of your project. Powered by ChromaDB and Gemini embeddings, it allows agents to perform semantic searches across your entire codebase, documentation, and historical tickets. An agent can ask, "How does VDOM diffing work?" and get the exact source files and architectural guides relevant to the currently checked-out version.

- 💾 The Memory Core Server: Provides agents with persistent, long-term memory. Every interaction (prompt, thought process, and response) is stored, allowing the agent to learn from experience, recall past decisions, and maintain context across multiple sessions. This transforms the agent from a stateless tool into a true collaborator that grows with your project.

- 🤖 The GitHub Workflow Server: Closes the loop by enabling agents to participate directly in your project's lifecycle. It provides tools for autonomous PR reviews, issue management, and bi-directional synchronization of GitHub issues into a local, queryable set of markdown files. This removes the human bottleneck in code review and project management.

- ⚡️ The Agent Runtime: Empower your agents to act as autonomous developers. Instead of passively asking for information, agents can write and execute complex scripts using the Neo.mjs AI SDK. This enables advanced workflows like self-healing code, automated refactoring, and data migration, all running locally at machine speed.

AI agents are "blind" in traditional compiled frameworks (React, Svelte) because the code they write (JSX/Templates) is destroyed by the build step—the runtime reality (DOM nodes) looks nothing like the source.

In Neo.mjs, the map IS the territory. Because components are persistent objects in the App Worker (not ephemeral DOM nodes), the AI can query their state, methods, and inheritance chain at any time. It's like inspecting a running game character in Unreal Engine, rather than parsing the pixels on the screen. The AI sees exactly what the engine sees, enabling it to reason about state, inheritance, and topology with perfect accuracy. Furthermore, the Neural Link allows agents to query the runtime for the Ground Truth, enabling them to verify reality and solve complex, runtime-dependent problems that static analysis cannot touch.

This powerful tooling, co-created with AI agents, enabled us to ship 388 architectural enhancements in just 6 weeks. This order-of-magnitude increase in velocity proves that Context Engineering solves the complexity bottleneck. To learn more about this paradigm shift, read our blog post: 388 Tickets in 6 Weeks: Context Engineering Done Right.

Most frameworks offer a "Read-Only" view of your code to AI. Neo.mjs offers "Read/Write" access to the Runtime.

Because Neo.mjs components are persistent objects (Lego Technic) rather than transient DOM nodes (Melted Plastic), the Neural Link allows AI agents to connect directly to the engine's memory.

- Introspection: Agents can query the Scene Graph (`get_component_tree`) to understand the exact state of the UI.

- Mutation: Agents can hot-patch class methods, modify state, or re-parent components in real-time without a reload.

- Verification: Agents can simulate user events (clicks, drags) to verify their own fixes.
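The three capabilities above can be illustrated with a toy model. This is a minimal sketch in plain JavaScript, not the Neural Link API: `ToyComponent`, `ComponentRegistry`, `getComponentTree`, `reparent`, and `fire` are hypothetical names chosen for illustration (only the tool name `get_component_tree` comes from the text above).

```javascript
// Toy model of a persistent component graph. All names here are
// hypothetical; this is not the Neo.mjs or Neural Link API.
class ToyComponent {
    constructor(id, config = {}) {
        this.id        = id;
        this.config    = {...config};
        this.items     = [];   // child components
        this.parent    = null;
        this.listeners = {};   // event name -> handler
    }

    add(child) {
        // Detach from the previous parent first, so a component has one owner
        if (child.parent) {
            child.parent.items = child.parent.items.filter(item => item !== child);
        }
        child.parent = this;
        this.items.push(child);
        return child;
    }

    // Simulate a user event against the live instance
    fire(eventName, data = {}) {
        const handler = this.listeners[eventName];
        return handler ? handler.call(this, data) : undefined;
    }
}

class ComponentRegistry {
    constructor() { this.map = new Map(); }

    register(component) { this.map.set(component.id, component); return component; }

    get(id) { return this.map.get(id); }

    // Introspection: serialize the subtree below a root id
    getComponentTree(rootId) {
        const walk = cmp => ({id: cmp.id, config: {...cmp.config}, items: cmp.items.map(walk)});
        return walk(this.get(rootId));
    }

    // Mutation: move a component under a new parent without recreating it
    reparent(id, newParentId) {
        this.get(newParentId).add(this.get(id));
    }
}

const registry = new ComponentRegistry();
const viewport = registry.register(new ToyComponent('viewport'));
const sidebar  = registry.register(new ToyComponent('sidebar'));
const button   = registry.register(new ToyComponent('save-button', {text: 'Save', clicks: 0}));

viewport.add(sidebar);
viewport.add(button);

// Hot-patch behavior on the live instance
button.listeners.click = function() { this.config.clicks++; return this.config.clicks; };

registry.reparent('save-button', 'sidebar');           // mutation
const clicks = button.fire('click');                   // verification
const tree   = registry.getComponentTree('viewport');  // introspection

console.log(JSON.stringify(tree, null, 2));
```

The point of the sketch is that the button keeps its identity and its `clicks` state while it is moved and patched, which is what makes a query of the tree trustworthy after a mutation.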

Run this command:

    npx neo-app@latest

This one-liner sets up everything you need to start building with Neo.mjs, including:

- A new app workspace.

- A pre-configured app shell.

- A local development server.

- Launching your app in a new browser window — all in one go.

📖 More details? Check out our Getting Started Guide

🧑🎓 Make sure to dive into the Learning Section

Next steps:

- ⭐ Experience stunning Demos & Examples here: Neo.mjs Examples Portal

- Many more are included in the repo's apps and examples folders.

- 📘 All Blog Posts are listed here: Neo.mjs Blog

- 🤖 Get started with the AI Knowledge Base Quick Start Guide.

Frameworks compile away. Engines stay alive.

In traditional frameworks, the source code is a blueprint that gets destroyed to create the DOM. In Neo.mjs, the source code instantiates a Scene Graph—a hierarchy of persistent objects that lives in the App Worker.

- Lego Technic vs. Duplo: Most framework components are like "melted plastic"—once rendered, they lose their identity. Neo.mjs components are like Lego Technic: precision-engineered parts that retain their state, methods, and relationships at runtime.

- The JSON Protocol: Because every component adheres to a strict serialization protocol (toJSON), the entire application behaves as a mutable graph.

- Runtime Permutation: This allows AI agents (or you) to inspect, dismantle, and reconfigure the application on the fly—changing layouts, moving dashboards between windows, or hot-swapping themes without a reload.

It's not just a view library; it's a construction kit for adaptive, living applications.
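As a rough illustration of the JSON Protocol and Runtime Permutation points above, the following sketch shows persistent nodes that serialize through `toJSON()` and can be rearranged while alive. `SceneNode` and its properties (`ntype`, `theme`, `items`) are assumptions made for this example and are not taken from the Neo.mjs source; the only library behavior relied on is that `JSON.stringify()` calls `toJSON()` on objects that define it.

```javascript
// Hypothetical sketch: persistent nodes that serialize via toJSON().
// Not the Neo.mjs implementation; class and property names are illustrative.
class SceneNode {
    constructor({id, ntype, theme = 'light', items = []}) {
        this.id    = id;
        this.ntype = ntype;
        this.theme = theme;
        this.items = items;
    }

    // JSON.stringify() calls toJSON() automatically on every nested node
    toJSON() {
        return {id: this.id, ntype: this.ntype, theme: this.theme, items: this.items};
    }
}

const chart     = new SceneNode({id: 'chart-1', ntype: 'chart'});
const windowOne = new SceneNode({id: 'window-1', ntype: 'viewport', items: [chart]});
const windowTwo = new SceneNode({id: 'window-2', ntype: 'viewport'});
const app       = new SceneNode({id: 'app', ntype: 'app', items: [windowOne, windowTwo]});

// Snapshot of the whole graph as plain JSON
const before = JSON.parse(JSON.stringify(app));

// Runtime permutation: move the same chart instance to the other window
// and swap its theme. No node is destroyed or recreated.
windowOne.items.splice(windowOne.items.indexOf(chart), 1);
windowTwo.items.push(chart);
chart.theme = 'dark';

const after = JSON.parse(JSON.stringify(app));

console.log(before.items[0].items.length, after.items[1].items[0].theme); // prints: 1 dark
```

Because the serialized form is derived from the live objects on demand, an agent (or a developer) can take a snapshot, change the graph, and take another snapshot to confirm the change.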

You're building F1 apps with Toyota parts.

Traditional frameworks are reliable general-purpose tools (Toyota), sacrificing raw performance for mass adoption. Neo.mjs is precision-engineered for extreme performance (F1), designed for applications where "good enough" isn't acceptable.

- Eliminate UI Freezes with True Multithreading:

  "The browser's main thread should be treated like a neurosurgeon: only perform precise, scheduled operations with zero distractions." (Neo.mjs Core Philosophy)

  Neo.mjs's OMT architecture inherently prevents UI freezes. With our optimized rendering pipeline, your UI will remain consistently responsive, even during intense data processing. It achieves an astonishing rate of over 40,000 delta updates per second in optimized environments, with its potential limited only by user interaction, not the platform.

- Unprecedented Velocity with an AI-Native Workflow: The integrated MCP servers provide a "context-rich" environment where AI agents can work alongside human developers. This enables autonomous code reviews, deep codebase analysis, and a shared understanding of project history, dramatically accelerating development and solving the "bus factor" problem for complex projects.

- Build Desktop-Class, Multi-Window Applications: Neo.mjs is the premier solution for building complex, multi-window web applications like trading platforms, browser-based IDEs, Outlook-style email clients, and multi-screen LLM interfaces. Its shared worker architecture allows a single application instance to run across multiple browser windows, with real-time state synchronization and the ability to move components between windows seamlessly.

- Unmatched Developer Experience: Transpilation-Free ESM: Say goodbye to complex build steps. Neo.mjs apps run natively as ES Modules directly in the browser. This means zero builds or transpilation in dev mode, offering instant reloads and an unparalleled debugging experience where what you write is what you debug.

- Inherent Security by Design: By prioritizing direct DOM API manipulation over string-based methods (like `innerHTML`), Neo.mjs fundamentally reduces the attack surface for vulnerabilities like Cross-Site Scripting (XSS), building a more robust and secure application from the ground up.

Imagine a developer building a stock trading app with live feeds updating every millisecond. Traditional frameworks often choke, freezing the UI under the data flood. With Neo.mjs, the heavy lifting happens in worker threads, keeping the main thread free. Traders get real-time updates with zero lag, and the app feels like a native desktop tool. Now, imagine extending this with multiple synchronized browser windows, each displaying different real-time views, all remaining butter-smooth. That’s Neo.mjs in action — solving problems others can’t touch.

- The Threading Subsystem: A dedicated orchestrator that isolates application logic (App Worker) from rendering logic (Main Thread) and data processing (Data Worker). This ensures the main thread is always free to respond to user input, eliminating UI jank at an architectural level.

- The Rendering Pipeline: Just as a game engine sends draw calls to the GPU, the Neo.mjs App Worker sends compressed JSON deltas to the Main Thread. This Asymmetric VDOM approach is faster, more secure, and more AI-friendly than main-thread diffing.

- The Scene Graph (Object Permanence): Neo.mjs components are live entities in the App Worker that behave like nodes in a Scene Graph. They retain their state and identity even when detached from the DOM. This enables Runtime Permutation: the ability to drag active dashboards between windows or re-parent complex tools at runtime without losing context.

- The Component System: Neo.mjs offers two powerful component models. Functional Components, introduced more recently, provide an easier onboarding experience and a modern, hook-based development style (`defineComponent`, `useConfig`, `useEvent`), similar to other popular frameworks. They are ideal for simpler, more declarative UIs. For advanced use cases requiring granular control over VDOM changes and deeper integration with the engine's lifecycle, Class-Based Components offer superior power and flexibility, albeit with slightly more code overhead. Both models seamlessly interoperate, allowing you to choose the right tool for each part of your application while benefiting from the unparalleled performance of our multi-threaded architecture. Best of all, our functional components are free from the "memoization tax" (`useMemo`, `useCallback`) that plagues traditional UI libraries.

- The State Subsystem: Leveraging `Neo.state.Provider`, Neo.mjs offers natively integrated, hierarchical state management. Components declare their data needs via a concise `bind` config. These `bind` functions act as powerful inline formulas, allowing Components to automatically react to changes and combine data from multiple state providers within the component hierarchy. This ensures dynamic, efficient updates, from simple property changes to complex computed values, all handled off the main thread.

      // Example: A component binding its text to state
      static config = {
          bind: {
              // 'data' here represents the combined state from all parent providers
              myComputedText: data => `User: ${data.userName || 'Guest'} | Status: ${data.userStatus || 'Offline'}`
          }
      }

- The Logic Subsystem: View controllers ensure a clear separation of concerns, isolating business logic from UI components for easier maintenance, testing, and team collaboration.

- Multi-Window & Single-Page Applications (SPAs): Beyond traditional SPAs, Neo.mjs excels at complex multi-window applications. Its unique architecture, powered by seamless cross-worker communication and extensible Main Thread addons, enables truly native-like, persistent experiences across browser windows.

- The Module System: Neo.mjs apps run with zero runtime dependencies, just a few dev dependencies for tooling. This means smaller bundles, fewer conflicts, and a simpler dependency graph.

- Unparalleled Debugging Experience: Benefit from Neo.mjs's built-in debugging capabilities. Easily inspect the full component tree across workers, live-modify component configurations directly in the browser console, and observe real-time UI updates, all without complex tooling setup.

- Asymmetric VDOM & JSON Blueprints: Instead of a complex, class-based VNode tree, your application logic deals with simple, serializable JSON objects. These blueprints are sent to a dedicated VDOM worker for high-performance diffing, ensuring your main thread is never blocked by rendering calculations. This architecture is not only faster but also inherently more secure and easier for AI tools to generate and manipulate.

- Async-Aware Component Lifecycle: With the `initAsync()` lifecycle method, components can handle asynchronous setup (like fetching data or lazy-loading modules) before they are considered "ready." This eliminates entire classes of race conditions and UI flicker, allowing you to build complex, data-dependent components with confidence.
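To make the State Subsystem's `bind` formulas more concrete, here is a minimal sketch of hierarchical state in plain JavaScript. `ToyProvider` and its methods (`getCombinedData`, `bind`, `setData`, `refresh`) are hypothetical stand-ins written for this example; they are not the `Neo.state.Provider` API, and the sketch simply re-runs every formula on each change instead of tracking dependencies.

```javascript
// Hypothetical sketch of hierarchical state with bind formulas.
// ToyProvider is an illustrative stand-in, not Neo.state.Provider.
class ToyProvider {
    constructor(data = {}, parent = null) {
        this.data     = {...data};
        this.parent   = parent;
        this.children = [];
        this.bindings = []; // entries of {target, key, formula}

        if (parent) {
            parent.children.push(this);
        }
    }

    // Combined view: own data shadows data from parent providers
    getCombinedData() {
        return {...(this.parent ? this.parent.getCombinedData() : {}), ...this.data};
    }

    // Register a formula and run it once immediately
    bind(target, key, formula) {
        this.bindings.push({target, key, formula});
        target[key] = formula(this.getCombinedData());
    }

    // Update state, then re-run formulas here and in all descendant providers
    setData(patch) {
        Object.assign(this.data, patch);
        this.refresh();
    }

    refresh() {
        const data = this.getCombinedData();
        this.bindings.forEach(({target, key, formula}) => { target[key] = formula(data); });
        this.children.forEach(child => child.refresh());
    }
}

const appState  = new ToyProvider({userName: null});
const viewState = new ToyProvider({userStatus: 'Offline'}, appState);
const label     = {}; // stands in for a component instance

viewState.bind(label, 'myComputedText',
    data => `User: ${data.userName || 'Guest'} | Status: ${data.userStatus || 'Offline'}`);

const initialText = label.myComputedText;   // "User: Guest | Status: Offline"

appState.setData({userName: 'Ada'});        // change in a parent provider
viewState.setData({userStatus: 'Online'});  // change in the closest provider

console.log(label.myComputedText);          // "User: Ada | Status: Online"
```

The formula receives one merged `data` object, so the component does not need to know which provider in the hierarchy owns `userName` or `userStatus`.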

Diagram: A high-level overview of Neo.mjs's multi-threaded architecture (Main Thread, App Worker, VDom Worker, Canvas Worker, Data Worker, Service Worker, Backend). Optional workers fade in on hover on neomjs.com.

Just as a Game Engine consists of a Runtime (what players play) and an Editor (what developers use), Neo.mjs is split into two complementary layers.

Runs in the Browser. Production-Ready. Zero-Bloat.

This is the high-performance engine that powers your application. It operates on a multi-threaded architecture, isolating the main thread to ensure 60fps fluidity even under heavy load.

- App Worker: Runs your entire application logic, state, and virtual DOM diffing.

- Multi-Window Orchestration: A single engine instance can power multiple browser windows via SharedWorkers. Components can be dragged, dropped, and moved between windows seamlessly—essential for complex "Control Room" interfaces.

- Main Thread: Treated as a "dumb" renderer, only applying efficient DOM patches.

- Object Permanence: Components are persistent entities that can be moved, detached, and re-attached without losing state.
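The division of labor described above (a worker computes changes, the main thread only applies patches) can be sketched as follows. This is an illustration under stated assumptions, not the Neo.mjs delta protocol: the delta shape (`updateText`, `insertNode`, `removeNode`), the vnode keys (`id`, `tag`, `text`, `cn`), and the function names are invented for the example, and the sketch ignores attributes and child ordering.

```javascript
// Hypothetical sketch of "worker computes deltas, main thread applies them".
// The delta format and function names are illustrative, not the Neo.mjs protocol.

// Flatten a JSON vdom tree into a Map of id -> {node, parentId}
function indexTree(node, parentId = null, map = new Map()) {
    map.set(node.id, {node, parentId});
    (node.cn || []).forEach(child => indexTree(child, node.id, map));
    return map;
}

// "Worker side": compare two JSON trees and emit a compact list of deltas
function createDeltas(oldTree, newTree) {
    const oldMap = indexTree(oldTree),
          newMap = indexTree(newTree),
          deltas = [];

    for (const [id, {node, parentId}] of newMap) {
        const previous = oldMap.get(id);

        if (!previous) {
            // Insert only the topmost new node; its subtree travels with it
            if (oldMap.has(parentId)) {
                deltas.push({action: 'insertNode', parentId, vnode: node});
            }
        } else if (previous.node.text !== node.text) {
            deltas.push({action: 'updateText', id, text: node.text});
        }
    }

    for (const [id, {parentId}] of oldMap) {
        // Remove only the topmost missing node
        if (!newMap.has(id) && newMap.has(parentId)) {
            deltas.push({action: 'removeNode', id});
        }
    }

    return deltas;
}

// "Main thread side": a dumb renderer that applies deltas without diffing.
// A plain object tree stands in for the real DOM here.
function applyDeltas(dom, deltas) {
    const find = (node, id) => {
        if (node.id === id) return node;
        for (const child of node.cn || []) {
            const match = find(child, id);
            if (match) return match;
        }
        return null;
    };
    const findParent = (node, id) => {
        for (const child of node.cn || []) {
            if (child.id === id) return node;
            const match = findParent(child, id);
            if (match) return match;
        }
        return null;
    };

    for (const delta of deltas) {
        if (delta.action === 'updateText') {
            find(dom, delta.id).text = delta.text;
        } else if (delta.action === 'insertNode') {
            const parent = find(dom, delta.parentId);
            parent.cn = parent.cn || [];
            parent.cn.push(JSON.parse(JSON.stringify(delta.vnode)));
        } else if (delta.action === 'removeNode') {
            const parent = findParent(dom, delta.id);
            parent.cn = parent.cn.filter(child => child.id !== delta.id);
        }
    }
    return dom;
}

const oldTree = {id: 'list', tag: 'ul', cn: [
    {id: 'row-1', tag: 'li', text: 'Row A: 10'},
    {id: 'row-2', tag: 'li', text: 'Row B: 20'}
]};
const newTree = {id: 'list', tag: 'ul', cn: [
    {id: 'row-1', tag: 'li', text: 'Row A: 11'},
    {id: 'row-3', tag: 'li', text: 'Row C: 30'}
]};

const deltas  = createDeltas(oldTree, newTree);
// Deltas are plain JSON, so they could cross a worker boundary via postMessage()
const wire    = JSON.stringify(deltas);
const domTree = applyDeltas(JSON.parse(JSON.stringify(oldTree)), JSON.parse(wire));

console.log(wire);
```

Only three small messages describe the change, and the applying side never needs to see or compare the full trees.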

Runs in Node.js. Dev-Time Only. AI-Native.

This is the "Editor" for the AI era. It connects your development environment to the running engine, allowing AI agents to act as first-class collaborators.

- The Neural Link: A bi-directional bridge allowing agents to "see" and "mutate" the live runtime graph.

- MCP Servers: Provide agents with deep context (Knowledge Base) and memory (Memory Core).

- Context Engineering: A structured workflow where agents help you build the engine by understanding its architecture.

The Result: You don't just write code; you cultivate a living system with an AI partner that understands exactly how the engine works.

To appreciate the scope of Neo.mjs, it's important to understand its scale. This is not a micro-library; it's a comprehensive, enterprise-ready platform representing over a decade of architectural investment. Neo.mjs is an innovation factory.

The stats below, from January 2026, provide a snapshot of the ecosystem. For a deeper dive, you can explore the full Codebase Overview.

- ~45,000 lines of core platform source code

- ~36,000 lines across hundreds of working examples and flagship applications

- ~12,000 lines of production-grade theming

- ~14,000 lines of dedicated AI-native infrastructure

- ~53,000 lines of detailed JSDoc documentation

Total: Over 170,000 lines of curated code and documentation.

This is not a small library—it's a complete ecosystem with more source code than many established frameworks, designed for the most demanding use cases.

The v10 release marked a significant evolution of the Neo.mjs core, introducing a new functional component model and a revolutionary two-tier reactivity system. These principles form the bedrock of the framework today. We've published a five-part blog series that dives deep into this architecture:

- A Frontend Love Story: Why the Strategies of Today Won't Build the Apps of Tomorrow
  - An introduction to the core problems in modern frontend development and the architectural vision of Neo.mjs.

- Deep Dive: Named vs. Anonymous State - A New Era of Component Reactivity
  - Explore the powerful two-tier reactivity system that makes the "memoization tax" a thing of the past.

- Beyond Hooks: A New Breed of Functional Components for a Multi-Threaded World
  - Discover how functional components in a multi-threaded world eliminate the trade-offs of traditional hooks.

- Deep Dive: The VDOM Revolution - JSON Blueprints & Asymmetric Rendering
  - Learn how our off-thread VDOM engine uses simple JSON blueprints for maximum performance and security.

- Deep Dive: The State Provider Revolution
  - A look into the powerful, hierarchical state management system that scales effortlessly.

Neo.mjs’s class config system allows you to define and manage classes in a declarative and reusable way. This simplifies class creation, reduces boilerplate code, and improves maintainability.

    import Component from '../../src/component/Base.mjs';

    /**
     * Lives within the App Worker
     * @class MyComponent
     * @extends Neo.component.Base
     */
    class MyComponent extends Component {
        static config = {
            className   : 'MyComponent',
            myConfig_   : 'defaultValue', // Reactive property
            domListeners: {               // Direct DOM event binding
                click: 'onClick'
            }
        }

        // Triggered automatically by the config setter when myConfig changes
        afterSetMyConfig(value, oldValue) {
            console.log('myConfig changed:', value, oldValue);
        }

        // Executed in the App Worker. The Main Thread (UI) never sees this logic.
        onClick(data) {
            console.log('Clicked!', data);
        }
    }

    export default Neo.setupClass(MyComponent);

    // -------------------------------------------------------
    // The Engine allows Runtime Mutation:
    // An AI agent (or you) can inspect and modify this instance LIVE without a reload.
    // -------------------------------------------------------

    // Agent Command (via Neural Link):
    Neo.get('my-component-id').set({
        myConfig: 'newValue',
        style   : { color: 'red' } // Instant update via OMT Rendering Pipeline. No build step. No reload.
    });

For each config property ending with an underscore (_), Neo.mjs automatically generates a getter and a setter on the class prototype. These setters ensure that changes trigger corresponding lifecycle hooks, providing a powerful, built-in reactive system:

- `beforeGetMyConfig(value)` (Optional) Called before the config value is returned via its getter, allowing for last-minute transformations.

- `beforeSetMyConfig(value, oldValue)` (Optional) Called before the config value is set, allowing you to intercept, validate, or modify the new value. Returning undefined will cancel the update.

- `afterSetMyConfig(value, oldValue)` (Optional) Called after the config value has been successfully set and a change has been detected, allowing for side effects or reactions to the new value.

For more details, check out the Class Config System documentation.
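To show how trailing-underscore configs and their hooks could fit together, here is a simplified re-implementation of the pattern in plain JavaScript. It is a sketch under assumptions, not the code behind `Neo.setupClass()`: `setupToyClass`, `reactiveDefaults`, and `MyToyComponent` are hypothetical names, and only the hook naming convention (`beforeGet…`, `beforeSet…`, `afterSet…`) and the "returning undefined cancels the update" rule are taken from the description above.

```javascript
// Simplified, hypothetical re-implementation of the pattern described above.
// It is not Neo.setupClass(); it only mimics the hook naming convention.
const capitalize = name => name[0].toUpperCase() + name.slice(1);

function setupToyClass(cls) {
    const defaults = {};

    for (const [rawKey, defaultValue] of Object.entries(cls.config || {})) {
        if (!rawKey.endsWith('_')) {
            // Non-reactive config: a plain prototype default
            cls.prototype[rawKey] = defaultValue;
            continue;
        }

        const key      = rawKey.slice(0, -1),
              hookName = capitalize(key),
              store    = Symbol(key); // backing field for the stored value

        defaults[key] = defaultValue;

        Object.defineProperty(cls.prototype, key, {
            configurable: true,
            get() {
                const value = this[store];
                const hook  = this[`beforeGet${hookName}`];
                return typeof hook === 'function' ? hook.call(this, value) : value;
            },
            set(value) {
                const oldValue = this[store];
                const before   = this[`beforeSet${hookName}`];

                if (typeof before === 'function') {
                    value = before.call(this, value, oldValue);
                    if (value === undefined) return; // hook cancelled the update
                }

                if (value !== oldValue) {
                    this[store] = value;
                    const after = this[`afterSet${hookName}`];
                    if (typeof after === 'function') after.call(this, value, oldValue);
                }
            }
        });
    }

    cls.reactiveDefaults = defaults;
    return cls;
}

class MyToyComponent {
    static config = {
        className: 'MyToyComponent',
        myConfig_: 'defaultValue'
    }

    log = [];

    constructor() {
        // Apply defaults through the generated setters so the hooks fire
        Object.assign(this, this.constructor.reactiveDefaults);
    }

    // Validate and normalize: non-strings are rejected by returning undefined
    beforeSetMyConfig(value, oldValue) {
        return typeof value === 'string' ? value.trim() : undefined;
    }

    afterSetMyConfig(value, oldValue) {
        this.log.push([value, oldValue]);
    }
}

setupToyClass(MyToyComponent);

const cmp = new MyToyComponent();
cmp.myConfig = '  newValue ';  // trimmed by beforeSetMyConfig, then stored
cmp.myConfig = 42;             // cancelled by beforeSetMyConfig
cmp.myConfig = 'newValue';     // no change detected, afterSetMyConfig is skipped

console.log(cmp.myConfig, cmp.log);
```

Keeping the stored value behind a generated accessor is what lets a plain assignment such as `cmp.myConfig = …` run validation and side effects without any extra call at the use site.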

Have a question or want to connect with the community? We have two channels to help you out.

- 💬 Discord (Recommended for Questions & History): Our primary community hub. All conversations are permanently archived and searchable, making it the best place to ask questions and find past answers.

- ⚡️ Slack (For Real-Time Chat): Perfect for quick, real-time conversations. Please note that the free version's history is temporary (messages are deleted after 90 days).

To understand the long-term goals and future direction of the project, please see our strategic documents:

- ✨ The Vision: Learn about the core philosophy and the "why" behind our architecture.

- 🗺️ The Roadmap: See what we're working on now and what's planned for the future.

🛠️ Want to contribute? Check out our Contributing Guide.

Copyright (c) 2015 - today, Tobias Uhlig

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for neo

Similar Open Source Tools

neo

Neo.mjs is a revolutionary Application Engine for the web that offers true multithreading and context engineering, enabling desktop-class UI performance and AI-driven runtime mutation. It is not a framework but a complete runtime and toolchain for enterprise applications, excelling in single page apps and browser-based multi-window applications. With a pioneering Off-Main-Thread architecture, Neo.mjs ensures butter-smooth UI performance by keeping the main thread free for flawless user interactions. The latest version, v11, introduces AI-native capabilities, allowing developers to work with AI agents as first-class partners in the development process. The platform offers a suite of dedicated Model Context Protocol servers that give agents the context they need to understand, build, and reason about the code, enabling a new level of human-AI collaboration.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

kairon

Kairon is an open-source conversational digital transformation platform that helps build LLM-based digital assistants at scale. It provides a no-coding web interface for adapting, training, testing, and maintaining AI assistants. Kairon focuses on pre-processing data for chatbots, including question augmentation, knowledge graph generation, and post-processing metrics. It offers end-to-end lifecycle management, low-code/no-code interface, secure script injection, telemetry monitoring, chat client designer, analytics module, and real-time struggle analytics. Kairon is suitable for teams and individuals looking for an easy interface to create, train, test, and deploy digital assistants.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

deepdoctection

**deep** doctection is a Python library that orchestrates document extraction and document layout analysis tasks using deep learning models. It does not implement models but enables you to build pipelines using highly acknowledged libraries for object detection, OCR and selected NLP tasks and provides an integrated framework for fine-tuning, evaluating and running models. For more specific text processing tasks use one of the many other great NLP libraries. **deep** doctection focuses on applications and is made for those who want to solve real world problems related to document extraction from PDFs or scans in various image formats. **deep** doctection provides model wrappers of supported libraries for various tasks to be integrated into pipelines. Its core function does not depend on any specific deep learning library. Selected models for the following tasks are currently supported: * Document layout analysis including table recognition in Tensorflow with **Tensorpack**, or PyTorch with **Detectron2**, * OCR with support of **Tesseract**, **DocTr** (Tensorflow and PyTorch implementations available) and a wrapper to an API for a commercial solution, * Text mining for native PDFs with **pdfplumber**, * Language detection with **fastText**, * Deskewing and rotating images with **jdeskew**. * Document and token classification with all LayoutLM models provided by the **Transformer library**. (Yes, you can use any LayoutLM-model with any of the provided OCR-or pdfplumber tools straight away!). * Table detection and table structure recognition with **table-transformer**. * There is a small dataset for token classification available and a lot of new tutorials to show, how to train and evaluate this dataset using LayoutLMv1, LayoutLMv2, LayoutXLM and LayoutLMv3. * Comprehensive configuration of **analyzer** like choosing different models, output parsing, OCR selection. Check this notebook or the docs for more infos. 
* Document layout analysis and table recognition now runs with **Torchscript** (CPU) as well and **Detectron2** is not required anymore for basic inference. * [**new**] More angle predictors for determining the rotation of a document based on **Tesseract** and **DocTr** (not contained in the built-in Analyzer). * [**new**] Token classification with **LiLT** via **transformers**. We have added a model wrapper for token classification with LiLT and added a some LiLT models to the model catalog that seem to look promising, especially if you want to train a model on non-english data. The training script for LayoutLM can be used for LiLT as well and we will be providing a notebook on how to train a model on a custom dataset soon. **deep** doctection provides on top of that methods for pre-processing inputs to models like cropping or resizing and to post-process results, like validating duplicate outputs, relating words to detected layout segments or ordering words into contiguous text. You will get an output in JSON format that you can customize even further by yourself. Have a look at the **introduction notebook** in the notebook repo for an easy start. Check the **release notes** for recent updates. **deep** doctection or its support libraries provide pre-trained models that are in most of the cases available at the **Hugging Face Model Hub** or that will be automatically downloaded once requested. For instance, you can find pre-trained object detection models from the Tensorpack or Detectron2 framework for coarse layout analysis, table cell detection and table recognition. Training is a substantial part to get pipelines ready on some specific domain, let it be document layout analysis, document classification or NER. **deep** doctection provides training scripts for models that are based on trainers developed from the library that hosts the model code. 
Moreover, **deep** doctection hosts code to some well established datasets like **Publaynet** that makes it easy to experiment. It also contains mappings from widely used data formats like COCO and it has a dataset framework (akin to **datasets** so that setting up training on a custom dataset becomes very easy. **This notebook** shows you how to do this. **deep** doctection comes equipped with a framework that allows you to evaluate predictions of a single or multiple models in a pipeline against some ground truth. Check again **here** how it is done. Having set up a pipeline it takes you a few lines of code to instantiate the pipeline and after a for loop all pages will be processed through the pipeline.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.
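
To make the idea of timestamped blob storage with a retention policy concrete, here is a minimal in-memory sketch. It is a toy illustration, not ReductStore's API: the class `BlobBucket`, its methods, and the first-in, first-out size quota are all assumptions chosen for the example, and the description above does not say which retention policies ReductStore actually implements.

```python
from bisect import bisect_left, insort
from typing import List, Tuple


class BlobBucket:
    """Toy in-memory bucket (hypothetical): blobs keyed by timestamp, capped by a byte quota."""

    def __init__(self, quota_bytes: int):
        self.quota_bytes = quota_bytes
        self._records: List[Tuple[int, bytes]] = []  # kept sorted by timestamp
        self._size = 0

    def write(self, timestamp_us: int, blob: bytes) -> None:
        """Store a blob, then evict the oldest records while over quota."""
        insort(self._records, (timestamp_us, blob))
        self._size += len(blob)
        # Assumed FIFO retention: drop oldest records until the quota is met.
        while self._size > self.quota_bytes and len(self._records) > 1:
            _, dropped = self._records.pop(0)
            self._size -= len(dropped)

    def query(self, start_us: int, stop_us: int) -> List[Tuple[int, bytes]]:
        """Return records with start_us <= timestamp < stop_us."""
        lo = bisect_left(self._records, (start_us,))
        hi = bisect_left(self._records, (stop_us,))
        return self._records[lo:hi]
```

Writing three 4-byte blobs at timestamps 1, 2, and 3 into a bucket with a 10-byte quota evicts the oldest one, so a query over the full range returns only the records at timestamps 2 and 3.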

llama_deploy

llama_deploy is an async-first framework for deploying, scaling, and productionizing agentic multi-service systems based on workflows from llama_index. It allows building workflows in llama_index and deploying them with minimal changes to code. The system consists of services that continuously process incoming tasks, a control plane that manages state and services, an orchestrator that decides how each task is handled, and fault tolerance mechanisms. It is designed for high-concurrency scenarios, enabling real-time and high-throughput applications.
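
The division of labor between services, control plane, and orchestrator can be sketched with plain `asyncio`. This is an illustrative toy under stated assumptions, not llama_deploy's API: `ControlPlane`, `orchestrate`, `service_loop`, and the prefix-based routing rule are hypothetical names invented for the example, and fault tolerance is left out.

```python
import asyncio
from typing import Awaitable, Callable, Dict


def orchestrate(payload: str) -> str:
    """Hypothetical routing rule: choose a service name from the payload prefix."""
    return "math" if payload.startswith("add:") else "echo"


class ControlPlane:
    """Toy control plane: keeps one queue per service and the task results."""

    def __init__(self) -> None:
        self.queues: Dict[str, asyncio.Queue] = {}
        self.results: Dict[str, str] = {}

    def register(self, name: str) -> asyncio.Queue:
        self.queues[name] = asyncio.Queue()
        return self.queues[name]

    def submit(self, task_id: str, payload: str) -> None:
        # Ask the orchestrator where the task goes, then enqueue it there.
        self.queues[orchestrate(payload)].put_nowait((task_id, payload))


async def service_loop(queue: asyncio.Queue,
                       handler: Callable[[str], Awaitable[str]],
                       control_plane: ControlPlane) -> None:
    """Service worker: processes tasks from its queue until cancelled."""
    while True:
        task_id, payload = await queue.get()
        control_plane.results[task_id] = await handler(payload)
        queue.task_done()


async def echo(payload: str) -> str:
    return payload.upper()


async def add(payload: str) -> str:
    left, right = payload[len("add:"):].split("+")
    return str(int(left) + int(right))


async def main() -> Dict[str, str]:
    cp = ControlPlane()
    workers = [
        asyncio.create_task(service_loop(cp.register("echo"), echo, cp)),
        asyncio.create_task(service_loop(cp.register("math"), add, cp)),
    ]
    cp.submit("t1", "hello")
    cp.submit("t2", "add:2+3")
    # Wait until every queued task has been processed, then stop the workers.
    await asyncio.gather(*(q.join() for q in cp.queues.values()))
    for worker in workers:
        worker.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return cp.results
```

Running `asyncio.run(main())` routes the first task to the echo service and the second to the math service. The queues are created inside `main()` so that they belong to the running event loop.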

AgentPilot

Agent Pilot is an open source desktop app for creating, managing, and chatting with AI agents. It features multi-agent, branching chats with various providers through LiteLLM. Users can combine models from different providers, configure interactions, and run code using the built-in Open Interpreter. The tool allows users to create agents, manage chats, work with multi-agent workflows, branching workflows, context blocks, tools, and plugins. It also supports a code interpreter, scheduler, voice integration, and integration with various AI providers. Contributions to the project are welcome, and users can report known issues for improvement.

OM1

OpenMind's OM1 is a modular AI runtime that lets developers create and deploy multimodal AI agents across digital environments and physical robots. OM1 agents process diverse inputs such as web data, social media, camera feeds, and LIDAR, and can act through motion, autonomous navigation, and natural conversation. The goal is to create highly capable human-focused robots that are easy to upgrade and reconfigure for different physical form factors. OM1 features a modular architecture, supports new hardware via plugins, and offers a web-based debugging display and pre-configured endpoints for various services.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

AgentUp

AgentUp, currently under active development, is a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents through configuration, ensuring consistent behavior across environments. The tool offers a secure design, a configuration-driven architecture, an extensible ecosystem for customizations, agent-to-agent discovery, an asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types, such as reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

BehaviorTree.CPP

BehaviorTree.CPP is a C++17 library that provides a framework to create BehaviorTrees. It was designed to be flexible, easy to use, reactive and fast. Even if our main use-case is robotics, you can use this library to build AI for games, or to replace Finite State Machines. There are a few features that make BehaviorTree.CPP unique when compared to other implementations: It makes asynchronous Actions, i.e. non-blocking ones, a first-class citizen. You can build reactive behaviors that execute multiple Actions concurrently (orthogonality). Trees are defined using a Domain Specific scripting language (based on XML) and can be loaded at run-time; in other words, even if written in C++, the morphology of the Trees is not hard-coded. You can statically link your custom TreeNodes or convert them into plugins and load them at run-time. It provides a type-safe and flexible mechanism to do Dataflow between Nodes of the Tree. It includes a logging/profiling infrastructure that allows the user to visualize, record, replay and analyze state transitions.
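
For readers new to behavior trees, the tick-based control flow with a non-blocking action can be sketched in a few lines of Python. This is a language-neutral illustration of the general technique, not BehaviorTree.CPP's C++ API or its XML format: the classes `Action`, `CountdownAction`, `Sequence`, and `Fallback` and the door scenario are assumptions made for the example.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Action:
    """Leaf node wrapping a callable that returns a Status on each tick."""

    def __init__(self, name: str, fn: Callable[[], Status]):
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class CountdownAction:
    """Non-blocking leaf: reports RUNNING for `ticks` ticks, then SUCCESS."""

    def __init__(self, ticks: int):
        self.remaining = ticks

    def tick(self) -> Status:
        if self.remaining > 0:
            self.remaining -= 1
            return Status.RUNNING
        return Status.SUCCESS


class Sequence:
    """Ticks children in order; returns at the first child that is not SUCCESS."""

    def __init__(self, children: List):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; returns at the first child that is not FAILURE."""

    def __init__(self, children: List):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def build_tree() -> Fallback:
    # Try to walk through an open door; otherwise open it (takes two ticks) first.
    return Fallback([
        Sequence([Action("door_is_open", lambda: Status.FAILURE),
                  Action("walk_through", lambda: Status.SUCCESS)]),
        Sequence([CountdownAction(2),
                  Action("walk_through", lambda: Status.SUCCESS)]),
    ])


def run_until_done(tree, max_ticks: int = 10) -> List[Status]:
    """Tick the root repeatedly until it stops reporting RUNNING."""
    history = []
    for _ in range(max_ticks):
        status = tree.tick()
        history.append(status)
        if status is not Status.RUNNING:
            break
    return history
```

Calling `run_until_done(build_tree())` ticks the root three times: the tree reports RUNNING twice while the countdown action is in progress, then SUCCESS. Because the caller regains control after every tick, a long-running action never blocks the rest of the tree.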

For similar tasks

ai-gradio

ai-gradio is a Python package that simplifies the creation of machine learning apps using various models and providers such as OpenAI, Google's Gemini, Anthropic's Claude, LumaAI, CrewAI, xAI's Grok, and Hyperbolic. It provides easy installation with support for different providers and offers features like text chat, voice chat, video chat, code generation interfaces, and AI agent teams. Users can set API keys for different providers and customize interfaces for specific tasks.

aios-core

Synkra AIOS is a framework for universal AI agents. It is founded on Agent-Driven Agile Development and aims to bring specialized AI expertise to any domain: software development, entertainment, creative writing, business strategy, personal well-being, and more. The framework follows a clear hierarchy of priorities: CLI First, Observability Second, UI Third. The CLI is where intelligence resides; all execution, decisions, and automation happen there. Observability is for observing and monitoring what happens in the CLI in real time. The UI is for specific management and visualizations when necessary. The two key innovations of Synkra AIOS are Agentic Planning and Context-Engineered Development, which eliminate inconsistency in planning and loss of context in AI-assisted development.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in its NVIDIA GPU version. It supports knowledge-base chat through fastgpt as part of a complete LLM solution: [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili live-stream bullet comments and greet viewers as they enter the stream. Speech synthesis is supported through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. Images painted with stable-diffusion-webui can be output to the OBS live room, with NSFW screening of generated images via public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy or VPN), and image search is also available through Baidu image search (no proxy required). Further features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, an automatic swaying cycle during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting requests whose content the AI judges automatically.