minuet-ai.nvim

💃 Dance with Intelligence in Your Code. Minuet offers code completion as-you-type from popular LLMs including OpenAI, Gemini, Claude, Ollama, Llama.cpp, Codestral, and more.

Stars: 458

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

README:

- Minuet

- Features

- Requirements

- Installation

- Quick Start

- Selecting a Provider or Model

- Configuration

- API Keys

- Prompt

- Providers

- Commands

- API

- FAQ

- Enhancement

- Troubleshooting

- Contributing

- Acknowledgement

Minuet: Dance with Intelligence in Your Code 💃.

Minuet brings the grace and harmony of a minuet to your coding process.

Just as dancers move during a minuet.

- AI-powered code completion with dual modes:

- Specialized prompts and various enhancements for chat-based LLMs on code completion tasks.

- Fill-in-the-middle (FIM) completion for compatible models (DeepSeek, Codestral, Qwen, and others).

- Support for multiple AI providers (OpenAI, Claude, Gemini, Codestral, Ollama, Llama-cpp, and OpenAI-compatible services).

- Customizable configuration options.

- Streaming support to enable completion delivery even with slower LLMs.

- No proprietary binary running in the background. Just curl and your preferred LLM provider.

- Support for virtual-text, nvim-cmp, blink-cmp, built-in, and mini.completion frontends.

- Act as an in-process LSP server to provide completions (opt-in feature).

With nvim-cmp / blink-cmp frontend:

With builtin completion frontend (requires nvim 0.11+):

With virtual text frontend:

- Neovim 0.10+.

- plenary.nvim

- optional: nvim-cmp

- optional: blink.cmp

- An API key for at least one of the supported AI providers

Lazy.nvim:

specs = {

{

'milanglacier/minuet-ai.nvim',

config = function()

require('minuet').setup {

-- Your configuration options here

}

end,

},

{ 'nvim-lua/plenary.nvim' },

-- optional, if you are using virtual-text frontend, nvim-cmp is not

-- required.

{ 'hrsh7th/nvim-cmp' },

-- optional, if you are using virtual-text frontend, blink is not required.

{ 'Saghen/blink.cmp' },

}

Rocks.nvim:

Minuet is available on luarocks.org. Simply run Rocks install minuet-ai.nvim to install it like any other luarocks package.

require('minuet').setup {

virtualtext = {

auto_trigger_ft = {},

keymap = {

-- accept whole completion

accept = '<A-A>',

-- accept one line

accept_line = '<A-a>',

-- accept n lines (prompts for number)

-- e.g. "A-z 2 CR" will accept 2 lines

accept_n_lines = '<A-z>',

-- Cycle to prev completion item, or manually invoke completion

prev = '<A-[>',

-- Cycle to next completion item, or manually invoke completion

next = '<A-]>',

dismiss = '<A-e>',

},

},

}

require('cmp').setup {

sources = {

{

-- Include minuet as a source to enable autocompletion

{ name = 'minuet' },

-- and your other sources

}

},

performance = {

-- It is recommended to increase the timeout duration due to

-- the typically slower response speed of LLMs compared to

-- other completion sources. This is not needed when you only

-- need manual completion.

fetching_timeout = 2000,

},

}

-- If you wish to invoke completion manually,

-- the following configuration binds the `A-y` key

-- to invoke completion manually.

require('cmp').setup {

mapping = {

["<A-y>"] = require('minuet').make_cmp_map(),

-- and your other keymappings

},

}

require('blink-cmp').setup {

keymap = {

-- Manually invoke minuet completion.

['<A-y>'] = require('minuet').make_blink_map(),

},

sources = {

-- Enable minuet for autocomplete

default = { 'lsp', 'path', 'buffer', 'snippets', 'minuet' },

-- For manual completion only, remove 'minuet' from default

providers = {

minuet = {

name = 'minuet',

module = 'minuet.blink',

score_offset = 8, -- Gives minuet higher priority among suggestions

},

},

},

-- Recommended to avoid unnecessary request

completion = { trigger = { prefetch_on_insert = false } },

}

Requirements:

- Neovim version 0.11 or higher is necessary for built-in completion.

require('minuet').setup {

lsp = {

enabled_ft = { 'toml', 'lua', 'cpp' },

-- Enables automatic completion triggering using `vim.lsp.completion.enable`

enabled_auto_trigger_ft = { 'cpp', 'lua' },

}

}

The enabled_auto_trigger_ft setting is relevant only for built-in completion.

Mini.Completion users can ignore this option, as Mini.Completion uses all

available LSPs for auto-triggered completion.

For manually triggered completion, ensure vim.bo.omnifunc is set to

v:lua.vim.lsp.omnifunc and use <C-x><C-o> in Insert mode.
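As a minimal sketch of the manual setup described above, the omnifunc can be set explicitly when the Minuet client attaches. Recent Neovim versions usually set this option on their own when an LSP client attaches, so this may be redundant; the client name 'minuet' is taken from the LspAttach example in this document.

```lua
-- Sketch: make sure <C-x><C-o> uses the LSP omnifunc in buffers
-- where the Minuet in-process LSP server is attached.
vim.api.nvim_create_autocmd('LspAttach', {
    callback = function(args)
        local client = vim.lsp.get_client_by_id(args.data.client_id)
        if client and client.name == 'minuet' then
            vim.bo[args.buf].omnifunc = 'v:lua.vim.lsp.omnifunc'
        end
    end,
    desc = 'Use the LSP omnifunc for manual Minuet completion',
})
```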

Recommendation:

For users of blink-cmp and nvim-cmp, it is recommended to use the native

source rather than through LSP for two main reasons:

- blink-cmp and nvim-cmp offer better sorting and async management when Minuet is utilized as a separate source rather than alongside a regular LSP such as clangd.

- With blink-cmp and nvim-cmp native sources, it's possible to configure Minuet for manual completion only, disabling automatic completion. However, when Minuet operates as an LSP server, it is impossible to determine whether completion is triggered automatically or manually.

The LSP protocol specification defines three triggerKind values: Invoked, TriggerCharacter, and TriggerForIncompleteCompletions. However, none of these specifically differentiates between manual and automatic completion requests.
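To illustrate the point about trigger kinds, here is a small sketch of the three values as numbered in the LSP specification. The helper `trigger_kind_name` is hypothetical and only for illustration. Note that `Invoked` covers both typing an identifier and an explicit manual request, which is why a server cannot tell the two apart.

```lua
-- CompletionTriggerKind values as numbered in the LSP specification.
local CompletionTriggerKind = {
    -- Typing an identifier, a manual request, or an API call
    Invoked = 1,
    -- A trigger character registered by the server was typed
    TriggerCharacter = 2,
    -- Re-request because the previous completion list was incomplete
    TriggerForIncompleteCompletions = 3,
}

-- Hypothetical helper: map a numeric triggerKind back to its name.
local function trigger_kind_name(kind)
    for name, value in pairs(CompletionTriggerKind) do
        if value == kind then
            return name
        end
    end
    return 'Unknown'
end

print(trigger_kind_name(1))
```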

Note: An upstream issue (tracked here) may cause unexpected indentation behavior when accepting multi-line completions.

Therefore, consider the following options:

- Ensure config.add_single_line_entry = true and only accept single-line completions.

- Avoid using Minuet and built-in completion with languages where indentation affects semantics, such as Python.

Additional Note:

Users might call vim.lsp.completion.enable {autotrigger = true} during the

LspAttach event when the client supports completion. However, this is not the

desired behavior for Minuet. As an LLM completion source, Minuet can face

significant rate limits during automatic triggering.

Therefore, it's recommended to enable Minuet for automatic triggering using the

config.lsp.enabled_auto_trigger_ft setting.

For users who use the LspAttach event, it is recommended to verify that the

server is not the Minuet server before enabling autotrigger. An example

configuration is shown below:

vim.api.nvim_create_autocmd('LspAttach', {

callback = function(args)

local client_id = args.data.client_id

local bufnr = args.buf

local client = vim.lsp.get_client_by_id(client_id)

if not client then

return

end

if client.server_capabilities.completionProvider and client.name ~= 'minuet' then

vim.lsp.completion.enable(true, client_id, bufnr, { autotrigger = true })

end

end,

desc = 'Enable built-in auto completion',

})

require('minuet').setup {

provider = 'openai_compatible',

request_timeout = 2.5,

throttle = 1500, -- Increase to reduce costs and avoid rate limits

debounce = 600, -- Increase to reduce costs and avoid rate limits

provider_options = {

openai_compatible = {

api_key = 'OPENROUTER_API_KEY',

end_point = 'https://openrouter.ai/api/v1/chat/completions',

model = 'qwen/qwen2.5-32b-instruct',

name = 'Openrouter',

optional = {

max_tokens = 128,

top_p = 0.9,

provider = {

-- Prioritize throughput for faster completion

sort = 'throughput',

},

},

},

},

}

-- you can use deepseek with either the openai_fim_compatible or the openai_compatible provider

require('minuet').setup {

provider = 'openai_fim_compatible',

provider_options = {

openai_fim_compatible = {

api_key = 'DEEPSEEK_API_KEY',

name = 'deepseek',

optional = {

max_tokens = 256,

top_p = 0.9,

},

},

},

}

-- or

require('minuet').setup {

provider = 'openai_compatible',

provider_options = {

openai_compatible = {

end_point = 'https://api.deepseek.com/v1/chat/completions',

api_key = 'DEEPSEEK_API_KEY',

name = 'deepseek',

optional = {

max_tokens = 256,

top_p = 0.9,

},

},

},

}

require('minuet').setup {

provider = 'openai_fim_compatible',

n_completions = 1, -- recommended for local models to save resources

-- I recommend beginning with a small context window size and incrementally

-- expanding it, depending on your local computing power. A context window

-- of 512 serves as a good starting point to estimate your computing

-- power. Once you have a reliable estimate of your local computing power,

-- you should adjust the context window to a larger value.

context_window = 512,

provider_options = {

openai_fim_compatible = {

api_key = 'TERM',

name = 'Ollama',

end_point = 'http://localhost:11434/v1/completions',

model = 'qwen2.5-coder:7b',

optional = {

max_tokens = 56,

top_p = 0.9,

},

},

},

}

First, launch the llama-server with your chosen model.

Here's an example of a bash script to start the server if your system has less than 8GB of VRAM:

llama-server \

-hf ggml-org/Qwen2.5-Coder-1.5B-Q8_0-GGUF \

--port 8012 -ngl 99 -fa -ub 1024 -b 1024 \

--ctx-size 0 --cache-reuse 256

require('minuet').setup {

provider = 'openai_fim_compatible',

n_completions = 1, -- recommended for local models to save resources

-- I recommend beginning with a small context window size and incrementally

-- expanding it, depending on your local computing power. A context window

-- of 512 serves as a good starting point to estimate your computing

-- power. Once you have a reliable estimate of your local computing power,

-- you should adjust the context window to a larger value.

context_window = 512,

provider_options = {

openai_fim_compatible = {

api_key = 'TERM',

name = 'Llama.cpp',

end_point = 'http://localhost:8012/v1/completions',

-- The model is set by the llama-cpp server and cannot be altered

-- post-launch.

model = 'PLACEHOLDER',

optional = {

max_tokens = 56,

top_p = 0.9,

},

-- Llama.cpp does not support the `suffix` option in FIM completion.

-- Therefore, we must disable it and manually populate the special

-- tokens required for FIM completion.

template = {

prompt = function(context_before_cursor, context_after_cursor)

return '<|fim_prefix|>'

.. context_before_cursor

.. '<|fim_suffix|>'

.. context_after_cursor

.. '<|fim_middle|>'

end,

suffix = false,

},

},

},

}

For additional example bash scripts to run llama.cpp based on your local computing power, please refer to recipes.md.

The gemini-flash and codestral models offer high-quality output with free

and fast processing. For optimal quality (albeit slower generation speed),

consider using the deepseek-chat model, which is compatible with both

openai-fim-compatible and openai-compatible providers. For local LLM

inference, you can deploy either qwen-2.5-coder or deepseek-coder-v2 through

Ollama using the openai-fim-compatible provider.

As of January 28, 2025: Due to high server demand, Deepseek users may experience significant response delays or timeouts. We recommend trying alternative providers instead.

Minuet AI comes with the following defaults:

default_config = {

-- Enable or disable auto-completion. Note that you still need to add

-- Minuet to your cmp/blink sources. This option controls whether cmp/blink

-- will attempt to invoke minuet when minuet is included in cmp/blink

-- sources. This setting has no effect on manual completion; Minuet will

-- always be enabled when invoked manually. You can use the command

-- `Minuet cmp/blink toggle` to toggle this option.

cmp = {

enable_auto_complete = true,

},

blink = {

enable_auto_complete = true,

},

-- LSP is recommended only for built-in completion. If you are using

-- `cmp` or `blink`, utilizing LSP for code completion from Minuet is *not*

-- recommended.

lsp = {

enabled_ft = {},

-- Filetypes excluded from LSP activation. Useful when `enabled_ft` = { '*' }

disabled_ft = {},

-- Enables automatic completion triggering using `vim.lsp.completion.enable`

enabled_auto_trigger_ft = {},

-- Filetypes excluded from autotriggering. Useful when `enabled_auto_trigger_ft` = { '*' }

disabled_auto_trigger_ft = {},

-- if true, when the user is using blink or nvim-cmp, warn the user

-- that they should use the native source instead.

warn_on_blink_or_cmp = true,

},

virtualtext = {

-- Specify the filetypes to enable automatic virtual text completion,

-- e.g., { 'python', 'lua' }. Note that you can still invoke manual

-- completion even if the filetype is not on your auto_trigger_ft list.

auto_trigger_ft = {},

-- specify file types where automatic virtual text completion should be

-- disabled. This option is useful when auto-completion is enabled for

-- all file types i.e., when auto_trigger_ft = { '*' }

auto_trigger_ignore_ft = {},

keymap = {

accept = nil,

accept_line = nil,

accept_n_lines = nil,

-- Cycle to next completion item, or manually invoke completion

next = nil,

-- Cycle to prev completion item, or manually invoke completion

prev = nil,

dismiss = nil,

},

-- Whether show virtual text suggestion when the completion menu

-- (nvim-cmp or blink-cmp) is visible.

show_on_completion_menu = false,

},

provider = 'codestral',

-- the maximum total characters of the context before and after the cursor

-- 16000 characters typically equate to approximately 4,000 tokens for

-- LLMs.

context_window = 16000,

-- when the total characters exceed the context window, the ratio of

-- context before cursor and after cursor, the larger the ratio the more

-- context before cursor will be used. This option should be between 0 and

-- 1, context_ratio = 0.75 means the ratio will be 3:1.

context_ratio = 0.75,

throttle = 1000, -- only send the request every x milliseconds, use 0 to disable throttle.

-- debounce the request in x milliseconds, set to 0 to disable debounce

debounce = 400,

-- Control notification display for request status

-- Notification options:

-- false: Disable all notifications (use boolean false, not string "false")

-- "debug": Display all notifications (comprehensive debugging)

-- "verbose": Display most notifications

-- "warn": Display warnings and errors only

-- "error": Display errors only

notify = 'warn',

-- The request timeout, measured in seconds. When streaming is enabled

-- (stream = true), setting a shorter request_timeout allows for faster

-- retrieval of completion items, albeit potentially incomplete.

-- Conversely, with streaming disabled (stream = false), a timeout

-- occurring before the LLM returns results will yield no completion items.

request_timeout = 3,

-- If completion item has multiple lines, create another completion item

-- only containing its first line. This option only has impact for cmp and

-- blink. For virtualtext, no single line entry will be added.

add_single_line_entry = true,

-- The number of completion items encoded as part of the prompt for the

-- chat LLM. For FIM model, this is the number of requests to send. It's

-- important to note that when 'add_single_line_entry' is set to true, the

-- actual number of returned items may exceed this value. Additionally, the

-- LLM cannot guarantee the exact number of completion items specified, as

-- this parameter serves only as a prompt guideline.

n_completions = 3,

-- Defines the length of non-whitespace context after the cursor used to

-- filter completion text. Set to 0 to disable filtering.

--

-- Example: With after_cursor_filter_length = 3 and context:

--

-- "def fib(n):\n|\n\nfib(5)" (where | represents cursor position),

--

-- if the completion text contains "fib", then "fib" and subsequent text

-- will be removed. This setting filters repeated text generated by the

-- LLM. A large value (e.g., 15) is recommended to avoid false positives.

after_cursor_filter_length = 15,

-- proxy port to use

proxy = nil,

provider_options = {

-- see the documentation in each provider in the following part.

},

-- see the documentation in the `Prompt` section

default_system = {

template = '...',

prompt = '...',

guidelines = '...',

n_completion_template = '...',

},

default_system_prefix_first = {

template = '...',

prompt = '...',

guidelines = '...',

n_completion_template = '...',

},

default_fim_template = {

prompt = '...',

suffix = '...',

},

default_few_shots = { '...' },

default_chat_input = { '...' },

default_few_shots_prefix_first = { '...' },

default_chat_input_prefix_first = { '...' },

-- Config options for `Minuet change_preset` command

presets = {}

}

Minuet AI requires API keys to function. Set the following environment variables:

- OPENAI_API_KEY for OpenAI

- GEMINI_API_KEY for Gemini

- ANTHROPIC_API_KEY for Claude

- CODESTRAL_API_KEY for Codestral

- Custom environment variable for OpenAI-compatible services (as specified in your configuration)

Note: Provide the name of the environment variable to Minuet, not the

actual value. For instance, pass OPENAI_API_KEY to Minuet, not the value

itself (e.g., sk-xxxx).

If using Ollama, you need to assign an arbitrary, non-null environment variable as a placeholder for it to function.

Alternatively, you can provide a function that returns the API key. This function should return the result instantly as it will be called for each completion request.
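Because the function is called for every completion request, one way to keep it fast is to look the key up once and cache it. The following is a minimal sketch of that idea, not part of Minuet itself: `make_cached_key` is a hypothetical helper, and the lookup here simply reads the FIREWORKS_API_KEY environment variable, though it could equally wrap a slower source such as a password manager.

```lua
-- Hypothetical helper: wrap a (possibly slow) lookup so that it runs
-- only once; later calls return the cached value immediately.
local function make_cached_key(fetch)
    local cached
    return function()
        if cached == nil then
            cached = fetch()
        end
        return cached
    end
end

-- The returned function can be passed as `api_key` in provider_options.
local api_key = make_cached_key(function()
    return os.getenv('FIREWORKS_API_KEY')
end)
```

If the lookup returns nil, nothing is cached and the lookup is retried on the next call.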

require('minuet').setup {

provider_options = {

openai_compatible = {

-- good

api_key = 'FIREWORKS_API_KEY', -- will read the environment variable FIREWORKS_API_KEY

-- good

api_key = function() return 'sk-xxxx' end,

-- bad

api_key = 'sk-xxxx',

}

}

}

See prompt for the default prompt used by minuet and

instructions on customization.

Note that minuet employs two distinct prompt systems:

- A system designed for chat-based LLMs (OpenAI, OpenAI-Compatible, Claude, and Gemini)

- A separate system designed for Codestral and OpenAI-FIM-compatible models

Set the provider field in the config; the default provider is

codestral. For example:

require('minuet').setup {

provider = 'gemini'

}

The following is the default configuration for OpenAI:

provider_options = {

openai = {

model = 'gpt-4o-mini',

system = "see [Prompt] section for the default value",

few_shots = "see [Prompt] section for the default value",

chat_input = "See [Prompt Section for default value]",

stream = true,

api_key = 'OPENAI_API_KEY',

optional = {

-- pass any additional parameters you want to send to OpenAI request,

-- e.g.

-- stop = { 'end' },

-- max_tokens = 256,

-- top_p = 0.9,

},

},

}

The following configuration is not the default, but it is recommended to prevent request timeouts caused by outputting too many tokens.

provider_options = {

openai = {

optional = {

max_tokens = 256,

},

},

}

The following is the default configuration for Claude:

provider_options = {

claude = {

max_tokens = 512,

model = 'claude-3-5-haiku-20241022',

system = "see [Prompt] section for the default value",

few_shots = "see [Prompt] section for the default value",

chat_input = "See [Prompt Section for default value]",

stream = true,

api_key = 'ANTHROPIC_API_KEY',

optional = {

-- pass any additional parameters you want to send to claude request,

-- e.g.

-- stop_sequences = nil,

},

},

}

Codestral is a text completion model, not a chat model, so the system prompt and few-shot examples do not apply. Note that you should use the CODESTRAL_API_KEY, not the MISTRAL_API_KEY, as they use different endpoints. To use the Mistral endpoint, simply modify the end_point and api_key parameters in the configuration.

The following is the default configuration for Codestral:

provider_options = {

codestral = {

model = 'codestral-latest',

end_point = 'https://codestral.mistral.ai/v1/fim/completions',

api_key = 'CODESTRAL_API_KEY',

stream = true,

template = {

prompt = "See [Prompt Section for default value]",

suffix = "See [Prompt Section for default value]",

},

optional = {

stop = nil, -- the identifier to stop the completion generation

max_tokens = nil,

},

},

}

The following configuration is not the default, but it is recommended to prevent request timeouts caused by outputting too many tokens.

provider_options = {

codestral = {

optional = {

max_tokens = 256,

stop = { '\n\n' },

},

},

}

You should register an account and use the service from Google AI Studio instead of Google Cloud. You can get an API key via their Google API page.

The following config is the default.

provider_options = {

gemini = {

model = 'gemini-2.0-flash',

system = "see [Prompt] section for the default value",

few_shots = "see [Prompt] section for the default value",

chat_input = "See [Prompt Section for default value]",

stream = true,

api_key = 'GEMINI_API_KEY',

optional = {},

},

}

The following configuration is not the default, but it is recommended to prevent request timeouts caused by outputting too many tokens. You can also adjust the safety settings following the example:

provider_options = {

gemini = {

optional = {

generationConfig = {

maxOutputTokens = 256,

},

safetySettings = {

{

-- HARM_CATEGORY_HATE_SPEECH,

-- HARM_CATEGORY_HARASSMENT

-- HARM_CATEGORY_SEXUALLY_EXPLICIT

category = 'HARM_CATEGORY_DANGEROUS_CONTENT',

-- BLOCK_NONE

threshold = 'BLOCK_ONLY_HIGH',

},

},

},

},

}

Use any provider compatible with OpenAI's chat completion API.

For example, you can set the end_point to

http://localhost:11434/v1/chat/completions to use ollama.

Note that not all OpenAI-compatible services support streaming. If your service does not support it, set stream = false to disable streaming.
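As a sketch of what disabling streaming might look like for such a service, the fragment below sets stream = false on the openai_compatible provider. The endpoint URL, the MY_SERVICE_API_KEY environment variable name, the service name, and the model name are all placeholders chosen for illustration.

```lua
require('minuet').setup {
    provider = 'openai_compatible',
    provider_options = {
        openai_compatible = {
            -- Placeholder values for a hypothetical self-hosted service
            end_point = 'http://localhost:8080/v1/chat/completions',
            api_key = 'MY_SERVICE_API_KEY',
            name = 'MyService',
            model = 'my-model',
            -- The service does not support streamed responses
            stream = false,
        },
    },
}
```

With streaming disabled, the request_timeout documented in the defaults matters more: a timeout that fires before the LLM responds yields no completion items.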

The following config is the default.

provider_options = {

openai_compatible = {

model = 'qwen/qwen2.5-32b-instruct',

system = "see [Prompt] section for the default value",

few_shots = "see [Prompt] section for the default value",

chat_input = "See [Prompt Section for default value]",

stream = true,

end_point = 'https://openrouter.ai/api/v1/chat/completions',

api_key = 'OPENROUTER_API_KEY',

name = 'Openrouter',

optional = {

stop = nil,

max_tokens = nil,

},

}

}

Use any provider compatible with OpenAI's completion API. This request uses the

text /completions endpoint, not /chat/completions endpoint, so system

prompts and few-shot examples are not applicable.

For example, you can set the end_point to

http://localhost:11434/v1/completions to use ollama, or set it to

http://localhost:8012/v1/completions to use llama.cpp.

Cmdline completion is available for models supported by these providers:

deepseek, ollama, and siliconflow.

Refer to the Completions Legacy section of the OpenAI documentation for details.

Please note that not all OpenAI-compatible services support streaming. If your

service does not support streaming, you should set stream=false to disable

it.

Additionally, for Ollama users, it is essential to verify whether the model's

template supports FIM completion. For example, qwen2.5-coder offers FIM

support, as suggested in its

template.

However, it may come as a surprise to some users that deepseek-coder does not

support the FIM template, and you should use deepseek-coder-v2 instead.

For example bash scripts to run llama.cpp based on your local

computing power, please refer to recipes.md. Note

that the model for llama.cpp must be determined when you launch the

llama.cpp server and cannot be changed thereafter.

provider_options = {

openai_fim_compatible = {

model = 'deepseek-chat',

end_point = 'https://api.deepseek.com/beta/completions',

api_key = 'DEEPSEEK_API_KEY',

name = 'Deepseek',

stream = true,

template = {

prompt = "See [Prompt Section for default value]",

suffix = "See [Prompt Section for default value]",

},

optional = {

stop = nil,

max_tokens = nil,

},

}

}

The following configuration is not the default, but it is recommended to prevent request timeouts caused by outputting too many tokens.

provider_options = {

openai_fim_compatible = {

optional = {

max_tokens = 256,

stop = { '\n\n' },

},

},

}

The change_provider command allows you to change the provider after Minuet has been set up.

Example usage: Minuet change_provider claude

The change_model command allows you to change both the provider and model in

one command. When called without arguments, it will open an interactive

selection menu using vim.ui.select to choose from available models. When

called with an argument, the format is provider:model.

Example usage:

- Minuet change_model - Opens interactive model selection

- Minuet change_model gemini:gemini-1.5-pro-latest - Directly sets the model
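The provider:model format can be illustrated with a small parsing sketch. This is not Minuet's actual implementation; `parse_model_spec` is a hypothetical helper that splits on the first colon only, so that model names which themselves contain a colon (such as qwen2.5-coder:7b) stay intact.

```lua
-- Hypothetical helper: split "provider:model" at the first colon.
-- Returns nil, nil when the argument contains no colon.
local function parse_model_spec(spec)
    local provider, model = spec:match('^([^:]+):(.+)$')
    return provider, model
end

local provider, model = parse_model_spec('gemini:gemini-1.5-pro-latest')
print(provider, model)
```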

Note: For openai_compatible and openai_fim_compatible providers, the model

completions in cmdline are determined by the name field in your

configuration. For example, if you configured:

provider_options.openai_compatible.name = 'Fireworks'

When entering Minuet change_model openai_compatible: in the cmdline,

you'll see model completions specific to the Fireworks provider.

The change_preset command allows you to switch between config presets that

were defined during initial setup. Presets provide a convenient way to toggle

between different config sets. This is particularly useful when you need to:

- Switch between different cloud providers (such as Fireworks or Groq) for the openai_compatible provider

- Apply different throttle and debounce settings for different providers

When called, the command merges the selected preset with the current config table to create an updated configuration.
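The effect of such a merge can be sketched in plain Lua. The example assumes a recursive merge in which preset values win, similar in spirit to vim.tbl_deep_extend('force', ...); Minuet's actual merge logic may differ. `deep_merge` is a hypothetical helper, and the table contents are shortened examples.

```lua
-- Hypothetical helper: recursively merge `override` into a copy of `base`.
-- Values from `override` win; nested tables are merged key by key.
local function deep_merge(base, override)
    local result = {}
    for k, v in pairs(base) do
        result[k] = v
    end
    for k, v in pairs(override) do
        if type(v) == 'table' and type(result[k]) == 'table' then
            result[k] = deep_merge(result[k], v)
        else
            result[k] = v
        end
    end
    return result
end

local current = {
    provider = 'codestral',
    throttle = 1000,
    debounce = 400,
    provider_options = {
        openai_compatible = { name = 'Openrouter', stream = true },
    },
}

local preset_1 = {
    provider = 'openai_compatible',
    throttle = 3000,
    provider_options = {
        openai_compatible = { name = 'Groq' },
    },
}

local merged = deep_merge(current, preset_1)
```

Keys that the preset does not mention (here `debounce` and `stream`) keep their current values, so a preset only needs to list the settings it changes.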

Usage syntax: Minuet change_preset preset_1

Presets can be configured during the initial setup process.

require('minuet').setup {

presets = {

preset_1 = {

-- Configuration for cloud-based requests with large context window

context_window = 20000,

request_timeout = 4,

throttle = 3000,

debounce = 1000,

provider = 'openai_compatible',

provider_options = {

openai_compatible = {

model = 'llama-3.3-70b-versatile',

api_key = 'GROQ_API_KEY',

name = 'Groq'

}

}

},

preset_2 = {

-- Configuration for local model with smaller context window

provider = 'openai_fim_compatible',

context_window = 2000,

throttle = 400,

debounce = 100,

provider_options = {

openai_fim_compatible = {

api_key = 'TERM',

name = 'Ollama',

end_point = 'http://localhost:11434/v1/completions',

model = 'qwen2.5-coder:7b',

optional = {

max_tokens = 256,

top_p = 0.9

}

}

}

}

}

}

Enable or disable autocompletion for nvim-cmp or blink.cmp. While Minuet

must be added to your cmp/blink sources, this command only controls whether

Minuet is triggered during autocompletion. The command does not affect manual

completion behavior - Minuet remains active and available when manually

invoked.

Example usage: Minuet blink toggle, Minuet blink enable, Minuet blink disable

Enable or disable the automatic display of virtual-text completion in the

current buffer.

Example usage: Minuet virtualtext toggle, Minuet virtualtext enable,

Minuet virtualtext disable.
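If you toggle these often, the commands can be bound to keys. The following is a small sketch; the `<leader>mv` and `<leader>mb` mappings are arbitrary choices for illustration, not defaults provided by Minuet.

```lua
-- Toggle automatic virtual-text completion in the current buffer
vim.keymap.set('n', '<leader>mv', '<cmd>Minuet virtualtext toggle<cr>', {
    desc = 'Toggle Minuet virtual text',
})

-- Toggle Minuet auto-completion in blink.cmp
vim.keymap.set('n', '<leader>mb', '<cmd>Minuet blink toggle<cr>', {
    desc = 'Toggle Minuet blink auto-completion',
})
```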

The Minuet LSP command provides commands for managing the in-process LSP server:

- :Minuet lsp attach: Attach the Minuet LSP server to the current buffer.

- :Minuet lsp detach: Detach the Minuet LSP server from the current buffer.

- :Minuet lsp enable_auto_trigger: Enable automatic completion triggering using vim.lsp.completion.enable for the current buffer.

- :Minuet lsp disable_auto_trigger: Disable automatic completion triggering for the current buffer.

minuet-ai.nvim offers the following functions to customize your key mappings:

{

-- accept whole completion

require('minuet.virtualtext').action.accept,

-- accept by line

require('minuet.virtualtext').action.accept_line,

-- accept n lines (prompts for number)

require('minuet.virtualtext').action.accept_n_lines,

require('minuet.virtualtext').action.next,

require('minuet.virtualtext').action.prev,

require('minuet.virtualtext').action.dismiss,

-- whether the virtual text is visible in current buffer

require('minuet.virtualtext').action.is_visible,

}

Minuet provides a Lualine component that displays the current status of Minuet requests. This component shows:

- The name of the active provider

- The current request count (e.g., "1/3")

- An animated spinner while processing

To use the Minuet Lualine component, add it to your Lualine configuration:

require('lualine').setup {

sections = {

lualine_x = {

require('minuet.lualine'),

'encoding',

'fileformat',

'filetype',

},

},

}

Minuet emits three distinct events during its request workflow:

- MinuetRequestStartedPre: Triggered before a completion request is initiated. This allows for pre-request operations, such as logging or updating the user interface.

- MinuetRequestStarted: Triggered immediately after the completion request is dispatched, signaling that the request is in progress.

- MinuetRequestFinished: Triggered upon completion of the request.

Each event includes a data field containing the following properties:

- provider: A string indicating the provider type (e.g., 'openai_compatible').

- name: A string specifying the provider's name (e.g., 'OpenAI', 'Groq', 'Ollama').

- n_requests: The number of requests encompassed in this completion cycle.

- request_idx (optional): The index of the current request, applicable when providers make multiple requests.

- timestamp: A Unix timestamp representing the start of the request cycle (corresponding to the MinuetRequestStartedPre event).
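A listener for these events might look like the sketch below. It assumes the events are emitted as Neovim `User` autocommands whose payload arrives in `args.data`; that assumption is not stated in the text above, so adjust the event type if it does not hold in your version.

```lua
-- Sketch: log Minuet request activity, assuming `User` autocommands.
vim.api.nvim_create_autocmd('User', {
    pattern = { 'MinuetRequestStarted', 'MinuetRequestFinished' },
    callback = function(args)
        local data = args.data or {}
        vim.notify(
            string.format(
                '[%s] provider=%s name=%s n_requests=%s',
                args.match,
                tostring(data.provider),
                tostring(data.name),
                tostring(data.n_requests)
            ),
            vim.log.levels.INFO
        )
    end,
    desc = 'Log Minuet request events',
})
```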

You can configure the icons of completion items returned by minuet by using

the following snippet (referenced from cmp's

wiki):

local kind_icons = {

Number = '',

Array = '',

Variable = '',

-- and other icons

-- LLM Provider icons

claude = '',

openai = '',

codestral = '',

gemini = '',

Groq = '',

Openrouter = '',

Ollama = '',

['Llama.cpp'] = '',

Deepseek = '',

-- FALLBACK

fallback = '',

}

local source_icons = {

minuet = '',

nvim_lsp = '',

lsp = '',

buffer = '',

luasnip = '',

snippets = '',

path = '',

git = '',

tags = '',

-- FALLBACK

fallback = '',

}

local cmp = require 'cmp'

cmp.setup {

formatting = {

format = function(entry, vim_item)

-- Kind icons

-- This concatenates the icons with the name of the item kind

vim_item.kind = string.format('%s %s', kind_icons[vim_item.kind] or kind_icons.fallback, vim_item.kind)

-- Source

vim_item.menu = source_icons[entry.source.name] or source_icons.fallback

return vim_item

end,

},

}

You can configure the icons of completion items returned by minuet with the following snippet:

To customize the kind icons:

local kind_icons = {

-- LLM Provider icons

claude = '',

openai = '',

codestral = '',

gemini = '',

Groq = '',

Openrouter = '',

Ollama = '',

['Llama.cpp'] = '',

Deepseek = ''

}

require('blink-cmp').setup {

appearance = {

use_nvim_cmp_as_default = true,

nerd_font_variant = 'normal',

kind_icons = kind_icons

},

}

To customize the source icons:

local source_icons = {

minuet = '',

orgmode = '',

otter = '',

nvim_lsp = '',

lsp = '',

buffer = '',

luasnip = '',

snippets = '',

path = '',

git = '',

tags = '',

cmdline = '',

latex_symbols = '',

cmp_nvim_r = '',

codeium = '',

-- FALLBACK

fallback = '',

}

require('blink-cmp').setup {

appearance = {

use_nvim_cmp_as_default = true,

nerd_font_variant = 'normal',

kind_icons = kind_icons

},

completion = {

menu = {

draw = {

columns = {

{ 'label', 'label_description', gap = 1 },

{ 'kind_icon', 'kind' },

{ 'source_icon' },

},

components = {

source_icon = {

-- don't truncate source_icon

ellipsis = false,

text = function(ctx)

return source_icons[ctx.source_name:lower()] or source_icons.fallback

end,

highlight = 'BlinkCmpSource',

},

},

},

},

}

}

When using Minuet with auto-complete enabled, you may occasionally experience a noticeable delay when pressing <CR> to move to the next line. This occurs because Minuet triggers autocompletion at the start of a new line, while cmp blocks the <CR> key, awaiting Minuet's response.

To address this issue, consider the following solutions:

- Unbind the <CR> key from your cmp keymap.

- Utilize cmp's internal API to avoid blocking calls, though be aware that this API may change without prior notice.
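
For the first approach, a minimal sketch is shown below. It assumes you configure nvim-cmp directly through `cmp.setup` with the insert preset. `cmp.config.disable` is nvim-cmp's marker for removing a mapping, and using `<C-y>` as the confirm key is only an illustrative choice.

```lua
local cmp = require 'cmp'

cmp.setup {
    mapping = cmp.mapping.preset.insert {
        -- Remove any <CR> mapping so that Enter always inserts a newline
        -- and never waits on a pending completion request.
        ['<CR>'] = cmp.config.disable,
        -- Confirm the selected item with <C-y> instead (illustrative choice).
        ['<C-y>'] = cmp.mapping.confirm { select = true },
    },
}
```

If your distribution builds the mapping table for you, the same idea applies: make sure the final table no longer contains a `<CR>` entry.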

Here's an example of the second approach using Lua:

local cmp = require 'cmp'

opts.mapping = {

['<CR>'] = cmp.mapping(function(fallback)

-- use the internal non-blocking call to check if cmp is visible

if cmp.core.view:visible() then

cmp.confirm { select = true }

else

fallback()

end

end),

}

With nvim-cmp:

{

'milanglacier/minuet-ai.nvim',

config = function()

require('minuet').setup {

-- Your configuration options here

}

end

},

{

'nvim-cmp',

optional = true,

opts = function(_, opts)

-- if you wish to use autocomplete

table.insert(opts.sources, 1, {

name = 'minuet',

group_index = 1,

priority = 100,

})

opts.performance = {

-- It is recommended to increase the timeout duration due to

-- the typically slower response speed of LLMs compared to

-- other completion sources. This is not needed when you only

-- need manual completion.

fetching_timeout = 2000,

}

opts.mapping = vim.tbl_deep_extend('force', opts.mapping or {}, {

-- if you wish to use manual complete

['<A-y>'] = require('minuet').make_cmp_map(),

})

end,

}

With blink-cmp:

-- set the following line in your config/options.lua

vim.g.lazyvim_blink_main = true

{

'milanglacier/minuet-ai.nvim',

config = function()

require('minuet').setup {

-- Your configuration options here

}

end,

},

{

'saghen/blink.cmp',

optional = true,

opts = {

keymap = {

['<A-y>'] = {

function(cmp)

cmp.show { providers = { 'minuet' } }

end,

},

},

sources = {

-- if you want to use auto-complete

default = { 'minuet' },

providers = {

minuet = {

name = 'minuet',

module = 'minuet.blink',

score_offset = 100,

},

},

},

},

}

You can enhance the content sent to the LLM for code completion by leveraging RAG support through the VectorCode package.

VectorCode contains two main components. The first is a standalone CLI program written in Python, available for installation via PyPI. This program is responsible for creating the vector database and processing RAG queries. The second component is a Neovim plugin that provides utility functions to send queries and manage buffer-related RAG information within Neovim.

We offer two example recipes demonstrating VectorCode integration: one for chat-based LLMs (Gemini) and another for the FIM model (Qwen-2.5-Coder), available in recipes.md.

For detailed instructions on setting up and using VectorCode, please refer to the official VectorCode documentation.

If your setup fails, the two most likely reasons are:

- The API key is set incorrectly. Check the API Keys section to see how to specify the API key correctly.

- You are using a model or a context window that is too large, causing completion items to time out before returning any tokens. This is particularly common with local LLMs. It is recommended to start with the following settings to get a better understanding of your provider's inference speed:

  - Begin by testing with manual completions.

  - Use a smaller context window (e.g., config.context_window = 768).

  - Use a smaller model.

  - Set a longer request timeout (e.g., config.request_timeout = 5).

To diagnose issues, set config.notify = 'debug' and examine the output.
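
The starting settings suggested above can be collected into a single `setup` call. This is a minimal sketch that uses only the option names mentioned in this section. The values are starting points to tune for your provider, not recommendations for every setup.

```lua
require('minuet').setup {
    -- Send less context so the provider can respond sooner.
    context_window = 768,
    -- Allow up to 5 seconds before a request times out.
    request_timeout = 5,
    -- Show debug notifications while diagnosing the setup.
    notify = 'debug',
}
```

Once completions arrive reliably, you can raise the context window step by step and lower the notification level again.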

Contributions are welcome! Please feel free to submit a Pull Request.

- cmp-ai: Reference for the integration with nvim-cmp.

- continue.dev: Not a Neovim plugin, but I found many LLM models through it.

- copilot.lua: Reference for the virtual text frontend.

- llama.vim: Reference for CLI parameters used to launch the llama-cpp server.

- crates.nvim: Reference for the in-process LSP implementation to provide completion.


Similar Open Source Tools

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

model.nvim

model.nvim is a tool designed for Neovim users who want to utilize AI models for completions or chat within their text editor. It allows users to build prompts programmatically with Lua, customize prompts, experiment with multiple providers, and use both hosted and local models. The tool supports features like provider agnosticism, programmatic prompts in Lua, async and multistep prompts, streaming completions, and chat functionality in 'mchat' filetype buffer. Users can customize prompts, manage responses, and context, and utilize various providers like OpenAI ChatGPT, Google PaLM, llama.cpp, ollama, and more. The tool also supports treesitter highlights and folds for chat buffers.

pg_vectorize

pg_vectorize is a Postgres extension that automates text to embeddings transformation, enabling vector search and LLM applications with minimal function calls. It integrates with popular LLMs, provides workflows for vector search and RAG, and automates Postgres triggers for updating embeddings. The tool is part of the VectorDB Stack on Tembo Cloud, offering high-level APIs for easy initialization and search.

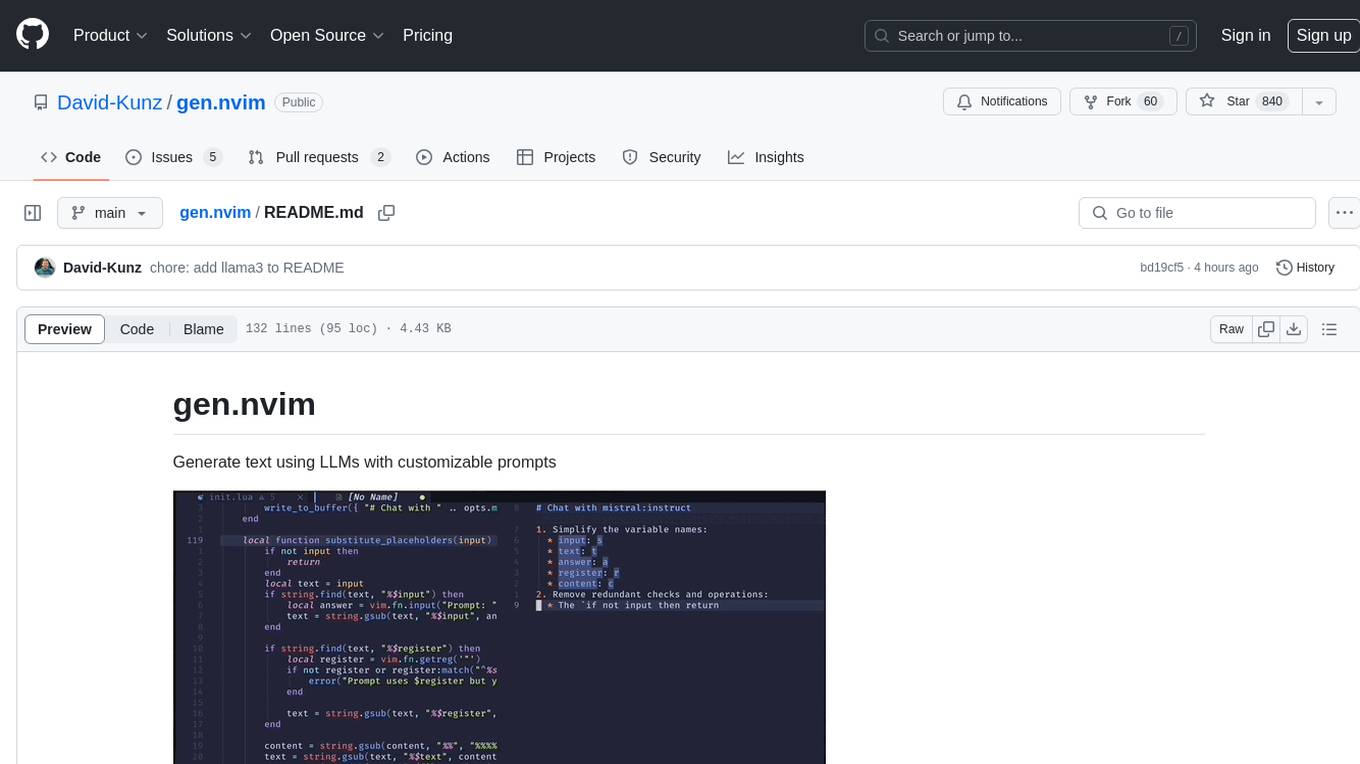

gen.nvim

gen.nvim is a tool that allows users to generate text using Language Models (LLMs) with customizable prompts. It requires Ollama with models like `llama3`, `mistral`, or `zephyr`, along with Curl for installation. Users can use the `Gen` command to generate text based on predefined or custom prompts. The tool provides key maps for easy invocation and allows for follow-up questions during conversations. Additionally, users can select a model from a list of installed models and customize prompts as needed.

perplexity-ai

Perplexity is a module that utilizes emailnator to generate new accounts, providing users with 5 pro queries per account creation. It enables the creation of new Gmail accounts with emailnator, ensuring unlimited pro queries. The tool requires specific Python libraries for installation and offers both a web interface and an API for different usage scenarios. Users can interact with the tool to perform various tasks such as account creation, query searches, and utilizing different modes for research purposes. Perplexity also supports asynchronous operations and provides guidance on obtaining cookies for account usage and account generation from emailnator.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

litegraph

LiteGraph is a property graph database designed for knowledge and artificial intelligence applications. It supports graph relationships, tags, labels, metadata, data, and vectors. LiteGraph can be used in-process with LiteGraphClient or as a standalone RESTful server with LiteGraph.Server. The latest version includes major internal refactor, batch APIs, enumeration APIs, statistics APIs, database caching, vector search enhancements, and bug fixes. LiteGraph allows for simple embedding into applications without user configuration. Users can create tenants, graphs, nodes, edges, and perform operations like finding routes and exporting to GEXF file. It also provides features for working with object labels, tags, data, and vectors, enabling filtering and searching based on various criteria. LiteGraph offers REST API deployment with LiteGraph.Server and Docker support with a Docker image available on Docker Hub.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

auto-playwright

Auto Playwright is a tool that allows users to run Playwright tests using AI. It eliminates the need for selectors by determining actions at runtime based on plain-text instructions. Users can automate complex scenarios, write tests concurrently with or before functionality development, and benefit from rapid test creation. The tool supports various Playwright actions and offers additional options for debugging and customization. It uses HTML sanitization to reduce costs and improve text quality when interacting with the OpenAI API.

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

simpleAI

SimpleAI is a self-hosted alternative to the not-so-open AI API, focused on replicating main endpoints for LLM such as text completion, chat, edits, and embeddings. It allows quick experimentation with different models, creating benchmarks, and handling specific use cases without relying on external services. Users can integrate and declare models through gRPC, query endpoints using Swagger UI or API, and resolve common issues like CORS with FastAPI middleware. The project is open for contributions and welcomes PRs, issues, documentation, and more.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

scaleapi-python-client

The Scale AI Python SDK is a tool that provides a Python interface for interacting with the Scale API. It allows users to easily create tasks, manage projects, upload files, and work with evaluation tasks, training tasks, and Studio assignments. The SDK handles error handling and provides detailed documentation for each method. Users can also manage teammates, project groups, and batches within the Scale Studio environment. The SDK supports various functionalities such as creating tasks, retrieving tasks, canceling tasks, auditing tasks, updating task attributes, managing files, managing team members, and working with evaluation and training tasks.

For similar tasks

minuet-ai.nvim

Minuet AI is a Neovim plugin that integrates with nvim-cmp to provide AI-powered code completion using multiple AI providers such as OpenAI, Claude, Gemini, Codestral, and Huggingface. It offers customizable configuration options and streaming support for completion delivery. Users can manually invoke completion or use cost-effective models for auto-completion. The plugin requires API keys for supported AI providers and allows customization of system prompts. Minuet AI also supports changing providers, toggling auto-completion, and provides solutions for input delay issues. Integration with lazyvim is possible, and future plans include implementing RAG on the codebase and virtual text UI support.

awesome-code-ai

A curated list of AI coding tools, including code completion, refactoring, and assistants. This list includes both open-source and commercial tools, as well as tools that are still in development. Some of the most popular AI coding tools include GitHub Copilot, CodiumAI, Codeium, Tabnine, and Replit Ghostwriter.

companion-vscode

Quack Companion is a VSCode extension that provides smart linting, code chat, and coding guideline curation for developers. It aims to enhance the coding experience by offering a new tab with features like curating software insights with the team, code chat similar to ChatGPT, smart linting, and upcoming code completion. The extension focuses on creating a smooth contribution experience for developers by turning contribution guidelines into a live pair coding experience, helping developers find starter contribution opportunities, and ensuring alignment between contribution goals and project priorities. Quack collects limited telemetry data to improve its services and products for developers, with options for anonymization and disabling telemetry available to users.

CodeGeeX4

CodeGeeX4-ALL-9B is an open-source multilingual code generation model based on GLM-4-9B, offering enhanced code generation capabilities. It supports functions like code completion, code interpreter, web search, function call, and repository-level code Q&A. The model has competitive performance on benchmarks like BigCodeBench and NaturalCodeBench, outperforming larger models in terms of speed and performance.

probsem

ProbSem is a repository that provides a framework to leverage large language models (LLMs) for assigning context-conditional probability distributions over queried strings. It supports OpenAI engines and HuggingFace CausalLM models, and is flexible for research applications in linguistics, cognitive science, program synthesis, and NLP. Users can define prompts, contexts, and queries to derive probability distributions over possible completions, enabling tasks like cloze completion, multiple-choice QA, semantic parsing, and code completion. The repository offers CLI and API interfaces for evaluation, with options to customize models, normalize scores, and adjust temperature for probability distributions.

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

chatgpt

The ChatGPT R package provides a set of features to assist in R coding. It includes addins like Ask ChatGPT, Comment selected code, Complete selected code, Create unit tests, Create variable name, Document code, Explain selected code, Find issues in the selected code, Optimize selected code, and Refactor selected code. Users can interact with ChatGPT to get code suggestions, explanations, and optimizations. The package helps in improving coding efficiency and quality by providing AI-powered assistance within the RStudio environment.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.