laragenie

🤖 An AI bot made for the command line that can read and understand any codebase from your Laravel app.

Stars: 135

Laragenie is an AI chatbot designed to understand and assist developers with their codebases. It runs on the command line from a Laravel app, helping developers onboard to new projects, understand codebases, and provide daily support. Laragenie accelerates workflow and collaboration by indexing files and directories, allowing users to ask questions and receive AI-generated responses. It supports OpenAI and Pinecone for processing and indexing data, making it a versatile tool for any repo in any language.

README:

Laragenie is an AI chatbot that runs on the command line from your Laravel app. It will be able to read and understand any of your codebases following a few simple steps:

- Set up your env variables for OpenAI and Pinecone

- Publish and update the Laragenie config

- Index your files and/or full directories

- Ask your questions

It's as simple as that! Accelerate your workflow instantly and collaborate seamlessly with the quickest and most knowledgeable 'colleague' you've ever had.

This is a particularly useful CLI bot that can be used to:

- Onboard developers to new projects.

- Assist both junior and senior developers in understanding a codebase, offering a cost-effective alternative to multiple one-on-one sessions with other developers.

- Provide convenient and readily available support on a daily basis as needed.

You are not limited to indexing files based in your Laravel project. You can use this for monorepos, or indeed any repo in any language. You can also use this tool to index files that are not code-related.

All you need to do is run this CLI tool from the Laravel directory. Simple, right?! 🎉

[!NOTE]

If you are upgrading from Laragenie ^1.0.63 to 1.1 or later, there is a change to the Pinecone environment variables. Please see OpenAI and Pinecone.

- Requirements

- Installation

- Usage

- Debugging

- Changelog

- Contributing

- Security Vulnerabilities

- Credits

- Licence

For specific versions that match your PHP, Laravel and Laragenie versions please see the table below:

| PHP | Laravel version | Laragenie version |
|---|---|---|
| ^8.1 | ^10.0 | >=1.0 <1.2 |
| ^8.2 | ^10.0, ^11.0 | ^1.2.0 |

This package uses Laravel Prompts which supports macOS, Linux, and Windows with WSL. Due to limitations in the Windows version of PHP, it is not currently possible to use Laravel Prompts on Windows outside of WSL.

For this reason, Laravel Prompts supports falling back to an alternative implementation such as the Symfony Console Question Helper.

You can install the package via composer:

composer require joshembling/laragenie

You can publish and run the migrations with:

php artisan vendor:publish --tag="laragenie-migrations"

php artisan migrate

If you don't want to publish migrations, you must set both database options ('fetch' and 'save') in your Laragenie config to false (see the config file details below).

You can publish the config file with:

php artisan vendor:publish --tag="laragenie-config"

This is the contents of the published config file:

return [
    'bot' => [
        'name' => 'Laragenie', // The name of your chatbot
        'welcome' => 'Hello, I am Laragenie, how may I assist you today?', // Your welcome message
        'instructions' => 'Write in markdown format. Try to only use factual data that can be pulled from indexed chunks.', // The chatbot instructions
    ],
    'chunks' => [
        'size' => 1000, // Maximum number of characters to separate chunks
    ],
    'database' => [
        'fetch' => true, // Fetch saved answers from previous questions
        'save' => true, // Save answers to the database
    ],
    'extensions' => [ // The file types you want to index
        'php',
        'blade.php',
        'js',
    ],
    'indexes' => [
        'directories' => [], // The directories you want to index e.g. ['app/Models', 'app/Http/Controllers', '../frontend/src']
        'files' => [], // The files you want to index e.g. ['tests/Feature/MyTest.php']
        'removal' => [
            'strict' => true, // User prompt on deletion requests of indexes
        ],
    ],
    'openai' => [
        'embedding' => [
            'model' => 'text-embedding-3-small', // Text embedding model
            'max_tokens' => 5, // Maximum tokens to use when embedding
        ],
        'chat' => [
            'model' => 'gpt-4-turbo-preview', // Your OpenAI GPT model
            'temperature' => 0.1, // Set temperature between 0 and 1 (lower values will have less irrelevance)
        ],
    ],
    'pinecone' => [
        'topK' => 2, // Pinecone indexes to fetch
    ],
];

This package uses OpenAI to process and generate responses and Pinecone to index your data.
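To make the chunks.size setting more concrete, here is a minimal sketch of character-based chunking. It is an illustration only: chunkText is a hypothetical helper, not part of Laragenie, and the package's own chunking logic may differ. It assumes the mbstring extension is available.

```php
<?php

/**
 * Split text into consecutive chunks of at most $size characters.
 * Hypothetical helper for illustration; Laragenie's own chunking may differ.
 *
 * @return string[]
 */
function chunkText(string $text, int $size = 1000): array
{
    if ($size < 1) {
        throw new InvalidArgumentException('Chunk size must be at least 1.');
    }

    if ($text === '') {
        return [];
    }

    // mb_str_split counts characters rather than bytes,
    // so multibyte text is never cut in the middle of a character.
    return mb_str_split($text, $size, 'UTF-8');
}

$chunks = chunkText(str_repeat('a', 2500), 1000);
echo count($chunks), PHP_EOL; // 3 (chunks of 1000, 1000 and 500 characters)
```

A smaller size produces more, shorter chunks to embed and store; a larger size produces fewer, longer ones.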

You will need to create an OpenAI account with credits, generate an API key and add it to your .env file:

OPENAI_API_KEY=your-open-ai-key

[!IMPORTANT]

If you are using a Laragenie version prior to 1.1 and do not want to upgrade, go straight to Legacy Pinecone.

You will need to create a Pinecone account. There are two different types of account you can set up:

- Serverless

- Pod-based index

As of early 2024, Pinecone recommend you start with a serverless account. You can optionally set up an account with a payment method attached to get $100 in free credits. However, a free account allows up to 100,000 indexes, which is likely more than enough for any small to medium-sized application.

Create an index with 1536 dimensions and the metric set to 'cosine'. Then generate an API key and add these details to your .env file:

PINECONE_API_KEY=an-example-pinecone-api-key

PINECONE_INDEX_HOST='https://an-example-url.aaa.gcp-starter.pinecone.io'

Your host can be seen in the information box on your index page, alongside the metric, dimensions, pod type, cloud, region and environment.
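For readers unfamiliar with the 'cosine' metric and the pinecone.topK setting, the sketch below shows the idea in plain PHP. It is not how Pinecone or Laragenie are implemented; cosineSimilarity and topMatches are hypothetical helpers, and the vectors are toy 3-dimensional values rather than real 1536-dimension embeddings.

```php
<?php

/**
 * Cosine similarity between two equal-length numeric vectors.
 */
function cosineSimilarity(array $a, array $b): float
{
    if (count($a) !== count($b) || count($a) === 0) {
        throw new InvalidArgumentException('Vectors must be non-empty and of equal length.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;

    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value ** 2;
        $normB += $b[$i] ** 2;
    }

    if ($normA == 0.0 || $normB == 0.0) {
        return 0.0; // A zero vector has no direction; treat it as unrelated.
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

/**
 * Return the ids of the $topK stored vectors most similar to $query.
 *
 * @param array<string, float[]> $stored
 * @return string[]
 */
function topMatches(array $query, array $stored, int $topK = 2): array
{
    $scores = [];
    foreach ($stored as $id => $vector) {
        $scores[$id] = cosineSimilarity($query, $vector);
    }

    arsort($scores); // Highest similarity first, keys preserved.

    return array_slice(array_keys($scores), 0, $topK);
}

// Toy vectors standing in for embedded chunks.
$stored = [
    'User.php' => [1.0, 0.0, 0.0],
    'Post.php' => [0.0, 1.0, 0.0],
    'UserController.php' => [0.9, 0.1, 0.0],
];

print_r(topMatches([1.0, 0.0, 0.0], $stored, 2)); // User.php, then UserController.php
```

With topK set to 2, only the two closest chunks are passed on as context, which keeps prompts short at the price of possibly missing relevant material.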

[!TIP]

If you are upgrading to Laragenie ^1.1, you can safely remove the legacy environment variables: PINECONE_ENVIRONMENT and PINECONE_INDEX.

Important: If you are using Laragenie 1.0.63 or prior, you must use a regular Pinecone account and NOT a serverless account. When you are prompted to select an option on account creation, ensure you select 'Continue with pod-based index'.

Create an environment with 1536 dimensions and name it, then generate an API key and add these details to your .env file:

PINECONE_API_KEY=your-pinecone-api-key

PINECONE_ENVIRONMENT=gcp-starter

PINECONE_INDEX=your-index

Once these are set up you will be able to run the following command from your root directory:

php artisan laragenie

You will get 4 options:

- Ask a question

- Index files

- Remove indexed files

- Something else

Use the arrow keys to toggle through the options and enter to select the command.

Note: you can only ask a question once you have files indexed in your Pinecone vector database (skip to the 'Index Files' section if you wish to find out how to start indexing).

When your vector database has indexes you’ll be able to ask any questions relating to your codebase.

Answers can be generated in markdown format with code examples, or any format of your choosing. Use the bot.instructions config to write AI instructions as detailed as you need to.

Beneath each response you will see the generated cost (in US dollars), which will help keep close track of the expense. Cost of the response is added to your database, if migrations are enabled.

Costs can vary, but small responses will be less than $0.01. Much larger responses can be between $0.02–0.05.

As previously mentioned, when you have migrations enabled your questions will save to your database.

However, you may want to force AI usage (prevent fetching from the database) if you are unsatisfied with the initial answer. This will overwrite the answer already saved to the database.

To force an AI response, you will need to end all questions with an --ai flag e.g.

Tell me how users are saved to the database --ai.

This ensures the AI model reassesses your request and outputs another answer (this could be the same answer depending on the GPT model you are using).
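The interplay between saved answers and the --ai flag can be sketched as follows. This is an assumption-laden illustration rather than Laragenie's actual code: parseQuestion and answer are hypothetical helpers, the $saved array stands in for the database table, the $askModel callable stands in for the OpenAI request, and the flag is assumed to be the very last token of the question.

```php
<?php

/**
 * Split a raw question into its text and a "force AI" flag.
 *
 * @return array{question: string, forceAi: bool}
 */
function parseQuestion(string $input): array
{
    $trimmed = trim($input);
    // The flag only counts when it is a separate token at the very end.
    $forceAi = (bool) preg_match('/\s--ai$/', $trimmed);

    if ($forceAi) {
        $trimmed = rtrim(substr($trimmed, 0, -strlen('--ai')));
    }

    return ['question' => $trimmed, 'forceAi' => $forceAi];
}

/**
 * Return a saved answer unless AI usage is forced;
 * otherwise ask the model and overwrite the saved answer.
 *
 * @param array<string, string> $saved Stand-in for the answers table.
 * @param callable $askModel Stand-in for the OpenAI call (string in, string out).
 */
function answer(string $input, array &$saved, callable $askModel): string
{
    ['question' => $question, 'forceAi' => $forceAi] = parseQuestion($input);

    if (!$forceAi && isset($saved[$question])) {
        return $saved[$question];
    }

    $saved[$question] = $askModel($question);

    return $saved[$question];
}

$saved = ['How are users saved?' => 'Old answer'];
$askModel = fn (string $q): string => 'Fresh answer';

echo answer('How are users saved?', $saved, $askModel), PHP_EOL;      // Old answer
echo answer('How are users saved? --ai', $saved, $askModel), PHP_EOL; // Fresh answer
```

After the forced call, the saved entry holds the fresh answer, so later questions without the flag return it.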

The quickest way to index files is to pass in singular values to the directories or files array in the Laragenie config. When you run the 'Index Files' command you will always have the option to reindex these files. This will help in keeping your Laragenie bot up to date.

Select 'yes' when prompted with 'Do you want to index your directories and files saved in your config?'

'indexes' => [
    'directories' => ['app/Models', 'app/Http/Controllers'],
    'files' => ['tests/Feature/MyTest.php'],
    'removal' => [
        'strict' => true,
    ],
],

If you select 'no', you can also index files in the following ways:

- Inputting a file name with its namespace e.g. app/Models/User.php

- Inputting a full directory, e.g. App

  - If you pass in a directory, Laragenie can only index files within this directory, and not its subdirectories.

  - To index subdirectories you must explicitly pass the path e.g. app/Models to index all of your models

- Inputting multiple files or directories in a comma separated list e.g. app/Models, tests/Feature, app/Http/Controllers/Controller.php

- Inputting multiple directories with wildcards e.g. app/Models/*.php

  - Please note that the wildcards must still match the file extensions in your laragenie config file.
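The input rules above can be illustrated with a small sketch. It does not reproduce Laragenie's internals: parsePaths and hasAllowedExtension are hypothetical helpers showing one way a comma separated list could be split and checked against the extensions array in the config.

```php
<?php

/**
 * Split a comma separated list of paths, trimming whitespace
 * and dropping empty entries.
 *
 * @return string[]
 */
function parsePaths(string $input): array
{
    $paths = array_map('trim', explode(',', $input));

    return array_values(array_filter($paths, fn (string $p): bool => $p !== ''));
}

/**
 * Check whether a path ends with one of the configured extensions.
 * Compound extensions such as "blade.php" are handled by matching the full suffix.
 *
 * @param string[] $extensions
 */
function hasAllowedExtension(string $path, array $extensions): bool
{
    foreach ($extensions as $extension) {
        if (str_ends_with($path, '.' . $extension)) {
            return true;
        }
    }

    return false;
}

$extensions = ['php', 'blade.php', 'js'];

print_r(parsePaths('app/Models, tests/Feature, app/Http/Controllers/Controller.php'));
var_dump(hasAllowedExtension('app/Models/User.php', $extensions));     // bool(true)
var_dump(hasAllowedExtension('../frontend/src/App.tsx', $extensions)); // bool(false)
```

In this sketch a .tsx file is skipped until 'tsx' is added to the extensions list, which mirrors the note about wildcards and configured extensions.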

You may use Laragenie in any way that you wish; you are not limited to just indexing Laravel based files.

For example, your Laravel project may live in a monorepo with two root entries such as frontend and backend. In this instance, you could move up one level to index more directories and files e.g. ../frontend/src/ or ../frontend/components/Component.js.

You can add these to your directories and files in the Laragenie config:

'indexes' => [
    'directories' => ['app/Models', 'app/Http/Controllers', '../frontend/src/'],
    'files' => ['tests/Feature/MyTest.php', '../frontend/components/Component.js'],
    'removal' => [
        'strict' => true,
    ],
],

Using this same method, you could technically index any files or directories you have access to on your server or local machine.

Ensure your extensions in your Laragenie config match all the file types that you want to index.

'extensions' => [
    'php', 'blade.php', 'js', 'jsx', 'ts', 'tsx', // etc...
],

Note: if your directories, paths or file names change, Laragenie will not be able to find the index if you decide to update/remove it later on (unless you truncate your entire vector database, or go into Pinecone and delete them manually).

You can remove indexed files using the same methods listed above, with one exception: the directories and files arrays in the Laragenie config cannot be used for removal, as they are currently for indexing purposes only.

If you want to remove all files you may do so by selecting Remove all chunked data. Be warned that this will truncate your entire vector database and cannot be reversed.

To remove a comma separated list of files/directories, select the Remove data associated with a directory or specific file prompt as an option.

Strict removal, i.e. warning messages before files are removed, is on by default. You can turn it off by changing the 'strict' attribute to false in your config.

'indexes' => [
    'removal' => [
        'strict' => true,
    ],
],

You can stop Laragenie using the following methods:

- ctrl + c (Linux/Mac)

- Selecting 'No thanks, goodbye' in the user menu after at least 1 prompt has run.

Have fun using Laragenie! 🤖

- If you have correctly added the required .env variables, but get an error such as "You didn't provide an API key", you may need to clear your cache and config:

php artisan config:clear

php artisan cache:clear

- Likewise, if you get a 404 response and a Saloon exception when trying any of the four options, you are likely using a Laragenie version prior to 1.1 without a pod-based (non-serverless) Pinecone database. Please see OpenAI and Pinecone.
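Before clearing caches, it can help to confirm that no required variable is simply missing or empty. The sketch below is a generic check, not a Laragenie feature: missingVariables is a hypothetical helper, and the $env array is a hand-written stand-in for however you load your environment values. The variable names are the ones used for Laragenie 1.1 and later.

```php
<?php

/**
 * Return the names of required variables that are missing or empty.
 *
 * @param array<string, string|false|null> $env
 * @param string[] $required
 * @return string[]
 */
function missingVariables(array $env, array $required): array
{
    return array_values(array_filter(
        $required,
        fn (string $name): bool => !isset($env[$name])
            || $env[$name] === false
            || trim((string) $env[$name]) === ''
    ));
}

$required = ['OPENAI_API_KEY', 'PINECONE_API_KEY', 'PINECONE_INDEX_HOST'];

// Example values only; PINECONE_API_KEY is empty and PINECONE_INDEX_HOST is absent.
$env = [
    'OPENAI_API_KEY' => 'your-open-ai-key',
    'PINECONE_API_KEY' => '',
];

print_r(missingVariables($env, $required)); // PINECONE_API_KEY, PINECONE_INDEX_HOST
```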

Please see CHANGELOG for more information on what has changed recently.

Please see CONTRIBUTING for details.

Please review our security policy on how to report security vulnerabilities.

The MIT License (MIT). Please see License File for more information.


Alternative AI tools for laragenie

Similar Open Source Tools


json_repair

This simple package can be used to fix an invalid json string. To know all cases in which this package will work, check out the unit test. Inspired by https://github.com/josdejong/jsonrepair

Motivation

Some LLMs are a bit iffy when it comes to returning well formed JSON data, sometimes they skip a parenthesis and sometimes they add some words in it, because that's what an LLM does. Luckily, the mistakes LLMs make are simple enough to be fixed without destroying the content. I searched for a lightweight python package that was able to reliably fix this problem but couldn't find any. So I wrote one.

How to use

from json_repair import repair_json
good_json_string = repair_json(bad_json_string)
# If the string was super broken this will return an empty string

You can use this library to completely replace `json.loads()`:

import json_repair
decoded_object = json_repair.loads(json_string)

or just

import json_repair
decoded_object = json_repair.repair_json(json_string, return_objects=True)

Read json from a file or file descriptor

JSON repair provides also a drop-in replacement for `json.load()`:

import json_repair
try:
    file_descriptor = open(fname, 'rb')
except OSError:
    ...
with file_descriptor:
    decoded_object = json_repair.load(file_descriptor)

and another method to read from a file:

import json_repair
try:
    decoded_object = json_repair.from_file(json_file)
except OSError:
    ...
except IOError:
    ...

Keep in mind that the library will not catch any IO-related exception and those will need to be managed by you.

Performance considerations

If you find this library too slow because it is using `json.loads()` you can skip that by passing `skip_json_loads=True` to `repair_json`. Like:

from json_repair import repair_json
good_json_string = repair_json(bad_json_string, skip_json_loads=True)

I made a choice of not using any fast json library to avoid having any external dependency, so that anybody can use it regardless of their stack.

Some rules of thumb to use:

- Setting `return_objects=True` will always be faster because the parser returns an object already and it doesn't have to serialize that object to JSON

- `skip_json_loads` is faster only if you 100% know that the string is not a valid JSON

- If you are having issues with escaping pass the string as **raw** string like: `r"string with escaping\""`

Adding to requirements

Please pin this library only on the major version! We use TDD and strict semantic versioning, there will be frequent updates and no breaking changes in minor and patch versions. To ensure that you only pin the major version of this library in your `requirements.txt`, specify the package name followed by the major version and a wildcard for minor and patch versions. For example:

json_repair==0.*

In this example, any version that starts with `0.` will be acceptable, allowing for updates on minor and patch versions.

How it works

This module will parse the JSON file following the BNF definition:

unsight.dev

unsight.dev is a tool built on Nuxt that helps detect duplicate GitHub issues and areas of concern across related repositories. It utilizes Nitro server API routes, GitHub API, and a GitHub App, along with UnoCSS. The tool is deployed on Cloudflare with NuxtHub, using Workers AI, Workers KV, and Vectorize. It also offers a browser extension soon to be released. Users can try the app locally for tweaking the UI and setting up a full development environment as a GitHub App.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

mandark

Mandark is a lightweight AI tool that can perform various tasks, such as answering questions about codebases, editing files, verifying diffs, estimating token and cost before execution, and working with any codebase. It supports multiple AI models like Claude-3.5 Sonnet, Haiku, GPT-4o-mini, and GPT-4-turbo. Users can run Mandark without installation and easily interact with it through command line options. It offers flexibility in processing individual files or folders and allows for customization with optional AI model selection and output preferences.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

neural

Neural is a Vim and Neovim plugin that integrates various machine learning tools to assist users in writing code, generating text, and explaining code or paragraphs. It supports multiple machine learning models, focuses on privacy, and is compatible with Vim 8.0+ and Neovim 0.8+. Users can easily configure Neural to interact with third-party machine learning tools, such as OpenAI, to enhance code generation and completion. The plugin also provides commands like `:NeuralExplain` to explain code or text and `:NeuralStop` to stop Neural from working. Neural is maintained by the Dense Analysis team and comes with a disclaimer about sending input data to third-party servers for machine learning queries.

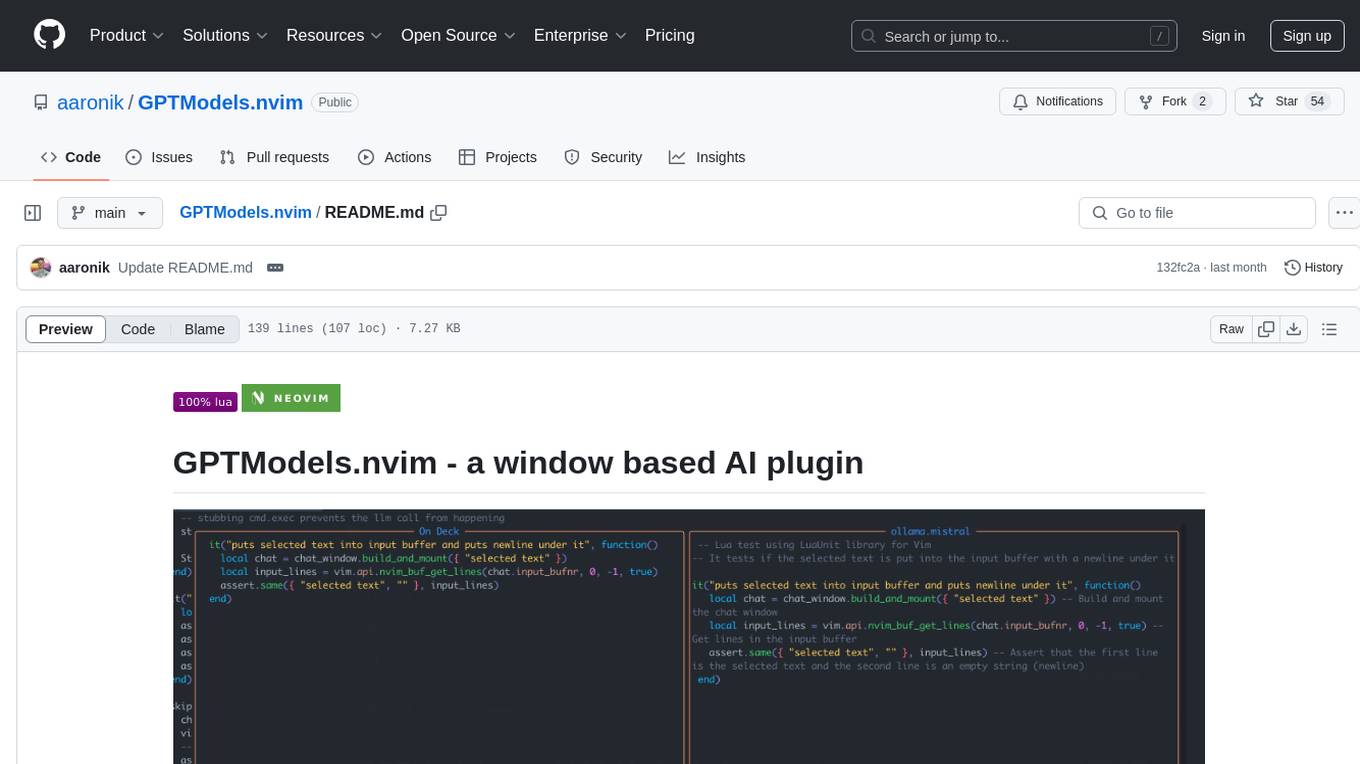

GPTModels.nvim

GPTModels.nvim is a window-based AI plugin for Neovim that enhances workflow with AI LLMs. It provides two popup windows for chat and code editing, focusing on stability and user experience. The plugin supports OpenAI and Ollama, includes LSP diagnostics, file inclusion, background processing, request cancellation, selection inclusion, and filetype inclusion. Developed with stability in mind, the plugin offers a seamless user experience with various features to streamline AI integration in Neovim.

chatgpt-vscode

ChatGPT-VSCode is a Visual Studio Code integration that allows users to prompt OpenAI's GPT-4, GPT-3.5, GPT-3, and Codex models within the editor. It offers features like using improved models via OpenAI API Key, Azure OpenAI Service deployments, generating commit messages, storing conversation history, explaining and suggesting fixes for compile-time errors, viewing code differences, and more. Users can customize prompts, quick fix problems, save conversations, and export conversation history. The extension is designed to enhance developer experience by providing AI-powered assistance directly within VS Code.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

cog-comfyui

Cog-comfyui allows users to run ComfyUI workflows on Replicate. ComfyUI is a visual programming tool for creating and sharing generative art workflows. With cog-comfyui, users can access a variety of pre-trained models and custom nodes to create their own unique artworks. The tool is easy to use and does not require any coding experience. Users simply need to upload their API JSON file and any necessary input files, and then click the "Run" button. Cog-comfyui will then generate the output image or video file.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

actions

Sema4.ai Action Server is a tool that allows users to build semantic actions in Python to connect AI agents with real-world applications. It enables users to create custom actions, skills, loaders, and plugins that securely connect any AI Assistant platform to data and applications. The tool automatically creates and exposes an API based on function declaration, type hints, and docstrings by adding '@action' to Python scripts. It provides an end-to-end stack supporting various connections between AI and user's apps and data, offering ease of use, security, and scalability.

airbyte_serverless

AirbyteServerless is a lightweight tool designed to simplify the management of Airbyte connectors. It offers a serverless mode for running connectors, allowing users to easily move data from any source to their data warehouse. Unlike the full Airbyte-Open-Source-Platform, AirbyteServerless focuses solely on the Extract-Load process without a UI, database, or transform layer. It provides a CLI tool, 'abs', for managing connectors, creating connections, running jobs, selecting specific data streams, handling secrets securely, and scheduling remote runs. The tool is scalable, allowing independent deployment of multiple connectors. It aims to streamline the connector management process and provide a more agile alternative to the comprehensive Airbyte platform.

For similar tasks

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration:

- Chat BI's Text2SQL gets augmented with context-retrieval from semantic models.

- Headless BI's query interface gets extended with natural language API.

SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions:

1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination.

2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity.

With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

googlegpt

GoogleGPT is a browser extension that brings the power of ChatGPT to Google Search. With GoogleGPT, you can ask ChatGPT questions and get answers directly in your search results. You can also use GoogleGPT to generate text, translate languages, and more. GoogleGPT is compatible with all major browsers, including Chrome, Firefox, Edge, and Safari.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.