onefilellm

Specify a GitHub or local repo, GitHub pull request, arXiv or Sci-Hub paper, YouTube transcript, or documentation URL on the web, and scrape it into a text file and the clipboard for easier LLM ingestion.

Stars: 1712

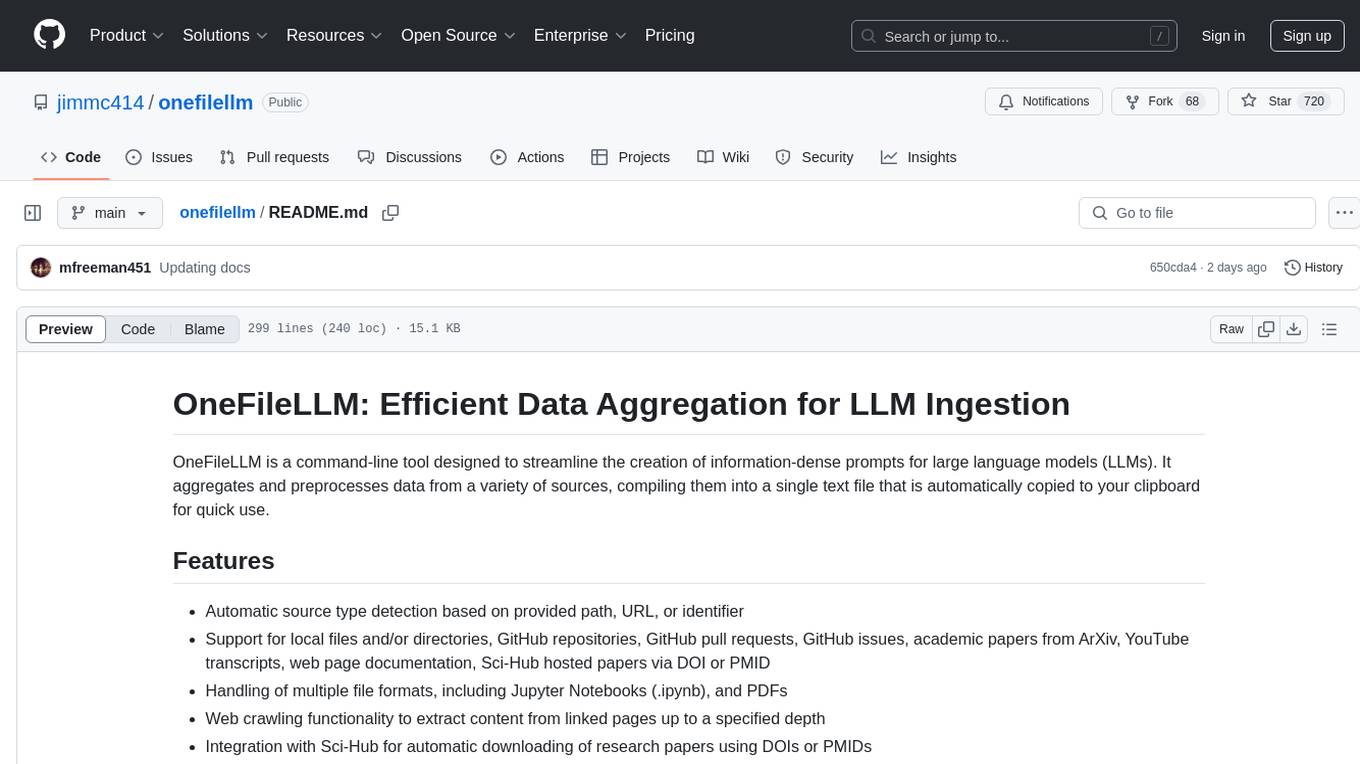

OneFileLLM is a command-line tool that streamlines the creation of information-dense prompts for large language models (LLMs). It aggregates and preprocesses data from various sources and compiles them into a single text file. It supports automatic source type detection, multiple file formats, web crawling, Sci-Hub integration for research paper downloads, text preprocessing, token count reporting, and XML encapsulation of the output, which is intended to improve LLM understanding and processing. Private GitHub repositories can be accessed with a personal access token.

README:

Content Aggregator for LLMs - Aggregate and structure multi-source data into a single XML file for LLM context.

OneFileLLM is a command-line tool that automates data aggregation from various sources (local files, GitHub repos, web pages, PDFs, YouTube transcripts, etc.) and combines them into a single, structured XML output that's automatically copied to your clipboard for use with Large Language Models.

git clone https://github.com/jimmc414/onefilellm.git

cd onefilellm

pip install -r requirements.txt

OneFileLLM is also available as a pip package. You can install it directly and use both the CLI and Python API without cloning the repository:

pip install onefilellm

This project can also be installed as a command-line tool, which allows you to run onefilellm directly from your terminal.

To install the CLI, run the following command in the project's root directory:

pip install -e .

This will install the package in "editable" mode, meaning any changes you make to the source code will be immediately available to the command-line tool.

Once installed, you can use the onefilellm command instead of python onefilellm.py.

Synopsis:

onefilellm [OPTIONS] [INPUT_SOURCES...]

Example:

onefilellm ./docs/ https://github.com/user/project/issues/123

All other command-line arguments and options work the same as the script-based approach.

For GitHub API access (recommended):

export GITHUB_TOKEN="your_personal_access_token"

After installing via pip, OneFileLLM can be invoked directly from Python code.

from onefilellm import run

# Process inputs programmatically

run(["./docs/"])

usage: onefilellm.py [-h] [-c]

[-f {text,markdown,json,html,yaml,doculing,markitdown}]

[--alias-add NAME [COMMAND_STRING ...]]

[--alias-remove NAME] [--alias-list] [--alias-list-core]

[--crawl-max-depth CRAWL_MAX_DEPTH]

[--crawl-max-pages CRAWL_MAX_PAGES]

[--crawl-user-agent CRAWL_USER_AGENT]

[--crawl-delay CRAWL_DELAY]

[--crawl-include-pattern CRAWL_INCLUDE_PATTERN]

[--crawl-exclude-pattern CRAWL_EXCLUDE_PATTERN]

[--crawl-timeout CRAWL_TIMEOUT] [--crawl-include-images]

[--crawl-no-include-code] [--crawl-no-extract-headings]

[--crawl-follow-links] [--crawl-no-clean-html]

[--crawl-no-strip-js] [--crawl-no-strip-css]

[--crawl-no-strip-comments] [--crawl-respect-robots]

[--crawl-concurrency CRAWL_CONCURRENCY]

[--crawl-restrict-path] [--crawl-no-include-pdfs]

[--crawl-no-ignore-epubs] [--help-topic [TOPIC]]

[inputs ...]

OneFileLLM - Content Aggregator for LLMs

positional arguments:

inputs Input paths, URLs, or aliases to process

options:

-h, --help show this help message and exit

-c, --clipboard Process text from clipboard

-f {text,markdown,json,html,yaml,doculing,markitdown}, --format {text,markdown,json,html,yaml,doculing,markitdown} Override format detection for text input

--help-topic [TOPIC] Show help for specific topic (basic, aliases, crawling, pipelines, examples, config)

## Quick Start Examples

### Local Files and Directories

```bash

python onefilellm.py research_paper.pdf config.yaml src/

python onefilellm.py *.py requirements.txt docs/ README.md

python onefilellm.py notebook.ipynb --format json

python onefilellm.py large_dataset.csv logs/ --format text

python onefilellm.py https://github.com/microsoft/vscode

python onefilellm.py https://github.com/openai/whisper/tree/main/whisper

python onefilellm.py https://github.com/microsoft/vscode/pull/12345

python onefilellm.py https://github.com/kubernetes/kubernetes/issues?state=all

python onefilellm.py https://github.com/kubernetes/kubernetes/issues?state=open

python onefilellm.py https://github.com/kubernetes/kubernetes/issues?state=closed

```

You can retrieve issues for a repository by specifying the state query parameter. Use state=all (default) to fetch all issues, state=open for open issues only, or state=closed for closed issues.
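As a small illustration of the state parameter described above, the sketch below builds an issues URL with an explicit filter. The helper name `issues_url` and the `VALID_STATES` set are hypothetical and only mirror the three values listed here; they are not part of OneFileLLM.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit

# The three values named in the text above.
VALID_STATES = {"all", "open", "closed"}


def issues_url(repo_url: str, state: str = "all") -> str:
    """Return the repository's issues URL with an explicit state filter."""
    if state not in VALID_STATES:
        raise ValueError(f"state must be one of {sorted(VALID_STATES)}")
    parts = urlsplit(repo_url.rstrip("/"))
    path = parts.path
    # Accept either the bare repository URL or one already ending in /issues.
    if not path.endswith("/issues"):
        path += "/issues"
    query = urlencode({"state": state})
    return urlunsplit((parts.scheme, parts.netloc, path, query, ""))


print(issues_url("https://github.com/kubernetes/kubernetes", "open"))
```

When passing such a URL on the command line, quoting it keeps the shell from treating `?` as a glob character.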

Is it possible to use this tool with different branches on a GitHub repository?

Yes. When you supply a GitHub URL that includes a branch (e.g., https://github.com/openai/whisper/tree/main/whisper), the tool parses the tree/ portion and sends the request with a ref parameter so that the specified branch or tag is retrieved.
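The branch handling described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the tool's actual code: the function names are hypothetical, the ref is assumed to contain no `/` (branch names with slashes would need extra handling), and the GitHub REST contents endpoint with its `ref` query parameter is assumed to be the request being made.

```python
from urllib.parse import urlsplit


def parse_tree_url(url: str) -> dict:
    """Split a github.com/<owner>/<repo>/tree/<ref>/<path> URL into its parts."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    if len(segments) < 2:
        raise ValueError("expected at least /<owner>/<repo> in the URL path")
    owner, repo = segments[0], segments[1]
    ref, path = None, ""
    if len(segments) >= 4 and segments[2] == "tree":
        ref = segments[3]  # assumption: the branch or tag name has no "/" in it
        path = "/".join(segments[4:])
    return {"owner": owner, "repo": repo, "ref": ref, "path": path}


def contents_api_url(parts: dict) -> str:
    """Build a contents API URL, adding ?ref= only when a ref was given."""
    base = f"https://api.github.com/repos/{parts['owner']}/{parts['repo']}/contents"
    if parts["path"]:
        base += "/" + parts["path"]
    return f"{base}?ref={parts['ref']}" if parts["ref"] else base


parts = parse_tree_url("https://github.com/openai/whisper/tree/main/whisper")
print(contents_api_url(parts))
```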

python onefilellm.py https://docs.python.org/3/tutorial/

python onefilellm.py https://react.dev/learn/thinking-in-react

python onefilellm.py https://docs.stripe.com/api

python onefilellm.py https://kubernetes.io/docs/concepts/

python onefilellm.py https://www.youtube.com/watch?v=dQw4w9WgXcQ

python onefilellm.py https://arxiv.org/abs/2103.00020

python onefilellm.py arxiv:1706.03762 PMID:35177773

python onefilellm.py doi:10.1038/s41586-021-03819-2

python onefilellm.py https://github.com/jimmc414/hey-claude https://modelcontextprotocol.io/llms-full.txt https://github.com/anthropics/anthropic-sdk-python https://github.com/anthropics/anthropic-cookbook

python onefilellm.py https://github.com/openai/whisper/tree/main/whisper https://www.youtube.com/watch?v=dQw4w9WgXcQ ALIAS_MCP

python onefilellm.py https://github.com/microsoft/vscode/pull/12345 https://arxiv.org/abs/2103.00020

python onefilellm.py https://github.com/kubernetes/kubernetes/issues https://pytorch.org/docs

python onefilellm.py --clipboard --format markdown

cat large_dataset.json | python onefilellm.py - --format json

curl -s https://api.github.com/repos/microsoft/vscode | python onefilellm.py -

echo 'Quick analysis task' | python onefilellm.py -

python onefilellm.py --alias-add mcp "https://github.com/anthropics/mcp"

python onefilellm.py --alias-add modern-web \

"https://github.com/facebook/react https://reactjs.org/docs/ https://github.com/vercel/next.js"

# Create placeholders with {}

python onefilellm.py --alias-add gh-search "https://github.com/search?q={}"

python onefilellm.py --alias-add gh-user "https://github.com/{}"

python onefilellm.py --alias-add arxiv-search "https://arxiv.org/search/?query={}"

# Use placeholders dynamically

python onefilellm.py gh-search "machine learning transformers"

python onefilellm.py gh-user "microsoft"

python onefilellm.py arxiv-search "attention mechanisms"

python onefilellm.py --alias-add ai-research \

"arxiv:1706.03762 https://github.com/huggingface/transformers https://pytorch.org/docs"

python onefilellm.py --alias-add k8s-ecosystem \

"https://github.com/kubernetes/kubernetes https://kubernetes.io/docs/ https://github.com/istio/istio"

# Combine multiple aliases with live sources

python onefilellm.py ai-research k8s-ecosystem modern-web \

conference_notes.pdf local_experiments/

python onefilellm.py --alias-list # Show all aliases

python onefilellm.py --alias-list-core # Show core aliases only

python onefilellm.py --alias-remove old-alias # Remove user alias

cat ~/.onefilellm_aliases/aliases.json # View raw JSON

--alias-add NAME [COMMAND_STRING ...] Add or update a user-defined alias. Multiple arguments after NAME will be joined as COMMAND_STRING.

--alias-remove NAME Remove a user-defined alias.

--alias-list List all effective aliases (user-defined aliases override core aliases).

--alias-list-core List only pre-shipped (core) aliases.
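The alias behaviour described above (a JSON store, user aliases overriding core ones, and `{}` placeholders filled at call time) can be sketched as below. This is a minimal illustration, not OneFileLLM's implementation: the function names and the sample `CORE_ALIASES` entry are hypothetical, the JSON file is assumed to be a flat name-to-command-string mapping, and the placeholder value is substituted without URL encoding.

```python
import json
from pathlib import Path
from typing import Optional

# Hypothetical stand-in for the pre-shipped (core) aliases.
CORE_ALIASES = {"gh_search": "https://github.com/search?q={}"}


def load_user_aliases(path: Path) -> dict:
    """Read user aliases from JSON; a missing file means no user aliases."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def effective_aliases(core: dict, user: dict) -> dict:
    """Merge both sets; user-defined entries override core ones."""
    return {**core, **user}


def expand_alias(name: str, value: Optional[str], aliases: dict) -> list:
    """Turn an alias into its list of sources, filling {} with value."""
    tokens = aliases[name].split()
    if any("{}" in token for token in tokens):
        if value is None:
            raise ValueError(f"alias {name!r} needs a value for its placeholder")
        # Substitute per token so a value containing spaces stays in one source.
        tokens = [token.replace("{}", value) for token in tokens]
    return tokens


print(expand_alias("gh_search", "microsoft", CORE_ALIASES))
```

Splitting the stored command string before substituting keeps a multi-word search term attached to its URL instead of turning it into several separate inputs.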

Web Crawler Options:

--crawl-max-depth CRAWL_MAX_DEPTH Maximum crawl depth (default: 3)

--crawl-max-pages CRAWL_MAX_PAGES Maximum pages to crawl (default: 1000)

--crawl-user-agent CRAWL_USER_AGENT User agent for web requests (default: OneFileLLMCrawler/1.1)

--crawl-delay CRAWL_DELAY Delay between requests in seconds (default: 0.25)

--crawl-include-pattern CRAWL_INCLUDE_PATTERN Regex pattern for URLs to include

--crawl-exclude-pattern CRAWL_EXCLUDE_PATTERN Regex pattern for URLs to exclude

--crawl-timeout CRAWL_TIMEOUT Request timeout in seconds (default: 20)

--crawl-include-images Include image URLs in output

--crawl-no-include-code Exclude code blocks from output

--crawl-no-extract-headings Exclude heading extraction

--crawl-follow-links Follow links to external domains

--crawl-no-clean-html Disable readability cleaning

--crawl-no-strip-js Keep JavaScript code

--crawl-no-strip-css Keep CSS styles

--crawl-no-strip-comments Keep HTML comments

--crawl-respect-robots Respect robots.txt (default: ignore for backward compatibility)

--crawl-concurrency CRAWL_CONCURRENCY Number of concurrent requests (default: 3)

--crawl-restrict-path Restrict crawl to paths under start URL

--crawl-no-include-pdfs Skip PDF files

--crawl-no-ignore-epubs Include EPUB files

python onefilellm.py https://docs.python.org/3/ \

--crawl-max-depth 4 --crawl-max-pages 800 \

--crawl-include-pattern ".*/(tutorial|library|reference)/" \

--crawl-exclude-pattern ".*/(whatsnew|faq)/"

python onefilellm.py https://docs.aws.amazon.com/ec2/ \

--crawl-max-depth 3 --crawl-max-pages 500 \

--crawl-include-pattern ".*/(UserGuide|APIReference)/" \

--crawl-respect-robots --crawl-delay 0.5

python onefilellm.py https://arxiv.org/list/cs.AI/recent \

--crawl-max-depth 2 --crawl-max-pages 100 \

--crawl-include-pattern ".*/(abs|pdf)/" \

--crawl-include-pdfs --crawl-delay 1.0

python onefilellm.py ai-research protein-folding | \

llm -m claude-3-haiku "Extract key methodologies and datasets" | \

llm -m claude-3-sonnet "Identify experimental approaches" | \

llm -m gpt-4o "Compare methodologies across papers" | \

llm -m claude-3-opus "Generate novel research directions"

python onefilellm.py \

https://github.com/competitor1/product \

https://competitor1.com/docs/ \

https://competitor2.com/api/ | \

llm -m claude-3-haiku "Extract features and capabilities" | \

llm -m gpt-4o "Compare and identify gaps" | \

llm -m claude-3-opus "Generate strategic recommendations"

0 9 * * * python onefilellm.py \

https://arxiv.org/list/cs.AI/recent \

https://arxiv.org/list/cs.LG/recent | \

llm -m claude-3-haiku "Extract significant papers" | \

llm -m claude-3-sonnet "Summarize key developments" | \

mail -s "Daily AI Research Brief" [email protected]

All output is encapsulated in XML for better LLM processing:

<onefilellm_output>

<source type="[source_type]" [additional_attributes]>

<[content_type]>

[Extracted content]

</[content_type]>

</source>

</onefilellm_output>

- Local: Files and directories

- GitHub: Repositories, issues, pull requests

- Web: Pages with advanced crawling options

- Academic: ArXiv papers, DOIs, PMIDs

- Multimedia: YouTube transcripts

- Streams: stdin, clipboard
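To make the XML envelope shown earlier concrete, here is a minimal sketch that wraps one or more sources, of the kinds listed above, in an `<onefilellm_output>` root. It is an illustration only: the function name, the dictionary keys, and the `file_content` tag are hypothetical, and `ElementTree` escapes characters such as `<` in the text, which the tool itself may or may not do.

```python
import xml.etree.ElementTree as ET


def wrap_sources(sources: list) -> str:
    """Wrap each source in <source type=...> under one <onefilellm_output> root."""
    root = ET.Element("onefilellm_output")
    for src in sources:
        # "type" comes first, followed by any additional attributes.
        attrs = {"type": src["type"], **src.get("attrs", {})}
        node = ET.SubElement(root, "source", attrs)
        content = ET.SubElement(node, src["content_tag"])
        content.text = src["text"]
    return ET.tostring(root, encoding="unicode")


example = [
    {
        "type": "local_file",           # hypothetical source type
        "attrs": {"path": "a.py"},      # hypothetical additional attribute
        "content_tag": "file_content",  # hypothetical content tag
        "text": "x < 1",
    }
]
print(wrap_sources(example))
```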

Core aliases:

- ofl_repo - OneFileLLM GitHub repository

- ofl_readme - OneFileLLM README file

- gh_search - GitHub search with placeholder

- arxiv_search - ArXiv search with placeholder

Alias Storage: ~/.onefilellm_aliases/aliases.json

Environment Variables:

- GITHUB_TOKEN - GitHub API access token

- OFFLINE_MODE - Set to 1 to skip network operations

- Can use .env file in project root
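The two environment variables above could be read along these lines. This is a sketch under assumptions rather than the tool's own configuration code: the `Settings` class and both function names are hypothetical, loading a `.env` file is left out, and the `Authorization: Bearer` header is the standard way to pass a token to the GitHub REST API.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class Settings:
    github_token: Optional[str]
    offline: bool


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read GITHUB_TOKEN and OFFLINE_MODE from the given mapping or os.environ."""
    env = os.environ if env is None else env
    token = env.get("GITHUB_TOKEN") or None       # an empty string counts as unset
    offline = env.get("OFFLINE_MODE", "") == "1"  # only "1" enables offline mode
    return Settings(github_token=token, offline=offline)


def github_headers(settings: Settings) -> dict:
    """Build request headers, adding the token only when one is configured."""
    headers = {"Accept": "application/vnd.github+json"}
    if settings.github_token:
        headers["Authorization"] = f"Bearer {settings.github_token}"
    return headers


settings = load_settings({"GITHUB_TOKEN": "example-token", "OFFLINE_MODE": "1"})
print(settings.offline, sorted(github_headers(settings)))
```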

python onefilellm.py --help-topic basic # Input sources and basic usage

python onefilellm.py --help-topic aliases # Alias system with real examples

python onefilellm.py --help-topic crawling # Web crawler patterns and ethics

python onefilellm.py --help-topic pipelines # 'llm' tool integration workflows

python onefilellm.py --help-topic examples # Advanced usage patterns

python onefilellm.py --help-topic config # Environment and configuration

YouTube transcript errors: Fetching YouTube transcripts requires the yt-dlp tool. If you see errors about yt-dlp not being found or failing, install it with: pip install yt-dlp

Alternative AI tools for onefilellm

Similar Open Source Tools

rlama

RLAMA is a powerful AI-driven question-answering tool that seamlessly integrates with local Ollama models. It enables users to create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to their documentation needs. RLAMA follows a clean architecture pattern with clear separation of concerns, focusing on lightweight and portable RAG capabilities with minimal dependencies. The tool processes documents, generates embeddings, stores RAG systems locally, and provides contextually-informed responses to user queries. Supported document formats include text, code, and various document types, with troubleshooting steps available for common issues like Ollama accessibility, text extraction problems, and relevance of answers.

ahnlich

Ahnlich is a tool that provides multiple components for storing and searching similar vectors using linear or non-linear similarity algorithms. It includes 'ahnlich-db' for in-memory vector key value store, 'ahnlich-ai' for AI proxy communication, 'ahnlich-client-rs' for Rust client, and 'ahnlich-client-py' for Python client. The tool is not production-ready yet and is still in testing phase, allowing AI/ML engineers to issue queries using raw input such as images/text and features off-the-shelf models for indexing and querying.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

NextChat

NextChat is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro. It offers a compact client for Linux, Windows, and MacOS, with features like self-deployed LLMs compatibility, privacy-first data storage, markdown support, responsive design, and fast loading speed. Users can create, share, and debug chat tools with prompt templates, access various prompts, compress chat history, and use multiple languages. The tool also supports enterprise-level privatization and customization deployment, with features like brand customization, resource integration, permission control, knowledge integration, security auditing, private deployment, and continuous updates.

starknet-agent-kit

starknet-agent-kit is a NestJS-based toolkit for creating AI agents that can interact with the Starknet blockchain. It allows users to perform various actions such as retrieving account information, creating accounts, transferring assets, playing with DeFi, interacting with dApps, and executing RPC read methods. The toolkit provides a secure environment for developing AI agents while emphasizing caution when handling sensitive information. Users can make requests to the Starknet agent via API endpoints and utilize tools from Langchain directly.

echo-editor

Echo Editor is a modern AI-powered WYSIWYG rich-text editor for Vue, featuring a beautiful UI with shadcn-vue components. It provides AI-powered writing assistance, Markdown support with real-time preview, rich text formatting, tables, code blocks, custom font sizes and styles, Word document import, I18n support, extensible architecture for creating extensions, TypeScript and Tailwind CSS support. The tool aims to enhance the writing experience by combining advanced features with user-friendly design.

code-graph

Code-graph is a tool composed of FalkorDB Graph DB, Code-Graph-Backend, and Code-Graph-Frontend. It allows users to store and query graphs, manage backend logic, and interact with the website. Users can run the components locally by setting up environment variables and installing dependencies. The tool supports analyzing C & Python source files with plans to add support for more languages in the future. It provides a local repository analysis feature and a live demo accessible through a web browser.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

Free-GPT4-WEB-API

FreeGPT4-WEB-API is a Python server that provides a self-hosted, free, and unlimited GPT-4 web API via Bing's AI. It uses the Flask and GPT4Free libraries; GPT4Free provides an interface to Bing's GPT-4. The server can be configured by editing the `FreeGPT4_Server.py` file, where you can change the port, host, and other settings. The only cookie needed for the Bing model is `_U`.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

wikipedia-mcp

The Wikipedia MCP Server is a Model Context Protocol (MCP) server that provides real-time access to Wikipedia information for Large Language Models (LLMs). It allows AI assistants to retrieve accurate and up-to-date information from Wikipedia to enhance their responses. The server offers features such as searching Wikipedia, retrieving article content, getting article summaries, extracting specific sections, discovering links within articles, finding related topics, supporting multiple languages and country codes, optional caching for improved performance, and compatibility with Google ADK agents and other AI frameworks. Users can install the server using pipx, Smithery, PyPI, virtual environment, or from source. The server can be run with various options for transport protocol, language, country/locale, caching, access token, and more. It also supports Docker and Kubernetes deployment. The server provides MCP tools for interacting with Wikipedia, such as searching articles, getting article content, summaries, sections, links, coordinates, related topics, and extracting key facts. It also supports country/locale codes and language variants for languages like Chinese, Serbian, Kurdish, and Norwegian. The server includes example prompts for querying Wikipedia and provides MCP resources for interacting with Wikipedia through MCP endpoints. The project structure includes main packages, API implementation, core functionality, utility functions, and a comprehensive test suite for reliability and functionality testing.

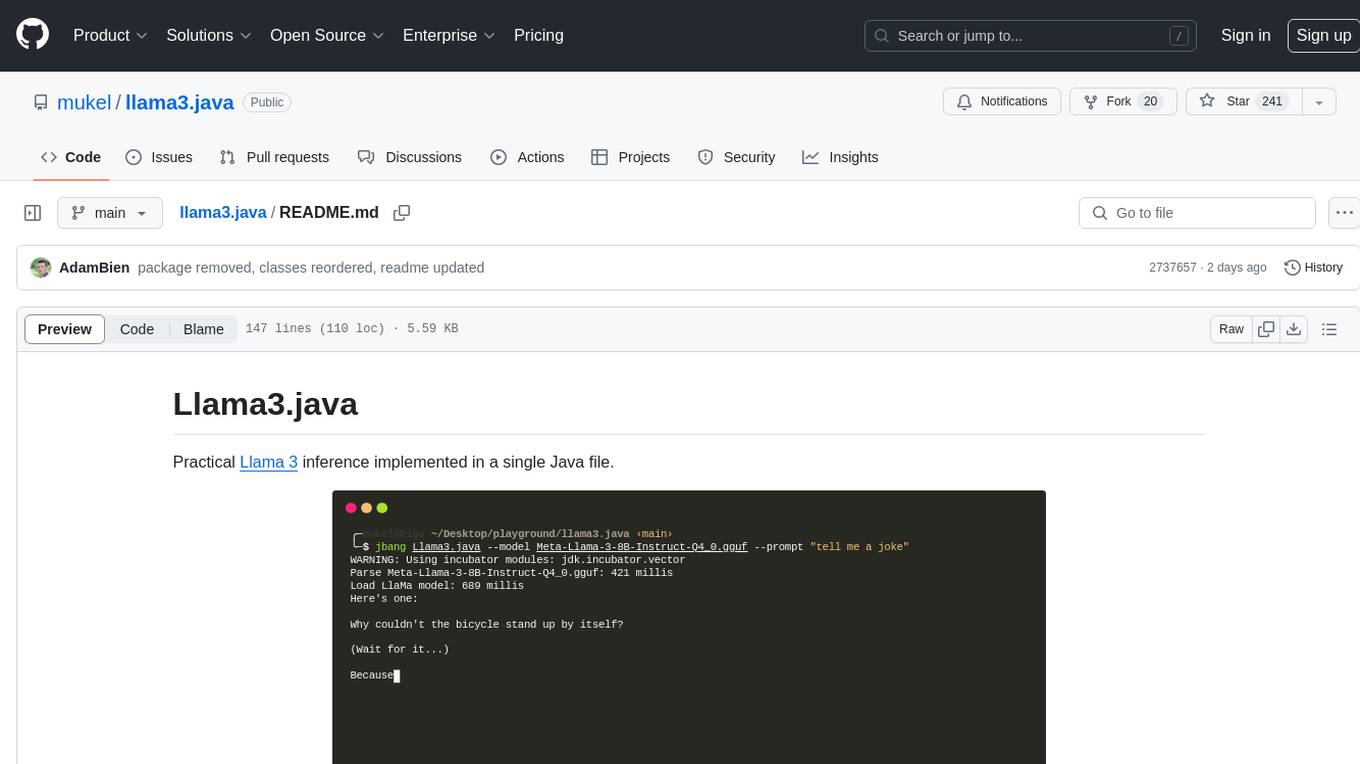

llama3.java

Llama3.java is a practical Llama 3 inference tool implemented in a single Java file. It serves as the successor of llama2.java and is designed for testing and tuning compiler optimizations and features on the JVM, especially for the Graal compiler. The tool features a GGUF format parser, Llama 3 tokenizer, Grouped-Query Attention inference, support for Q8_0 and Q4_0 quantizations, fast matrix-vector multiplication routines using Java's Vector API, and a simple CLI with 'chat' and 'instruct' modes. Users can download quantized .gguf files from huggingface.co for model usage and can also manually quantize to pure 'Q4_0'. The tool requires Java 21+ and supports running from source or building a JAR file for execution. Performance benchmarks show varying tokens/s rates for different models and implementations on different hardware setups.

celery-aio-pool

Celery AsyncIO Pool is a free software tool licensed under GNU Affero General Public License v3+. It provides an AsyncIO worker pool for Celery, enabling users to leverage the power of AsyncIO in their Celery applications. The tool allows for easy installation using Poetry, pip, or directly from GitHub. Users can configure Celery to use the AsyncIO pool provided by celery-aio-pool, or they can wait for the upcoming support for out-of-tree worker pools in Celery 5.3. The tool is actively maintained and welcomes contributions from the community.

CodeRAG

CodeRAG is an AI-powered code retrieval and assistance tool that combines Retrieval-Augmented Generation (RAG) with AI to provide intelligent coding assistance. It indexes your entire codebase for contextual suggestions based on your complete project, offering real-time indexing, semantic code search, and contextual AI responses. The tool monitors your code directory, generates embeddings for Python files, stores them in a FAISS vector database, matches user queries against the code database, and sends retrieved code context to GPT models for intelligent responses. CodeRAG also features a Streamlit web interface with a chat-like experience for easy usage.

For similar tasks

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

letsql

LETSQL is a data processing library built on top of Ibis and DataFusion to write multi-engine data workflows. It is currently in development and does not have a stable release. Users can install LETSQL from PyPI and use it to connect to data sources, read data, filter, group, and aggregate data for analysis. Contributions to the project are welcome, and the library is actively maintained with support available for any issues. LETSQL heavily relies on Ibis and DataFusion for its functionality.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

phospho

Phospho is a text analytics platform for LLM apps. It helps you detect issues and extract insights from text messages of your users or your app. You can gather user feedback, measure success, and iterate on your app to create the best conversational experience for your users.

OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.