auto-round

🎯 An accuracy-first, highly efficient quantization toolkit for LLMs, designed to minimize quality degradation across Weight-Only Quantization, MXFP4, NVFP4, GGUF, and adaptive schemes.

Stars: 845

AutoRound is an advanced weight-only quantization algorithm for low-bit LLM inference. It competes strongly with recent methods without introducing any additional inference overhead. The method uses sign gradient descent to fine-tune the rounding values and min-max values of weights in just 200 steps, often significantly outperforming SignRound at the cost of more tuning time. AutoRound works with a wide range of models and consistently delivers noticeable improvements.

README:


AutoRound is an advanced quantization toolkit designed for Large Language Models (LLMs) and Vision-Language Models (VLMs). It achieves high accuracy at ultra-low bit widths (2–4 bits) with minimal tuning by leveraging sign-gradient descent and providing broad hardware compatibility. See our papers SignRoundV1 and SignRoundV2 for more details. For usage instructions, please refer to the User Guide.
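To make the idea of tuning rounding with sign-gradient descent concrete, here is a toy sketch in plain Python. It is not AutoRound's implementation: it handles a single weight vector, keeps the scale fixed (the min-max tuning mentioned above is omitted), and uses a straight-through estimator for the rounding step. All function names are hypothetical and exist only for this illustration.

```python
import random


def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def quantize(weights, offsets, scale, qmin=-8, qmax=7):
    """Fake-quantize: scale * clip(round(w / scale + v), qmin, qmax)."""
    out = []
    for w, v in zip(weights, offsets):
        q = max(qmin, min(qmax, round(w / scale + v)))
        out.append(q * scale)
    return out


def output_error(weights, w_q, inputs):
    """Sum of squared differences between full-precision and quantized outputs."""
    err = 0.0
    for x in inputs:
        diff = sum((wq - w) * xi for wq, w, xi in zip(w_q, weights, x))
        err += diff ** 2
    return err


def tune_rounding(weights, inputs, iters=200, lr=None):
    """Tune per-weight rounding offsets in [-0.5, 0.5] with signed gradient steps."""
    lr = 1.0 / iters if lr is None else lr
    scale = max(abs(w) for w in weights) / 7  # symmetric 4-bit scale (assumption)
    offsets = [0.0] * len(weights)            # all-zero offsets reproduce plain RTN
    rtn_err = output_error(weights, quantize(weights, offsets, scale), inputs)
    best_err, best_offsets = rtn_err, list(offsets)
    for _ in range(iters):
        w_q = quantize(weights, offsets, scale)
        # Straight-through estimator: treat d(round)/dv as 1, so
        # dL/dv_i = sum over inputs of 2 * (y_q - y) * scale * x_i.
        grads = [0.0] * len(weights)
        for x in inputs:
            diff = sum((wq - w) * xi for wq, w, xi in zip(w_q, weights, x))
            for i, xi in enumerate(x):
                grads[i] += 2.0 * diff * scale * xi
        # Step by the sign of the gradient only, then clamp the offsets.
        offsets = [max(-0.5, min(0.5, v - lr * sign(g)))
                   for v, g in zip(offsets, grads)]
        err = output_error(weights, quantize(weights, offsets, scale), inputs)
        if err < best_err:  # keep the best offsets seen so far
            best_err, best_offsets = err, list(offsets)
    return rtn_err, best_err, best_offsets


random.seed(0)
weights = [random.uniform(-1, 1) for _ in range(16)]
inputs = [[random.gauss(0, 1) for _ in range(16)] for _ in range(32)]
rtn_err, tuned_err, _ = tune_rounding(weights, inputs)
print(f"RTN error: {rtn_err:.4f}, tuned error: {tuned_err:.4f}")
```

Because the loop keeps the best offsets it has seen, the tuned error can never be worse than the round-to-nearest starting point. How much it improves depends on the data.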

- [2025/12] The SignRoundV2 paper is available. Turn on `enable_alg_ext` and use the AutoScheme API for mixed-precision quantization to reproduce the results: Paper, Notes for evaluating LLaMA models.
- [2025/11] AutoRound has landed in LLM-Compressor: Usage, vLLM blog, RedHat blog, X post, Intel blog, Linkedin, WeChat, Zhihu.
- [2025/11] An enhanced GGUF quantization algorithm is available via `--enable_alg_ext`: Accuracy.
- [2025/10] AutoRound has been integrated into SGLang: Usage, LMSYS Blog, X post, Intel blog, Linkedin.
- [2025/10] A mixed-precision algorithm is available to generate schemes in minutes: Usage, Accuracy.
- [2025/09] MXFP4 and NVFP4 dtypes are available: Accuracy.
- [2025/08] An improved INT2 algorithm is available via `--enable_alg_ext`: Accuracy.
- [2025/07] GGUF format is supported: Usage.
- [2025/05] AutoRound has been integrated into vLLM: Usage, Medium blog, Xiaohongshu.
- [2025/05] AutoRound has been integrated into Transformers: Blog.
- [2025/03] The INT2-mixed DeepSeek-R1 model (~200GB) retains 97.9% accuracy: Model.

✅ Superior Accuracy: Delivers strong performance even at 2–3 bits (example models), with leading results at 4 bits (benchmark).

✅ Ecosystem Integration: Works seamlessly with Transformers, vLLM, SGLang and more.

✅ Multiple Formats Export: Supports AutoRound, AutoAWQ, AutoGPTQ, and GGUF for maximum compatibility. Details are shown in export formats.

✅ Fast Mixed Bits/Dtypes Scheme Generation: Automatically configures a scheme in minutes, with about 1.1X-1.5X the model's BF16 RAM size as overhead. See the accuracy results and user guide.

✅ Optimized Round-to-Nearest Mode: Use `--iters 0` for fast quantization with some accuracy drop at 4 bits. Details are shown in opt_rtn mode.

✅ Affordable Quantization Cost: Quantize 7B models in about 10 minutes on a single GPU. Details are shown in quantization costs.

✅ 10+ VLMs Support: Out-of-the-box quantization for 10+ vision-language models (example models, support matrix).

✅ Multiple Recipes: Choose from auto-round-best, auto-round, and auto-round-light to suit your needs. Details are shown in quantization recipes.

✅ Advanced Utilities: Includes multi-GPU quantization, multiple calibration datasets, and support for 10+ runtime backends.

✅ Beyond Weight-Only Quantization: We are actively expanding support for additional datatypes such as MXFP, NVFP, W8A8, and more.
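The scheme-generation overhead quoted in the feature list (about 1.1X-1.5X the model's BF16 RAM size) is easy to turn into a rough number. The sketch below assumes BF16 stores 2 bytes per parameter and reports decimal gigabytes. The helper names are hypothetical, and the result is only a back-of-the-envelope estimate, not a measurement.

```python
def bf16_size_gb(num_params):
    """BF16 uses 2 bytes per parameter; return the size in decimal GB."""
    return num_params * 2 / 1e9


def scheme_generation_overhead_gb(num_params, low=1.1, high=1.5):
    """Apply the 1.1X-1.5X range from the README to the BF16 size."""
    base = bf16_size_gb(num_params)
    return base * low, base * high


params = 8e9  # e.g. an 8B-parameter model
low, high = scheme_generation_overhead_gb(params)
print(f"BF16 size: {bf16_size_gb(params):.1f} GB, "
      f"estimated overhead: {low:.1f}-{high:.1f} GB")
```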

# CPU/Intel GPU/CUDA

pip install auto-round

# HPU

pip install auto-round-hpu

Build from Source

# CPU/Intel GPU/CUDA

pip install .

# HPU

python setup.py install hpu

If you encounter issues during quantization, try using pure RTN mode with iters=0, disable_opt_rtn=True. Additionally, using group_size=32 or mixed bits is recommended for better results.
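To see why a smaller group_size can help, the following plain-Python sketch runs an asymmetric round-to-nearest quantizer with one scale and minimum per group and compares group sizes 128 and 32. It is an illustration only: the function names are hypothetical, and it implements neither AutoRound's optimized RTN mode nor its packing format.

```python
import random


def rtn_groupwise(weights, bits=4, group_size=128):
    """Asymmetric round-to-nearest with one (scale, minimum) pair per group."""
    levels = 2 ** bits - 1
    dequantized, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels or 1.0  # guard against constant groups
        scales.append(scale)
        for w in group:
            q = max(0, min(levels, round((w - lo) / scale)))
            dequantized.append(q * scale + lo)
    return dequantized, scales


def mean_sq_error(a, b):
    """Mean squared error between two equally long sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


random.seed(0)
weights = [random.gauss(0, 0.02) for _ in range(1024)]
for group_size in (128, 32):
    deq, scales = rtn_groupwise(weights, bits=4, group_size=group_size)
    print(f"group_size={group_size}: max scale={max(scales):.6f}, "
          f"MSE={mean_sq_error(weights, deq):.3e}")
```

Each group of 32 sits inside a group of 128, so its value range, and therefore its step size, can only be equal or smaller. That tighter step is what bounds the rounding error, at the price of storing more scales.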

The full list of supported arguments is provided by calling auto-round -h on the terminal.

ModelScope is supported for model downloads; simply set AR_USE_MODELSCOPE=1.
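If the CLI is launched from a Python script, one way to apply this setting is to pass it through the child process environment. This is a minimal sketch: the helper names are hypothetical, and `auto-round -h` is used only because it is the one invocation named above. `run_cli` is defined but not called here, since it needs auto-round installed.

```python
import os
import subprocess


def modelscope_env(base_env=None):
    """Return a copy of the environment with AR_USE_MODELSCOPE=1 added."""
    env = dict(os.environ if base_env is None else base_env)
    env["AR_USE_MODELSCOPE"] = "1"
    return env


def run_cli(args):
    """Run the auto-round CLI with ModelScope downloads enabled."""
    return subprocess.run(["auto-round", *args], env=modelscope_env(), check=True)


# Example call (requires auto-round to be installed): run_cli(["-h"])
print(modelscope_env({})["AR_USE_MODELSCOPE"])
```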

auto-round \
    --model Qwen/Qwen3-0.6B \
    --scheme "W4A16" \
    --format "auto_round" \
    --output_dir ./tmp_autoround

We offer two other recipes, auto-round-best and auto-round-light, designed for optimal accuracy and improved speed, respectively. Details are as follows.

Other Recipes

# Best accuracy, 3X slower; low_gpu_mem_usage could save ~20G but is ~30% slower
auto-round-best \
    --model Qwen/Qwen3-0.6B \
    --scheme "W4A16" \
    --low_gpu_mem_usage

# 2-3X speedup, slight accuracy drop at W4 and larger accuracy drop at W2
auto-round-light \
    --model Qwen/Qwen3-0.6B \
    --scheme "W4A16"

In conclusion, we recommend using auto-round for W4A16 and auto-round-best with enable_alg_ext for W2A16. However, you may adjust the configuration to suit your specific requirements and available resources.
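The recommendation above can be written as a small lookup that turns a scheme into a command line. This is a sketch rather than part of the toolkit. The table and function names are hypothetical, and falling back to plain auto-round for schemes the recommendation does not mention is an assumption of this example.

```python
# Recipe recommendations taken from the paragraph above.
RECOMMENDED_RECIPES = {
    "W4A16": ("auto-round", []),
    "W2A16": ("auto-round-best", ["--enable_alg_ext"]),
}


def recipe_command(model, scheme):
    """Build the argv list for the recommended recipe of a given scheme."""
    # Assumption: schemes without an explicit recommendation use `auto-round`.
    command, extra_flags = RECOMMENDED_RECIPES.get(scheme, ("auto-round", []))
    return [command, "--model", model, "--scheme", scheme, *extra_flags]


for scheme in ("W4A16", "W2A16"):
    print(" ".join(recipe_command("Qwen/Qwen3-0.6B", scheme)))
```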

from auto_round import AutoRound

# Load a model (supports FP8/BF16/FP16/FP32)
model_name_or_path = "Qwen/Qwen3-0.6B"

# Available schemes: "W2A16", "W3A16", "W4A16", "W8A16", "NVFP4", "MXFP4" (no real kernels), "GGUF:Q4_K_M", etc.
ar = AutoRound(model_name_or_path, scheme="W4A16")

# Highest accuracy (4–5× slower).
# `low_gpu_mem_usage=True` saves ~20GB VRAM but runs ~30% slower.
# ar = AutoRound(model_name_or_path, nsamples=512, iters=1000, low_gpu_mem_usage=True)

# Faster quantization (2–3× speedup) with slight accuracy drop at W4G128.
# ar = AutoRound(model_name_or_path, nsamples=128, iters=50, lr=5e-3)

# Supported formats: "auto_round" (default), "auto_gptq", "auto_awq", "llm_compressor", "gguf:q4_k_m", etc.
ar.quantize_and_save(output_dir="./qmodel", format="auto_round")

Important Hyperparameters

- `scheme` (str|dict|AutoScheme): The predefined quantization keys, e.g. `W4A16`, `MXFP4`, `NVFP4`, `GGUF:Q4_K_M`. For MXFP4/NVFP4, we recommend exporting to LLM-Compressor format.
- `bits` (int): Number of bits for quantization (default is `None`). If not None, it overrides the scheme setting.
- `group_size` (int): Size of the quantization group (default is `None`). If not None, it overrides the scheme setting.
- `sym` (bool): Whether to use symmetric quantization (default is `None`). If not None, it overrides the scheme setting.
- `layer_config` (dict): Configuration for layer-wise schemes (default is `None`), mainly for customized mixed schemes.

- `enable_alg_ext` (bool): [Experimental Feature] Only for `iters>0`. Enables algorithm variants for specific schemes (e.g., MXFP4/W2A16) that could bring notable improvements. Default is `False`.
- `disable_opt_rtn` (bool|None): Use pure RTN mode for specific schemes (e.g., GGUF and WOQ). Default is `None`. If None, it defaults to `False` in most cases to improve accuracy, but may be set to `True` due to known issues.

- `iters` (int): Number of tuning iterations (default is `200`). Common values: 0 (RTN mode), 50 (with lr=5e-3 recommended), 1000. Higher values increase accuracy but slow down tuning.
- `lr` (float): The learning rate for rounding values (default is `None`). When None, it is set to `1.0/iters` automatically.
- `batch_size` (int): Batch size for training (default is `8`). 4 is also commonly used.
- `enable_deterministic_algorithms` (bool): Whether to enable deterministic algorithms for reproducibility (default is `False`).

- `dataset` (str|list|tuple|torch.utils.data.DataLoader): The dataset for tuning (default is `"NeelNanda/pile-10k"`). Supports local JSON files and dataset combinations, e.g. `"./tmp.json,NeelNanda/pile-10k:train,mbpp:train+validation+test"`.
- `nsamples` (int): Number of samples for tuning (default is `128`).
- `seqlen` (int): Sequence length of the tuning data (default is `2048`).

- `enable_torch_compile` (bool): We generally recommend setting it to True, provided it raises no exception, for faster quantization with lower resource usage.
- `low_gpu_mem_usage` (bool): Whether to offload intermediate features to CPU at the cost of ~20% more tuning time (default is `False`).
- `low_cpu_mem_usage` (bool): [Experimental Feature] Whether to save layers immediately to reduce RAM usage (default is `True`).
- `device_map` (str|dict|int): The device to be used for tuning, e.g., `auto`, `cpu`, `cuda`, `0,1,2` (default is `0`). When using `auto`, it tries to use all available GPUs.
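Two conventions from the list above, the `lr` default of `1.0/iters` and the comma-separated `dataset` string, can be sketched in a few lines. These helpers are hypothetical and only mirror the documented behavior. They are not AutoRound's own parsing code, and treating `iters=0` as "no learning rate" is an assumption of this example.

```python
def resolve_lr(iters, lr=None):
    """Return the explicit lr, or 1.0 / iters when lr is left as None."""
    if lr is not None:
        return lr
    if iters <= 0:
        return None  # assumption: RTN mode (iters=0) tunes nothing, so no lr applies
    return 1.0 / iters


def parse_dataset_spec(spec):
    """Split 'name[:split+split...]' entries separated by commas."""
    entries = []
    for item in spec.split(","):
        name, _, splits = item.strip().partition(":")
        entries.append((name, splits.split("+") if splits else []))
    return entries


print(resolve_lr(200))
print(parse_dataset_spec("./tmp.json,NeelNanda/pile-10k:train,mbpp:train+validation+test"))
```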

| Format | Supported Schemes |
|---|---|
| auto_round | W4A16 (Recommended), W2A16, W3A16, W8A16, W2A16G64, W2A16G32, MXFP4, MXFP8, MXFP4_RCEIL, MXFP8_RCEIL, NVFP4, FPW8A16, FP8_STATIC, BF16 |
| auto_awq | W4A16 (Recommended), BF16 |
| auto_gptq | W4A16 (Recommended), W2A16, W3A16, W8A16, W2A16G64, W2A16G32, BF16 |
| llm_compressor | NVFP4 (Recommended), MXFP4, MXFP8, FPW8A16, FP8_STATIC |
| gguf | GGUF:Q4_K_M (Recommended), GGUF:Q2_K_S, GGUF:Q3_K_S, GGUF:Q3_K_M, GGUF:Q3_K_L, GGUF:Q4_K_S, GGUF:Q5_K_S, GGUF:Q5_K_M, GGUF:Q6_K, GGUF:Q4_0, GGUF:Q4_1, GGUF:Q5_0, GGUF:Q5_1, GGUF:Q8_0 |
| fake | all schemes (only for research) |
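For scripting, the table above can be transcribed into a lookup that answers "which export formats accept this scheme?". The sketch below copies the table as it stands here. The dictionary and function names are hypothetical, matching is exact and case-sensitive, and the table may change between releases.

```python
GGUF_TYPES = ["Q4_K_M", "Q2_K_S", "Q3_K_S", "Q3_K_M", "Q3_K_L", "Q4_K_S", "Q5_K_S",
              "Q5_K_M", "Q6_K", "Q4_0", "Q4_1", "Q5_0", "Q5_1", "Q8_0"]

# Format -> supported schemes, transcribed from the table above.
SUPPORTED = {
    "auto_round": {"W4A16", "W2A16", "W3A16", "W8A16", "W2A16G64", "W2A16G32",
                   "MXFP4", "MXFP8", "MXFP4_RCEIL", "MXFP8_RCEIL", "NVFP4",
                   "FPW8A16", "FP8_STATIC", "BF16"},
    "auto_awq": {"W4A16", "BF16"},
    "auto_gptq": {"W4A16", "W2A16", "W3A16", "W8A16", "W2A16G64", "W2A16G32", "BF16"},
    "llm_compressor": {"NVFP4", "MXFP4", "MXFP8", "FPW8A16", "FP8_STATIC"},
    "gguf": {f"GGUF:{name}" for name in GGUF_TYPES},
}

# The scheme marked "(Recommended)" for each format.
RECOMMENDED = {
    "auto_round": "W4A16",
    "auto_awq": "W4A16",
    "auto_gptq": "W4A16",
    "llm_compressor": "NVFP4",
    "gguf": "GGUF:Q4_K_M",
}


def formats_for(scheme):
    """Return the sorted export formats whose table row lists the scheme."""
    matches = [fmt for fmt, schemes in SUPPORTED.items() if scheme in schemes]
    return sorted(matches + ["fake"])  # "fake" accepts every scheme (research only)


print(formats_for("NVFP4"))
print(formats_for("GGUF:Q4_K_M"))
```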

AutoScheme provides an automatic algorithm to generate adaptive mixed bits/data-type quantization recipes. Please refer to the user guide for more details on AutoScheme.

from auto_round import AutoRound, AutoScheme

model_name = "Qwen/Qwen3-8B"
avg_bits = 3.0
scheme = AutoScheme(avg_bits=avg_bits, options=("GGUF:Q2_K_S", "GGUF:Q4_K_S"), ignore_scale_zp_bits=True)
layer_config = {"lm_head": "GGUF:Q6_K"}

# Change iters to 200 for non-GGUF schemes
ar = AutoRound(model=model_name, scheme=scheme, layer_config=layer_config, iters=0)
ar.quantize_and_save()

Important Hyperparameters of AutoScheme

- `avg_bits` (float): Target average bit-width for the entire model. Only quantized layers are included in the average bit calculation.
- `options` (str | list[str] | list[QuantizationScheme]): Candidate quantization schemes to choose from. It can be a single comma-separated string (e.g., `"W4A16,W2A16"`), a list of strings (e.g., `["W4A16", "W2A16"]`), or a list of `QuantizationScheme` objects.
- `ignore_scale_zp_bits` (bool): Only supported in API usage. Determines whether to exclude the bits of scale and zero-point from the average bit-width calculation (default: `False`).
- `shared_layers` (Iterable[Iterable[str]], optional): Only supported in API usage. Defines groups of layers that share quantization settings.
- `batch_size` (int, optional): Only supported in API usage. Can be set to `1` to reduce VRAM usage at the expense of longer tuning time.
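The effect of `avg_bits` and `ignore_scale_zp_bits` can be illustrated with a parameter-weighted average over the quantized layers. This is a sketch of the arithmetic only, not AutoScheme's actual accounting. The function name is hypothetical, and the per-group cost of a 16-bit scale plus a 4-bit zero-point is an assumption chosen for the example.

```python
def average_bits(layers, ignore_scale_zp_bits=False, scale_bits=16, zp_bits=4):
    """Parameter-weighted average bit-width.

    layers: list of (num_params, weight_bits, group_size) for quantized layers.
    scale_bits / zp_bits: assumed per-group metadata cost (not from the README).
    """
    total_bits = 0.0
    total_params = 0
    for num_params, weight_bits, group_size in layers:
        bits_per_weight = weight_bits
        if not ignore_scale_zp_bits:
            # Each group of `group_size` weights shares one scale and zero-point.
            bits_per_weight += (scale_bits + zp_bits) / group_size
        total_bits += num_params * bits_per_weight
        total_params += num_params
    return total_bits / total_params


# Two equally sized layers, one at 4 bits and one at 2 bits, group size 128.
layers = [(1000, 4, 128), (1000, 2, 128)]
print(average_bits(layers, ignore_scale_zp_bits=True))
print(average_bits(layers, ignore_scale_zp_bits=False))
```

With the metadata ignored, the two layers average exactly to the 3.0-bit target. Counting scales and zero-points pushes the figure higher, so the same target leaves less room for high-precision layers.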


This feature is experimental and may be subject to changes.

By default, AutoRound quantizes only the text module of VLMs and uses NeelNanda/pile-10k for calibration. To quantize the entire model, you can enable quant_nontext_module by setting it to True, though support for this feature is limited. For more information, please refer to the AutoRound readme.

from auto_round import AutoRound

# Load the model
model_name_or_path = "Qwen/Qwen2.5-VL-7B-Instruct"

# Quantize the model
ar = AutoRound(model_name_or_path, scheme="W4A16")
output_dir = "./qmodel"
ar.quantize_and_save(output_dir)

from vllm import LLM, SamplingParams

prompts = [
    "Hello, my name is",
]
sampling_params = SamplingParams(temperature=0.6, top_p=0.95)
model_name = "Intel/DeepSeek-R1-0528-Qwen3-8B-int4-AutoRound"
llm = LLM(model=model_name)
outputs = llm.generate(prompts, sampling_params)

for output in outputs:
    prompt = output.prompt
    generated_text = output.outputs[0].text
    print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

Please note that support for MoE models and vision-language models is currently limited.

import sglang as sgl

llm = sgl.Engine(model_path="Intel/DeepSeek-R1-0528-Qwen3-8B-int4-AutoRound")
prompts = [
    "Hello, my name is",
]
sampling_params = {"temperature": 0.6, "top_p": 0.95}
outputs = llm.generate(prompts, sampling_params)

for prompt, output in zip(prompts, outputs):
    print(f"Prompt: {prompt}\nGenerated text: {output['text']}")

AutoRound supports 10+ backends and automatically selects the best available one based on the installed libraries, prompting the user to install additional libraries when a better backend is found.

Please avoid manually moving the quantized model to a different device (e.g., model.to('cpu')) during inference, as this may cause unexpected exceptions.

Support for Gaudi devices is limited.

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Intel/DeepSeek-R1-0528-Qwen3-8B-int4-AutoRound"
model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", torch_dtype="auto")
tokenizer = AutoTokenizer.from_pretrained(model_name)
text = "There is a girl who likes adventure,"
inputs = tokenizer(text, return_tensors="pt").to(model.device)
print(tokenizer.decode(model.generate(**inputs, max_new_tokens=50)[0]))

SignRoundV2: Closing the Performance Gap in Extremely Low-Bit Post-Training Quantization for LLMs (2025.12 paper)

Optimize Weight Rounding via Signed Gradient Descent for the Quantization of LLM (2023.09 paper)

TEQ: Trainable Equivalent Transformation for Quantization of LLMs (2023.10 paper)

Effective Post-Training Quantization for Large Language Models (2023.04 blog)

Check out Full Publication List.

Special thanks to open-source low-precision libraries such as AutoGPTQ, AutoAWQ, GPTQModel, Triton, Marlin, and ExLLaMAV2 for providing the low-precision CUDA kernels that AutoRound leverages.

If you find AutoRound helpful, please ⭐ star the repo and share it with your community!


Alternative AI tools for auto-round

Similar Open Source Tools


graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

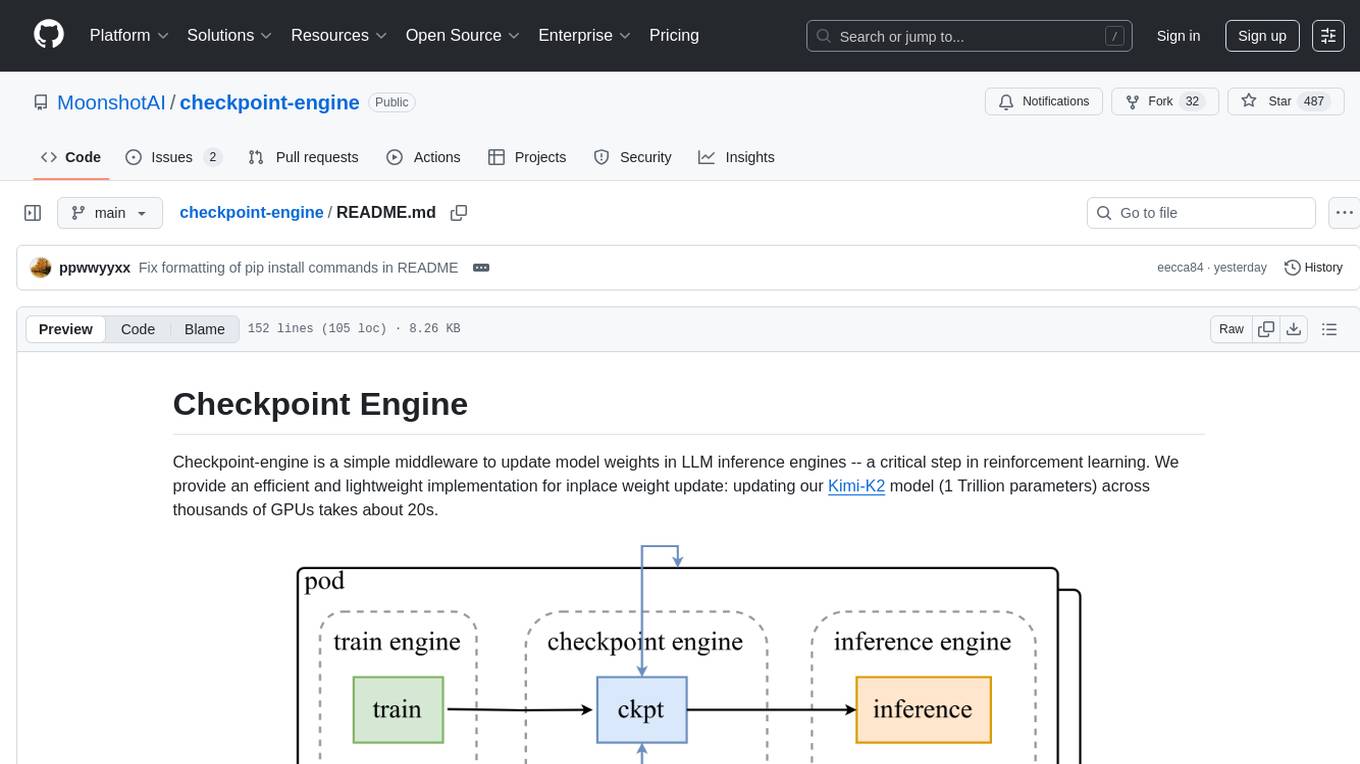

checkpoint-engine

Checkpoint-engine is a middleware tool designed for updating model weights in LLM inference engines efficiently. It provides implementations for both Broadcast and P2P weight update methods, orchestrating the transfer process and controlling the inference engine through ZeroMQ socket. The tool optimizes weight broadcast by arranging data transfer into stages and organizing transfers into a pipeline for performance. It supports flexible installation options and is tested with various models and device setups. Checkpoint-engine also allows reusing weights from existing instances and provides a patch for FP8 quantization in vLLM.

Awesome-Efficient-MoE

Awesome Efficient MoE is a GitHub repository that provides an implementation of Mixture of Experts (MoE) models for efficient deep learning. The repository includes code for training and using MoE models, which are neural network architectures that combine multiple expert networks to improve performance on complex tasks. MoE models are particularly useful for handling diverse data distributions and capturing complex patterns in data. The implementation in this repository is designed to be efficient and scalable, making it suitable for training large-scale MoE models on modern hardware. The code is well-documented and easy to use, making it accessible for researchers and practitioners interested in leveraging MoE models for their deep learning projects.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

chinese-llm-benchmark

The Chinese LLM Benchmark is a continuous evaluation list of large models in CLiB, covering a wide range of commercial and open-source models from various companies and research institutions. It supports multidimensional evaluation of capabilities including classification, information extraction, reading comprehension, data analysis, Chinese encoding efficiency, and Chinese instruction compliance. The benchmark not only provides capability score rankings but also offers the original output results of all models for interested individuals to score and rank themselves.

airllm

AirLLM is a tool that optimizes inference memory usage, enabling large language models to run on low-end GPUs without quantization, distillation, or pruning. It supports models like Llama3.1 on 8GB VRAM. The tool offers model compression for up to 3x inference speedup with minimal accuracy loss. Users can specify compression levels, profiling modes, and other configurations when initializing models. AirLLM also supports prefetching and disk space management. It provides examples and notebooks for easy implementation and usage.

transformers

Transformers is a state-of-the-art pretrained models library that acts as the model-definition framework for machine learning models in text, computer vision, audio, video, and multimodal tasks. It centralizes model definition for compatibility across various training frameworks, inference engines, and modeling libraries. The library simplifies the usage of new models by providing simple, customizable, and efficient model definitions. With over 1M Transformers model checkpoints available, users can easily find and use models for their tasks.

youtu-graphrag

Youtu-GraphRAG is a vertically unified agentic paradigm that connects the entire framework based on graph schema, allowing seamless domain transfer with minimal intervention. It introduces key innovations like schema-guided hierarchical knowledge tree construction, dually-perceived community detection, agentic retrieval, advanced construction and reasoning capabilities, fair anonymous dataset 'AnonyRAG', and unified configuration management. The framework demonstrates robustness with lower token cost and higher accuracy compared to state-of-the-art methods, enabling enterprise-scale deployment with minimal manual intervention for new domains.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

vllm

vLLM is a fast and easy-to-use library for LLM inference and serving. It is designed to be efficient, flexible, and easy to use. vLLM can be used to serve a variety of LLM models, including Hugging Face models. It supports a variety of decoding algorithms, including parallel sampling, beam search, and more. vLLM also supports tensor parallelism for distributed inference and streaming outputs. It is open-source and available on GitHub.

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

LightLLM

LightLLM is a Python-based LLM inference and serving framework, notable for its lightweight design, easy scalability, and high-speed performance.

GPTQModel

GPTQModel is an easy-to-use LLM quantization and inference toolkit based on the GPTQ algorithm. It provides support for weight-only quantization and offers features such as dynamic per layer/module flexible quantization, sharding support, and auto-heal quantization errors. The toolkit aims to ensure inference compatibility with HF Transformers, vLLM, and SGLang. It offers various model supports, faster quant inference, better quality quants, and security features like hash check of model weights. GPTQModel also focuses on faster quantization, improved quant quality as measured by PPL, and backports bug fixes from AutoGPTQ.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

Main

This repository contains material related to the new book _Synthetic Data and Generative AI_ by the author, including code for NoGAN, DeepResampling, and NoGAN_Hellinger. NoGAN is a tabular data synthesizer that outperforms GenAI methods in terms of speed and results, utilizing state-of-the-art quality metrics. DeepResampling is a fast NoGAN based on resampling and Bayesian Models with hyperparameter auto-tuning. NoGAN_Hellinger combines NoGAN and DeepResampling with the Hellinger model evaluation metric.

For similar tasks


aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

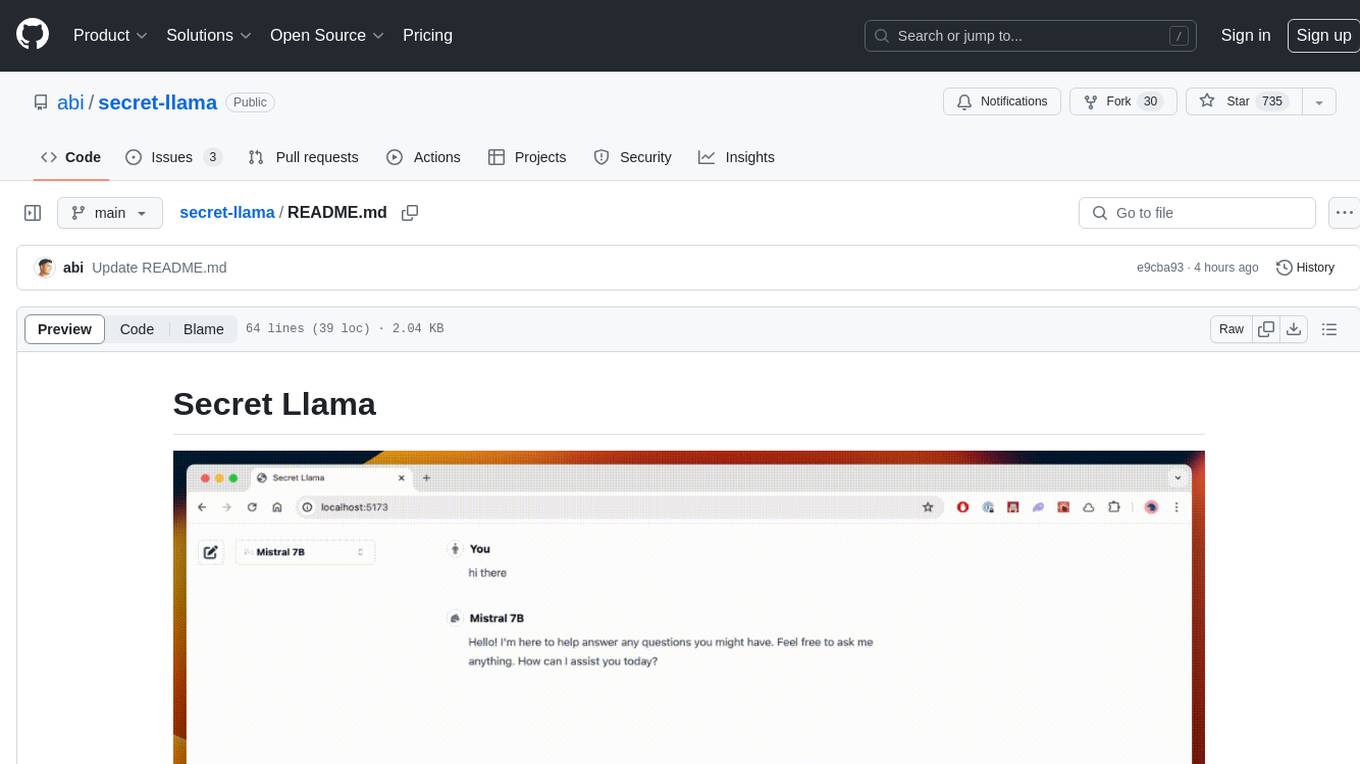

secret-llama

Entirely-in-browser, fully private LLM chatbot supporting Llama 3, Mistral and other open source models. Fully private = No conversation data ever leaves your computer. Runs in the browser = No server needed and no install needed! Works offline. Easy-to-use interface on par with ChatGPT, but for open source LLMs. System requirements include a modern browser with WebGPU support. Supported models include TinyLlama-1.1B-Chat-v0.4-q4f32_1-1k, Llama-3-8B-Instruct-q4f16_1, Phi1.5-q4f16_1-1k, and Mistral-7B-Instruct-v0.2-q4f16_1. Looking for contributors to improve the interface, support more models, speed up initial model loading time, and fix bugs.

baal

Baal is an active learning library that supports both industrial applications and research use cases. It provides a framework for Bayesian active learning methods such as Monte-Carlo Dropout, MCDropConnect, Deep ensembles, and Semi-supervised learning. Baal helps in labeling the most uncertain items in the dataset pool to improve model performance and reduce annotation effort. The library is actively maintained by a dedicated team and has been used in various research papers for production and experimentation.

LLM-Fine-Tuning

This GitHub repository contains examples of fine-tuning open source large language models. It showcases the process of fine-tuning and quantizing large language models using efficient techniques like Lora and QLora. The repository serves as a practical guide for individuals looking to optimize the performance of language models through fine-tuning.

magpie

This is the official repository for 'Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing'. Magpie is a tool designed to synthesize high-quality instruction data at scale by extracting it directly from an aligned Large Language Models (LLMs). It aims to democratize AI by generating large-scale alignment data and enhancing the transparency of model alignment processes. Magpie has been tested on various model families and can be used to fine-tune models for improved performance on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench.

DistillKit

DistillKit is an open-source research effort by Arcee.AI focusing on model distillation methods for Large Language Models (LLMs). It provides tools for improving model performance and efficiency through logit-based and hidden states-based distillation methods. The tool supports supervised fine-tuning and aims to enhance the adoption of open-source LLM distillation techniques.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.