AIStudioToAPI

A wrapper that exposes Google AI Studio as OpenAI-, Gemini-, and Anthropic-compatible APIs.

Stars: 489

AIStudioToAPI wraps the Google AI Studio web interface so that it can be called through OpenAI-, Gemini-, and Anthropic-compatible APIs. It acts as a proxy that converts API requests into browser interactions with the AI Studio web interface. It provides API-key authentication, tool calls on all three interfaces, access through AI Studio to Gemini models (including image-generation and TTS speech-synthesis models), and a web console for account management and VNC login.

README:


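A tool that wraps the Google AI Studio web interface behind endpoints compatible with the OpenAI API, Gemini API, and Anthropic API. The service acts as a proxy, converting API requests into browser interactions with the AI Studio web interface.

- 🔄 API compatibility: compatible with the OpenAI API, Gemini API, and Anthropic API formats
- 🌐 Web automation: uses browser automation to interact with the AI Studio web interface
- 🔐 Authentication: secure authentication based on API keys
- 🔧 Tool calls: the OpenAI, Gemini, and Anthropic interfaces all support tool calls (function calling)
- 📝 Model support: access various Gemini models through AI Studio, including image-generation and TTS speech-synthesis models
- 🎨 Home page display control: a visual web console that supports account management, VNC login, and other operations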

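To run directly:

1. Clone the repository: `git clone https://github.com/iBUHub/AIStudioToAPI.git`, then `cd AIStudioToAPI`.

2. Run the quick setup script: `npm run setup-auth`. The script will:

   - automatically download the Camoufox browser (a privacy-focused Firefox fork)
   - launch the browser and navigate to AI Studio
   - save your authentication credentials locally

   💡 Tip: If the Camoufox download fails or takes too long, you can download the browser manually and set the `CAMOUFOX_EXECUTABLE_PATH` environment variable to the path of the executable (absolute and relative paths are both supported).

3. Configure environment variables (optional): copy `.env.example` in the root directory to `.env`, then adjust the settings in `.env` as needed (port, API keys, and so on).

4. Start the service: `npm start`. The API service runs at `http://localhost:7860`. Once it is running, open `http://localhost:7860` in a browser to reach the web console home page, where you can check account status and service status.

⚠ Note: When running directly, accounts cannot be added online through VNC; use the `npm run setup-auth` script to add accounts. VNC login is currently available only in the Docker container.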
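
The optional environment-file step above can be sketched in a few lines of Python. This is only an illustration: `ensure_env_file` is a hypothetical helper, not part of the project, and the template contents written in the demo are stand-ins rather than the real `.env.example`.

```python
import shutil
import tempfile
from pathlib import Path


def ensure_env_file(project_root: Path) -> bool:
    """Copy .env.example to .env; return True if a new .env was created."""
    template = project_root / ".env.example"
    target = project_root / ".env"
    if target.exists():
        return False  # never overwrite settings that were already edited
    shutil.copyfile(template, target)
    return True


# Demo in a throwaway directory; the template text is a stand-in.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / ".env.example").write_text("PORT=7860\nAPI_KEYS=123456\n")
    created = ensure_env_file(root)        # first call copies the template
    created_again = ensure_env_file(root)  # second call leaves .env alone
    copied = (root / ".env").read_text()

print(created, created_again)
```

Refusing to overwrite an existing `.env` keeps a rerun from discarding edited ports or API keys.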

Deploy with Docker; there is no need to extract authentication credentials beforehand.

    docker run -d \
      --name aistudio-to-api \
      -p 7860:7860 \
      -v /path/to/auth:/app/configs/auth \
      -e API_KEYS=your-api-key-1,your-api-key-2 \
      -e TZ=Asia/Shanghai \
      --restart unless-stopped \
      ghcr.io/ibuhub/aistudio-to-api:latest

💡 Tip: If `ghcr.io` is slow or unavailable, you can use the Docker Hub image instead: `ibuhub/aistudio-to-api:latest`.

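Parameters:

- `-p 7860:7860`: API server port (if you use a reverse proxy, changing this to `127.0.0.1:7860` is strongly recommended)
- `-v /path/to/auth:/app/configs/auth`: mounts the directory that holds the authentication files
- `-e API_KEYS`: comma-separated list of API keys used for authentication
- `-e TZ=Asia/Shanghai`: time zone setting (optional; defaults to the system time zone)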
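
To show how these parameters fit together, here is a minimal Python sketch that assembles the same `docker run` argument list. `docker_run_args` is a hypothetical helper, not something the project ships. Publishing the port as `127.0.0.1:7860:7860` (Docker's `ip:hostPort:containerPort` form) is one reading of the reverse-proxy recommendation above, not a setting confirmed by the project.

```python
from typing import List, Optional


def docker_run_args(
    auth_dir: str,
    api_keys: List[str],
    host_port: int = 7860,
    bind_ip: Optional[str] = None,
    timezone: Optional[str] = None,
    image: str = "ghcr.io/ibuhub/aistudio-to-api:latest",
) -> List[str]:
    """Assemble the `docker run` argument list for the container."""
    # With bind_ip set, Docker publishes the port on that interface only,
    # which suits a reverse proxy running on the same host.
    if bind_ip is None:
        publish = f"{host_port}:7860"
    else:
        publish = f"{bind_ip}:{host_port}:7860"
    args = [
        "docker", "run", "-d",
        "--name", "aistudio-to-api",
        "-p", publish,
        "-v", f"{auth_dir}:/app/configs/auth",
        "-e", "API_KEYS=" + ",".join(api_keys),  # comma-separated key list
    ]
    if timezone is not None:  # TZ is optional
        args += ["-e", f"TZ={timezone}"]
    args += ["--restart", "unless-stopped", image]
    return args


args = docker_run_args(
    "/path/to/auth",
    ["your-api-key-1", "your-api-key-2"],
    bind_ip="127.0.0.1",
    timezone="Asia/Shanghai",
)
print(" ".join(args))
```

The list can be passed to `subprocess.run(args, check=True)` on a machine with Docker installed.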

Create a `docker-compose.yml` file:

    name: aistudio-to-api
    services:
      app:
        image: ghcr.io/ibuhub/aistudio-to-api:latest
        container_name: aistudio-to-api
        ports:
          - 7860:7860
        restart: unless-stopped
        volumes:
          - ./auth:/app/configs/auth
        environment:
          API_KEYS: your-api-key-1,your-api-key-2
          TZ: Asia/Shanghai # log time zone (optional)

💡 Tip: If `ghcr.io` is slow or unavailable, you can change `image` to `ibuhub/aistudio-to-api:latest`.

Start the service: `sudo docker compose up -d`

View logs: `sudo docker compose logs -f`

Stop the service: `sudo docker compose down`

If you would rather build the Docker image yourself, use the following commands:

1. Build the image: `docker build -t aistudio-to-api .`

2. Run the container:

       docker run -d \
         --name aistudio-to-api \
         -p 7860:7860 \
         -v /path/to/auth:/app/configs/auth \
         -e API_KEYS=your-api-key-1,your-api-key-2 \
         -e TZ=Asia/Shanghai \
         --restart unless-stopped \
         aistudio-to-api

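After deployment, add a Google account using one of the following methods:

Method 1: VNC login (recommended)

- Open the deployed service address in a browser (for example `http://your-server:7860`) and click the "Add Account" button
- You are redirected to a VNC page that shows a browser instance
- Sign in to your Google account, then click the "Save" button
- The account is saved automatically as `auth-N.json` (N starts at 0)

Method 2: Upload an authentication file

- On your local machine, run `npm run setup-auth` to generate an authentication file (see steps 1 and 2 of running directly); the file is written to `/configs/auth`
- In the web console, click "Upload Auth" and upload the auth JSON file, or copy it manually into the mounted `/path/to/auth` directory

💡 Tip: You can also download an auth file from an existing container and upload it to a new one. In the web console, click the "Download Auth" button for the account to download its auth file.

⚠ Injecting authentication information through environment variables is not currently supported.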
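
The `auth-N.json` naming can be illustrated with a short Python sketch that picks the next file name in an auth directory. `next_auth_path` is a hypothetical helper. Reusing the lowest free index is an assumption made here for illustration; the project may number new accounts differently, for example after the highest existing index.

```python
import re
import tempfile
from pathlib import Path

AUTH_NAME = re.compile(r"^auth-(\d+)\.json$")


def next_auth_path(auth_dir: Path) -> Path:
    """Return the first unused auth-N.json path in auth_dir, counting from 0."""
    used = set()
    for entry in auth_dir.iterdir():
        match = AUTH_NAME.match(entry.name)
        if match:
            used.add(int(match.group(1)))
    index = 0
    while index in used:  # assumption: take the lowest free index
        index += 1
    return auth_dir / f"auth-{index}.json"


# Demo in a throwaway directory standing in for the mounted auth folder.
with tempfile.TemporaryDirectory() as tmp:
    auth_dir = Path(tmp)
    first = next_auth_path(auth_dir).name
    (auth_dir / "auth-0.json").write_text("{}")
    (auth_dir / "auth-1.json").write_text("{}")
    second = next_auth_path(auth_dir).name

print(first, second)
```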

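If you need access through a domain name, or want to manage things centrally at the reverse-proxy layer (for example HTTPS or load balancing), you can use Nginx.

📖 For detailed Nginx configuration, see: Nginx reverse proxy configuration documentation

Direct deployment to Claw Cloud Run, a fully managed container platform, is supported.

📖 For detailed deployment instructions, see: Deploy to Claw Cloud Run

Deployment to the Zeabur container platform is supported.

⚠ Note: Zeabur's free quota is only 5 USD per month, which is not enough to run 24 hours a day. Be sure to pause the service when it is not in use!

📖 For detailed deployment instructions, see: Deploy to Zeabur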

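OpenAI-compatible API: these endpoints process requests and then forward them to the official Gemini API format endpoints.

- `GET /v1/models`: lists models.
- `POST /v1/chat/completions`: chat completions and image generation; supports non-streaming, real streaming, and fake streaming.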
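
As a rough illustration, the sketch below builds (without sending) a chat completion request against a locally running instance, using only the Python standard library. Several details are assumptions: the API key is sent as an OpenAI-style `Authorization: Bearer` header, the body follows the standard OpenAI chat format, and `gemini-2.5-flash` is a placeholder model name. Query `GET /v1/models` for the names your instance actually exposes.

```python
import json
import urllib.request

BASE_URL = "http://localhost:7860"  # default address when running locally
API_KEY = "your-api-key-1"          # one of the values configured in API_KEYS
MODEL = "gemini-2.5-flash"          # placeholder model name (assumption)


def build_chat_request(prompt: str, stream: bool = False) -> urllib.request.Request:
    """Build, but do not send, a POST /v1/chat/completions request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: OpenAI-style bearer token carrying the API key.
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


request = build_chat_request("Hello")
print(request.get_method(), request.full_url)

# With the service running, the request could be sent like this:
#   with urllib.request.urlopen(request) as response:
#       reply = json.load(response)
#       print(reply["choices"][0]["message"]["content"])
```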

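Gemini native API: these endpoints forward to the official Gemini API format endpoints.

- `GET /v1beta/models`: lists the available Gemini models.
- `POST /v1beta/models/{model_name}:generateContent`: generates content, images, and speech.
- `POST /v1beta/models/{model_name}:streamGenerateContent`: streams generated content, images, and speech; supports real streaming and fake streaming.
- `POST /v1beta/models/{model_name}:batchEmbedContents`: generates text embedding vectors in batches.
- `POST /v1beta/models/{model_name}:predict`: image generation with Imagen-series models.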
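
The path pattern `/v1beta/models/{model_name}:{action}` can be sketched as follows. The request body uses the official Gemini `contents` / `parts` shape. Sending the key in an `x-goog-api-key` header mirrors the official Gemini API and is an assumption about what this proxy accepts; `gemini-2.5-flash` is a placeholder model name.

```python
import json
import urllib.request

BASE_URL = "http://localhost:7860"  # default address when running locally
API_KEY = "your-api-key-1"          # one of the values configured in API_KEYS


def gemini_url(model_name: str, action: str) -> str:
    """Join a model name and an action into a /v1beta endpoint URL."""
    return f"{BASE_URL}/v1beta/models/{model_name}:{action}"


def build_generate_request(
    model_name: str, text: str, stream: bool = False
) -> urllib.request.Request:
    """Build, but do not send, a generateContent or streamGenerateContent request."""
    action = "streamGenerateContent" if stream else "generateContent"
    body = {"contents": [{"role": "user", "parts": [{"text": text}]}]}
    return urllib.request.Request(
        url=gemini_url(model_name, action),
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the proxy reads the key from this Gemini-style header.
            "x-goog-api-key": API_KEY,
        },
        method="POST",
    )


request = build_generate_request("gemini-2.5-flash", "Hello")  # placeholder model
stream_request = build_generate_request("gemini-2.5-flash", "Hello", stream=True)
print(request.full_url)
print(stream_request.full_url)
```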

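Anthropic-compatible API: these endpoints process requests and then forward them to the official Gemini API format endpoints.

- `GET /v1/models`: lists models.
- `POST /v1/messages`: chat message completions; supports non-streaming, real streaming, and fake streaming.
- `POST /v1/messages/count_tokens`: counts the tokens in a message.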
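
For orientation, here is a sketch of the request bodies these two POST endpoints would receive, following the public Anthropic Messages format (`model`, `max_tokens`, `messages`). The header names (`x-api-key`, `anthropic-version`) follow the official Anthropic API and are assumptions about what this proxy checks; the model name is a placeholder.

```python
import json
from typing import Any, Dict

MODEL = "gemini-2.5-flash"  # placeholder model name (assumption)

# Assumption: Anthropic-style headers; the proxy may accept other forms.
HEADERS = {
    "Content-Type": "application/json",
    "x-api-key": "your-api-key-1",
    "anthropic-version": "2023-06-01",
}


def messages_body(prompt: str, max_tokens: int = 1024, stream: bool = False) -> Dict[str, Any]:
    """Body for POST /v1/messages."""
    return {
        "model": MODEL,
        "max_tokens": max_tokens,  # required by the Anthropic Messages format
        "stream": stream,
        "messages": [{"role": "user", "content": prompt}],
    }


def count_tokens_body(prompt: str) -> Dict[str, Any]:
    """Body for POST /v1/messages/count_tokens (no max_tokens field)."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }


print(json.dumps(messages_body("Hello"), indent=2))
print(json.dumps(count_tokens_body("Hello"), indent=2))
```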

📖 For detailed API usage examples, see: API usage examples documentation

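| Variable | Description | Default |
|---|---|---|
| `API_KEYS` | Comma-separated list of valid API keys used for authentication. | `123456` |
| `PORT` | API server port. | `7860` |
| `HOST` | Host address the server listens on. | `0.0.0.0` |
| `ICON_URL` | Custom favicon for the console. Supports ICO, PNG, SVG, and other formats. | `/AIStudio_logo.svg` |
| `SECURE_COOKIES` | Whether to enable secure cookies. `true` means the console can be accessed over HTTPS only. | `false` |
| `RATE_LIMIT_MAX_ATTEMPTS` | Maximum number of failed console login attempts allowed within the time window (set to `0` to disable). | `5` |
| `RATE_LIMIT_WINDOW_MINUTES` | Length of the rate-limit time window, in minutes. | `15` |
| `CHECK_UPDATE` | Whether to check for version updates on page load (set to `false` to disable). | `true` |
| `LOG_LEVEL` | Log output level. Set to `DEBUG` for verbose debug logs. | `INFO` |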
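
To make the interaction between `RATE_LIMIT_MAX_ATTEMPTS` and `RATE_LIMIT_WINDOW_MINUTES` concrete, here is a minimal sliding-window sketch in Python. It is not the project's implementation: `LoginRateLimiter` is a hypothetical class, and tracking failures per client address is an assumption.

```python
import time
from collections import deque
from typing import Deque, Dict, Optional


class LoginRateLimiter:
    """Block a client after too many failed logins inside a time window."""

    def __init__(self, max_attempts: int = 5, window_minutes: float = 15) -> None:
        self.max_attempts = max_attempts
        self.window_seconds = window_minutes * 60
        self._failures: Dict[str, Deque[float]] = {}

    def _recent(self, client: str, now: float) -> Deque[float]:
        failures = self._failures.setdefault(client, deque())
        # Drop failures that have aged out of the window.
        while failures and now - failures[0] >= self.window_seconds:
            failures.popleft()
        return failures

    def record_failure(self, client: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self._recent(client, now).append(now)

    def is_blocked(self, client: str, now: Optional[float] = None) -> bool:
        if self.max_attempts == 0:  # 0 disables the limiter
            return False
        now = time.monotonic() if now is None else now
        return len(self._recent(client, now)) >= self.max_attempts


limiter = LoginRateLimiter()  # defaults: 5 attempts per 15 minutes
for second in range(5):
    limiter.record_failure("203.0.113.7", now=float(second))

blocked_now = limiter.is_blocked("203.0.113.7", now=10.0)
blocked_later = limiter.is_blocked("203.0.113.7", now=15 * 60 + 10.0)
print(blocked_now, blocked_later)
```

Passing `now` explicitly keeps the demo deterministic; in a server the default monotonic clock would be used.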

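| Variable | Description | Default |
|---|---|---|
| `INITIAL_AUTH_INDEX` | Initial authentication index used at startup. | `0` |
| `ENABLE_AUTH_UPDATE` | Whether to save credential updates automatically. Enabled by default: the auth file is updated after every successful login or account switch, and every 24 hours. Set to `false` to disable. | `true` |
| `MAX_RETRIES` | Maximum number of retries after a failed request (applies only to fake streaming and non-streaming). | `3` |
| `RETRY_DELAY` | Delay between retries, in milliseconds. | `2000` |
| `SWITCH_ON_USES` | Number of requests allowed before automatically switching accounts (set to `0` to disable). | `40` |
| `FAILURE_THRESHOLD` | Number of consecutive failures allowed before switching accounts (set to `0` to disable). | `3` |
| `IMMEDIATE_SWITCH_STATUS_CODES` | HTTP status codes that trigger an immediate account switch (comma-separated; leave empty to disable). | `429,503` |
| `HTTP_PROXY` | HTTP proxy address used to reach Google services. | None |
| `HTTPS_PROXY` | HTTPS proxy address used to reach Google services. | None |
| `NO_PROXY` | Comma-separated list of addresses that bypass the proxy. The project already bypasses local addresses (localhost, 127.0.0.1, 0.0.0.0) automatically, so local bypass usually needs no manual configuration. | None |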
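
The three account-switching settings can be read as a single decision rule. The sketch below is one plausible interpretation, not the project's code: `SwitchPolicy` is a hypothetical class, and the exact boundaries (switching once the counter reaches the configured value) are assumptions.

```python
import os
from dataclasses import dataclass
from typing import FrozenSet, Mapping, Optional


@dataclass(frozen=True)
class SwitchPolicy:
    switch_on_uses: int = 40
    failure_threshold: int = 3
    immediate_codes: FrozenSet[int] = frozenset({429, 503})

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "SwitchPolicy":
        """Read the three settings, falling back to the documented defaults."""
        raw_codes = env.get("IMMEDIATE_SWITCH_STATUS_CODES", "429,503")
        codes = frozenset(int(code) for code in raw_codes.split(",") if code.strip())
        return cls(
            switch_on_uses=int(env.get("SWITCH_ON_USES", "40")),
            failure_threshold=int(env.get("FAILURE_THRESHOLD", "3")),
            immediate_codes=codes,
        )

    def should_switch(
        self, uses: int, consecutive_failures: int, status: Optional[int] = None
    ) -> bool:
        """Decide whether to move to the next account."""
        if status is not None and status in self.immediate_codes:
            return True  # e.g. 429 or 503: switch right away
        if self.switch_on_uses > 0 and uses >= self.switch_on_uses:
            return True  # usage quota for this account is used up
        if self.failure_threshold > 0 and consecutive_failures >= self.failure_threshold:
            return True  # too many failures in a row
        return False


policy = SwitchPolicy.from_env({})  # empty mapping: documented defaults
print(policy.should_switch(uses=12, consecutive_failures=0, status=429))
print(policy.should_switch(uses=12, consecutive_failures=1, status=500))
```

Setting a value to `0` (or the status-code list to an empty string) turns the corresponding check off, matching the "set to 0 to disable" wording in the table.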

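| Variable | Description | Default |
|---|---|---|
| `STREAMING_MODE` | Streaming mode. `real` is real streaming; `fake` is fake streaming. | `real` |
| `FORCE_THINKING` | Forces thinking mode on for all requests. | `false` |
| `FORCE_WEB_SEARCH` | Forces web search on for all requests. | `false` |
| `FORCE_URL_CONTEXT` | Forces URL context on for all requests. | `false` |
| `CAMOUFOX_EXECUTABLE_PATH` | Path to the Camoufox browser executable (absolute or relative). Only needed if you downloaded the browser manually. | Auto-detected |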
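
As an illustration of how these values and their defaults might be read, here is a small Python sketch. `load_settings` and `StreamingSettings` are hypothetical names, and the parsing rules (case-insensitive `true`, rejecting unknown streaming modes) are assumptions rather than the project's documented behavior.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class StreamingSettings:
    streaming_mode: str
    force_thinking: bool
    force_web_search: bool
    force_url_context: bool
    camoufox_path: Optional[str]  # None means "auto-detect"


def _flag(env: Mapping[str, str], name: str) -> bool:
    """Treat only the string 'true' (any case) as enabled; default is false."""
    return env.get(name, "false").strip().lower() == "true"


def load_settings(env: Mapping[str, str] = os.environ) -> StreamingSettings:
    mode = env.get("STREAMING_MODE", "real").strip().lower()
    if mode not in ("real", "fake"):
        raise ValueError(f"STREAMING_MODE must be 'real' or 'fake', got {mode!r}")
    return StreamingSettings(
        streaming_mode=mode,
        force_thinking=_flag(env, "FORCE_THINKING"),
        force_web_search=_flag(env, "FORCE_WEB_SEARCH"),
        force_url_context=_flag(env, "FORCE_URL_CONTEXT"),
        camoufox_path=env.get("CAMOUFOX_EXECUTABLE_PATH") or None,
    )


defaults = load_settings({})
custom = load_settings({"STREAMING_MODE": "fake", "FORCE_WEB_SEARCH": "true"})
print(defaults)
print(custom)
```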

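Edit `configs/models.json` to customize the available models and their settings.

This project is developed as a fork of ais2api (author: Ellinav) and fully retains the CC BY-NC 4.0 license used by the upstream project. Use, distribution, and modification must comply with all terms of the original license; see the LICENSE file for the full text.

Thanks to all the developers who have contributed their effort and ingenuity to this project.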

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AIStudioToAPI

Similar Open Source Tools

AIStudioToAPI

AIStudioToAPI is a tool that encapsulates the Google AI Studio web interface to be compatible with OpenAI API, Gemini API, and Anthropic API. It acts as a proxy, converting API requests into interactions with the AI Studio web interface. The tool supports API compatibility with OpenAI, Gemini, and Anthropic, browser automation with the AI Studio web interface, secure authentication mechanism based on API keys, tool calls for OpenAI, Gemini, and Anthropic interfaces, access to various Gemini models including image models and TTS speech synthesis models through AI Studio, and provides a visual Web console for account management and VNC login operations.

ChatTTS-Forge

ChatTTS-Forge is a powerful text-to-speech generation tool that supports generating rich audio long texts using a SSML-like syntax and provides comprehensive API services, suitable for various scenarios. It offers features such as batch generation, support for generating super long texts, style prompt injection, full API services, user-friendly debugging GUI, OpenAI-style API, Google-style API, support for SSML-like syntax, speaker management, style management, independent refine API, text normalization optimized for ChatTTS, and automatic detection and processing of markdown format text. The tool can be experienced and deployed online through HuggingFace Spaces, launched with one click on Colab, deployed using containers, or locally deployed after cloning the project, preparing models, and installing necessary dependencies.

AI-Guide-and-Demos-zh_CN

This is a Chinese AI/LLM introductory project that aims to help students overcome the initial difficulties of accessing foreign large models' APIs. The project uses the OpenAI SDK to provide a more compatible learning experience. It covers topics such as AI video summarization, LLM fine-tuning, and AI image generation. The project also offers a CodePlayground for easy setup and one-line script execution to experience the charm of AI. It includes guides on API usage, LLM configuration, building AI applications with Gradio, customizing prompts for better model performance, understanding LoRA, and more.

OpenClawChineseTranslation

OpenClaw Chinese Translation is a localization project that provides a fully Chinese interface for the OpenClaw open-source personal AI assistant platform. It allows users to interact with their AI assistant through chat applications like WhatsApp, Telegram, and Discord to manage daily tasks such as emails, calendars, and files. The project includes both CLI command-line and dashboard web interface fully translated into Chinese.

pi-browser

Pi-Browser is a CLI tool for automating browsers based on multiple AI models. It supports various AI models like Google Gemini, OpenAI, Anthropic Claude, and Ollama. Users can control the browser using natural language commands and perform tasks such as web UI management, Telegram bot integration, Notion integration, extension mode for maintaining Chrome login status, parallel processing with multiple browsers, and offline execution with the local AI model Ollama.

Langchain-Chatchat

LangChain-Chatchat is an open-source, offline-deployable retrieval-enhanced generation (RAG) large model knowledge base project based on large language models such as ChatGLM and application frameworks such as Langchain. It aims to establish a knowledge base Q&A solution that is friendly to Chinese scenarios, supports open-source models, and can run offline.

Muice-Chatbot

Muice-Chatbot is an AI chatbot designed to proactively engage in conversations with users. It is based on the ChatGLM2-6B and Qwen-7B models, with a training dataset of 1.8K+ dialogues. The chatbot has a speaking style similar to a 2D girl, being somewhat tsundere but willing to share daily life details and greet users differently every day. It provides various functionalities, including initiating chats and offering 5 available commands. The project supports model loading through different methods and provides onebot service support for QQ users. Users can interact with the chatbot by running the main.py file in the project directory.

VideoCaptioner

VideoCaptioner is a video subtitle processing assistant based on a large language model (LLM), supporting speech recognition, subtitle segmentation, optimization, translation, and full-process handling. It is user-friendly and does not require high configuration, supporting both network calls and local offline (GPU-enabled) speech recognition. It utilizes a large language model for intelligent subtitle segmentation, correction, and translation, providing stunning subtitles for videos. The tool offers features such as accurate subtitle generation without GPU, intelligent segmentation and sentence splitting based on LLM, AI subtitle optimization and translation, batch video subtitle synthesis, intuitive subtitle editing interface with real-time preview and quick editing, and low model token consumption with built-in basic LLM model for easy use.

goclaw

goclaw is a powerful AI Agent framework written in Go language. It provides a complete tool system for FileSystem, Shell, Web, and Browser with Docker sandbox support and permission control. The framework includes a skill system compatible with OpenClaw and AgentSkills specifications, supporting automatic discovery and environment gating. It also offers persistent session storage, multi-channel support for Telegram, WhatsApp, Feishu, QQ, and WeWork, flexible configuration with YAML/JSON support, multiple LLM providers like OpenAI, Anthropic, and OpenRouter, WebSocket Gateway, Cron scheduling, and Browser automation based on Chrome DevTools Protocol.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

TelegramForwarder

Telegram Forwarder is a message forwarding tool that allows you to forward messages from specified chats to other chats without the need for a bot to enter the corresponding channels/groups to listen. It can be used for information stream integration filtering, message reminders, content archiving, and more. The tool supports multiple sources forwarding, keyword filtering in whitelist and blacklist modes, regular expression matching, message content modification, AI processing using major vendors' AI interfaces, media file filtering, and synchronization with a universal forum blocking plugin to achieve three-end blocking.

Feishu-MCP

Feishu-MCP is a server that provides access, editing, and structured processing capabilities for Feishu documents for Cursor, Windsurf, Cline, and other AI-driven coding tools, based on the Model Context Protocol server. This project enables AI coding tools to directly access and understand the structured content of Feishu documents, significantly improving the intelligence and efficiency of document processing. It covers the real usage process of Feishu documents, allowing efficient utilization of document resources, including folder directory retrieval, content retrieval and understanding, smart creation and editing, efficient search and retrieval, and more. It enhances the intelligent access, editing, and searching of Feishu documents in daily usage, improving content processing efficiency and experience.

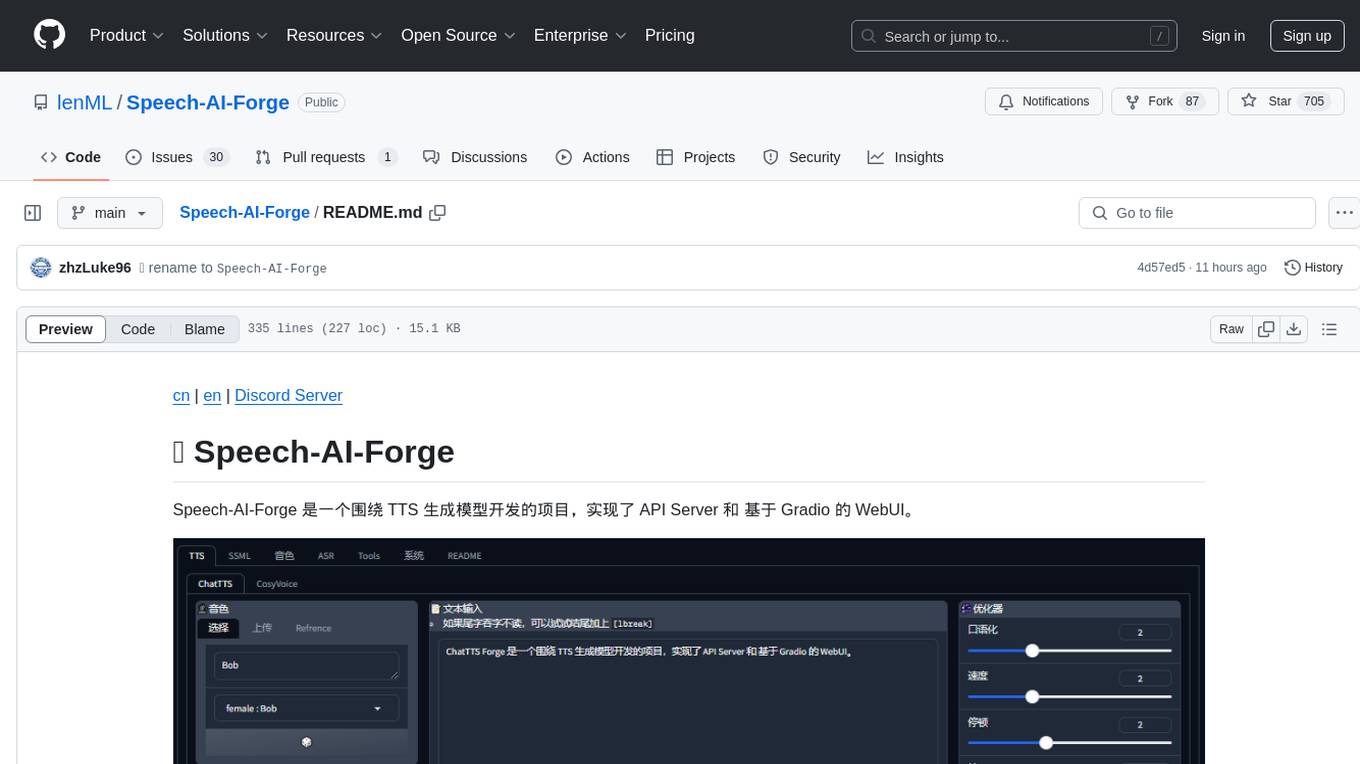

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

jimeng-free-api-all

Jimeng AI Free API is a reverse-engineered API server that encapsulates Jimeng AI's image and video generation capabilities into OpenAI-compatible API interfaces. It supports the latest jimeng-5.0-preview, jimeng-4.6 text-to-image models, Seedance 2.0 multi-image intelligent video generation, zero-configuration deployment, and multi-token support. The API is fully compatible with OpenAI API format, seamlessly integrating with existing clients and supporting multiple session IDs for polling usage.

For similar tasks

open-model-database

OpenModelDB is a community-driven database of AI upscaling models, providing a centralized platform for users to access and compare various models. The repository contains a collection of models and model metadata, facilitating easy exploration and evaluation of different AI upscaling solutions. With a focus on enhancing the accessibility and usability of AI models, OpenModelDB aims to streamline the process of finding and selecting the most suitable models for specific tasks or projects.

exo

Run your own AI cluster at home with everyday devices. Exo is experimental software that unifies existing devices into a powerful GPU, supporting wide model compatibility, dynamic model partitioning, automatic device discovery, ChatGPT-compatible API, and device equality. It does not use a master-worker architecture, allowing devices to connect peer-to-peer. Exo supports different partitioning strategies like ring memory weighted partitioning. Installation is recommended from source. Documentation includes example usage on multiple MacOS devices and information on inference engines and networking modules. Known issues include the iOS implementation lagging behind Python.

AIStudioToAPI

AIStudioToAPI is a tool that encapsulates the Google AI Studio web interface to be compatible with OpenAI API, Gemini API, and Anthropic API. It acts as a proxy, converting API requests into interactions with the AI Studio web interface. The tool supports API compatibility with OpenAI, Gemini, and Anthropic, browser automation with the AI Studio web interface, secure authentication mechanism based on API keys, tool calls for OpenAI, Gemini, and Anthropic interfaces, access to various Gemini models including image models and TTS speech synthesis models through AI Studio, and provides a visual Web console for account management and VNC login operations.

galxe-aio

Galxe AIO is a versatile tool designed to automate various tasks on social media platforms like Twitter, email, and Discord. It supports tasks such as following, retweeting, liking, and quoting on Twitter, as well as solving quizzes, submitting surveys, and more. Users can link their Twitter accounts, email accounts (IMAP or mail3.me), and Discord accounts to the tool to streamline their activities. Additionally, the tool offers features like claiming rewards, quiz solving, submitting surveys, and managing referral links and account statistics. It also supports different types of rewards like points, mystery boxes, gas-less OATs, gas OATs and NFTs, and participation in raffles. The tool provides settings for managing EVM wallets, proxies, twitters, emails, and discords, along with custom configurations in the `config.toml` file. Users can run the tool using Python 3.11 and install dependencies using `pip` and `playwright`. The tool generates results and logs in specific folders and allows users to donate using TRC-20 or ERC-20 tokens.

ChopperBot

A multifunctional, intelligent, personalized, scalable, easy to build, and fully automated multi platform intelligent live video editing and publishing robot. ChopperBot is a comprehensive AI tool that automatically analyzes and slices the most interesting clips from popular live streaming platforms, generates and publishes content, and manages accounts. It supports plugin DIY development and hot swapping functionality, making it easy to customize and expand. With ChopperBot, users can quickly build their own live video editing platform without the need to install any software, thanks to its visual management interface.

Tiktok_Automation_Bot

TikTok Automation Bot is an Appium-based tool for automating TikTok account creation and video posting on real devices. It offers functionalities such as automated account creation and video posting, along with integrations like Crane tweak, SMSActivate service, and IPQualityScore service. The tool also provides device and automation management system, anti-bot system for human behavior modeling, and IP rotation system for different IP addresses. It is designed to simplify the process of managing TikTok accounts and posting videos efficiently.

midjourney-proxy

Midjourney Proxy is an open-source project that acts as a proxy for the Midjourney Discord channel, allowing API-based AI drawing calls for charitable purposes. It provides drawing API for free use, ensuring full functionality, security, and minimal memory usage. The project supports various commands and actions related to Imagine, Blend, Describe, and more. It also offers real-time progress tracking, Chinese prompt translation, sensitive word pre-detection, user-token connection via wss for error information retrieval, and various account configuration options. Additionally, it includes features like image zooming, seed value retrieval, account-specific speed mode settings, multiple account configurations, and more. The project aims to support mainstream drawing clients and API calls, with features like task hierarchy, Remix mode, image saving, and CDN acceleration, among others.

chatgpt-mirai-qq-bot

Kirara AI is a chatbot that supports mainstream language models and chat platforms. It features various functionalities such as image sending, keyword-triggered replies, multi-account support, content moderation, personality settings, and support for platforms like QQ, Telegram, Discord, and WeChat. It also offers HTTP server capabilities, plugin support, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, and custom workflows. The tool can be accessed via HTTP API for integration with other platforms.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.