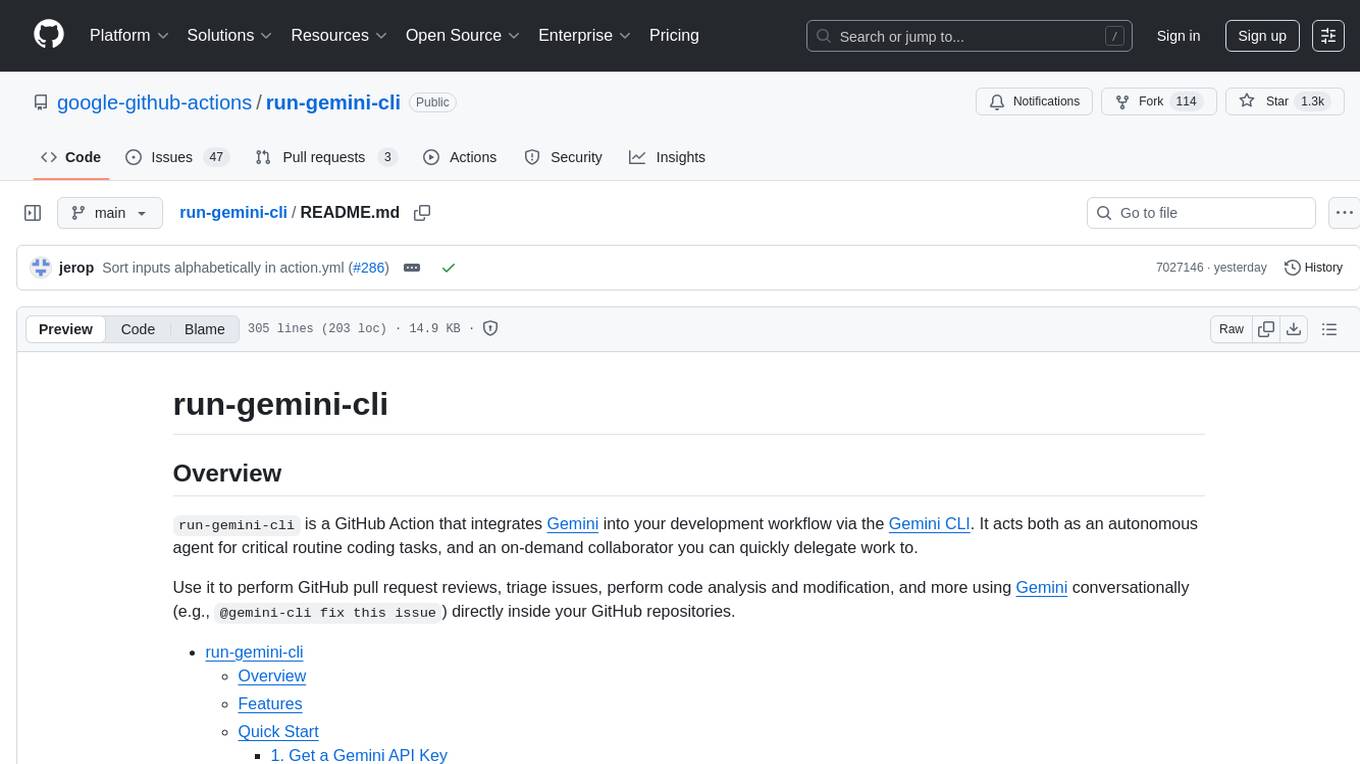

run-gemini-cli

A GitHub Action invoking the Gemini CLI.

Stars: 1275

run-gemini-cli is a GitHub Action that integrates Gemini into your development workflow via the Gemini CLI. It acts as an autonomous agent for routine coding tasks and an on-demand collaborator. Use it for GitHub pull request reviews, triaging issues, code analysis, and more. It provides automation, on-demand collaboration, extensibility with tools, and customization options.

README:

run-gemini-cli is a GitHub Action that integrates Gemini into your development workflow via the Gemini CLI. It acts both as an autonomous agent for critical routine coding tasks, and an on-demand collaborator you can quickly delegate work to.

Use it to perform GitHub pull request reviews, triage issues, perform code analysis and modification, and more using Gemini conversationally (e.g., @gemini-cli fix this issue) directly inside your GitHub repositories.

- Automation: Trigger workflows based on events (e.g. issue opening) or schedules (e.g. nightly).

- On-demand Collaboration: Trigger workflows in issue and pull request comments by mentioning the Gemini CLI (e.g., `@gemini-cli /review`).

- Extensible with Tools: Leverage Gemini models' tool-calling capabilities to interact with other CLIs like the GitHub CLI (`gh`).

- Customizable: Use a `GEMINI.md` file in your repository to provide project-specific instructions and context to Gemini CLI.

Get started with Gemini CLI in your repository in just a few minutes:

Obtain your API key from Google AI Studio, which offers a generous free-of-charge quota.

Store your API key as a secret named GEMINI_API_KEY in your repository:

- Go to your repository's Settings > Secrets and variables > Actions

- Click New repository secret

- Name: `GEMINI_API_KEY`, Value: your API key

Add the following entries to your .gitignore file:

# gemini-cli settings

.gemini/

# GitHub App credentials

gha-creds-*.json

You have two options to set up a workflow:

Option A: Use setup command (Recommended)

- Start the Gemini CLI in your terminal: `gemini`

- In Gemini CLI in your terminal, type: `/setup-github`

Option B: Manually copy workflows

- Copy the pre-built workflows from the `examples/workflows` directory to your repository's `.github/workflows` directory. Note: the `gemini-dispatch.yml` workflow must also be copied, because it triggers the other workflows to run.

Pull Request Review:

- Open a pull request in your repository and wait for automatic review

- Comment `@gemini-cli /review` on an existing pull request to manually trigger a review

Issue Triage:

- Open an issue and wait for automatic triage

- Comment `@gemini-cli /triage` on an existing issue to manually trigger triage

General AI Assistance:

- In any issue or pull request, mention `@gemini-cli` followed by your request

- Examples:
  - `@gemini-cli explain this code change`
  - `@gemini-cli suggest improvements for this function`
  - `@gemini-cli help me debug this error`
  - `@gemini-cli write unit tests for this component`

This action provides several pre-built workflows for different use cases. Each workflow is designed to be copied into your repository's .github/workflows directory and customized as needed.

This workflow acts as a central dispatcher for Gemini CLI, routing requests to the appropriate workflow based on the triggering event and the command provided in the comment. For a detailed guide on how to set up the dispatch workflow, go to the Gemini Dispatch workflow documentation.
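To make the routing idea concrete, here is a minimal Python sketch of how a comment could be mapped to a workflow. It is an illustration only, not the dispatch workflow's actual logic: the function name `route_comment`, the `COMMANDS` table, and the `"assistant"` fallback label are hypothetical, and only the `@gemini-cli`, `/review`, and `/triage` strings come from this README.

```python
import re

# Hypothetical command table: the slash commands come from the examples in
# this README; the workflow labels on the right are illustrative only.
COMMANDS = {"review": "review", "triage": "triage"}

# Match the mention, an optional "/command", and whatever text follows.
MENTION = re.compile(r"@gemini-cli\b\s*(?:/(\w+))?\s*(.*)", re.DOTALL)


def route_comment(body: str):
    """Return (workflow, remaining_text), or None if the bot is not mentioned."""
    match = MENTION.search(body)
    if match is None:
        return None
    command, rest = match.group(1), match.group(2).strip()
    if command in COMMANDS:
        return COMMANDS[command], rest
    # Unknown or missing slash command: fall back to the general assistant
    # and keep an unrecognized "/command" as part of the request text.
    if command:
        rest = f"/{command} {rest}".strip()
    return "assistant", rest
```

With this sketch, a comment without a mention is ignored, `@gemini-cli /review` routes to the review label, and free-form requests fall through to the general assistant.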

This action can be used to triage GitHub Issues automatically or on a schedule. For a detailed guide on how to set up the issue triage system, go to the GitHub Issue Triage workflow documentation.

This action can be used to automatically review pull requests when they are opened. For a detailed guide on how to set up the pull request review system, go to the GitHub PR Review workflow documentation.

This action can be used to invoke a general-purpose, conversational Gemini AI assistant within pull requests and issues to perform a wide range of tasks. For a detailed guide on how to set up the general-purpose Gemini CLI workflow, go to the Gemini Assistant workflow documentation.

- `gcp_location`: (Optional) The Google Cloud location.

- `gcp_project_id`: (Optional) The Google Cloud project ID.

- `gcp_service_account`: (Optional) The Google Cloud service account email.

- `gcp_workload_identity_provider`: (Optional) The Google Cloud Workload Identity Provider.

- `gemini_api_key`: (Optional) The API key for the Gemini API.

- `gemini_cli_version`: (Optional, default: `latest`) The version of the Gemini CLI to install. Can be "latest", "preview", "nightly", a specific version number, or a git branch, tag, or commit. For more information, see Gemini CLI releases.

- `gemini_debug`: (Optional) Enable debug logging and output streaming.

- `gemini_model`: (Optional) The model to use with Gemini.

- `google_api_key`: (Optional) The Vertex AI API key to use with Gemini.

- `prompt`: (Optional, default: `You are a helpful assistant.`) A string passed to the Gemini CLI's `--prompt` argument.

- `settings`: (Optional) A JSON string written to `.gemini/settings.json` to configure the CLI's project settings. For more details, see the documentation on settings files.

- `use_gemini_code_assist`: (Optional, default: `false`) Whether to use Code Assist for Gemini model access instead of the default Gemini API key. For more information, see the Gemini CLI documentation.

- `use_vertex_ai`: (Optional, default: `false`) Whether to use Vertex AI for Gemini model access instead of the default Gemini API key. For more information, see the Gemini CLI documentation.
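Because the `settings` input is a JSON string rather than a structured value, it can help to generate and check that string before pasting it into a workflow. The following is a minimal sketch under stated assumptions: the helper `build_settings_input` and the key `exampleSetting` are hypothetical and are not real Gemini CLI settings; consult the settings documentation for actual keys.

```python
import json


def build_settings_input(settings: dict) -> str:
    """Serialize a settings mapping to a compact JSON string."""
    text = json.dumps(settings, separators=(",", ":"), sort_keys=True)
    # Round-trip to confirm the string parses back to the same mapping.
    if json.loads(text) != settings:
        raise ValueError("settings did not survive a JSON round-trip")
    return text


# Hypothetical key and value, for illustration only.
example = build_settings_input({"exampleSetting": True})
print(example)  # prints {"exampleSetting":true}
```

The round-trip check catches mappings that JSON cannot represent faithfully, such as non-string keys, before they reach `.gemini/settings.json`.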

- `summary`: The summarized output from the Gemini CLI execution.

- `error`: The error output from the Gemini CLI execution, if any.

We recommend setting the following values as repository variables so they can be reused across all workflows. Alternatively, you can set them inline as action inputs in individual workflows, for example to override repository-level values.

| Name | Description | Type | Required | When Required |
|---|---|---|---|---|
| `DEBUG` | Enables debug logging for the Gemini CLI. | Variable | No | Never |
| `GEMINI_CLI_VERSION` | Controls which version of the Gemini CLI is installed. | Variable | No | Pinning the CLI version |
| `GCP_WIF_PROVIDER` | Full resource name of the Workload Identity Provider. | Variable | No | Using Google Cloud |
| `GOOGLE_CLOUD_PROJECT` | Google Cloud project for inference and observability. | Variable | No | Using Google Cloud |
| `SERVICE_ACCOUNT_EMAIL` | Google Cloud service account email address. | Variable | No | Using Google Cloud |
| `GOOGLE_CLOUD_LOCATION` | Region of the Google Cloud project. | Variable | No | Using Google Cloud |
| `GOOGLE_GENAI_USE_VERTEXAI` | Set to `true` to use Vertex AI. | Variable | No | Using Vertex AI |
| `GOOGLE_GENAI_USE_GCA` | Set to `true` to use Gemini Code Assist. | Variable | No | Using Gemini Code Assist |
| `APP_ID` | GitHub App ID for custom authentication. | Variable | No | Using a custom GitHub App |

To add a repository variable:

- Go to your repository's Settings > Secrets and variables > Actions > New variable.

- Enter the variable name and value.

- Save.

For details about repository variables, refer to the GitHub documentation on variables.

You can set the following secrets in your repository:

| Name | Description | Required | When Required |
|---|---|---|---|
| `GEMINI_API_KEY` | Your Gemini API key from Google AI Studio. | No | You don't have a GCP project. |
| `APP_PRIVATE_KEY` | Private key for your GitHub App (PEM format). | No | Using a custom GitHub App. |
| `GOOGLE_API_KEY` | Your Google API key to use with Vertex AI. | No | You have an express Vertex AI account. |

To add a secret:

- Go to your repository's Settings > Secrets and variables > Actions > New repository secret.

- Enter the secret name and value.

- Save.

For more information, refer to the official GitHub documentation on creating and using encrypted secrets.

This action requires authentication to both Google services (for Gemini AI) and the GitHub API.

Choose the authentication method that best fits your use case:

- Gemini API Key: The simplest method for projects that don't require Google Cloud integration

- Workload Identity Federation: The most secure method for authenticating to Google Cloud services

You can authenticate with GitHub in two ways:

- Default `GITHUB_TOKEN`: For simpler use cases, the action can use the default `GITHUB_TOKEN` provided by the workflow.

- Custom GitHub App (Recommended): For the most secure and flexible authentication, we recommend creating a custom GitHub App.

For detailed setup instructions for both Google and GitHub authentication, go to the Authentication documentation.

This action can be configured to send telemetry data (traces, metrics, and logs) to your own Google Cloud project. This allows you to monitor the performance and behavior of the Gemini CLI within your workflows, providing valuable insights for debugging and optimization.

For detailed instructions on how to set up and configure observability, go to the Observability documentation.

To ensure the security, reliability, and efficiency of your automated workflows, we strongly recommend following our best practices. These guidelines cover key areas such as repository security, workflow configuration, and monitoring.

Key recommendations include:

- Securing Your Repository: Implementing branch and tag protection, and restricting pull request approvers.

- Workflow Configuration: Using Workload Identity Federation for secure authentication to Google Cloud, managing secrets effectively, and pinning action versions to prevent unexpected changes.

- Monitoring and Auditing: Regularly reviewing action logs and enabling OpenTelemetry for deeper insights into performance and behavior.

For a comprehensive guide on securing your repository and workflows, please refer to our Best Practices documentation.

Create a GEMINI.md file in the root of your repository to provide project-specific context and instructions to Gemini CLI. This is useful for defining coding conventions, architectural patterns, or other guidelines the model should follow for a given repository.

Contributions are welcome! Check out the Gemini CLI Contributing Guide for more details on how to get started.

Alternative AI tools for run-gemini-cli

Similar Open Source Tools

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

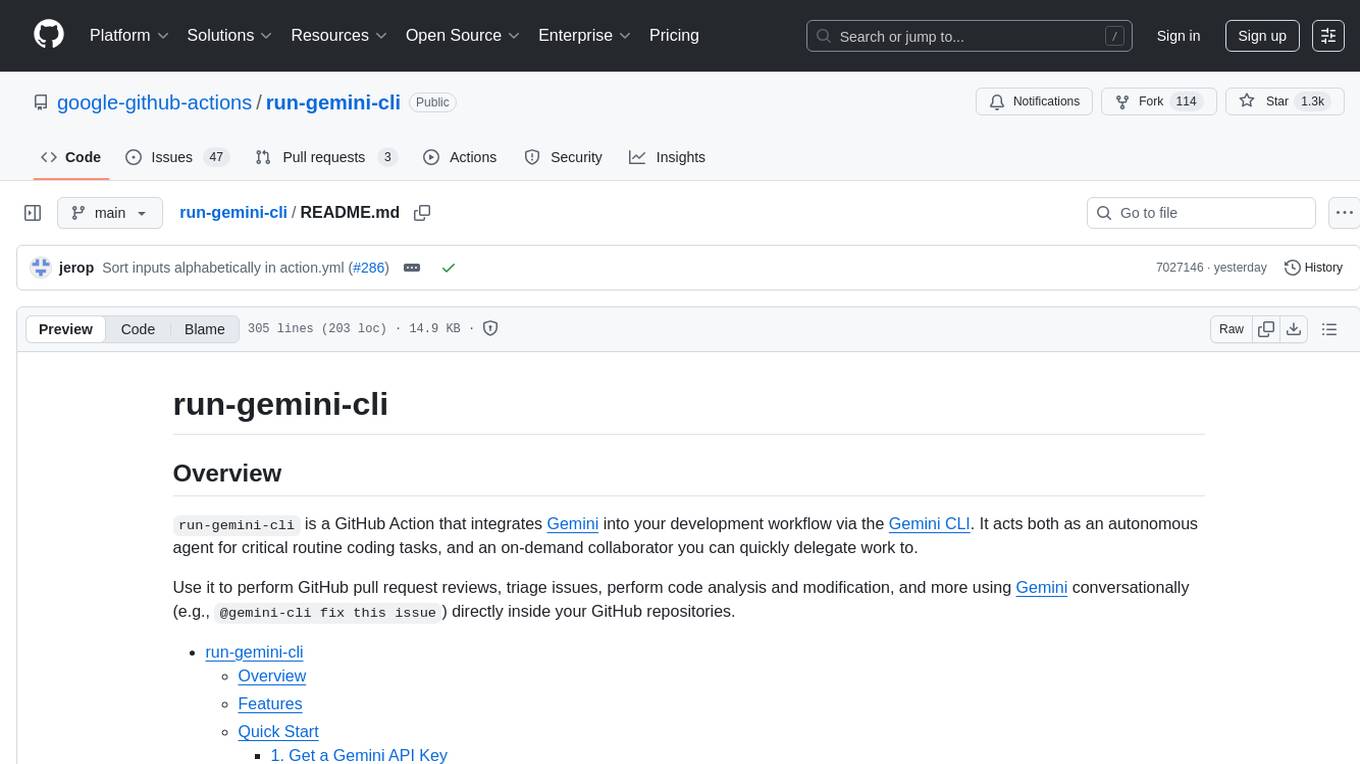

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

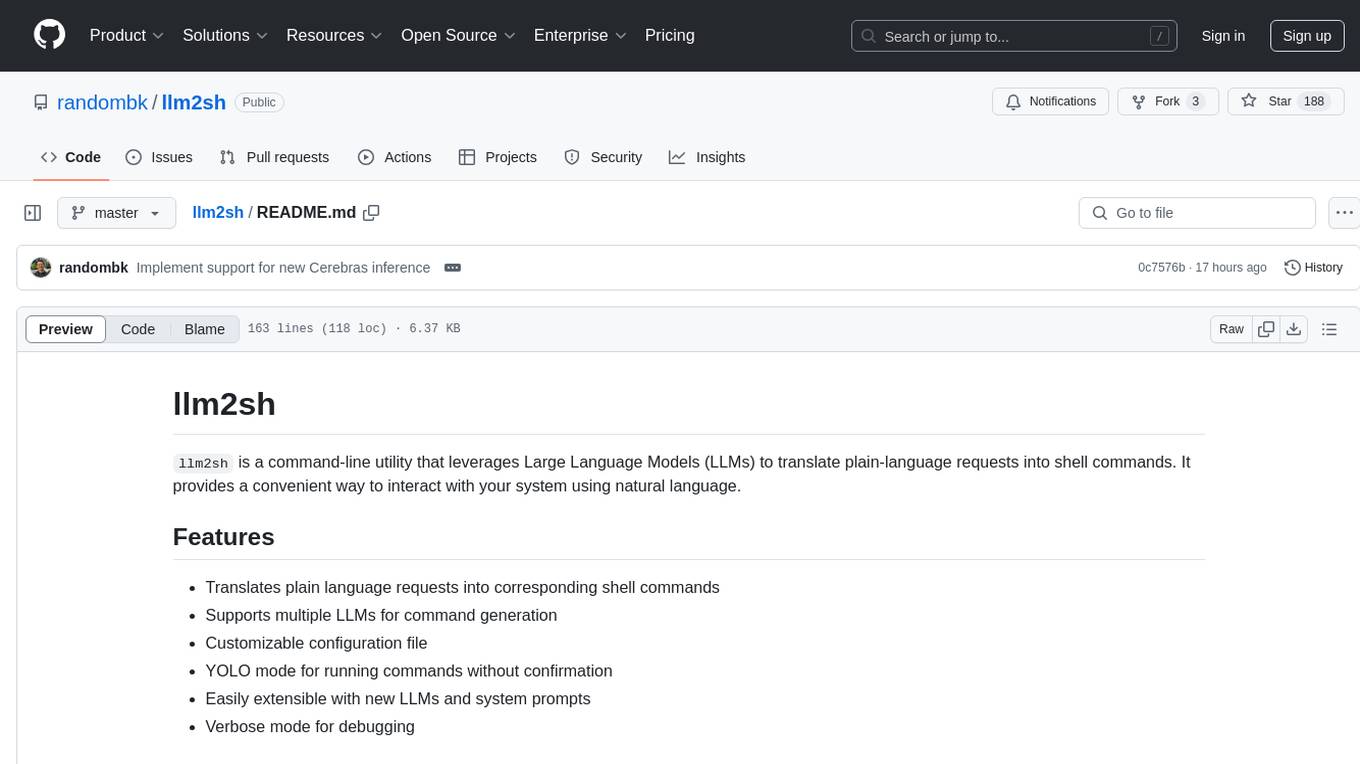

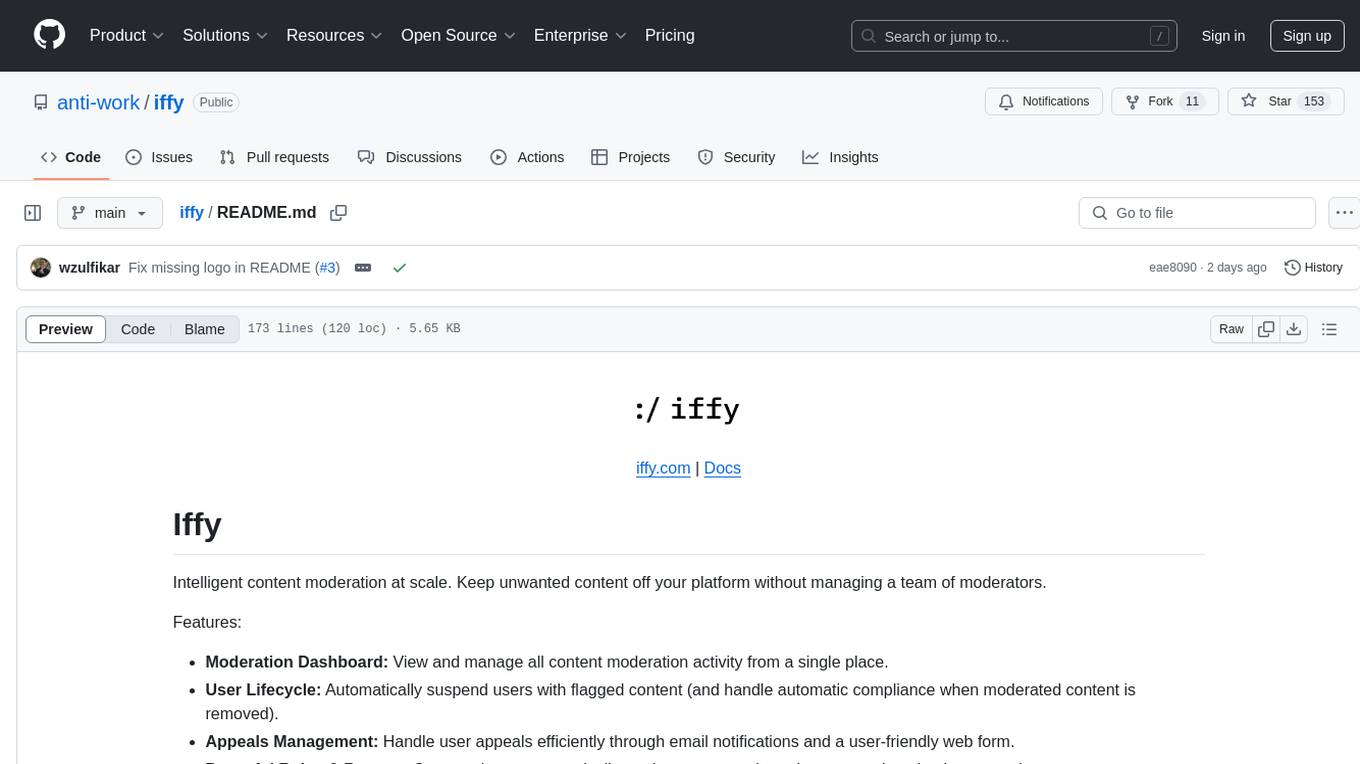

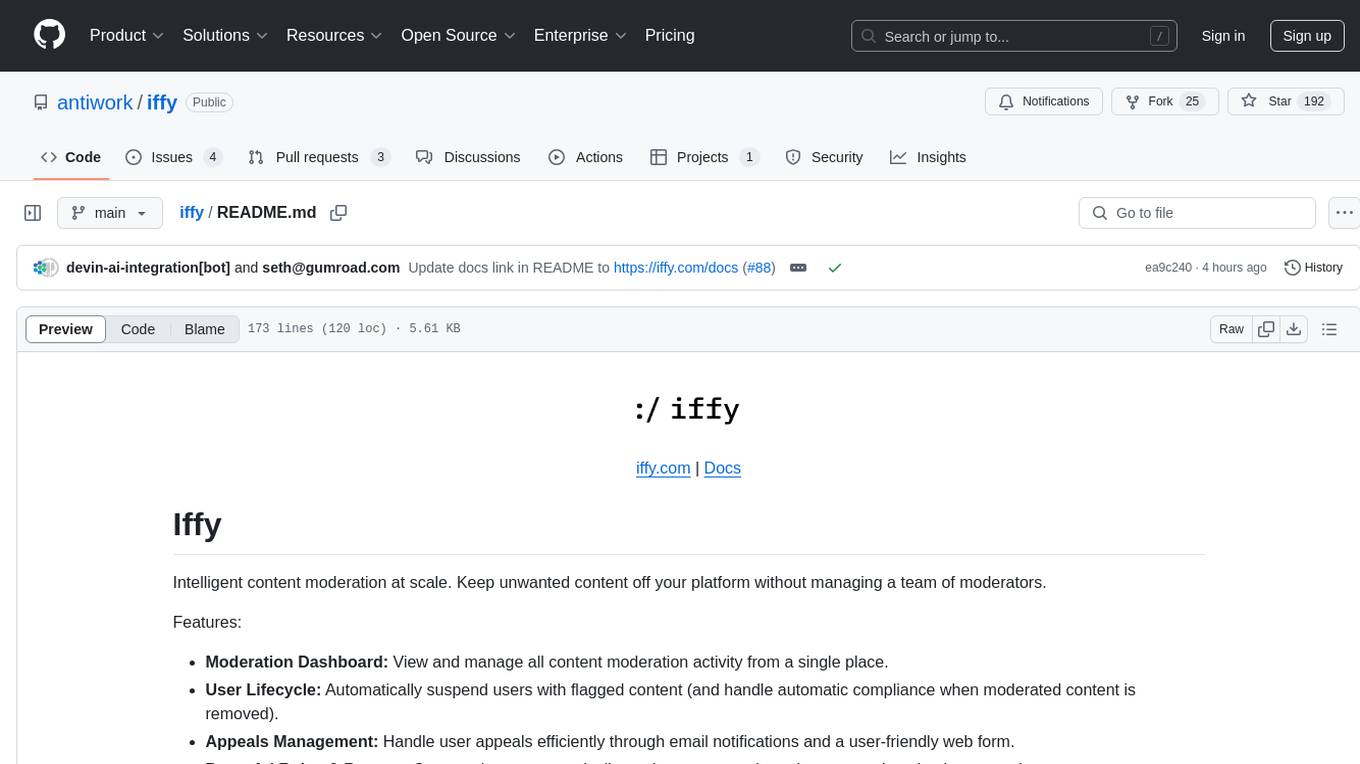

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It provides features such as a Moderation Dashboard to view and manage all moderation activity, User Lifecycle to automatically suspend users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setup requirements.

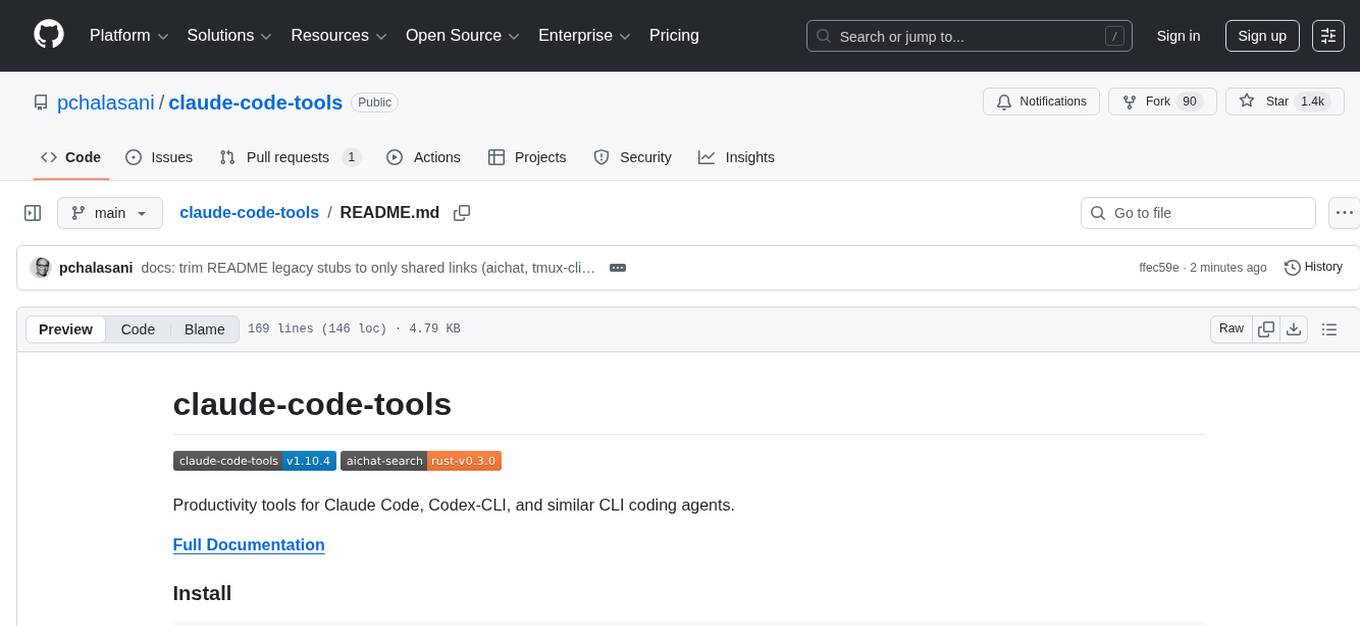

claude-code-tools

The 'claude-code-tools' repository provides productivity tools for Claude Code, Codex-CLI, and similar CLI coding agents. It includes CLI commands, skills, agents, hooks, and plugins for various tasks. The tools cover functionalities like session search, terminal automation, encrypted backup and sync, safe inspection of .env files, safety hooks, voice feedback, session chain repair, conversion between markdown and Google Docs, and CSV to Google Sheets and vice versa. The repository architecture consists of Python CLI, Rust TUI for search, and Node.js for action menus.

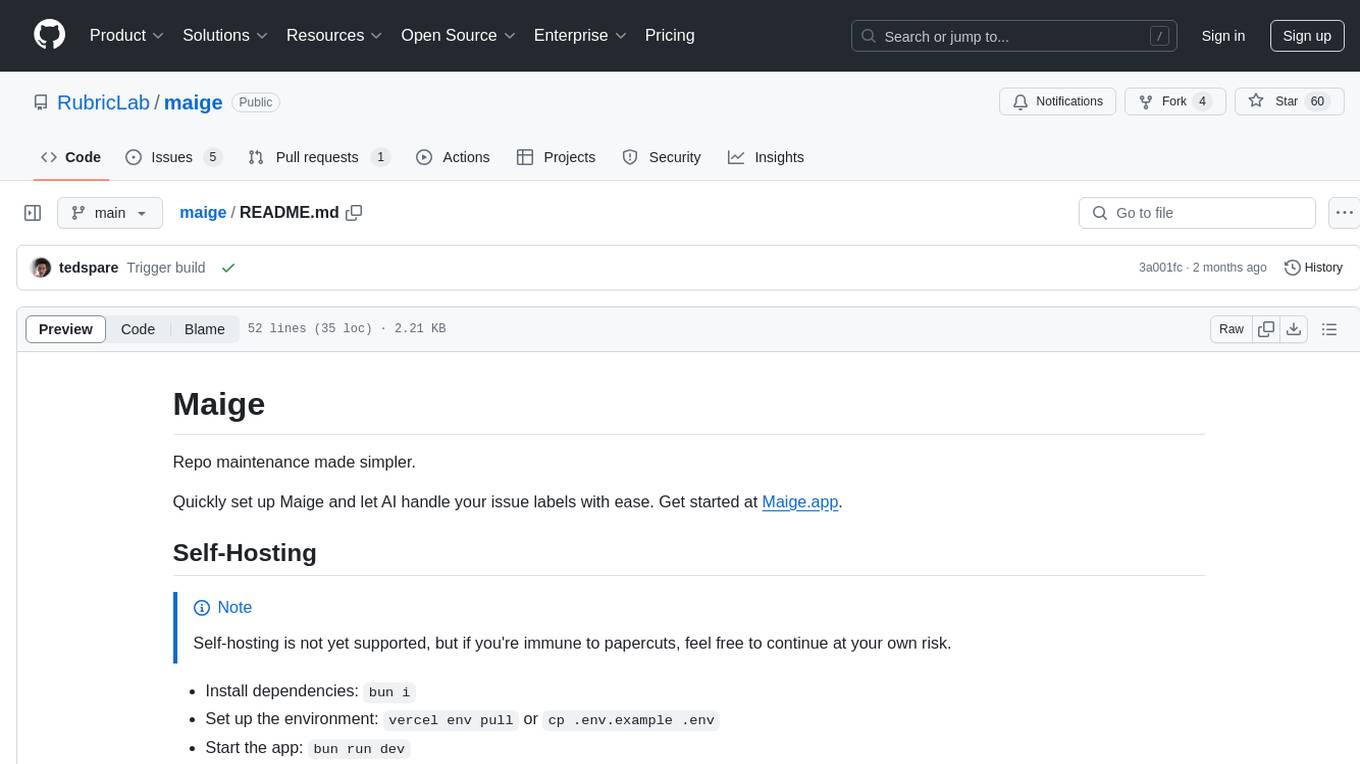

maige

Maige is a tool designed to simplify repository maintenance by automating the handling of issue labels. Users can quickly set up Maige to let AI manage their issue labels effortlessly. The tool provides guidance on self-hosting, GitHub app integration, environment variables setup, and offers commands for streamlined issue management. Maige aims to streamline the process of managing issues in a repository, making it easier for users to handle tasks related to labeling and tracking issues.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.

mobile-use

Mobile-use is an open-source AI agent that controls Android or iOS devices using natural language. It understands commands to perform tasks like sending messages and navigating apps. Features include natural language control, UI-aware automation, data scraping, and extensibility. Users can automate their mobile experience by setting up environment variables, customizing LLM configurations, and launching the tool via Docker or manually for development. The tool supports physical Android phones, Android simulators, and iOS simulators. Contributions are welcome, and the project is licensed under MIT.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

iffy

Iffy is a tool for intelligent content moderation at scale, allowing users to keep unwanted content off their platform without the need to manage a team of moderators. It features a Moderation Dashboard to view and manage all moderation activities, User Lifecycle for automatically suspending users with flagged content, Appeals Management for efficient handling of user appeals, and Powerful Rules & Presets to create custom moderation rules based on unique business needs. Users can choose between the managed Iffy Cloud or the free self-hosted Iffy Community version, each offering different features and setups.

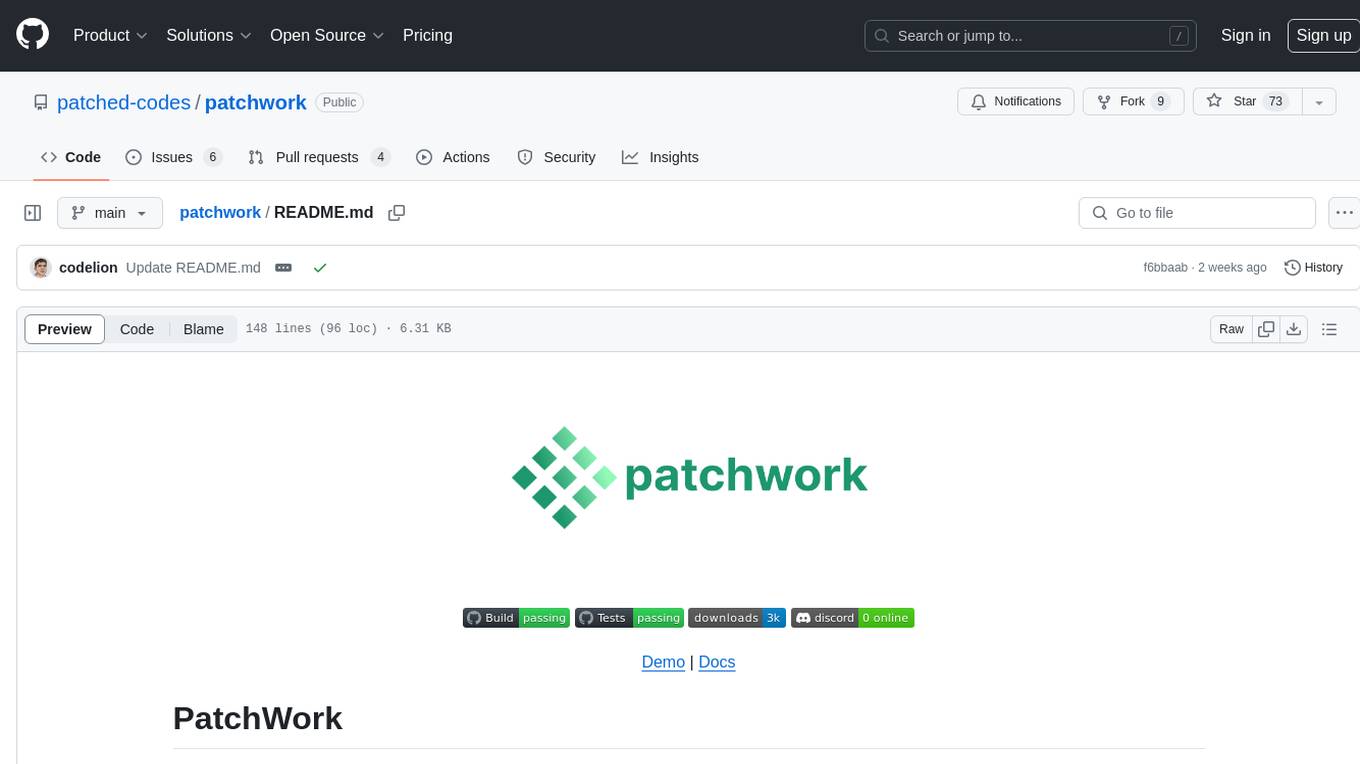

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

For similar tasks

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.

mito

Mito is a set of Jupyter extensions designed to help users write Python code faster. It consists of Mito AI, providing tools like context-aware AI Chat and error debugging; Mito Spreadsheet, enabling data exploration with interactive spreadsheet features; and Mito for Streamlit and Dash, allowing easy integration of spreadsheets into dashboards with minimal code. Mito is open source and community-driven, with options to purchase Mito Pro for further development support.

Zentara-Code

Zentara Code is an AI coding assistant for VS Code that turns chat instructions into precise, auditable changes in the codebase. It is optimized for speed, safety, and correctness through parallel execution, LSP semantics, and integrated runtime debugging. It offers features like parallel subagents, integrated LSP tools, and runtime debugging for efficient code modification and analysis.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers related to LLM Security Application. The repository includes papers sorted by conference name and published year, covering topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on listed conferences and journals related to security, networking, software engineering, and cryptography. The papers cover a wide range of topics including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, fuzzing, and more.

sourcegraph

Sourcegraph is a code search and navigation tool that helps developers read, write, and fix code in large, complex codebases. It provides features such as code search across all repositories and branches, code intelligence for navigation and refactoring, and the ability to fix and refactor code across multiple repositories at once.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.