basilic

FullStack Web3 & AI Project Starter

Stars: 89

Basilic is a full-stack monorepo starter for teams building Web3 and AI applications. It provides SDKs, public APIs, and multichain support so teams can ship features faster. The starter includes an AI-first development workflow, a REST API with JWT authentication, SDK generation, Web3 and AI templates, a design system, preconfigured development tools, security checks, and a TypeScript-first approach. Its technology stack spans AI, frontend, backend, Web3, and DevOps, and it ships apps, packages, and scripts for setup, formatting, linting, testing, security, and Git hooks.

README:

Full-stack monorepo starter for Web3 and AI apps. Built for teams shipping products that need SDKs, public APIs, and multichain support—without rebuilding auth, OpenAPI tooling, or design systems from scratch. Ship features faster; security, docs, and AI-assisted workflows are included.

🚧 Active development — Explore, fork, and contribute. 🏗️

- 🤖 AI-first dev workflow — Agent rules, skills, MCP integrations, and automated CodeRabbit reviews

- 🔌 REST API & JWT — OpenAPI spec, Swagger UI, JWT auth for all clients

- 📦 SDK generation — Type-safe clients from OpenAPI via HeyAPI

- 🧩 Web3 & AI starters — Ready-to-use templates for Next.js, React, Expo, Fastify, and Ponder

- 🔓 Zero vendor lock-in — Run on VPS, AWS, Vercel, or local

- 🎨 Turbo monorepo + design system — ShadcnUI components with shared utilities

- ⚙️ Preconfigured dev tools — Biome, Git workflows, hooks, and security checks

- 🛡️ Security & quality — Automated checks in CI (e.g. Gitleaks, OSV)

- ⛓️ Multichain — EVM, Solana, Cosmos; shared validation and chain-specific tooling

- 📐 Conventions — Cursor rules per domain, @repo/sentry, Pino logging, shared TS and style

- 🧑‍💻 TypeScript-first — End-to-end types from database to frontend

- AI: AI SDK, OpenAI, Claude, Grok

- Frontend: Next.js 16, React 19, Tailwind, ShadcnUI

- Backend: Fastify, PostgreSQL, Supabase

- Web3: Solidity, Viem, Wagmi, Ponder, Solana

- DevOps: pnpm, TurboRepo, TypeScript, Biome, ESLint

- API — Type-safe REST API built with Fastify & OpenAPI

- Web App — Next.js app with monorepo integration

- Documentation — Fumadocs-based docs site for architecture, ADRs, and development workflows

- @repo/core — Runtime-agnostic API client and types generated from OpenAPI specs

- @repo/react — React Query hooks for @repo/core API functions

- @repo/ui — Shared UI component library (Shadcn/ui, Tailwind)

- @repo/utils — Shared utilities (async, data, debug, error, logger, web3)

- @repo/sentry — Common captureError interface for error reporting

- @repo/email — Email template library built with React Email

- @repo/notif — Notification service (email, activity) with type-safe schemas
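The multichain item in the feature list above mentions shared validation across EVM, Solana, and Cosmos. As a rough illustration of what a shared check could look like, the sketch below tests only the textual shape of an address per chain family. The names `Chain`, `PATTERNS`, and `looksLikeAddress` are hypothetical and do not come from the Basilic codebase, and the check deliberately skips checksum verification (EIP-55 casing, bech32 checksums), which a real validator would need.

```typescript
// Hypothetical sketch: these names are illustrative, not part of Basilic.
type Chain = "evm" | "solana" | "cosmos";

// Shape-only patterns (assumptions about typical address formats):
// - EVM: "0x" followed by 40 hex characters
// - Solana: 32 to 44 base58 characters (no 0, O, I, or l)
// - Cosmos: lowercase prefix, "1" separator, then at least 38 bech32 characters
const PATTERNS: Record<Chain, RegExp> = {
  evm: /^0x[0-9a-fA-F]{40}$/,
  solana: /^[1-9A-HJ-NP-Za-km-z]{32,44}$/,
  cosmos: /^[a-z]{1,83}1[02-9ac-hj-np-z]{38,}$/,
};

// Returns true when the value has the expected shape for the chain family.
// This does NOT verify checksums or that the address exists on-chain.
function looksLikeAddress(chain: Chain, value: string): boolean {
  return PATTERNS[chain].test(value);
}

console.log(looksLikeAddress("evm", "0x" + "a".repeat(40))); // true
console.log(looksLikeAddress("evm", "0x1234")); // false
```

Keeping the patterns in a single record keyed by chain family means adding a chain is a one-line change, while checksum and network-specific rules can live in chain-specific tooling.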

Run with pnpm <script>.

Setup

- setup — Full setup (install, hooks, gitleaks, osv, database)

- setup:gitleaks, setup:osv — Install Gitleaks, OSV scanner

- setup:database — Database tools

Primary

- build — Build packages and apps

- dev — Start dev (core, react, sentry, utils, fastify, next)

- qa — Full check: install → checktypes → lint → build → test

Format / Lint

- checktypes — Type-check all packages

- format — Format code (Biome)

- lint — Lint with Biome + ESLint

- lint:biome, lint:biome:fix — Biome check, fix

- lint:eslint, lint:eslint:fix — ESLint check, fix

- lint:fix — Fix both linters

Test

- test — Run tests

- test:e2e — E2E (Fastify + Next)

Security

- security:block-files — Block sensitive file patterns

- security:secrets — Scan staged files for secrets

- security:secrets:full — Full Gitleaks scan

- security:osv — OSV vulnerability scan

- security:audit — pnpm audit (moderate+)

- security:check — Run security check script

Hooks

- hooks:pre-commit — Pre-commit: security + Biome staged

- hooks:security — Block files, scan secrets, OSV

Misc

- update-deps — Update pnpm and all dependencies
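The qa script above is described as an ordered chain: install → checktypes → lint → build → test. The sketch below illustrates that ordering with a small fail-fast runner. It assumes the chain stops at the first failing step, which the listing does not state, and `Step`, `PipelineResult`, and `runPipeline` are hypothetical names rather than anything in the Basilic scripts. The step outcomes are simulated instead of invoking pnpm.

```typescript
// Hypothetical sketch: Step and runPipeline are illustrative names, not Basilic APIs.
type Step = { name: string; run: () => boolean };

type PipelineResult = { passed: string[]; failed: string | null };

// Run steps in order and stop at the first one that reports failure
// (fail-fast behavior is an assumption, not confirmed by the README).
function runPipeline(steps: Step[]): PipelineResult {
  const passed: string[] = [];
  for (const step of steps) {
    if (!step.run()) {
      return { passed, failed: step.name };
    }
    passed.push(step.name);
  }
  return { passed, failed: null };
}

// Step order taken from the qa script description; outcomes are simulated.
const qaOrder = ["install", "checktypes", "lint", "build", "test"];
const simulated: Step[] = qaOrder.map((name) => ({
  name,
  run: () => name !== "lint", // pretend the lint step fails
}));

const outcome = runPipeline(simulated);
console.log(outcome.passed); // ["install", "checktypes"]
console.log(outcome.failed); // "lint"
```

Under this assumption, a lint failure means build and test never run, so cheaper checks are placed before the more expensive ones.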

Full docs: basilic-docs.vercel.app

Similar Open Source Tools

sparka

Sparka AI is a multi-provider AI chat tool that allows users to access various AI models like Claude, GPT-5, Gemini, and Grok through a single interface. It offers features such as document analysis, image generation, code execution, and research tools without the need for multiple subscriptions. The tool is open-source, production-ready, and provides capabilities for collaboration, secure authentication, attachment support, AI-powered image generation, syntax highlighting, resumable streams, chat branching, chat sharing, deep research, code execution, document creation, and web analytics. Built with modern technologies for scalability and performance, Sparka AI integrates with Vercel AI SDK, tRPC, Drizzle ORM, PostgreSQL, Redis, and AI SDK Gateway.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

kitchenai

KitchenAI is an open-source toolkit designed to simplify AI development by serving as an AI backend and LLMOps solution. It aims to empower developers to focus on delivering results without being bogged down by AI infrastructure complexities. With features like simplifying AI integration, providing an AI backend, and empowering developers, KitchenAI streamlines the process of turning AI experiments into production-ready APIs. It offers built-in LLMOps features, is framework-agnostic and extensible, and enables faster time-to-production. KitchenAI is suitable for application developers, AI developers & data scientists, and platform & infra engineers, allowing them to seamlessly integrate AI into apps, deploy custom AI techniques, and optimize AI services with a modular framework. The toolkit eliminates the need to build APIs and infrastructure from scratch, making it easier to deploy AI code as production-ready APIs in minutes. KitchenAI also provides observability, tracing, and evaluation tools, and offers a Docker-first deployment approach for scalability and confidence.

transformerlab-app

Transformer Lab is an app for experimenting with Large Language Models through a simple cross-platform GUI. Features include one-click download of popular models, finetuning across different hardware, RLHF and preference optimization, support for multiple operating systems, chatting with models, multiple inference engines, model evaluation, dataset building for training, embedding calculation, a full REST API, running in the cloud, model conversion across platforms, plugin support, an embedded Monaco code editor, prompt editing, and inference logs.

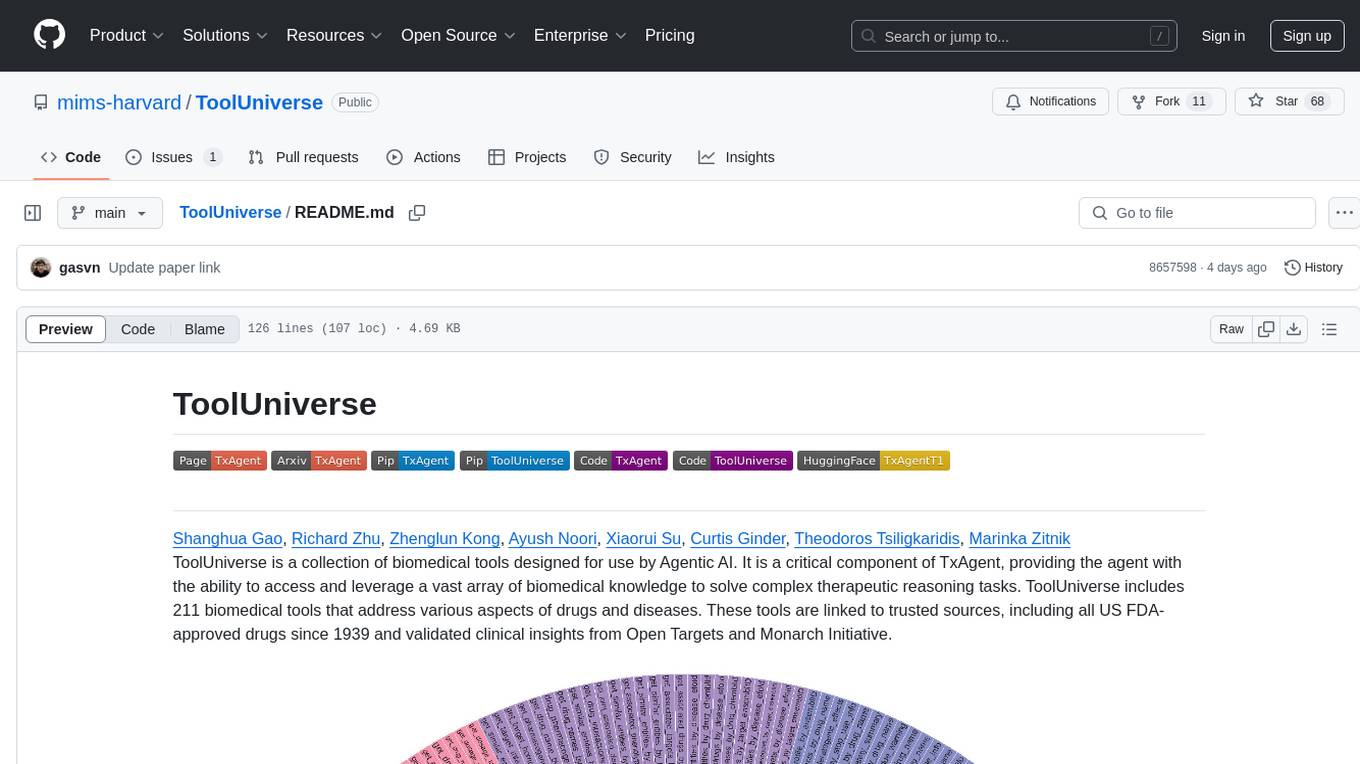

ToolUniverse

ToolUniverse is a collection of 211 biomedical tools designed for Agentic AI, providing access to biomedical knowledge for solving therapeutic reasoning tasks. The tools cover various aspects of drugs and diseases, linked to trusted sources like US FDA-approved drugs since 1939, Open Targets, and Monarch Initiative.

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

aiometadata

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources like TMDB, TVDB, MyAnimeList, AniList, IMDb, and more. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, user configuration, global caching, advanced ID mapping, and a modern UI. Users can host it or self-host using Docker Compose, configure catalogs, providers, search engines, integrations, and security settings via a UI, and access various API endpoints for managing user config, cache, posters, images, and more. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, MDBList, and more. Development involves backend and frontend setup using Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

OpenChat

OS Chat is a free, open-source AI personal assistant that combines 40+ language models with powerful automation capabilities. It allows users to deploy background agents, connect services like Gmail, Calendar, Notion, GitHub, and Slack, and get things done through natural conversation. With features like smart automation, service connectors, AI models, chat management, interface customization, and premium features, OS Chat offers a comprehensive solution for managing digital life and workflows. It prioritizes privacy by being open source and self-hostable, with encrypted API key storage.

For similar tasks

kork

Kork is an experimental Langchain chain that helps build natural language APIs powered by LLMs. It allows assembling a natural language API from python functions, generating a prompt for correct program writing, executing programs safely, and controlling the kind of programs LLMs can generate. The language is limited to variable declarations, function invocations, and arithmetic operations, ensuring predictability and safety in production settings.

llm-metadata

LLM Metadata is a lightweight static API designed for discovering and integrating LLM metadata. It provides a high-throughput friendly, static-by-default interface that serves static JSON via GitHub Pages. The sources for the metadata include models.dev/api.json and contributions from the basellm community. The tool allows for easy rebuilding on change and offers various scripts for compiling TypeScript, building the API, and managing the project. It also supports internationalization for both documentation and API, enabling users to add new languages and localize capability labels and descriptions. The tool follows an auto-update policy based on a configuration file and allows for directory-based overrides for providers and models, facilitating customization and localization of metadata.

basilic

Basilic is a full-stack monorepo starter designed for teams developing Web3 and AI applications. It provides SDKs, public APIs, and multichain support to accelerate feature shipping. The starter includes AI-first development workflows, REST API with JWT authentication, SDK generation, Web3 and AI templates, design system, preconfigured development tools, security measures, multichain support, and TypeScript-first approach. It offers a technology stack covering AI, frontend, backend, Web3, and DevOps, along with various apps, packages, and scripts for setup, formatting, linting, testing, security, hooks, and miscellaneous tasks.

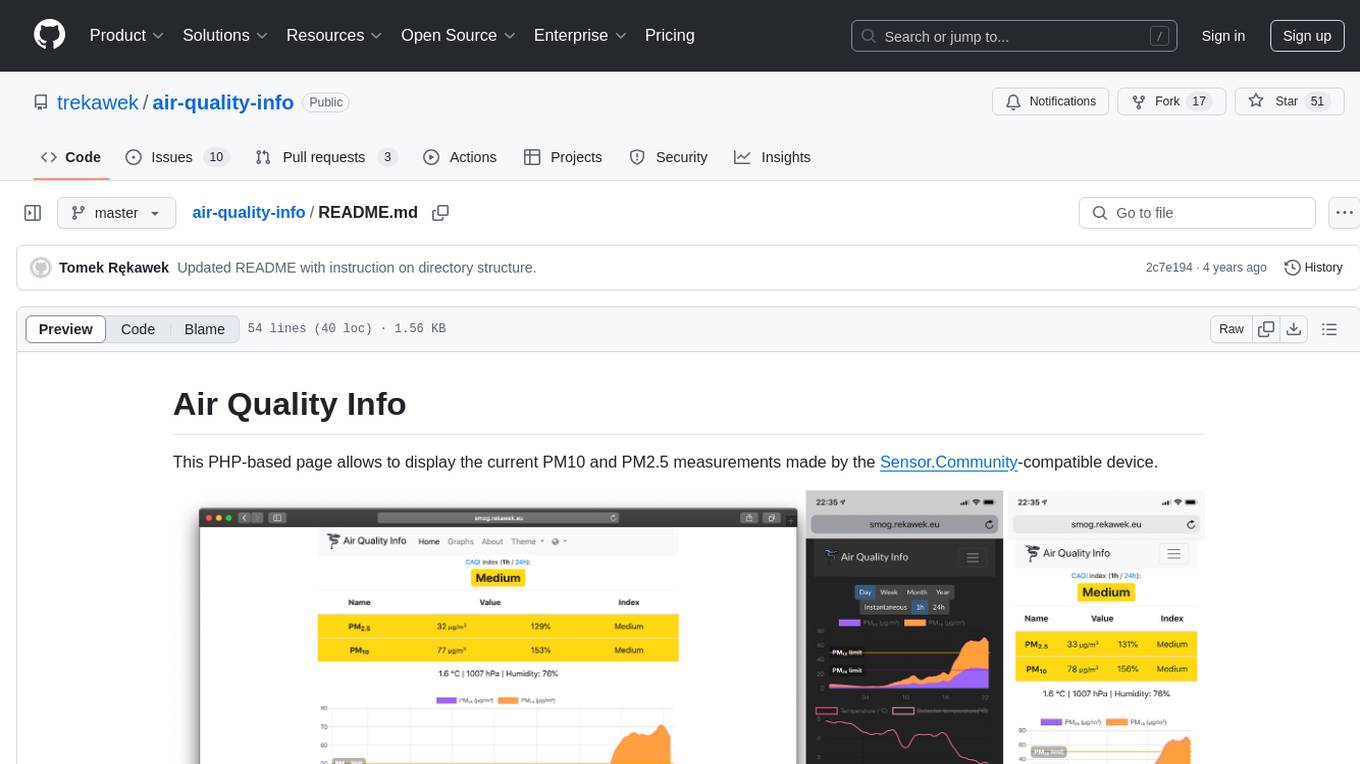

air-quality-info

Air Quality Info is a PHP-based page that displays current PM10 and PM2.5 measurements from Sensor.Community-compatible devices. It features a clean interface, stores records in MySQL, renders graphs with ChartJS, supports multiple devices, offers locale support, and functions as a Progressive Web App. The project setup involves creating directory structures, setting permissions, and starting Docker containers. The admin dashboard is accessible at http://aqi.eco.localhost:8080/, while the Air Quality Info pages use a specific naming schema. The project is supported by Nettigo Air Monitor, Sensor.Community, and a forum thread in Polish.

aspire-ai-chat-demo

Aspire AI Chat is a full-stack chat sample that combines modern technologies to deliver a ChatGPT-like experience. The backend API is built with ASP.NET Core and interacts with an LLM using Microsoft.Extensions.AI. It uses Entity Framework Core with CosmosDB for flexible, cloud-based NoSQL storage. The AI capabilities include using Ollama for local inference and switching to Azure OpenAI in production. The frontend UI is built with React, offering a modern and interactive chat experience.

ant-design-x-vue

Ant Design X Vue is a Vue implementation of the popular Ant Design X library. It provides a set of UI components and design patterns for Vue projects, allowing developers to easily create modern and responsive user interfaces. The repository includes installation instructions, development commands, and links to related resources such as Vue and Ant Design Vue. Contributions are welcome, and the project is licensed under MIT.

WhiskeyAI

WhiskeyAI is a Next.js project that serves as a starting point for developing web applications. It includes a development server for live previewing changes and utilizes next/font for optimizing and loading the Geist font family. The project encourages contributions and feedback from users, providing resources for learning Next.js and deploying applications on the Vercel platform.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.