aiometadata

my space for aiometadata

Stars: 184

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources such as TMDB, TVDB, MyAnimeList, AniList, and IMDb. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, per-user configuration, global caching, advanced ID mapping, and a modern UI. You can use a hosted instance or self-host with Docker Compose; configure catalogs, providers, search engines, integrations, and security settings through the UI; and call API endpoints for user config, cache, posters, and images. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, and MDBList, among others. Development uses separate backend and frontend setups, with Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

README:

AIOMetadata is a next-generation, power-user-focused metadata addon for Stremio. It aggregates and enriches movie, series, and anime metadata from multiple sources (TMDB, TVDB, MyAnimeList, AniList, IMDb, TVmaze, Fanart.tv, MDBList, and more), giving you full control over catalog sources, artwork, and search.

- Multi-Source Metadata: Choose your preferred provider for each type (movie, series, anime) — TMDB, TVDB, MAL, AniList, IMDb, TVmaze, etc.

- Rich Artwork: High-quality posters, backgrounds, and logos from TMDB, TVDB, Fanart.tv, AniList, and more, with language-aware selection and fallback.

- Anime Power: Deep anime support with MAL, AniList, Kitsu, AniDB, and TVDB/IMDb mapping, including studio, genre, decade, and schedule catalogs.

- Custom Catalogs: Add, reorder, and delete catalogs (including MDBList, streaming, and custom lists) in a sortable UI.

- Streaming Catalogs: Integrate streaming provider catalogs (Netflix, Disney+, etc.) with region and monetization filters.

- Dynamic Search: Enable/disable search engines per type (movie, series, anime) and use AI-powered search (Gemini) if desired.

- User Config & Passwords: Secure, per-user configuration with password and optional addon password protection. Trusted UUIDs for seamless re-login.

- Global & Self-Healing Caching: Redis-backed, ETag-aware, and self-healing cache for fast, reliable metadata and catalog responses.

- Advanced ID Mapping: Robust mapping between all major ID systems (MAL, TMDB, TVDB, IMDb, AniList, AniDB, Kitsu, TVmaze).

- Modern UI: Intuitive React/Next.js configuration interface with drag-and-drop, tooltips, and instant feedback.
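To make the "ETag-aware" part of the caching feature concrete, here is a minimal sketch of the general HTTP technique, not AIOMetadata's actual implementation. The function names `make_etag` and `respond` are hypothetical, and hashing the body with SHA-256 is an assumption chosen for illustration.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (hypothetical helper).

    ETag values are quoted strings; a truncated SHA-256 digest is an
    assumption here, any stable fingerprint of the body would do.
    """
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a cached response.

    If the client's If-None-Match value equals the current ETag, the
    client already holds this exact body, so answer 304 with no payload.
    """
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match is not None and if_none_match == etag:
        return 304, headers, b""
    return 200, headers, body


# First request: no validator, so the full body is sent.
status, headers, payload = respond(b'{"metas": []}', None)

# Repeat request: the client echoes the ETag and gets an empty 304.
status_again, _, payload_again = respond(b'{"metas": []}', headers["ETag"])
```

The benefit is that unchanged catalog or metadata responses cost only a header exchange on repeat requests, while any change to the body produces a new ETag and a full 200 response.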

1. Visit your hosted instance's /configure page.

2. Configure your catalogs, providers, and preferences.

3. Save your config and install the generated Stremio addon URL.

services:
  aiometadata:
    image: ghcr.io/cedya77/aiometadata:latest
    container_name: aiometadata
    restart: unless-stopped
    ports:
      - "3232:3232" # Remove this if using Traefik
    # expose: # Uncomment if using Traefik
    #   - 3232
    env_file:
      - .env
    # labels: # Optional: Remove if not using Traefik
    #   - "traefik.enable=true"
    #   - "traefik.http.routers.aiometadata.rule=Host(`${AIOMETADATA_HOSTNAME?}`)"
    #   - "traefik.http.routers.aiometadata.entrypoints=websecure"
    #   - "traefik.http.routers.aiometadata.tls.certresolver=letsencrypt"
    #   - "traefik.http.routers.aiometadata.middlewares=authelia@docker"
    #   - "traefik.http.services.aiometadata.loadbalancer.server.port=3232"
    volumes:
      - ${DOCKER_DATA_DIR}/aiometadata/data:/app/addon/data
    depends_on:
      aiometadata_redis:
        condition: service_healthy
    tty: true
    healthcheck:
      test: ["CMD", "wget", "--no-verbose", "--tries=1", "--spider", "http://localhost:3232/health"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s

  aiometadata_redis:
    image: redis:latest
    container_name: aiometadata_redis
    restart: unless-stopped
    volumes:
      - ${DOCKER_DATA_DIR}/aiometadata/cache:/data
    command: redis-server --appendonly yes --save 60 1
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 5s
      retries: 5

  #aiometadata_postgres:
  #  image: postgres:latest
  #  container_name: aiometadata_postgres
  #  restart: unless-stopped
  #  environment:
  #    - POSTGRES_DB=aiometadata
  #    - POSTGRES_USER=postgres
  #    - POSTGRES_PASSWORD=password
  #  volumes:
  #    - ${DOCKER_DATA_DIR}/aiometadata/postgres:/var/lib/postgresql/data
  #  healthcheck:
  #    test: ["CMD-SHELL", "pg_isready -U postgres -d aiometadata"]
  #    interval: 10s
  #    timeout: 5s
  #    retries: 5

Create a .env file with your API keys and settings as shown in .env.example.

Then run:

docker compose up -d

- Catalogs: Add, remove, and reorder catalogs (TMDB, TVDB, MAL, AniList, MDBList, streaming, etc.).

- Providers: Set preferred metadata and artwork provider for each type.

- Search: Enable/disable search engines per type; enable AI search with Gemini API key.

- Integrations: Connect MDBList and more for personal lists.

- Security: Set user and (optional) addon password for config protection.

All configuration is managed via the /configure UI and saved per-user (UUID) in the database.

- /stremio/:userUUID/:compressedConfig/manifest.json - Stremio manifest (per-user config)

- /api/config/save - Save user config (POST)

- /api/config/load/:userUUID - Load user config (POST)

- /api/config/update/:userUUID - Update user config (PUT)

- /api/config/is-trusted/:uuid - Check if UUID is trusted (GET)

- /api/cache/* - Cache health and admin endpoints

- /poster/:type/:id - Poster proxy with fallback and RPDB support

- /resize-image - Image resize proxy

- /api/image/blur - Image blur proxy
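As a rough illustration of how the route templates above expand into concrete URLs, the following sketch builds a manifest URL and an is-trusted URL, and probes the /health path used by the Docker Compose healthcheck. The helper names, the base URL, and the placeholder UUID and config values are hypothetical; request bodies and response formats are not documented here, so the sketch does not assume any. Both path values are assumed to be URL-safe already.

```python
import urllib.request


def manifest_url(base_url: str, user_uuid: str, compressed_config: str) -> str:
    """Fill in /stremio/:userUUID/:compressedConfig/manifest.json (hypothetical helper)."""
    base = base_url.rstrip("/")
    return f"{base}/stremio/{user_uuid}/{compressed_config}/manifest.json"


def is_trusted_url(base_url: str, uuid: str) -> str:
    """Fill in /api/config/is-trusted/:uuid, which is queried with GET."""
    return f"{base_url.rstrip('/')}/api/config/is-trusted/{uuid}"


def is_healthy(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if GET /health answers with HTTP 200.

    /health is the path the Compose healthcheck polls; treating any
    network or HTTP error as "unhealthy" is an assumption of this sketch.
    """
    try:
        with urllib.request.urlopen(f"{base_url.rstrip('/')}/health", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError and HTTPError are both OSError subclasses
        return False


# Placeholder values for illustration only.
BASE = "http://localhost:3232"
example_manifest = manifest_url(BASE, "my-uuid", "my-config")
example_trusted = is_trusted_url(BASE, "my-uuid")
```

With a self-hosted instance on the default port mapping, `is_healthy("http://localhost:3232")` gives a quick check that the container is up before installing the manifest URL in Stremio.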

- Movies/Series: TMDB, TVDB, IMDb, TVmaze

- Anime: MyAnimeList (MAL), AniList, Kitsu, AniDB, TVDB, IMDb

- Artwork: TMDB, TVDB, Fanart.tv, AniList, RPDB

- Personal Lists: MDBList, MAL, AniList

- Streaming: Netflix, Disney+, Amazon, and more (via TMDB watch providers)

# Backend

npm run dev:server

# Frontend

npm run dev

- Edit /addon for backend, /configure for frontend.

- Uses Redis for caching, SQLite/PostgreSQL for config storage.

Apache 2.0 — see LICENSE.

Special thanks to MrCanelas, the original developer of the TMDB Addon for Stremio, whose work inspired and laid the groundwork for this project.

This addon aggregates metadata from third-party sources. Data accuracy and availability are not guaranteed.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiometadata

Similar Open Source Tools

aiometadata

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources like TMDB, TVDB, MyAnimeList, AniList, IMDb, and more. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, user configuration, global caching, advanced ID mapping, and a modern UI. Users can host it or self-host using Docker Compose, configure catalogs, providers, search engines, integrations, and security settings via a UI, and access various API endpoints for managing user config, cache, posters, images, and more. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, MDBList, and more. Development involves backend and frontend setup using Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

ProxyPilot

ProxyPilot is a powerful local API proxy tool built in Go that eliminates the need for separate API keys when using Claude Code, Codex, Gemini, Kiro, and Qwen subscriptions with any AI coding tool. It handles OAuth authentication, token management, and API translation automatically, providing a single server to route requests. The tool supports multiple authentication providers, universal API translation, tool calling repair, extended thinking models, OAuth integration, multi-account support, quota auto-switching, usage statistics tracking, context compression, agentic harness for coding agents, session memory, system tray app, auto-updates, rollback support, and over 60 management APIs. ProxyPilot also includes caching layers for response and prompt caching to reduce latency and token usage.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments can be found here. ### Key Features * **🚀 Deploy** : Instantly launch production-ready RAG pipelines with streaming capabilities. * **🧩 Customize** : Tailor your pipeline with intuitive configuration files. * **🔌 Extend** : Enhance your pipeline with custom code integrations. * **⚖️ Autoscale** : Scale your pipeline effortlessly in the cloud using SciPhi. * **🤖 OSS** : Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

CyberStrikeAI

CyberStrikeAI is an AI-native security testing platform built in Go that integrates 100+ security tools, an intelligent orchestration engine, role-based testing with predefined security roles, a skills system with specialized testing skills, and comprehensive lifecycle management capabilities. It enables end-to-end automation from conversational commands to vulnerability discovery, attack-chain analysis, knowledge retrieval, and result visualization, delivering an auditable, traceable, and collaborative testing environment for security teams. The platform features an AI decision engine with OpenAI-compatible models, native MCP implementation with various transports, prebuilt tool recipes, large-result pagination, attack-chain graph, password-protected web UI, knowledge base with vector search, vulnerability management, batch task management, role-based testing, and skills system.

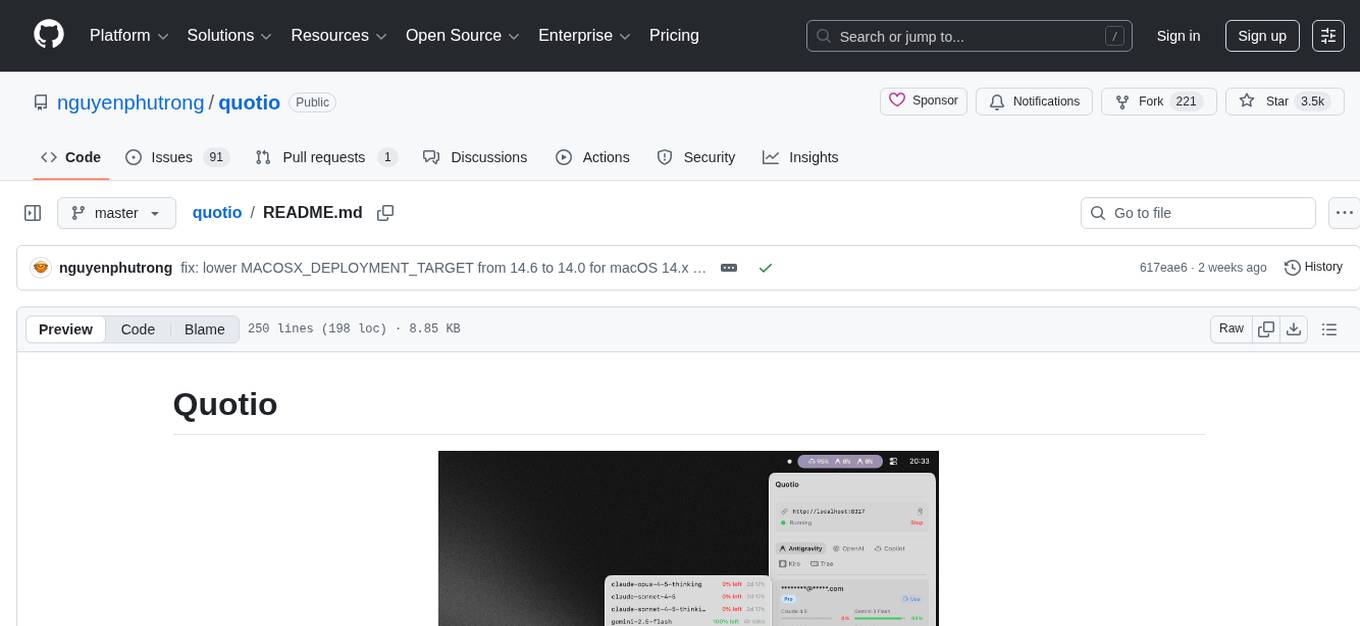

quotio

Quotio is a native macOS application designed as the ultimate command center for managing CLIProxyAPI, a local proxy server that powers AI coding agents. It allows users to connect multiple AI accounts, track quotas, configure CLI tools, and monitor request traffic in real-time. With features like multi-provider support, standalone quota mode, one-click agent configuration, real-time dashboard, smart quota management, API key management, menu bar integration, notifications, auto-update, and multilingual support, Quotio offers a comprehensive solution for AI coding assistants on macOS.

executorch

ExecuTorch is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch Edge ecosystem and enables efficient deployment of PyTorch models to edge devices. Key value propositions of ExecuTorch are: * **Portability:** Compatibility with a wide variety of computing platforms, from high-end mobile phones to highly constrained embedded systems and microcontrollers. * **Productivity:** Enabling developers to use the same toolchains and SDK from PyTorch model authoring and conversion, to debugging and deployment to a wide variety of platforms. * **Performance:** Providing end users with a seamless and high-performance experience due to a lightweight runtime and utilizing full hardware capabilities such as CPUs, NPUs, and DSPs.

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI apps directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files to text, generate simple text, create a long-term memory, and generate images with Dall-E. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

zotero-mcp

Zotero MCP is an open-source project that integrates AI capabilities with Zotero using the Model Context Protocol. It consists of a Zotero plugin and an MCP server, enabling AI assistants to search, retrieve, and cite references from Zotero library. The project features a unified architecture with an integrated MCP server, eliminating the need for a separate server process. It provides features like intelligent search, detailed reference information, filtering by tags and identifiers, aiding in academic tasks such as literature reviews and citation management.

PageTalk

PageTalk is a browser extension that enhances web browsing by integrating Google's Gemini API. It allows users to select text on any webpage for AI analysis, translation, contextual chat, and customization. The tool supports multi-agent system, image input, rich content rendering, PDF parsing, URL context extraction, personalized settings, chat export, text selection helper, and proxy support. Users can interact with web pages, chat contextually, manage AI agents, and perform various tasks seamlessly.

flashinfer

FlashInfer is a library for Language Languages Models that provides high-performance implementation of LLM GPU kernels such as FlashAttention, PageAttention and LoRA. FlashInfer focus on LLM serving and inference, and delivers state-the-art performance across diverse scenarios.

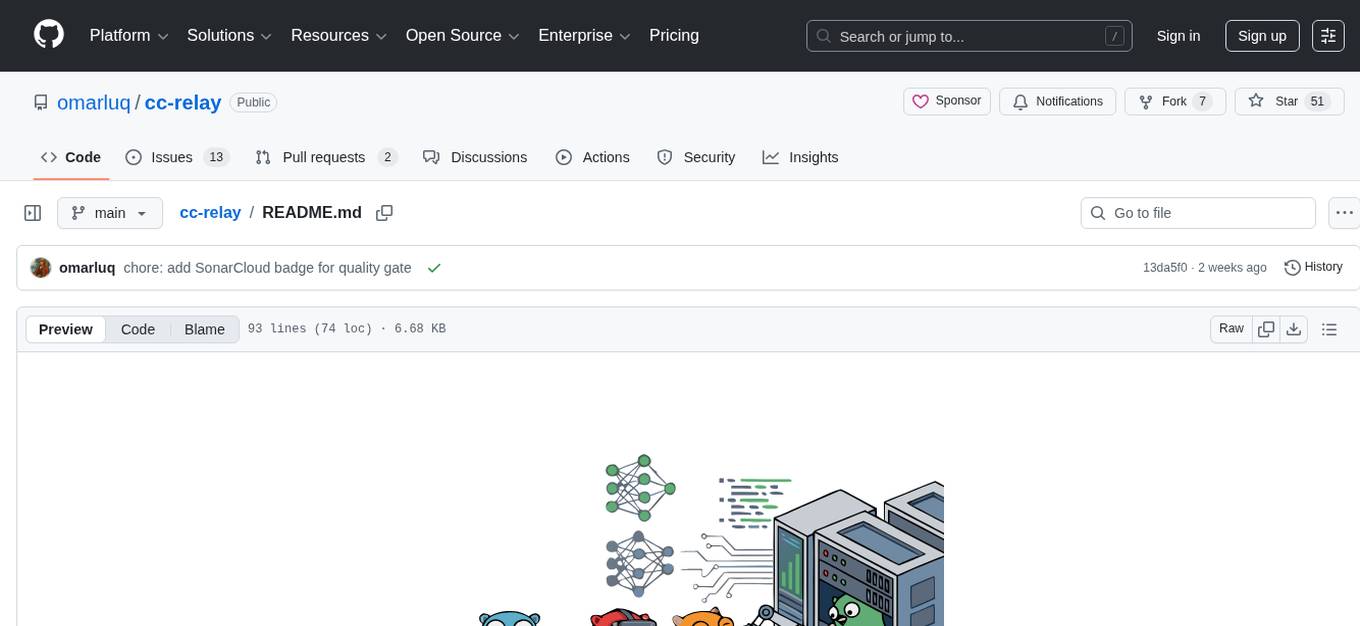

Dive

Dive is an open-source MCP Host Desktop Application that seamlessly integrates with any LLMs supporting function calling capabilities. It offers universal LLM support, cross-platform compatibility, model context protocol for AI agent integration, OAP cloud integration, dual architecture for optimal performance, multi-language support, advanced API management, granular tool control, custom instructions, auto-update mechanism, and more. Dive provides a user-friendly interface for managing multiple AI models and tools, with recent updates introducing major architecture changes, new features, improvements, and platform availability. Users can easily download and install Dive on Windows, MacOS, and Linux, and set up MCP tools through local servers or OAP cloud services.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

For similar tasks

aiometadata

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources like TMDB, TVDB, MyAnimeList, AniList, IMDb, and more. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, user configuration, global caching, advanced ID mapping, and a modern UI. Users can host it or self-host using Docker Compose, configure catalogs, providers, search engines, integrations, and security settings via a UI, and access various API endpoints for managing user config, cache, posters, images, and more. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, MDBList, and more. Development involves backend and frontend setup using Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

cc-relay

CC-Relay is a tool designed to boost Claude Code by routing to multiple Anthropic-compatible providers. It allows users to pool rate limits across multiple Anthropic API keys, save money by routing tasks to lighter models, ensure automatic failover between providers, and use company's Bedrock/Azure/Vertex alongside personal API keys. The tool facilitates seamless integration and efficient utilization of various AI service providers for enhanced performance and cost-effectiveness.

LakeSoul

LakeSoul is a cloud-native Lakehouse framework that supports scalable metadata management, ACID transactions, efficient and flexible upsert operation, schema evolution, and unified streaming & batch processing. It supports multiple computing engines like Spark, Flink, Presto, and PyTorch, and computing modes such as batch, stream, MPP, and AI. LakeSoul scales metadata management and achieves ACID control by using PostgreSQL. It provides features like automatic compaction, table lifecycle maintenance, redundant data cleaning, and permission isolation for metadata.

bella-openapi

Bella OpenAPI is an API gateway that provides rich AI capabilities, similar to openrouter. In addition to chat completion ability, it also offers text embedding, ASR, TTS, image-to-image, and text-to-image AI capabilities. It integrates billing, rate limiting, and resource management functions. All integrated capabilities have been validated in large-scale production environments. The tool supports various AI capabilities, metadata management, unified login service, billing and rate limiting, and has been validated in large-scale production environments for stability and reliability. It offers a user-friendly experience with Java-friendly technology stack, convenient cloud-based experience service, and Dockerized deployment.

datachain

DataChain is a Python-based AI-data warehouse for transforming and analyzing unstructured data like images, audio, videos, text, and PDFs. It integrates with external storage to process data efficiently without duplication and manages metadata for easy querying. Use cases include ETL, analytics, versioning, and incremental processing. Key features include multimodal dataset versioning, Python-friendly operations, data enrichment, and processing. The tool allows for generating metadata using AI models, filtering, joining, and grouping datasets, and performing high-performance vectorized operations.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.