cc-relay

⚡️ Blazing fast LLMs API Gateway written in Go

Stars: 51

CC-Relay is a tool designed to boost Claude Code by routing requests to multiple Anthropic-compatible providers. It lets users pool rate limits across multiple Anthropic API keys, save money by routing simple tasks to lighter models, fail over automatically between providers, and use their company's Bedrock/Azure/Vertex alongside personal API keys.

README:

Claude Code connects to one provider at a time. But what if you want to:

🔑 Pool rate limits across multiple Anthropic API keys

💰 Save money by routing simple tasks to lighter models

🛡️ Never get stuck with automatic failover between providers

🏢 Use your company's Bedrock/Azure/Vertex alongside personal API keys

cc-relay makes all of this possible.

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#6366f1', 'primaryTextColor': '#fff', 'primaryBorderColor': '#4f46e5', 'lineColor': '#94a3b8', 'secondaryColor': 'transparent', 'tertiaryColor': 'transparent', 'background': 'transparent', 'mainBkg': 'transparent', 'nodeBorder': '#4f46e5', 'clusterBkg': 'transparent', 'clusterBorder': 'transparent', 'titleColor': '#1e293b', 'edgeLabelBackground': 'transparent'}}}%%

flowchart LR

CC["👾 Claude Code"]

RELAY["⚡ CC-Relay"]

subgraph providers[" "]

direction TB

ANT["🤖 Anthropic"]

ZAI["🤖 Z.AI"]

OLL["🦙 Ollama"]

BED["🤖 AWS Bedrock"]

AZU["🤖 Azure Foundry"]

VTX["🤖 Vertex AI"]

end

CC --> RELAY

RELAY --> ANT

RELAY --> ZAI

RELAY --> OLL

RELAY --> BED

RELAY --> AZU

RELAY --> VTX

style CC fill:#1e1e2e,stroke:#6366f1,stroke-width:2px,color:#fff

style RELAY fill:#6366f1,stroke:#4f46e5,stroke-width:3px,color:#fff

style ANT fill:#ff6b35,stroke:#e55a2b,stroke-width:2px,color:#fff

style ZAI fill:#3b82f6,stroke:#2563eb,stroke-width:2px,color:#fff

style OLL fill:#22c55e,stroke:#16a34a,stroke-width:2px,color:#fff

style BED fill:#f59e0b,stroke:#d97706,stroke-width:2px,color:#fff

style AZU fill:#0ea5e9,stroke:#0284c7,stroke-width:2px,color:#fff

style VTX fill:#ef4444,stroke:#dc2626,stroke-width:2px,color:#fff

style providers fill:transparent,stroke:transparent

AGPL-3.0 - © 2026 Omar Alani

Contributions welcome! Please open an issue before submitting PRs.

Alternative AI tools for cc-relay

Similar Open Source Tools

herc.ai

Herc.ai is a library for interacting with the Herc.ai API. It offers free access and supports all languages. With a one-time subscription and an API key, users get unlimited access to its features. The tool provides question answering and text-to-image generation, with support for various models and customization options. It can be used from the CLI, CommonJS, and TypeScript, and it supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

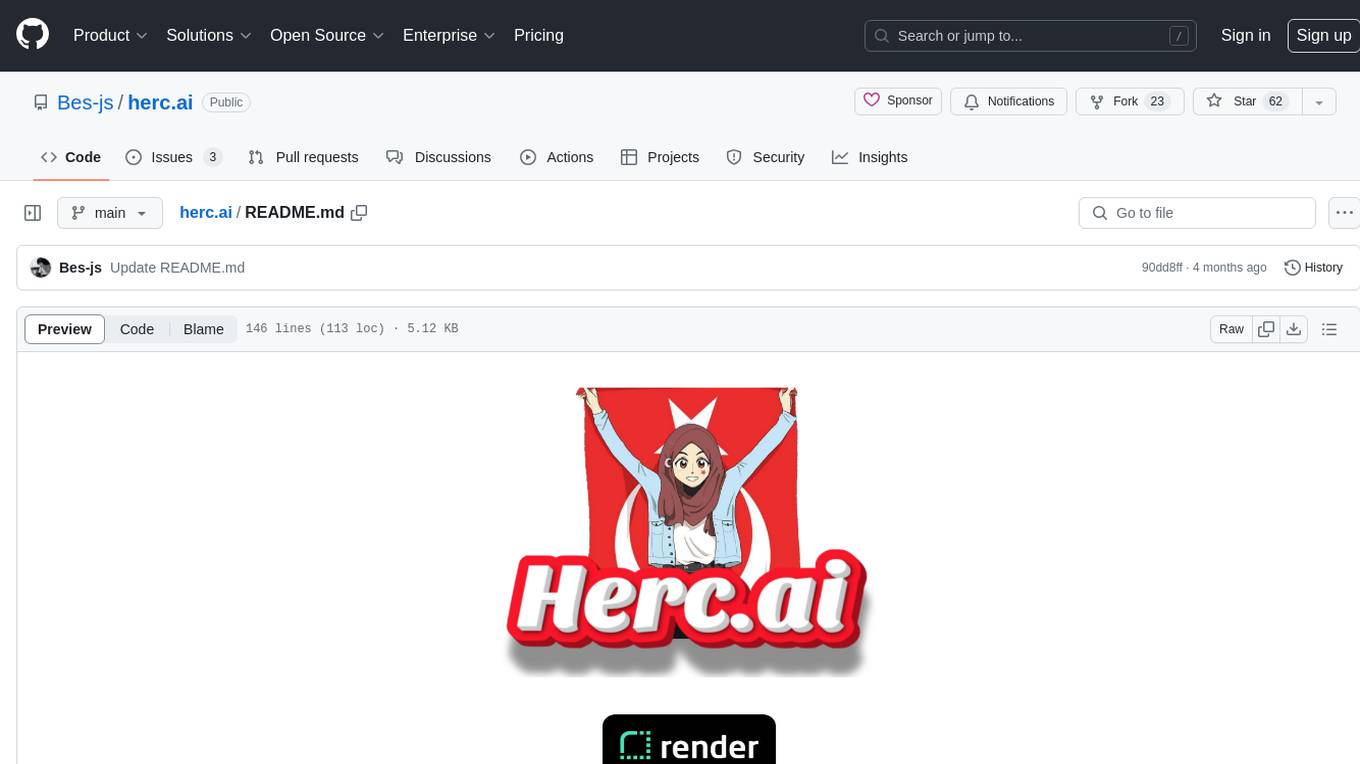

llm_model_hub

Model Hub V2 is a one-stop platform for model fine-tuning, deployment, and debugging without code, providing users with a visual interface to quickly validate the effects of fine-tuning various open-source models, facilitating rapid experimentation and decision-making, and lowering the threshold for users to fine-tune large models. For detailed instructions, please refer to the Feishu documentation.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. It includes utilities for code generation and test input generation using various backends.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

Janus

Janus is a series of unified multimodal understanding and generation models, including Janus-Pro, Janus, and JanusFlow. Janus-Pro is an advanced version that improves both multimodal understanding and visual generation significantly. Janus decouples visual encoding for unified multimodal understanding and generation, surpassing previous models. JanusFlow harmonizes autoregression and rectified flow for unified multimodal understanding and generation, achieving comparable or superior performance to specialized models. The models are available for download and usage, supporting a broad range of research in academic and commercial communities.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

Groq2API

Groq2API is a REST API wrapper around the Groq2 model, a large language model trained by Google. The API allows you to send text prompts to the model and receive generated text responses. The API is easy to use and can be integrated into a variety of applications.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

sparrow

Sparrow is an open-source solution for efficient data extraction and processing from various documents and images. It handles forms, invoices, receipts, and other unstructured data sources. Sparrow has a modular architecture, offering independent services and pipelines optimized for robust performance. One of its key features is a pluggable architecture: you can integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. It also provides an API that processes and transforms your data into structured output, ready to be integrated with custom workflows. With Sparrow Agents, you can build independent LLM agents and invoke them from your system through the API.

**List of available agents:**

* **llamaindex** - RAG pipeline with LlamaIndex for PDF processing
* **vllamaindex** - RAG pipeline with LlamaIndex multimodal for image processing
* **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing
* **haystack** - RAG pipeline with Haystack for PDF processing
* **fcall** - Function call pipeline
* **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing
* **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing
* **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

botgroup.chat

botgroup.chat is a multi-person AI chat application based on React and Cloudflare Pages for free one-click deployment. It supports multiple AI roles participating in conversations simultaneously, providing an interactive experience similar to group chat. The application features real-time streaming responses, customizable AI roles and personalities, group management functionality, AI role mute function, Markdown format support, mathematical formula display with KaTeX, aesthetically pleasing UI design, and responsive design for mobile devices.

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

For similar tasks

aiometadata

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources like TMDB, TVDB, MyAnimeList, AniList, IMDb, and more. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, user configuration, global caching, advanced ID mapping, and a modern UI. Users can host it or self-host using Docker Compose, configure catalogs, providers, search engines, integrations, and security settings via a UI, and access various API endpoints for managing user config, cache, posters, images, and more. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, MDBList, and more. Development involves backend and frontend setup using Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries, and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.