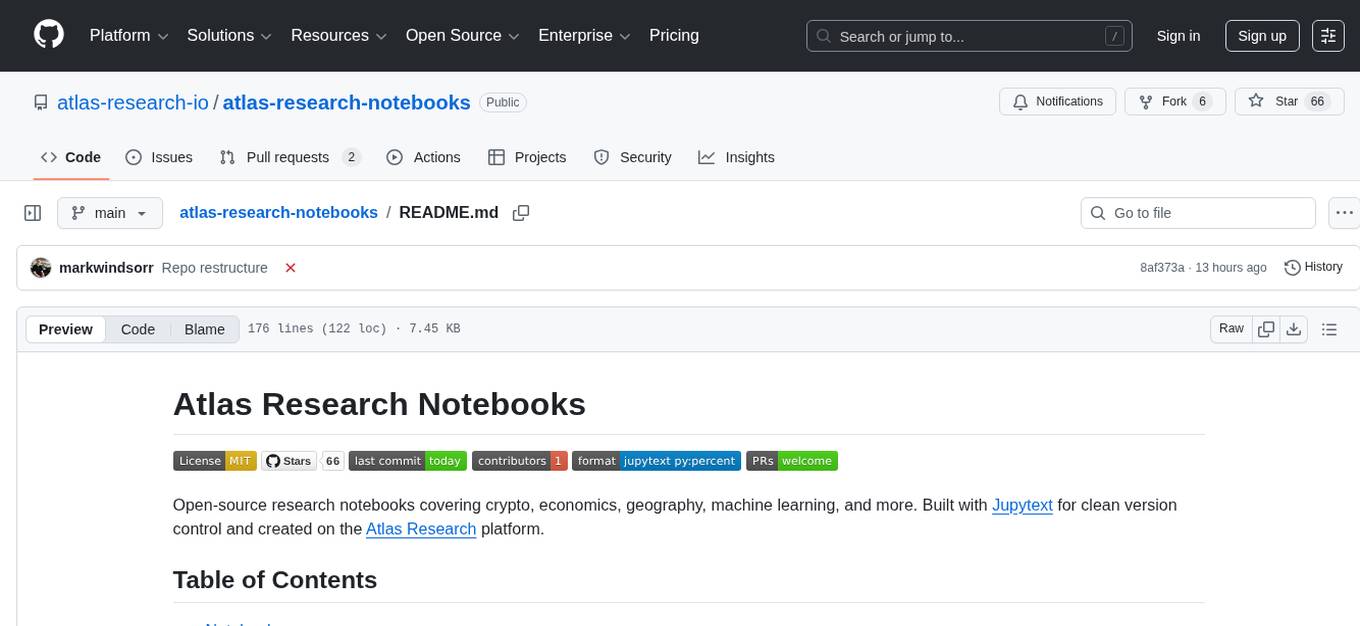

atlas-research-notebooks

From Prompt to Notebook. Some examples of taking ideas to execution with atlas-research.io

Stars: 66

A collection of open source sample code and research notebooks created using the atlas-research.io platform. The platform enables rapid code prototyping, data wrangling, trading strategy development, academic research reproduction, and collaborative research. The repository includes sections for cryptocurrency analysis, economics research, and machine learning models. It requires Python 3.11+, Jupyter Notebook or JupyterLab, and the packages each notebook installs as needed. It uses Jupytext to manage notebooks as Python scripts for better version control and code review. The notebooks demonstrate key platform features such as interactive development, data integration, visualization, reproducibility, and collaboration.

README:

A collection of open source sample code and research notebooks created using the atlas-research.io platform.

Atlas Research enables researchers, developers, and analysts to:

- Prototype Code: Rapidly develop and test ideas in interactive environments

- Data Wrangling: Clean, transform, and analyze complex datasets

- Trading Strategies: Prototype and backtest financial trading algorithms

- Academic Research: Reproduce code from academic papers and research publications

- Collaborative Research: Share and collaborate on research projects

atlas-research-notebooks/

├── crypto/ # Cryptocurrency analysis and trading strategies

│ ├── 001_crypto_correlation.py

│ ├── 002_implied_volatility_surface_analysis.py

│ └── 003_volume_profile_market_regime.py

├── economics/ # Economics Research

├── machine-learning/ # ML models and experiments

├── CONTRIBUTING.md # Contribution guidelines

├── LICENSE # MIT License

└── README.md

- Python 3.11+

- Jupyter Notebook or JupyterLab

- Required packages (installed per notebook as needed)

- Clone the repository:

git clone https://github.com/atlas-research-io/atlas-research-notebooks.git

cd atlas-research-notebooks

- Open any notebook and run the cells. Dependencies are installed automatically by the pip install cells in each notebook.
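The "pip install cells" mentioned above are not shown in this README, so the following is only a minimal sketch of how such a cell could behave when run outside a notebook. The helper name `ensure_package` is hypothetical and not part of the repository; it installs a package only when it cannot already be imported.

```python
import importlib.util
import subprocess
import sys
from typing import Optional


def ensure_package(import_name: str, pip_name: Optional[str] = None) -> bool:
    """Install a package with pip only if it cannot already be imported.

    Hypothetical helper, not part of the repository.
    Returns True if the package was already importable, False if pip was run.
    """
    if importlib.util.find_spec(import_name) is not None:
        return True
    # Use the running interpreter so the install targets the active environment.
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", pip_name or import_name]
    )
    return False
```

A notebook cell could then call, for example, `ensure_package("pandas")` before importing the library. Checking first avoids reinstalling on every run.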

This repository uses Jupytext to manage notebooks as Python scripts (.py files) instead of .ipynb files. This approach provides better version control and easier code review.
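To illustrate why a script representation is easier to diff and review, here is a small hypothetical notebook written in Jupytext's "percent" format, where `# %%` starts a code cell and `# %% [markdown]` starts a Markdown cell. Jupytext supports several script formats and this README does not say which one the repository uses, so treat the layout as an assumption. The data and variable names are invented for illustration.

```python
# %% [markdown]
# # Example notebook (hypothetical)
# Markdown cells are stored as ordinary comments.

# %%
# Hypothetical closing prices, invented for this sketch.
prices = [100.0, 101.5, 99.8, 102.3]

# %%
# Simple period-over-period returns from the prices above.
returns = [(b - a) / a for a, b in zip(prices, prices[1:])]
print([round(r, 4) for r in returns])
```

Because the file is plain Python, it runs as a normal script, and a change to one cell shows up as a small text diff rather than a change to notebook JSON.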

Recommended: Create and edit notebooks in atlas-research.io, then use the Jupytext export button in the top toolbar to export as .py files.

For local development, you can convert between formats:

# Install jupytext (or use requirements.txt)

pip install jupytext

# Convert a single .py file to .ipynb

jupytext --to notebook crypto/001_crypto_correlation.py

# Convert all .py files to .ipynb

jupytext --to notebook **/*.py

# Sync changes from .ipynb back to .py

jupytext --sync crypto/001_crypto_correlation.ipynb

Note: The repository only tracks .py files. Generated .ipynb files are ignored by git and can be created locally as needed.
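The "convert all" command relies on the shell expanding `**`, which recurses in some shells (for example zsh) but in bash only when the `globstar` option is enabled. As a shell-independent alternative, the sketch below finds the scripts with `pathlib` and calls the same `jupytext --to notebook` command on each one. The function names are hypothetical, and skipping hidden directories is an assumption made here to avoid touching folders such as `.git`.

```python
import subprocess
from pathlib import Path


def find_notebook_scripts(root: str) -> list[Path]:
    """Return every .py file under root, sorted, skipping hidden directories."""
    root_path = Path(root)
    return sorted(
        p
        for p in root_path.rglob("*.py")
        # Ignore anything inside a directory whose name starts with a dot.
        if not any(part.startswith(".") for part in p.relative_to(root_path).parts)
    )


def convert_all(root: str = ".") -> None:
    """Run `jupytext --to notebook` on each script found under root.

    Assumes the jupytext command-line tool is installed and on PATH.
    """
    for script in find_notebook_scripts(root):
        subprocess.run(["jupytext", "--to", "notebook", str(script)], check=True)
```

Calling `convert_all()` from the repository root would then convert each tracked script, regardless of which shell is in use.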

We welcome contributions! Please see CONTRIBUTING.md for guidelines on how to:

- Submit new research notebooks

- Improve existing examples

- Report issues and suggest enhancements

- Follow coding standards and best practices

These notebooks demonstrate key capabilities of the atlas-research.io platform:

- Interactive Development: Jupyter-based research environment

- Data Integration: Seamless connection to external APIs and data sources

- Visualization: Rich plotting and charting capabilities

- Reproducibility: Self-contained notebooks with dependency management

- Collaboration: Shareable research workflows

- Built with the Atlas Research platform

- Powered by open source libraries including Jupyter, pandas, matplotlib, and many others

Start your research journey at atlas-research.io


Alternative AI tools for atlas-research-notebooks

Similar Open Source Tools


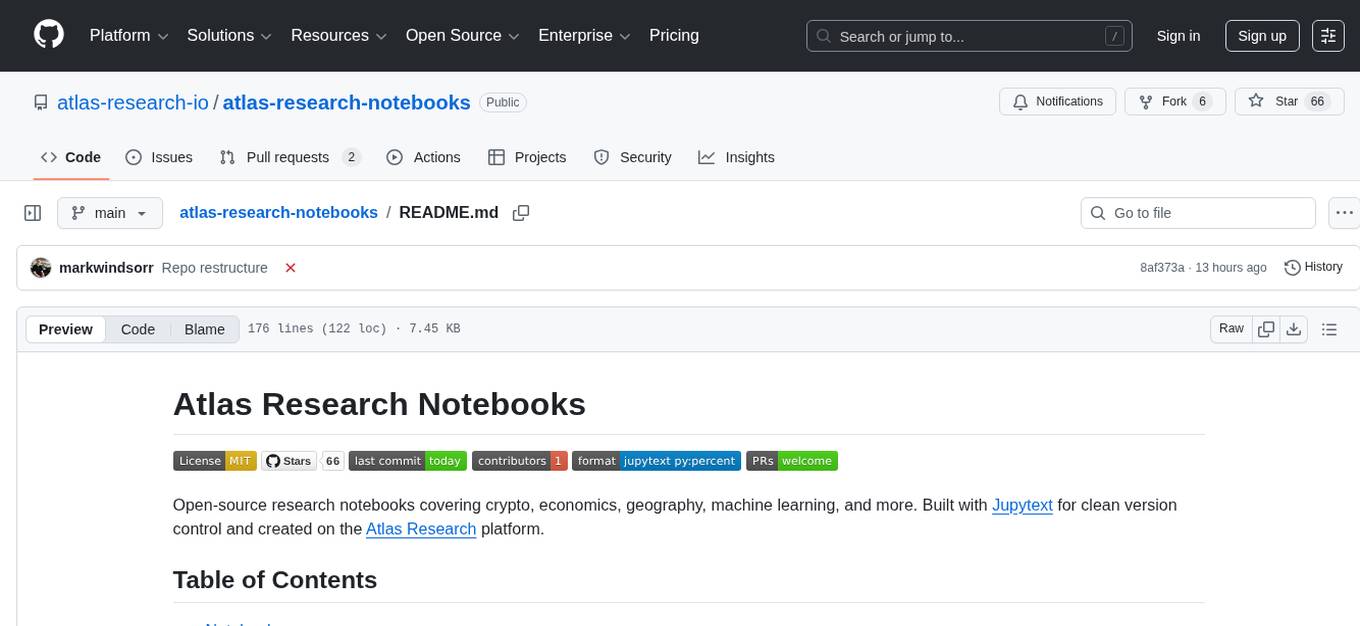

AI-Blueprints

This repository hosts a collection of AI blueprint projects for HP AI Studio, providing end-to-end solutions across key AI domains like data science, machine learning, deep learning, and generative AI. The projects are designed to be plug-and-play, utilizing open-source and hosted models to offer ready-to-use solutions. The repository structure includes projects related to classical machine learning, deep learning applications, generative AI, NGC integration, and troubleshooting guidelines for common issues. Each project is accompanied by detailed descriptions and use cases, showcasing the versatility and applicability of AI technologies in various domains.

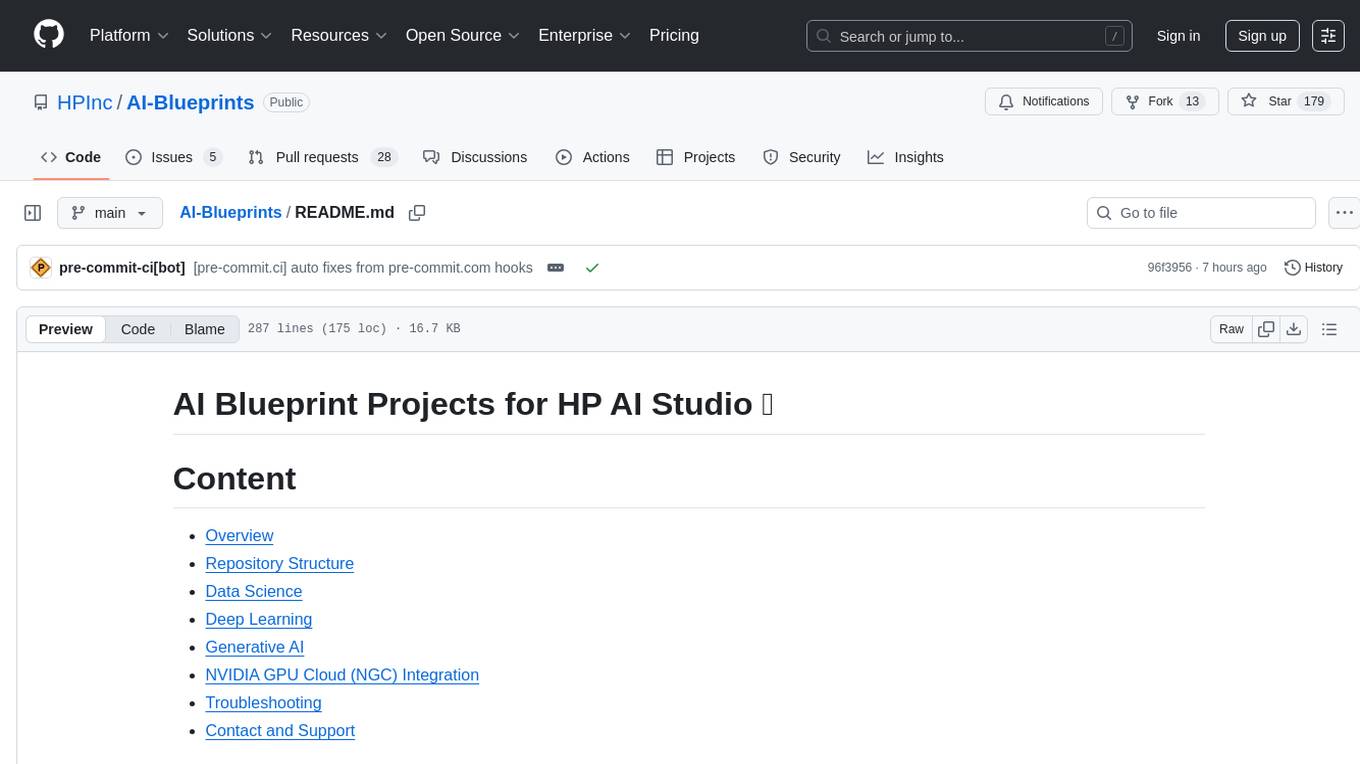

shandu

Shandu is an advanced AI research system that automates comprehensive research processes using language models, web scraping, and iterative exploration to generate well-structured reports with citations. It features intelligent state-based workflow, deep exploration, multi-source information synthesis, enhanced web scraping, smart source evaluation, content analysis pipeline, comprehensive report generation, parallel processing, adaptive search strategy, and full citation management.

Auto-Analyst

Auto-Analyst is an AI-driven data analytics agentic system designed to simplify and enhance the data science process. By integrating various specialized AI agents, this tool aims to make complex data analysis tasks more accessible and efficient for data analysts and scientists. Auto-Analyst provides a streamlined approach to data preprocessing, statistical analysis, machine learning, and visualization, all within an interactive Streamlit interface. It offers plug and play Streamlit UI, agents with data science speciality, complete automation, LLM agnostic operation, and is built using lightweight frameworks.

OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

rowfill

Rowfill is an open-source document processing platform designed for knowledge workers. It offers advanced AI capabilities to extract, analyze, and process data from complex documents, images, and PDFs. The platform features advanced OCR and processing functionalities, auto-schema generation, and custom actions for creating tailored workflows. It prioritizes privacy and security by supporting Local LLMs like Llama and Mistral, syncing with company data while maintaining privacy, and being open source with AGPLv3 licensing. Rowfill is a versatile tool that aims to streamline document processing tasks for users in various industries.

LAMBDA

LAMBDA is a code-free multi-agent data analysis system that utilizes large models to address data analysis challenges in complex data-driven applications. It allows users to perform complex data analysis tasks through human language instruction, seamlessly generate and debug code using two key agent roles, integrate external models and algorithms, and automatically generate reports. The system has demonstrated strong performance on various machine learning datasets, enhancing data science practice by integrating human and artificial intelligence.

seatunnel

SeaTunnel is a high-performance, distributed data integration tool trusted by numerous companies for synchronizing vast amounts of data daily. It addresses common data integration challenges by seamlessly integrating with diverse data sources, supporting multimodal data integration, complex synchronization scenarios, resource efficiency, and quality monitoring. With over 100 connectors, SeaTunnel offers batch-stream integration, distributed snapshot algorithm, multi-engine support, JDBC multiplexing, and log parsing. It provides high throughput, low latency, real-time monitoring, and supports two job development methods. Users can configure jobs, select execution engines, and parallelize data using source connectors. SeaTunnel also supports multimodal data integration, Apache SeaTunnel tools, real-world use cases, and visual management of jobs through the SeaTunnel Web Project.

MARBLE

MARBLE (Multi-Agent Coordination Backbone with LLM Engine) is a modular framework for developing, testing, and evaluating multi-agent systems leveraging Large Language Models. It provides a structured environment for agents to interact in simulated environments, utilizing cognitive abilities and communication mechanisms for collaborative or competitive tasks. The framework features modular design, multi-agent support, LLM integration, shared memory, flexible environments, metrics and evaluation, industrial coding standards, and Docker support.

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.

trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build a RDF style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.

mldl.study

MLDL.Study is a free interactive learning platform focused on simplifying Machine Learning (ML) and Deep Learning (DL) education for students and enthusiasts. It features curated roadmaps, videos, articles, and other learning materials. The platform aims to provide a comprehensive learning experience for Indian audiences, with easy-to-follow paths for ML and DL concepts, diverse resources including video tutorials and articles, and a growing community of over 6000 users. Contributors can add new resources following specific guidelines to maintain quality and relevance. Future plans include expanding content for global learners, introducing a Python programming roadmap, and creating roadmaps for fields like Generative AI and Reinforcement Learning.

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

openvino

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference. It provides a common API to deliver inference solutions on various platforms, including CPU, GPU, NPU, and heterogeneous devices. OpenVINO™ supports pre-trained models from Open Model Zoo and popular frameworks like TensorFlow, PyTorch, and ONNX. Key components of OpenVINO™ include the OpenVINO™ Runtime, plugins for different hardware devices, frontends for reading models from native framework formats, and the OpenVINO Model Converter (OVC) for adjusting models for optimal execution on target devices.

software-dev-prompt-library

A collection of AI-powered prompts designed to streamline software development workflows. The library contains prompts at various stages of development, with structured sequences of connected prompts, project initialization support, development assistance, and documentation generation. It aims to provide consistent guidance across different development phases, promote systematic development processes, and enable progress tracking and validation.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.