Advanced-Prompt-Generator

Automating prompt engineering using AI Agents.

Stars: 85

This project is an LLM-based Advanced Prompt Generator designed to automate the process of prompt engineering by enhancing given input prompts using large language models (LLMs). The tool can generate advanced prompts with minimal user input, leveraging LLM agents for optimized prompt generation. It supports gpt-4o or gpt-4o-mini, offers FastAPI & Docker deployment for efficiency, provides a Gradio interface for easy testing, and is hosted on Hugging Face Spaces for quick demos. Users can expand model support to offer more variety and flexibility.

README:

This project is an LLM-based Advanced Prompt Generator designed to automate the process of prompt engineering by enhancing given input prompts using large language models (LLMs). Following established prompt engineering principles, the tool can generate advanced prompts with a simple click, leveraging LLM agents for optimized prompt generation.

You can demo this solution on Hugging Face Spaces.

You can also read this Medium article about the solution for more details.

- Automated Prompt Engineering: AI-agent-based prompt engineering that requires minimal user input.

- Uses OpenAI APIs: Support for gpt-4o or gpt-4o-mini (we recommend gpt-4o-mini).

- FastAPI & Docker Deployment: Ensures efficient and scalable backend deployment.

- Gradio Interface: Provides an easy-to-use interface for testing the prompt generation.

- Hugging Face Integration: Hosted on Hugging Face Spaces for quick demos.

- Expand Model Support: Integrate additional models to offer more variety and flexibility.
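The agent-based flow in the feature list can be pictured as a chain of LLM calls, each one refining the previous result. The following is a minimal sketch under stated assumptions: `enhance_prompt`, `STAGES`, and `fake_llm` are hypothetical names invented for illustration, and the project's actual `pipeline.py` is not shown in this README and may work differently. The model is passed in as a plain callable so the example runs offline with a stub.

```python
from typing import Callable, List

# Hypothetical type: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]

# Hypothetical stage instructions; the project's real agent prompts are not shown here.
STAGES: List[str] = [
    "Assign an expert role and restate the request clearly:",
    "Add explicit requirements and an output structure:",
    "Suggest references and list the reasoning subtasks:",
]


def enhance_prompt(user_prompt: str, llm: LLM, stages: List[str] = STAGES) -> str:
    """Pass the prompt through each stage, feeding every result into the next call."""
    current = user_prompt.strip()
    if not current:
        raise ValueError("input prompt must not be empty")
    for instruction in stages:
        # Each stage sees the instruction, a blank line, then the current draft.
        current = llm(f"{instruction}\n\n{current}")
    return current


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a model call: returns the draft with a marker appended."""
    _instruction, _sep, draft = prompt.partition("\n\n")
    return f"{draft} (refined)"


print(enhance_prompt("how to write a book?", fake_llm))
```

To call a real model, `fake_llm` could be swapped for a function that wraps a chat-completion request to `gpt-4o-mini`; the chaining logic stays the same.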

├── .gitignore                      # Files and directories to be ignored by Git
├── LICENSE                         # License information for the project
├── README.md                       # Project documentation (this file)
├── Advancd_Prompt_Generator.py     # Script to test the tool locally
├── pipeline.py                     # Core logic for prompt enhancement
├── requirements.txt                # Python dependencies for the project
├── Docker-FastAPI-app              # Version deployed with FastAPI & Docker
│   ├── app
│   │   ├── main.py
│   │   ├── pipeline.py
│   ├── Dockerfile
│   ├── requirements.txt
├── Gradio-app                      # Version deployed with Gradio
│   ├── app.py
│   ├── pipeline.py
│   ├── requirements.txt

>>> Input Prompt:

how to write a book?

>>> Enhanced Prompt:

As a knowledgeable writing coach, please provide a comprehensive guide on how to write a book.

Requirements:

1. Outline the key steps involved in the book writing process, including brainstorming, outlining, drafting, and revising.

2. Offer tips for maintaining motivation and overcoming writer's block.

3. Include advice on setting a writing schedule and establishing a writing environment.

4. Suggest resources for further learning about writing techniques and publishing options.

Structure your response as follows:

- Introduction to the book writing journey

- Step-by-step guide with actionable tips

- Strategies for motivation and productivity

- Recommended resources for aspiring authors

Keep the response medium in length (approximately 200-300 words) to ensure thorough coverage of the topic.

##REFERENCE SUGGESTIONS##

- "On Writing: A Memoir of the Craft" by Stephen King

Purpose: Offers insights into the writing process and practical advice for aspiring authors

Integration: Use as a guide for understanding the nuances of writing a book

- "The Elements of Style" by William Strunk Jr. and E.B. White

Purpose: Provides essential rules of English style and composition

Integration: Reference for improving writing clarity and effectiveness

- "Bird by Bird: Some Instructions on Writing and Life" by Anne Lamott

Purpose: Shares personal anecdotes and practical tips for overcoming writing challenges

Integration: Use for motivation and strategies to tackle the writing process

##THOUGHT PROCESS##

*Subtask 1*:

- **Description**: Introduce the book writing journey and its significance.

- **Reasoning**: Providing an introduction sets the context for aspiring authors, helping them understand the importance and challenges of writing a book.

- **Success criteria**: The introduction should clearly articulate the purpose of writing a book and inspire readers about the journey ahead.

*Subtask 2*:

- **Description**: Outline the key steps involved in the book writing process: brainstorming, outlining, drafting, and revising.

- **Reasoning**: Breaking down the writing process into clear steps helps authors navigate their journey systematically and reduces overwhelm.

- **Success criteria**: Each step should be defined with actionable tips, such as techniques for brainstorming ideas, structuring an outline, and strategies for effective drafting and revising.

*Subtask 3*:

- **Description**: Provide tips for maintaining motivation and overcoming writer's block.

- **Reasoning**: Addressing common challenges like writer's block is crucial for sustaining progress and ensuring authors remain engaged with their writing.

- **Success criteria**: Include practical strategies, such as setting small goals, taking breaks, and using prompts to reignite creativity.

*Subtask 4*:

- **Description**: Advise on setting a writing schedule and establishing a conducive writing environment.

- **Reasoning**: A structured writing schedule and a supportive environment are essential for productivity and focus during the writing process.

- **Success criteria**: Offer specific recommendations for daily writing routines and tips for creating a distraction-free workspace.

*Subtask 5*:

- **Description**: Suggest resources for further learning about writing techniques and publishing options.

- **Reasoning**: Providing additional resources empowers authors to deepen their knowledge and explore various publishing avenues.

- **Success criteria**: List reputable books, websites, and courses that cover writing skills and the publishing process, ensuring they are accessible and relevant to aspiring authors.
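The example output above has three parts: the enhanced prompt itself, a `##REFERENCE SUGGESTIONS##` block, and a `##THOUGHT PROCESS##` block. If only one part is needed downstream, the text can be split on those marker lines. This is a minimal sketch that assumes the markers always appear on their own line in upper case, as they do in this example; `split_enhanced_output` and the `PROMPT` label are hypothetical names, not part of the project.

```python
import re
from typing import Dict

# Marker lines as they appear in the example output, e.g. "##THOUGHT PROCESS##".
SECTION_MARKER = re.compile(r"^##(?P<name>[A-Z ]+)##[ \t]*$", re.MULTILINE)


def split_enhanced_output(text: str) -> Dict[str, str]:
    """Split tool output into the prompt body and its ##NAMED## sections."""
    sections: Dict[str, str] = {}
    name = "PROMPT"  # hypothetical label for the text before the first marker
    start = 0
    for match in SECTION_MARKER.finditer(text):
        # Close the section that was open, then open the one named by the marker.
        sections[name] = text[start:match.start()].strip()
        name = match.group("name").strip()
        start = match.end()
    sections[name] = text[start:].strip()
    return sections


sample = (
    "As a knowledgeable writing coach, please provide a guide.\n\n"
    "##REFERENCE SUGGESTIONS##\n\n- \"Bird by Bird\" by Anne Lamott\n\n"
    "##THOUGHT PROCESS##\n\n*Subtask 1*:\n"
)
parts = split_enhanced_output(sample)
print(list(parts))
```

Sending only `parts["PROMPT"]` to a model keeps the request short, while the other sections remain available for review.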

>>> Input Prompt:

write a python script to compute and plot the fibonacci spiral

>>> Enhanced Prompt:

As a programming expert, please create a Python script that computes and plots the Fibonacci spiral.

Requirements:

- Use the Fibonacci sequence to generate the necessary points for the spiral.

- Utilize libraries such as Matplotlib for plotting and NumPy for numerical calculations.

- Include comments explaining each step of the code.

- Ensure the script is modular, allowing for easy adjustments to the number of Fibonacci terms used in the spiral.

Provide the implementation with:

- Function to compute Fibonacci numbers up to a specified term.

- Function to plot the Fibonacci spiral using the computed points.

- Example usage demonstrating the script in action, including a plot display.

The Fibonacci spiral should start with the first few Fibonacci numbers and visually represent the growth of the spiral based on these values.

##REFERENCE SUGGESTIONS##

- Matplotlib Documentation

Purpose: Provides details on plotting functions and customization options

Integration: Reference for creating and customizing plots in the script

- Fibonacci Sequence Article

Purpose: Offers insights into the mathematical properties and applications of the Fibonacci sequence

Integration: Use as a reference for understanding the sequence's generation and its relation to the spiral

- Python Programming Guide

Purpose: Serves as a comprehensive resource for Python syntax and libraries

Integration: Reference for general Python programming practices and functions used in the script

##THOUGHT PROCESS##

*Subtask 1*:

- **Description**: Create a function to compute Fibonacci numbers up to a specified term.

- **Reasoning**: This function is essential for generating the sequence of Fibonacci numbers, which will be used to determine the points for the spiral.

- **Success criteria**: The function should return a list of Fibonacci numbers up to the specified term, with correct values (e.g., for n=5, it should return [0, 1, 1, 2, 3]).

*Subtask 2*:

- **Description**: Implement a function to plot the Fibonacci spiral using the computed points.

- **Reasoning**: Plotting the spiral visually represents the growth of the Fibonacci sequence, making it easier to understand the relationship between the numbers and the spiral shape.

- **Success criteria**: The function should create a plot that accurately represents the Fibonacci spiral, with appropriate axes and labels, and should display the plot correctly.

*Subtask 3*:

- **Description**: Include comments explaining each step of the code.

- **Reasoning**: Comments enhance code readability and maintainability, allowing others (or the future self) to understand the logic and flow of the script.

- **Success criteria**: The code should have clear, concise comments that explain the purpose of each function and key steps within the functions.

*Subtask 4*:

- **Description**: Ensure the script is modular, allowing for easy adjustments to the number of Fibonacci terms used in the spiral.

- **Reasoning**: Modularity allows users to easily modify the number of terms without altering the core logic of the script, enhancing usability and flexibility.

- **Success criteria**: The script should allow the user to specify the number of Fibonacci terms as an input parameter, and the plot should update accordingly.

*Subtask 5*:

- **Description**: Provide example usage demonstrating the script in action, including a plot display.

- **Reasoning**: Example usage helps users understand how to implement and run the script, showcasing its functionality and output.

- **Success criteria**: The example should include a clear demonstration of how to call the functions, specify the number of terms, and display the resulting plot, with expected output shown.
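To make the success criteria in Subtasks 1 and 2 concrete, here is one way a script answering the enhanced prompt could start. It is an illustrative sketch, not output produced by the tool: `fibonacci` and `spiral_points` are hypothetical names, the spiral is approximated with quarter-circle arcs whose radii are the non-zero Fibonacci numbers, and plotting is left out so the example needs only the standard library.

```python
import math
from typing import List, Tuple


def fibonacci(n: int) -> List[int]:
    """Return the first n Fibonacci numbers, starting from 0."""
    if n < 0:
        raise ValueError("n must be non-negative")
    seq: List[int] = []
    a, b = 0, 1
    for _ in range(n):
        seq.append(a)
        a, b = b, a + b
    return seq


def spiral_points(n_terms: int, steps_per_arc: int = 16) -> List[Tuple[float, float]]:
    """Chain quarter-circle arcs with Fibonacci radii into one continuous curve."""
    radii = [f for f in fibonacci(n_terms) if f > 0]
    cx, cy = 0.0, 0.0
    points: List[Tuple[float, float]] = []
    for k, r in enumerate(radii):
        start = k * math.pi / 2  # each arc begins where the previous one ended
        if k > 0:
            # Slide the centre along the joint direction so the arcs meet exactly.
            prev = radii[k - 1]
            cx += (prev - r) * math.cos(start)
            cy += (prev - r) * math.sin(start)
        for i in range(steps_per_arc + 1):
            angle = start + (math.pi / 2) * i / steps_per_arc
            points.append((cx + r * math.cos(angle), cy + r * math.sin(angle)))
    return points


print(fibonacci(5))  # [0, 1, 1, 2, 3], matching the Subtask 1 criterion
print(len(spiral_points(5, steps_per_arc=4)))
```

The returned coordinates can be unzipped into x and y lists and passed to Matplotlib's `plot` function to display the curve.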

- Clone the repository:

git clone https://github.com/Thunderhead-exe/Advanced-Prompt-Generator.git

- Install the dependencies:

pip install -r requirements.txt

- Run the app:

python3 Advancd_Prompt_Generator.py
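Because the tool calls OpenAI models, it needs an API key at run time. This README does not say how the key is supplied, so the check below is only a sketch: it assumes the key is read from the `OPENAI_API_KEY` environment variable, which is the default used by the OpenAI Python SDK, and `missing_settings` is a hypothetical helper rather than part of the project.

```python
import os
from typing import List, Mapping, Sequence

# Assumption: the app reads its key from the OpenAI SDK's default variable.
REQUIRED_VARS: Sequence[str] = ("OPENAI_API_KEY",)


def missing_settings(env: Mapping[str, str],
                     required: Sequence[str] = REQUIRED_VARS) -> List[str]:
    """Return the names of required variables that are unset or blank."""
    return [name for name in required if not env.get(name, "").strip()]


missing = missing_settings(os.environ)
if missing:
    print("Set these variables before running the app:", ", ".join(missing))
else:
    print("Environment looks ready.")
```

Running this check before `python3 Advancd_Prompt_Generator.py` reports a missing key up front instead of failing on the first API request.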

This project is licensed under the terms of the Apache-2.0 License.

Contributions are welcome! Please feel free to submit a Pull Request or open an Issue for any bug reports, feature requests, or general feedback.

Alternative AI tools for Advanced-Prompt-Generator

Similar Open Source Tools

ROSGPT_Vision

ROSGPT_Vision is a new robotic framework designed to command robots using only two prompts: a Visual Prompt for visual semantic features and an LLM Prompt to regulate robotic reactions. It is based on the Prompting Robotic Modalities (PRM) design pattern and is used to develop CarMate, a robotic application for monitoring driver distractions and providing real-time vocal notifications. The framework leverages state-of-the-art language models to facilitate advanced reasoning about image data and offers a unified platform for robots to perceive, interpret, and interact with visual data through natural language. LangChain is used for easy customization of prompts, and the implementation includes the CarMate application for driver monitoring and assistance.

Controllable-RAG-Agent

This repository contains a sophisticated deterministic graph-based solution for answering complex questions using a controllable autonomous agent. The solution is designed to ensure that answers are solely based on the provided data, avoiding hallucinations. It involves various steps such as PDF loading, text preprocessing, summarization, database creation, encoding, and utilizing large language models. The algorithm follows a detailed workflow involving planning, retrieval, answering, replanning, content distillation, and performance evaluation. Heuristics and techniques implemented focus on content encoding, anonymizing questions, task breakdown, content distillation, chain of thought answering, verification, and model performance evaluation.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAI API models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

ai-workshop

The AI Workshop repository provides a comprehensive guide to utilizing OpenAI's APIs, including Chat Completion, Embedding, and Assistant APIs. It offers hands-on demonstrations and code examples to help users understand the capabilities of these APIs. The workshop covers topics such as creating interactive chatbots, performing semantic search using text embeddings, and building custom assistants with specific data and context. Users can enhance their understanding of AI applications in education, research, and other domains through practical examples and usage notes.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑‍🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

## 📝 Notebooks

A list of notebooks and articles related to large language models.

### Tools

| Notebook | Description |
|----------|-------------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod. |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |

persian-license-plate-recognition

The Persian License Plate Recognition (PLPR) system is a state-of-the-art solution designed for detecting and recognizing Persian license plates in images and video streams. Leveraging advanced deep learning models and a user-friendly interface, it ensures reliable performance across different scenarios. The system offers advanced detection using YOLOv5 models, precise recognition of Persian characters, real-time processing capabilities, and a user-friendly GUI. It is well-suited for applications in traffic monitoring, automated vehicle identification, and similar fields. The system's architecture includes modules for resident management, entrance management, and a detailed flowchart explaining the process from system initialization to displaying results in the GUI. Hardware requirements include an Intel Core i5 processor, 8 GB RAM, a dedicated GPU with at least 4 GB VRAM, and an SSD with 20 GB of free space. The system can be installed by cloning the repository and installing required Python packages. Users can customize the video source for processing and run the application to upload and process images or video streams. The system's GUI allows for parameter adjustments to optimize performance, and the Wiki provides in-depth information on the system's architecture and model training.

spacy-llm

This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for **fast prototyping** and **prompting**, and turning unstructured responses into **robust outputs** for various NLP tasks, with **no training data** required. It supports open-source LLMs hosted on Hugging Face 🤗: Falcon, Dolly, Llama 2, OpenLLaMA, StableLM, Mistral. It integrates with LangChain 🦜️🔗, so all `langchain` models and features can be used in `spacy-llm`. Tasks available out of the box: named entity recognition, text classification, lemmatization, relationship extraction, sentiment analysis, span categorization, summarization, entity linking, translation, and raw prompt execution for maximum flexibility. Coming soon: semantic role labeling. **Your own functions** for custom prompting, parsing, and model integrations can be added via spaCy's registry. A map-reduce approach splits prompts that are too long for the LLM's context window and fuses the results back together.

intro-llm-rag

This repository serves as a comprehensive guide for technical teams interested in developing conversational AI solutions using Retrieval-Augmented Generation (RAG) techniques. It covers theoretical knowledge and practical code implementations, making it suitable for individuals with a basic technical background. The content includes information on large language models (LLMs), transformers, prompt engineering, embeddings, vector stores, and various other key concepts related to conversational AI. The repository also provides hands-on examples for two different use cases, along with implementation details and performance analysis.

awesome-gpt-prompt-engineering

Awesome GPT Prompt Engineering is a curated list of resources, tools, and shiny things for GPT prompt engineering. It includes roadmaps, guides, techniques, prompt collections, papers, books, communities, prompt generators, Auto-GPT related tools, prompt injection information, ChatGPT plug-ins, prompt engineering job offers, and AI links directories. The repository aims to provide a comprehensive guide for prompt engineering enthusiasts, covering various aspects of working with GPT models and improving communication with AI tools.

For similar tasks

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free is a service that supports high-speed streaming output, Metaso AI web search (whole-web or academic scope, with concise, in-depth, and research modes), zero-configuration deployment, and multiple tokens. It is fully compatible with the ChatGPT interface, and seven other free APIs are also available. The tool provides various deployment options such as Docker, Docker Compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token liveness checks. Note: the reverse-engineered API is unstable, so using the official Metaso AI website is recommended to avoid the risk of being banned. This project is for research and learning purposes only, not for commercial use.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

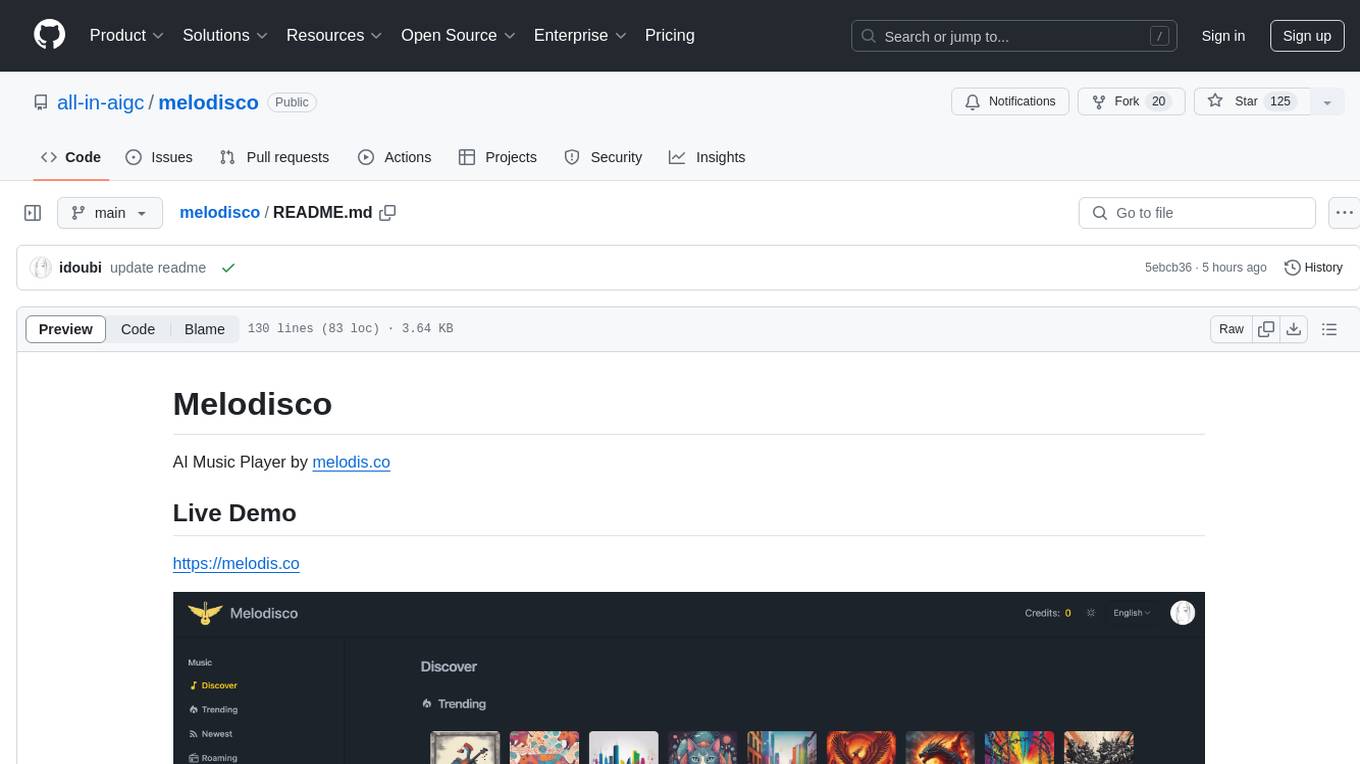

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system built on large language models (LLMs). It uses Retrieval-Augmented Generation (RAG) to improve knowledge generation and help users build knowledge bases quickly. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md documents and generate high-quality question-answer pairs for the knowledge base by invoking large models through the 'Parse Document' feature.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI-compatible LLM service, combining GRPS and TensorRT-LLM. It supports chat, AI agent, and multi-modal functionality. The repository lists several advantages over triton-trtllm: a complete LLM service implemented in pure C++, an integrated tokenizer supporting Hugging Face and SentencePiece, custom HTTP functionality for the OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with the TensorRT backend and the OpenCV library for multi-modal LLMs, and a stable performance improvement compared to triton-trtllm.

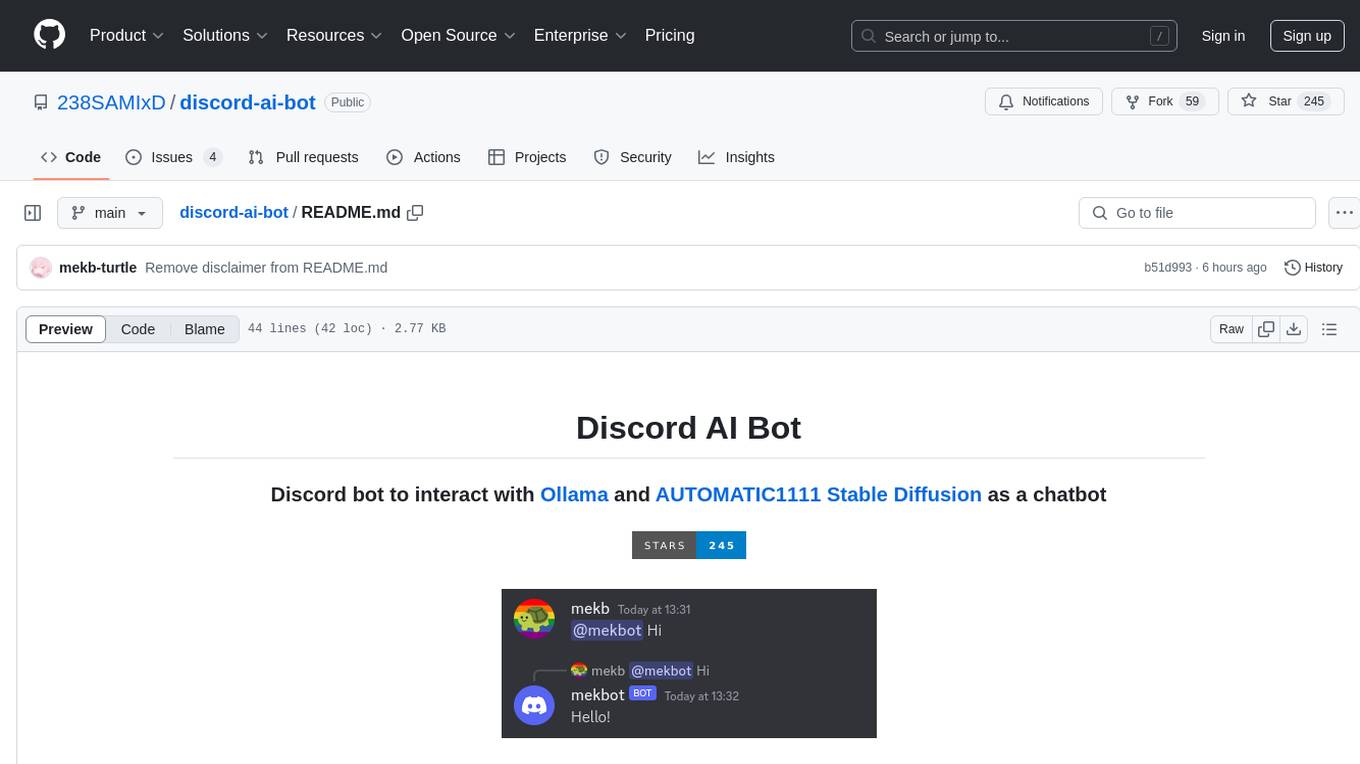

discord-ai-bot

Discord AI Bot is a chatbot tool designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The bot allows users to set up and configure a Discord bot to communicate with the mentioned AI models. Users can follow step-by-step instructions to install Node.js, Ollama, and the required dependencies, create a Discord bot, and interact with the bot by mentioning it in messages. Additionally, the tool provides set-up instructions for Docker users to easily deploy the bot using Docker containers. Overall, Discord AI Bot simplifies the process of integrating AI chatbots into Discord servers for interactive communication.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.