wiseflow

Continuously extract the information you need from specified sources

Stars: 8071

Wiseflow is an agile information mining tool that utilizes the thinking and analysis capabilities of large models to accurately extract specific information from various given sources, without the need for manual intervention. The tool focuses on filtering noise from a vast amount of information to reveal valuable insights. It is recommended to use normal language models for information extraction tasks to optimize speed and cost, rather than complex reasoning models. The tool is designed for continuous information gathering based on specified focus points from various sources.

README:

English | 日本語 | 한국어 | Deutsch | Français | العربية

🚀 STEP INTO 5.x

📌 寻找 4.x 版本? 原版 v4.30 及之前版本的代码在

4.x分支中。

“吾生也有涯,而知也无涯。以有涯随无涯,殆已!“ —— 《庄子·内篇·养生主第三》

wiseflow 4.x(包括之前的版本) 通过一系列精密的 workflow 实现了在特定场景下的强大的获取能力,但依然存在诸多局限性:

-

- 无法获取交互式内容(需要经过点选才能出现的内容,尤其是动态加载的情况)

-

- 只能进行信息过滤与提取,几乎没有任何下游任务能力

- ……

虽然我们一直致力于完善它的功能、扩增它的边界,但真实世界是复杂的,真实的互联网也一样,规则永无可能穷尽,因此固定的 workflow 永远做不到适配所有场景,这不是 wiseflow 的问题,这是传统软件的问题!

然而过去一年 Agent 的突飞猛进,让我们看到了由大模型驱动完全模拟人类互联网行为在技术上的可能,openclaw 的出现更让我们坚定了此信念。

更奇妙的是,通过前期的实验和探索,我们发现将 wiseflow 的获取能力以”插件“形式融入 openclaw,即可以完美解决上面提到的两个局限性。我们接下来会陆续放出激动人心的真实 demo 视频,同时开源发布这些”插件“。

不过需要说明的是,openclaw 的 plugin 系统与传统上我们理解的“插件”(类似 claude code 的 plugin)并不相同,因此我们不得不额外提出了“add-on"的概念,所以确切的说,wiseflow5.x 将以 openclaw add-on 的形态出现。原版的 openclaw 并不具有”add-on“架构,不过实际上,你只需要几条简单的 shell 命令即可完成这个”改造“。我们也准备了开箱即用、同时包含一系列针对真实商用场景预设配置的 openclaw 强化版本,即 openclaw_for_business, 你可以直接 clone ,并将 wiseflow release 解压缩放置于 openclaw_for_business 的 add-on 文件夹内即可。

将本目录复制到 openclaw_for_business 的 addons/ 目录:

# 方式一:从 wiseflow 仓库复制

git clone https://github.com/TeamWiseFlow/wiseflow.git /tmp/wiseflow

cp -r /tmp/wiseflow/addon <openclaw_for_business>/addons/wiseflow

# 方式二:如果已有 wiseflow 仓库

从 https://github.com/TeamWiseFlow/wiseflow/releases 下载最新的发布

解压缩后放入 <openclaw_for_business>/addons安装后重启 openclaw 即可生效。

addon/

├── addon.json # 元数据

├── overrides.sh # pnpm overrides: playwright-core → patchright-core

├── patches/

│ └── 001-browser-tab-recovery.patch # 标签页恢复补丁

├── skills/

│ └── browser-guide/SKILL.md # 浏览器使用最佳实践

├── docs/ # 技术文档

│ ├── anti-detection-research.md

│ └── openclaw-extension-architecture.md

└── tests/ # 测试用例和脚本

├── README.md

└── run-managed-tests.mjs

更强的抓取能力、更全面的社交媒体支持、含 UI 界面和免部署一键安装包!

https://github.com/user-attachments/assets/57f8569c-e20a-4564-a669-1200d56c5725

🔥 Pro 版本现已面向全网发售:https://shouxiqingbaoguan.com/

🌹 即日起为 wiseflow 开源版本贡献 PR(代码、文档、成功案例分享均欢迎),一经采纳,贡献者将获赠 wiseflow pro版本一年使用权!

📥 🎉 📚

自4.2版本起,我们更新了开源许可协议,敬请查阅: LICENSE

商用合作,请联系 Email:[email protected]

有任何问题或建议,欢迎通过 issue 留言。

有关 pro 版本的需求或合作反馈,欢迎联系 AI首席情报官“掌柜”企业微信:

- Patchright(Undetected Python version of the Playwright testing and automation library) https://github.com/Kaliiiiiiiiii-Vinyzu/patchright-python

- Feedparser(Parse feeds in Python) https://github.com/kurtmckee/feedparser

- SearXNG(a free internet metasearch engine which aggregates results from various search services and databases) https://github.com/searxng/searxng

如果您在相关工作中参考或引用了本项目的部分或全部,请注明如下信息:

Author:Wiseflow Team

https://github.com/TeamWiseFlow/wiseflow

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for wiseflow

Similar Open Source Tools

wiseflow

Wiseflow is an agile information mining tool that utilizes the thinking and analysis capabilities of large models to accurately extract specific information from various given sources, without the need for manual intervention. The tool focuses on filtering noise from a vast amount of information to reveal valuable insights. It is recommended to use normal language models for information extraction tasks to optimize speed and cost, rather than complex reasoning models. The tool is designed for continuous information gathering based on specified focus points from various sources.

hia

HIA (Health Insights Agent) is an AI agent designed to analyze blood reports and provide personalized health insights. It features an intelligent agent-based architecture with multi-model cascade system, in-context learning, PDF upload and text extraction, secure user authentication, session history tracking, and a modern UI. The tech stack includes Streamlit for frontend, Groq for AI integration, Supabase for database, PDFPlumber for PDF processing, and Supabase Auth for authentication. The project structure includes components for authentication, UI, configuration, services, agents, and utilities. Contributions are welcome, and the project is licensed under MIT.

claude-scholar

Claude Scholar is a personal configuration system for Claude Code CLI, designed for academic research and software development. It covers the full research lifecycle from ideation to publication, offering rich skills, commands, agents, and hooks optimized for various tasks. The tool supports features like research ideation, ML project development, experiment analysis, paper writing, self-review, submission and rebuttal, post-acceptance processing, and more. It also includes supporting workflows for automated enforcement, knowledge extraction, skill evolution, and offers a structured file structure for easy navigation and usage.

mxcp

MXCP is an enterprise-grade MCP framework for building production-ready AI applications. It provides a structured methodology for data modeling, service design, smart implementation, quality assurance, and production operations. With built-in enterprise features like security, audit trail, type safety, testing framework, performance optimization, and drift detection, MXCP ensures comprehensive security, quality, and operations. The tool supports SQL for data queries and Python for complex logic, ML models, and integrations, allowing users to choose the right tool for each job while maintaining security and governance. MXCP's architecture includes LLM client, MXCP framework, implementations, security & policies, SQL endpoints, Python tools, type system, audit engine, validation & tests, data sources, and APIs. The tool enforces an organized project structure and offers CLI commands for initialization, quality assurance, data management, operations & monitoring, and LLM integration. MXCP is compatible with Claude Desktop, OpenAI-compatible tools, and custom integrations through the Model Context Protocol (MCP) specification. The tool is developed by RAW Labs for production data-to-AI workflows and is released under the Business Source License 1.1 (BSL), with commercial licensing required for certain production scenarios.

gigachad-grc

A comprehensive, modular, containerized Governance, Risk, and Compliance (GRC) platform built with modern technologies. Manage your entire security program from compliance tracking to risk management, third-party assessments, and external audits. The platform includes specialized modules for Compliance, Data Management, Risk Management, Third-Party Risk Management, Trust, Audit, Tools, AI & Automation, and Administration. It offers features like controls management, frameworks assessment, policies lifecycle management, vendor risk management, security questionnaires, knowledge base, audit management, awareness training, phishing simulations, AI-powered risk scoring, and MCP server integration. The tech stack includes Node.js, TypeScript, React, PostgreSQL, Keycloak, Traefik, Redis, and RustFS for storage.

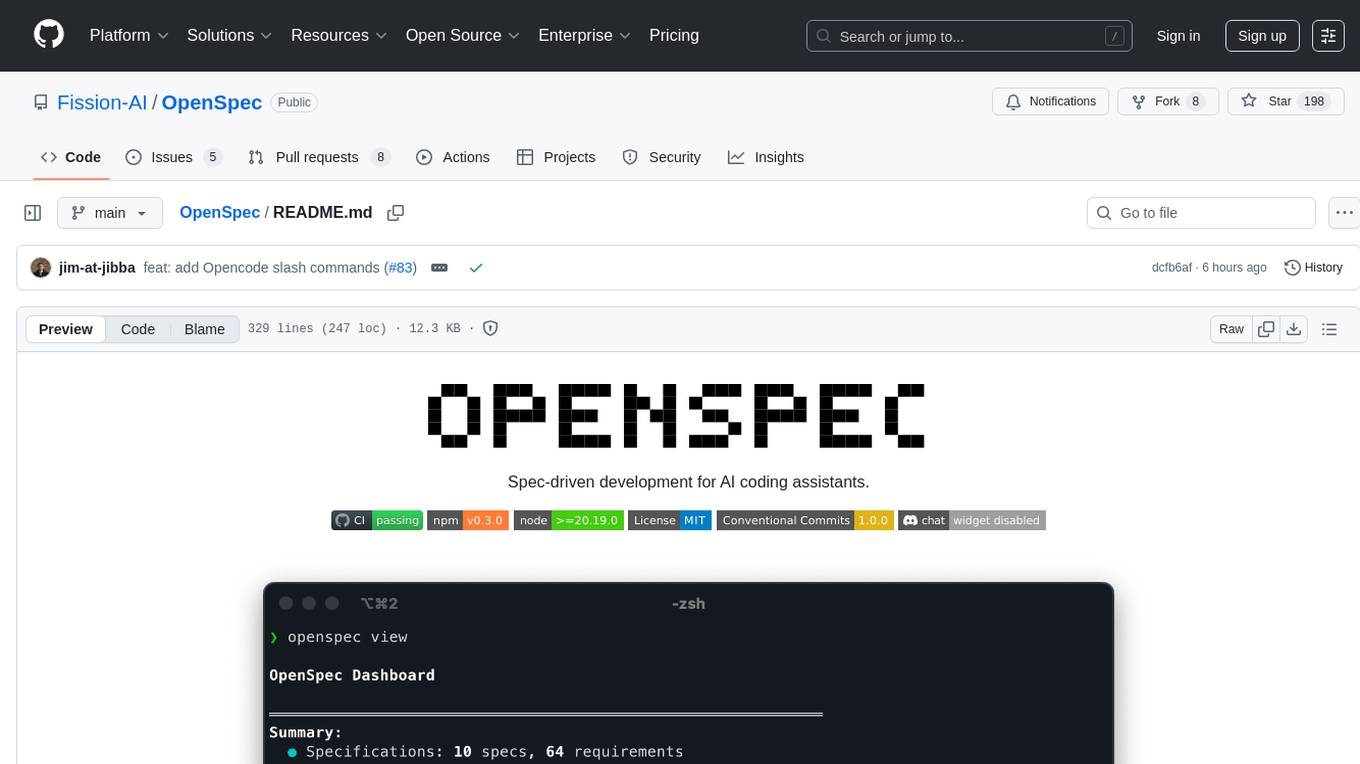

OpenSpec

OpenSpec is a tool for spec-driven development, aligning humans and AI coding assistants to agree on what to build before any code is written. It adds a lightweight specification workflow that ensures deterministic, reviewable outputs without the need for API keys. With OpenSpec, stakeholders can draft change proposals, review and align with AI assistants, implement tasks based on agreed specs, and archive completed changes for merging back into the source-of-truth specs. It works seamlessly with existing AI tools, offering shared visibility into proposed, active, or archived work.

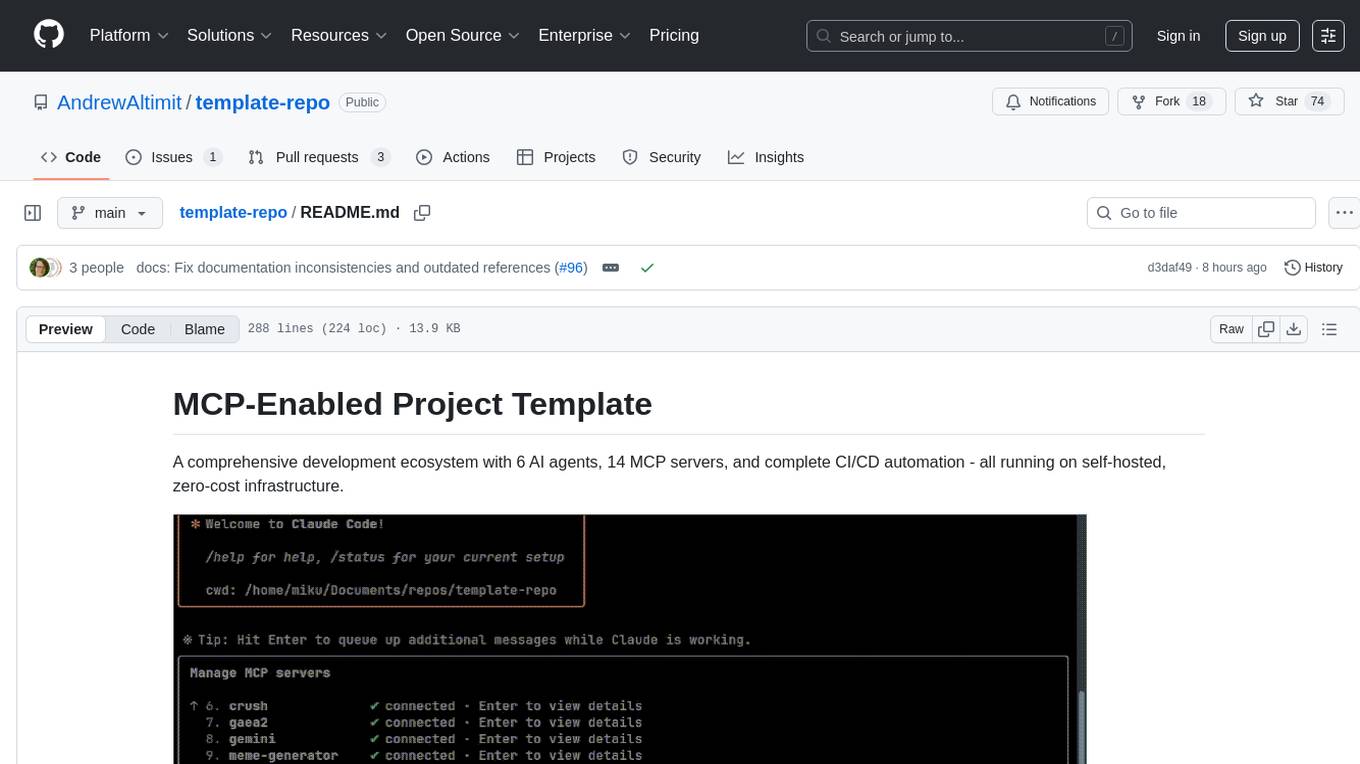

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

tandem

Tandem is a local-first, privacy-focused AI workspace that runs entirely on your machine. It is inspired by early AI coworking research previews, open source, and provider-agnostic. Tandem offers privacy-first operation, provider agnosticism, zero trust model, true cross-platform support, open-source licensing, modern stack, and developer superpowers for everyone. It provides folder-wide intelligence, multi-step automation, visual change review, complete undo, zero telemetry, provider freedom, secure design, cross-platform support, visual permissions, full undo, long-term memory, skills system, document text extraction, workspace Python venv, rich themes, execution planning, auto-updates, multiple specialized agent modes, multi-agent orchestration, project management, and various artifacts and outputs.

kiss_ai

KISS AI is a lightweight and powerful multi-agent evolutionary framework that simplifies building AI agents. It uses native function calling for efficiency and accuracy, making building AI agents as straightforward as possible. The framework includes features like multi-agent orchestration, agent evolution and optimization, relentless coding agent for long-running tasks, output formatting, trajectory saving and visualization, GEPA for prompt optimization, KISSEvolve for algorithm discovery, self-evolving multi-agent, Docker integration, multiprocessing support, and support for various models from OpenAI, Anthropic, Gemini, Together AI, and OpenRouter.

OpenOutreach

OpenOutreach is a self-hosted, open-source LinkedIn automation tool designed for B2B lead generation. It automates the entire outreach process in a stealthy, human-like way by discovering and enriching target profiles, ranking profiles using ML for smart prioritization, sending personalized connection requests, following up with custom messages after acceptance, and tracking everything in a built-in CRM with web UI. It offers features like undetectable behavior, fully customizable Python-based campaigns, local execution with CRM, easy deployment with Docker, and AI-ready templating for hyper-personalized messages.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

Callytics

Callytics is an advanced call analytics solution that leverages speech recognition and large language models (LLMs) technologies to analyze phone conversations from customer service and call centers. By processing both the audio and text of each call, it provides insights such as sentiment analysis, topic detection, conflict detection, profanity word detection, and summary. These cutting-edge techniques help businesses optimize customer interactions, identify areas for improvement, and enhance overall service quality. When an audio file is placed in the .data/input directory, the entire pipeline automatically starts running, and the resulting data is inserted into the database. This is only a v1.1.0 version; many new features will be added, models will be fine-tuned or trained from scratch, and various optimization efforts will be applied.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

os-moda

osModa is a NixOS distribution with 9 Rust daemons and 72 typed tools, providing structured access to the entire OS without shell parsing. Every mutation is hash-chained, enabling atomic system state rollbacks. The agent runs at ring 0 with root access, ensuring tamper-proof audit logging. Third-party tools are sandboxed, while the agent is not. It offers structured system access, hash-chained audit ledger, FTS5 full-text memory search, ETH + SOL crypto signing, SafeSwitch deploys with auto-rollback, P2P encrypted mesh with hybrid post-quantum crypto, local voice, MCP server management, system learning and self-optimization, service discovery, emergency safety commands, Cloudflare Tunnel + Tailscale remote access, app process management with systemd-run, and 72 bridge tools.

For similar tasks

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

Transformers_And_LLM_Are_What_You_Dont_Need

Transformers_And_LLM_Are_What_You_Dont_Need is a repository that explores the limitations of transformers in time series forecasting. It contains a collection of papers, articles, and theses discussing the effectiveness of transformers and LLMs in this domain. The repository aims to provide insights into why transformers may not be the best choice for time series forecasting tasks.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package for time series forecasting with state-of-the-art network architectures. It offers a high-level API for training networks on pandas data frames and utilizes PyTorch Lightning for scalable training on GPUs and CPUs. The package aims to simplify time series forecasting with neural networks by providing a flexible API for professionals and default settings for beginners. It includes a timeseries dataset class, base model class, multiple neural network architectures, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. PyTorch Forecasting is built on pytorch-lightning for easy training on various hardware configurations.

spider

Spider is a high-performance web crawler and indexer designed to handle data curation workloads efficiently. It offers features such as concurrency, streaming, decentralization, headless Chrome rendering, HTTP proxies, cron jobs, subscriptions, smart mode, blacklisting, whitelisting, budgeting depth, dynamic AI prompt scripting, CSS scraping, and more. Users can easily get started with the Spider Cloud hosted service or set up local installations with spider-cli. The tool supports integration with Node.js and Python for additional flexibility. With a focus on speed and scalability, Spider is ideal for extracting and organizing data from the web.

AI_for_Science_paper_collection

AI for Science paper collection is an initiative by AI for Science Community to collect and categorize papers in AI for Science areas by subjects, years, venues, and keywords. The repository contains `.csv` files with paper lists labeled by keys such as `Title`, `Conference`, `Type`, `Application`, `MLTech`, `OpenReviewLink`. It covers top conferences like ICML, NeurIPS, and ICLR. Volunteers can contribute by updating existing `.csv` files or adding new ones for uncovered conferences/years. The initiative aims to track the increasing trend of AI for Science papers and analyze trends in different applications.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package designed for state-of-the-art timeseries forecasting using deep learning architectures. It offers a high-level API and leverages PyTorch Lightning for efficient training on GPU or CPU with automatic logging. The package aims to simplify timeseries forecasting tasks by providing a flexible API for professionals and user-friendly defaults for beginners. It includes features such as a timeseries dataset class for handling data transformations, missing values, and subsampling, various neural network architectures optimized for real-world deployment, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. Built on pytorch-lightning, it supports training on CPUs, single GPUs, and multiple GPUs out-of-the-box.

For similar jobs

wiseflow

Wiseflow is an agile information mining tool that utilizes the thinking and analysis capabilities of large models to accurately extract specific information from various given sources, without the need for manual intervention. The tool focuses on filtering noise from a vast amount of information to reveal valuable insights. It is recommended to use normal language models for information extraction tasks to optimize speed and cost, rather than complex reasoning models. The tool is designed for continuous information gathering based on specified focus points from various sources.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.