Kuzco

Kuzco reviews your Terraform and OpenTofu resources, compares them to the provider schema to detect unused parameters, and uses AI to suggest improvements and fixes

Stars: 115

Enhance your Terraform and OpenTofu configurations with intelligent analysis powered by local LLMs. Kuzco reviews your resources, compares them to the provider schema, detects unused parameters, and suggests improvements for a more secure, reliable, and optimized setup. It saves time by avoiding the need to dig through the Terraform registry and decipher unclear options.

README:

Enhance your Terraform and OpenTofu configurations with intelligent analysis powered by local LLMs

Here's the problem: You spin up a Terraform or OpenTofu resource, pull a basic configuration from the registry, and start wondering what other parameters should be enabled to make it more secure and efficient. Sure, you could use tools like TLint or TFSec, but kuzco saves you time by avoiding the need to dig through the Terraform registry and decipher unclear options. It leverages local LLMs to recommend what should be enabled and configured. Simply put, kuzco reviews your Terraform and OpenTofu resources, compares them to the provider schema to detect unused parameters, and uses AI to suggest improvements for a more secure, reliable, and optimized setup.

[!NOTE] To use

kuzco, Ollama must be installed. You can do this by runningbrew bundle installorbrew install ollama. For more information on customizing Ollama models for tailored Kuzco responses, check out Customizing Ollama

brew install kuzcoIf you have a functional Go environment, you can install with:

go install github.com/RoseSecurity/kuzco@latestTo install packages, you can quickly setup the repository automatically:

curl -1sLf \

'https://dl.cloudsmith.io/public/rosesecurity/kuzco/setup.deb.sh' \

| sudo -E bashOnce the repository is configured, you can install with:

apt install kuzco=<VERSION>git clone [email protected]:RoseSecurity/Kuzco.git

cd Kuzco

make buildThe following configuration options are available:

❯ kuzco

██ ██ ██ ██ ███████ ██████ ██████

██ ██ ██ ██ ███ ██ ██ ██

█████ ██ ██ ███ ██ ██ ██

██ ██ ██ ██ ███ ██ ██ ██

██ ██ ██████ ███████ ██████ ██████

Intelligently analyze your Terraform and OpenTofu configurations to receive personalized recommendations and fixes for boosting efficiency, security, and performance.

Usage:

kuzco [flags]

kuzco [command]

Available Commands:

completion Generate the autocompletion script for the specified shell

fix Diagnose configuration errors

help Help about any command

list Lists available Ollama models

recommend Intelligently analyze your Terraform and OpenTofu configurations

version Print the CLI version

Flags:

-h, --help help for kuzco

Use "kuzco [command] --help" for more information about a command.For bug reports & feature requests, please use the issue tracker.

PRs are welcome! We follow the typical "fork-and-pull" Git workflow.

- Fork the repo on GitHub

- Clone the project to your own machine

- Commit changes to your own branch

- Push your work back up to your fork

- Submit a Pull Request so that we can review your changes

[!TIP] Be sure to merge the latest changes from "upstream" before making a pull request!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Kuzco

Similar Open Source Tools

Kuzco

Enhance your Terraform and OpenTofu configurations with intelligent analysis powered by local LLMs. Kuzco reviews your resources, compares them to the provider schema, detects unused parameters, and suggests improvements for a more secure, reliable, and optimized setup. It saves time by avoiding the need to dig through the Terraform registry and decipher unclear options.

llm-swarm

llm-swarm is a tool designed to manage scalable open LLM inference endpoints in Slurm clusters. It allows users to generate synthetic datasets for pretraining or fine-tuning using local LLMs or Inference Endpoints on the Hugging Face Hub. The tool integrates with huggingface/text-generation-inference and vLLM to generate text at scale. It manages inference endpoint lifetime by automatically spinning up instances via `sbatch`, checking if they are created or connected, performing the generation job, and auto-terminating the inference endpoints to prevent idling. Additionally, it provides load balancing between multiple endpoints using a simple nginx docker for scalability. Users can create slurm files based on default configurations and inspect logs for further analysis. For users without a Slurm cluster, hosted inference endpoints are available for testing with usage limits based on registration status.

ENOVA

ENOVA is an open-source service for Large Language Model (LLM) deployment, monitoring, injection, and auto-scaling. It addresses challenges in deploying stable serverless LLM services on GPU clusters with auto-scaling by deconstructing the LLM service execution process and providing configuration recommendations and performance detection. Users can build and deploy LLM with few command lines, recommend optimal computing resources, experience LLM performance, observe operating status, achieve load balancing, and more. ENOVA ensures stable operation, cost-effectiveness, efficiency, and strong scalability of LLM services.

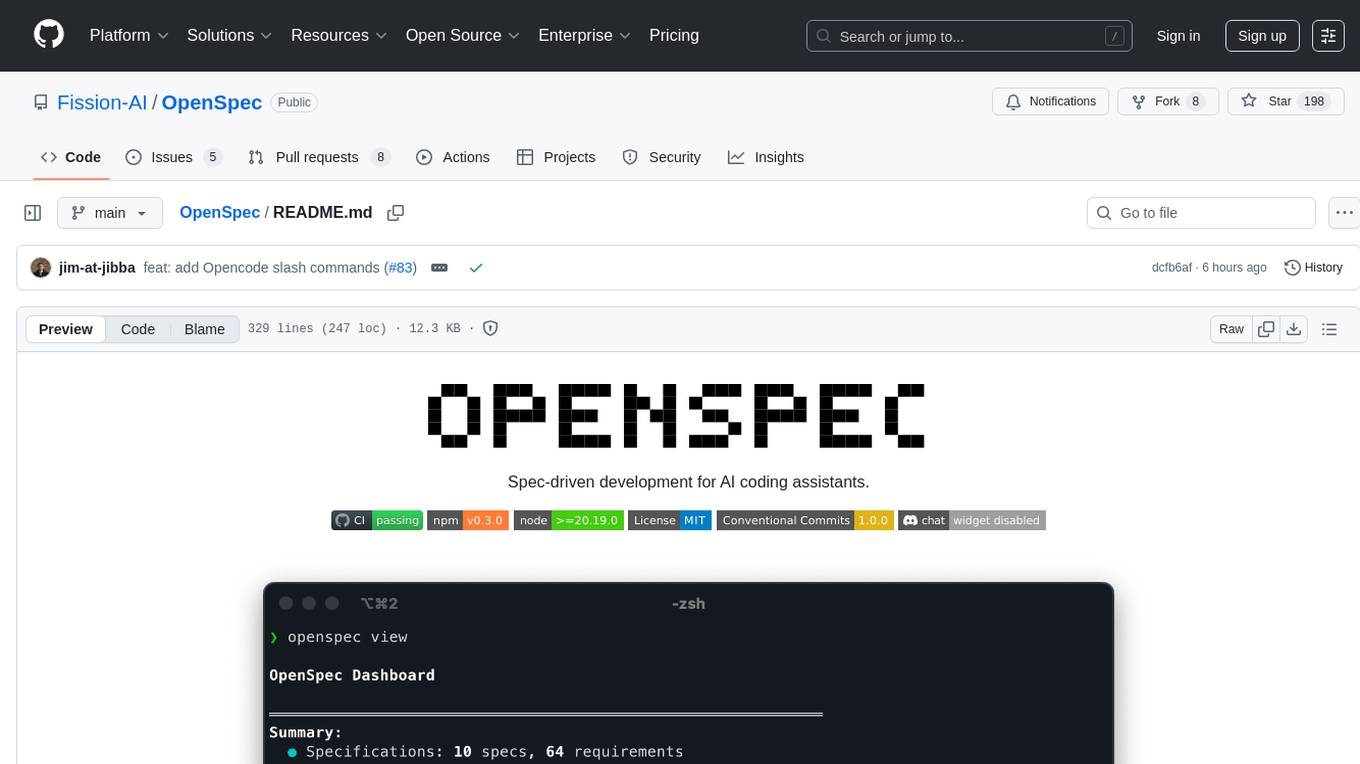

OpenSpec

OpenSpec is a tool for spec-driven development, aligning humans and AI coding assistants to agree on what to build before any code is written. It adds a lightweight specification workflow that ensures deterministic, reviewable outputs without the need for API keys. With OpenSpec, stakeholders can draft change proposals, review and align with AI assistants, implement tasks based on agreed specs, and archive completed changes for merging back into the source-of-truth specs. It works seamlessly with existing AI tools, offering shared visibility into proposed, active, or archived work.

tangent

Tangent is a canvas for exploring AI conversations, allowing users to resurrect and continue conversations, branch and explore different ideas, organize conversations by topics, and support archive data exports. It aims to provide a visual/textual/audio exploration experience with AI assistants, offering a 'thoughts workbench' for experimenting freely, reviving old threads, and diving into tangents. The project structure includes a modular backend with components for API routes, background task management, data processing, and more. Prerequisites for setup include Whisper.cpp, Ollama, and exported archive data from Claude or ChatGPT. Users can initialize the environment, install Python packages, set up Ollama, configure local models, and start the backend and frontend to interact with the tool.

mmwave-gesture-recognition

This repository provides a setup for basic gesture recognition using the TI AWR1642 mmWave sensor. Users can collect data from the sensor and choose from various neural network architectures for gesture recognition. The supported gestures include Swipe Up, Swipe Down, Swipe Right, Swipe Left, Spin Clockwise, Spin Counterclockwise, Letter Z, Letter S, and Letter X. The repository includes data and models for training and inference, along with instructions for installation, serial permissions setup, flashing firmware, running the system, collecting data, training models, selecting different models, and accessing help documentation. The project is developed using Python and TensorFlow 2.15.

nori-skillsets

The Nori Skillsets Client is a CLI tool for installing and managing Skillsets from noriskillsets.dev. Skillsets are unified configurations defining agent behaviors, including step-by-step instructions, custom workflows, specialized agents, and quick actions. Users can install, switch, and manage Skillsets, maintaining multiple configurations without losing any settings. The tool requires Node.js, Claude Code CLI, and runs on Mac or Linux. Teams can create custom Skillsets, set up private registries, and benefit from access control, package sharing, and optional Skills Review service.

LlamaBot

LlamaBot is an open-source AI coding agent that rapidly builds MVPs, prototypes, and internal tools. It works for non-technical founders, product teams, and engineers by generating working prototypes, embedding AI directly into the app, and running real workflows. Unlike typical codegen tools, LlamaBot can embed directly in your app and run real workflows, making it ideal for collaborative software building where founders guide the vision, engineers stay in control, and AI fills the gap. LlamaBot is built for moving ideas fast, allowing users to prototype an AI MVP in a weekend, experiment with workflows, and collaborate with teammates to bridge the gap between non-technical founders and engineering teams.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

browser-tools-mcp

BrowserTools MCP is a powerful browser monitoring and interaction tool that enables AI-powered applications to capture and analyze browser data through a Chrome extension. It consists of a Chrome Extension for capturing screenshots, console logs, network activity, and DOM elements, a Node Server for communication between the extension and an MCP server, and an MCP Server that provides standardized tools for AI clients to interact with the browser. All logs are stored locally on the user's machine. The tool is compatible with various MCP clients like Cursor, Cline, and Zed, allowing users to monitor console output, capture network traffic, take screenshots, analyze elements, and wipe logs stored in the MCP server.

Local-File-Organizer

The Local File Organizer is an AI-powered tool designed to help users organize their digital files efficiently and securely on their local device. By leveraging advanced AI models for text and visual content analysis, the tool automatically scans and categorizes files, generates relevant descriptions and filenames, and organizes them into a new directory structure. All AI processing occurs locally using the Nexa SDK, ensuring privacy and security. With support for multiple file types and customizable prompts, this tool aims to simplify file management and bring order to users' digital lives.

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

FinAnGPT-Pro

FinAnGPT-Pro is a financial data downloader and AI query system that downloads quarterly and annual financial data for stocks from EOD Historical Data, storing it in MongoDB and Google BigQuery. It includes an AI-powered natural language interface for querying financial data. Users can set up the tool by following the prerequisites and setup instructions provided in the README. The tool allows users to download financial data for all stocks in a watchlist or for a single stock, query financial data using a natural language interface, and receive responses in a structured format. Important considerations include error handling, rate limiting, data validation, BigQuery costs, MongoDB connection, and security measures for API keys and credentials.

Visionatrix

Visionatrix is a project aimed at providing easy use of ComfyUI workflows. It offers simplified setup and update processes, a minimalistic UI for daily workflow use, stable workflows with versioning and update support, scalability for multiple instances and task workers, multiple user support with integration of different user backends, LLM power for integration with Ollama/Gemini, and seamless integration as a service with backend endpoints and webhook support. The project is approaching version 1.0 release and welcomes new ideas for further implementation.

For similar tasks

Kuzco

Enhance your Terraform and OpenTofu configurations with intelligent analysis powered by local LLMs. Kuzco reviews your resources, compares them to the provider schema, detects unused parameters, and suggests improvements for a more secure, reliable, and optimized setup. It saves time by avoiding the need to dig through the Terraform registry and decipher unclear options.

LLMLingua

LLMLingua is a tool that utilizes a compact, well-trained language model to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models, achieving up to 20x compression with minimal performance loss. The tool includes LLMLingua, LongLLMLingua, and LLMLingua-2, each offering different levels of prompt compression and performance improvements for tasks involving large language models.

MiniAI-Face-Recognition-LivenessDetection-ServerSDK

The MiniAiLive Face Recognition LivenessDetection Server SDK provides system integrators with fast, flexible, and extremely precise facial recognition that can be deployed across various scenarios, including security, access control, public safety, fintech, smart retail, and home protection. The SDK is fully on-premise, meaning all processing happens on the hosting server, and no data leaves the server. The project structure includes bin, cpp, flask, model, python, test_image, and Dockerfile directories. To set up the project on Linux, download the repo, install system dependencies, and copy libraries into the system folder. For Windows, contact MiniAiLive via email. The C++ example involves replacing the license key in main.cpp, building the project, and running it. The Python example requires installing dependencies and running the project. The Python Flask example involves replacing the license key in app.py, installing dependencies, and running the project. The Docker Flask example includes building the docker image and running it. To request a license, contact MiniAiLive. Contributions to the project are welcome by following specific steps. An online demo is available at https://demo.miniai.live. Related products include MiniAI-Face-Recognition-LivenessDetection-AndroidSDK, MiniAI-Face-Recognition-LivenessDetection-iOS-SDK, MiniAI-Face-LivenessDetection-AndroidSDK, MiniAI-Face-LivenessDetection-iOS-SDK, MiniAI-Face-Matching-AndroidSDK, and MiniAI-Face-Matching-iOS-SDK. MiniAiLive is a leading AI solutions company specializing in computer vision and machine learning technologies.

MiniAI-Face-LivenessDetection-AndroidSDK

The MiniAiLive Face Liveness Detection Android SDK provides advanced computer vision techniques to enhance security and accuracy on Android platforms. It offers 3D Passive Face Liveness Detection capabilities, ensuring that users are physically present and not using spoofing methods to access applications or services. The SDK is fully on-premise, with all processing happening on the hosting server, ensuring data privacy and security.

blinkid-ios

BlinkID iOS is a mobile SDK that enables developers to easily integrate ID scanning and data extraction capabilities into their iOS applications. The SDK supports scanning and processing various types of identity documents, such as passports, driver's licenses, and ID cards. It provides accurate and fast data extraction, including personal information and document details. With BlinkID iOS, developers can enhance their apps with secure and reliable ID verification functionality, improving user experience and streamlining identity verification processes.

cheat-sheet-pdf

The Cheat-Sheet Collection for DevOps, Engineers, IT professionals, and more is a curated list of cheat sheets for various tools and technologies commonly used in the software development and IT industry. It includes cheat sheets for Nginx, Docker, Ansible, Python, Go (Golang), Git, Regular Expressions (Regex), PowerShell, VIM, Jenkins, CI/CD, Kubernetes, Linux, Redis, Slack, Puppet, Google Cloud Developer, AI, Neural Networks, Machine Learning, Deep Learning & Data Science, PostgreSQL, Ajax, AWS, Infrastructure as Code (IaC), System Design, and Cyber Security.

L1B3RT45

L1B3RT45 is a tool designed for jailbreaking all flagship AI models. It is part of the FREEAI project and is named LIBERTAS. Users can join the BASI Discord community for support. The tool was created with love by Pliny the Prompter.

card-scanner-flutter

Card Scanner Flutter is a fast, accurate, and secure plugin for Flutter that allows users to scan debit and credit cards offline. It can scan card details such as the card number, expiry date, card holder name, and card issuer. Powered by Google's Machine Learning models, the plugin offers great performance and accuracy. Users can control parameters for speed and accuracy balance and benefit from an intuitive API. Suitable for various jobs such as mobile app developer, fintech product manager, software engineer, data scientist, and UI/UX designer. AI keywords include card scanner, flutter plugin, debit card, credit card, machine learning. Users can use this tool to scan cards, verify card details, extract card information, validate card numbers, and enhance security.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.