merlinn

Open source AI on-call developer 🧙‍♂️ Get relevant context and root cause analysis about production incidents in seconds, and make on-call engineers 10x better 🏎️

Stars: 241

Merlinn is an open-source AI-powered on-call engineer that automatically jumps into incidents & alerts, providing useful insights and RCA in real time. It integrates with popular observability tools, lives inside Slack, offers an intuitive UX, and prioritizes security. Users can self-host Merlinn and use it for free.

README:

Merlinn is an AI-powered on-call engineer. It can automatically jump into incidents & alerts with you and provide useful, contextual insights and RCA in real time.

Most people don't like on-call shifts. They require engineers to be swift and solve problems quickly, and it takes time to reach the root cause of a problem. That's why we developed Merlinn. We believe Gen AI can help on-call developers solve issues faster.

- Overview

- Why

- Key Features

- Demo

- Getting started

- Support and feedback

- Contributing to Merlinn

- Troubleshooting

- Telemetry

- License

- Learn more

- Contributors

- Automatic RCA: Merlinn listens to production incidents/alerts and automatically investigates them for you.

- Slack integration: Merlinn lives inside your Slack. Simply connect it and enjoy an on-call engineer that never sleeps.

- Integrations: Merlinn integrates with popular observability/incident management tools such as Datadog, Coralogix, Opsgenie and PagerDuty. It also integrates with other tools such as GitHub, Notion, Jira and Confluence to gain insights on incidents.

- Intuitive UX: Merlinn offers a familiar experience. You can talk to it and ask follow-up questions.

- Secure: Self-host Merlinn and own your data. Always.

- Open Source: We love open-source. Self-host Merlinn and use it for free.

Check out our demo video to see Merlinn in action.

To run Merlinn, you need to clone the repo and run the app using Docker Compose.

Ensure you have the following installed:

- Docker & Docker Compose - The app runs in Docker containers. To run it, you need Docker Desktop, which comes with the Docker CLI, Docker Engine and Docker Compose.

You can find the installation video here.

1. Clone the repository:

   git clone git@github.com:merlinn-co/merlinn.git && cd merlinn

2. Configure LiteLLM Proxy Server:

   We use LiteLLM Proxy Server to interact with 100+ LLMs through a unified interface (the OpenAI interface).

   - Copy the example files:

     cp config/litellm/.env.example config/litellm/.env
     cp config/litellm/config.example.yaml config/litellm/config.yaml

   - Define your OpenAI key and place it inside config/litellm/.env as OPENAI_API_KEY. You can get your API key here. Rest assured, you won't be charged unless you use the API. For more details on pricing, check here.

3. Copy the .env.example file:

   cp .env.example .env

4. Open the .env file in your favorite editor (vim, vscode, emacs, etc.):

   vim .env # or emacs or vscode or nano

5. Update these variables:

   - SLACK_BOT_TOKEN, SLACK_APP_TOKEN and SLACK_SIGNING_SECRET - These variables are needed to talk to Merlinn on Slack. Please follow this guide to create a new Slack app in your organization.

   - (Optional) SMTP_CONNECTION_URL - This variable is needed to invite new members to your Merlinn organization via email and allow them to use the bot. It's not mandatory if you just want to test Merlinn and play with it. If you do want to send invites to your team members, you can use a service like SendGrid or Mailgun. The value should follow this pattern: smtp://username:password@domain:port.

6. Launch the project:

   docker compose up -d

That's it. You should be able to visit Merlinn's dashboard at http://localhost:5173. Create a user with the same email address as your Slack user, then start configuring your organization. If something doesn't work for you, please check out our troubleshooting guide or reach out to us via our support channels.
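Before digging into the troubleshooting guide, it can help to rule out an empty value in one of the two env files edited during setup. The script below is a minimal sketch of such a check and is not part of the Merlinn repository: `check_merlinn_env` and `env_value` are hypothetical names, and the script assumes plain `KEY=value` lines and the file locations used in the setup steps (`.env` in the repository root and `config/litellm/.env`).

```shell
# Hypothetical pre-flight check (not shipped with Merlinn).
# Assumes plain KEY=value lines; quotes around values are not stripped.

# Print the value assigned to KEY ($2) in FILE ($1); the last assignment wins.
env_value() {
  awk -v k="$2" 'index($0, k "=") == 1 { v = substr($0, length(k) + 2) } END { print v }' "$1"
}

# Print one line per problem; return non-zero if anything required is missing.
check_merlinn_env() {
  root_env=${1:-.env}
  litellm_env=${2:-config/litellm/.env}
  status=0

  # The LiteLLM proxy reads the OpenAI key from its own env file.
  if [ -z "$(env_value "$litellm_env" OPENAI_API_KEY)" ]; then
    echo "missing: OPENAI_API_KEY in $litellm_env"
    status=1
  fi

  # Slack credentials are required to talk to Merlinn on Slack.
  for var in SLACK_BOT_TOKEN SLACK_APP_TOKEN SLACK_SIGNING_SECRET; do
    if [ -z "$(env_value "$root_env" "$var")" ]; then
      echo "missing: $var in $root_env"
      status=1
    fi
  done

  # SMTP is optional; if set, it should look like smtp://username:password@domain:port.
  smtp=$(env_value "$root_env" SMTP_CONNECTION_URL)
  case $smtp in
    "" | smtp://*:*@*:*) ;;
    *) echo "unexpected SMTP_CONNECTION_URL format: $smtp"; status=1 ;;
  esac

  return "$status"
}
```

After sourcing the script from the repository root, `check_merlinn_env` prints nothing and returns 0 when everything is filled in. The SMTP check only tests the general shape of the URL; it does not try to connect to the server.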

The next steps are to configure your organization a bit more (connect incident management tools, build a knowledge base, etc). Head over to the connect & configure section in our docs for more information 💫

Alternatively, you can pull our Docker images from Docker Hub instead of cloning the repo and building from scratch.

To do that, follow these steps:

1. Download the configuration files:

   curl https://raw.githubusercontent.com/merlinn-co/merlinn/main/tools/scripts/download_env_files.sh | sh

2. Follow steps 2 and 5 above to configure LiteLLM Proxy and your .env file, respectively. Namely, you need to set your OpenAI key in config/litellm/.env and your Slack credentials in the root .env.

3. Spin up the environment using Docker Compose:

   curl https://raw.githubusercontent.com/merlinn-co/merlinn/main/tools/scripts/start.sh | sh

That's it 💫 You should be able to visit Merlinn's dashboard in http://localhost:5173.

To update Merlinn to the latest version:

1. Pull the latest changes:

   git pull

2. Rebuild the images:

   docker compose up --build -d

Visit our example guides to deploy Merlinn to your cloud.

We use ChromaDB as our vector DB, and VectorAdmin to browse the ingested documents. To start VectorAdmin, run:

docker compose up vector-admin -d

This command starts VectorAdmin on port 3001. Head over to http://localhost:3001 and configure your local ChromaDB. Note: since VectorAdmin runs inside a Docker container, enter http://host.docker.internal:8000 in the "host" field instead of http://localhost:8000. Inside the container, "localhost" refers to the container itself, not to your host machine.

In the "API Header & Key" field, enter "X-Chroma-Token" as the header and the value of CHROMA_SERVER_AUTHN_CREDENTIALS from your .env as the value.

To learn how to use VectorAdmin, visit the docs.
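To double-check which header and value VectorAdmin needs, the hypothetical helper below (not part of Merlinn) reads CHROMA_SERVER_AUTHN_CREDENTIALS from your .env and prints the matching `X-Chroma-Token` header line. It assumes a plain `KEY=value` line and does not strip quotes from the value.

```shell
# Hypothetical helper (not shipped with Merlinn): print the header line built
# from the ChromaDB token stored in an env file (default: ./.env).
chroma_token_header() {
  env_file=${1:-.env}
  # Take the value after "CHROMA_SERVER_AUTHN_CREDENTIALS="; the last assignment wins.
  token=$(awk -v k=CHROMA_SERVER_AUTHN_CREDENTIALS \
    'index($0, k "=") == 1 { v = substr($0, length(k) + 2) } END { print v }' "$env_file")
  printf 'X-Chroma-Token: %s\n' "$token"
}
```

The same line can be passed to curl to test the token against ChromaDB directly, for example `curl -H "$(chroma_token_header .env)" http://localhost:8000/api/v1/collections`. That path belongs to ChromaDB's v1 HTTP API and is an assumption here; the endpoint layout depends on the ChromaDB version you run.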

In order of preference, the best ways to communicate with us are:

- GitHub Discussions: Contribute ideas, support requests and report bugs (preferred, as there is a static & permanent record for other community members).

- Slack: community support. Click here to join.

- Privately: contact at [email protected]

If you're interested in contributing to Merlinn, check out our CONTRIBUTING.md file 💫 🧙‍♂️

If you encounter any problems/errors/issues with Merlinn, check out our troubleshooting guide. We try to update it regularly and to address urgent problems there as soon as possible.

You can also reach out to us via our support channels.

By default, Merlinn automatically sends basic usage statistics from self-hosted instances to our server via PostHog.

This allows us to:

- Understand how Merlinn is used so we can improve it.

- Track overall usage for internal purposes and external reporting, such as for fundraising.

Rest assured, the data collected is not shared with third parties and does not include any sensitive information. We aim to be transparent, and you can review the specific data we collect here.

If you prefer not to participate, you can easily opt out by setting TELEMETRY_ENABLED=false inside your .env.
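If you'd rather script the opt-out, here is a minimal sketch; the `disable_telemetry` function is hypothetical and not shipped with Merlinn. It rewrites the given env file so that it ends with exactly one `TELEMETRY_ENABLED=false` line, dropping any earlier `TELEMETRY_ENABLED` assignment, so running it twice is harmless.

```shell
# Hypothetical helper (not shipped with Merlinn): opt out of telemetry by
# leaving exactly one TELEMETRY_ENABLED=false line in the env file.
disable_telemetry() {
  env_file=${1:-.env}
  tmp_file="$env_file.tmp"
  # Copy every line except existing TELEMETRY_ENABLED assignments
  # (awk also terminates a final line that lacks a newline)...
  awk 'index($0, "TELEMETRY_ENABLED=") != 1' "$env_file" > "$tmp_file"
  # ...then append the opt-out and replace the original file.
  echo "TELEMETRY_ENABLED=false" >> "$tmp_file"
  mv "$tmp_file" "$env_file"
}
```

Run `disable_telemetry .env` from the repository root, then restart the containers (for example with `docker compose down && docker compose up -d`) so they pick up the new value.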

This project is licensed under the Apache 2.0 license - see the LICENSE file for details.

Check out the official website at https://merlinn.co for more information.

Built with ❤️ by Dudu & Topaz


Alternative AI tools for merlinn

Similar Open Source Tools

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

AgentGenesis

AgentGenesis is an open-source web app that provides customizable Gen AI code snippets for building custom RAG flows and AI agents. Users can easily copy and paste components into their applications, modify the code as needed, and build on top of it. The tool aims to simplify the development process by offering pre-built code snippets that can be integrated seamlessly into projects without the need for npm installation. AgentGenesis encourages contributions, positive feedback, and support from the community to enhance and grow the project.

TerminalGPT

TerminalGPT is a terminal-based ChatGPT personal assistant app that allows users to interact with OpenAI GPT-3.5 and GPT-4 language models. It offers advantages over browser-based apps, such as continuous availability, faster replies, and tailored answers. Users can use TerminalGPT in their IDE terminal, ensuring seamless integration with their workflow. The tool prioritizes user privacy by not using conversation data for model training and storing conversations locally on the user's machine.

open-source-slack-ai

This repository provides a ready-to-run basic Slack AI solution that allows users to summarize threads and channels using OpenAI. Users can generate thread summaries, channel overviews, channel summaries since a specific time, and full channel summaries. The tool is powered by GPT-3.5-Turbo and an ensemble of NLP models. It requires Python 3.8 or higher, an OpenAI API key, Slack App with associated API tokens, Poetry package manager, and ngrok for local development. Users can customize channel and thread summaries, run tests with coverage using pytest, and contribute to the project for future enhancements.

MiniSearch

MiniSearch is a minimalist search engine with integrated browser-based AI. It is privacy-focused, easy to use, cross-platform, integrated, time-saving, efficient, optimized, and open-source. MiniSearch can be used for a variety of tasks, including searching the web, finding files on your computer, and getting answers to questions. It is a great tool for anyone who wants a fast, private, and easy-to-use search engine.

obsidian-chat-cbt-plugin

ChatCBT is an AI-powered journaling assistant for Obsidian, inspired by cognitive behavioral therapy (CBT). It helps users reframe negative thoughts and rewire reactions to distressful situations. The tool provides kind and objective responses to uncover negative thinking patterns, store conversations privately, and summarize reframed thoughts. Users can choose between a cloud-based AI service (OpenAI) or a local and private service (Ollama) for handling data. ChatCBT is not a replacement for therapy but serves as a journaling assistant to help users gain perspective on their problems.

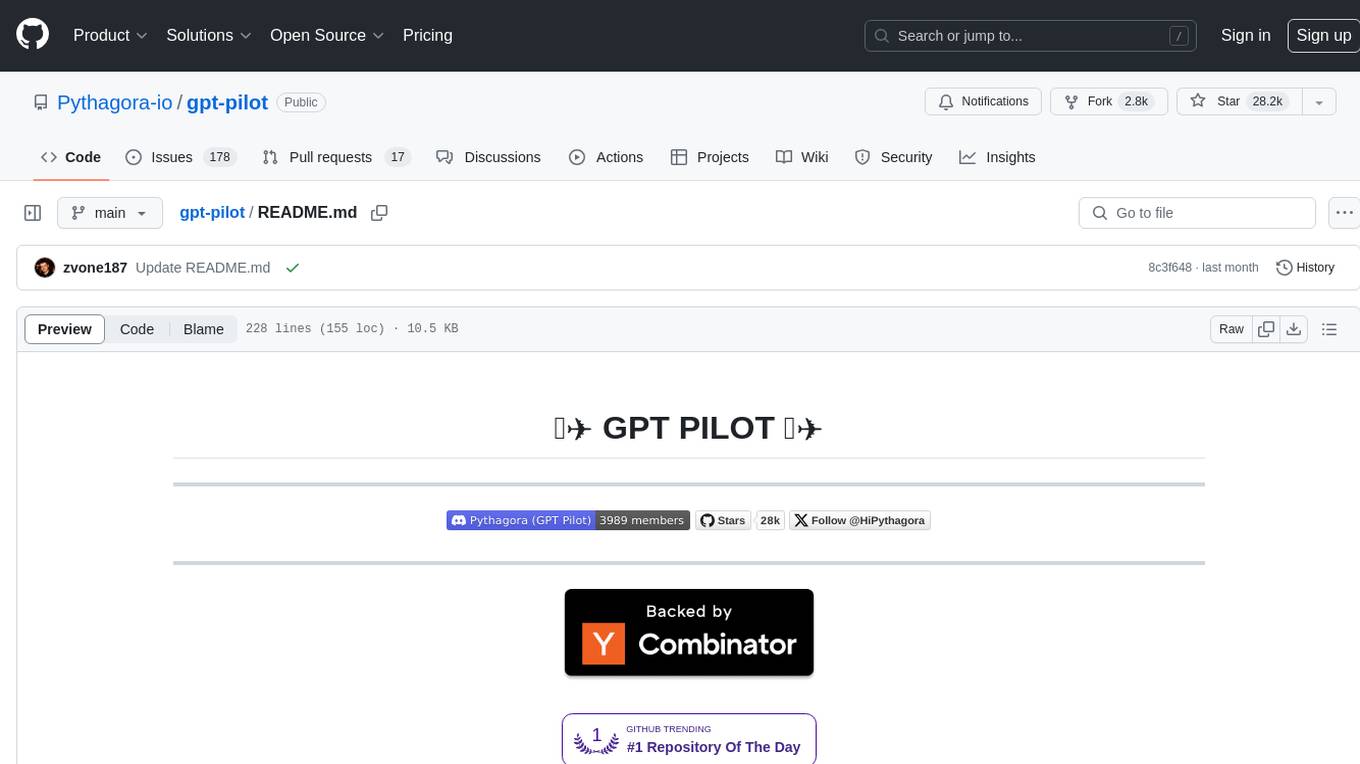

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

extensionOS

Extension | OS is an open-source browser extension that brings AI directly to users' web browsers, allowing them to access powerful models like LLMs seamlessly. Users can create prompts, fix grammar, and access intelligent assistance without switching tabs. The extension aims to revolutionize online information interaction by integrating AI into everyday browsing experiences. It offers features like Prompt Factory for tailored prompts, seamless LLM model access, secure API key storage, and a Mixture of Agents feature. The extension was developed to empower users to unleash their creativity with custom prompts and enhance their browsing experience with intelligent assistance.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

superflows

Superflows is an open-source alternative to OpenAI's Assistant API. It allows developers to easily add an AI assistant to their software products, enabling users to ask questions in natural language and receive answers or have tasks completed by making API calls. Superflows can analyze data, create plots, answer questions based on static knowledge, and even write code. It features a developer dashboard for configuration and testing, stateful streaming API, UI components, and support for multiple LLMs. Superflows can be set up in the cloud or self-hosted, and it provides comprehensive documentation and support.

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.