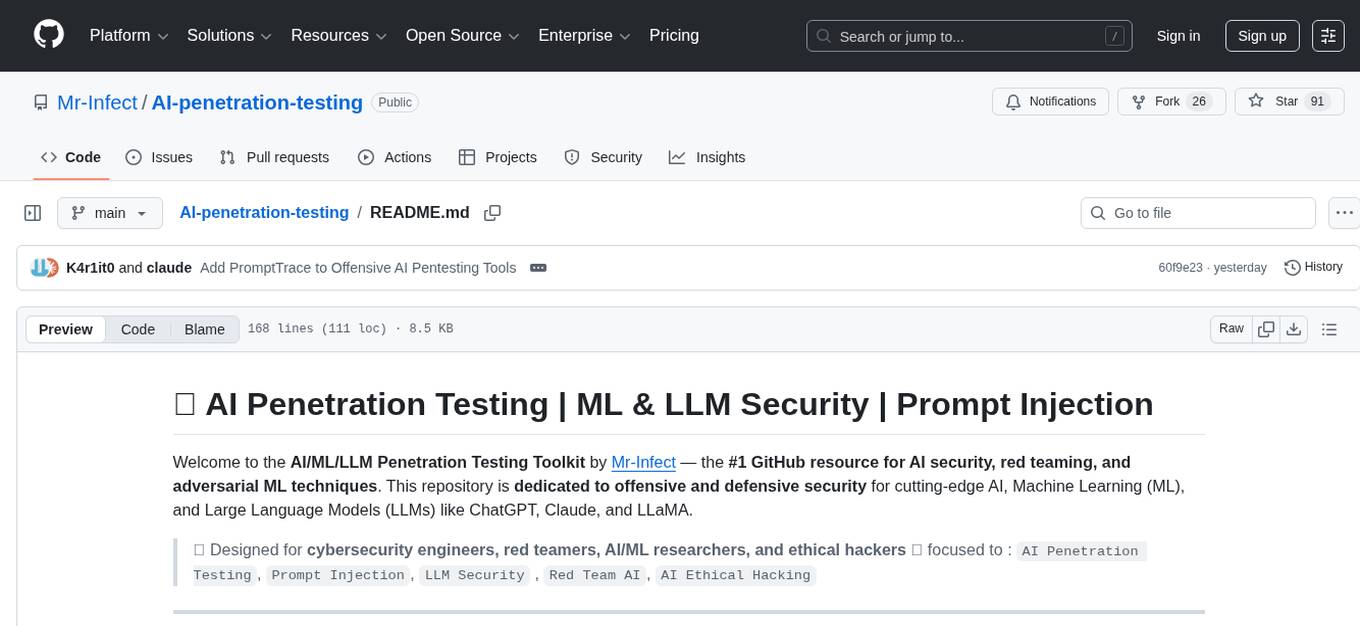

AI-penetration-testing

AI/ML/LLM Penetration Testing Toolkit by Mr-Infect — the #1 GitHub resource for AI security, red teaming, and adversarial ML techniques. This repository is dedicated to offensive and defensive security for cutting-edge AI, Machine Learning (ML), and Large Language Models (LLMs) like ChatGPT, Claude, and LLaMA.

Stars: 91

AI Penetration Testing is a tool designed to automate the process of identifying security vulnerabilities in computer systems using artificial intelligence algorithms. It helps security professionals to efficiently scan and analyze networks, applications, and devices for potential weaknesses and exploits. The tool combines machine learning techniques with traditional penetration testing methods to provide comprehensive security assessments and recommendations for remediation. With AI Penetration Testing, users can enhance the effectiveness and accuracy of their security testing efforts, enabling them to proactively protect their systems from cyber threats and attacks.

README:

Welcome to the AI/ML/LLM Penetration Testing Toolkit by Mr-Infect — the #1 GitHub resource for AI security, red teaming, and adversarial ML techniques. This repository is dedicated to offensive and defensive security for cutting-edge AI, Machine Learning (ML), and Large Language Models (LLMs) like ChatGPT, Claude, and LLaMA.

✅ Designed for cybersecurity engineers, red teamers, AI/ML researchers, and ethical hackers ✅ focused to :

AI Penetration Testing,Prompt Injection,LLM Security,Red Team AI,AI Ethical Hacking

AI is now integrated across finance, healthcare, legal, defense, and national infrastructure. Penetration testing for AI systems is no longer optional — it is mission-critical.

- 🕵️ Sensitive Data Leaks – PII, trade secrets, source code

- 💀 Prompt Injection Attacks – Jailbreaking, sandbox escapes, plugin abuse

- 🧠 Model Hallucination – Offensive, misleading, or manipulated content

- 🐍 Data/Model Poisoning – Adversarial training manipulation

- 🔌 LLM Plugin Abuse – Uncontrolled API interactions

- 📦 AI Supply Chain Attacks – Dependency poisoning, model tampering

To use this repository effectively:

- 🔬 Understanding of AI/ML lifecycle:

Data > Train > Deploy > Monitor - 🧠 Familiarity with LLMs (e.g. Transformer models, tokenization)

- 🧑💻 Core pentesting skills: XSS, SQLi, RCE, API abuse

- 🐍 Strong Python scripting (most tools and exploits rely on Python)

- AI vs ML vs LLMs: Clear distinctions

- LLM Lifecycle: Problem -> Dataset -> Model -> Training -> Evaluation -> Deployment

- Tokenization & Vectorization: Foundation of how LLMs parse and understand input

- Prompt Injection

- Jailbreaking & Output Overwriting

- Sensitive Information Leakage

- Vector Store Attacks & Retrieval Manipulation

- Model Weight Poisoning

- Data Supply Chain Attacks

- "Ignore previous instructions" payloads

- Unicode, emojis, and language-switching evasion

- Markdown/image/HTML-based payloads

- Plugin and multi-modal attack vectors (image, audio, PDF, API)

| ID | Risk | SEO Keywords |

|---|---|---|

| LLM01 | Prompt Injection | "LLM jailbreak", "prompt override" |

| LLM02 | Sensitive Info Disclosure | "AI data leak", "PII exfiltration" |

| LLM03 | Supply Chain Risk | "dependency poisoning", "model repo hijack" |

| LLM04 | Data/Model Poisoning | "AI training corruption", "malicious dataset" |

| LLM05 | Improper Output Handling | "AI-generated XSS", "model SQLi" |

| LLM06 | Excessive Agency | "plugin abuse", "autonomous API misuse" |

| LLM07 | System Prompt Leakage | "instruction leakage", "LLM prompt reveal" |

| LLM08 | Vector Store Vulnerabilities | "embedding attack", "semantic poisoning" |

| LLM09 | Misinformation | "hallucination", "bias injection" |

| LLM10 | Unbounded Resource Consumption | "LLM DoS", "token flooding" |

| Tool | Description |

|---|---|

| LLM Attacks | Directory of adversarial LLM research |

| PIPE | Prompt Injection Primer for Engineers |

| MITRE ATLAS | MITRE's AI/ML threat knowledge base |

| Awesome GPT Security | Curated LLM threat intelligence tools |

| ChatGPT Red Team Ally | ChatGPT usage for red teaming |

| Lakera Gandalf | Live prompt injection playground |

| AI Immersive Labs | Prompt attack labs with real-time feedback |

| AI Goat | OWASP-style AI pentesting playground |

| L1B3RT45 | Jailbreak prompt collections |

| PromptTrace | Interactive AI security training with 7 attack labs, 15-level Gauntlet, and real-time Context Trace for prompt injection and defense bypass |

- https://github.com/DummyKitty/Cyber-Security-chatGPT-prompt

- https://github.com/swisskyrepo/PayloadsAllTheThings/tree/master/Prompt%20Injection

- https://github.com/f/awesome-chatgpt-prompts

- https://gist.github.com/coolaj86/6f4f7b30129b0251f61fa7baaa881516

- https://kai-greshake.de/posts/inject-my-pdf

- https://www.lakera.ai/blog/guide-to-prompt-injection

- https://arxiv.org/abs/2306.05499

- https://www.csoonline.com/article/3613932/how-data-poisoning-attacks-corrupt-machine-learning-models.html

- https://pytorch.org/blog/compromised-nightly-dependency/

Want to improve this repo? Here's how:

# Fork and clone the repo

$ git clone https://github.com/Mr-Infect/AI-penetration-testing

$ cd AI-penetration-testing

# Create a new feature branch

$ git checkout -b feature/my-feature

# Commit, push, and create a pull request

AI Pentesting,Prompt Injection,LLM Security,Mr-Infect AI Hacking,ChatGPT Exploits,Large Language Model Jailbreak,AI Red Team Tools,Adversarial AI Attacks,OpenAI Prompt Security,LLM Ethical Hacking,AI Security Github,AI Offensive Security,LLM OWASP,LLM Top 10,AI Prompt Vulnerability,Token Abuse DoS,ChatGPT Jailbreak,Red Team AI,AI Security Research

- GitHub Profile: https://github.com/Mr-Infect

- Project Link: AI Penetration Testing Repository

⚠️ Disclaimer: This project is intended solely for educational, research, and authorized ethical hacking purposes. Unauthorized use is illegal.

⭐️ Star this repository to help others discover top-tier content on AI/LLM penetration testing along with security infra!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-penetration-testing

Similar Open Source Tools

AI-penetration-testing

AI Penetration Testing is a tool designed to automate the process of identifying security vulnerabilities in computer systems using artificial intelligence algorithms. It helps security professionals to efficiently scan and analyze networks, applications, and devices for potential weaknesses and exploits. The tool combines machine learning techniques with traditional penetration testing methods to provide comprehensive security assessments and recommendations for remediation. With AI Penetration Testing, users can enhance the effectiveness and accuracy of their security testing efforts, enabling them to proactively protect their systems from cyber threats and attacks.

pentest-agent

Pentest Agent is a lightweight and versatile tool designed for conducting penetration testing on network systems. It provides a user-friendly interface for scanning, identifying vulnerabilities, and generating detailed reports. The tool is highly customizable, allowing users to define specific targets and parameters for testing. Pentest Agent is suitable for security professionals and ethical hackers looking to assess the security posture of their systems and networks.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

PentestGPT

PentestGPT provides advanced AI and integrated tools to help security teams conduct comprehensive penetration tests effortlessly. Scan, exploit, and analyze web applications, networks, and cloud environments with ease and precision, without needing expert skills. The tool utilizes Supabase for data storage and management, and Vercel for hosting the frontend. It offers a local quickstart guide for running the tool locally and a hosted quickstart guide for deploying it in the cloud. PentestGPT aims to simplify the penetration testing process for security professionals and enthusiasts alike.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

Awesome-AI-Security

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.

prompt-injection-defenses

This repository provides a collection of tools and techniques for defending against injection attacks in software applications. It includes code samples, best practices, and guidelines for implementing secure coding practices to prevent common injection vulnerabilities such as SQL injection, XSS, and command injection. The tools and resources in this repository aim to help developers build more secure and resilient applications by addressing one of the most common and critical security threats in modern software development.

holmesgpt

HolmesGPT is an AI agent designed for troubleshooting and investigating issues in cloud environments. It utilizes AI models to analyze data from various sources, identify root causes, and provide remediation suggestions. The tool offers integrations with popular cloud providers, observability tools, and on-call systems, enabling users to streamline the troubleshooting process. HolmesGPT can automate the investigation of alerts and tickets from external systems, providing insights back to the source or communication platforms like Slack. It supports end-to-end automation and offers a CLI for interacting with the AI agent. Users can customize HolmesGPT by adding custom data sources and runbooks to enhance investigation capabilities. The tool prioritizes data privacy, ensuring read-only access and respecting RBAC permissions. HolmesGPT is a CNCF Sandbox Project and is distributed under the Apache 2.0 License.

agentic-qe

Agentic Quality Engineering Fleet (Agentic QE) is a comprehensive tool designed for quality engineering tasks. It offers a Domain-Driven Design architecture with 13 bounded contexts and 60 specialized QE agents. The tool includes features like TinyDancer intelligent model routing, ReasoningBank learning with Dream cycles, HNSW vector search, Coherence Verification, and integration with other tools like Claude Flow and Agentic Flow. It provides capabilities for test generation, coverage analysis, quality assessment, defect intelligence, requirements validation, code intelligence, security compliance, contract testing, visual accessibility, chaos resilience, learning optimization, and enterprise integration. The tool supports various protocols, LLM providers, and offers a vast library of QE skills for different testing scenarios.

awesome-ai-cybersecurity

This repository is a comprehensive collection of resources for utilizing AI in cybersecurity. It covers various aspects such as prediction, prevention, detection, response, monitoring, and more. The resources include tools, frameworks, case studies, best practices, tutorials, and research papers. The repository aims to assist professionals, researchers, and enthusiasts in staying updated and advancing their knowledge in the field of AI cybersecurity.

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

www-project-top-10-for-large-language-model-applications

The OWASP Top 10 for Large Language Model Applications is a standard awareness document for developers and web application security, providing practical, actionable, and concise security guidance for applications utilizing Large Language Model (LLM) technologies. The project aims to make application security visible and bridge the gap between general application security principles and the specific challenges posed by LLMs. It offers a comprehensive guide to navigate potential security risks in LLM applications, serving as a reference for both new and experienced developers and security professionals.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

pegainfer

PegaInfer is a machine learning tool designed for predictive analytics and pattern recognition. It provides a user-friendly interface for training and deploying machine learning models without the need for extensive coding knowledge. With PegaInfer, users can easily analyze large datasets, make predictions, and uncover hidden patterns in their data. The tool supports various machine learning algorithms and allows for customization to suit specific use cases. Whether you are a data scientist, business analyst, or researcher, PegaInfer can help streamline your data analysis process and enhance decision-making capabilities.

For similar tasks

llm_related

llm_related is a repository that documents issues encountered and solutions found during the application of large models. It serves as a knowledge base for troubleshooting and problem-solving in the context of working with complex models in various applications.

AI-penetration-testing

AI Penetration Testing is a tool designed to automate the process of identifying security vulnerabilities in computer systems using artificial intelligence algorithms. It helps security professionals to efficiently scan and analyze networks, applications, and devices for potential weaknesses and exploits. The tool combines machine learning techniques with traditional penetration testing methods to provide comprehensive security assessments and recommendations for remediation. With AI Penetration Testing, users can enhance the effectiveness and accuracy of their security testing efforts, enabling them to proactively protect their systems from cyber threats and attacks.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

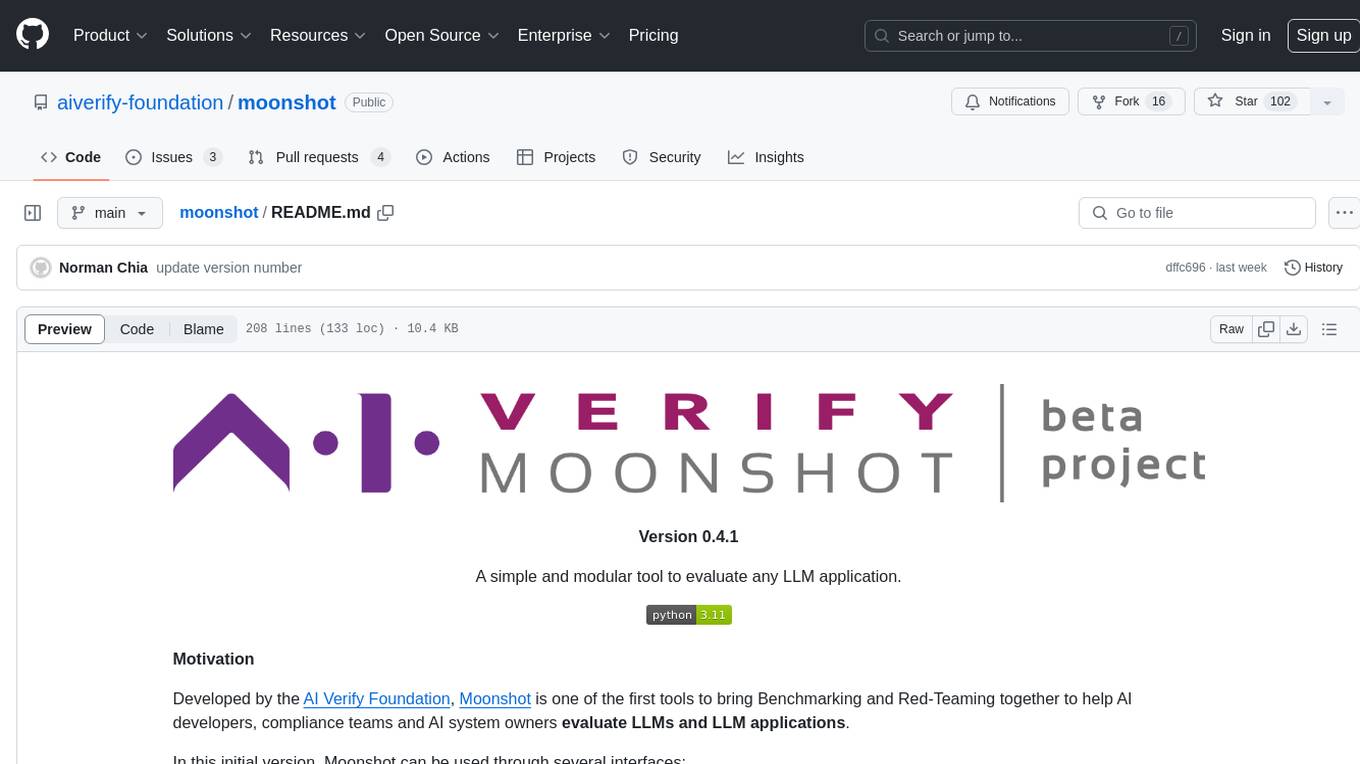

moonshot

Moonshot is a simple and modular tool developed by the AI Verify Foundation to evaluate Language Model Models (LLMs) and LLM applications. It brings Benchmarking and Red-Teaming together to assist AI developers, compliance teams, and AI system owners in assessing LLM performance. Moonshot can be accessed through various interfaces including User-friendly Web UI, Interactive Command Line Interface, and seamless integration into MLOps workflows via Library APIs or Web APIs. It offers features like benchmarking LLMs from popular model providers, running relevant tests, creating custom cookbooks and recipes, and automating Red Teaming to identify vulnerabilities in AI systems.

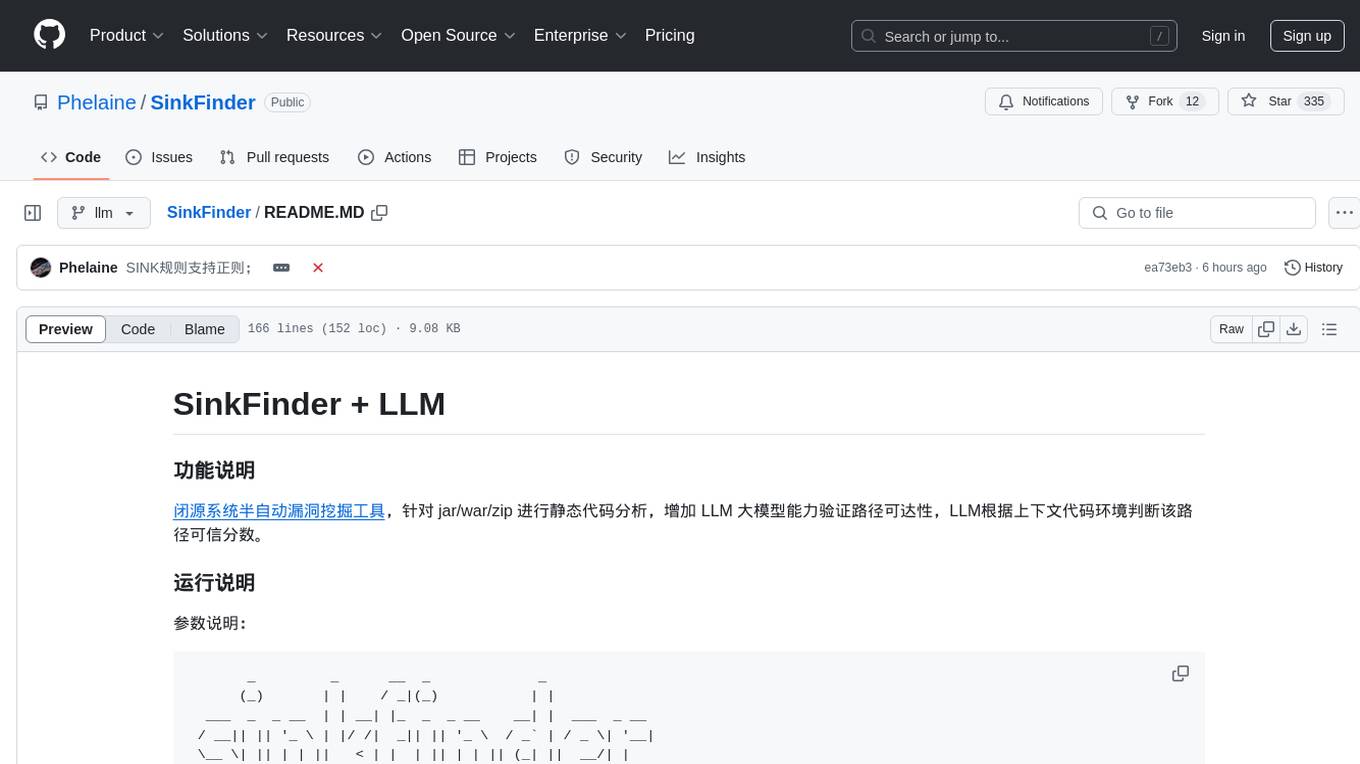

SinkFinder

SinkFinder + LLM is a closed-source semi-automatic vulnerability discovery tool that performs static code analysis on jar/war/zip files. It enhances the capability of LLM large models to verify path reachability and assess the trustworthiness score of the path based on the contextual code environment. Users can customize class and jar exclusions, depth of recursive search, and other parameters through command-line arguments. The tool generates rule.json configuration file after each run and requires configuration of the DASHSCOPE_API_KEY for LLM capabilities. The tool provides detailed logs on high-risk paths, LLM results, and other findings. Rules.json file contains sink rules for various vulnerability types with severity levels and corresponding sink methods.

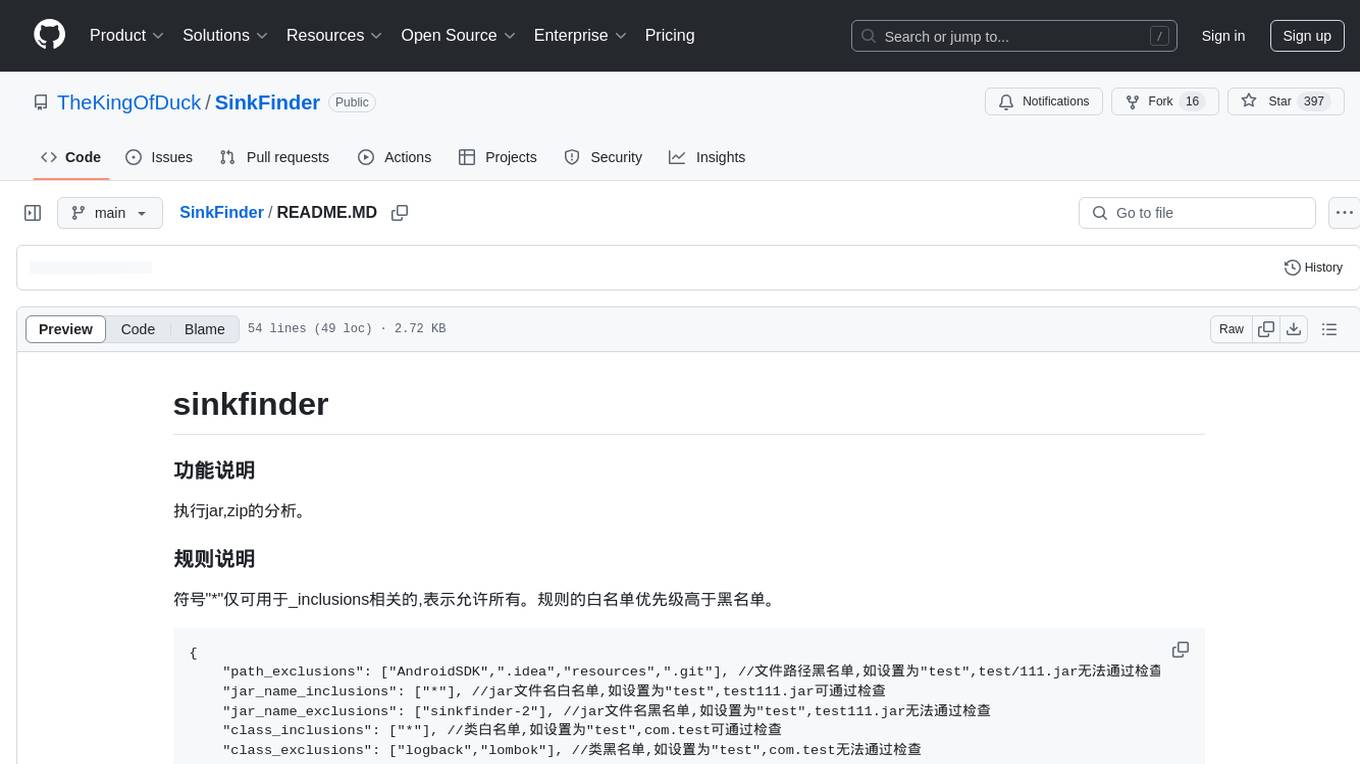

SinkFinder

SinkFinder is a tool designed to analyze jar and zip files for security vulnerabilities. It allows users to define rules for white and blacklisting specific classes and methods that may pose a risk. The tool provides a list of common security sink names along with severity levels and associated vulnerable methods. Users can use SinkFinder to quickly identify potential security issues in their Java applications by scanning for known sink patterns and configurations.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven, prompt-driven, and focuses on prompt design, leveraging 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. The tool requires PostgreSQL database, OpenAI API access, and Python environment for setup. It supports various languages like Solidity, Rust, Python, Move, Cairo, Tact, Func, Java, and Fake Solidity for scanning. FiniteMonkey is best suited for logic vulnerability mining in real projects, not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results with an average scan time of 2-3 hours for medium projects. The tool provides detailed scanning results guide and implementation tips for users.

agentic-radar

The Agentic Radar is a security scanner designed to analyze and assess agentic systems for security and operational insights. It helps users understand how agentic systems function, identify potential vulnerabilities, and create security reports. The tool includes workflow visualization, tool identification, and vulnerability mapping, providing a comprehensive HTML report for easy reviewing and sharing. It simplifies the process of assessing complex workflows and multiple tools used in agentic systems, offering a structured view of potential risks and security frameworks.

For similar jobs

Copilot-For-Security

Microsoft Copilot for Security is a generative AI-powered assistant for daily operations in security and IT that empowers teams to protect at the speed and scale of AI.

AIL-framework

AIL framework is a modular framework to analyze potential information leaks from unstructured data sources like pastes from Pastebin or similar services or unstructured data streams. AIL framework is flexible and can be extended to support other functionalities to mine or process sensitive information (e.g. data leak prevention).

beelzebub

Beelzebub is an advanced honeypot framework designed to provide a highly secure environment for detecting and analyzing cyber attacks. It offers a low code approach for easy implementation and utilizes virtualization techniques powered by OpenAI Generative Pre-trained Transformer. Key features include OpenAI Generative Pre-trained Transformer acting as Linux virtualization, SSH Honeypot, HTTP Honeypot, TCP Honeypot, Prometheus openmetrics integration, Docker integration, RabbitMQ integration, and kubernetes support. Beelzebub allows easy configuration for different services and ports, enabling users to create custom honeypot scenarios. The roadmap includes developing Beelzebub into a robust PaaS platform. The project welcomes contributions and encourages adherence to the Code of Conduct for a supportive and respectful community.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

awesome-business-of-cybersecurity

The 'Awesome Business of Cybersecurity' repository is a comprehensive resource exploring the cybersecurity market, focusing on publicly traded companies, industry strategy, and AI capabilities. It provides insights into how cybersecurity companies operate, compete, and evolve across 18 solution categories and beyond. The repository offers structured information on the cybersecurity market snapshot, specialists vs. multiservice cybersecurity companies, cybersecurity stock lists, endpoint protection and threat detection, network security, identity and access management, cloud and application security, data protection and governance, security analytics and threat intelligence, non-US traded cybersecurity companies, cybersecurity ETFs, blogs and newsletters, podcasts, market insights and research, and cybersecurity solutions categories.

mcp-scan

MCP-Scan is a security scanning tool designed to detect common security vulnerabilities in Model Context Protocol (MCP) servers. It can auto-discover various MCP configurations, scan both local and remote servers for security issues like prompt injection attacks, tool poisoning attacks, and toxic flows. The tool operates in two main modes - 'scan' for static scanning of installed servers and 'proxy' for real-time monitoring and guardrailing of MCP connections. It offers features like scanning for specific attacks, enforcing guardrailing policies, auditing MCP traffic, and detecting changes to MCP tools. MCP-Scan does not store or log usage data and can be used to enhance the security of MCP environments.

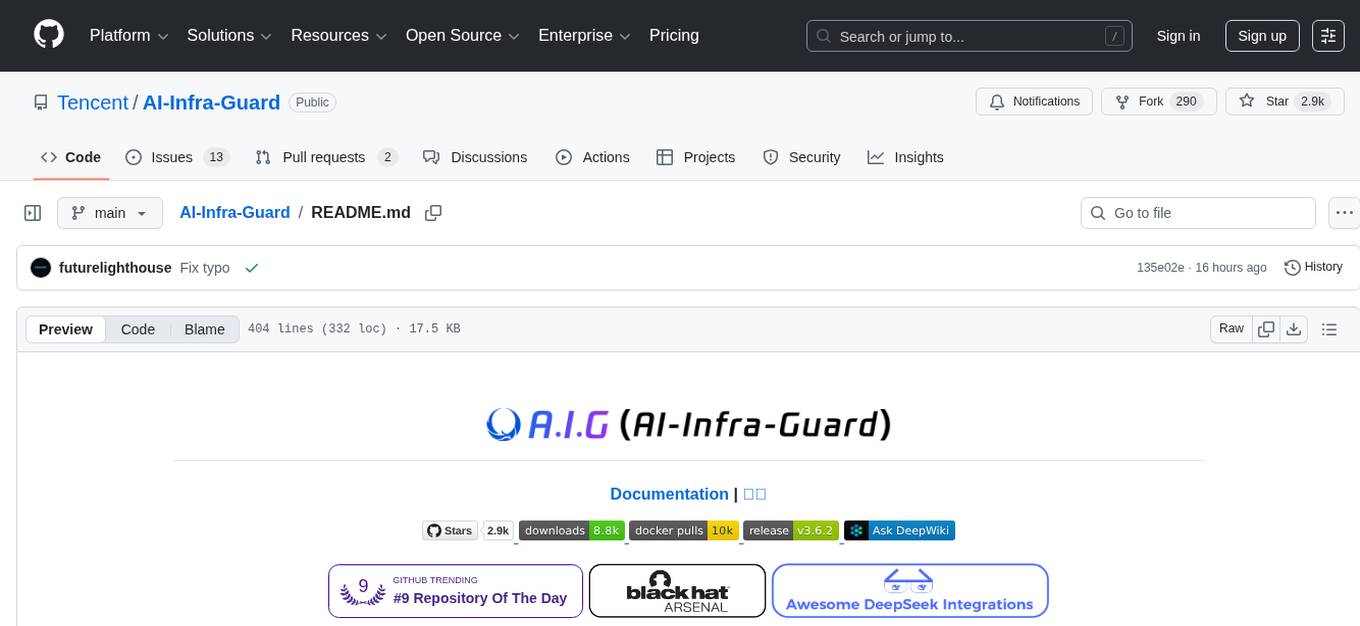

AI-Infra-Guard

A.I.G (AI-Infra-Guard) is an AI red teaming platform by Tencent Zhuque Lab that integrates capabilities such as AI infra vulnerability scan, MCP Server risk scan, and Jailbreak Evaluation. It aims to provide users with a comprehensive, intelligent, and user-friendly solution for AI security risk self-examination. The platform offers features like AI Infra Scan, AI Tool Protocol Scan, and Jailbreak Evaluation, along with a modern web interface, complete API, multi-language support, cross-platform deployment, and being free and open-source under the MIT license.

HydraDragonPlatform

Hydra Dragon Automatic Malware/Executable Analysis Platform offers dynamic and static analysis for Windows, including open-source XDR projects, ClamAV, YARA-X, machine learning AI, behavioral analysis, Unpacker, Deobfuscator, Decompiler, website signatures, Ghidra, Suricata, Sigma, Kernel based protection, and more. It is a Unified Executable Analysis & Detection Framework.