pegainfer

Pure Rust + CUDA LLM inference engine

Stars: 83

pegainfer is a from-scratch LLM inference engine written in pure Rust with hand-written CUDA kernels. It runs Qwen3-4B on a single GPU and serves it through an OpenAI-compatible /v1/completions HTTP API. The project is meant to expose every layer of the inference stack, not to replace production engines such as vLLM or TensorRT-LLM.

README:

Pure Rust + CUDA LLM inference engine. No PyTorch. No frameworks. Just metal.


pegainfer is a from-scratch LLM inference engine written in Rust with hand-written CUDA kernels. It currently runs Qwen3-4B at ~70 tokens/sec on a single GPU.

The goal is not to replace vLLM or TensorRT-LLM — it's to understand every layer of the inference stack by building it from the ground up, and to explore what a Rust-native inference engine can look like.

What's implemented:

- Full Qwen3 transformer: GQA, RoPE, SwiGLU MLP, RMSNorm

- 11 custom CUDA kernels + cuBLAS GEMV

- BF16 storage, FP32 accumulators

- KV cache with tiled fused attention (online softmax, TILE_SIZE=64)

- OpenAI-compatible /v1/completions HTTP API

- Safetensors weight loading, HuggingFace tokenizer
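The RMSNorm and SwiGLU MLP listed above can be checked against a small CPU reference. This is a sketch of the math, not pegainfer's CUDA code: weights are assumed to be row-major f32 slices, and `matvec`, `rms_norm`, `silu`, and `swiglu_mlp` are hypothetical helper names. The epsilon is a parameter because Qwen3 checkpoints carry it in config.json as rms_norm_eps.

```rust
// CPU reference sketch of RMSNorm and a SwiGLU MLP (hypothetical helpers, not pegainfer's kernels).

/// y = W x for a row-major `rows` x `cols` matrix W.
fn matvec(w: &[f32], rows: usize, cols: usize, x: &[f32]) -> Vec<f32> {
    assert_eq!(w.len(), rows * cols);
    assert_eq!(x.len(), cols);
    (0..rows)
        .map(|r| {
            w[r * cols..(r + 1) * cols]
                .iter()
                .zip(x)
                .map(|(a, b)| a * b)
                .sum::<f32>()
        })
        .collect()
}

/// RMSNorm: y_i = x_i / sqrt(mean(x^2) + eps) * weight_i (no mean subtraction, no bias).
fn rms_norm(x: &[f32], weight: &[f32], eps: f32) -> Vec<f32> {
    let mean_sq = x.iter().map(|v| v * v).sum::<f32>() / x.len() as f32;
    let inv_rms = 1.0 / (mean_sq + eps).sqrt();
    x.iter().zip(weight).map(|(v, w)| v * inv_rms * w).collect()
}

/// SiLU (swish): z * sigmoid(z).
fn silu(z: f32) -> f32 {
    z / (1.0 + (-z).exp())
}

/// SwiGLU MLP: down(silu(gate(x)) * up(x)); gate/up map hidden -> inter, down maps inter -> hidden.
fn swiglu_mlp(
    x: &[f32],
    w_gate: &[f32],
    w_up: &[f32],
    w_down: &[f32],
    hidden: usize,
    inter: usize,
) -> Vec<f32> {
    let gate = matvec(w_gate, inter, hidden, x);
    let up = matvec(w_up, inter, hidden, x);
    // Element-wise gating: silu(gate) * up.
    let act: Vec<f32> = gate.iter().zip(&up).map(|(g, u)| silu(*g) * u).collect();
    matvec(w_down, hidden, inter, &act)
}
```

A fused MLP kernel computes the same expression; fusing mainly avoids writing the intermediate gate and up vectors back to global memory between separate launches.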

What's not (yet):

- Batching, PagedAttention, streaming (SSE)

- FlashAttention-level kernel optimization

- Multi-GPU / tensor parallelism

- Quantization (INT8/INT4)

Requirements:

- Rust (2024 edition)

- CUDA Toolkit (nvcc, cuBLAS)

- A CUDA-capable GPU

- Qwen3-4B model weights in models/Qwen3-4B/

```bash
export CUDA_HOME=/usr/local/cuda
export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

# Build (compiles CUDA kernels via build.rs)
cargo build --release

# Run inference server on port 8000
cargo run --release

# Run tests
cargo test --release
```

```bash
# Using huggingface-cli
huggingface-cli download Qwen/Qwen3-4B --local-dir models/Qwen3-4B
```

OpenAI-compatible completions endpoint:

```bash
curl http://localhost:8000/v1/completions \
  -H "Content-Type: application/json" \
  -d '{"prompt": "The capital of France is", "max_tokens": 32}'
```

```
Tokenize → Embedding → 28× TransformerBlock → RMSNorm → LM Head → Argmax
                            │
                            ├── RMSNorm → Fused GQA Attention → Residual
                            └── RMSNorm → Fused SwiGLU MLP → Residual
```
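The diagram describes a pre-norm residual layout: each block normalizes its input, runs attention or the MLP, and adds the result back, and the last hidden state goes through a final RMSNorm, the LM head, and a greedy argmax. A minimal sketch of that data flow, assuming each sub-layer can be modeled as a closure over one token's hidden vector (`transformer_block`, `argmax`, and `next_token` are hypothetical names; the real layers also read and write the KV cache and need the token position):

```rust
/// Element-wise residual add.
fn add(a: &[f32], b: &[f32]) -> Vec<f32> {
    a.iter().zip(b).map(|(x, y)| x + y).collect()
}

/// One pre-norm block, as in the diagram:
///   h   = x + attn(attn_norm(x))
///   out = h + mlp(mlp_norm(h))
fn transformer_block(
    x: &[f32],
    attn_norm: &dyn Fn(&[f32]) -> Vec<f32>,
    attn: &dyn Fn(&[f32]) -> Vec<f32>,
    mlp_norm: &dyn Fn(&[f32]) -> Vec<f32>,
    mlp: &dyn Fn(&[f32]) -> Vec<f32>,
) -> Vec<f32> {
    let h = add(x, &attn(&attn_norm(x)));
    add(&h, &mlp(&mlp_norm(&h)))
}

/// Greedy decoding: index of the largest logit (the first one wins on ties).
fn argmax(logits: &[f32]) -> usize {
    let mut best = 0;
    for (i, &v) in logits.iter().enumerate() {
        if v > logits[best] {
            best = i;
        }
    }
    best
}

/// Embedding -> every layer in order -> final RMSNorm -> LM head -> argmax.
fn next_token(
    embedding: Vec<f32>,
    layers: &[&dyn Fn(&[f32]) -> Vec<f32>],
    final_norm: &dyn Fn(&[f32]) -> Vec<f32>,
    lm_head: &dyn Fn(&[f32]) -> Vec<f32>,
) -> usize {
    let h = layers.iter().fold(embedding, |h, layer| layer(&h));
    argmax(&lm_head(&final_norm(&h)))
}
```

Because the argmax is deterministic, this decoding path always produces the same completion for the same prompt.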

```
src/
├── main.rs            # HTTP server (axum)
├── model.rs           # Qwen3Model, Attention, MLP, TransformerBlock
├── tensor.rs          # DeviceVec, DeviceMatrix — GPU tensor types
├── ops.rs             # GPU operators (linear, rms_norm, rope, fused_mlp, fused_attention)
├── kv_cache.rs        # KV cache for autoregressive generation
├── weight_loader.rs   # Safetensors loading + RoPE precomputation
├── ffi.rs             # FFI bindings to CUDA kernels
├── qwen3_config.rs    # Model config parsing
├── tokenizer.rs       # HuggingFace tokenizers wrapper
└── trace_reporter.rs  # Chrome Trace JSON profiling output
```
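weight_loader.rs precomputes RoPE tables once at load time so the rope operator only has to look up cos/sin values. The sketch below shows a common construction, assuming the standard inverse frequencies inv_freq_i = theta^(-2i / head_dim) and the rotate-half pairing used by HuggingFace Qwen checkpoints (element i rotates together with element i + head_dim/2). `RopeTable` is a hypothetical name, and theta is left as a parameter; the actual value comes from rope_theta in the model's config.json.

```rust
/// Precomputed RoPE cos/sin tables, laid out as [position][head_dim / 2].
struct RopeTable {
    half: usize,
    cos: Vec<f32>,
    sin: Vec<f32>,
}

impl RopeTable {
    /// angle(pos, i) = pos * theta^(-2i / head_dim), for i in 0..head_dim/2.
    fn new(head_dim: usize, max_pos: usize, theta: f32) -> Self {
        assert!(head_dim % 2 == 0, "head_dim must be even");
        let half = head_dim / 2;
        let mut cos = Vec::with_capacity(max_pos * half);
        let mut sin = Vec::with_capacity(max_pos * half);
        for pos in 0..max_pos {
            for i in 0..half {
                let exponent = -((2 * i) as f32) / head_dim as f32;
                let angle = pos as f32 * theta.powf(exponent);
                cos.push(angle.cos());
                sin.push(angle.sin());
            }
        }
        RopeTable { half, cos, sin }
    }

    /// Rotate one head vector in place at position `pos`, pairing element i with
    /// element i + head_dim / 2 (the assumed rotate-half layout).
    fn apply(&self, x: &mut [f32], pos: usize) {
        let h = self.half;
        assert_eq!(x.len(), 2 * h);
        for i in 0..h {
            let (c, s) = (self.cos[pos * h + i], self.sin[pos * h + i]);
            let (a, b) = (x[i], x[i + h]);
            x[i] = a * c - b * s;
            x[i + h] = b * c + a * s;
        }
    }
}
```

Each pair is rotated by a 2-D rotation matrix, so position 0 leaves the vector unchanged and every position preserves its length; only the relative angle between query and key positions survives in their dot product.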

```
csrc/
├── kernels.cu          # RMSNorm, RoPE, SiLU, embedding, GEMV, fused MLP, sampling
├── fused_attention.cu  # Fused GQA attention with tiled online softmax
└── common.cuh          # Shared CUDA utilities
```
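fused_attention.cu combines the KV cache, GQA, and tiled online softmax in one kernel. The Rust sketch below reproduces the same arithmetic on the CPU for a single decode step, which makes it easy to compare against a direct softmax. Assumptions: the cache layout [position][kv_head][head_dim], contiguous query-head grouping (query head h reads KV head h / (n_heads / n_kv_heads)), and the names `LayerKvCache` and `attend` are all hypothetical; only TILE_SIZE=64 comes from the README.

```rust
/// Tile width along the sequence axis (the README's TILE_SIZE=64).
const TILE_SIZE: usize = 64;

/// KV cache for one layer, laid out as [position][kv_head][head_dim].
struct LayerKvCache {
    n_kv_heads: usize,
    head_dim: usize,
    k: Vec<f32>,
    v: Vec<f32>,
}

impl LayerKvCache {
    fn new(n_kv_heads: usize, head_dim: usize) -> Self {
        LayerKvCache { n_kv_heads, head_dim, k: Vec::new(), v: Vec::new() }
    }

    /// Number of cached positions.
    fn len(&self) -> usize {
        self.k.len() / (self.n_kv_heads * self.head_dim)
    }

    /// Append the K and V vectors produced for the current token.
    fn append(&mut self, k: &[f32], v: &[f32]) {
        assert_eq!(k.len(), self.n_kv_heads * self.head_dim);
        assert_eq!(v.len(), k.len());
        self.k.extend_from_slice(k);
        self.v.extend_from_slice(v);
    }

    fn key(&self, pos: usize, kv_head: usize) -> &[f32] {
        let off = (pos * self.n_kv_heads + kv_head) * self.head_dim;
        &self.k[off..off + self.head_dim]
    }

    fn value(&self, pos: usize, kv_head: usize) -> &[f32] {
        let off = (pos * self.n_kv_heads + kv_head) * self.head_dim;
        &self.v[off..off + self.head_dim]
    }
}

fn dot(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// Decode-step GQA attention for one query token over all cached positions,
/// visiting K/V one tile at a time with an online (streaming) softmax.
fn attend(q: &[f32], n_heads: usize, cache: &LayerKvCache) -> Vec<f32> {
    let d = cache.head_dim;
    let seq = cache.len();
    assert!(seq > 0 && n_heads % cache.n_kv_heads == 0);
    assert_eq!(q.len(), n_heads * d);
    let group = n_heads / cache.n_kv_heads; // query heads sharing one KV head
    let scale = 1.0 / (d as f32).sqrt();
    let mut out = vec![0.0f32; n_heads * d];
    for h in 0..n_heads {
        let kv_head = h / group;
        let qh = &q[h * d..(h + 1) * d];
        let mut running_max = f32::NEG_INFINITY;
        let mut denom = 0.0f32; // running sum of exp(score - running_max)
        let mut acc = vec![0.0f32; d]; // running sum of exp(score - running_max) * V
        for start in (0..seq).step_by(TILE_SIZE) {
            let end = (start + TILE_SIZE).min(seq);
            let scores: Vec<f32> = (start..end)
                .map(|p| dot(qh, cache.key(p, kv_head)) * scale)
                .collect();
            let new_max = scores.iter().copied().fold(running_max, f32::max);
            // Rescale sums built under the old max (exp(-inf) = 0 on the first tile).
            let correction = (running_max - new_max).exp();
            denom *= correction;
            acc.iter_mut().for_each(|a| *a *= correction);
            for (i, s) in scores.iter().enumerate() {
                let p = (s - new_max).exp();
                denom += p;
                for (a, v) in acc.iter_mut().zip(cache.value(start + i, kv_head)) {
                    *a += p * v;
                }
            }
            running_max = new_max;
        }
        for (o, a) in out[h * d..(h + 1) * d].iter_mut().zip(&acc) {
            *o = a / denom;
        }
    }
    out
}
```

Each tile updates three running values (the max score, the softmax denominator, and the weighted sum of V) and rescales the earlier partial sums by exp(old_max - new_max) whenever the max grows. That is what allows a kernel to stream K/V tiles through fast on-chip memory without materializing the full score vector.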

- All computation on GPU — no CPU fallback, no hybrid execution

- Custom CUDA kernels for everything except matrix multiplication (cuBLAS)

- Fused operators — attention and MLP are each a single kernel launch

- BF16 storage, FP32 accumulation — numerical stability without memory overhead

- Synchronous execution — simple and debuggable, no overlap optimization yet
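BF16 keeps FP32's 8-bit exponent but only 7 explicit mantissa bits, so weights take half the memory of FP32 with the same range. The cost is precision, which is why sums are kept in FP32. A CPU sketch of that split, assuming round-to-nearest-even when converting (the helper names are hypothetical, and the real kernels may load or round differently):

```rust
/// Round an f32 to bfloat16 with round-to-nearest-even; returns the raw 16 bits.
fn f32_to_bf16(x: f32) -> u16 {
    if x.is_nan() {
        return 0x7FC0; // a quiet NaN
    }
    let bits = x.to_bits();
    let round_bias = 0x7FFF + ((bits >> 16) & 1); // ties round to the even result
    ((bits + round_bias) >> 16) as u16
}

/// Widening BF16 -> f32 is exact: the 16 bits become the top half of an f32.
fn bf16_to_f32(b: u16) -> f32 {
    f32::from_bits((b as u32) << 16)
}

/// y = W x with W stored as BF16 (row-major) and every dot product accumulated in f32.
fn gemv_bf16(w: &[u16], rows: usize, cols: usize, x: &[f32]) -> Vec<f32> {
    assert_eq!(w.len(), rows * cols);
    assert_eq!(x.len(), cols);
    (0..rows)
        .map(|r| {
            let mut acc = 0.0f32; // FP32 accumulator
            for c in 0..cols {
                acc += bf16_to_f32(w[r * cols + c]) * x[c];
            }
            acc
        })
        .collect()
}
```

For example, 257.0 rounds to 256.0 in BF16, so a BF16 running sum of ones would get stuck at 256, while the FP32 accumulator in `gemv_bf16` reaches 1000 exactly for a row of 1000 ones.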

Measured on RTX 5070 Ti, Qwen3-4B, BF16:

| Metric | Value |
|---|---|
| Time to first token, TTFT (prompt_len=4) | ~17 ms |
| Time per output token, TPOT | ~14 ms/token |
| Throughput | ~70 tokens/sec |
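The rows of the table are related by simple arithmetic. Assuming TTFT covers the prefill plus the first generated token and every later token costs one TPOT, the end-to-end time for N tokens is TTFT + (N - 1) × TPOT, and steady-state decode throughput is 1000 / TPOT. A small helper with hypothetical names:

```rust
/// Estimated end-to-end latency (ms) to generate `n_tokens`, assuming the first
/// token costs TTFT (prefill included) and each later token costs one TPOT.
fn generation_latency_ms(ttft_ms: f64, tpot_ms: f64, n_tokens: u32) -> f64 {
    if n_tokens == 0 {
        return 0.0;
    }
    ttft_ms + (n_tokens - 1) as f64 * tpot_ms
}

/// Steady-state decode throughput implied by a per-token latency.
fn decode_tokens_per_sec(tpot_ms: f64) -> f64 {
    1000.0 / tpot_ms
}
```

With the measured values, a 32-token completion (the max_tokens used in the curl example) works out to 17 + 31 × 14 = 451 ms, and 1000 / 14 ≈ 71 tokens/sec, consistent with the ~70 tokens/sec row.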

Profiling traces are written to traces/ in Chrome Trace JSON format — open with Perfetto UI.
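The Chrome Trace Event Format that Perfetto UI reads is plain JSON: a traceEvents array whose "complete" events (ph = "X") carry a name, a start timestamp ts, and a duration dur, both in microseconds. A dependency-free sketch of writing that format, assuming a single process (pid 1); `TraceEvent`, `escape_json`, and `to_chrome_trace` are hypothetical and are not the API of pegainfer's trace_reporter.rs:

```rust
/// One span ("complete" event, phase "X") in the Chrome Trace Event Format.
struct TraceEvent {
    name: String,
    ts_us: f64,  // start time in microseconds
    dur_us: f64, // duration in microseconds
    tid: u32,
}

/// Minimal JSON string escaping for event names.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Serialize events as {"traceEvents":[...]} (finite timestamps assumed).
fn to_chrome_trace(events: &[TraceEvent]) -> String {
    let items: Vec<String> = events
        .iter()
        .map(|e| {
            format!(
                r#"{{"name":"{}","ph":"X","ts":{},"dur":{},"pid":1,"tid":{}}}"#,
                escape_json(&e.name),
                e.ts_us,
                e.dur_us,
                e.tid
            )
        })
        .collect();
    format!(r#"{{"traceEvents":[{}]}}"#, items.join(","))
}
```

Writing the resulting string to a .json file and opening it in Perfetto UI shows one bar per event on the timeline for its thread id.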

License: MIT


Alternative AI tools for pegainfer

Similar Open Source Tools


pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

datatune

Datatune is a data analysis tool designed to help users explore and analyze datasets efficiently. It provides a user-friendly interface for importing, cleaning, visualizing, and modeling data. With Datatune, users can easily perform tasks such as data preprocessing, feature engineering, model selection, and evaluation. The tool offers a variety of statistical and machine learning algorithms to support data analysis tasks. Whether you are a data scientist, analyst, or researcher, Datatune can streamline your data analysis workflow and help you derive valuable insights from your data.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

ROGRAG

ROGRAG is a powerful open-source tool designed for data analysis and visualization. It provides a user-friendly interface for exploring and manipulating datasets, making it ideal for researchers, data scientists, and analysts. With ROGRAG, users can easily import, clean, analyze, and visualize data to gain valuable insights and make informed decisions. The tool supports a wide range of data formats and offers a variety of statistical and visualization tools to help users uncover patterns, trends, and relationships in their data. Whether you are working on exploratory data analysis, statistical modeling, or data visualization, ROGRAG is a versatile tool that can streamline your workflow and enhance your data analysis capabilities.

arconia

Arconia is a powerful open-source tool for managing and visualizing data in a user-friendly way. It provides a seamless experience for data analysts and scientists to explore, clean, and analyze datasets efficiently. With its intuitive interface and robust features, Arconia simplifies the process of data manipulation and visualization, making it an essential tool for anyone working with data.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

NadirClaw

NadirClaw is a powerful open-source tool designed for web scraping and data extraction. It provides a user-friendly interface for extracting data from websites with ease. With NadirClaw, users can easily scrape text, images, and other content from web pages for various purposes such as data analysis, research, and automation. The tool offers flexibility and customization options to cater to different scraping needs, making it a versatile solution for extracting data from the web. Whether you are a data scientist, researcher, or developer, NadirClaw can streamline your data extraction process and help you gather valuable insights from online sources.

LLM-Project

LLM-Project is a machine learning model for sentiment analysis. It is designed to analyze text data and classify it into positive, negative, or neutral sentiments. The model uses natural language processing techniques to extract features from the text and train a classifier to make predictions. LLM-Project is suitable for researchers, developers, and data scientists who are working on sentiment analysis tasks. It provides a pre-trained model that can be easily integrated into existing projects or used for experimentation and research purposes. The codebase is well-documented and easy to understand, making it accessible to users with varying levels of expertise in machine learning and natural language processing.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

upgini

Upgini is an intelligent data search engine with a Python library that helps users find and add relevant features to their ML pipeline from various public, community, and premium external data sources. It automates the optimization of connected data sources by generating an optimal set of machine learning features using large language models, GraphNNs, and recurrent neural networks. The tool aims to simplify feature search and enrichment for external data to make it a standard approach in machine learning pipelines. It democratizes access to data sources for the data science community.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

Daft

Daft is a lightweight and efficient tool for data analysis and visualization. It provides a user-friendly interface for exploring and manipulating datasets, making it ideal for both beginners and experienced data analysts. With Daft, you can easily import data from various sources, clean and preprocess it, perform statistical analysis, create insightful visualizations, and export your results in multiple formats. Whether you are a student, researcher, or business professional, Daft simplifies the process of analyzing data and deriving meaningful insights.

atlas

Atlas is a powerful data visualization tool that allows users to create interactive charts and graphs from their datasets. It provides a user-friendly interface for exploring and analyzing data, making it ideal for both beginners and experienced data analysts. With Atlas, users can easily customize the appearance of their visualizations, add filters and drill-down capabilities, and share their insights with others. The tool supports a wide range of data formats and offers various chart types to suit different data visualization needs. Whether you are looking to create simple bar charts or complex interactive dashboards, Atlas has you covered.

For similar tasks

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.


Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

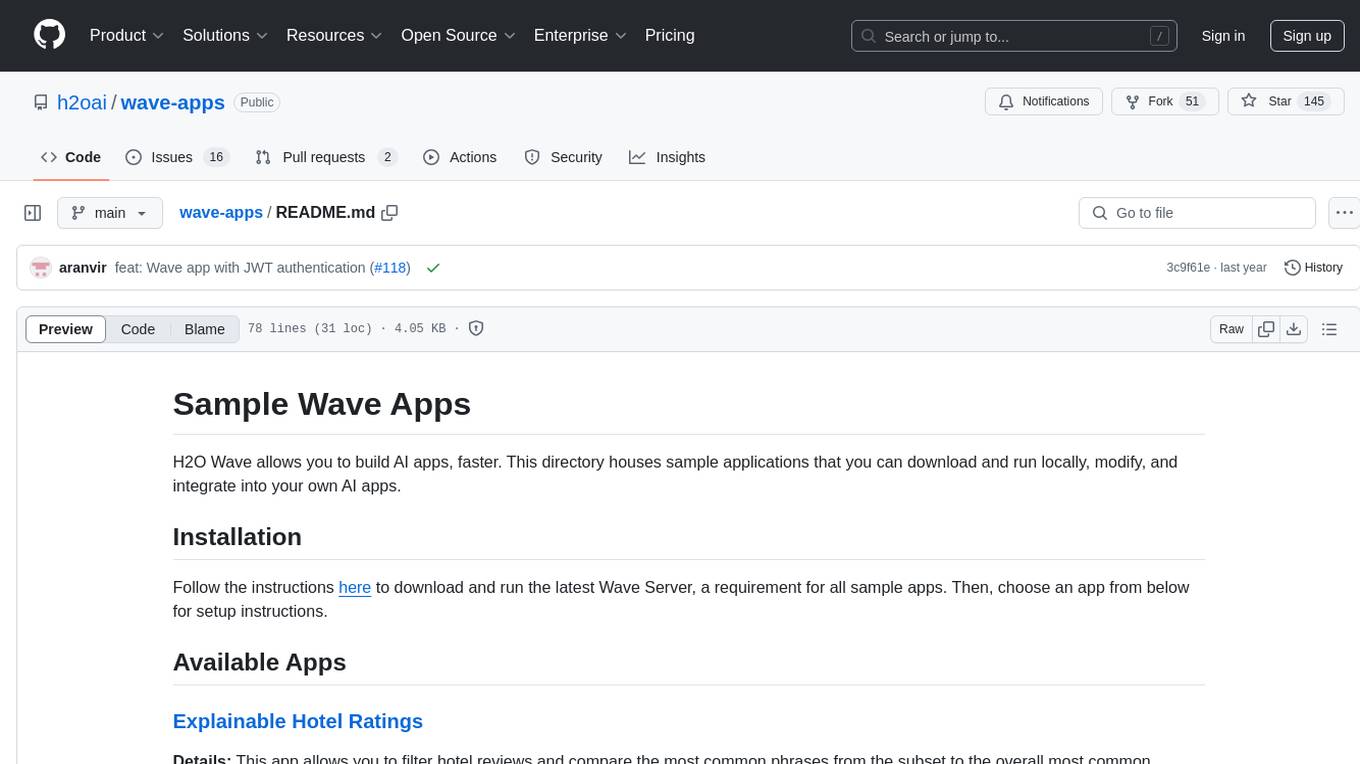

wave-apps

Wave Apps is a directory of sample applications built on H2O Wave, allowing users to build AI apps faster. The apps cover various use cases such as explainable hotel ratings, human-in-the-loop credit risk assessment, mitigating churn risk, online shopping recommendations, and sales forecasting EDA. Users can download, modify, and integrate these sample apps into their own projects to learn about app development and AI model deployment.

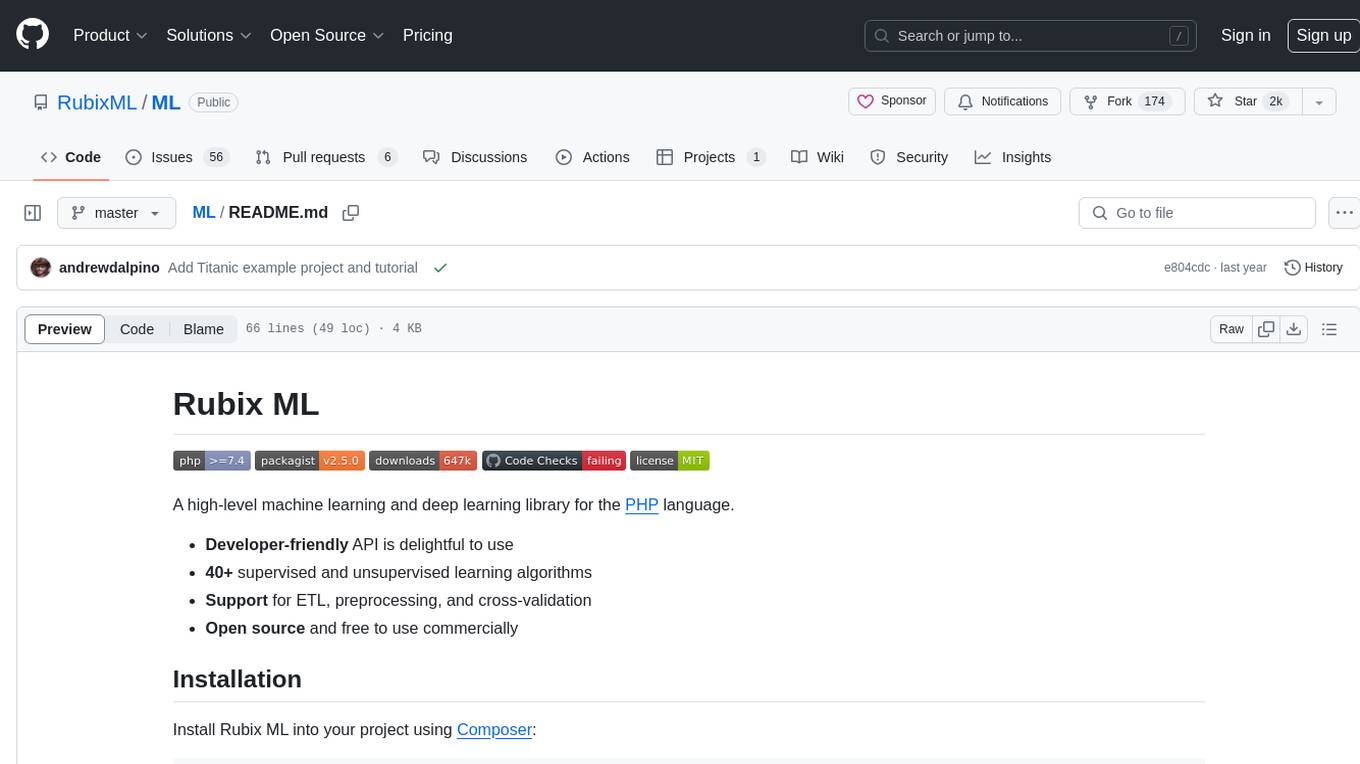

ML

Rubix ML is a high-level machine learning and deep learning library for the PHP language. It provides a developer-friendly API with over 40 supervised and unsupervised learning algorithms, support for ETL, preprocessing, and cross-validation. The library is open source and free to use commercially. Rubix ML allows users to build machine learning programs in PHP, covering the entire machine learning life cycle from data processing to training and production. It also offers tutorials and educational content to help users get started with machine learning projects.

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including semantic search, image search, product recommendations, chatbots, and anomaly detection. Its features include payload storage and filtering, hybrid search with sparse vectors, vector quantization and on-disk storage, and distributed deployment, along with query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging. Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

For similar jobs

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!)

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

vanna

Vanna is an open-source Python framework for SQL generation and related functionality. It uses Retrieval-Augmented Generation (RAG) to train a model on your data, which can then be used to ask questions and get back SQL queries. Vanna is designed to be portable across different LLMs and vector databases, and it supports any SQL database. It is also secure and private, as your database contents are never sent to the LLM or the vector database.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large language models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well as using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide