holmesgpt

Your 24/7 On-Call AI Agent - Solve Alerts Faster with Automatic Correlations, Investigations, and More

Stars: 1844

HolmesGPT is an AI agent designed for troubleshooting and investigating issues in cloud environments. It utilizes AI models to analyze data from various sources, identify root causes, and provide remediation suggestions. The tool offers integrations with popular cloud providers, observability tools, and on-call systems, enabling users to streamline the troubleshooting process. HolmesGPT can automate the investigation of alerts and tickets from external systems, providing insights back to the source or communication platforms like Slack. It supports end-to-end automation and offers a CLI for interacting with the AI agent. Users can customize HolmesGPT by adding custom data sources and runbooks to enhance investigation capabilities. The tool prioritizes data privacy, ensuring read-only access and respecting RBAC permissions. HolmesGPT is a CNCF Sandbox Project and is distributed under the Apache 2.0 License.

README:

HolmesGPT is an AI agent for investigating problems in your cloud, finding the root cause, and suggesting remediations. It has dozens of built-in integrations for cloud providers, observability tools, and on-call systems.

🎉 HolmesGPT is now a CNCF Sandbox Project!

HolmesGPT was originally created by Robusta.Dev and is a CNCF sandbox project.

Find more about HolmesGPT's maintainers and adopters here.

📚 Read the full documentation at holmesgpt.dev for installation guides, tutorials, API reference, and more.

How it Works | Installation | LLM Providers | YouTube Demo

HolmesGPT connects AI models with live observability data and organizational knowledge. It uses an agentic loop to analyze data from multiple sources and identify possible root causes.
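The agentic loop described above can be sketched roughly as follows. This is an illustrative outline only, not HolmesGPT's actual implementation: the tool names (`pod_status`, `pod_logs`), their canned outputs, and the `stub_model` function standing in for an LLM call are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: names and outputs are illustrative, not real HolmesGPT toolsets.
Tool = Callable[[str], str]


def fake_pod_status(target: str) -> str:
    return f"pod {target}: CrashLoopBackOff, restarts=12"


def fake_pod_logs(target: str) -> str:
    return f"pod {target}: OOMKilled, memory limit 128Mi exceeded"


TOOLS: Dict[str, Tool] = {"pod_status": fake_pod_status, "pod_logs": fake_pod_logs}


def stub_model(question: str, evidence: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM call: returns ("call", tool_name) or ("answer", text)."""
    if not evidence:
        return ("call", "pod_status")
    if len(evidence) == 1 and "CrashLoopBackOff" in evidence[0]:
        return ("call", "pod_logs")
    if any("OOMKilled" in item for item in evidence):
        return ("answer", "Likely root cause: container exceeds its memory limit (OOMKilled).")
    return ("answer", "No root cause identified from the available data.")


def investigate(question: str, target: str, max_steps: int = 5) -> Tuple[str, List[str]]:
    """Alternate between asking the model what to do and running the tool it picks."""
    evidence: List[str] = []
    for _ in range(max_steps):
        action, value = stub_model(question, evidence)
        if action == "answer":
            return value, evidence
        # The model asked for more data: run the tool and feed the result back.
        evidence.append(TOOLS[value](target))
    return "Step budget exhausted before reaching a conclusion.", evidence
```

Calling `investigate("why is the pod unhealthy?", "checkout-7d9f")` runs both stub tools before the stub model settles on an answer. The `max_steps` cap is a design choice of this sketch, so that a model that keeps requesting data cannot loop forever.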

HolmesGPT integrates with popular observability and cloud platforms. The following data sources ("toolsets") are built-in. Add your own.

| Data Source | Status | Notes |
|---|---|---|
| | ✅ | Get status, history and manifests and more of apps, projects and clusters |
| | ✅ | Fetch events, instances, slow query logs and more |
| | ✅ | Private runbooks and documentation |
| | ✅ | Retrieve logs for any resource |
| | ✅ | Date and time-related operations |
| | ✅ | Get images, logs, events, history and more |
| | 🟡 Beta | Remediate alerts by opening pull requests with fixes |
| | 🟡 Beta | Fetches log data from datadog |
| | ✅ | Query logs for Kubernetes resources or any query |
| | ✅ | Fetch trace info, debug issues like high latency in application. |
| | ✅ | Release status, chart metadata, and values |
| | ✅ | Public runbooks, community docs etc |
| | ✅ | Fetch metadata, list consumers and topics or find lagging consumer groups |
| | ✅ | Pod logs, K8s events, and resource status (kubectl describe) |
| | 🟡 Beta | Investigate alerts, query tracing data |
| | ✅ | Query health, shard, and settings related info of one or more clusters |
| | ✅ | Investigate alerts, query metrics and generate PromQL queries |
| | ✅ | Info about partitions, memory/disk alerts to troubleshoot split-brain scenarios and more |
| | ✅ | Multi-cluster monitoring, historical change data, user-configured runbooks, PromQL graphs and more |
| | ✅ | Team knowledge base and runbooks on demand |

HolmesGPT can fetch alerts/tickets to investigate from external systems, then write the analysis back to the source or Slack.

| Integration | Status | Notes |
|---|---|---|
| Slack | ✅ | Demo. Available via Robusta.dev (commercial platform) |
| Microsoft Teams | ✅ | Available via Robusta.dev (commercial platform) |
| Prometheus/AlertManager | ✅ | Robusta SaaS or HolmesGPT CLI |
| PagerDuty | ✅ | HolmesGPT CLI only |
| OpsGenie | ✅ | HolmesGPT CLI only |
| Jira | ✅ | HolmesGPT CLI only |
| GitHub | ✅ | HolmesGPT CLI only |

Read the installation documentation to learn how to install HolmesGPT.

Read the LLM Providers documentation to learn how to set up your LLM API key.

- In the Robusta SaaS: Go to platform.robusta.dev and use Holmes from your browser

- With HolmesGPT CLI: set up an LLM API key and ask Holmes a question 👇

```bash
holmes ask "what pods are unhealthy and why?"
```

You can also provide files as context:

```bash
holmes ask "summarize the key points in this document" -f ./mydocument.txt
```

You can also load the prompt from a file using the `--prompt-file` option:

```bash
holmes ask --prompt-file ~/long-prompt.txt
```

Enter interactive mode to ask follow-up questions:

```bash
holmes ask "what pods are unhealthy and why?" --interactive
# or
holmes ask "what pods are unhealthy and why?" -i
```

Also supported:

HolmesGPT CLI: investigate Prometheus alerts

Pull alerts from AlertManager and investigate them with HolmesGPT:

```bash
holmes investigate alertmanager --alertmanager-url http://localhost:9093
# if on Mac OS and using the Holmes Docker image👇
# holmes investigate alertmanager --alertmanager-url http://docker.for.mac.localhost:9093
```

To investigate alerts in your browser, sign up for a free trial of Robusta SaaS.

Optional: port-forward to AlertManager before running the command above (if running Prometheus inside Kubernetes):

```bash
kubectl port-forward alertmanager-robusta-kube-prometheus-st-alertmanager-0 9093:9093 &
```

HolmesGPT CLI: investigate PagerDuty and OpsGenie alerts

```bash
holmes investigate opsgenie --opsgenie-api-key <OPSGENIE_API_KEY>
holmes investigate pagerduty --pagerduty-api-key <PAGERDUTY_API_KEY>
# to write the analysis back to the incident as a comment
holmes investigate pagerduty --pagerduty-api-key <PAGERDUTY_API_KEY> --update
```

For more details, run `holmes investigate <source> --help`.

HolmesGPT can investigate many issues out of the box, with no customization or training. Optionally, you can extend Holmes to improve results:

Custom Data Sources: Add data sources (toolsets) to improve investigations

- If using Robusta SaaS: See here

- If using the CLI: Use `-t` flag with custom toolset files or add to `~/.holmes/config.yaml`

Custom Runbooks: Give HolmesGPT instructions for known alerts:

- If using Robusta SaaS: Use the Robusta UI to add runbooks

- If using the CLI: Use `-r` flag with custom runbook files or add to `~/.holmes/config.yaml`

You can save common settings and API Keys in a config file to avoid passing them from the CLI each time:

Reading settings from a config file

You can save common settings and API keys in a config file for reuse. Place the config file at `~/.holmes/config.yaml` or pass it using the `--config` option.

You can view an example config file with all available settings here.

HolmesGPT supports transformers to process large tool outputs before sending them to your primary LLM. This feature helps manage context window limits while preserving essential information.

The most common transformer is llm_summarize, which uses a fast secondary model to summarize lengthy outputs from tools like kubectl describe, log queries, or metrics collection.

📖 Learn more: Tool Output Transformers Documentation
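The idea behind a summarizing transformer can be sketched as below. This is a minimal illustration under stated assumptions, not the `llm_summarize` implementation: the `max_chars` threshold, the function names, and the `keep_error_lines` stand-in for a secondary model are all hypothetical.

```python
from typing import Callable


def summarize_if_large(output: str, summarize: Callable[[str], str], max_chars: int = 2000) -> str:
    """Pass small tool outputs through unchanged; condense large ones first."""
    if len(output) <= max_chars:
        return output
    return summarize(output)


def keep_error_lines(text: str) -> str:
    """Stand-in for a fast secondary model: keeps only lines that look like problems."""
    lines = text.splitlines()
    flagged = [ln for ln in lines if "error" in ln.lower() or "warn" in ln.lower()]
    return f"[summarized {len(lines)} lines]\n" + "\n".join(flagged)


# A long, mostly uninteresting log with one relevant line at the end.
long_log = "\n".join(["INFO request served"] * 500 + ["ERROR out of memory"])
condensed = summarize_if_large(long_log, keep_error_lines)
```

Here the primary model would receive `condensed` in place of the full 501-line log, while short outputs are passed through untouched.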

By design, HolmesGPT has read-only access and respects RBAC permissions. It is safe to run in production environments.

We do not train HolmesGPT on your data. Data sent to Robusta SaaS is private to your account.

For extra privacy, bring an API key for your own AI model.

Because HolmesGPT relies on LLMs, it uses a suite of pytest-based evaluations to verify that the prompt and HolmesGPT's default set of tools work as expected with LLMs.

- Introduction to HolmesGPT's evals.

- Write your own evals.

- Use Braintrust to view and analyze results (optional).
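To give a feel for what a pytest-style evaluation of an LLM agent can look like, here is a small sketch. It does not reproduce HolmesGPT's eval harness: `EvalCase`, `keyword_score`, the keyword-matching metric, and the `stub_agent` function are hypothetical names chosen for illustration, and a real eval would call the actual agent instead of a stub.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalCase:
    question: str
    expected_keywords: List[str]


def keyword_score(answer: str, expected_keywords: List[str]) -> float:
    """Fraction of expected keywords found in the answer (case-insensitive)."""
    if not expected_keywords:
        return 1.0
    lowered = answer.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in lowered)
    return hits / len(expected_keywords)


def stub_agent(question: str) -> str:
    """Stand-in for the agent under test; returns a canned answer."""
    return "The pod is failing because it was OOMKilled; raise the memory limit."


CASES = [EvalCase("why is pod checkout crashing?", ["oomkilled", "memory limit"])]


def test_agent_mentions_expected_root_cause():
    # pytest collects functions named test_*; each case must clear a score threshold.
    for case in CASES:
        assert keyword_score(stub_agent(case.question), case.expected_keywords) >= 0.5
```

Scoring against a threshold rather than an exact string match is one way to tolerate the variation in wording that LLM answers typically show.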

Distributed under the Apache 2.0 License. See LICENSE for more information.

Join our community to discuss the HolmesGPT roadmap and share feedback:

If you have any questions, feel free to message us on HolmesGPT Slack Channel

Please read our CONTRIBUTING.md for guidelines and instructions.

For help, contact us on Slack or ask DeepWiki AI your questions.

Please make sure to follow the CNCF code of conduct - details here.

Alternative AI tools for holmesgpt

Similar Open Source Tools

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference and scalable model training/fine-tuning. Build, deploy and manage any AI application without the need for complex pipelines, infrastructure as well as specialized vector databases, and moving our data there, by integrating AI at your data's source:

- Generative AI, LLMs, RAG, vector search
- Standard machine learning use-cases (classification, segmentation, regression, forecasting recommendation etc.)
- Custom AI use-cases involving specialized models
- Even the most complex applications/workflows in which different models work together

SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

biochatter

Generative AI models have shown tremendous usefulness in increasing accessibility and automation of a wide range of tasks. This repository contains the `biochatter` Python package, a generic backend library for the connection of biomedical applications to conversational AI. It aims to provide a common framework for deploying, testing, and evaluating diverse models and auxiliary technologies in the biomedical domain. BioChatter is part of the BioCypher ecosystem, connecting natively to BioCypher knowledge graphs.

coreply

Coreply is an open-source Android app that provides texting suggestions while typing, enhancing the typing experience with intelligent, context-aware suggestions. It supports various texting apps and offers real-time AI suggestions, customizable LLM settings, and ensures no data collection. Users can install the app, configure it with an API key, and start receiving suggestions while typing in messaging apps. The tool supports different AI models from providers like OpenAI, Google AI Studio, Openrouter, Groq, and Codestral for chat completion and fill-in-the-middle tasks.

Open-Interface

Open Interface is a self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports MacOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 - $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

Starmoon

Starmoon is an affordable, compact AI-enabled device that can understand and respond to your emotions with empathy. It offers supportive conversations and personalized learning assistance. The device is cost-effective, voice-enabled, open-source, compact, and aims to reduce screen time. Users can assemble the device themselves using off-the-shelf components and deploy it locally for data privacy. Starmoon integrates various APIs for AI language models, speech-to-text, text-to-speech, and emotion intelligence. The hardware setup involves components like ESP32S3, microphone, amplifier, speaker, LED light, and button, along with software setup instructions for developers. The project also includes a web app, backend API, and background task dashboard for monitoring and management.

openrl

OpenRL is an open-source general reinforcement learning research framework that supports training for various tasks such as single-agent, multi-agent, offline RL, self-play, and natural language. Developed based on PyTorch, the goal of OpenRL is to provide a simple-to-use, flexible, efficient and sustainable platform for the reinforcement learning research community. It supports a universal interface for all tasks/environments, single-agent and multi-agent tasks, offline RL training with expert dataset, self-play training, reinforcement learning training for natural language tasks, DeepSpeed, Arena for evaluation, importing models and datasets from Hugging Face, user-defined environments, models, and datasets, gymnasium environments, callbacks, visualization tools, unit testing, and code coverage testing. It also supports various algorithms like PPO, DQN, SAC, and environments like Gymnasium, MuJoCo, Atari, and more.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

project-blog

Welcome to the Blog Script Project, a collaborative platform for developers and writers to create, manage, and share content. With features like Markdown support, submodule integration, customizable templates, project contribution workflow, global visibility, community discussions, SEO optimization, and role-based dashboard, Blog Script enhances collaboration and visibility for your work. You can contribute by adding new projects, improving existing projects, updating documentation, fixing bugs, optimizing, and ensuring code readability. Follow the contribution guidelines to star the repository, find tasks, fork the repository, make changes, add screenshots, submit a pull request, and contribute to the open-source community. Additionally, you can add your project as a submodule by following the provided guidelines. Join us, contribute, and grow together!

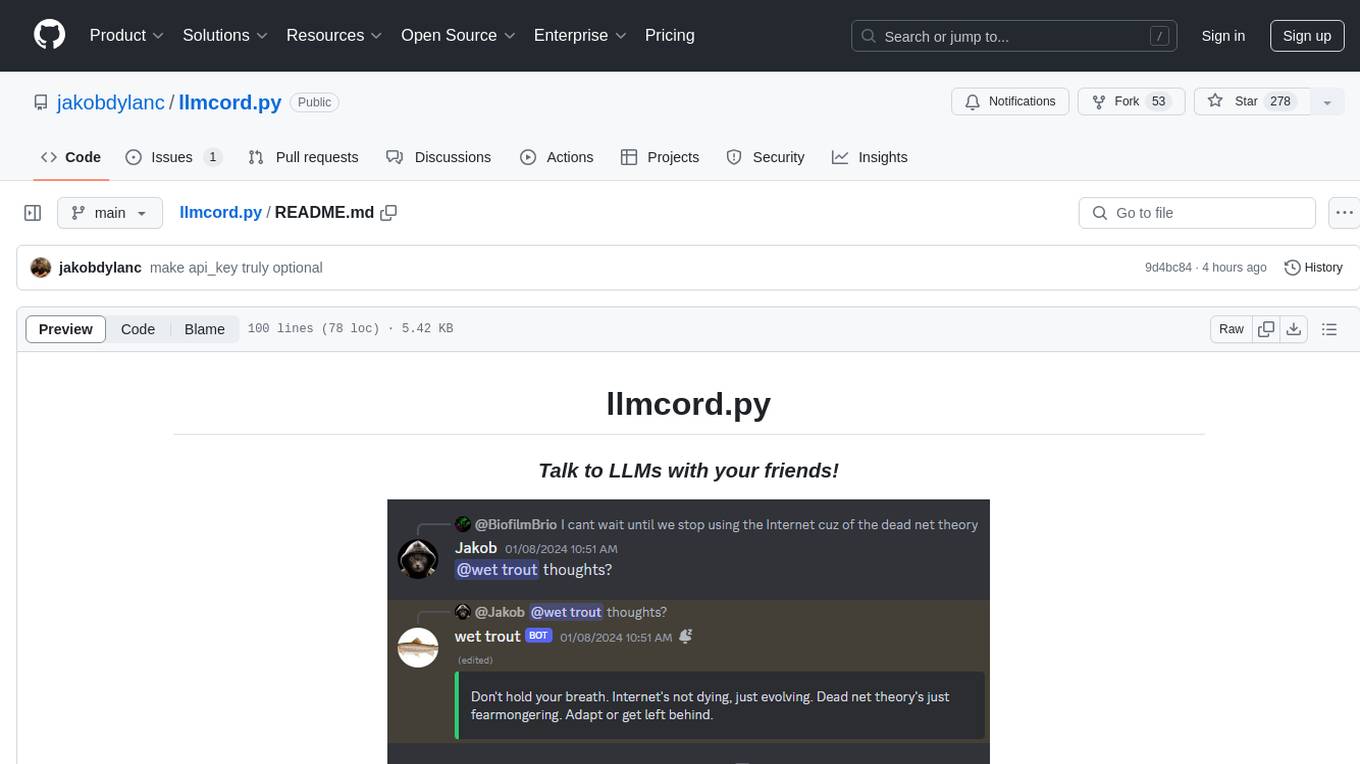

llmcord.py

llmcord.py is a tool that allows users to chat with Large Language Models (LLMs) directly in Discord. It supports various LLM providers, both remote and locally hosted, and offers features like a reply-based chat system, choosing any LLM, support for image and text file attachments, customizable system prompt, private access via DM, user identity awareness, streamed responses, warning messages, efficient message data caching, and asynchronous operation. The tool is designed to facilitate seamless conversations with LLMs and enhance user experience on Discord.

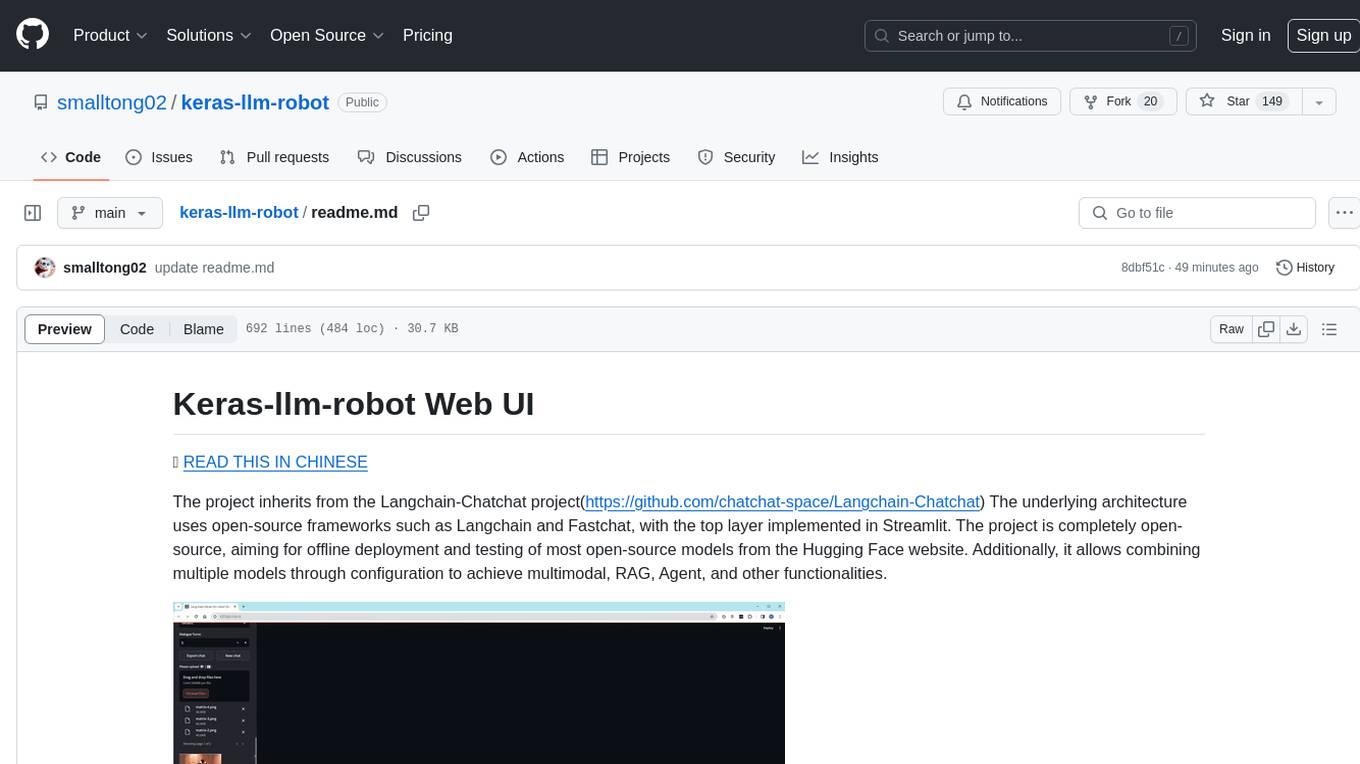

keras-llm-robot

The Keras-llm-robot Web UI project is an open-source tool designed for offline deployment and testing of various open-source models from the Hugging Face website. It allows users to combine multiple models through configuration to achieve functionalities like multimodal, RAG, Agent, and more. The project consists of three main interfaces: chat interface for language models, configuration interface for loading models, and tools & agent interface for auxiliary models. Users can interact with the language model through text, voice, and image inputs, and the tool supports features like model loading, quantization, fine-tuning, role-playing, code interpretation, speech recognition, image recognition, network search engine, and function calling.

OmAgent

OmAgent is an open-source agent framework designed to streamline the development of on-device multimodal agents. It enables agents to empower various hardware devices, integrates speed-optimized SOTA multimodal models, provides SOTA multimodal agent algorithms, and focuses on optimizing the end-to-end computing pipeline for real-time user interaction experience. Key features include easy connection to diverse devices, scalability, flexibility, and workflow orchestration. The architecture emphasizes graph-based workflow orchestration, native multimodality, and device-centricity, allowing developers to create bespoke intelligent agent programs.

deepchecks

Deepchecks is a holistic open-source solution for AI & ML validation needs, enabling thorough testing of data and models from research to production. It includes components for testing, CI & testing management, and monitoring. Users can install and use Deepchecks for testing and monitoring their AI models, with customizable checks and suites for tabular, NLP, and computer vision data. The tool provides visual reports, pythonic/json output for processing, and a dynamic UI for collaboration and monitoring. Deepchecks is open source, with premium features available under a commercial license for monitoring components.

For similar tasks

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

blind_chat

BlindChat is a confidential and verifiable Conversational AI tool that ensures user prompts remain private from the AI provider. It leverages privacy-enhancing technology called enclaves with the core solution, BlindLlama. BlindChat Local variant operates entirely in the user's browser, ensuring data never leaves the device. The tool provides cryptographic guarantees that user data is protected and not accessible to AI providers.

AskDB

AskDB is a revolutionary application that simplifies the way users interact with SQL databases. It allows users to query databases in plain English, provides instant answers, and offers AI-assisted query writing and database exploration. AskDB benefits business analysts, data scientists, managers, developers, and database administrators by making querying databases intuitive, effortless, and safe. It offers features like natural language querying, instant insight from data, multi-database connectivity, intelligent query suggestions, data privacy, and easy data export.

local-chat

LocalChat is a simple, easy-to-set-up, and open-source local AI chat tool that allows users to interact with generative language models on their own computers without transmitting data to a cloud server. It provides a chat-like interface for users to experience ChatGPT-like behavior locally, ensuring GDPR compliance and data privacy. Users can download LocalChat for macOS, Windows, or Linux to chat with open-weight generative language models.

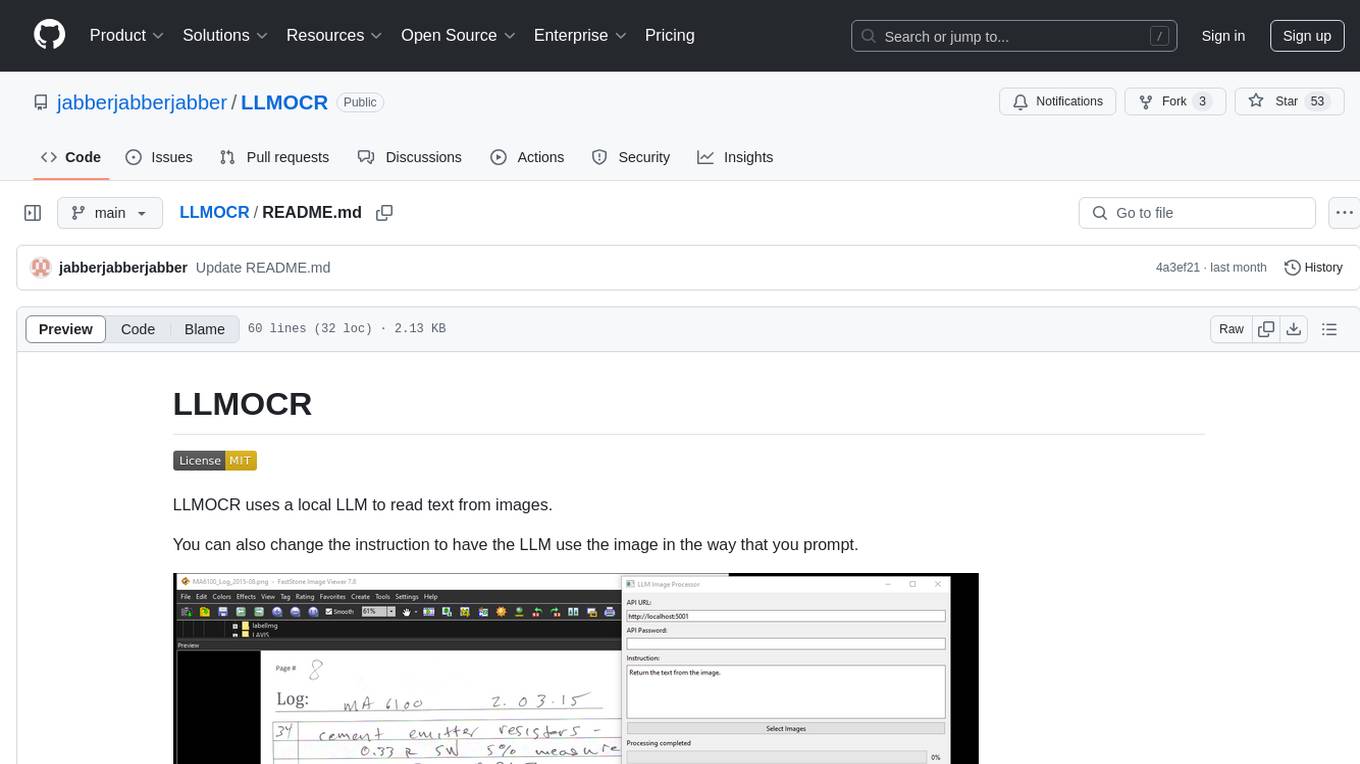

LLMOCR

LLMOCR is a tool that utilizes a local Large Language Model (LLM) to extract text from images. It offers a user-friendly GUI and supports GPU acceleration for faster inference. The tool is cross-platform, compatible with Windows, macOS ARM, and Linux. Users can prompt the LLM to process images in a customized way. The processing is done locally on the user's machine, ensuring data privacy and security. LLMOCR requires Python 3.8 or higher and KoboldCPP for installation and operation.

For similar jobs

AirGo

AirGo is a simple, easy-to-use, multi-user, multi-protocol proxy service management system with a separate front end and back end. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O
- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing
- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back
- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads
- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems
- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.