azure-ai-document-processing-samples

A collection of samples demonstrating techniques for processing documents with Azure AI including AI Studio, OpenAI, Document Intelligence, etc.

Stars: 60

This repository contains a collection of code samples that demonstrate how to use various Azure AI capabilities to process documents. The samples help engineering teams establish techniques with Azure AI Foundry, Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services to build solutions for extracting structured data, classifying, and analyzing documents. The techniques simplify custom model training, improve reliability in document processing, and simplify document processing workflows by providing reusable code and patterns that can be easily modified and evaluated for most use cases.

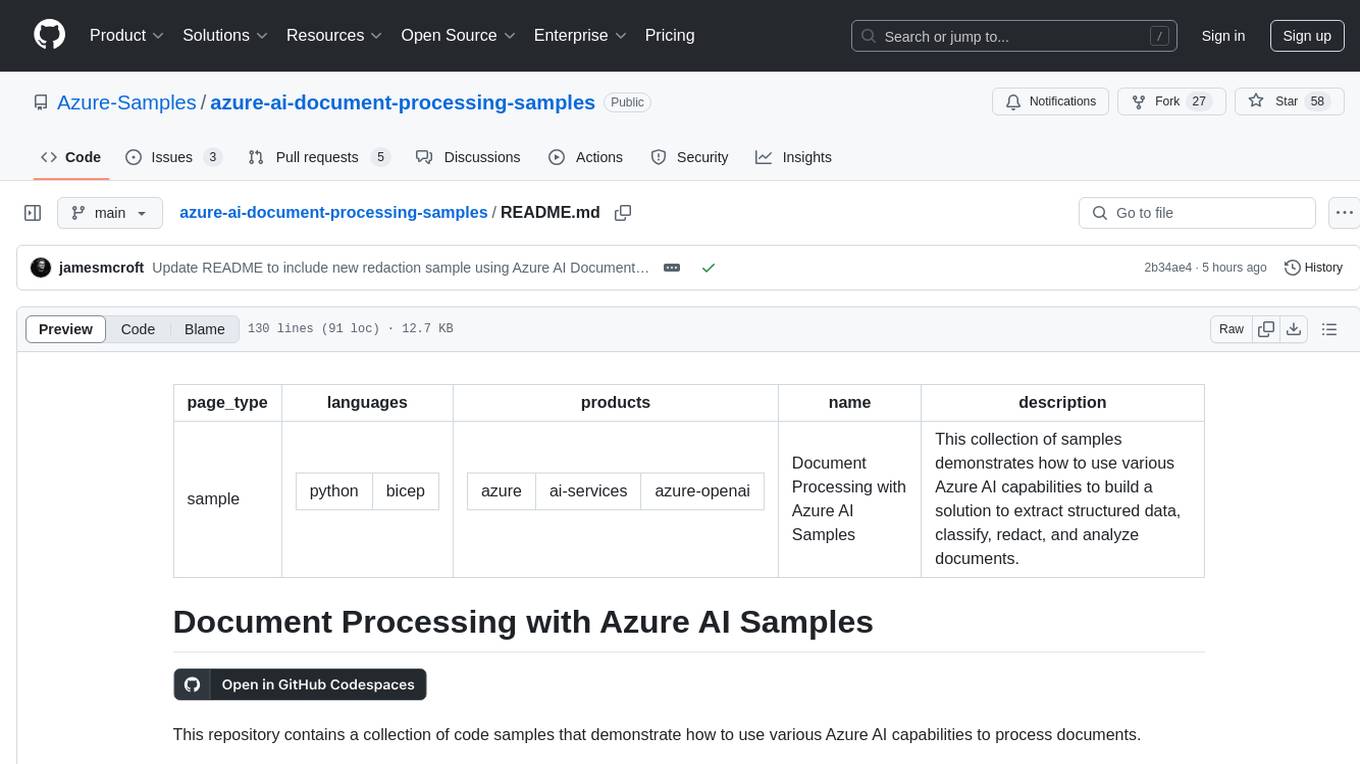

README:

page_type: sample languages:

- python

- bicep products:

- azure

- ai-services

- azure-openai name: Document Processing with Azure AI Samples description: This collection of samples demonstrates how to use various Azure AI capabilities to build a solution to extract structured data, classify, redact, and analyze documents.

This repository contains a collection of code samples that demonstrate how to use various Azure AI capabilities to process documents.

The samples are intended to help engineering teams establish techniques with Azure AI Foundry, Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services to build solutions to extract structured data, classify, and analyze documents.

The techniques demonstrated take advantage of various capabilities from each service to:

- Reduce complexity of custom model training by taking advantage of the capabilities of Generative AI models to analyze and classify documents.

- Improve reliability in document processing by utilizing combining AI service capbilities to extract structured data from any document type, with high accuracy and confidence.

- Simplify document processing workflows by providing reusable code and patterns that can be easily modified and evaluated for most use cases.

[!NOTE] All data extraction samples provide both an accuracy and confidence score for the extracted data. The accuracy score is calculated based on the similarity between the extracted data and the ground truth data. The confidence score is calculated based on OCR analysis confidence and

logprobsin Azure OpenAI requests.

| Sample | Description | Example Use Cases |

|---|---|---|

| Classification - Azure OpenAI GPT-4o with Vision | Demonstrates how to use Azure OpenAI GPT-4o and GPT-4o-mini models to classify documents using their built-in vision capabilities. | Processing multiple documents types or documents with varying purposes, such as contracts, legal documents, and emails. |

| Classification - Azure AI Document Intelligence + Embeddings | Demonstrates how to use Azure AI Document Intelligence pre-built layout and embeddings models to classify documents based on their content. | Processing multiple documents types or documents with varying purposes, such as contracts, legal documents, and emails. |

| Sample | Description | Example Use Cases |

|---|---|---|

| Redaction - Azure AI Document Intelligence + Azure OpenAI GPT-4o | Demonstrates how to use Azure AI Document Intelligence pre-built layout and Azure OpenAI GPT models to redact sensitive information from documents using natural language instruction to determine redaction areas. | Need for more complex redaction rules, such as redacting based on context or relationships. Also works well for redacting PII, including names, addresses, and phone numbers. |

| Redaction - Azure AI Language PII Native Document Analysis | Demonstrates how to use Azure AI Language Native Document Analysis features to redact personally identifiable information (PII) from documents. | Redacting sensitive information from documents, such as names, addresses, and phone numbers. |

| Sample | Description | Example Use Cases |

|---|---|---|

| Data Extraction - Azure AI Document Intelligence + Azure OpenAI GPT-4o | Demonstrates how to use Azure AI Document Intelligence pre-built layout and Azure OpenAI GPT models to extract structured data from documents. | Predominantly text-based documents such as invoices, receipts, and forms. |

| Data Extraction - Azure AI Document Intelligence + Phi | Demonstrates how to use Azure AI Document Intelligence pre-built layout and Microsoft's Phi small language models to extract structured data from documents. | Predominantly text-based documents such as invoices, receipts, and forms. |

| Data Extraction - Azure OpenAI GPT-4o with Vision | Demonstrates how to use Azure OpenAI GPT-4o and GPT-4o-mini models to extract structured data from documents using their built-in vision capabilities. | Complex documents with a mix of text and images, including diagrams, signatures, selection marks, etc. such as reports and contracts. |

| Data Extraction - Comprehensive Azure AI Document Intelligence + Azure OpenAI GPT-4o with Vision | Demonstrates how to improve the accuracy and confidence in extracting structured data from documents by combining Azure AI Document Intelligence and Azure OpenAI GPT-4o models with vision capabilities. | Any structured or unstructured document type. |

The sample repository comes with a Dev Container that contains all the necessary tools and dependencies to run the sample.

[!IMPORTANT] An Azure subscription is required to run these samples. If you don't have an Azure subscription, create an account.

To use the Dev Container in GitHub Codespaces, follow these steps:

- Click on the

Codebutton in the repository and selectCodespaces. - Click on the + button to create a new Codespace using the provided

.devcontainer\devcontainer.jsonconfiguration. - Once the Codespace is created, continue to the Azure environment setup section.

To use the Dev Container, you need to have the following tools installed on your local machine:

- Install Visual Studio Code

- Install Docker Desktop

- Install Remote - Containers extension for Visual Studio Code

To setup a local development environment, follow these steps:

[!IMPORTANT] Ensure that Docker Desktop is running on your local machine.

- Clone the repository to your local machine.

- Open the repository in Visual Studio Code.

- Press

F1to open the command palette and typeDev Containers: Reopen in Container.

Once the Dev Container is up and running, continue to the Azure environment setup section.

Once the Dev Container is up and running, you can setup the necessary Azure services and run the samples in the repository by running the following command in a pwsh terminal:

[!NOTE] For the most optimal sample experience, it is recommended to run the samples in

East USwhich will provide support for all the services used in the samples. Find out more about region availability for Azure AI Document Intelligence, andGPT-4o,Phi-4, andtext-embedding-3-largemodels.

az login

./Setup-Environment.ps1 -DeploymentName <UniqueDeploymentName> -Location <AzureRegion>[!NOTE] If a specific Azure tenant is required, use the

--tenant <TenantId>parameter in theaz logincommand.az login --tenant <TenantId>

[!TIP] If you want to preview the changes without deployment, you can add the

-WhatIfparameter to theSetup-Environment.ps1script../Setup-Environment.ps1 -DeploymentName <UniqueDeploymentName> -Location <AzureRegion> -WhatIf

The script will deploy the following resources to your Azure subscription:

-

Azure AI Foundry Hub & Project, a development platform for building AI solutions that integrates with Azure AI Services in a secure manner using Microsoft Entra ID for authentication.

- Note: Phi-4 MoE will be deployed as a PAYG serverless endpoint in the Azure AI Foundry Project with its primary key stored in the associated Azure Key Vault.

-

Azure AI Services, a managed service for all Azure AI Services, including Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services.

-

Note: GPT-4o and GPT-4o-mini will be deployed as Global Standard models with 10K TPM quota allocation.

text-embedding-3-largewill be deployed as a Standard model with 115K TPM quota allocation. These can be adjusted based on your quota availability in the main.bicep file.

-

Note: GPT-4o and GPT-4o-mini will be deployed as Global Standard models with 10K TPM quota allocation.

- Azure Storage Account, required by Azure AI Foundry.

- Azure Monitor, used to store logs and traces for monitoring and troubleshooting purposes.

- Azure Container Registry, used to store container images for the Azure AI Foundry environment.

[!NOTE] All resources are secured by default with Microsoft Entra ID using Azure RBAC. Your user client ID will be added with the necessary least-privilege roles to access the resources created. A user-assigned managed identity will also be deployed for the Azure AI Foundry environment.

After the script completes, you can run any of the samples in the repository by following their instructions.

You can contribute to the repository by opening an issue or submitting a pull request. For more information, see the Contributing guide.

This project is licensed under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for azure-ai-document-processing-samples

Similar Open Source Tools

azure-ai-document-processing-samples

This repository contains a collection of code samples that demonstrate how to use various Azure AI capabilities to process documents. The samples help engineering teams establish techniques with Azure AI Foundry, Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services to build solutions for extracting structured data, classifying, and analyzing documents. The techniques simplify custom model training, improve reliability in document processing, and simplify document processing workflows by providing reusable code and patterns that can be easily modified and evaluated for most use cases.

Conversation-Knowledge-Mining-Solution-Accelerator

The Conversation Knowledge Mining Solution Accelerator enables customers to leverage intelligence to uncover insights, relationships, and patterns from conversational data. It empowers users to gain valuable knowledge and drive targeted business impact by utilizing Azure AI Foundry, Azure OpenAI, Microsoft Fabric, and Azure Search for topic modeling, key phrase extraction, speech-to-text transcription, and interactive chat experiences.

get-started-with-ai-agents

The 'Getting Started with Agents Using Azure AI Foundry' repository provides a solution that deploys a web-based chat application with an AI agent running in Azure Container App. The agent leverages Azure AI services for knowledge retrieval from uploaded files, enabling it to generate responses with citations. The solution includes built-in monitoring capabilities for easier troubleshooting and optimized performance. Users can deploy AI models, customize the agent, and evaluate its performance. The repository offers flexible deployment options through GitHub Codespaces, VS Code Dev Containers, or local environments.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

nucliadb

NucliaDB is a robust database that allows storing and searching on unstructured data. It is an out of the box hybrid search database, utilizing vector, full text and graph indexes. NucliaDB is written in Rust and Python. We designed it to index large datasets and provide multi-teanant support. When utilizing NucliaDB with Nuclia cloud, you are able to the power of an NLP database without the hassle of data extraction, enrichment and inference. We do all the hard work for you.

HuggingFists

HuggingFists is a low-code data flow tool that enables convenient use of LLM and HuggingFace models. It provides functionalities similar to Langchain, allowing users to design, debug, and manage data processing workflows, create and schedule workflow jobs, manage resources environment, and handle various data artifact resources. The tool also offers account management for users, allowing centralized management of data source accounts and API accounts. Users can access Hugging Face models through the Inference API or locally deployed models, as well as datasets on Hugging Face. HuggingFists supports breakpoint debugging, branch selection, function calls, workflow variables, and more to assist users in developing complex data processing workflows.

LabelLLM

LabelLLM is an open-source data annotation platform designed to optimize the data annotation process for LLM development. It offers flexible configuration, multimodal data support, comprehensive task management, and AI-assisted annotation. Users can access a suite of annotation tools, enjoy a user-friendly experience, and enhance efficiency. The platform allows real-time monitoring of annotation progress and quality control, ensuring data integrity and timeliness.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

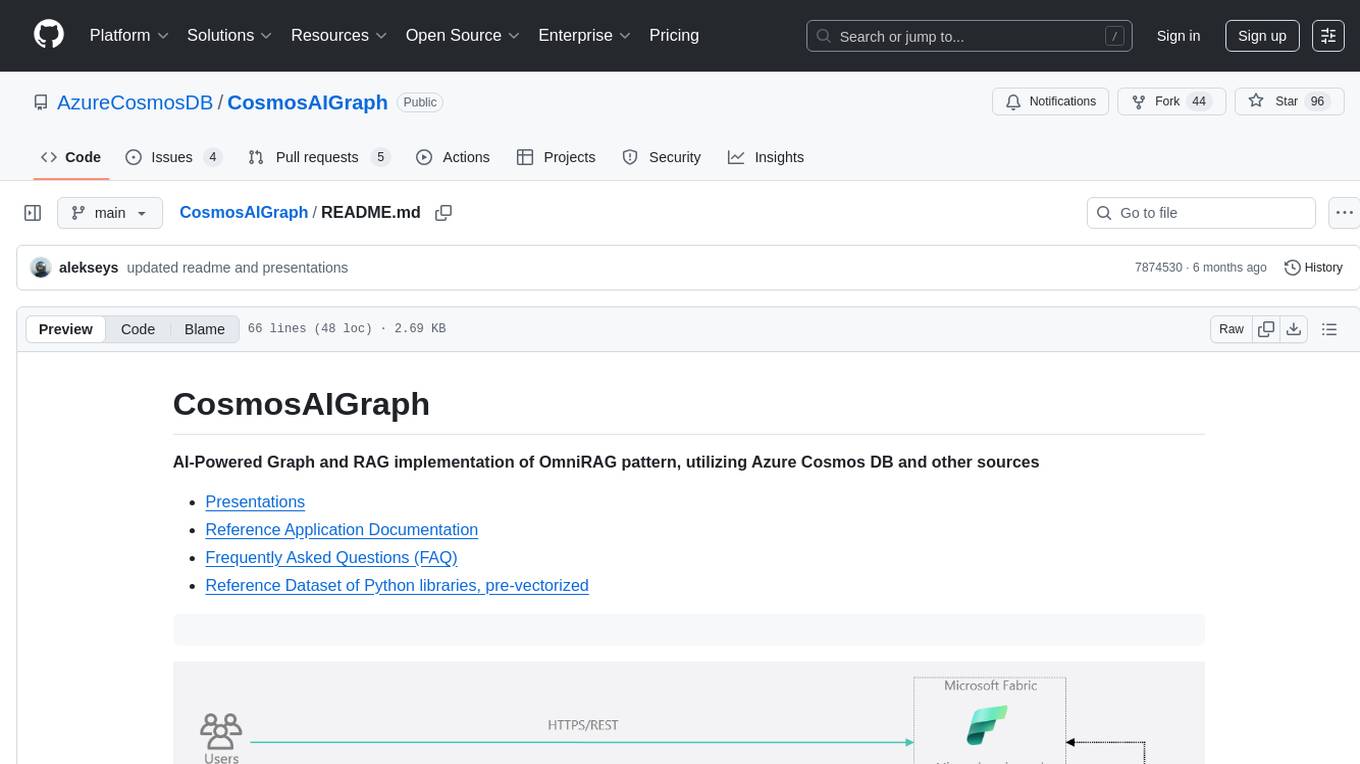

CosmosAIGraph

CosmosAIGraph is an AI-powered graph and RAG implementation of OmniRAG pattern, utilizing Azure Cosmos DB and other sources. It includes presentations, reference application documentation, FAQs, and a reference dataset of Python libraries pre-vectorized. The project focuses on Azure Cosmos DB for NoSQL and Apache Jena implementation for the in-memory RDF graph. It provides DockerHub images, with plans to add RBAC and Microsoft Entra ID/AAD authentication support, update AI model to gpt-4.5, and offer generic graph examples with a graph generation solution.

bedrock-engineer

Bedrock Engineer is an autonomous software development agent application that utilizes Amazon Bedrock. It allows users to customize, create/edit files, execute commands, search the web, use a knowledge base, utilize multi-agents, generate images, and more. The tool provides an interactive chat interface with AI agents, file system operations, web search capabilities, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select and customize agents, choose from various tools like file system operations, web search, Amazon Bedrock integration, and system command execution. Additionally, the tool offers features for website generation, connecting to design system data sources, AWS Step Functions ASL definition generation, diagram creation using natural language descriptions, and multi-language support.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. With support for markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to: * Allow business users to get insights from the data warehouse without going through a data analyst * Enable Q+A from your production DBs inside your SaaS application * Create a ChatGPT plug-in from your proprietary data

ctakes

Apache cTAKES is a clinical Text Analysis and Knowledge Extraction System that focuses on extracting knowledge from clinical text through Natural Language Processing (NLP) techniques. It is modular and employs rule-based and machine learning methods to extract concepts such as symptoms, procedures, diagnoses, medications, and anatomy with attributes and standard codes. cTAKES can identify temporal events, dates, and times, placing events in a patient timeline. It supports various biomedical text processing tasks and can handle different types of clinical and health-related narratives using multiple data standards. cTAKES is widely used in research initiatives and encourages contributions from professionals, researchers, doctors, and students from diverse backgrounds.

For similar tasks

document-ai-samples

The Google Cloud Document AI Samples repository contains code samples and Community Samples demonstrating how to analyze, classify, and search documents using Google Cloud Document AI. It includes various projects showcasing different functionalities such as integrating with Google Drive, processing documents using Python, content moderation with Dialogflow CX, fraud detection, language extraction, paper summarization, tax processing pipeline, and more. The repository also provides access to test document files stored in a publicly-accessible Google Cloud Storage Bucket. Additionally, there are codelabs available for optical character recognition (OCR), form parsing, specialized processors, and managing Document AI processors. Community samples, like the PDF Annotator Sample, are also included. Contributions are welcome, and users can seek help or report issues through the repository's issues page. Please note that this repository is not an officially supported Google product and is intended for demonstrative purposes only.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

searchGPT

searchGPT is an open-source project that aims to build a search engine based on Large Language Model (LLM) technology to provide natural language answers. It supports web search with real-time results, file content search, and semantic search from sources like the Internet. The tool integrates LLM technologies such as OpenAI and GooseAI, and offers an easy-to-use frontend user interface. The project is designed to provide grounded answers by referencing real-time factual information, addressing the limitations of LLM's training data. Contributions, especially from frontend developers, are welcome under the MIT License.

LLMs-at-DoD

This repository contains tutorials for using Large Language Models (LLMs) in the U.S. Department of Defense. The tutorials utilize open-source frameworks and LLMs, allowing users to run them in their own cloud environments. The repository is maintained by the Defense Digital Service and welcomes contributions from users.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

EAGLE

Eagle is a family of Vision-Centric High-Resolution Multimodal LLMs that enhance multimodal LLM perception using a mix of vision encoders and various input resolutions. The model features a channel-concatenation-based fusion for vision experts with different architectures and knowledge, supporting up to over 1K input resolution. It excels in resolution-sensitive tasks like optical character recognition and document understanding.

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. This tool processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. It includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.