AgentDoG

A Diagnostic Guardrail Framework for AI Agent Safety and Security

Stars: 338

AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents that focuses on trajectory-level risk assessment. It analyzes the full execution trace of tool-using agents to detect risks that emerge mid-trajectory. It provides trajectory-level monitoring, taxonomy-guided diagnosis, flexible use cases, and state-of-the-art performance. The framework includes a safety taxonomy for agentic systems, a methodology for task definition, data synthesis and collection, training, and performance highlights. It also offers deployment examples, agentic XAI attribution framework, and repository structure. Customization options are available, and the project is licensed under Apache 2.0.

README:

🤗 Hugging Face   |    🤖 ModelScope   |    📄 Technical Report   |    🌐 Project Page   |    📘 Documentation

Visit our Hugging Face or ModelScope organization (click links above), search checkpoints with names starting with AgentDoG-, and you will find all you need! Enjoy!

AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents. It focuses on trajectory-level risk assessment, aiming to determine whether an agent’s execution trajectory contains safety risks under diverse application scenarios. Unlike single-step content moderation or final-output filtering, AgentDoG analyzes the full execution trace of tool-using agents to detect risks that emerge mid-trajectory.

- 🧭 Trajectory-Level Monitoring: evaluates multi-step agent executions spanning observations, reasoning, and actions.

- 🧩 Taxonomy-Guided Diagnosis: provides fine-grained risk labels (risk source, failure mode, and real-world harm) to explain why unsafe behavior occurs. More crucially, AgentDoG diagnoses the root cause of a specific action, tracing it to specific planning steps or tool selections.

- 🛡️ Flexible Use Cases: can serve as a benchmark, a risk classifier for trajectories, or a guard module in agent systems.

- 🥇 State-of-the-Art Performance: Outperforms existing approaches on R-Judge, ASSE-Safety, and ATBench.

| Name | Parameters | BaseModel | Download |

|---|---|---|---|

| AgentDoG-Qwen3-4B | 4B | Qwen3-4B-Instruct-2507 | 🤗 Hugging Face |

| AgentDoG-Qwen2.5-7B | 7B | Qwen2.5-7B-Instruct | 🤗 Hugging Face |

| AgentDoG-Llama3.1-8B | 8B | Llama3.1-8B-Instruct | 🤗 Hugging Face |

| AgentDoG-FG-Qwen3-4B | 4B | Qwen3-4B-Instruct-2507 | 🤗 Hugging Face |

| AgentDoG-FG-Qwen2.5-7B | 7B | Qwen2.5-7B-Instruct | 🤗 Hugging Face |

| AgentDoG-FG-Llama3.1-8B | 8B | Llama3.1-8B-Instruct | 🤗 Hugging Face |

For more details, please refer to Technical Report.

We release ATBench (Agent Trajectory Safety and Security Benchmark) for trajectory-level safety evaluation and fine-grained risk diagnosis.

- Download: 🤗 Hugging Face Datasets

- Scale: 500 trajectories (250 safe / 250 unsafe), ~8.97 turns per trajectory (~4486 turn interactions)

- Tools: 1575 unique tools appearing in trajectories; an independent unseen-tools library with 2292 tool definitions (no overlap with training tools)

-

Labels: binary

safe/unsafe; unsafe trajectories additionally include fine-grained labels (Risk Source, Failure Mode, Real-World Harm)

We adopt a unified, three-dimensional safety taxonomy for agentic systems. It organizes risks along three orthogonal axes, answering: why a risk arises (risk source), how it manifests in behavior (failure mode), and what harm it causes (real-world harm).

- Risk Source: where the threat originates in the agent loop, e.g., user inputs, environmental observations, external tools/APIs, or the agent's internal reasoning.

- Failure Mode: how the unsafe behavior is realized, such as flawed planning, unsafe tool usage, instruction-priority confusion, or unsafe content generation.

- Real-World Harm: the real-world impact, including privacy leakage, financial loss, physical harm, security compromise, or broader societal/psychological harms.

In the current release, the taxonomy includes 8 risk-source categories, 14 failure modes, and 10 real-world harm categories, and is used for fine-grained labeling during training and evaluation.

Figure: Example task instructions for the two AgentDoG classification tasks (trajectory-level evaluation and fine-grained diagnosis).

Prior works (e.g., LlamaGuard, Qwen3Guard) formulate safety moderation as classifying whether the final output in a multi-turn chat is safe. In contrast, AgentDoG defines a different task: diagnosing an entire agent trajectory to determine whether the agent exhibits any unsafe behavior at any point during execution.

Concretely, we consider two tasks:

-

Trajectory-level safety evaluation (binary). Given an agent trajectory (a sequence of steps, each step containing an action and an observation), predict

safe/unsafe. A trajectory is labeledunsafeif any step exhibits unsafe behavior; otherwise it issafe. -

Fine-grained risk diagnosis. Given an

unsafetrajectory, additionally predict the tuple (Risk Source, Failure Mode, Real-World Harm).

Prompting. Trajectory-level evaluation uses (i) task definition, (ii) agent trajectory, and (iii) output format. Fine-grained diagnosis additionally includes the safety taxonomy for reference and asks the model to output the three labels line by line.

| Task | Prompt Components |

|---|---|

| Trajectory-level safety evaluation | Task Definition + Agent Trajectory + Output Format |

| Fine-grained risk diagnosis | Task Definition + Safety Taxonomy + Agent Trajectory + Output Format |

We use a taxonomy-guided synthesis pipeline to generate realistic, multi-step agent trajectories. Each trajectory is conditioned on a sampled risk tuple (risk source, failure mode, real-world harm), then expanded into a coherent tool-augmented execution and filtered by quality checks.

Figure: Three-stage pipeline for multi-step agent safety trajectory synthesis.

To reflect realistic agent tool use, our tool library is orders of magnitude larger than prior benchmarks. For example, it is about 86x, 55x, and 41x larger than R-Judge, ASSE-Safety, and ASSE-Security, respectively.

Figure: Tool library size compared to existing agent safety benchmarks.

We also track the coverage of the three taxonomy dimensions (risk source, failure mode, and harm type) to ensure balanced and diverse risk distributions in our synthesized data.

Figure: Distribution over risk source, failure mode, and harm type categories.

Our guard models are trained with standard supervised fine-tuning (SFT) on trajectory demonstrations. Given a training set $\mathcal{D}_{\mathrm{train}}=\lbrace(x_i, y_i)\rbrace _{i=1}^n$, where $x_i$ is an agent trajectory and $y_i$ is the target output (binary safe/unsafe, and optionally fine-grained labels), we minimize the negative log-likelihood:

$$\mathcal{L}=-\sum_{(x_i,y_i)\in\mathcal{D}{\text{train}}}\log p{\theta}(y_i\mid x_i).$$

We fine-tuned multiple base models: Qwen3-4B-Instruct-2507, Qwen2.5-7B-Instruct, and Llama3.1-8B-Instruct.

-

Evaluated on R-Judge, ASSE-Safety, and ATBench

-

Outperforms step-level baselines in detecting:

- Long-horizon instruction hijacking

- Tool misuse after benign prefixes

-

Strong generalization across:

- Different agent frameworks

- Different LLM backbones

-

Fine-grained label accuracy on ATBench (best of our FG models): Risk Source 82.0%, Failure Mode 32.4%, Harm Type 59.2%

Accuracy comparison (ours + baselines):

| Model | Type | R-Judge | ASSE-Safety | ATBench |

|---|---|---|---|---|

| GPT-5.2 | General | 90.8 | 77.4 | 90.0 |

| Gemini-3-Flash | General | 95.2 | 75.9 | 75.6 |

| Gemini-3-Pro | General | 94.3 | 78.5 | 87.2 |

| QwQ-32B | General | 89.5 | 68.2 | 63.0 |

| Qwen3-235B-A22B-Instruct | General | 85.1 | 77.6 | 84.6 |

| LlamaGuard3-8B | Guard | 61.2 | 54.5 | 53.3 |

| LlamaGuard4-12B | Guard | 63.8 | 56.3 | 58.1 |

| Qwen3-Guard | Guard | 40.6 | 48.2 | 55.3 |

| ShieldAgent | Guard | 81.0 | 79.6 | 76.0 |

| AgentDoG-4B (Ours) | Guard | 91.8 | 80.4 | 92.8 |

| AgentDoG-7B (Ours) | Guard | 91.7 | 79.8 | 87.4 |

| AgentDoG-8B (Ours) | Guard | 78.2 | 81.1 | 87.6 |

Fine-grained label accuracy on ATBench (unsafe trajectories only):

| Model | Risk Source Acc | Failure Mode Acc | Harm Type Acc |

|---|---|---|---|

| Gemini-3-Flash | 38.0 | 22.4 | 34.8 |

| GPT-5.2 | 41.6 | 20.4 | 30.8 |

| Gemini-3-Pro | 36.8 | 17.6 | 32.0 |

| Qwen3-235B-A22B-Instruct-2507 | 19.6 | 17.2 | 38.0 |

| QwQ-32B | 23.2 | 14.4 | 34.8 |

| AgentDoG-FG-4B (Ours) | 82.0 | 32.4 | 58.4 |

| AgentDoG-FG-8B (Ours) | 81.6 | 31.6 | 57.6 |

| AgentDoG-FG-7B (Ours) | 81.2 | 28.8 | 59.2 |

For deployment, you can use sglang>=0.4.6 or vllm>=0.10.0 to create an OpenAI-compatible API endpoint:

SGLang

python -m sglang.launch_server --model-path AI45Research/AgentDoG-Qwen3-4B --port 30000 --context-length 16384

python -m sglang.launch_server --model-path AI45Research/AgentDoG-FG-Qwen3-4B --port 30001 --context-length 16384vLLM

vllm serve AI45Research/AgentDoG-Qwen3-4B --port 8000 --max-model-len 16384

vllm serve AI45Research/AgentDoG-FG-Qwen3-4B --port 8001 --max-model-len 16384Recommended: use prompt templates in prompts/ and run the example script in examples/.

Binary trajectory moderation

python examples/run_openai_moderation.py \

--base-url http://localhost:8000/v1 \

--model AI45Research/AgentDoG-Qwen3-4B \

--trajectory examples/trajectory_sample.json \

--prompt prompts/trajectory_binary.txtFine-grained risk diagnosis

python examples/run_openai_moderation.py \

--base-url http://localhost:8000/v1 \

--model AI45Research/AgentDoG-FG-Qwen3-4B \

--trajectory examples/trajectory_sample.json \

--prompt prompts/trajectory_finegrained.txt \

--taxonomy prompts/taxonomy_finegrained.txtWe also introduce a novel hierarchical framework for Agentic Attribution, designed to unveil the internal drivers behind agent actions beyond simple failure localization. By decomposing interaction trajectories into pivotal components and fine-grained textual evidence, our approach explains why an agent makes specific decisions regardless of the outcome. This framework enhances the transparency and accountability of autonomous systems by identifying key factors such as memory biases and tool outputs.

To evaluate the effectiveness of the proposed agentic attribution framework, we conducted several case studies across diverse scenarios. The figure illustrates how our framework localizes decision drivers across four representative cases. The highlighted regions denote the historical components and fine-grained sentences identified by our framework as the primary decision drivers.

Figure: Illustration of attribution results across two representative scenarios.

Figure: Comparative attribution results between AgentDoG and Basemodel.

Figure: Demo of dynamic attribution.

You can run the analysis in three steps:

Analyze the contribution of each conversation step.

python component_attri.py \

--model_id "your_model_path" \

--data_dir ./samples \

--output_dir ./resultsPerform fine-grained analysis on the top-K most influential steps.

python sentence_attri.py \

--model_id "your_model_path" \

--traj_file ./samples/xx.json \

--attr_file ./results/xx_attr_trajectory.json \

--output_file ./results/xx_attr_sentence.json \

--top_k 3Create an interactive HTML heatmap.

python case_plot_html.py \

--original_traj_file ./samples/xx.json \

--traj_attr_file ./results/xx_attr_trajectory.json \

--sent_attr_file ./results/xx_attr_sentence.json \

--output_file ./results/xx_visualization.htmlTo run the complete pipeline automatically, configure and run the shell script:

bash run_all_pipeline.shAgentDoG/

├── README.md

├── figures/

├── prompts/

│ ├── trajectory_binary.txt

│ ├── trajectory_finegrained.txt

│ └── taxonomy_finegrained.txt

├── examples/

│ ├── run_openai_moderation.py

│ └── trajectory_sample.json

├── AgenticXAI

│ ├── case_plot_html.py

│ ├── component_attri.py

│ ├── README.md

│ ├── run_all_pipeline.sh

│ ├── samples

│ │ ├── finance.json

│ │ ├── resume.json

│ │ └── transaction.json

│ └── sentence_attri.py

-

Edit prompt templates:

prompts/trajectory_binary.txt,prompts/trajectory_finegrained.txt -

Update taxonomy labels:

prompts/taxonomy_finegrained.txt -

Change runtime integration:

examples/run_openai_moderation.py

This project is released under the Apache 2.0 License.

If you use AgentDoG in your research, please cite:

@misc{liu2026agentdogdiagnosticguardrailframework,

title={AgentDoG: A Diagnostic Guardrail Framework for AI Agent Safety and Security},

author={Dongrui Liu and Qihan Ren and Chen Qian and Shuai Shao and Yuejin Xie and Yu Li and Zhonghao Yang and Haoyu Luo and Peng Wang and Qingyu Liu and Binxin Hu and Ling Tang and Jilin Mei and Dadi Guo and Leitao Yuan and Junyao Yang and Guanxu Chen and Qihao Lin and Yi Yu and Bo Zhang and Jiaxuan Guo and Jie Zhang and Wenqi Shao and Huiqi Deng and Zhiheng Xi and Wenjie Wang and Wenxuan Wang and Wen Shen and Zhikai Chen and Haoyu Xie and Jialing Tao and Juntao Dai and Jiaming Ji and Zhongjie Ba and Linfeng Zhang and Yong Liu and Quanshi Zhang and Lei Zhu and Zhihua Wei and Hui Xue and Chaochao Lu and Jing Shao and Xia Hu},

year={2026},

journal={arXiv preprint arXiv:2601.18491}

}

@misc{qian2026behind,

title={The Why Behind the Action: Unveiling Internal Drivers via Agentic Attribution},

author={Chen Qian and Peng Wang and Dongrui Liu and Junyao Yang and Dadi Guo and Ling Tang and Jilin Mei and Qihan Ren and Shuai Shao and Yong Liu and Jie Fu and Jing Shao and Xia Hu},

year={2026},

journal={arXiv preprint arXiv:2601.15075}

}This project builds upon prior work in agent safety, trajectory evaluation, and risk-aware AI systems.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AgentDoG

Similar Open Source Tools

AgentDoG

AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents that focuses on trajectory-level risk assessment. It analyzes the full execution trace of tool-using agents to detect risks that emerge mid-trajectory. It provides trajectory-level monitoring, taxonomy-guided diagnosis, flexible use cases, and state-of-the-art performance. The framework includes a safety taxonomy for agentic systems, a methodology for task definition, data synthesis and collection, training, and performance highlights. It also offers deployment examples, agentic XAI attribution framework, and repository structure. Customization options are available, and the project is licensed under Apache 2.0.

awesome-slash

Automate the entire development workflow beyond coding. awesome-slash provides production-ready skills, agents, and commands for managing tasks, branches, reviews, CI, and deployments. It automates the entire workflow, including task exploration, planning, implementation, review, and shipping. The tool includes 11 plugins, 40 agents, 26 skills, and 26k lines of lib code, with 3,357 tests and support for 3 platforms. It works with Claude Code, OpenCode, and Codex CLI, offering specialized capabilities through skills and agents.

UniCoT

Uni-CoT is a unified reasoning framework that extends Chain-of-Thought (CoT) principles to the multimodal domain, enabling Multimodal Large Language Models (MLLMs) to perform interpretable, step-by-step reasoning across both text and vision. It decomposes complex multimodal tasks into structured, manageable steps that can be executed sequentially or in parallel, allowing for more scalable and systematic reasoning.

buffer-of-thought-llm

Buffer of Thoughts (BoT) is a thought-augmented reasoning framework designed to enhance the accuracy, efficiency, and robustness of large language models (LLMs). It introduces a meta-buffer to store high-level thought-templates distilled from problem-solving processes, enabling adaptive reasoning for efficient problem-solving. The framework includes a buffer-manager to dynamically update the meta-buffer, ensuring scalability and stability. BoT achieves significant performance improvements on reasoning-intensive tasks and demonstrates superior generalization ability and robustness while being cost-effective compared to other methods.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

EasyEdit

EasyEdit is a Python package for edit Large Language Models (LLM) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.

BitBLAS

BitBLAS is a library for mixed-precision BLAS operations on GPUs, for example, the $W_{wdtype}A_{adtype}$ mixed-precision matrix multiplication where $C_{cdtype}[M, N] = A_{adtype}[M, K] \times W_{wdtype}[N, K]$. BitBLAS aims to support efficient mixed-precision DNN model deployment, especially the $W_{wdtype}A_{adtype}$ quantization in large language models (LLMs), for example, the $W_{UINT4}A_{FP16}$ in GPTQ, the $W_{INT2}A_{FP16}$ in BitDistiller, the $W_{INT2}A_{INT8}$ in BitNet-b1.58. BitBLAS is based on techniques from our accepted submission at OSDI'24.

HuatuoGPT-o1

HuatuoGPT-o1 is a medical language model designed for advanced medical reasoning. It can identify mistakes, explore alternative strategies, and refine answers. The model leverages verifiable medical problems and a specialized medical verifier to guide complex reasoning trajectories and enhance reasoning through reinforcement learning. The repository provides access to models, data, and code for HuatuoGPT-o1, allowing users to deploy the model for medical reasoning tasks.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

qserve

QServe is a serving system designed for efficient and accurate Large Language Models (LLM) on GPUs with W4A8KV4 quantization. It achieves higher throughput compared to leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weight, 8-bit activation, and 4-bit KV cache, addressing runtime overhead challenges. QServe improves serving throughput for various LLM models by implementing compute-aware weight reordering, register-level parallelism, and fused attention memory-bound techniques.

pai-opencode

PAI-OpenCode is a complete port of Daniel Miessler's Personal AI Infrastructure (PAI) to OpenCode, an open-source, provider-agnostic AI coding assistant. It brings modular capabilities, dynamic multi-agent orchestration, session history, and lifecycle automation to personalize AI assistants for users. With support for 75+ AI providers, PAI-OpenCode offers dynamic per-task model routing, full PAI infrastructure, real-time session sharing, and multiple client options. The tool optimizes cost and quality with a 3-tier model strategy and a 3-tier research system, allowing users to switch presets for different routing strategies. PAI-OpenCode's architecture preserves PAI's design while adapting to OpenCode, documented through Architecture Decision Records (ADRs).

PIXIU

PIXIU is a project designed to support the development, fine-tuning, and evaluation of Large Language Models (LLMs) in the financial domain. It includes components like FinBen, a Financial Language Understanding and Prediction Evaluation Benchmark, FIT, a Financial Instruction Dataset, and FinMA, a Financial Large Language Model. The project provides open resources, multi-task and multi-modal financial data, and diverse financial tasks for training and evaluation. It aims to encourage open research and transparency in the financial NLP field.

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for Azure infrastructure development that transforms how you build Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.

Cherry_LLM

Cherry Data Selection project introduces a self-guided methodology for LLMs to autonomously discern and select cherry samples from open-source datasets, minimizing manual curation and cost for instruction tuning. The project focuses on selecting impactful training samples ('cherry data') to enhance LLM instruction tuning by estimating instruction-following difficulty. The method involves phases like 'Learning from Brief Experience', 'Evaluating Based on Experience', and 'Retraining from Self-Guided Experience' to improve LLM performance.

HuatuoGPT-II

HuatuoGPT2 is an innovative domain-adapted medical large language model that excels in medical knowledge and dialogue proficiency. It showcases state-of-the-art performance in various medical benchmarks, surpassing GPT-4 in expert evaluations and fresh medical licensing exams. The open-source release includes HuatuoGPT2 models in 7B, 13B, and 34B versions, training code for one-stage adaptation, partial pre-training and fine-tuning instructions, and evaluation methods for medical response capabilities and professional pharmacist exams. The tool aims to enhance LLM capabilities in the Chinese medical field through open-source principles.

For similar tasks

AgentDoG

AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents that focuses on trajectory-level risk assessment. It analyzes the full execution trace of tool-using agents to detect risks that emerge mid-trajectory. It provides trajectory-level monitoring, taxonomy-guided diagnosis, flexible use cases, and state-of-the-art performance. The framework includes a safety taxonomy for agentic systems, a methodology for task definition, data synthesis and collection, training, and performance highlights. It also offers deployment examples, agentic XAI attribution framework, and repository structure. Customization options are available, and the project is licensed under Apache 2.0.

distilabel

Distilabel is a framework for synthetic data and AI feedback for AI engineers that require high-quality outputs, full data ownership, and overall efficiency. It helps you synthesize data and provide AI feedback to improve the quality of your AI models. With Distilabel, you can: * **Synthesize data:** Generate synthetic data to train your AI models. This can help you to overcome the challenges of data scarcity and bias. * **Provide AI feedback:** Get feedback from AI models on your data. This can help you to identify errors and improve the quality of your data. * **Improve your AI output quality:** By using Distilabel to synthesize data and provide AI feedback, you can improve the quality of your AI models and get better results.

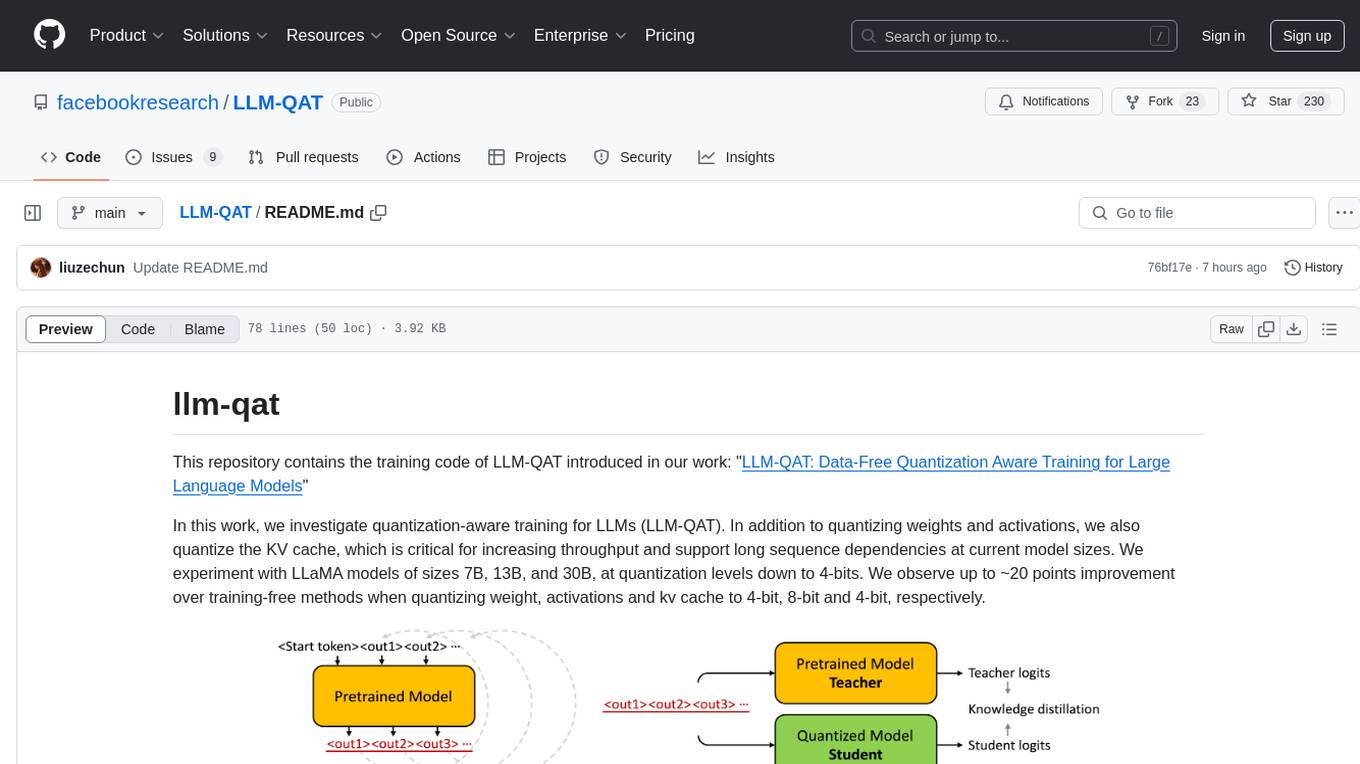

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4-bits. Significant improvements were observed when quantizing weight, activations, and kv cache to 4-bit, 8-bit, and 4-bit, respectively.

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.