Best AI tools for < Synthesize Data >

20 - AI tool Sites

GovAI

GovAI is an AI tool designed to assist decision-makers in navigating the transition to a world with advanced AI. The tool produces rigorous research and fosters talent to address economic and national security imperatives related to AI dominance. It features analysis on various topics such as economics, export controls, public attitudes towards AI, AI governance trends, and the impact of AI on work. GovAI aims to provide insights and recommendations for policymakers and stakeholders in the AI domain.

System Pro

System Pro is a cutting-edge platform that revolutionizes the way users conduct research, particularly in the fields of health and life sciences. It offers a fast and dependable method to discover, combine, and place scientific research in context. By leveraging advanced technology, System Pro enhances the efficiency and effectiveness of research processes, empowering users to access valuable insights with ease.

The Keenfolks

The Keenfolks is an AI Marketing Agency providing AI solutions for global brands to enhance media efficiency and ROI. They offer AI-powered tools to optimize media campaigns, synthesize audience data, and provide actionable intelligence. The agency helps brands transform fragmented data into unified intelligence, collaborate effectively, and improve media performance. The Keenfolks work with multinational brands across various markets, offering services such as AI assessment, content generation, customer behavior prediction, personalization automation, and data-informed decision-making.

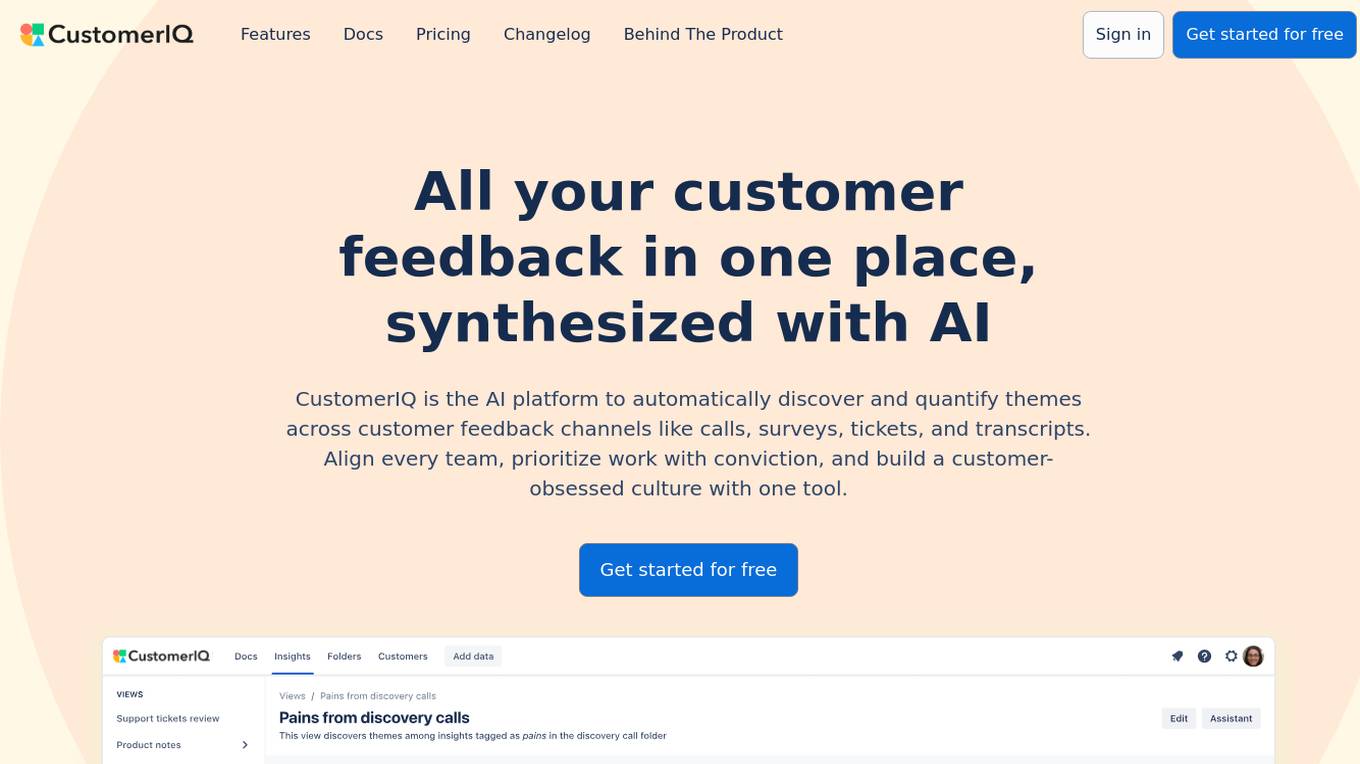

CustomerIQ

CustomerIQ is an AI platform that automatically discovers and quantifies themes across customer feedback channels like calls, surveys, tickets, and transcripts. It aggregates customer feedback, extracts and categorizes feature requests, pain points, preferences, and highlights related to customers. The platform helps align teams, prioritize work, and build a customer-obsessed culture. CustomerIQ accelerates development by scoping project requirements faster and providing actionable insights backed with context.

Cue

Cue is an AI-powered data analytics tool designed to help GTM (Go-To-Market) leaders make informed decisions without the need to be data scientists. It seamlessly embeds data science into organizations, providing measurable growth insights and strategies. Cue offers expert data science, clean data integration, AI-driven analysis, customized GTM solutions, and strategy synthesis. It aims to maximize the value of existing data and people, emphasizing the importance of a solid data foundation for business growth.

Hermae Solutions

Hermae Solutions offers an AI Assistant for Enterprise Design Systems, providing onboarding acceleration, contractor efficiency, design system adoption support, knowledge distribution, and various AI documentation and Storybook assistants. The platform enables users to train custom AI assistants, embed them into documentation sites, and communicate instantly with the knowledge base. Hermae's process simplifies efficiency improvements by gathering information sources, processing data for AI supplementation, customizing integration, and supporting integration success. The AI assistant helps reduce engineering costs and increase development efficiency across the board.

iseek.ai

iseek.ai is an AI-powered search and analytics platform designed to revolutionize decision-making in professional and higher education institutions. The platform utilizes patented AI and Natural Language Understanding technology to help users find and synthesize essential information quickly and efficiently. iseek.ai offers solutions for accreditation preparation, curriculum design, outcome analytics, and more, enabling users to transform their content and data into actionable insights.

OpinioAI

OpinioAI is an AI-powered market research tool that allows users to gain business-critical insights from data without the need for costly polls, surveys, or interviews. With OpinioAI, users can create AI personas and market segments to understand customer preferences, affinities, and opinions. The platform democratizes research by providing efficient, effective, and budget-friendly solutions for businesses, students, and individuals seeking valuable insights. OpinioAI leverages Large Language Models to simulate human respondents and extract detailed opinions, enabling users to analyze existing data, synthesize new insights, and evaluate content from the perspective of their target audience.

Elicit

Elicit is a research tool that uses artificial intelligence to help researchers analyze research papers more efficiently. It can summarize papers, extract data, and synthesize findings, saving researchers time and effort. Elicit is used by over 800,000 researchers worldwide and has been featured in publications such as Nature and Science. It is a powerful tool that can help researchers stay up-to-date on the latest research and make new discoveries.

ChatTTS

ChatTTS is a text-to-speech tool optimized for natural, conversational scenarios. It supports both Chinese and English and was trained on approximately 100,000 hours of data. With features like multi-language support, large data training, dialog task compatibility, open-source plans, control, security, and ease of use, ChatTTS provides high-quality and natural-sounding voice synthesis. It is designed for conversational tasks, dialogue speech generation, video introductions, educational content synthesis, and more. Users can integrate ChatTTS into their applications using the provided API and SDKs for a seamless text-to-speech experience.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

Undermind

Undermind is an AI-powered scientific research assistant that revolutionizes the way researchers access and analyze academic papers. By utilizing intelligent language models, Undermind reads and synthesizes information from hundreds of papers to provide accurate and comprehensive results. Researchers can describe their queries in natural language, and Undermind assists in finding relevant papers, brainstorming questions, and discovering crucial insights. Trusted by researchers across various fields, Undermind offers a unique approach to literature search, surpassing traditional search engines in accuracy and efficiency.

AppTek.ai

AppTek.ai is a global leader in artificial intelligence (AI) and machine learning (ML) technologies, providing advanced solutions in automatic speech recognition, neural machine translation, natural language processing/understanding, large language models, and text-to-speech technologies. The platform offers industry-leading language solutions for various sectors such as media and entertainment, call centers, government, and enterprise business. AppTek.ai combines cutting-edge AI research with real-world applications, delivering accurate and efficient tools for speech transcription, translation, understanding, and synthesis across multiple languages and dialects.

User Evaluation

User Evaluation is an AI-first user research platform that leverages AI technology to provide instant insights, comprehensive reports, and on-demand answers to enhance customer research. The platform offers features such as AI-driven data analysis, multilingual transcription, live timestamped notes, AI reports & presentations, and multimodal AI chat. User Evaluation empowers users to analyze qualitative and quantitative data, synthesize AI-generated recommendations, and ensure data security through encryption protocols. It is designed for design agencies, product managers, founders, and leaders seeking to accelerate innovation and shape exceptional product experiences.

PaperGuide.AI

PaperGuide.AI is an AI-powered research platform that helps users discover, read, write, and manage research with ease. It offers features such as AI search to discover new papers, summaries to understand complex research, reference management, note-taking, and AI writing assistance. Trusted by over 500,000 users, PaperGuide.AI streamlines academic and research workflows by providing tools to synthesize research faster, manage references effectively, and write essays and research papers efficiently.

Max Planck Institute for Informatics

The Max Planck Institute for Informatics focuses on Visual Computing and Artificial Intelligence, conducting research at the intersection of Computer Graphics, Computer Vision, and Artificial Intelligence. The institute aims to develop innovative methods to capture, represent, synthesize, and simulate real-world models with high detail, robustness, and efficiency. By combining concepts from Computer Graphics, Computer Vision, and Artificial Intelligence, the institute lays the groundwork for advanced computing systems that can interact intelligently with humans and the environment.

illumi

illumi is a collaborative AI tool designed to enhance teamwork by providing a visual AI workspace for planning, alignment, and action. It aims to bridge the gap between human thinking and AI capabilities, allowing teams to work together in real-time on an infinite canvas. With features like custom AI workflows, knowledge capture, and various AI model support, illumi empowers teams to streamline processes, synthesize insights, and boost productivity. The application focuses on making AI more accessible and intuitive for users, enabling them to leverage AI intelligence effectively in their projects.

Elicit

Elicit is an AI research assistant that helps researchers analyze research papers at superhuman speed. It automates time-consuming research tasks such as summarizing papers, extracting data, and synthesizing findings. Trusted by researchers, Elicit offers a plethora of features to speed up the research process and is particularly beneficial for empirical domains like biomedicine and machine learning.

Voicepanel

Voicepanel is an AI-powered platform that helps businesses gather detailed feedback from their customers at unprecedented speed and scale. It uses AI to recruit target audiences, conduct interviews over voice or video, and synthesize actionable insights instantly. Voicepanel's platform is easy to use and can be set up in minutes. It offers a variety of features, including AI interviewing, AI recruiting, and AI synthesis. Voicepanel is a valuable tool for businesses that want to gain a deeper understanding of their customers and make better decisions.

Outset

Outset is an AI-powered research platform that enables users to conduct and synthesize video, audio, and text conversations with hundreds of participants at once. It uses AI to moderate conversations, identify common themes, tag relevant conversations, and pull out powerful quotes. Outset is designed to help researchers understand the 'why' behind answers and gain deeper insights into the people they serve.

4 - Open Source AI Tools

distilabel

Distilabel is a framework for synthetic data and AI feedback for AI engineers that require high-quality outputs, full data ownership, and overall efficiency. It helps you synthesize data and provide AI feedback to improve the quality of your AI models. With Distilabel, you can:

* **Synthesize data:** Generate synthetic data to train your AI models. This can help you to overcome the challenges of data scarcity and bias.
* **Provide AI feedback:** Get feedback from AI models on your data. This can help you to identify errors and improve the quality of your data.
* **Improve your AI output quality:** By using Distilabel to synthesize data and provide AI feedback, you can improve the quality of your AI models and get better results.

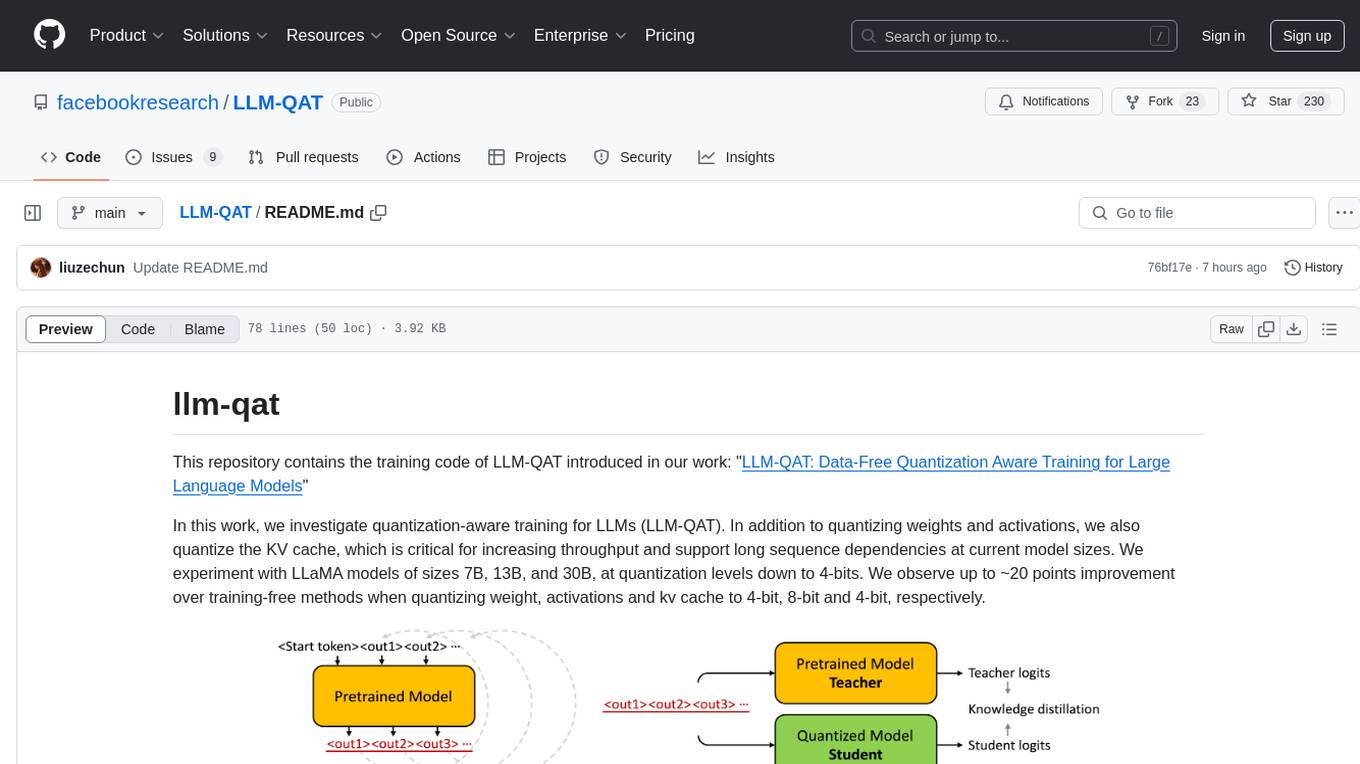

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4 bits. Significant improvements were observed when quantizing weights, activations, and the KV cache to 4-bit, 8-bit, and 4-bit, respectively.
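As a rough illustration of what quantizing a tensor to a given bit width means, the sketch below rounds a list of floats to a symmetric signed integer grid and maps them back to floats. This is not the LLM-QAT training code: the function name `fake_quantize` is hypothetical, and the symmetric per-tensor max-abs scaling is an assumption chosen for simplicity.

```python
def fake_quantize(values, bits):
    """Snap floats to a symmetric signed grid with `bits` bits, then dequantize.

    Illustrative only: assumes one scale for the whole list (per-tensor),
    derived from the largest absolute value.
    """
    qmax = 2 ** (bits - 1) - 1          # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return list(values)             # nothing to scale
    scale = max_abs / qmax              # float step between adjacent grid points
    quantized = []
    for v in values:
        q = round(v / scale)            # nearest integer level
        q = max(-qmax, min(qmax, q))    # clamp to the representable range
        quantized.append(q * scale)     # back to float ("fake" quantization)
    return quantized


if __name__ == "__main__":
    weights = [-1.0, 0.3, 1.0]
    print("4-bit:", fake_quantize(weights, 4))
    print("8-bit:", fake_quantize(weights, 8))
```

Running the script shows that the 8-bit grid reproduces the inputs more closely than the 4-bit grid, which is why lower bit widths are harder to apply without a loss in accuracy.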

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

AgentDoG

AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents that focuses on trajectory-level risk assessment. It analyzes the full execution trace of tool-using agents to detect risks that emerge mid-trajectory. It provides trajectory-level monitoring, taxonomy-guided diagnosis, flexible use cases, and state-of-the-art performance. The framework includes a safety taxonomy for agentic systems, a methodology for task definition, data synthesis and collection, training, and performance highlights. It also offers deployment examples, an agentic XAI attribution framework, and an overview of the repository structure. Customization options are available, and the project is licensed under Apache 2.0.

20 - OpenAI GPTs

PANˈDÔRƏ

Pandora is a Posthuman Prompt Engineer powered by the MANNS engine. Surpass human creative limitations by synthesizing diverse knowledge, advanced pattern recognition, and algorithmic creativity.

AstroLex

Expertly guides users to identify gaps in research by analyzing and summarizing academic papers.

AI Debate Synthesizer OPED

Game-like GPT in which five AIs dynamically debate a given "theme" and lead to a proposal-based conclusion.

Work Contribution Record Table Synthesizer

Guides users in creating a Work Contribution Record Table.