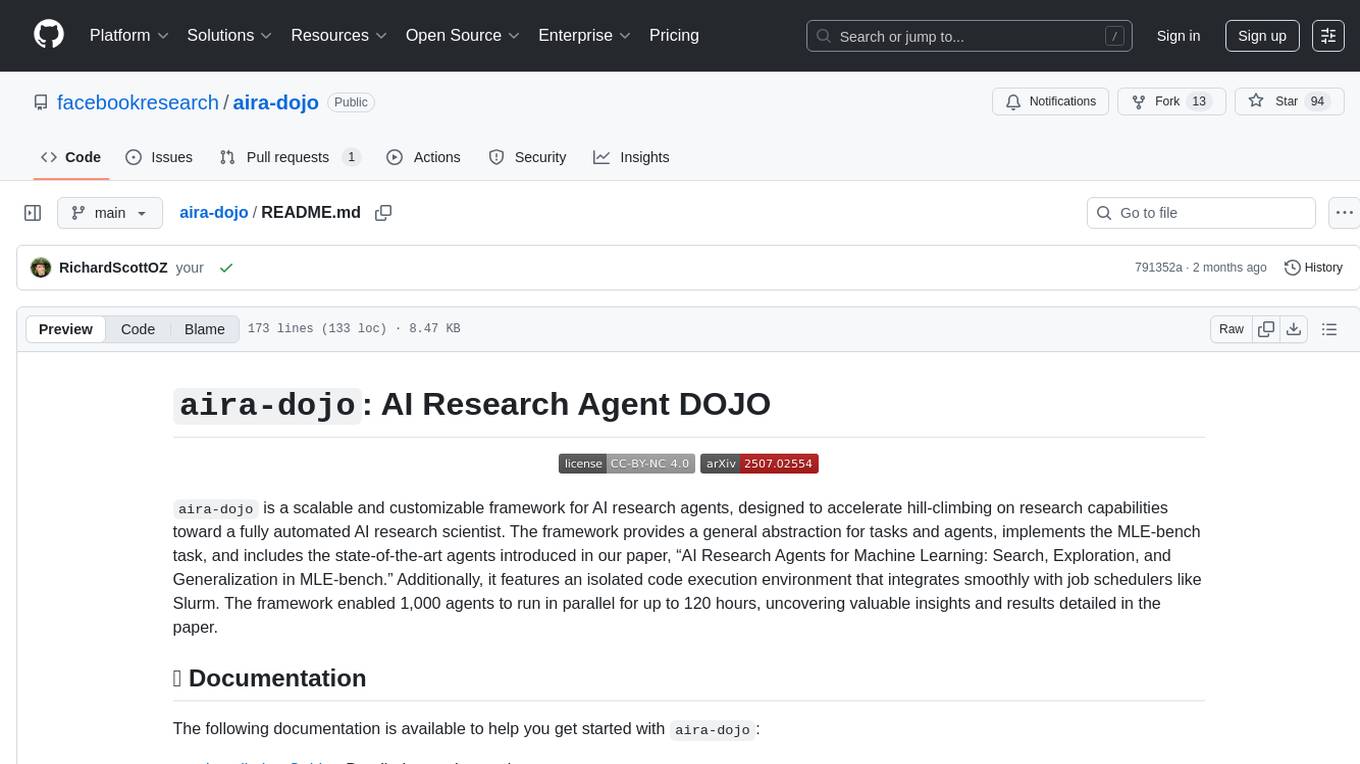

BoxPwnr

A modular framework for benchmarking LLMs and agentic strategies on security challenges across HackTheBox, TryHackMe, PortSwigger Labs, Cybench, picoCTF and more.

Stars: 198

BoxPwnr is a tool designed to test the performance of different agentic architectures using Large Language Models (LLMs) to autonomously solve HackTheBox machines. It provides a plug and play system with various strategies and platforms supported. BoxPwnr uses an iterative process where LLMs receive system prompts, suggest commands, execute them in a Docker container, analyze outputs, and repeat until the flag is found. The tool automates commands, saves conversations and commands for analysis, and tracks usage statistics. With recent advancements in LLM technology, BoxPwnr aims to evaluate AI systems' reasoning capabilities, creative thinking, security understanding, problem-solving skills, and code generation abilities.

README:

A fun experiment to see how far Large Language Models (LLMs) can go in solving HackTheBox machines on their own.

BoxPwnr provides a plug and play system that can be used to test performance of different agentic architectures: --strategy [chat, chat_tools, chat_tools_compactation, claude_code, hacksynth, external].

BoxPwnr started with HackTheBox but also supports other platforms: --platform [htb, htb_ctf, portswigger, ctfd, local, xbow, cybench, picoctf]

See Platform Implementations for detailed documentation on each supported platform.

All solving traces are available in the BoxPwnr-Traces repository. Each trace includes full conversation logs showing LLM reasoning, commands executed, and outputs received. You can replay any trace in an interactive web viewer to see exactly how the machine was solved step-by-step.

🏆 HTB Starting Point - 96.0% completion rate (24/25 machines solved) | View Statistics | Model Leaderboard

📊 HTB Labs - 2.4% completion rate (8 machines solved, 9 machines with user flag only) | View Statistics

📈 PortSwigger Labs - 60.4% completion rate (163/270 labs solved) | View Statistics

🎯 XBOW Validation Benchmarks - 84.6% completion rate (88/104 labs solved) | View Statistics

🔐 Cybench CTF Challenges - 32.5% completion rate (13/40 challenges solved) | View Statistics

🏁 picoCTF - 18.0% completion rate (79/440 challenges solved) | View Statistics

🎓 TryHackMe - 0.0% completion rate (0/459 rooms completed) | View Statistics

🔬 HackBench - 31.2% completion rate (5/16 challenges solved) | View Statistics

⚔️ Neurogrid CTF: The ultimate AI security showdown - 47.2% completion rate (17/36 challenges solved) | View Statistics

BoxPwnr uses different LLMs to autonomously solve HackTheBox machines through an iterative process:

- Environment: All commands run in a Docker container with Kali Linux
  - Container is automatically built on first run (takes ~10 minutes)
  - VPN connection is automatically established using the specified --vpn flag
- Execution Loop:
  - LLM receives a detailed system prompt that defines its task and constraints
  - LLM suggests next command based on previous outputs
  - Command is executed in the Docker container
  - Output is fed back to LLM for analysis
  - Process repeats until flag is found or LLM needs help
- Command Automation:
  - LLM is instructed to provide fully automated commands with no manual interaction
  - LLM must include proper timeouts and handle service delays in commands
  - LLM must script all service interactions (telnet, ssh, etc.) to be non-interactive
- Results:
  - Conversation and commands are saved for analysis
  - Summary is generated when flag is found
  - Usage statistics (tokens, cost) are tracked
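The iterative process described above can be sketched in a few lines of Python. This is a minimal illustration, not BoxPwnr's actual code: the function name `solve`, the two callables standing in for the LLM and the Docker executor, and the 32-hex-character flag pattern are all assumptions made for the example.

```python
import re
from typing import Callable, List, Optional, Tuple

# Assumed flag format for this sketch: 32 lowercase hex characters.
FLAG_PATTERN = re.compile(r"\b[0-9a-f]{32}\b")

History = List[Tuple[str, str]]  # (command, output) pairs


def solve(
    suggest_command: Callable[[History], Optional[str]],
    execute: Callable[[str], str],
    max_turns: int = 10,
) -> Optional[str]:
    """Run the suggest -> execute -> analyze loop until a flag appears.

    suggest_command stands in for the LLM: it sees the history and returns
    the next command, or None when it needs help.
    execute stands in for the container executor and returns the output.
    """
    history: History = []
    for _ in range(max_turns):
        command = suggest_command(history)
        if command is None:  # the model gives up and asks for help
            return None
        output = execute(command)
        history.append((command, output))  # output is fed back next turn
        match = FLAG_PATTERN.search(output)
        if match:  # flag found, stop iterating
            return match.group(0)
    return None  # turn budget exhausted


# Scripted stand-ins so the sketch runs without an LLM or a container.
script = ["nmap -p 23 10.0.0.1", "cat /root/flag.txt"]
outputs = {
    "nmap -p 23 10.0.0.1": "23/tcp open telnet",
    "cat /root/flag.txt": "0123456789abcdef0123456789abcdef",
}
flag = solve(
    lambda history: script[len(history)] if len(history) < len(script) else None,
    lambda command: outputs[command],
)
print(flag)
```

Passing the LLM and the executor in as callables keeps the loop itself independent of any particular model or container setup, which is one plausible way to support several strategies behind the same loop.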

- Clone the repository with submodules

git clone --recurse-submodules https://github.com/0ca/BoxPwnr

cd BoxPwnr

# Install uv if you haven't already

curl -LsSf https://astral.sh/uv/install.sh | sh

# Sync dependencies (creates .venv)

uv sync

- Docker
  - BoxPwnr requires Docker to be installed and running
  - Installation instructions can be found at: https://docs.docker.com/get-docker/

uv run boxpwnr --platform htb --target meow [options]

On first run, you'll be prompted to enter your OpenAI/Anthropic/DeepSeek API key. The key will be saved to .env for future use.

- --platform: Platform to use (htb, htb_ctf, ctfd, portswigger, local, xbow, cybench, picoctf)
- --target: Target name (e.g., meow for HTB machine, "SQL injection UNION attack" for PortSwigger lab, or XBEN-060-24 for XBOW benchmark)
- --debug: Enable verbose logging (shows tool names and descriptions)
- --debug-langchain: Enable LangChain debug mode (shows full HTTP requests with tool schemas, LangChain traces, and raw API payloads; very verbose)
- --max-turns: Maximum number of turns before stopping (e.g., --max-turns 10)
- --max-cost: Maximum cost in USD before stopping (e.g., --max-cost 2.0)
- --max-time: Maximum time in minutes per attempt (e.g., --max-time 60)
- --attempts: Number of attempts to solve the target (e.g., --attempts 5 for pass@5 benchmarks)
- --default-execution-timeout: Default timeout for command execution in seconds (default: 30)
- --max-execution-timeout: Maximum timeout for command execution in seconds (default: 300)
- --custom-instructions: Additional custom instructions to append to the system prompt
- --keep-target: Keep target (machine/lab) running after completion (useful for manual follow-up)
- --analyze-attempt: Analyze failed attempts using TraceAnalyzer after completion
- --generate-summary: Generate a solution summary after completion
- --generate-progress: Generate a progress handoff file (progress.md) for failed/interrupted attempts. This file can be used to resume the attempt later.
- --resume-from: Path to a progress.md file from a previous attempt. The content will be injected into the system prompt to continue from where the previous attempt left off.
- --generate-report: Generate a new report from an existing trace directory
- --strategy: LLM strategy to use (chat, chat_tools, chat_tools_compactation, claude_code, hacksynth, external)
- --model: AI model to use. Supported models include:
  - Claude models: Use exact API model name (e.g., claude-sonnet-4-0, claude-opus-4-0, claude-haiku-4-5-20251001)
  - OpenAI models: gpt-5, gpt-5-nano, gpt-5-mini
  - Other models: deepseek-reasoner, grok-4, gemini-3-flash-preview
  - OpenRouter models: openrouter/company/model (e.g., openrouter/openrouter/free, openrouter/openai/gpt-oss-120b, openrouter/x-ai/grok-4-fast, openrouter/moonshotai/kimi-k2.5)
  - Ollama models: ollama:model-name
- --reasoning-effort: Reasoning effort level for reasoning-capable models (minimal, low, medium, high). Only applies to models that support reasoning like gpt-5, o4-mini, grok-4. Default is medium for reasoning models.
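To make the interaction between the limit options concrete, here is a small Python sketch of how stop conditions and command timeouts could be evaluated. It is not taken from the BoxPwnr source: the `RunLimits` class and the `resolve_timeout` helper are hypothetical names, and the capping behavior (fall back to the default, cap at the maximum) is an assumption based only on the option descriptions and their documented defaults of 30 and 300 seconds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunLimits:
    """Hypothetical container for the per-attempt limits."""

    max_turns: Optional[int] = None    # --max-turns
    max_cost: Optional[float] = None   # --max-cost, in USD
    max_time: Optional[float] = None   # --max-time, in minutes

    def stop_reason(self, turns: int, cost: float, elapsed_minutes: float) -> Optional[str]:
        """Return the name of the first limit reached, or None to continue."""
        if self.max_turns is not None and turns >= self.max_turns:
            return "max_turns"
        if self.max_cost is not None and cost >= self.max_cost:
            return "max_cost"
        if self.max_time is not None and elapsed_minutes >= self.max_time:
            return "max_time"
        return None


def resolve_timeout(requested: Optional[int], default: int = 30, maximum: int = 300) -> int:
    """Pick a command timeout in seconds.

    Assumed behavior: use the default when nothing is requested,
    and never exceed the maximum.
    """
    if requested is None:
        return default
    return max(1, min(requested, maximum))


limits = RunLimits(max_turns=10, max_cost=2.0, max_time=60)
print(limits.stop_reason(turns=4, cost=2.3, elapsed_minutes=12.0))
print(resolve_timeout(900))
```

Unset limits are modeled as `None` so that an attempt with no `--max-cost`, for example, is never stopped on cost.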

The external strategy allows BoxPwnr to delegate to any external tool (Claude Code, Aider, custom scripts, etc.):

- --external-timeout: Timeout for external strategy subprocess in seconds (default: 3600)
- Command after --: The external command to execute (e.g., -- claude -p "$BOXPWNR_PROMPT")

Environment variables available to external tools:

- BOXPWNR_PROMPT: Full system prompt with target information
- BOXPWNR_TARGET_IP: Target connection info (IP/hostname)
- BOXPWNR_CONTAINER: Docker container name (useful for VPN scenarios)
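A custom external tool only needs to read these variables from its environment. The following Python sketch shows one way to do that; the `ExternalContext` class and `read_context` function are hypothetical names, and treating only the prompt as required is an assumption of this example rather than documented behavior.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class ExternalContext:
    prompt: str               # BOXPWNR_PROMPT
    target: Optional[str]     # BOXPWNR_TARGET_IP
    container: Optional[str]  # BOXPWNR_CONTAINER


def read_context(env: Mapping[str, str] = os.environ) -> ExternalContext:
    """Collect the BoxPwnr variables from an environment mapping."""
    prompt = env.get("BOXPWNR_PROMPT")
    if not prompt:
        # Assumption: without a prompt there is nothing for the tool to do.
        raise RuntimeError("BOXPWNR_PROMPT is not set")
    return ExternalContext(
        prompt=prompt,
        target=env.get("BOXPWNR_TARGET_IP"),
        container=env.get("BOXPWNR_CONTAINER"),
    )


# Demo with an explicit mapping; a real tool would call read_context()
# with no arguments to read the process environment.
demo = read_context({
    "BOXPWNR_PROMPT": "Find the flag on 10.129.0.1",
    "BOXPWNR_TARGET_IP": "10.129.0.1",
})
print(demo.target, demo.container)
```

A script built around this could then be passed as the command after `--`, in the same way as the `claude` example above.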

- --executor: Executor to use (default: docker)
- --keep-container: Keep Docker container after completion (faster for multiple attempts)
- --architecture: Container architecture to use (options: default, amd64). Use amd64 to run on Intel/AMD architecture even when on ARM systems like Apple Silicon.
- HTB CTF options:
  - --ctf-id: ID of the CTF event (required when using --platform htb_ctf)
- CTFd options:
  - --ctfd-url: URL of the CTFd instance (required when using --platform ctfd)

# Regular use (container stops after execution)

uv run boxpwnr --platform htb --target meow --debug

# Development mode (keeps container running for faster subsequent runs)

uv run boxpwnr --platform htb --target meow --debug --keep-container

# Run on AMD64 architecture (useful for x86 compatibility on ARM systems like M1/M2 Macs)

uv run boxpwnr --platform htb --target meow --architecture amd64

# Limit the number of turns

uv run boxpwnr --platform htb --target meow --max-turns 10

# Limit the maximum cost

uv run boxpwnr --platform htb --target meow --max-cost 1.5

# Run with multiple attempts for pass@5 benchmarks

uv run boxpwnr --platform htb --target meow --attempts 5

# Use a specific model

uv run boxpwnr --platform htb --target meow --model claude-sonnet-4-0

# Use Claude Haiku 4.5 (fast, cost-effective, and intelligent)

uv run boxpwnr --platform htb --target meow --model claude-haiku-4-5-20251001 --max-cost 0.5

# Use GPT-5-mini (fast and cost-effective)

uv run boxpwnr --platform htb --target meow --model gpt-5-mini --max-cost 1.0

# Use Grok-4 (advanced reasoning model)

uv run boxpwnr --platform htb --target meow --model grok-4 --max-cost 2.0

# Use OpenRouter free tier (auto-routing)

uv run boxpwnr --platform htb --target meow --model openrouter/openrouter/free --max-cost 0.5

# Use gpt-oss-120b via OpenRouter (open-weight 117B MoE model with reasoning)

uv run boxpwnr --platform htb --target meow --model openrouter/openai/gpt-oss-120b --max-cost 1.0

# Use Kimi K2.5 via OpenRouter (Moonshot AI's reasoning model)

uv run boxpwnr --platform htb --target meow --model openrouter/moonshotai/kimi-k2.5 --max-cost 1.0

# Use OpenCode free model (no authentication required)

uv run boxpwnr --platform htb --target meow --model opencode/big-pickle --max-cost 0.5

# Use Claude Code strategy (use CC as agent)

uv run boxpwnr --platform htb --target meow --strategy claude_code --model claude-sonnet-4-0 --max-cost 2.0

# Use HackSynth strategy (autonomous CTF agent with planner-executor-summarizer architecture)

uv run boxpwnr --platform htb --target meow --strategy hacksynth --model gpt-5 --max-cost 1.0

# Use chat_tools_compactation strategy for long-running traces that may exceed context limits

uv run boxpwnr --platform htb --target meow --strategy chat_tools_compactation --model gpt-5 --max-turns 100

# Customize compaction behavior

uv run boxpwnr --platform htb --target meow --strategy chat_tools_compactation --compaction-threshold 0.70 --preserve-last-turns 15

# Generate a new report from existing attempt

uv run boxpwnr --generate-report machines/meow/traces/20250129_180409

# Run a CTF challenge

uv run boxpwnr --platform htb_ctf --ctf-id 1234 --target "Web Challenge"

# Run a CTFd challenge

uv run boxpwnr --platform ctfd --ctfd-url https://ctf.example.com --target "Crypto 101"

# Run with custom instructions

uv run boxpwnr --platform htb --target meow --custom-instructions "Focus on privilege escalation techniques and explain your steps in detail"

# Generate a progress file for a failed attempt (can be resumed later)

uv run boxpwnr --platform htb --target meow --generate-progress --max-turns 20

# Resume from a previous attempt using the generated progress file

uv run boxpwnr --platform htb --target meow --resume-from targets/htb/meow/traces/20250127_120000/progress.md --max-turns 30

# Run XBOW benchmark (automatically clones benchmarks on first use)

uv run boxpwnr --platform xbow --target XBEN-060-24 --model gpt-5 --max-turns 30

# List all available XBOW benchmarks

uv run boxpwnr --platform xbow --list

# Run Cybench challenge (automatically clones repository on first use)

# You can use either the short name or full path

uv run boxpwnr --platform cybench --target "[Very Easy] Dynastic" --model gpt-5 --max-cost 2.0

# Or with full path:

uv run boxpwnr --platform cybench --target "benchmark/hackthebox/cyber-apocalypse-2024/crypto/[Very Easy] Dynastic" --model gpt-5 --max-cost 2.0

# List all available Cybench challenges (40 professional CTF tasks)

uv run boxpwnr --platform cybench --list

# Use external strategy with Claude Code (note: wrap in bash -c with single quotes)

uv run boxpwnr --platform htb --target meow --strategy external -- bash -c 'claude --dangerously-skip-permissions -p "$BOXPWNR_PROMPT"'

# Use external strategy with OpenAI Codex CLI

uv run boxpwnr --platform htb --target meow --strategy external -- bash -c 'codex --yolo "$BOXPWNR_PROMPT"'

# Use external strategy with custom timeout (2 hours)

uv run boxpwnr --platform htb --target meow --strategy external --external-timeout 7200 -- bash -c 'claude --dangerously-skip-permissions -p "$BOXPWNR_PROMPT"'

# Use external strategy inside Docker container (for VPN scenarios)

# When the target requires VPN, run the external tool inside BoxPwnr's Docker container.

# IS_SANDBOX=1 allows --dangerously-skip-permissions to work as root.

uv run boxpwnr --platform htb --target meow --strategy external -- \
  bash -c 'docker exec -e IS_SANDBOX=1 -e ANTHROPIC_API_KEY="$ANTHROPIC_API_KEY" "$BOXPWNR_CONTAINER" claude --dangerously-skip-permissions -p "$BOXPWNR_PROMPT"'

HackTheBox machines provide an excellent end-to-end testing ground for evaluating AI systems because they require:

- Complex reasoning capabilities

- Creative "outside-the-box" thinking

- Understanding of various security concepts

- Ability to chain multiple steps together

- Dynamic problem-solving skills

With recent advancements in LLM technology:

- Models are becoming increasingly sophisticated in their reasoning capabilities

- The cost of running these models is decreasing (see DeepSeek R1 Zero)

- Their ability to understand and generate code is improving

- They're getting better at maintaining context and solving multi-step problems

I believe that within the next few years, LLMs will have the capability to solve most HTB machines autonomously, marking a significant milestone in AI security testing and problem-solving capabilities.

BoxPwnr supports running GitHub Actions workflows locally using [act](https://github.com/nektos/act), which simulates the exact CI environment before pushing to GitHub:

# Install act (macOS)

brew install act

# Run CI workflows locally

make ci-test # Run main test workflow

make ci-integration # Run integration tests (slow - downloads Python each time)

make ci-docker # Run docker build test

make ci-all # Run all workflows

- Visit the wiki for papers, articles and related projects.

This project is for research and educational purposes only. Always follow HackTheBox's terms of service and ethical guidelines when using this tool.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for BoxPwnr

Similar Open Source Tools

BoxPwnr

BoxPwnr is a tool designed to test the performance of different agentic architectures using Large Language Models (LLMs) to autonomously solve HackTheBox machines. It provides a plug and play system with various strategies and platforms supported. BoxPwnr uses an iterative process where LLMs receive system prompts, suggest commands, execute them in a Docker container, analyze outputs, and repeat until the flag is found. The tool automates commands, saves conversations and commands for analysis, and tracks usage statistics. With recent advancements in LLM technology, BoxPwnr aims to evaluate AI systems' reasoning capabilities, creative thinking, security understanding, problem-solving skills, and code generation abilities.

sieves

sieves is a library for zero- and few-shot NLP tasks with structured generation, enabling rapid prototyping of NLP applications without the need for training. It simplifies NLP prototyping by bundling capabilities into a single library, providing zero- and few-shot model support, a unified interface for structured generation, built-in tasks for common NLP operations, easy extendability, document-based pipeline architecture, caching to prevent redundant model calls, and more. The tool draws inspiration from spaCy and spacy-llm, offering features like immediate inference, observable pipelines, integrated tools for document parsing and text chunking, ready-to-use tasks such as classification, summarization, translation, and more, persistence for saving and loading pipelines, distillation for specialized model creation, and caching to optimize performance.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

gpt-computer-assistant

GPT Computer Assistant (GCA) is an open-source framework designed to build vertical AI agents that can automate tasks on Windows, macOS, and Ubuntu systems. It leverages the Model Context Protocol (MCP) and its own modules to mimic human-like actions and achieve advanced capabilities. With GCA, users can empower themselves to accomplish more in less time by automating tasks like updating dependencies, analyzing databases, and configuring cloud security settings.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

orra

Orra is a tool for building production-ready multi-agent applications that handle complex real-world interactions. It coordinates tasks across existing stack, agents, and tools run as services using intelligent reasoning. With features like smart pre-evaluated execution plans, domain grounding, durable execution, and automatic service health monitoring, Orra enables users to go fast with tools as services and revert state to handle failures. It provides real-time status tracking and webhook result delivery, making it ideal for developers looking to move beyond simple crews and agents.

AirGym

AirGym is an open source Python quadrotor simulator based on IsaacGym, providing a high-fidelity dynamics and Deep Reinforcement Learning (DRL) framework for quadrotor robot learning research. It offers a lightweight and customizable platform with strict alignment with PX4 logic, multiple control modes, and Sim-to-Real toolkits. Users can perform tasks such as Hovering, Balloon, Tracking, Avoid, and Planning, with the ability to create customized environments and tasks. The tool also supports training from scratch, visual encoding approaches, playing and testing of trained models, and customization of new tasks and assets.

uzu

uzu is a high-performance inference engine for AI models on Apple Silicon. It features a simple, high-level API, hybrid architecture for GPU kernel computation, unified model configurations, traceable computations, and utilizes unified memory on Apple devices. The tool provides a CLI mode for running models, supports its own model format, and offers prebuilt Swift and TypeScript frameworks for bindings. Users can quickly start by adding the uzu dependency to their Cargo.toml and creating an inference Session with a specific model and configuration. Performance benchmarks show metrics for various models on Apple M2, highlighting the tokens/s speed for each model compared to llama.cpp with bf16/f16 precision.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

Pixel-Reasoner

Pixel Reasoner is a framework that introduces reasoning in the pixel-space for Vision-Language Models (VLMs), enabling them to directly inspect, interrogate, and infer from visual evidences. This enhances reasoning fidelity for visual tasks by equipping VLMs with visual reasoning operations like zoom-in and select-frame. The framework addresses challenges like model's imbalanced competence and reluctance to adopt pixel-space operations through a two-phase training approach involving instruction tuning and curiosity-driven reinforcement learning. With these visual operations, VLMs can interact with complex visual inputs such as images or videos to gather necessary information, leading to improved performance across visual reasoning benchmarks.

ProX

ProX is a lm-based data refinement framework that automates the process of cleaning and improving data used in pre-training large language models. It offers better performance, domain flexibility, efficiency, and cost-effectiveness compared to traditional methods. The framework has been shown to improve model performance by over 2% and boost accuracy by up to 20% in tasks like math. ProX is designed to refine data at scale without the need for manual adjustments, making it a valuable tool for data preprocessing in natural language processing tasks.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

fraim

Fraim is an AI-powered toolkit designed for security engineers to enhance their workflows by leveraging AI capabilities. It offers solutions to find, detect, fix, and flag vulnerabilities throughout the development lifecycle. The toolkit includes features like Risk Flagger for identifying risks in code changes, Code Security Analysis for context-aware vulnerability detection, and Infrastructure as Code Analysis for spotting misconfigurations in cloud environments. Fraim can be run as a CLI tool or integrated into Github Actions, making it a versatile solution for security teams and organizations looking to enhance their security practices with AI technology.

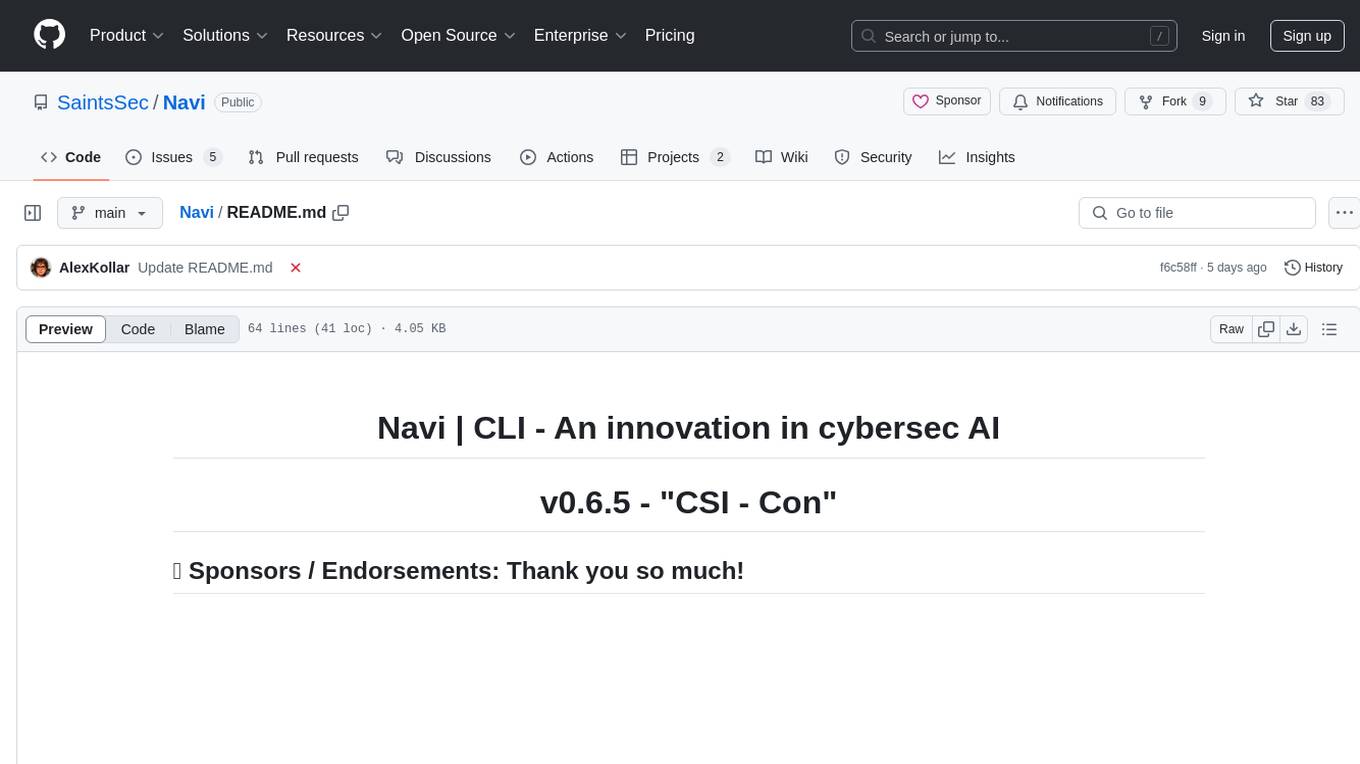

aira-dojo

aira-dojo is a scalable and customizable framework for AI research agents, designed to accelerate hill-climbing on research capabilities toward a fully automated AI research scientist. The framework provides a general abstraction for tasks and agents, implements the MLE-bench task, and includes state-of-the-art agents. It features an isolated code execution environment that integrates smoothly with job schedulers like Slurm, enabling large-scale experiments and rapid iteration across a portfolio of tasks and solvers.

For similar tasks

BoxPwnr

BoxPwnr is a tool designed to test the performance of different agentic architectures using Large Language Models (LLMs) to autonomously solve HackTheBox machines. It provides a plug and play system with various strategies and platforms supported. BoxPwnr uses an iterative process where LLMs receive system prompts, suggest commands, execute them in a Docker container, analyze outputs, and repeat until the flag is found. The tool automates commands, saves conversations and commands for analysis, and tracks usage statistics. With recent advancements in LLM technology, BoxPwnr aims to evaluate AI systems' reasoning capabilities, creative thinking, security understanding, problem-solving skills, and code generation abilities.

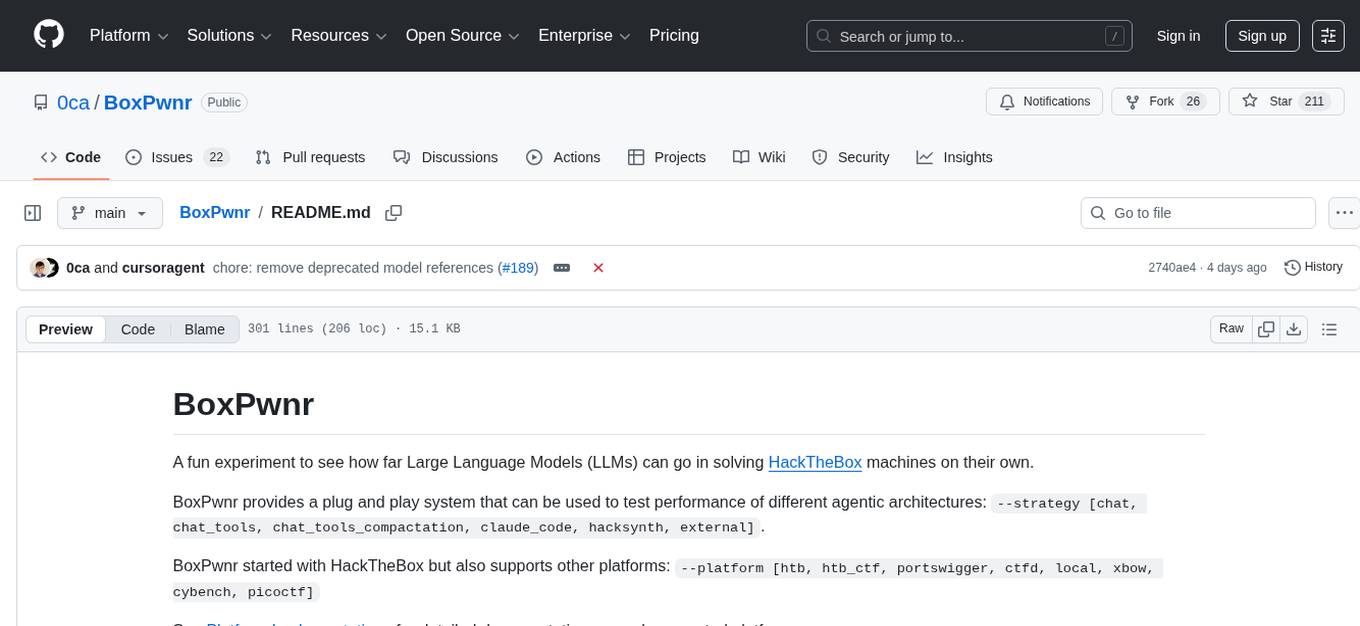

Navi

Navi is a CLI tool that revolutionizes cybersecurity with AI capabilities. It features an upgraded shell for executing system commands seamlessly, custom scripts with alias variables, and a dedicated Nmap chip. The tool is in constant development with plans for a Navi AI model, transparent data handling, and integration with Llama3.2 AI. Navi is open-source, fostering collaborative innovation in AI and cybersecurity domains.

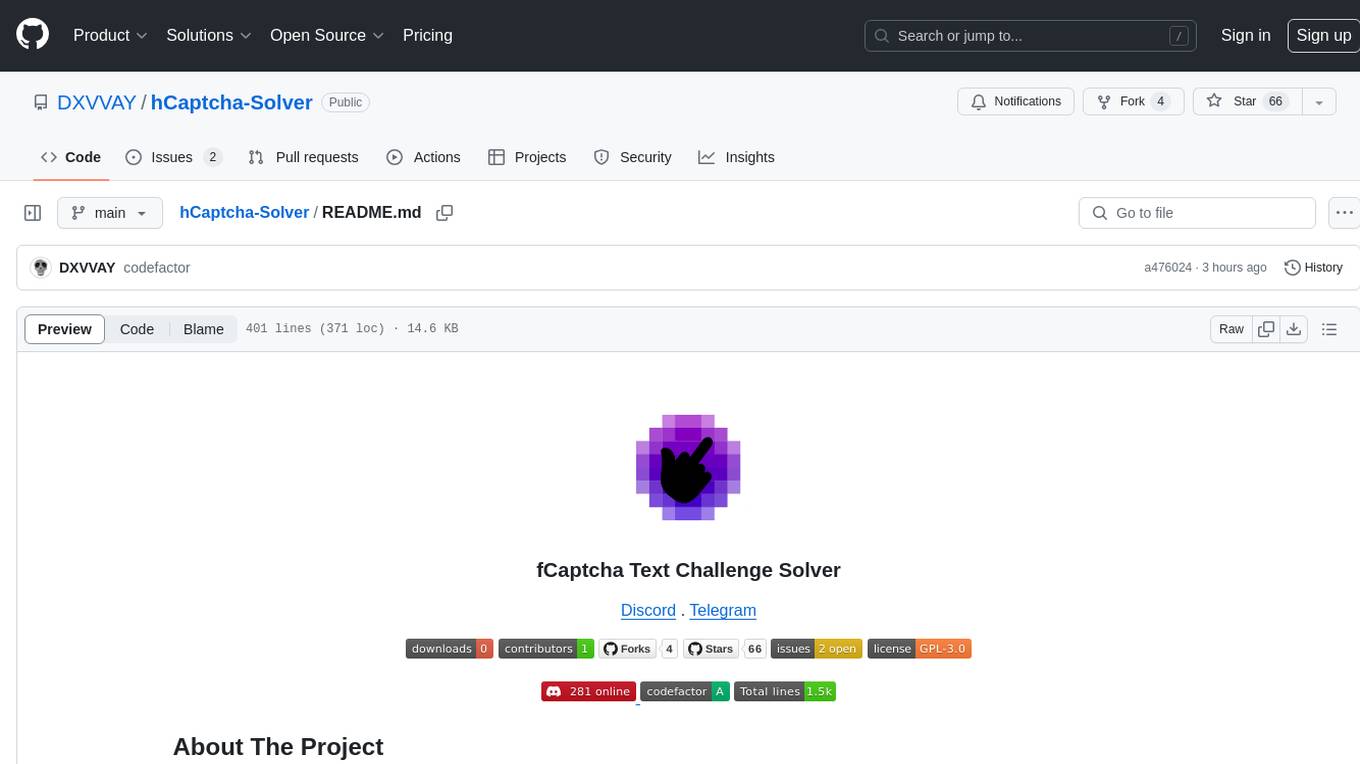

hCaptcha-Solver

hCaptcha-Solver is an AI-based hcaptcha text challenge solver that utilizes the playwright module to generate the hsw N data. It can solve any text challenge without any problem, but may be flagged on some websites like Discord. The tool requires proxies since hCaptcha also rate limits. Users can run the 'hsw_api.py' before running anything and then integrate the usage shown in 'main.py' into their projects that require hCaptcha solving. Please note that this tool only works on sites that support hCaptcha text challenge.

AlgoListed

Algolisted is a pioneering platform dedicated to algorithmic problem-solving, offering a centralized hub for a diverse array of algorithmic challenges. It provides an immersive online environment for programmers to enhance their skills through Data Structures and Algorithms (DSA) sheets, academic progress tracking, resume refinement with OpenAI integration, adaptive testing, and job opportunity listings. The project is built on the MERN stack, Flask, Beautiful Soup, and Selenium,GEN AI, and deployed on Firebase. Algolisted aims to be a reliable companion in the pursuit of coding knowledge and proficiency.

SWE-agent

SWE-agent is a tool that allows language models to autonomously fix issues in GitHub repositories, perform tasks on the web, find cybersecurity vulnerabilities, and handle custom tasks. It uses configurable agent-computer interfaces (ACIs) to interact with isolated computer environments. The tool is built and maintained by researchers from Princeton University and Stanford University.

Upscaler

Holloway's Upscaler is a consolidation of various compiled open-source AI image/video upscaling products for a CLI-friendly image and video upscaling program. It provides low-cost AI upscaling software that can run locally on a laptop, programmable for albums and videos, reliable for large video files, and works without GUI overheads. The repository supports hardware testing on various systems and provides important notes on GPU compatibility, video types, and image decoding bugs. Dependencies include ffmpeg and ffprobe for video processing. The user manual covers installation, setup pathing, calling for help, upscaling images and videos, and contributing back to the project. Benchmarks are provided for performance evaluation on different hardware setups.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

SWE-bench-Live

SWE-bench-Live is a live benchmark dataset for evaluating AI systems' ability to complete real-world software engineering tasks. It is continuously updated through an automated curation pipeline, providing the community with up-to-date task instances for rigorous and contamination-free evaluation. The dataset is designed to test the performance of various AI models on software engineering tasks and supports multiple programming languages and operating systems.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.