Best AI tools for Troubleshoot Dependencies

20 - AI tool Sites

Inkdrop

Inkdrop is an AI-powered tool that helps users visualize their cloud infrastructure by automatically generating interactive diagrams of cloud resources and dependencies. It provides a comprehensive overview of infrastructure, aids in understanding complex resource relationships, and integrates with CI pipelines to keep documentation up to date.

403 Forbidden Resolver

The website is currently displaying a '403 Forbidden' error, which means that the server understood the request but refuses to authorize it. Possible causes include insufficient permissions, server misconfiguration, or a problem with the client's request. The 'openresty' message indicates that the server is running the OpenResty web platform. The issue must be resolved before the website can be accessed again.

Highcountry Toyota Internet Connection Troubleshooter

Highcountrytoyota.stage.autogo.ai is an AI tool designed to provide assistance and support for troubleshooting internet connection issues. The website offers guidance on resolving connection problems, including checking network settings, firewall configurations, and proxy server issues. Users can find step-by-step instructions and tips to troubleshoot and fix connection errors. The platform aims to help users quickly identify and resolve connectivity issues to ensure seamless internet access.

Web Server Error Resolver

The website is currently displaying a '403 Forbidden' error, which is returned when the server understands the request made by the client but refuses to fulfill it. The 'openresty' mentioned in the text is likely the web server software in use. The 403 Forbidden error must be resolved to regain access to the website's content.

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

Webb.ai

Webb.ai is an AI-powered platform that offers automated troubleshooting for Kubernetes. It is designed to assist users in identifying and resolving issues within their Kubernetes environment efficiently. By leveraging AI technology, Webb.ai provides insights and recommendations to streamline the troubleshooting process, ultimately improving system reliability and performance. The platform is user-friendly and caters to both beginners and experienced users in the field of Kubernetes management.

Mavenoid

Mavenoid is an AI-powered product support tool that offers automated product support services, including product selection advice, troubleshooting solutions, replacement part ordering, and more. The platform is designed to understand complex questions and provide step-by-step instructions to guide users through various product-related processes. Mavenoid is trusted by leading product companies and focuses on resolving customer questions efficiently. The tool optimizes help centers for SEO, offers product insights to increase revenue, and provides support in multiple languages. It is known for reducing incoming inquiries and offering a seamless support experience.

403 Forbidden

The website seems to be experiencing a 403 Forbidden error. '403 Forbidden' is a standard HTTP status code indicating that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or misconfigured server settings. Users encountering this error may need to contact the website administrator or webmaster for assistance in resolving the issue.
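
As a rough illustration of the status codes that recur across these listings, the sketch below uses Python's standard `http.HTTPStatus` enum to label a code as a client or server error. The helper name `describe_status` is hypothetical, and the fallback branch assumes that codes such as 525 are vendor-specific and therefore absent from the standard enum.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return a short label for an HTTP status code (hypothetical helper)."""
    try:
        status = HTTPStatus(code)
    except ValueError:
        # Codes outside the standard registry, e.g. Cloudflare's 525.
        return f"{code} (non-standard)"
    if 400 <= code < 500:
        kind = "client error"
    elif 500 <= code < 600:
        kind = "server error"
    else:
        kind = "other"
    return f"{code} {status.phrase} ({kind})"


for code in (403, 404, 500, 525):
    print(describe_status(code))
```

Grouping by hundreds range mirrors how HTTP classes its responses: 4xx codes such as 403 and 404 point at the request or its permissions, while 5xx codes point at the server.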

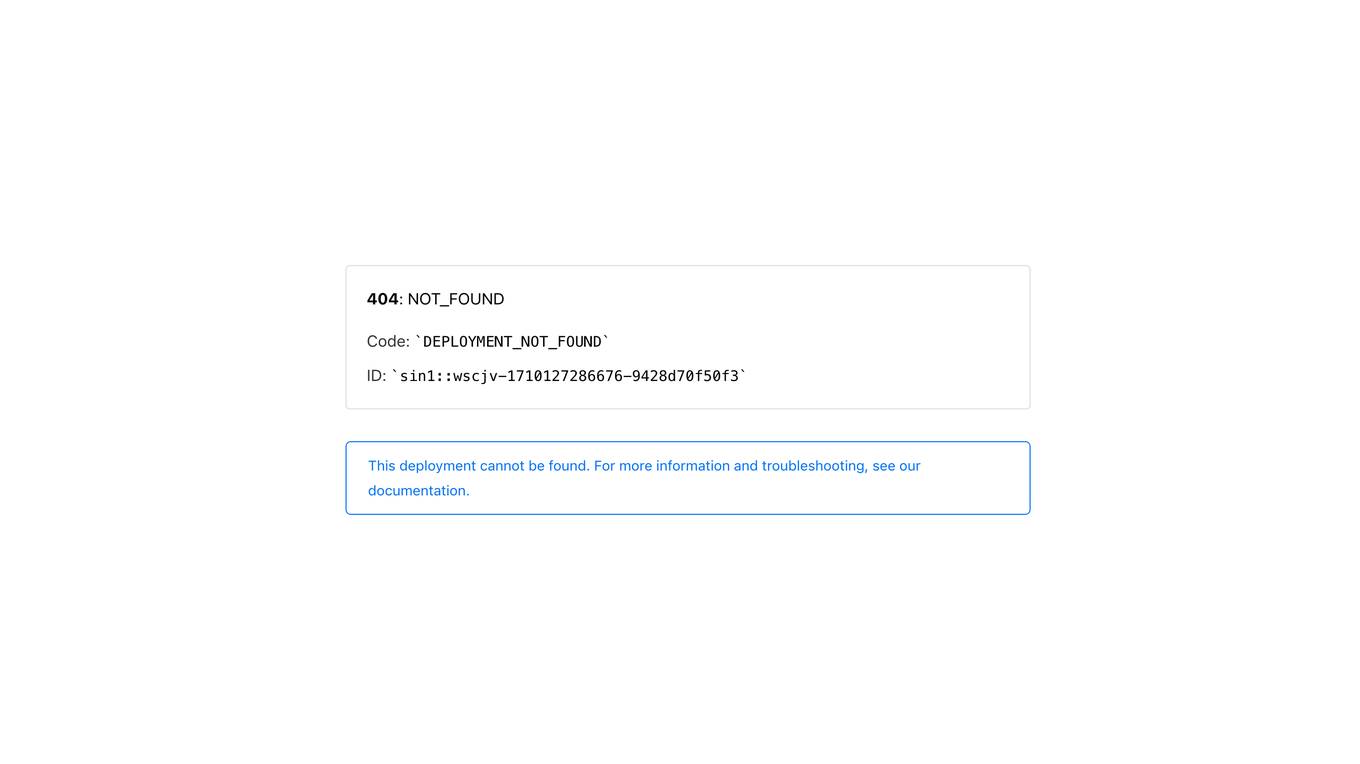

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::spxmx-1740329113552-88538b58eccb) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Page Not Found

The website displays a '404 page not found' error message, indicating that the requested webpage could not be found on the server. 404 is a standard HTTP response code indicating that the client was able to communicate with the server, but the server could not find what was requested.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::22md2-1720772812453-4893618e160a) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website page displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::4wq5g-1718736845999-777f28b346ca) for reference. Users are advised to consult the documentation for further information and troubleshooting.

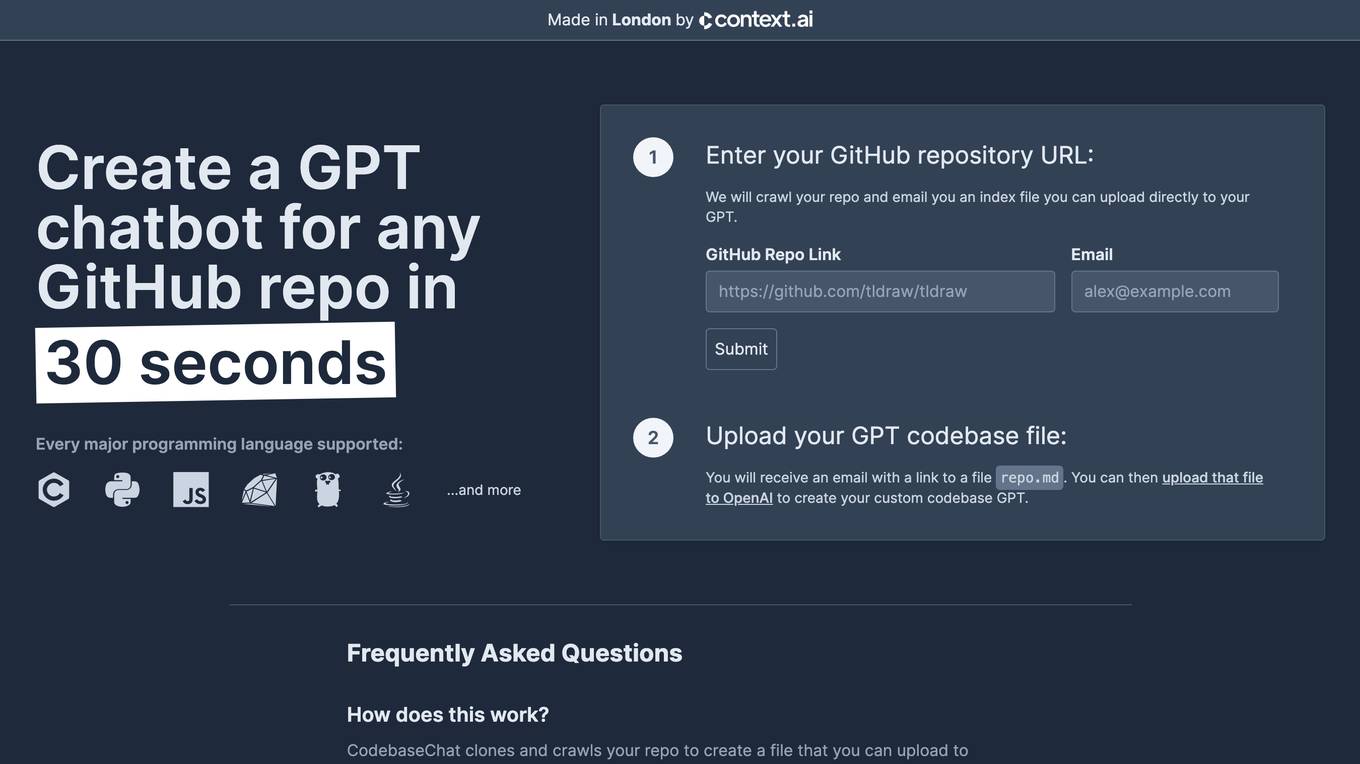

Codebase Chat

The website codebasechat.com seems to be inaccessible at the moment, displaying an 'Access Denied' message. It appears to be related to a domain sale on GoDaddy. The error message suggests that the user does not have permission to access the page. The reference number provided may be useful for troubleshooting the issue. It seems like the website is encountering a server-side error preventing access to the content.

faye.xyz

faye.xyz is a website that encountered an SSL handshake failed error, preventing Cloudflare from establishing a secure connection to the origin server. The error code 525 indicates an issue with the SSL configuration, possibly due to no shared cipher suites. Visitors are advised to try again later, while website owners may need to troubleshoot the SSL setup. Cloudflare provides performance and security services for websites.
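
To make the "no shared cipher suites" cause more concrete, here is a minimal sketch that compares two lists of cipher names. The `shared_ciphers` helper and the `origin` list are hypothetical; only `ssl.create_default_context().get_ciphers()` is a standard-library call. A real TLS handshake also depends on protocol versions and server preferences, so this only illustrates the idea of an empty intersection.

```python
import ssl


def shared_ciphers(client, server):
    """Return cipher names offered by both sides, keeping the client's order."""
    server_set = set(server)
    return [name for name in client if name in server_set]


# Cipher names the local Python/OpenSSL build offers by default.
local = [c["name"] for c in ssl.create_default_context().get_ciphers()]

# Hypothetical origin server that only accepts one legacy suite.
origin = ["DES-CBC3-SHA"]

print(shared_ciphers(local, origin) or "no shared cipher suites")
```

An empty result corresponds to the situation the error page describes: neither side offers a suite the other accepts, so the handshake cannot complete.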

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. Users are directed to refer to the documentation for more information and troubleshooting.

Error 404 Not Found

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::t6mdp-1736442717535-3a5d4eeaf597'. Users are directed to refer to the documentation for further information and troubleshooting.

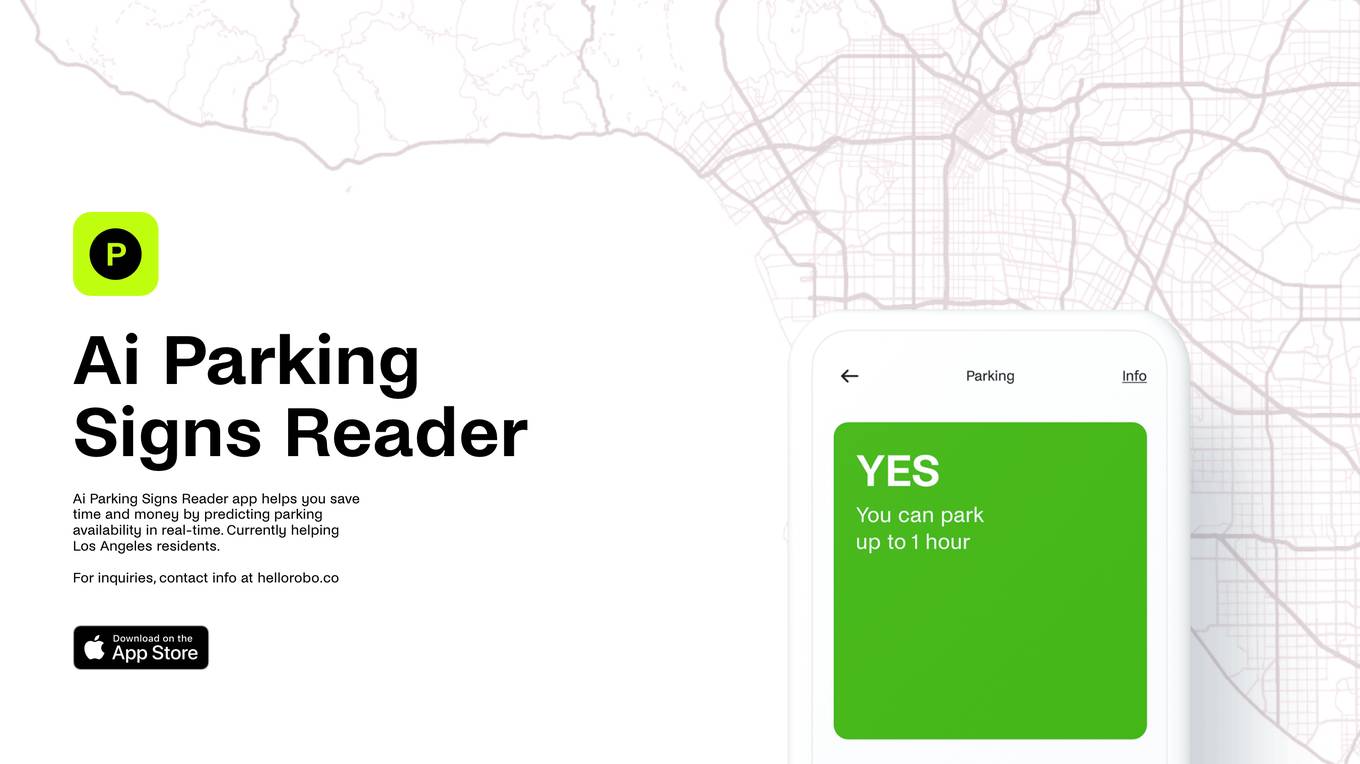

Parking Reader

The website 'parkingreader.com' appears to be inaccessible, showing an 'Access Denied' message. It seems like the user does not have permission to access the content on the server. The error message references a specific server code. The website may be related to domain parking or domain sales, as it mentions 'forsale' in the URL. The error message indicates that the server is denying access to the requested page. It is unclear why the access is denied, and further investigation or contacting the website owner may be necessary to resolve the issue.

Internal Server Error

The website encountered an internal server error, resulting in a 500 Internal Server Error message. This error indicates that the server faced an issue preventing it from fulfilling the request. Possible causes include server overload or errors within the application.

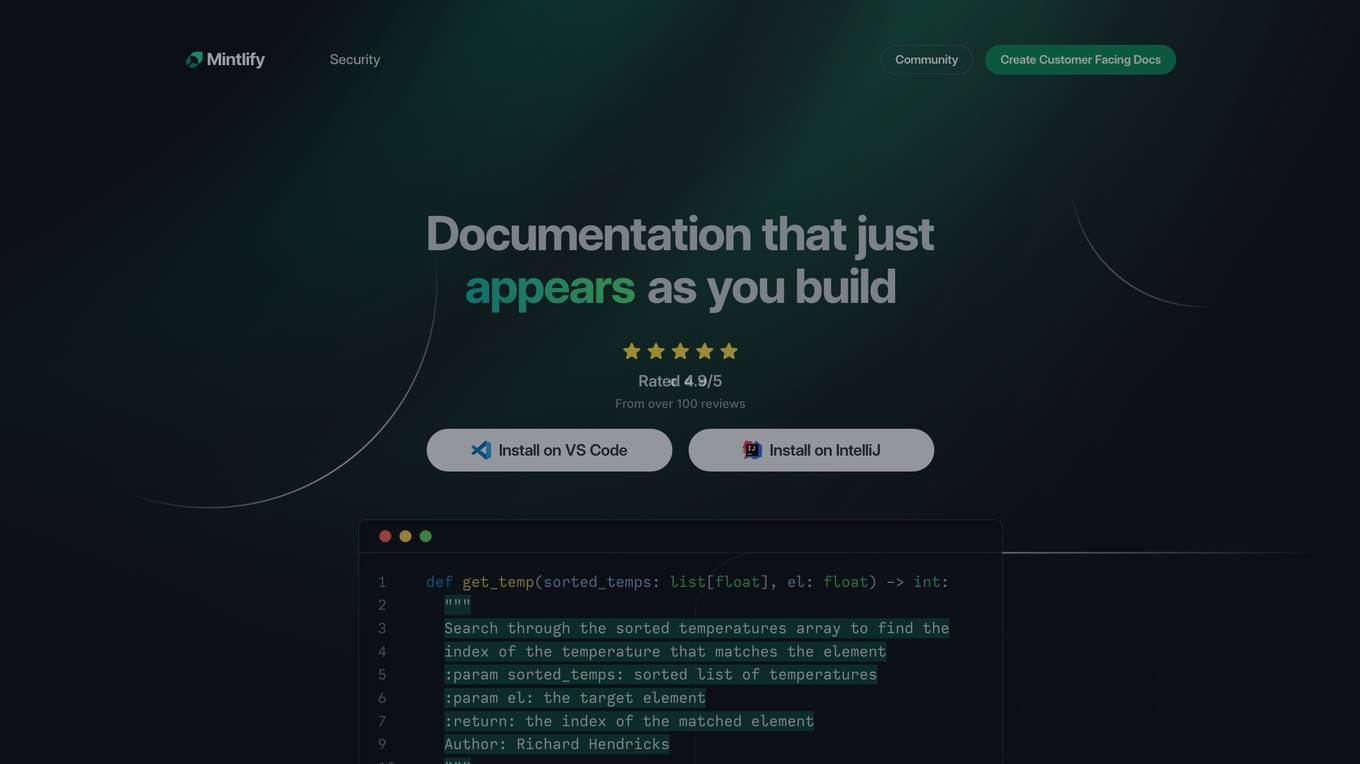

Mintlify

The website writer.mintlify.com encountered an SSL handshake failed error with code 525. This error occurred due to Cloudflare being unable to establish an SSL connection to the origin server. The issue may be related to incompatible SSL configurations or no shared cipher suites. Visitors are advised to try again in a few minutes, while website owners can refer to troubleshooting information on Cloudflare's website. The error message includes details such as the Cloudflare Ray ID and the visitor's IP address.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::zdhct-1723140771934-b5e5ad909fad'. Users are directed to refer to the documentation for further information and troubleshooting.

20 - Open Source AI Tools

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.
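
The idea of running tasks in dependency order can be sketched with Python's standard `graphlib` module. This is not Airflow's API; the task names and the `execution_order` helper are hypothetical, and the sketch only shows how a directed acyclic graph determines a valid run order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load", "transform"},
}


def execution_order(deps):
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())


print(execution_order(dependencies))
```

A scheduler built on this idea would start a task only after every task it depends on has finished, and a cycle in the graph is rejected up front instead of causing a deadlock at run time.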

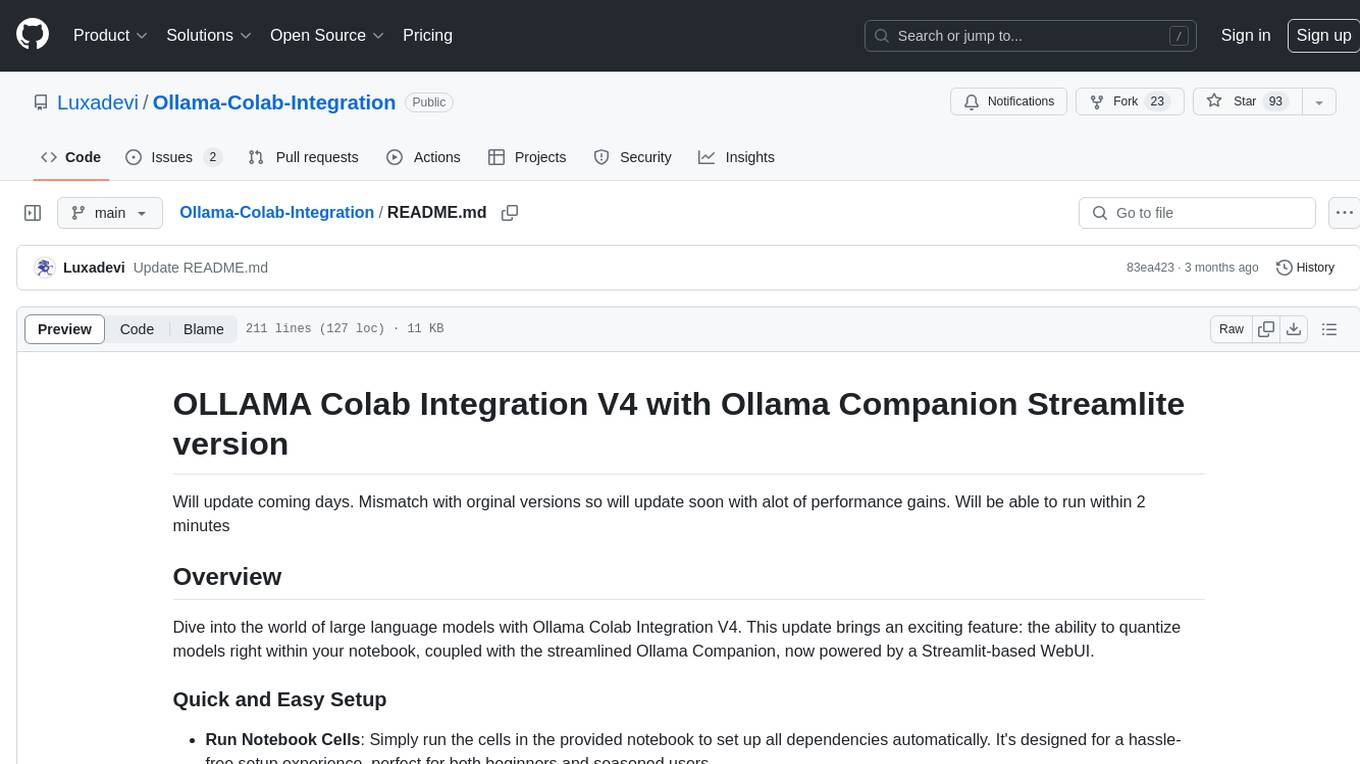

Ollama-Colab-Integration

Ollama Colab Integration V4 is a tool designed to enhance the interaction and management of large language models. It allows users to quantize models within their notebook environment, access a variety of models through a user-friendly interface, and manage public endpoints efficiently. The tool also provides features like LiteLLM proxy control, model insights, and customizable model file templating. Users can troubleshoot model loading issues, CPU fallback strategies, and manage VRAM and RAM effectively. Additionally, the tool offers functionalities for downloading model files from Hugging Face, model conversion with high precision, model quantization using Q and Kquants, and securely uploading converted models to Hugging Face.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

dream-textures

Dream Textures is a tool integrated into Blender that allows users to create textures, concept art, background assets, and more using simple text prompts. It offers features like seamless texture creation, texture projection for entire scenes, restyling animations, and running models on the user's machine for faster iteration. The tool supports CUDA and Apple Silicon GPUs, with over 4GB of VRAM recommended. Users can troubleshoot issues by checking Blender's system console or seeking help from the community on Discord.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

QuestCameraKit

QuestCameraKit is a collection of template and reference projects demonstrating how to use Meta Quest’s new Passthrough Camera API (PCA) for advanced AR/VR vision, tracking, and shader effects. It includes samples like Color Picker, Object Detection with Unity Sentis, QR Code Tracking with ZXing, Frosted Glass Shader, OpenAI vision model, and WebRTC video streaming. The repository provides detailed instructions on how to run each sample and troubleshoot known issues. Users can explore various functionalities such as converting 3D points to 2D image pixels, detecting objects, tracking QR codes, applying custom shader effects, interacting with OpenAI's vision model, and streaming camera feed over WebRTC.

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

Auto_Jobs_Applier_AIHawk

Auto_Jobs_Applier_AIHawk is an AI-powered job search assistant that revolutionizes the job search and application process. It automates application submissions, provides personalized recommendations, and enhances the chances of landing a dream job. The tool offers features like intelligent job search automation, rapid application submission, AI-powered personalization, volume management with quality, intelligent filtering, dynamic resume generation, and secure data handling. It aims to address the challenges of modern job hunting by saving time, increasing efficiency, and improving application quality.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, macOS, and Termux for a responsive experience.

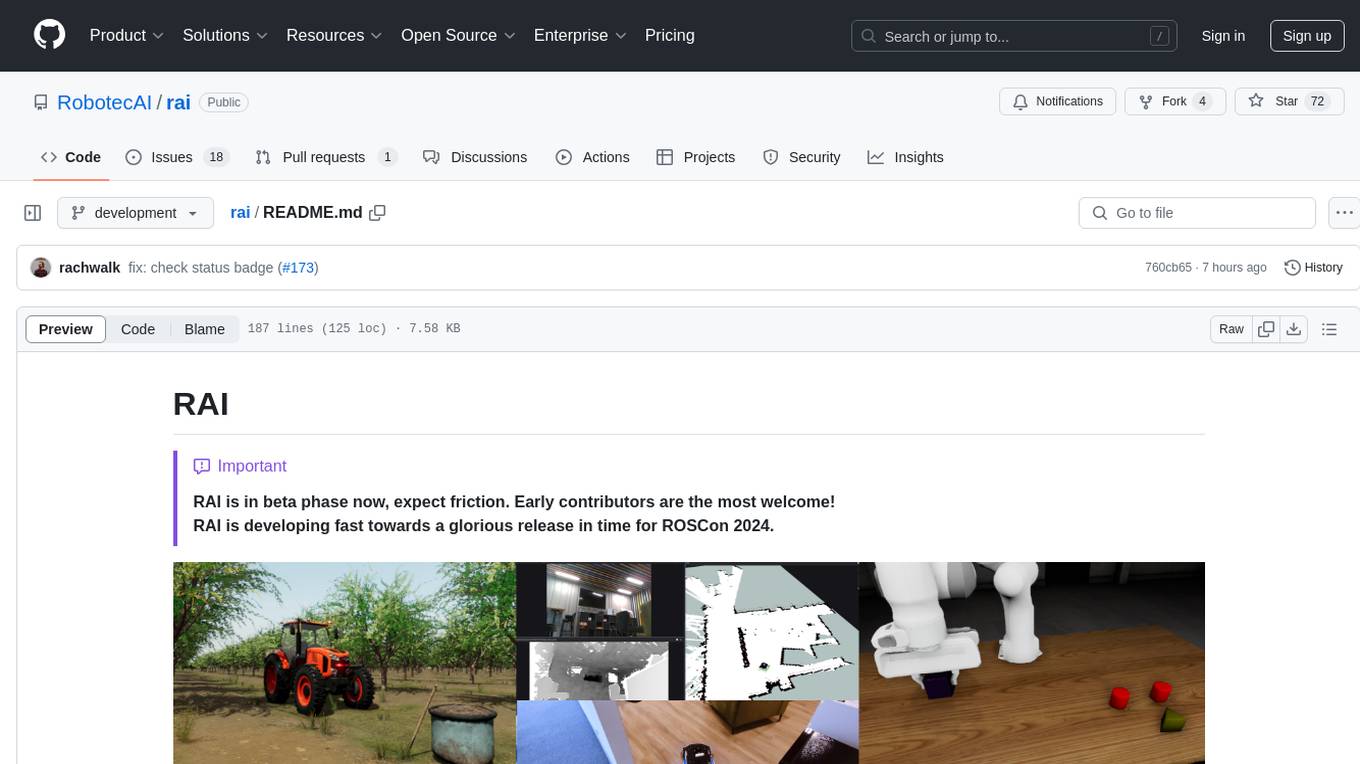

rai

RAI is a framework designed to bring general multi-agent system capabilities to robots, enhancing human interactivity, flexibility in problem-solving, and out-of-the-box AI features. It supports multi-modalities, incorporates an advanced database for agent memory, provides ROS 2-oriented tooling, and offers a comprehensive task/mission orchestrator. The framework includes features such as voice interaction, customizable robot identity, camera sensor access, reasoning through ROS logs, and integration with LangChain for AI tools. RAI aims to support various AI vendors, improve human-robot interaction, provide an SDK for developers, and offer a user interface for configuration.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

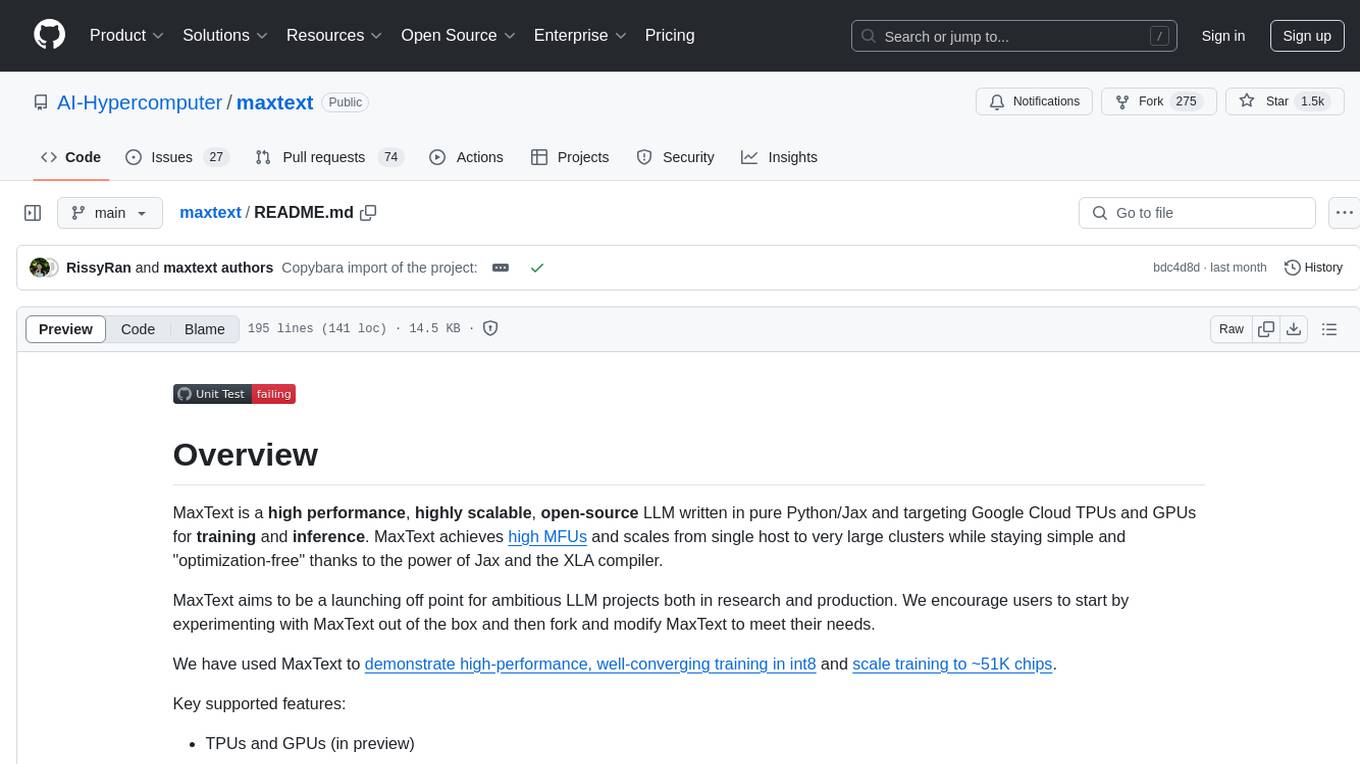

maxtext

MaxText is a high-performance, highly scalable, open-source LLM written in pure Python/Jax and targeting Google Cloud TPUs and GPUs for training and inference. MaxText achieves high MFUs and scales from single host to very large clusters while staying simple and "optimization-free" thanks to the power of Jax and the XLA compiler. MaxText aims to be a launching off point for ambitious LLM projects both in research and production. We encourage users to start by experimenting with MaxText out of the box and then fork and modify MaxText to meet their needs.

obsidian-smart-connections

Smart Connections is an AI-powered plugin for Obsidian that helps you discover hidden connections and insights in your notes. With features like Smart View for real-time relevant note suggestions and Smart Chat for chatting with your notes, Smart Connections makes it easier than ever to stay organized and uncover hidden connections between your notes. Its intuitive interface and customizable settings ensure a seamless experience, tailored to your unique needs and preferences.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

codecompanion.nvim

CodeCompanion.nvim is a Neovim plugin that provides a Copilot Chat experience, adapter support for various LLMs, agentic workflows, inline code creation and modification, built-in actions for language prompts and error fixes, custom actions creation, async execution, and more. It supports Anthropic, Ollama, and OpenAI adapters. The plugin is primarily developed for personal workflows with no guarantees of regular updates or support. Users can customize the plugin to their needs by forking the project.

maxtext

MaxText is a high performance, highly scalable, open-source Large Language Model (LLM) written in pure Python/Jax targeting Google Cloud TPUs and GPUs for training and inference. It aims to be a launching off point for ambitious LLM projects in research and production, supporting TPUs and GPUs, models like Llama2, Mistral, and Gemma. MaxText provides specific instructions for getting started, runtime performance results, comparison to alternatives, and features like stack trace collection, ahead of time compilation for TPUs and GPUs, and automatic upload of logs to Vertex Tensorboard.

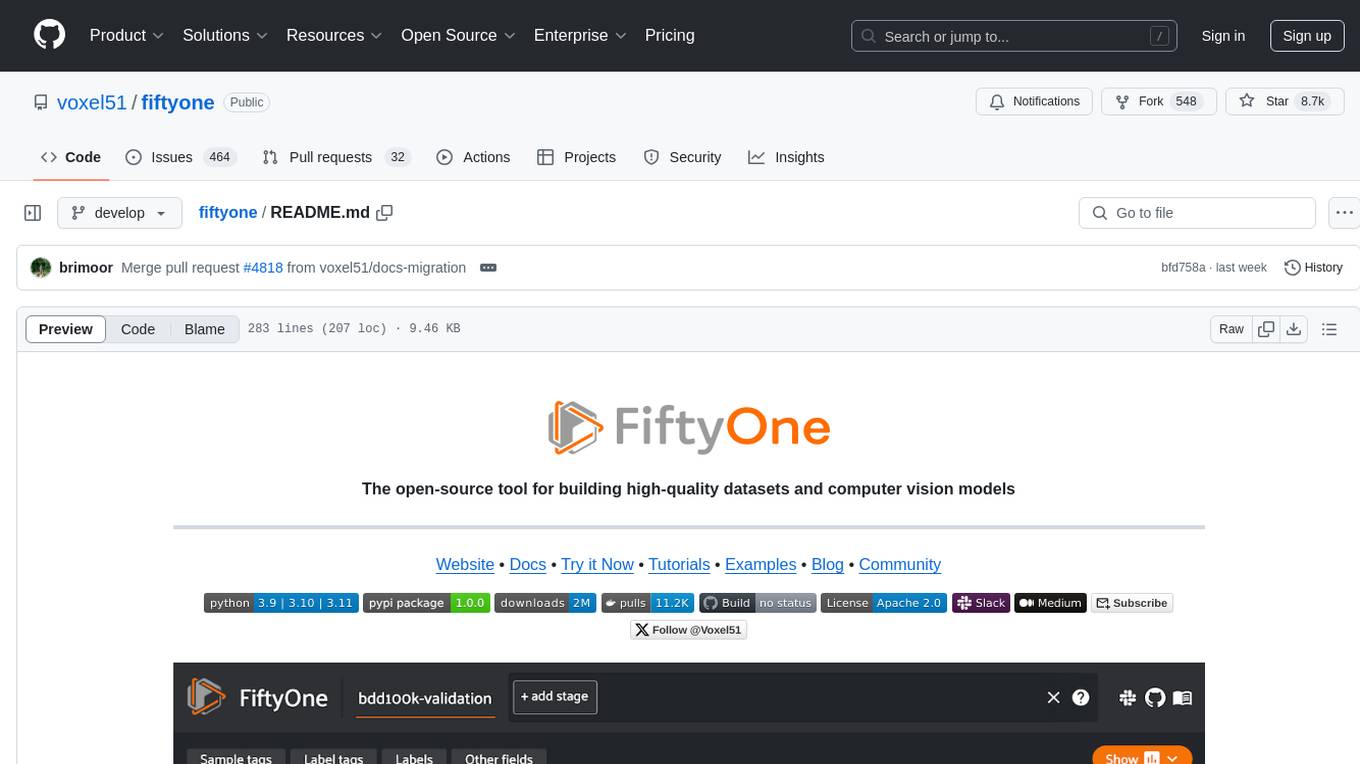

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

20 - OpenAI Gpts

CDR

Explore call detail records (CDR) for a variety of PBX platforms including Avaya, Mitel, NEC, and others with this UC trained GPT. Use specific commands to help you expertly navigate and troubleshoot CDR from diverse UC environments.

Logic Pro - Talk to the Manual

I'm Logic Pro X's manual. Let me answer your questions, troubleshoot whatever issue you're having and get you back into the groove!

Pi Pico + Micropython Assistant

An advanced virtual assistant specializing in the Raspberry Pi Pico and MicroPython. Designed to offer expert advice, troubleshoot code, and provide detailed guidance.

3D Print Diagnostics Expert

Expert in 3D printing diagnostics and problem resolution, mindful of confidentiality and careful with brand usage.

MacExpert

An assistant replying to any question related to the Mac platform: macOS, computers and apps. Visit macexpert.io for human assistance.

Aws Guru

Your friendly coworker in AWS troubleshooting, offering precise, bullet-point advice. Leave feedback: https://dlmdby03vet.typeform.com/to/VqWNt8Dh

Tech Senior Helper

Warm tech support for seniors, with calming strategies, patient and helpful.

GC Method Developer

Provides concise GC troubleshooting and method development advice that is easy to implement.