Auto_Jobs_Applier_AIHawk

Auto_Jobs_Applier_AIHawk is a tool that automates the job application process. Using artificial intelligence, it enables users to apply for multiple job offers in an automated and personalized way.

Stars: 17633

Auto_Jobs_Applier_AIHawk is an AI-powered job search assistant that revolutionizes the job search and application process. It automates application submissions, provides personalized recommendations, and enhances the chances of landing a dream job. The tool offers features like intelligent job search automation, rapid application submission, AI-powered personalization, volume management with quality, intelligent filtering, dynamic resume generation, and secure data handling. It aims to address the challenges of modern job hunting by saving time, increasing efficiency, and improving application quality.

README:

🤖🔍 Your AI-powered job search assistant. Automate applications, get personalized recommendations, and land your dream job faster.

Connect with like-minded individuals and get the most out of AIHawk.

💡 Get support: Ask questions, troubleshoot issues, and find solutions.

🗣️ Share knowledge: Share your experiences, tips, and best practices.

🤝 Network: Connect with other professionals and explore new opportunities.

🔔 Stay updated: Get the latest news and updates on AIHawk.

- Introduction

- Features

- Installation

- Configuration

- Usage

- Documentation

- Troubleshooting

- Conclusion

- Contributors

- License

- Disclaimer

Auto_Jobs_Applier_AIHawk is a cutting-edge, automated tool designed to revolutionize the job search and application process. In today's fiercely competitive job market, where opportunities can vanish in the blink of an eye, this program offers job seekers a significant advantage. By leveraging the power of automation and artificial intelligence, Auto_Jobs_Applier_AIHawk enables users to apply to a vast number of relevant positions efficiently and in a personalized manner, maximizing their chances of landing their dream job.

In the digital age, the job search landscape has undergone a dramatic transformation. While online platforms have opened up a world of opportunities, they have also intensified competition. Job seekers often find themselves spending countless hours scrolling through listings, tailoring applications, and repetitively filling out forms. This process can be not only time-consuming but also emotionally draining, leading to job search fatigue and missed opportunities.

Auto_Jobs_Applier_AIHawk steps in as a game-changing solution to these challenges. It's not just a tool; it's your tireless, 24/7 job search partner. By automating the most time-consuming aspects of the job search process, it allows you to focus on what truly matters - preparing for interviews and developing your professional skills.

Intelligent Job Search Automation

- Customizable search criteria

- Continuous scanning for new openings

- Smart filtering to exclude irrelevant listings

Rapid and Efficient Application Submission

- One-click applications

- Form auto-fill using your profile information

- Automatic document attachment (resume, cover letter)

AI-Powered Personalization

- Dynamic response generation for employer-specific questions

- Tone and style matching to fit company culture

- Keyword optimization for improved application relevance

Volume Management with Quality

- Bulk application capability

- Quality control measures

- Detailed application tracking

Intelligent Filtering and Blacklisting

- Company blacklist to avoid unwanted employers

- Title filtering to focus on relevant positions

Dynamic Resume Generation

- Automatically creates tailored resumes for each application

- Customizes resume content based on job requirements

Secure Data Handling

- Manages sensitive information securely using YAML files

Confirmed successful runs on the following:

- Operating Systems:

  - Windows 10

  - Ubuntu 22

- Python versions:

  - 3.10

  - 3.11.9 (64-bit)

  - 3.12.5 (64-bit)

Download and Install Python:

Ensure you have the latest Python version installed. If not, download and install it from Python's official website. For detailed instructions, refer to the tutorials:

Download and Install Google Chrome:

- Download and install the latest version of Google Chrome in its default location from the official website.

Clone the repository:

    git clone https://github.com/feder-cr/Auto_Jobs_Applier_AIHawk.git
    cd Auto_Jobs_Applier_AIHawk

Create and activate a virtual environment:

    python3 -m venv virtual
    source virtual/bin/activate

or for Windows-based machines:

    .\virtual\Scripts\activate

Install the required packages:

    pip install -r requirements.txt

The secrets.yaml file contains sensitive information. Never share or commit this file to version control.

llm_api_key: [Your OpenAI or Ollama API key or Gemini API key]

- Replace with your OpenAI API key for GPT integration

- To obtain an API key, follow the tutorial at: https://medium.com/@lorenzozar/how-to-get-your-own-openai-api-key-f4d44e60c327

- Note: You need to add credit to your OpenAI account to use the API. You can add credit by visiting the OpenAI billing dashboard.

- According to the OpenAI community and our users' reports, right after setting up the OpenAI account and purchasing the required credits, users still have a Free account type. This prevents them from having unlimited access to OpenAI models and allows only 200 requests per day. This might cause runtime errors such as:

    Error code: 429 - {'error': {'message': 'You exceeded your current quota, please check your plan and billing details. ...}}

    {'error': {'message': 'Rate limit reached for gpt-4o-mini in organization <org> on requests per day (RPD): Limit 200, Used 200, Requested 1.}}

- OpenAI will update your account automatically, but it might take some time, ranging from a couple of hours to a few days.

- You can find more about your organization limits on the official page.

- To obtain a Gemini API key, visit Google AI for Devs.

The config.yaml file defines your job search parameters and bot behavior. Each section contains options that you can customize:

remote: [true/false]

- Set to true to include remote jobs, false to exclude them

experienceLevel:

- Set desired experience levels to true, others to false

jobTypes:

- Set desired job types to true, others to false

date:

- Choose one time range for job postings by setting it to true, others to false

positions:

- List job titles you're interested in, one per line

- Example:

    positions:
      - Software Developer
      - Data Scientist

locations:

- List locations you want to search in, one per line

- Example:

    locations:
      - Italy
      - London

apply_once_at_company: [True/False]

- Set to True to apply only once per company, False to allow multiple applications per company

distance: [number]

- Set the radius for your job search in miles

- Example:

    distance: 50

companyBlacklist:

- List companies you want to exclude from your search, one per line

- Example:

    companyBlacklist:
      - Company X
      - Company Y

titleBlacklist:

- List keywords in job titles you want to avoid, one per line

- Example:

    titleBlacklist:
      - Sales
      - Marketing

llm_model_type:

- Choose the model type, supported: openai / ollama / claude / gemini

llm_model:

- Choose the LLM model, currently supported:

  - openai: gpt-4o

  - ollama: llama2, mistral:v0.3

  - claude: any model

  - gemini: any model

llm_api_url:

- Link of the API endpoint for the LLM model

  - openai: https://api.pawan.krd/cosmosrp/v1

  - ollama: http://127.0.0.1:11434/

  - claude: https://api.anthropic.com/v1

  - gemini: no api_url

- Note: To run local Ollama, follow the guidelines here: Guide to Ollama deployment
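The blacklist and once-per-company options above can be pictured as a simple filter over job listings. The following is a minimal sketch and not AIHawk's actual implementation: `SearchFilters`, `should_apply`, and the matching rules (exact case-insensitive company match, case-insensitive keyword match inside the title) are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SearchFilters:
    """Hypothetical holder for a few config.yaml options, already parsed."""
    company_blacklist: list[str] = field(default_factory=list)
    title_blacklist: list[str] = field(default_factory=list)
    apply_once_at_company: bool = True


def should_apply(title: str, company: str, filters: SearchFilters,
                 already_applied: set[str]) -> bool:
    """Return True if a listing passes the blacklist and once-per-company rules.

    `already_applied` holds lower-cased names of companies applied to so far.
    """
    company_key = company.strip().lower()
    # Assumed rule: exact, case-insensitive match on blacklisted company names.
    if company_key in {c.strip().lower() for c in filters.company_blacklist}:
        return False
    # Assumed rule: any blacklisted keyword appearing anywhere in the title.
    title_lower = title.lower()
    if any(word.lower() in title_lower for word in filters.title_blacklist):
        return False
    # Skip companies that already received an application, if configured.
    if filters.apply_once_at_company and company_key in already_applied:
        return False
    return True


filters = SearchFilters(
    company_blacklist=["Company X", "Company Y"],
    title_blacklist=["Sales", "Marketing"],
)
applied = {"acme"}
print(should_apply("Software Developer", "Initech", filters, applied))  # True
print(should_apply("Sales Engineer", "Initech", filters, applied))      # False
```

Substring matching on titles is deliberately broad: a keyword such as Sales would also exclude "Sales Engineer". Whether the bot matches this way is not stated here, so treat the sketch only as a way to reason about what to put in the two lists.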

The plain_text_resume.yaml file contains your resume information in a structured format. Fill it out with your personal details, education, work experience, and skills. This information is used to auto-fill application forms and generate customized resumes.

Each section has specific fields to fill out:

personal_information:

This section contains basic personal details to identify yourself and provide contact information.

- name: Your first name.

- surname: Your last name or family name.

- date_of_birth: Your birth date in the format DD/MM/YYYY.

- country: The country where you currently reside.

- city: The city where you currently live.

- address: Your full address, including street and number.

- zip_code: Your postal/ZIP code.

- phone_prefix: The international dialing code for your phone number (e.g., +1 for the USA, +44 for the UK).

- phone: Your phone number without the international prefix.

- email: Your primary email address.

- github: URL to your GitHub profile, if applicable.

- linkedin: URL to your LinkedIn profile, if applicable.

Example:

    personal_information:
      name: "Jane"
      surname: "Doe"
      date_of_birth: "01/01/1990"
      country: "USA"
      city: "New York"
      address: "123 Main St"
      zip_code: "520123"
      phone_prefix: "+1"
      phone: "5551234567"
      email: "[email protected]"
      github: "https://github.com/janedoe"
      linkedin: "https://www.linkedin.com/in/janedoe/"

education_details:

This section outlines your academic background, including degrees earned and relevant coursework.

- education_level: The type of degree obtained (e.g., Bachelor's Degree, Master's Degree).

- institution: The name of the university or institution where you studied.

- final_evaluation_grade: Your Grade Point Average or equivalent measure of academic performance.

- start_date: The start year of your studies.

- year_of_completion: The year you graduated.

- field_of_study: The major or focus area of your studies.

- exam: A list of courses or subjects taken along with their respective grades.

Example:

    education_details:
      - education_level: "Bachelor's Degree"
        institution: "University of Example"
        field_of_study: "Software Engineering"
        final_evaluation_grade: "4/4"
        start_date: "2021"
        year_of_completion: "2023"
        exam:
          Algorithms: "A"
          Data Structures: "B+"
          Database Systems: "A"
          Operating Systems: "A-"
          Web Development: "B"

experience_details:

This section details your work experience, including job roles, companies, and key responsibilities.

- position: Your job title or role.

- company: The name of the company or organization where you worked.

- employment_period: The timeframe during which you were employed in the role (e.g., MM/YYYY - MM/YYYY).

- location: The city and country where the company is located.

- industry: The industry or field in which the company operates.

- key_responsibilities: A list of major responsibilities or duties you had in the role.

- skills_acquired: Skills or expertise gained through this role.

Example:

    experience_details:
      - position: "Software Developer"
        company: "Tech Innovations Inc."
        employment_period: "06/2021 - Present"
        location: "San Francisco, CA"
        industry: "Technology"
        key_responsibilities:
          - "Developed web applications using React and Node.js"
          - "Collaborated with cross-functional teams to design and implement new features"
          - "Troubleshot and resolved complex software issues"
        skills_acquired:
          - "React"
          - "Node.js"
          - "Software Troubleshooting"

projects:

Include notable projects you have worked on, including personal or professional projects.

- name: The name or title of the project.

- description: A brief summary of what the project involves or its purpose.

- link: URL to the project, if available (e.g., GitHub repository, website).

Example:

    projects:
      - name: "Weather App"
        description: "A web application that provides real-time weather information using a third-party API."
        link: "https://github.com/janedoe/weather-app"
      - name: "Task Manager"
        description: "A task management tool with features for tracking and prioritizing tasks."
        link: "https://github.com/janedoe/task-manager"

achievements:

Highlight notable accomplishments or awards you have received.

- name: The title or name of the achievement.

- description: A brief explanation of the achievement and its significance.

Example:

    achievements:
      - name: "Employee of the Month"
        description: "Recognized for exceptional performance and contributions to the team."
      - name: "Hackathon Winner"
        description: "Won first place in a national hackathon competition."

certifications:

Include any professional certifications you have earned.

    - name: "PMP"
      description: "Certification for project management professionals, issued by the Project Management Institute (PMI)"

Example:

    certifications:
      - "Certified Scrum Master"
      - "AWS Certified Solutions Architect"

languages:

Detail the languages you speak and your proficiency level in each.

- language: The name of the language.

- proficiency: Your level of proficiency (e.g., Native, Fluent, Intermediate).

Example:

    languages:
      - language: "English"
        proficiency: "Fluent"
      - language: "Spanish"
        proficiency: "Intermediate"

interests:

Mention your professional or personal interests that may be relevant to your career.

- interest: A list of interests or hobbies.

Example:

    interests:
      - "Machine Learning"
      - "Cybersecurity"
      - "Open Source Projects"
      - "Digital Marketing"
      - "Entrepreneurship"

availability:

State your current availability or notice period.

- notice_period: The amount of time required before you can start a new role (e.g., "2 weeks", "1 month").

Example:

    availability:
      notice_period: "2 weeks"

salary_expectations:

Provide your expected salary range.

- salary_range_usd: The salary range you are expecting, expressed in USD.

Example:

    salary_expectations:
      salary_range_usd: "80000 - 100000"

self_identification:

Provide information related to personal identity, including gender and pronouns.

- gender: Your gender identity.

- pronouns: The pronouns you use (e.g., He/Him, She/Her, They/Them).

- veteran: Your status as a veteran (e.g., Yes, No).

- disability: Whether you have a disability (e.g., Yes, No).

- ethnicity: Your ethnicity.

Example:

    self_identification:
      gender: "Female"
      pronouns: "She/Her"
      veteran: "No"
      disability: "No"
      ethnicity: "Asian"

legal_authorization:

Indicate your legal ability to work in various locations.

- eu_work_authorization: Whether you are authorized to work in the European Union (Yes/No).

- us_work_authorization: Whether you are authorized to work in the United States (Yes/No).

- requires_us_visa: Whether you require a visa to work in the United States (Yes/No).

- requires_us_sponsorship: Whether you require sponsorship to work in the United States (Yes/No).

- requires_eu_visa: Whether you require a visa to work in the European Union (Yes/No).

- legally_allowed_to_work_in_eu: Whether you are legally allowed to work in the European Union (Yes/No).

- legally_allowed_to_work_in_us: Whether you are legally allowed to work in the United States (Yes/No).

- requires_eu_sponsorship: Whether you require sponsorship to work in the European Union (Yes/No).

- canada_work_authorization: Whether you are authorized to work in Canada (Yes/No).

- requires_canada_visa: Whether you require a visa to work in Canada (Yes/No).

- legally_allowed_to_work_in_canada: Whether you are legally allowed to work in Canada (Yes/No).

- requires_canada_sponsorship: Whether you require sponsorship to work in Canada (Yes/No).

- uk_work_authorization: Whether you are authorized to work in the United Kingdom (Yes/No).

- requires_uk_visa: Whether you require a visa to work in the United Kingdom (Yes/No).

- legally_allowed_to_work_in_uk: Whether you are legally allowed to work in the United Kingdom (Yes/No).

- requires_uk_sponsorship: Whether you require sponsorship to work in the United Kingdom (Yes/No).

Example:

    legal_authorization:
      eu_work_authorization: "Yes"
      us_work_authorization: "Yes"
      requires_us_visa: "No"
      requires_us_sponsorship: "Yes"
      requires_eu_visa: "No"
      legally_allowed_to_work_in_eu: "Yes"
      legally_allowed_to_work_in_us: "Yes"
      requires_eu_sponsorship: "No"
      canada_work_authorization: "Yes"
      requires_canada_visa: "No"
      legally_allowed_to_work_in_canada: "Yes"
      requires_canada_sponsorship: "No"
      uk_work_authorization: "Yes"
      requires_uk_visa: "No"
      legally_allowed_to_work_in_uk: "Yes"
      requires_uk_sponsorship: "No"

work_preferences:

Specify your preferences for work arrangements and conditions.

- remote_work: Whether you are open to remote work (Yes/No).

- in_person_work: Whether you are open to in-person work (Yes/No).

- open_to_relocation: Whether you are willing to relocate for a job (Yes/No).

- willing_to_complete_assessments: Whether you are willing to complete job assessments (Yes/No).

- willing_to_undergo_drug_tests: Whether you are willing to undergo drug testing (Yes/No).

- willing_to_undergo_background_checks: Whether you are willing to undergo background checks (Yes/No).

Example:

    work_preferences:
      remote_work: "Yes"
      in_person_work: "No"
      open_to_relocation: "Yes"
      willing_to_complete_assessments: "Yes"
      willing_to_undergo_drug_tests: "No"
      willing_to_undergo_background_checks: "Yes"
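Because the resume file is filled in by hand, a quick sanity check of the personal_information section can catch format slips before the bot runs. This is a minimal sketch, not part of AIHawk: the function name `check_personal_information` is hypothetical, the choice of which fields count as required is an assumption (github and linkedin are described as "if applicable" and so are skipped), and the input is assumed to be that section already parsed into a Python dict.

```python
from datetime import datetime

# Assumption: these fields are treated as required for this check only.
REQUIRED_FIELDS = (
    "name", "surname", "date_of_birth", "country", "city",
    "address", "zip_code", "phone_prefix", "phone", "email",
)


def check_personal_information(info: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    for key in REQUIRED_FIELDS:
        if not str(info.get(key, "")).strip():
            problems.append(f"missing field: {key}")
    # date_of_birth is documented as DD/MM/YYYY.
    dob = info.get("date_of_birth")
    if dob:
        try:
            datetime.strptime(str(dob), "%d/%m/%Y")
        except ValueError:
            problems.append("date_of_birth is not in DD/MM/YYYY format")
    # phone_prefix is an international dialing code such as +1 or +44.
    prefix = str(info.get("phone_prefix", ""))
    if prefix and not prefix.startswith("+"):
        problems.append("phone_prefix should start with '+'")
    return problems


example = {
    "name": "Jane", "surname": "Doe", "date_of_birth": "01/01/1990",
    "country": "USA", "city": "New York", "address": "123 Main St",
    "zip_code": "520123", "phone_prefix": "+1", "phone": "5551234567",
    "email": "jane.doe@example.com",
}
print(check_personal_information(example))  # []
```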

The data_folder_example folder contains a working example of how the files necessary for the bot's operation should be structured and filled out. This folder serves as a practical reference to help you correctly set up your work environment for the job search bot.

Inside this folder, you'll find example versions of the key files:

- secrets.yaml

- config.yaml

- plain_text_resume.yaml

These files are already populated with fictitious but realistic data. They show you the correct format and type of information to enter in each file.

Using this folder as a guide can be particularly helpful for:

- Understanding the correct structure of each configuration file

- Seeing examples of valid data for each field

- Having a reference point while filling out your personal files

Account language: To ensure the bot works, your account language must be set to English.

Data Folder: Ensure that your data_folder contains the following files:

- secrets.yaml

- config.yaml

- plain_text_resume.yaml

Run the Bot:

Auto_Jobs_Applier_AIHawk offers flexibility in how it handles your PDF resume:

Dynamic Resume Generation:

If you don't use the --resume option, the bot will automatically generate a unique resume for each application. This feature uses the information from your plain_text_resume.yaml file and tailors it to each specific job application, potentially increasing your chances of success by customizing your resume for each position.

    python main.py

Using a Specific Resume:

If you want to use a specific PDF resume for all applications, place your resume PDF in the data_folder directory and run the bot with the --resume option:

    python main.py --resume /path/to/your/resume.pdf

Using the collect mode:

If you only want to collect job data, for example to run your own data analytics, use the bot with the --collect option. This stores all data found from LinkedIn job offers in the output/data.json file.

    python main.py --collect
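Once collect mode has produced output/data.json, a few lines of Python are enough for a first look at the data. The layout of that file is not documented here, so this sketch assumes it holds a JSON list of objects and that each object has a `company` key; both are assumptions, and `count_jobs_by_key` is a hypothetical helper. Inspect the real file and adjust the key name before relying on it.

```python
import json
from collections import Counter
from pathlib import Path


def count_jobs_by_key(path, key="company"):
    """Count collected job records by one field.

    Assumes the file is a JSON list of objects; `key` is a guessed field name.
    Records that lack the key are grouped under "unknown".
    """
    records = json.loads(Path(path).read_text(encoding="utf-8"))
    return Counter(
        str(record.get(key, "unknown"))
        for record in records
        if isinstance(record, dict)
    )
```

For example, `count_jobs_by_key("output/data.json").most_common(10)` would list the ten most frequent values of the chosen field.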

Error Message:

openai.RateLimitError: Error code: 429 - {'error': {'message': 'You exceeded your current quota, please check your plan and billing details. For more information on this error, read the docs: https://platform.openai.com/docs/guides/error-codes/api-errors.', 'type': 'insufficient_quota', 'param': None, 'code': 'insufficient_quota'}}

Solution:

- Check your OpenAI API billing settings at https://platform.openai.com/account/billing

- Ensure you have added a valid payment method to your OpenAI account

- Note that ChatGPT Plus subscription is different from API access

- If you've recently added funds or upgraded, wait 12-24 hours for changes to take effect

- The free tier has a limit of 3 requests per minute (RPM); spend at least $5 on API usage to raise it
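While an account is still on a low requests-per-minute limit, spacing calls out on the client side avoids hitting that limit in the first place. The sketch below is a generic throttle and not something AIHawk is known to ship: `MinIntervalThrottle` and its parameters are hypothetical names, and it does nothing for an exhausted quota or a daily cap.

```python
import time


class MinIntervalThrottle:
    """Evenly space calls so that roughly `max_per_minute` happen per minute."""

    def __init__(self, max_per_minute, clock=time.monotonic, sleep=time.sleep):
        # Minimum number of seconds between two consecutive calls.
        self.min_interval = 60.0 / max_per_minute
        # Clock and sleep are injectable so the class can be tested without waiting.
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until at least `min_interval` seconds have passed since the last call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now
```

Calling `throttle.wait()` right before each LLM request, with `throttle = MinIntervalThrottle(3)`, keeps consecutive requests at least 20 seconds apart.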

Error Message:

Exception: No clickable 'Easy Apply' button found

Solution:

- Ensure that you're logged in properly

- Check if the job listings you're targeting actually have the "Easy Apply" option

- Verify that your search parameters in the config.yaml file are correct and returning jobs with the "Easy Apply" button

- Try increasing the wait time for page loading in the script to ensure all elements are loaded before searching for the button

Issue: Bot provides inaccurate data for experience, CTC, and notice period

Solution:

- Update prompts for professional experience specificity

- Add fields in config.yaml for current CTC, expected CTC, and notice period

- Modify bot logic to use these new config fields

Error Message:

yaml.scanner.ScannerError: while scanning a simple key

Solution:

- Copy the example config.yaml and modify it gradually

- Ensure proper YAML indentation and spacing

- Use a YAML validator tool

- Avoid unnecessary special characters or quotes
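One frequent cause of indentation errors is a tab character used for indentation, which YAML does not allow. The helper below is a small standard-library sketch for spotting such lines; `find_tab_indented_lines` is a hypothetical name, and the function is only a lint for this one problem, not a YAML validator. A full check would parse the file with a real YAML parser such as PyYAML's `yaml.safe_load`.

```python
def find_tab_indented_lines(text: str) -> list[int]:
    """Return 1-based numbers of lines whose leading whitespace contains a tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Slice off everything after the leading run of spaces and tabs.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


sample = "positions:\n  - Software Developer\n\t- Data Scientist\n"
print(find_tab_indented_lines(sample))  # [3]
```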

Issue: Bot searches for jobs but continues scrolling without applying

Solution:

- Check for security checks or CAPTCHAs

- Verify config.yaml job search parameters

- Ensure your account profile meets job requirements

- Review console output for error messages

- Use the latest version of the script

- Verify all dependencies are installed and updated

- Check internet connection stability

- Clear browser cache and cookies if issues persist
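Verifying the search parameters can be partly automated. The sketch below checks a config that has already been parsed into a Python dict. It is not AIHawk code: `check_search_config` is a hypothetical helper, the option names inside `date`, `experienceLevel`, and `jobTypes` in the example are made up, and the rule that at least one experience level and job type must be enabled is an assumption. Only the single-choice rule for `date` comes from the configuration description.

```python
def check_search_config(config: dict) -> list[str]:
    """Return a list of likely problems in the job search parameters."""
    problems = []
    # `date` is single-choice: exactly one time range should be set to true.
    date_options = config.get("date") or {}
    chosen = [name for name, enabled in date_options.items() if enabled is True]
    if len(chosen) != 1:
        problems.append(
            f"date: expected exactly one option set to true, found {len(chosen)}"
        )
    # Assumption: a search with no experience level or job type enabled finds nothing.
    for group in ("experienceLevel", "jobTypes"):
        options = config.get(group) or {}
        if not any(value is True for value in options.values()):
            problems.append(f"{group}: no option is set to true")
    # Empty position or location lists leave nothing to search for.
    for key in ("positions", "locations"):
        if not config.get(key):
            problems.append(f"{key}: list is empty")
    return problems


example_config = {
    "date": {"24 hours": True, "week": False},   # option names are hypothetical
    "experienceLevel": {"entry": True},          # option names are hypothetical
    "jobTypes": {"full-time": True},             # option names are hypothetical
    "positions": ["Software Developer"],
    "locations": ["Italy"],
}
print(check_search_config(example_config))  # []
```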

For further assistance, please create an issue on the GitHub repository with detailed information about your problem, including error messages and your configuration (with sensitive information removed).

To install and configure Ollama and Gemini, please refer to the following documents:

Follow the instructions in these guides to ensure proper configuration of AIHawk with Ollama and Gemini.

For detailed instructions on editing YAML configuration sections for AIHawk, refer to this document:

To make AIHawk automatically start when your system boots, follow the steps in this guide:

Navigate to the docs/ directory and download the PDF guides you need.

Written by Rushi (LinkedIn). Support him by following.

- Video Tutorial: How to set up Auto_Jobs_Applier_AIHawk

- OpenAI API Documentation

- LangChain Developer Documentation

If you encounter any issues, you can open an issue on GitHub.

Please add useful details to the subject and the description. If you are requesting a new feature, say so in the issue.

I'll be more than happy to assist you!

Auto_Jobs_Applier_AIHawk provides a significant advantage in the modern job market by automating and enhancing the job application process. With features like dynamic resume generation and AI-powered personalization, it offers unparalleled flexibility and efficiency. Whether you're a job seeker aiming to maximize your chances of landing a job, a recruiter looking to streamline application submissions, or a career advisor seeking to offer better services, Auto_Jobs_Applier_AIHawk is an invaluable resource. By leveraging cutting-edge automation and artificial intelligence, this tool not only saves time but also significantly increases the effectiveness and quality of job applications in today's competitive landscape.

- feder-cr - Creator and Lead Developer

Auto_Jobs_Applier_AIHawk is still in beta, and your feedback, suggestions, and contributions are highly valued. Feel free to open issues, suggest enhancements, or submit pull requests to help improve the project. Let's work together to make Auto_Jobs_Applier_AIHawk an even more powerful tool for job seekers worldwide.

This project is licensed under the MIT License - see the LICENSE file for details.

This tool, Auto_Jobs_Applier_AIHawk, is intended for educational purposes only. The creator assumes no responsibility for any consequences arising from its use. Users are advised to comply with the terms of service of relevant platforms and adhere to all applicable laws, regulations, and ethical guidelines. The use of automated tools for job applications may carry risks, including potential impacts on user accounts. Proceed with caution and at your own discretion.


Alternative AI tools for Auto_Jobs_Applier_AIHawk

Similar Open Source Tools


linkedIn_auto_jobs_applier_with_AI

LinkedIn_AIHawk is an automated tool designed to revolutionize the job search and application process on LinkedIn. It leverages automation and artificial intelligence to efficiently apply to relevant positions, personalize responses, manage application volume, filter listings, generate dynamic resumes, and handle sensitive information securely. The tool aims to save time, increase application relevance, and enhance job search effectiveness in today's competitive landscape.

PentestGPT

PentestGPT is a penetration testing tool empowered by ChatGPT, designed to automate the penetration testing process. It operates interactively to guide penetration testers in overall progress and specific operations. The tool supports solving easy to medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to perform tasks like testing connections, using different reasoning models, discussing with the tool, searching on Google, and generating reports. It also supports local LLMs with custom parsers for advanced users.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

zenml

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready machine learning pipelines. By decoupling infrastructure from code, ZenML enables developers across your organization to collaborate more effectively as they develop to production.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, offers quick setup for conversational service robots, integrates diverse knowledge bases, provides flexible configuration options, and features an attractive user interface.

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

NeoPass

NeoPass is a free Chrome extension designed for students taking tests on exam portals like Iamneo and Wildlife Ecology NPTEL. It provides features such as NPTEL integration, NeoExamShield bypass, AI chatbot with stealth mode, AI search answers/code, MCQ solving, tab switching bypass, pasting when restricted, and remote logout. Users can install the extension by following simple steps and use shortcuts for quick access to features. The tool is intended for educational purposes only and promotes academic integrity.

restai

RestAI is an AIaaS (AI as a Service) platform that allows users to create and consume AI agents (projects) using a simple REST API. It supports various types of agents, including RAG (Retrieval-Augmented Generation), RAGSQL (RAG for SQL), inference, vision, and router. RestAI features automatic VRAM management, support for any public LLM supported by LlamaIndex or any local LLM supported by Ollama, a user-friendly API with Swagger documentation, and a frontend for easy access. It also provides evaluation capabilities for RAG agents using deepeval.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

For similar tasks

Auto_Jobs_Applier_AIHawk

Auto_Jobs_Applier_AIHawk is an AI-powered job search assistant that revolutionizes the job search and application process. It automates application submissions, provides personalized recommendations, and enhances the chances of landing a dream job. The tool offers features like intelligent job search automation, rapid application submission, AI-powered personalization, volume management with quality, intelligent filtering, dynamic resume generation, and secure data handling. It aims to address the challenges of modern job hunting by saving time, increasing efficiency, and improving application quality.

stremio-ai-search

Stremio AI Search is an intelligent search addon powered by Google's Gemini AI, providing personalized movie and TV series recommendations based on natural language queries. It integrates with Trakt for personalized suggestions, allows selection of Google AI models, and offers TMDB integration for a content-rich catalog. RPDB integration provides access to posters with ratings. Users can configure API keys, set recommendation limits, and enjoy a wide range of query ideas for versatile content discovery.

For similar jobs

linkedIn_auto_jobs_applier_with_AI

LinkedIn_AIHawk is an automated tool designed to revolutionize the job search and application process on LinkedIn. It leverages automation and artificial intelligence to efficiently apply to relevant positions, personalize responses, manage application volume, filter listings, generate dynamic resumes, and handle sensitive information securely. The tool aims to save time, increase application relevance, and enhance job search effectiveness in today's competitive landscape.

Auto_Jobs_Applier_AIHawk

Auto_Jobs_Applier_AIHawk is an AI-powered job search assistant that revolutionizes the job search and application process. It automates application submissions, provides personalized recommendations, and enhances the chances of landing a dream job. The tool offers features like intelligent job search automation, rapid application submission, AI-powered personalization, volume management with quality, intelligent filtering, dynamic resume generation, and secure data handling. It aims to address the challenges of modern job hunting by saving time, increasing efficiency, and improving application quality.

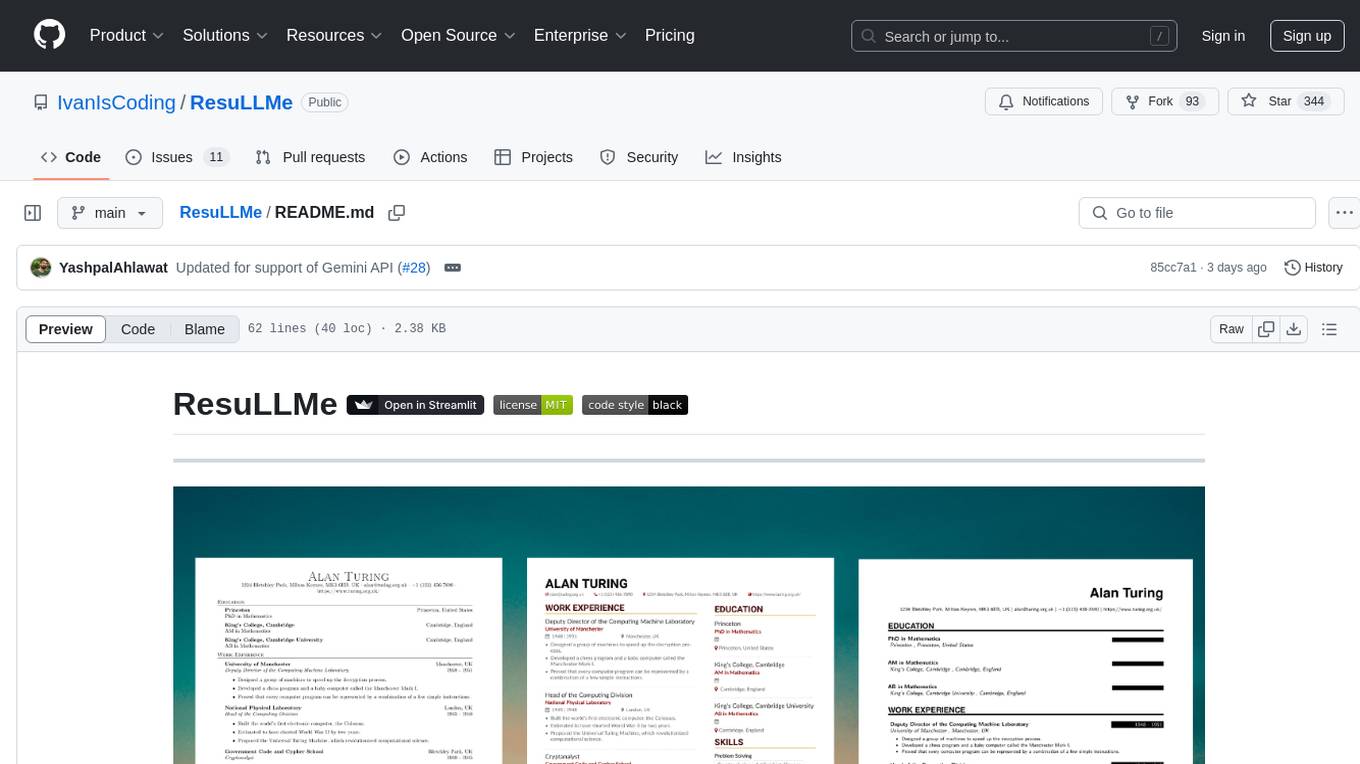

ResuLLMe

ResuLLMe is a prototype tool that uses Large Language Models (LLMs) to tailor résumés and help candidates avoid common mistakes when applying for jobs. It acts as a smart career advisor that checks and improves résumés. The tool supports both OpenAI and Gemini models. Users can upload their CV as a PDF or Word document, and ResuLLMe uses LLMs to improve the résumé following published guidelines, convert it to the JSON Resume format, and render it with LaTeX to generate an enhanced PDF résumé.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It has used LLMs to generate valid fuzz targets (targets that produce a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase over the existing human-written targets is 29%.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.