Best AI tools for < Generate Synthetic Datasets >

20 - AI tool Sites

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

Syntho

Syntho is a self-service AI-generated synthetic data platform that offers a comprehensive solution for generating synthetic data for various purposes. It provides tools for de-identification, test data management, rule-based synthetic data generation, data masking, and more. With a focus on privacy and accuracy, Syntho enables users to create synthetic data that mirrors real production data while ensuring compliance with regulations and data privacy standards. The platform offers a range of features and use cases tailored to different industries, including healthcare, finance, and public organizations.
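Rule-based generation and data masking, two of the features named above, can be illustrated with a small standard-library sketch. This is not Syntho's API: the function names, column names, salt, and the "senior" rule are all hypothetical and chosen only for illustration.

```python
import hashlib
import random


def mask_email(email, salt="demo-salt"):
    """Swap the local part of an email for a deterministic pseudonym.

    Hypothetical helper: the same input always maps to the same token,
    so joins across tables still work after masking.
    """
    local, _, domain = email.partition("@")
    digest = hashlib.sha256((salt + local).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@{domain}"


def generate_customers(n, seed=0):
    """Create n synthetic customer rows from simple, hand-written rules."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    rows = []
    for i in range(n):
        age = rng.randint(18, 90)
        rows.append({
            "customer_id": f"C{i:05d}",
            "age": age,
            # Illustrative business rule: segment is derived from age.
            "segment": "senior" if age >= 65 else "standard",
            "balance": round(rng.uniform(0, 10_000), 2),
        })
    return rows


if __name__ == "__main__":
    print(mask_email("jane.doe@example.com"))
    for row in generate_customers(3):
        print(row)
```

Seeding the generator keeps test datasets reproducible between runs, which is usually what test data management needs. A production platform would also model the statistical properties of the source data, which this sketch does not attempt.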

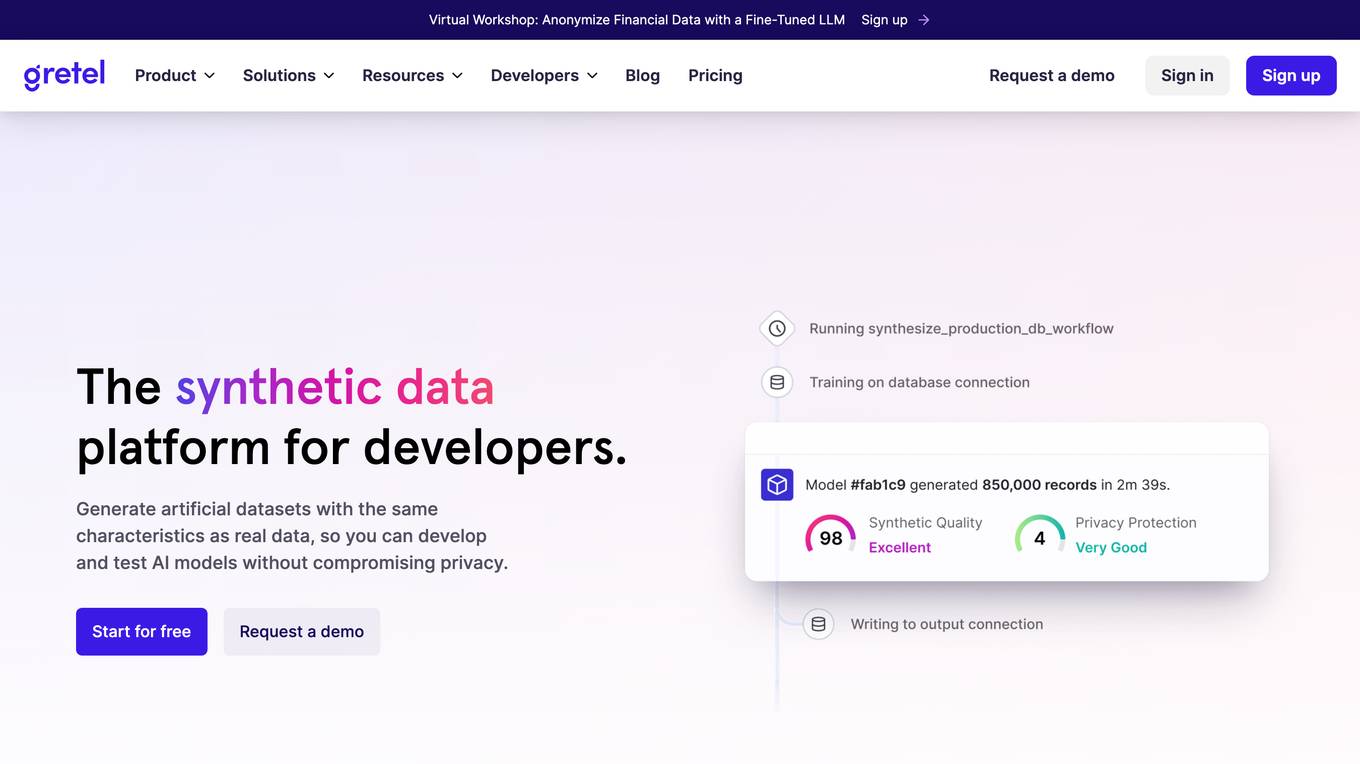

Gretel.ai

Gretel.ai is an AI tool that helps users incorporate generative AI into their data by generating synthetic data that is as good as or better than the existing data. Users can fine-tune custom AI models and use Gretel's APIs to generate unlimited synthesized datasets, perform privacy-preserving transformations on sensitive data, and identify PII with advanced NLP detection. Gretel's APIs make it simple to generate anonymized and safe synthetic data, allowing users to innovate faster while preserving privacy. Gretel's platform includes Synthetics, Transform, and Classify APIs that provide users with a complete set of tools to create safe data. Gretel also offers a range of resources, including documentation, tutorials, GitHub projects, and open-source SDKs for developers. Gretel Cloud runners allow users to keep data contained by running Gretel containers in their own environment, or to scale out workloads to the cloud in seconds. Overall, Gretel.ai is a tool for generating synthetic data that gives users safe access to the data they need.

Datagen

Datagen is a platform that provides synthetic data for computer vision. Synthetic data is artificially generated data that can be used to train machine learning models. Datagen's data is generated using a variety of techniques, including 3D modeling, computer graphics, and machine learning. The company's data is used by a variety of industries, including automotive, security, smart office, fitness, cosmetics, and facial applications.

Rendered.ai

Rendered.ai is a platform that provides unlimited synthetic data for AI and ML applications, specifically focusing on computer vision. It helps generate low-cost, physically accurate data to overcome bias and power innovation in AI and ML. The platform allows users to capture rare events and edge cases, acquire data that is difficult to obtain, overcome data labeling challenges, and simulate restricted or high-risk scenarios. Rendered.ai aims to revolutionize the use of synthetic data in AI and data analytics projects, with a vision that by 2030 synthetic data will surpass real data in AI models.

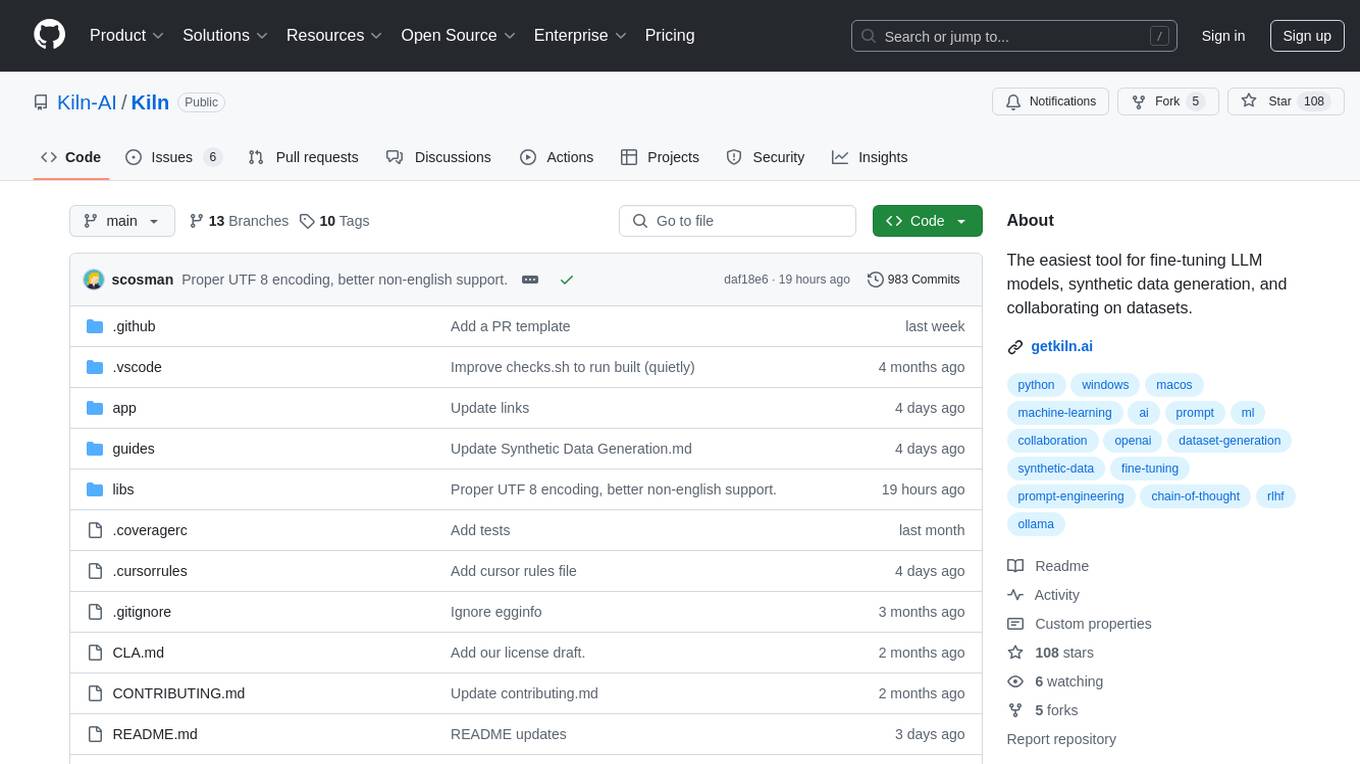

Kiln

Kiln is an AI tool designed for fine-tuning LLMs, generating synthetic data, and facilitating collaboration on datasets. It offers intuitive desktop apps, zero-code fine-tuning for various models, interactive visual tools for data generation, Git-based version control for datasets, and the ability to generate various prompts from data. Kiln supports a wide range of models and providers, provides an open-source library and API, prioritizes privacy, and allows structured data tasks in JSON format. The tool is free to use and focuses on rapid AI prototyping and dataset collaboration.

Tafi Avatar

Tafi Avatar is a global leader in 3D content creation, specializing in AI-driven character and environment datasets. As part of Daz 3D, Tafi delivers procedurally generated 3D assets for AI model training, synthetic population modeling, and identity variation. With a focus on realism and scalability, Tafi offers a wide range of features and advantages for creators and enterprises looking to leverage AI in their projects.

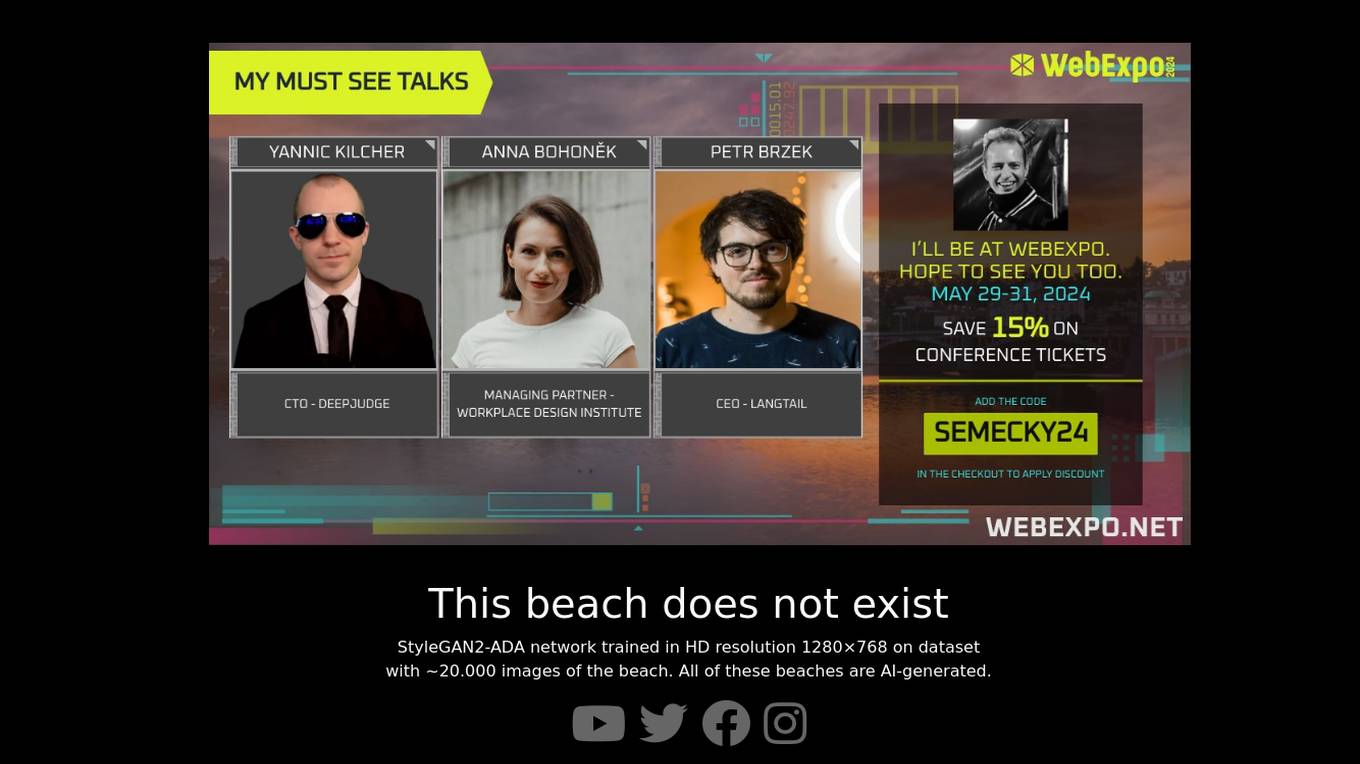

This Beach Does Not Exist

This Beach Does Not Exist is an AI application powered by a StyleGAN2-ADA network, capable of generating realistic beach images. The website showcases AI-generated beach landscapes created from a dataset of approximately 20,000 images. Users can explore the training progress of the network, generate random images, use K-Means clustering to group images, and download the network for experimentation or retraining. Detailed technical information about the network architecture, dataset, training steps, and metrics is provided. The application is based on the GAN architecture developed by NVIDIA Labs and offers a unique experience of creating virtual beach scenes through AI technology.

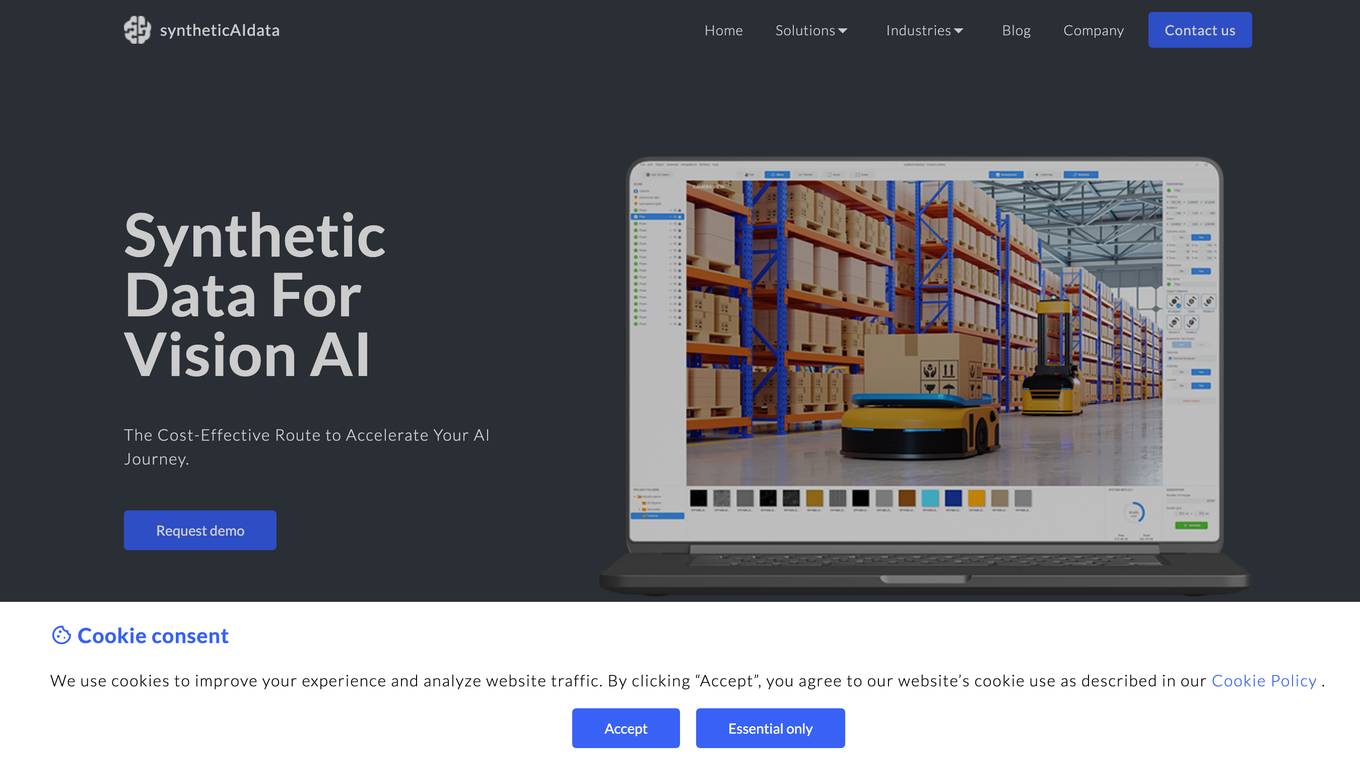

syntheticAIdata

syntheticAIdata is a platform that provides synthetic data for training vision AI models. Synthetic data is generated artificially, and it can be used to augment existing real-world datasets or to create new datasets from scratch. syntheticAIdata's platform is easy to use, and it can be integrated with leading cloud platforms. The company's mission is to make synthetic data accessible to everyone, and to help businesses overcome the challenges of acquiring high-quality data for training their vision AI models.

Undress AI Pro

Undress AI Pro is a controversial computer vision application that uses machine learning to remove clothing from images of people. The technology powering Undress AI and DeepNude was based on deep learning and generative adversarial networks (GANs). GANs involve two neural networks competing against each other: a generator creates synthetic images trying to mimic the training data, while a discriminator tries to distinguish the real images from the generated ones. Through this adversarial process, the generator learns to produce increasingly realistic outputs. For Undress AI, the GAN was trained on a dataset of nude and clothed images, allowing it to "unclothe" people in new images by generating the nudity.

TrainEngine.ai

TrainEngine.ai is a powerful AI-powered image generation tool that allows users to create stunning, unique images from text prompts. With its advanced algorithms and vast dataset, TrainEngine.ai can generate images in a wide range of styles, from realistic to abstract, and in various formats, including photos, paintings, and illustrations. The platform is easy to use, making it accessible to both professional artists and hobbyists alike. TrainEngine.ai offers a range of features, including the ability to fine-tune models, generate unlimited AI assets, and access trending models. It also provides a marketplace where users can buy and sell AI-generated images.

Supermachine

Supermachine is an AI-powered image generator that allows users to create realistic and unique images from scratch. With a simple text prompt, users can generate images of anything they can imagine, from landscapes and portraits to abstract concepts and surreal scenes. Supermachine's AI technology is trained on a massive dataset of images, allowing it to generate images that are both visually appealing and realistic.

Synthetic Data Generation for Agentic AI

The website provides information on Synthetic Data Generation for Agentic AI, offering high-quality, domain-specific synthetic data to accelerate the development of agentic workflows. It explains the technical implementation, benefits, and usage of synthetic data in training specialized agentic systems. The site also showcases related use cases, quick links for further exploration, and detailed steps for generating synthetic data.

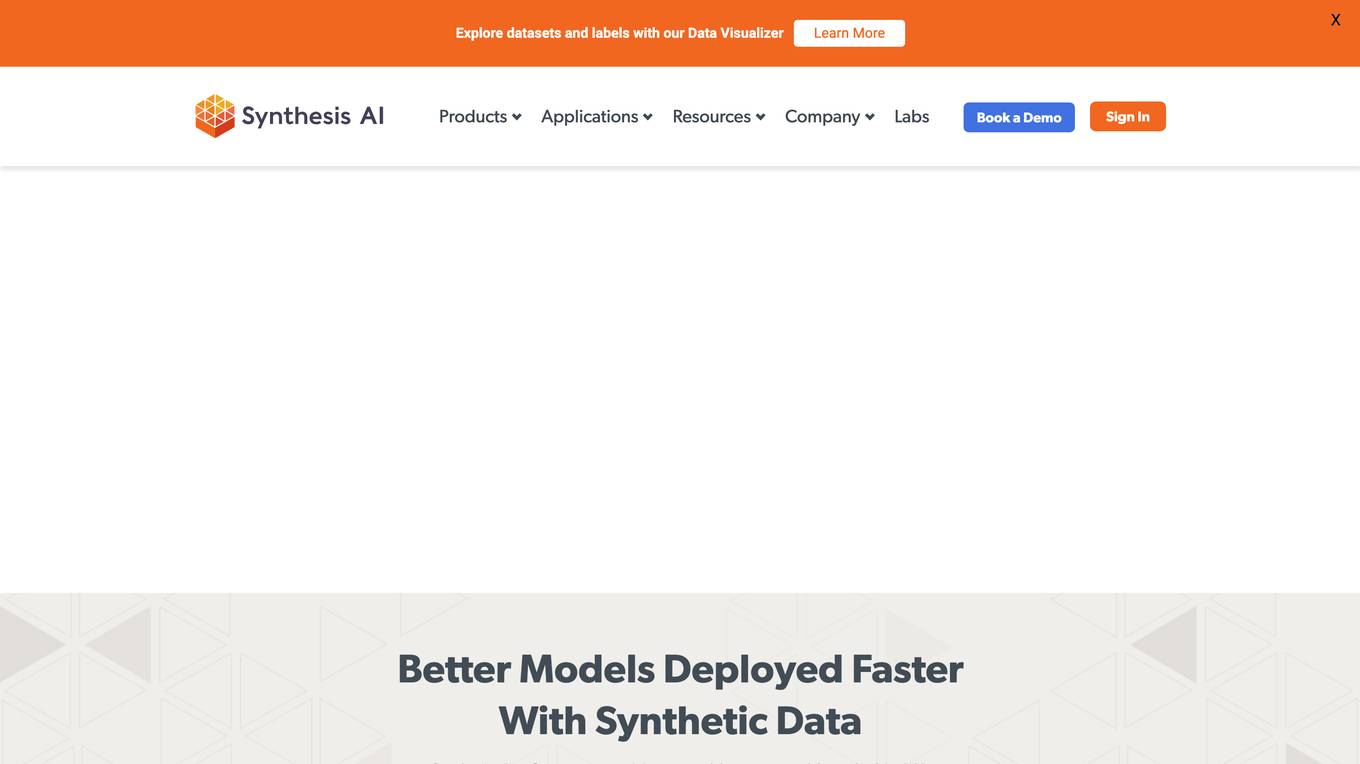

Synthesis AI

Synthesis AI is a synthetic data platform that enables more capable and ethical computer vision AI. It provides on-demand labeled images and videos, photorealistic images, and 3D generative AI to help developers build better models faster. Synthesis AI's products include Synthesis Humans, which allows users to create detailed images and videos of digital humans with rich annotations; Synthesis Scenarios, which enables users to craft complex multi-human simulations across a variety of environments; and a range of applications for industries such as ID verification, automotive, avatar creation, virtual fashion, AI fitness, teleconferencing, visual effects, and security.

MOSTLY AI Platform

The MOSTLY AI Platform is a synthetic data generation platform that is free to use and claims the highest accuracy. The website provides detailed information on synthetic data and data anonymization, and features a Python client for data generation. The platform ensures privacy and security, allowing users to create fully anonymous synthetic data from original data. It supports various AI/ML use cases, self-service analytics, testing and QA, and data sharing. The platform is designed for enterprise organizations, offering scalability, privacy by design, and what it describes as the world's most accurate synthetic data.

Incribo

Incribo is a company that provides synthetic data for training machine learning models. Synthetic data is artificially generated data that is designed to mimic real-world data. This data can be used to train machine learning models without the need for real-world data, which can be expensive and difficult to obtain. Incribo's synthetic data is high quality and affordable, making it a valuable resource for machine learning developers.

Marvin

Marvin is a lightweight toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. It provides a variety of AI functions for text, images, audio, and video, as well as interactive tools and utilities. Marvin is designed to be easy to use and integrate, and it can be used to build a wide range of applications, from simple chatbots to complex AI-powered systems.

QuarkIQL

QuarkIQL is a generative testing tool for computer vision APIs. It allows users to create custom test images and requests with just a few clicks. QuarkIQL also keeps a log of queries so users can run more experiments without starting from square one.

DeepEval

DeepEval by Confident AI is a comprehensive LLM Evaluation Framework used by leading AI companies. It enables users to build reliable evaluation pipelines to test any AI system. With 50+ research-backed metrics, native multi-modal support, and auto-optimization of prompts, DeepEval offers a sophisticated evaluation ecosystem for AI applications. The framework covers unit-testing for LLMs, single and multi-turn evaluations, generation & simulation of test data, and state-of-the-art evaluation techniques like G-Eval and DAG. DeepEval is integrated with Pytest and supports various system architectures, making it a versatile tool for AI testing.

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

3 - Open Source AI Tools

datadreamer

DataDreamer is an advanced toolkit designed to facilitate the development of edge AI models by enabling synthetic data generation, knowledge extraction from pre-trained models, and the creation of efficient, capable models. It eliminates the need for extensive datasets by generating synthetic ones, leverages latent knowledge from pre-trained models, and focuses on creating compact models that can be integrated into any device and perform well on specialized tasks. The toolkit offers features like prompt generation, image generation, dataset annotation, and tools for training small-scale neural networks for edge deployment. Its documentation covers hardware requirements, usage instructions, available models, and limitations to consider while using the library.

llm-swarm

llm-swarm is a tool designed to manage scalable open LLM inference endpoints in Slurm clusters. It allows users to generate synthetic datasets for pretraining or fine-tuning using local LLMs or Inference Endpoints on the Hugging Face Hub. The tool integrates with huggingface/text-generation-inference and vLLM to generate text at scale. It manages inference endpoint lifetime by automatically spinning up instances via `sbatch`, checking if they are created or connected, performing the generation job, and auto-terminating the inference endpoints to prevent idling. Additionally, it provides load balancing between multiple endpoints using a simple nginx docker for scalability. Users can create slurm files based on default configurations and inspect logs for further analysis. For users without a Slurm cluster, hosted inference endpoints are available for testing with usage limits based on registration status.
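The endpoint lifecycle described above (start, check readiness, generate, auto-terminate) together with load balancing can be sketched as a context manager. This is a minimal illustration using only the standard library and is not llm-swarm's API: `FakeEndpoint`, `endpoint_pool`, and `generate_dataset` are hypothetical names, no Slurm job is submitted, and plain round-robin rotation is an assumption standing in for the nginx load balancer.

```python
from contextlib import contextmanager
from itertools import cycle


class FakeEndpoint:
    """Hypothetical stand-in for one inference server started by a Slurm job."""

    def __init__(self, name):
        self.name = name
        self.running = False

    def start(self):
        # A real tool would submit a job with `sbatch` at this point.
        self.running = True

    def is_ready(self):
        return self.running

    def generate(self, prompt):
        if not self.running:
            raise RuntimeError(f"{self.name} is not running")
        return f"[{self.name}] completion for: {prompt}"

    def stop(self):
        self.running = False


@contextmanager
def endpoint_pool(num_instances):
    """Start endpoints, yield them once ready, and always stop them afterwards."""
    endpoints = [FakeEndpoint(f"endpoint-{i}") for i in range(num_instances)]
    try:
        for ep in endpoints:
            ep.start()
        if not all(ep.is_ready() for ep in endpoints):
            raise RuntimeError("some endpoints failed to start")
        yield endpoints
    finally:
        # Runs even if generation raises, so nothing is left idling.
        for ep in endpoints:
            ep.stop()


def generate_dataset(prompts, endpoints):
    """Spread prompts across endpoints in round-robin order."""
    rotation = cycle(endpoints)
    return [next(rotation).generate(p) for p in prompts]


if __name__ == "__main__":
    prompts = ["Write a haiku about data.", "Define synthetic data."]
    with endpoint_pool(num_instances=2) as pool:
        for row in generate_dataset(prompts, pool):
            print(row)
```

Putting teardown in the `finally` clause is the design point: the endpoints are released whether the generation job succeeds or fails, which mirrors the auto-termination behavior described above.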

DataDreamer

DataDreamer is a powerful open-source Python library designed for prompting, synthetic data generation, and training workflows. It is simple, efficient, and research-grade, allowing users to create prompting workflows, generate synthetic datasets, and train models with ease. The library is built for researchers, by researchers, focusing on correctness, best practices, and reproducibility. It offers features like aggressive caching, resumability, support for bleeding-edge techniques, and easy sharing of datasets and models. DataDreamer enables users to run multi-step prompting workflows, generate synthetic datasets for various tasks, and train models by aligning, fine-tuning, instruction-tuning, and distilling them using existing or synthetic data.

20 - OpenAI GPTs

Synthetic Work (Re)Search Assistant

Search data on the impact of AI on jobs, productivity and operations published by Synthetic Work (https://synthetic.work)

Chemistry Companion

Professional chemistry assistant with support for SMILES/SMARTS, molecule and reaction diagrams, and more!

PANˈDÔRƏ

Pandora is a Posthuman Prompt Engineer powered by the MANNS engine. Surpass human creative limitations by synthesizing diverse knowledge, advanced pattern recognition, and algorithmic creativity

Angular Architect AI: Generate Angular Components

Generates Angular components based on requirements, with a focus on code-first responses.

🖌️ Line to Image: Generate The Evolved Prompt!

Transforms lines into detailed prompts for visual storytelling.

Generate text imperceptible to detectors.

Discover how your writing can shine with a unique and human style. This prompt guides you to create rich and varied texts, surprising with original twists and maintaining coherence and originality. Transform your writing and challenge AI detection tools!