Best AI tools for Distill Models

11 - AI Tool Sites

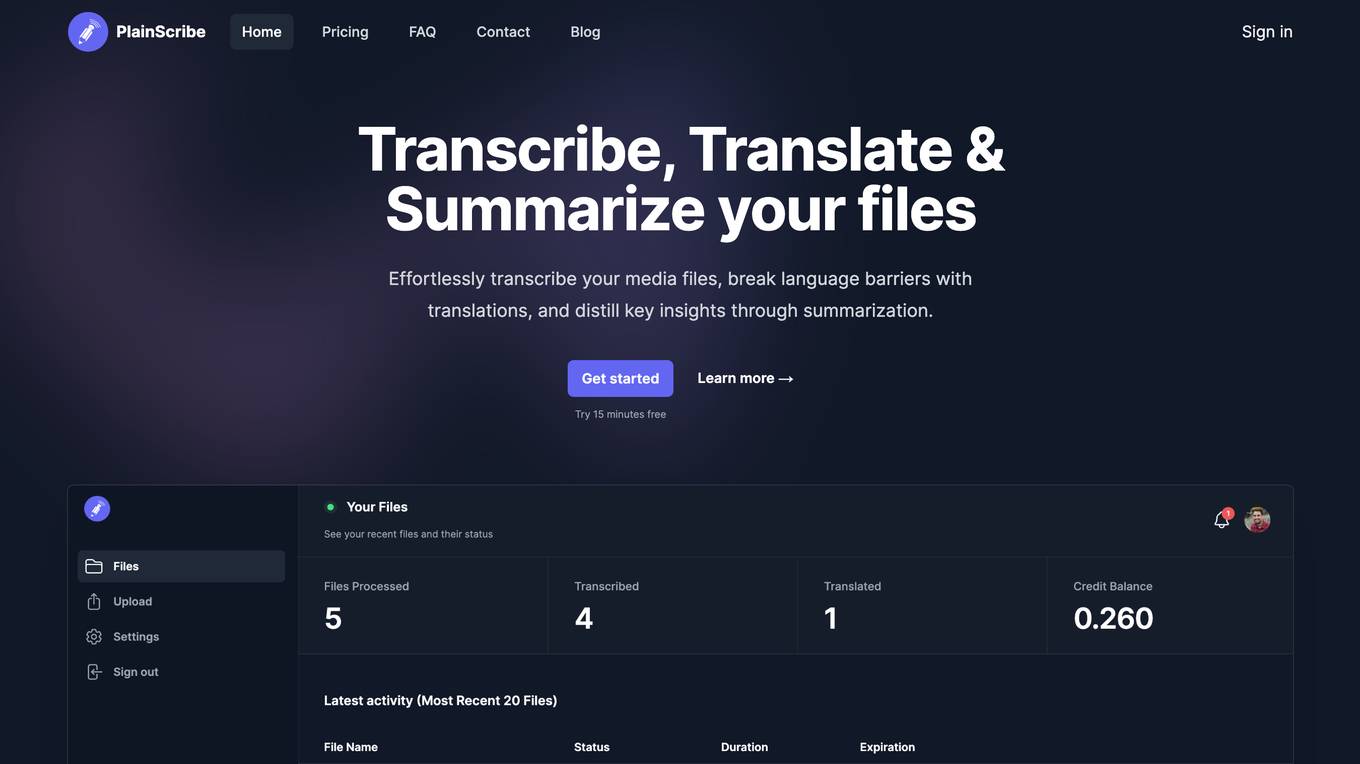

PlainScribe

PlainScribe is a versatile online tool that offers transcription, translation, and summarization services for various media files. Users can effortlessly transcribe their audio and video files, overcome language barriers with translations, and distill key insights through summarization. The platform supports a wide range of file sizes and provides a pay-as-you-go model for cost efficiency. With a focus on privacy and security, PlainScribe automatically deletes user data after 7 days. Additionally, users can benefit from multilingual support, summarized transcripts, and flexible export options like CSV and subtitle formats.

ZoomInfo + Chorus

ZoomInfo + Chorus is an AI-powered Conversation Intelligence platform designed for sales teams to capture and analyze customer calls, meetings, and emails. It helps in understanding customer trends, improving sales processes, and driving revenue growth through data-driven insights and actionable intelligence. The platform leverages machine learning technology to provide performance-driven sales teams with visibility, process improvements, and behavior changes to enhance sales effectiveness and deal outcomes.

Blizzy AI

Blizzy AI is a tool that lets users have conversations with their data. Users can chat with any file and access the internet securely. With features like bulk knowledge upload, personalized knowledge vault creation, and ready-made prompts, Blizzy AI supports marketing strategy, content creation, and online browsing. The tool prioritizes privacy and security: it does not use user data for training, and data is accessible only to the user.

Laxis

Laxis is an AI meeting assistant designed to capture and distill key insights from every customer interaction. It integrates across platforms, from online meetings to CRM updates, through a user-friendly interface, and helps revenue teams make the most of every customer conversation so that no valuable detail is missed. Sales teams can close more deals with AI note-taking and insights from client conversations. Business development teams can engage prospects more effectively and grow their business faster. Marketing teams can repurpose podcasts, webinars, and meetings into content with a single click. Product and market researchers can conduct research interviews that get to the "aha!" moment faster. Project managers can capture key takeaways and status updates for progress reports. Product and UX designers can capture and organize insights from their interviews and user research.

Narratize

Narratize is a generative AI storytelling platform designed for innovative enterprises to accelerate R&D, product innovation, and marketing solutions. It helps enterprise teams and individuals to distill scientific, technical, and medical insights into impactful content that scales. With Narratize, users can generate various types of content quickly without the need for prompt engineering, improving communication and collaboration within the organization.

Crayon

Crayon is competitive intelligence software that helps businesses track competitors, win more deals, and stay ahead in the market. Powered by AI, Crayon helps users analyze their competitive landscape, enable their sales teams, compete in deals, and measure results. The platform offers features such as competitor monitoring, AI news summarization, importance scoring, content creation, sales enablement, and performance metrics. With Crayon, users can receive high-priority insights, distill articles about competitors, create battlecards, and find intel to win deals. The application aims to make competitive intelligence seamless and impactful for sales teams.

Swipe Insight

Swipe Insight is a mobile application that provides users with daily updates on digital marketing and analytics trends, news, and strategies. The app features a personalized feed that adapts to the user's preferences, intelligent insights that distill complex topics into concise summaries, and a curated selection of content from over 100 trusted sources. Swipe Insight is designed to help users stay ahead in the industry with just minutes of reading per day.

AnyLearn.ai

AnyLearn.ai is an AI-powered platform that offers a wide range of courses and guides generated by artificial intelligence. It provides users with the opportunity to learn about various topics in a structured and comprehensive manner. The platform leverages the power of AI to create personalized learning experiences for individuals seeking to enhance their knowledge and skills.

Supermanage AI

Supermanage AI is an AI-powered application designed to streamline and enhance 1-on-1 meetings for managers. It provides personalized insights by distilling information from public Slack channels, enabling managers to have more meaningful interactions with their team members. The application aims to improve team dynamics, support, and engagement by offering a snapshot of contributions, challenges, and engagement levels. Supermanage AI offers features such as automated snapshots, Slack integration, and data privacy measures to ensure a seamless and secure user experience.

AI Index

The AI Index is a comprehensive resource for data and insights on artificial intelligence. It provides unbiased, rigorously vetted, and globally sourced data for policymakers, researchers, journalists, executives, and the general public to develop a deeper understanding of the complex field of AI. The AI Index tracks, collates, distills, and visualizes data relating to artificial intelligence. This includes data on research and development, technical performance and ethics, the economy and education, AI policy and governance, diversity, public opinion, and more.

The Video Calling App

The Video Calling App is an AI-powered platform designed to revolutionize meeting experiences by providing laser-focused, context-aware, and outcome-driven meetings. It aims to streamline post-meeting routines, enhance collaboration, and improve overall meeting efficiency. With powerful integrations and AI features, the app captures, organizes, and distills meeting content to provide users with a clearer perspective and free headspace. It offers seamless integration with popular tools like Slack, Linear, and Google Calendar, enabling users to automate tasks, manage schedules, and enhance productivity. The app's user-friendly interface, interactive features, and advanced search capabilities make it a valuable tool for global teams and remote workers seeking to optimize their meeting experiences.

2 - Open Source AI Tools

DataDreamer

DataDreamer is a powerful open-source Python library designed for prompting, synthetic data generation, and training workflows. It is simple, efficient, and research-grade, allowing users to create prompting workflows, generate synthetic datasets, and train models with ease. The library is built for researchers, by researchers, focusing on correctness, best practices, and reproducibility. It offers features like aggressive caching, resumability, support for bleeding-edge techniques, and easy sharing of datasets and models. DataDreamer enables users to run multi-step prompting workflows, generate synthetic datasets for various tasks, and train models by aligning, fine-tuning, instruction-tuning, and distilling them using existing or synthetic data.
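The caching and resumability idea mentioned above can be illustrated with a minimal, library-independent sketch. This is not DataDreamer's API: `cached_step` and `fake_generate` are hypothetical names, and the on-disk JSON layout is an assumption chosen for brevity. The point is only that a workflow step keyed by its name and inputs can be skipped when it is run a second time.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def cached_step(cache_dir, name, inputs, fn):
    """Run fn(inputs) once; later calls with the same name and inputs reuse the saved result.

    Hypothetical helper for illustration only, not part of DataDreamer.
    """
    # Key the cache on the step name plus its JSON-serializable inputs.
    key = hashlib.sha256(json.dumps([name, inputs], sort_keys=True).encode()).hexdigest()
    path = Path(cache_dir) / f"{key}.json"
    if path.exists():
        # Resume: the step already ran, so load its result instead of recomputing.
        return json.loads(path.read_text())
    result = fn(inputs)
    path.write_text(json.dumps(result))
    return result


calls = []


def fake_generate(prompts):
    """Stand-in for an expensive LLM call; records how often it actually runs."""
    calls.append(len(prompts))
    return [p.upper() for p in prompts]


with tempfile.TemporaryDirectory() as tmp:
    first = cached_step(tmp, "generate", ["a", "b"], fake_generate)
    second = cached_step(tmp, "generate", ["a", "b"], fake_generate)  # served from disk
```

In this sketch the second call never reaches `fake_generate`, which is the behavior a rerun of an interrupted multi-step workflow would rely on.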

RAG-Retrieval

RAG-Retrieval is an end-to-end code repository that provides training, inference, and distillation capabilities for RAG retrieval models. It supports fine-tuning of various open-source RAG retrieval models, including embedding models, late-interaction models, and reranker models. The repository offers a lightweight Python library for calling different RAG ranking models and allows distillation of LLM-based reranker models into BERT-based reranker models. It includes features such as support for end-to-end fine-tuning, distillation of large models, advanced algorithms like MRL, a multi-GPU training strategy, and a simple code structure for easy modifications.
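As a rough illustration of what distilling a large reranker into a smaller one can involve, the sketch below computes a KL-divergence loss between teacher and student relevance scores over the same candidate passages. The listing does not say which loss RAG-Retrieval uses, so this is a generic score-distillation objective under that assumption; the function names and the `temperature` parameter are hypothetical.

```python
import math


def softmax(scores, temperature=1.0):
    """Turn raw relevance scores into a probability distribution over candidates."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_distillation_loss(teacher_scores, student_scores, temperature=1.0):
    """KL(teacher || student) over one query's candidate passages.

    Generic sketch; not taken from the RAG-Retrieval code base.
    """
    p = softmax(teacher_scores, temperature)
    q = softmax(student_scores, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores for three passages retrieved for one query.
teacher = [4.0, 1.0, -2.0]           # e.g. from an LLM-based reranker
student_agrees = [4.0, 1.0, -2.0]    # student ranks exactly like the teacher
student_reversed = [-2.0, 1.0, 4.0]  # student ranks in the opposite order

loss_same = kl_distillation_loss(teacher, student_agrees)
loss_reversed = kl_distillation_loss(teacher, student_reversed)
```

The loss is zero when the student reproduces the teacher's score distribution and grows as the two rankings diverge, so minimizing it pushes the smaller model toward the larger model's ordering.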

1 - OpenAI GPTs

Executive Summary Assistant

Maximize efficiency with our AI Executive Summary Assistant! Tailored for busy professionals, it distills complex inputs into concise, clear summaries. Save time, grasp key points, and make informed decisions faster. Ideal for business leaders on-the-go.