Best AI tools to deploy a backend

20 - AI tool Sites

Backengine

Backengine is a low-code/no-code platform for building application backends using natural language. With Backengine, users can create a database schema, define API endpoints, and deploy their backend to the cloud without writing any code. It is designed to be easy to use, even for users with no programming experience, and provides an interface for creating and managing backends through drag-and-drop actions. Backengine can be used to build backends for a wide variety of applications, including web apps, mobile apps, and IoT devices. The platform is also extensible: users can connect external databases and services and customize their backends to meet their specific needs.

Supabase

Supabase is an open-source Firebase alternative. It is a backend service that provides authentication, storage, real-time database, and other features for web and mobile applications.

Prisms

Prisms is a no-code platform for building AI-powered apps without writing any code. It is built on top of generative models including the GPT-3 large language model and the DALL-E and Stable Diffusion image models. Users connect building blocks in Prisms to combine data sources, user inputs, and off-the-shelf components into their own AI-powered apps. Prisms can deploy these apps directly from the platform with its pre-built UI; alternatively, users can build their own frontend and use Prisms as the backend for their AI logic.

Local AI Playground

Local AI Playground is a free and open-source native app that simplifies managing, verifying, and running inference on AI models offline and in private, without requiring a GPU. Its Rust backend keeps the app memory-efficient and compact. With Local AI Playground, you can power any AI app offline or online and keep track of your AI models in one centralized location. It also provides digest verification to ensure the integrity of downloaded models.
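Digest verification amounts to hashing the downloaded file and comparing the result with a published value. The Python sketch below assumes a SHA-256 digest; the description does not say which hash algorithm the app uses, and `sha256_digest` and `verify_model` are hypothetical helpers, not part of Local AI Playground.

```python
import hashlib
from pathlib import Path


def sha256_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large model files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # iter() with a sentinel keeps reading until f.read() returns b"" at EOF.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: Path, expected_hex: str) -> bool:
    """Return True only if the file's SHA-256 matches the published digest (assumed algorithm)."""
    return sha256_digest(path) == expected_hex.strip().lower()
```

Streaming the file in chunks matters here because model weights are often several gigabytes; a mismatch should cause the download to be discarded rather than loaded.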

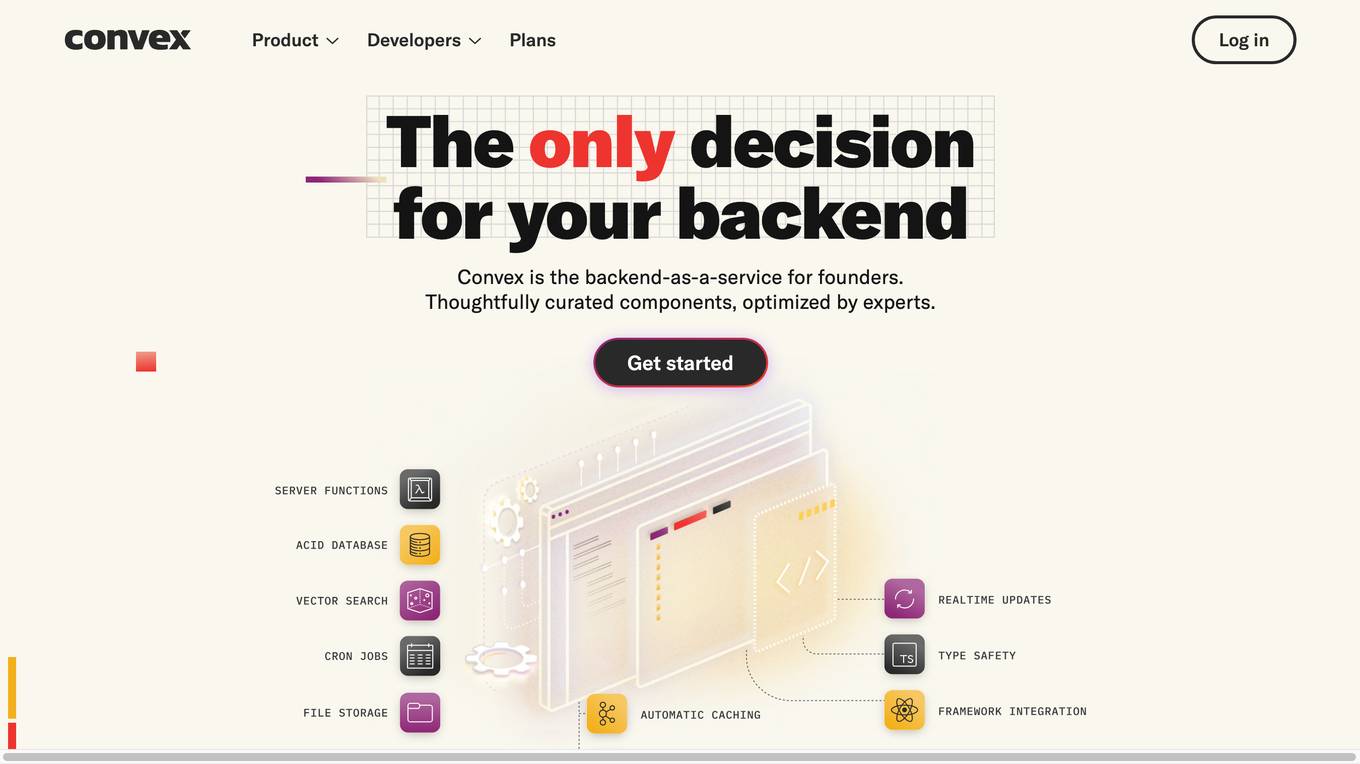

Convex

Convex is a full-stack TypeScript development platform that provides a flexible, fully ACID-compliant database for building real-time, reactive applications. It also offers actions, jobs, and webhooks that make it easy to integrate with third-party services, along with documentation, tutorials, and a community forum to help developers get started.

Goptimise

Goptimise is a no-code, AI-powered backend builder that helps developers create scalable backend solutions. It runs on dedicated infrastructure with built-in security and scalability. Goptimise simplifies software rollouts with automated one-click deployment. It also provides smart API suggestions: AI algorithms recommend API designs tailored to each project to speed up development. Its visual interface, integrations, and customizable workspaces support dynamic data management and a personalized development experience.

Koxy AI

Koxy AI is a no-code, AI-powered serverless backend platform that allows developers to build and deploy globally distributed, fast, secure, and scalable backends. It offers a wide range of features, including a drag-and-drop builder, real-time data synchronization, and integration with over 80,000 AI models. Koxy AI is designed to make backend development faster, easier, and more accessible, even for those with no coding experience.

Vairflow

Vairflow is a next-generation IDE for cloud services that helps developers build faster. It provides an AI-driven development environment that breaks complex ideas down into reusable components, enabling development and deployment of backend microservices, web UIs, and mobile app UIs. Vairflow eliminates local environment setup and lets developers deploy their components with a single click. It also offers code generation, code completion, code explanation, and monitoring of inter-service dependencies, powered by Code Llama. Additionally, Vairflow provides live previews for full-stack, multi-platform apps, with upcoming support for built-in multi-browser web previews and Android and iOS emulators.

Pythagora

Pythagora is an AI-powered development tool for creating software applications. It aims to streamline the entire development process and make it accessible to developers of all skill levels. Pythagora integrates with Visual Studio Code, providing real-time assistance throughout development. Its AI engine, powered by GPT Pilot and GPT-4, lets you describe your app's specifications and requirements in plain English. With Pythagora, you can:

- Break down complex app specifications into manageable tasks
- Define the project architecture and select appropriate technologies
- Design and implement both frontend and backend components
- Troubleshoot errors and debug code
- Use version control to track code changes and collaborate
- Deploy your app to the cloud with a single click
- Generate automated tests to check code quality
- Integrate external documentation to give the AI a fuller understanding of the app

Instead of relying on extensive documentation, you converse with the AI assistant, which guides you through each step of the development process by clarifying requirements, resolving issues, and offering suggestions. Pythagora aims to turn ideas into functional apps quickly, reduce debugging time, and produce production-level code, whether you're a seasoned developer or just starting out.

Spine AI

Spine AI is a managed service that helps businesses deploy conversational interfaces that can execute complex workflows, perform bulk actions, and provide real-time data insights. It is designed to work with any product that has a REST or GraphQL API, and can be used to improve user flows, provide deep business insights, and offer a better input method for AI touchpoints. Spine AI is backed by Y Combinator and has a team of experienced AI engineers who can help businesses get started with AI.

GitWit

GitWit is an online tool that helps you build web apps quickly, even if you don't have any coding experience. With GitWit, you can create a React app in minutes and use AI to augment your own coding skills. GitWit supports React, Tailwind, and Node.js, and it has generated over 1,000 projects to date. GitWit can help you build many types of web apps, from simple landing pages to complex e-commerce stores.

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and generative AI. It offers hybrid vector and full-text search, fast-scaling integrations, and a free tier. According to SingleStore, it can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.
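Hybrid search needs some way to merge a vector-similarity ranking with a full-text ranking. As a generic illustration, the Python sketch below uses reciprocal rank fusion (RRF), a common fusion technique; it is not necessarily what SingleStore does internally, and the document IDs are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several best-first lists of document IDs into one fused ranking.

    A document's fused score is the sum of 1 / (k + rank) over every list it
    appears in (rank starts at 1). k = 60 is the constant commonly used for RRF;
    a larger k flattens the advantage of top-ranked hits.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical result lists from a vector query and a full-text query.
vector_hits = ["doc3", "doc1", "doc7"]
keyword_hits = ["doc1", "doc9", "doc3"]
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

Here `doc1` comes out on top because it ranks highly in both lists, even though the vector query alone preferred `doc3`. RRF uses only ranks, so it avoids normalizing similarity scores and text-relevance scores onto a common scale.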

GPT Engineer

GPT Engineer is a tool that allows users to build interactive web apps using natural language. It is designed to be fast and easy to use, with a shared codebase for AI and humans. This makes it a great tool for prototyping and iterating on new ideas.

Marblism

Marblism is a platform that allows developers to quickly and easily launch React and Node.js applications. With Marblism, developers can generate the database schema, all the endpoints in the API, the design system, and even a few pages in the front-end. This can save developers a significant amount of time and effort, allowing them to focus on adding their unique touch to their applications.

Lazy AI

Lazy AI is a platform that enables users to build and modify full stack web apps with prompts and deploy to the cloud with one click. It provides a variety of templates and integrations to help users get started quickly and easily. Lazy AI is designed to be accessible to users of all skill levels, from beginners to experienced developers.

Nerdynav

Nerdynav is a website that provides reviews of AI tools and software for businesses. The website is run by Nav, a software developer and online entrepreneur who has tested over 100 tools to help businesses find the best solutions for their needs. Nerdynav's reviews are data-backed and provide insights into the features, advantages, and disadvantages of each tool. The website also includes articles on how to use AI to improve business processes and increase productivity.

Dynaboard

Dynaboard is a collaborative low-code IDE for developers that allows users to build web apps in minutes using a drag-and-drop builder, a flexible code-first UI framework, and generative AI. With Dynaboard, users can connect to popular databases, SaaS apps, or any API with GraphQL or REST endpoints, and secure their apps using any existing OIDC-compliant provider. Dynaboard also offers unlimited editors for team collaboration, multi-environment deployment support, automatic versioning, and easy rollbacks for production-grade confidence.

Vellum AI

Vellum AI is an AI platform that supports OpenAI models hosted on Microsoft Azure. It offers tools for prompt engineering, semantic search, prompt chaining, evaluations, and monitoring. Vellum enables users to build AI systems with features like workflow automation, document analysis, fine-tuning, Q&A over documents, intent classification, summarization, vector search, chatbots, blog generation, sentiment analysis, and more. The platform is backed by top VCs and founders of well-known companies and aims to provide a complete solution for building LLM-powered applications.

Mirage

Mirage is an AI platform that builds custom LLMs to accelerate productivity. It is backed by Sequoia and lets you create custom AI models, train models on your own data, and deploy models to the cloud or on-premises.

Kapa.ai

Kapa.ai is an AI application that provides instant AI answers to technical questions for developers. It learns from technical resources to generate a chatbot that automatically answers questions and helps identify documentation gaps. With over 300k developers worldwide, Kapa.ai offers a range of features to improve developer experience and reduce support time. The application is backed by leading community-focused teams and integrates with various technical knowledge sources.

20 - Open Source AI Tools

sandbox

Sandbox is an open-source cloud-based code editing environment with custom AI code autocompletion and real-time collaboration. It consists of a frontend built with Next.js, TailwindCSS, Shadcn UI, Clerk, Monaco, and Liveblocks, and a backend with Express, Socket.io, Cloudflare Workers, D1 database, R2 storage, Workers AI, and Drizzle ORM. The backend includes microservices for database, storage, and AI functionalities. Users can run the project locally by setting up environment variables and deploying the containers. Contributions are welcome following the commit convention and structure provided in the repository.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

* Semantic search
* Image search
* Product recommendations
* Chatbots
* Anomaly detection

Qdrant's features include:

* Payload storage and filtering
* Hybrid search with sparse vectors
* Vector quantization and on-disk storage
* Distributed deployment
* Query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.
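Payload filtering combined with similarity search can be illustrated with a brute-force Python sketch. This is not the Qdrant client API: Qdrant builds an HNSW vector index and payload indexes and uses its query planner to avoid scanning every point, whereas this sketch scans everything. `Point` and `filtered_search` are hypothetical names.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Point:
    """A stored vector plus its JSON-like payload (hypothetical structure)."""
    id: int
    vector: list[float]
    payload: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(points: list[Point], query: list[float], must: dict, limit: int = 3) -> list[tuple[int, float]]:
    """Exact search: keep points whose payload matches every `must` key, then rank by cosine."""
    candidates = [p for p in points if all(p.payload.get(k) == v for k, v in must.items())]
    scored = [(p.id, cosine(p.vector, query)) for p in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:limit]


points = [
    Point(1, [1.0, 0.0], {"category": "shoes"}),
    Point(2, [0.9, 0.1], {"category": "hats"}),
    Point(3, [0.0, 1.0], {"category": "shoes"}),
    Point(4, [0.7, 0.7], {"category": "shoes"}),
]
results = filtered_search(points, [1.0, 0.0], must={"category": "shoes"}, limit=2)
```

Point 2 is one of the closest vectors to the query but is excluded by the payload filter. Applying the filter before ranking guarantees `limit` matching results whenever enough exist, instead of trimming a top-k list after the fact.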

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, addressing problems such as the difficulty of deploying AI applications and open-source models. Developers can write applications in familiar programming languages like **Python and TypeScript**, **directly defining and using the cloud resources the application needs within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the app's infrastructure needs through **static program analysis** and creates these resources on the specified cloud platform, **simplifying resource creation and application deployment**.
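The idea of deducing infrastructure from application code without running it can be sketched with Python's standard `ast` module. This is an illustrative assumption, not Pluto's implementation: the resource class names `Bucket`, `Queue`, and `KVStore` are hypothetical, and a real tool would also resolve imports, follow variables, and map each resource to a concrete cloud service.

```python
import ast

# Hypothetical resource constructors; they stand in for whatever classes a
# real SDK exposes and are not taken from Pluto's documentation.
RESOURCE_TYPES = {"Bucket", "Queue", "KVStore"}


def infer_resources(source: str) -> list[tuple[str, str]]:
    """Find calls like Bucket("name") and return (resource_type, name) pairs.

    Only direct calls with a string literal as the first argument are recognized;
    the source is parsed, never executed.
    """
    found = []
    for node in ast.walk(ast.parse(source)):
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)):
            continue
        if node.func.id not in RESOURCE_TYPES or not node.args:
            continue
        first = node.args[0]
        if isinstance(first, ast.Constant) and isinstance(first.value, str):
            found.append((node.func.id, first.value))
    return found


app_code = '''
uploads = Bucket("user-uploads")
jobs = Queue("resize-jobs")

def handler(event):
    uploads.put(event["key"], event["body"])
    jobs.push(event["key"])
'''
resources = infer_resources(app_code)
```

The method calls inside `handler` are ignored because they are attribute calls on existing objects, not resource constructors; only the two top-level declarations become infrastructure to provision.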

ray-llm

RayLLM (formerly known as Aviary) is an LLM serving solution built on Ray Serve that makes it easy to deploy and manage a variety of open-source LLMs. It provides an extensive suite of pre-configured open-source LLMs, with defaults that work out of the box. RayLLM supports Transformer models hosted on Hugging Face Hub or present on local disk. It simplifies deploying multiple LLMs and adding new ones, and offers unique autoscaling support, including scale-to-zero. RayLLM fully supports multi-GPU and multi-node model deployments and offers high-performance features like continuous batching, quantization, and streaming. It provides a REST API similar to OpenAI's, making it easy to migrate from and cross-test against OpenAI. RayLLM supports multiple LLM backends out of the box, including vLLM and TensorRT-LLM.
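Because the API follows the shape of OpenAI's chat-completions endpoint, a client can be a plain HTTP POST. The sketch below uses only the Python standard library and builds the request without sending it. The base URL `http://localhost:8000`, the `/v1/chat/completions` path, and the model ID are assumptions about a local deployment; check the RayLLM documentation for the exact routes and the models you have configured.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str, api_key: str = "not-needed") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    The path and the placeholder API key are assumptions about the deployment.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_chat_request("http://localhost:8000", "meta-llama/Llama-2-7b-chat-hf", "What is Ray Serve?")
# urllib.request.urlopen(req) would send it to a running server.
```

Keeping the request shape identical to OpenAI's is what makes cross-testing cheap: pointing the same client at a different base URL switches between the hosted API and the self-hosted models.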

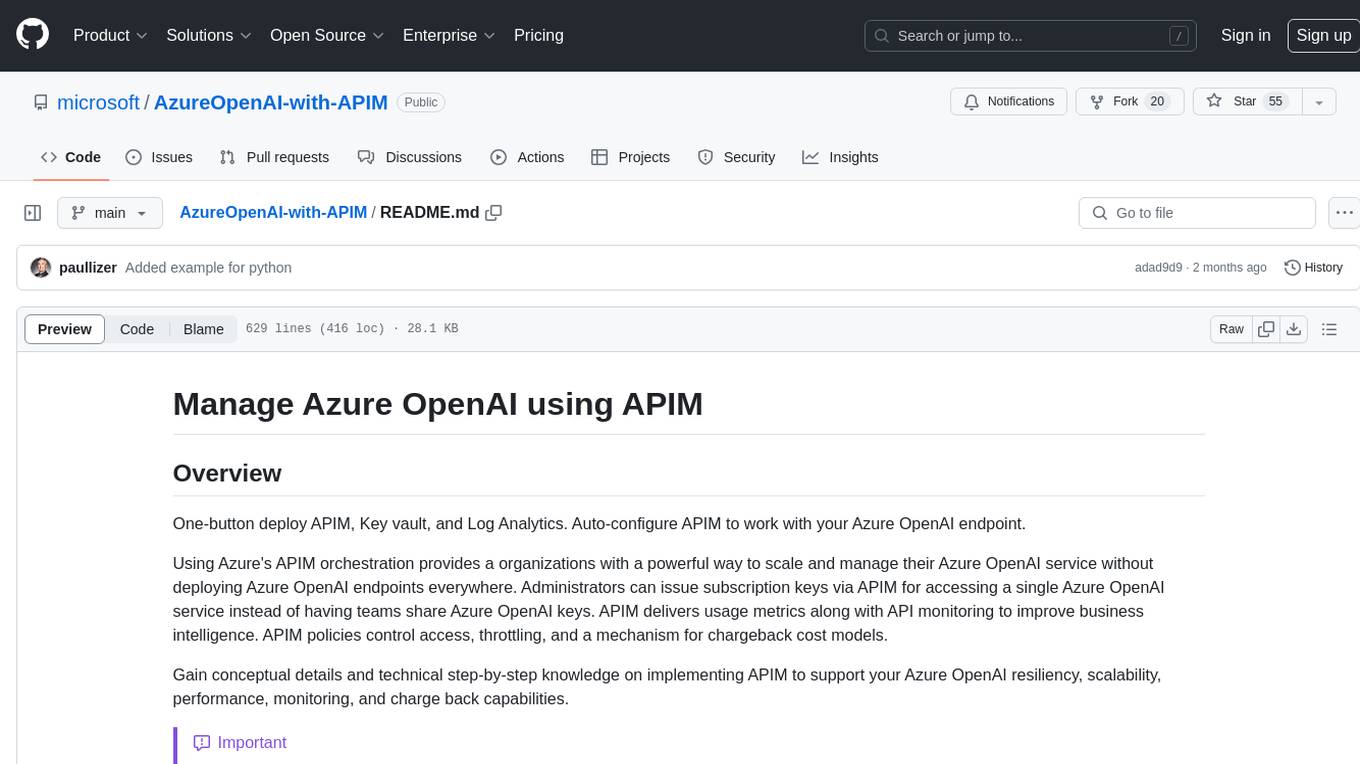

AzureOpenAI-with-APIM

AzureOpenAI-with-APIM is a repository that provides a one-button deploy solution for Azure API Management (APIM), Key Vault, and Log Analytics to work seamlessly with Azure OpenAI endpoints. It enables organizations to scale and manage their Azure OpenAI service efficiently by issuing subscription keys via APIM, delivering usage metrics, and implementing policies for access control and cost management. The repository offers detailed guidance on implementing APIM to enhance Azure OpenAI resiliency, scalability, performance, monitoring, and chargeback capabilities.
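Since APIM identifies each caller by its subscription key (sent by default in the `Ocp-Apim-Subscription-Key` header), chargeback reduces to grouping token usage by subscription and pricing it. The Python sketch below is a minimal illustration; the record fields, subscription names, and per-1k-token prices are hypothetical placeholders, not the repository's log schema or actual Azure pricing.

```python
from collections import defaultdict


def chargeback(records: list[dict], prompt_price_per_1k: float, completion_price_per_1k: float) -> dict[str, float]:
    """Total token usage per subscription, then price it once per subscription.

    Summing integer token counts before multiplying by the price avoids
    accumulating floating-point error across many small requests.
    """
    prompt_tokens: dict[str, int] = defaultdict(int)
    completion_tokens: dict[str, int] = defaultdict(int)
    for record in records:
        prompt_tokens[record["subscription"]] += record["prompt_tokens"]
        completion_tokens[record["subscription"]] += record["completion_tokens"]
    return {
        sub: prompt_tokens[sub] / 1000 * prompt_price_per_1k
        + completion_tokens[sub] / 1000 * completion_price_per_1k
        for sub in prompt_tokens
    }


# Hypothetical per-request usage records, one per call through the gateway.
records = [
    {"subscription": "team-a", "prompt_tokens": 120, "completion_tokens": 30},
    {"subscription": "team-b", "prompt_tokens": 500, "completion_tokens": 200},
    {"subscription": "team-a", "prompt_tokens": 80, "completion_tokens": 70},
]
bill = chargeback(records, prompt_price_per_1k=0.5, completion_price_per_1k=1.5)
```

Prompt and completion tokens are priced separately because model pricing commonly differs between input and output tokens.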

LLamaSharp

LLamaSharp is a cross-platform library for running 🦙LLaMA/LLaVA models (and others) on your local device. Based on llama.cpp, LLamaSharp performs inference efficiently on both CPU and GPU. Its higher-level APIs and RAG support make it convenient to deploy LLMs (large language models) in your application.

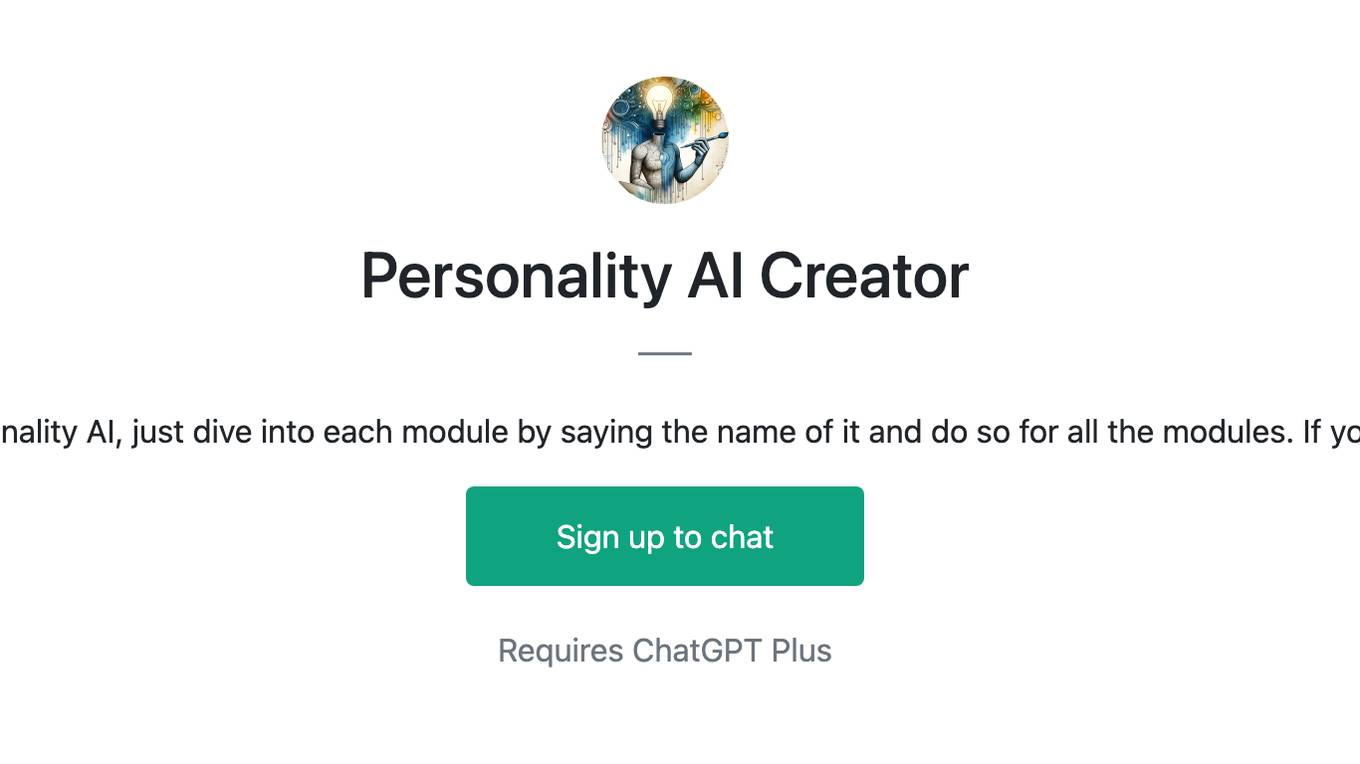

contoso-chat

Contoso Chat is a Python sample demonstrating how to build, evaluate, and deploy a retail copilot application with Azure AI Studio using Promptflow with Prompty assets. The sample implements a Retrieval Augmented Generation approach to answer customer queries based on the company's product catalog and customer purchase history. It uses Azure AI Search, Azure Cosmos DB, Azure OpenAI, text-embedding-ada-002, and GPT models to vectorize user queries, perform AI-assisted evaluation, and generate chat responses. By exploring this sample, users can learn to build a retail copilot application, define prompts using Prompty, design, run, and evaluate a copilot using Promptflow, provision and deploy the solution to Azure using the Azure Developer CLI, and understand Responsible AI practices for evaluation and content safety.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc.), wherever you currently run ML code (e.g., in notebooks, standalone applications, or the cloud). MLflow's current components are:

* MLflow Tracking

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

cognita

Cognita is an open-source framework for organizing your RAG codebase, along with a frontend for experimenting with different RAG customizations. It provides a simple way to organize your codebase so that it is easy to test locally while also being deployable in a production-ready environment. The key issues that arise when productionizing a RAG system from a Jupyter Notebook are:

1. **Chunking and Embedding Job**: The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be triggered by an event to keep the data updated.
2. **Query Service**: The code that generates the answer from the query needs to be wrapped in an API server like FastAPI and deployed as a service. This service should be able to handle multiple queries at the same time and autoscale with higher traffic.
3. **LLM / Embedding Model Deployment**: Oftentimes, if we are using open-source models, we load the model in the Jupyter notebook. In production, the model needs to be hosted as a separate service and called through an API.
4. **Vector DB deployment**: Most testing happens on vector DBs in memory or on disk. In production, however, the DBs need to be deployed in a more scalable and reliable way.

Cognita makes it easy to customize and experiment with everything about a RAG system while still being able to deploy it reliably. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally, with or without any Truefoundry components; however, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app.

### Advantages of using Cognita are:

1. A central reusable repository of parsers, loaders, embedders and retrievers.
2. Ability for non-technical users to play with the UI: upload documents and perform QnA using modules built by the development team.
3. Fully API driven, which allows integration with other systems.

> If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and a feedback mechanism for your user queries.

### Features:

1. Support for multiple document retrievers that use `Similarity Search`, `Query Decomposition`, `Document Reranking`, etc.
2. Support for SOTA open-source embeddings and reranking from `mixedbread-ai`
3. Support for running LLMs with `Ollama`
4. Support for incremental indexing that ingests entire documents in batches (reducing compute burden), keeps track of already indexed documents, and prevents re-indexing of those docs.
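The chunking-and-embedding job and the incremental indexing feature described above can be sketched together in Python. This is a minimal illustration under stated assumptions, not Cognita's code: character-based chunking with overlap and SHA-256 content hashes for change detection are choices made here, and `chunk_text` and `IncrementalIndexer` are hypothetical names. The embedding call itself is left as a comment.

```python
import hashlib


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows of `chunk_size` that overlap by `overlap`."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # A window starting at or after len(text) - overlap would only repeat text
    # the previous window already reached; max(..., 1) keeps one window for short text.
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


class IncrementalIndexer:
    """Remembers a content hash per document so unchanged documents are skipped."""

    def __init__(self) -> None:
        self.hashes: dict[str, str] = {}        # doc_id -> SHA-256 of indexed content
        self.chunks: dict[str, list[str]] = {}  # doc_id -> chunks (embeddings in a real system)

    def ingest(self, docs: dict[str, str], batch_size: int = 2) -> list[str]:
        """Index new or changed documents in batches; return the IDs that were (re)indexed."""
        pending = []
        for doc_id, text in docs.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.hashes.get(doc_id) != digest:
                pending.append((doc_id, text, digest))
        indexed = []
        for start in range(0, len(pending), batch_size):
            batch = pending[start:start + batch_size]
            # A real job would send this batch's chunks to an embedding model here.
            for doc_id, text, digest in batch:
                self.chunks[doc_id] = chunk_text(text)
                self.hashes[doc_id] = digest
                indexed.append(doc_id)
        return indexed


indexer = IncrementalIndexer()
first_run = indexer.ingest({"a": "x" * 500, "b": "hello"})
second_run = indexer.ingest({"a": "x" * 500, "b": "hello world"})
```

On the second run only document `b` is re-chunked, because its content hash changed; that skip is what lets a scheduled or event-triggered job stay cheap as the corpus grows.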

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

chat-ui

A chat interface using open-source models, e.g., OpenAssistant or Llama. It is a SvelteKit app, and it powers the HuggingChat app on hf.co/chat.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.
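At its core, the Retrieval Augmented Generation pattern retrieves passages and places them in the prompt ahead of the question. The sample itself is a JavaScript project; the Python sketch below illustrates only the prompt-assembly step. The passage fields `source` and `content`, the instruction wording, and the character budget (standing in for a token budget) are all hypothetical.

```python
def build_grounded_prompt(question: str, passages: list[dict], max_chars: int = 2000) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources.

    Passages are added in retrieval order until the next one would exceed the
    character budget, so the highest-ranked sources are kept.
    """
    lines = ["Answer using only the sources below. Cite each fact as [source].", ""]
    used = 0
    for passage in passages:
        entry = f"[{passage['source']}]: {passage['content']}"
        if used + len(entry) > max_chars:
            break
        lines.append(entry)
        used += len(entry)
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


# Hypothetical passages as they might come back from a search index.
passages = [
    {"source": "benefits.pdf", "content": "Employees get 20 days of leave."},
    {"source": "handbook.pdf", "content": "Leave requests go to HR."},
]
prompt = build_grounded_prompt("How many leave days?", passages)
```

Labeling each passage with its source name is what lets the model's answer carry citations back to the indexed documents.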

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface with an emphasis on easily accessible power tools, high performance, and extensibility. It is designed to be a one-stop shop for all things Stable Diffusion, providing a wide range of features to enhance the user experience.

kernel-memory

Kernel Memory (KM) is a multi-modal AI Service specialized in the efficient indexing of datasets through custom continuous data hybrid pipelines, with support for Retrieval Augmented Generation (RAG), synthetic memory, prompt engineering, and custom semantic memory processing. KM is available as a Web Service, as a Docker container, a Plugin for ChatGPT/Copilot/Semantic Kernel, and as a .NET library for embedded applications. Utilizing advanced embeddings and LLMs, the system enables Natural Language querying for obtaining answers from the indexed data, complete with citations and links to the original sources. Designed for seamless integration as a Plugin with Semantic Kernel, Microsoft Copilot and ChatGPT, Kernel Memory enhances data-driven features in applications built for most popular AI platforms.

free-for-life

A massive list of products and services that are completely free! Its categories include APIs, Data & ML; Artificial Intelligence; BaaS; Code Editors; Code Generation; DNS; Databases; Design & UI; Domains; Email; Font; For Students; Forms; Linux Distributions; Messaging & Streaming; PaaS; Payments & Billing; and SSL.

awesome-langchain

LangChain is an amazing framework for getting LLM projects done in no time, and the ecosystem is growing fast. This list is an attempt to keep track of the initiatives around LangChain. Subscribe to the newsletter to stay informed about Awesome LangChain; we send a couple of emails per month about the articles, videos, projects, and tools that grabbed our attention. Contributions are welcome: add links through pull requests or create an issue to start a discussion. Please read the contribution guidelines before contributing.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, integrated into a web application that provides a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files or point to storage, and the accelerator takes care of the technical setup needed to transform documents. Users can create the web app in their own subscription, with security and authentication.

20 - OpenAI GPTs

Flask Expert Assistant

This GPT is a specialized assistant for Flask, the popular web framework in Python. It is designed to help both beginners and experienced developers with Flask-related queries, ranging from basic setup and routing to advanced features like database integration and application scaling.

Frontend Developer

AI front-end developer expert in coding React, Next.js, Vue, Svelte, TypeScript, Gatsby, Angular, HTML, CSS, JavaScript & advanced in Flexbox, Tailwind & Material Design. Mentors junior, intermediate & senior front-end developers alike in coding & debugging. Let’s code, build & deploy a SaaS app.

HuggingFace Helper

A witty yet succinct guide to Hugging Face, offering technical assistance on using the platform, based on its Learning Hub.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills. Your DevOps GYM

Personality AI Creator

I will create a quality dataset for a personality AI. Just dive into each module by saying its name, and do so for all the modules. If you find it useful, share it with your friends.

Solidity Master

I'll help you master Solidity to become a better smart contract developer.

Apple CoreML Complete Code Expert

A detailed expert trained on all 3,018 pages of Apple CoreML, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

Tech Tutor

A tech guide for software engineers, focusing on the latest tools and foundational knowledge.