AI tools for video

Related Tools:

DeployMaster

DeployMaster is a platform for managing software deployments. It lets users automate the deployment process so that software updates and changes reach servers and applications smoothly and efficiently. With features like version control, rollback options, and monitoring capabilities, users can easily track and manage their deployments. The platform simplifies the deployment process, reducing errors and downtime and improving overall productivity.

VisionStory

VisionStory is an AI-powered video creation platform that allows users to transform images and text into captivating videos with lifelike avatars, natural voiceovers, and dynamic animations. With features like AI video creation, voice cloning, green screen effects, and support for multiple languages, VisionStory empowers content creators, marketers, educators, and YouTubers to produce high-quality video content effortlessly. The platform offers fast rendering, HD video output, and affordable pricing, making it a valuable tool for solo creators and teams alike.

Videograph

Videograph is an AI-powered video streaming platform that offers a range of video APIs for live and on-demand video streaming. It provides advanced video analytics, content distribution analytics, and features like portrait mode conversion, digital asset management, live streaming with low latency, content monetization, and dynamic ad insertion. With seamless organization through Digital Asset Management, Videograph enables users to elevate their content strategy with precision analytics. The platform also offers a user-friendly interface for easy video management and detailed insights on viewership metrics.

Boolvideo

Boolvideo is an AI video generator application that allows users to turn various content such as product URLs, blog URLs, images, and text into high-quality videos with dynamic AI voices and engaging audio-visual elements. It offers a user-friendly interface and a range of features to create captivating videos effortlessly. Users can customize videos with AI co-pilots, choose from professional templates, and make advanced edits with a lightweight editor. Boolvideo caters to a wide range of use cases, including e-commerce, content creation, marketing, design, photography, and more.

video2quiz

video2quiz is an AI tool that allows users to create quizzes in seconds based on any video. The platform emphasizes the importance of human knowledge for skills and professionalism, with a focus on validating learnings through quizzes and tests. Founded in 2023, video2quiz offers consulting services, AI tools, and the K3 LMS to help individuals, teams, and enterprises thrive in the AI era.

Video2Recipe

Video2Recipe is an AI tool that allows users to convert their favorite cooking videos into recipes effortlessly. By simply pasting the video URL or ID, the AI generates step-by-step instructions and ingredient lists. The platform aims to simplify the process of following cooking tutorials and make it more accessible for users to recreate dishes they love.

VideoAsk by Typeform

VideoAsk by Typeform is an interactive video platform that helps streamline conversations and build business relationships at scale. It offers features such as asynchronous interviews, easy scheduling, tagging, gathering contact info, capturing leads, research and feedback, training, customer support, and more. Users can create interactive video forms, conduct async interviews, and engage with their audience through AI-powered video chatbots. The platform is user-friendly, code-free, and integrates with over 1,500 applications through Zapier.

Video Summarizer

Video Summarizer is an AI tool designed to generate educational summaries from lengthy videos in multiple languages. It simplifies the process of summarizing video content, making it easier for users to grasp key information efficiently. The tool is user-friendly and efficient, catering to individuals seeking quick and concise video summaries for educational purposes.

NeuralNexus

Leveraging the power of models like VisionaryGeniusAI, GaiaAI, ACALLM, GannAI, and many more, I will generate answers that go beyond standard replies, instead offering a unique blend of insights and perspectives drawn from multiple domains and methodologies.

Visionary Business Coach

A vision-based business coach specializing in plan creation and strategy refinement.

Video Brief Genius

Transform your brand! Provide brand and product info, and we'll craft a unique, visually stunning 30-45 second video brief. Simple, effective, impactful.

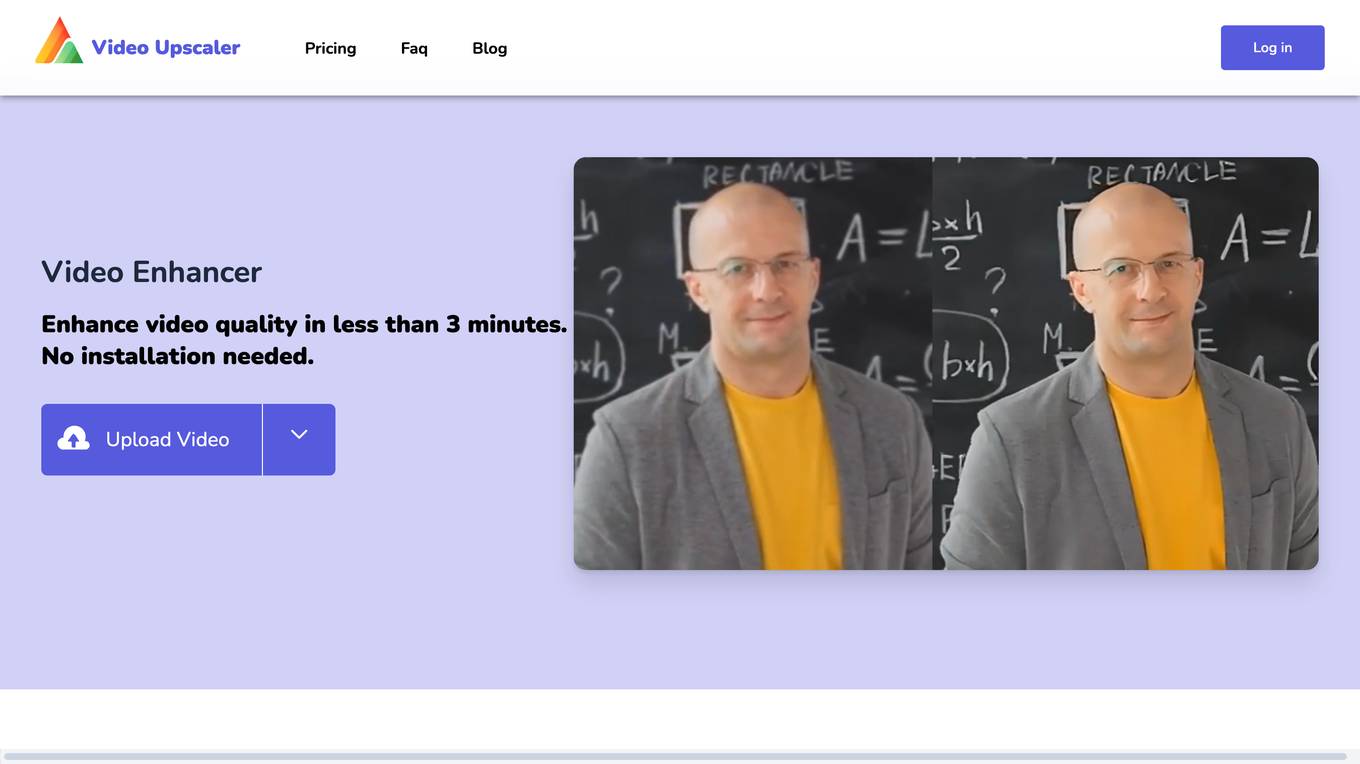

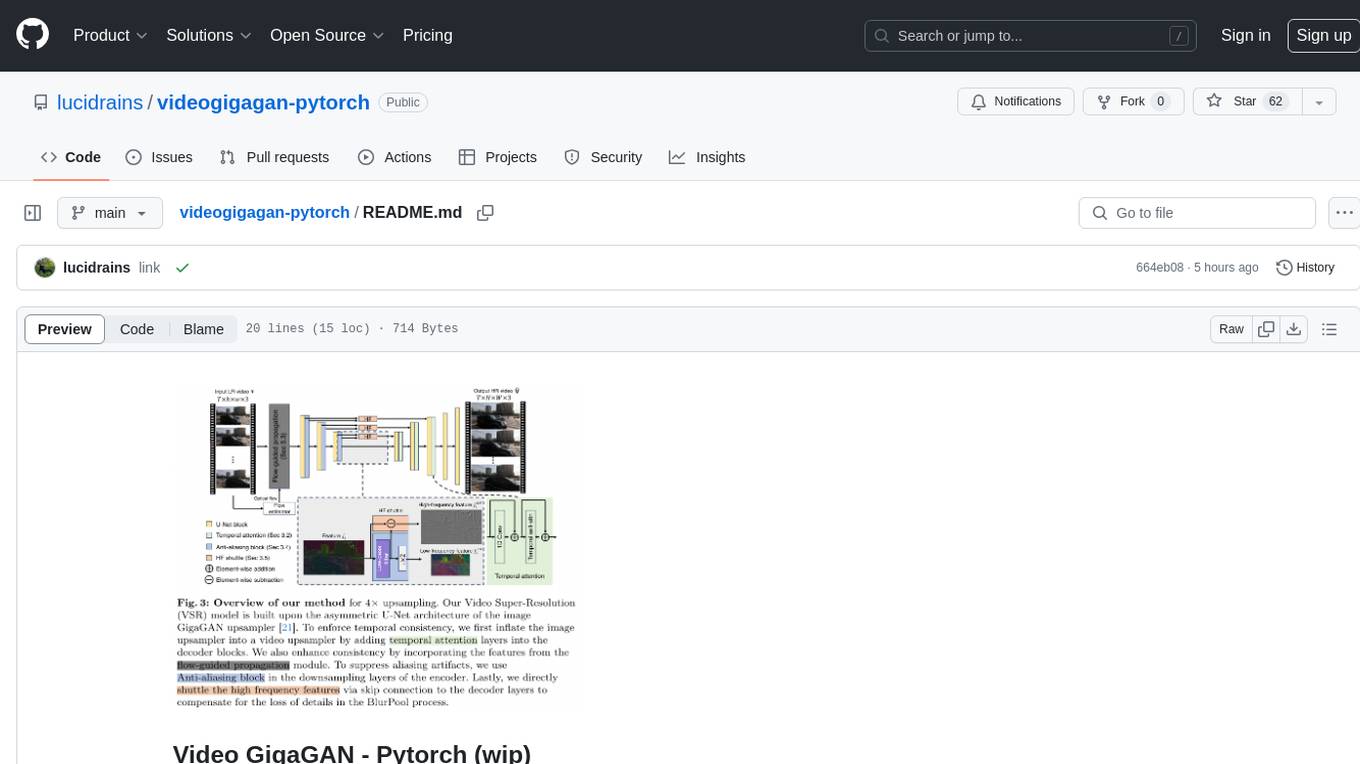

videogigagan-pytorch

Video GigaGAN - PyTorch is an implementation of Video GigaGAN, a state-of-the-art video upsampling technique developed by Adobe AI labs. The project provides a PyTorch implementation for researchers and developers interested in video super-resolution. The codebase allows users to replicate the results of the original research paper and experiment with video upscaling techniques, and the repository includes the code and resources needed to train and test the GigaGAN model on video datasets. Researchers can use this implementation to enhance the visual quality of low-resolution videos and explore advancements in video super-resolution technology.

Video-MME

Video-MME is the first-ever comprehensive evaluation benchmark of Multi-modal Large Language Models (MLLMs) in video analysis. It assesses the capabilities of MLLMs in processing video data, covering a wide range of visual domains, temporal durations, and data modalities. The dataset comprises 900 videos totaling 256 hours, with 2,700 human-annotated question-answer pairs. It distinguishes itself through duration variety, diversity in video types, breadth in data modalities, and quality in annotations.
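As a rough sanity check on those figures, the dataset averages can be worked out directly. This is a back-of-the-envelope sketch that uses only the numbers quoted above; the function and variable names are illustrative and are not part of the benchmark's code.

```python
# Figures quoted in the Video-MME description.
NUM_VIDEOS = 900
TOTAL_HOURS = 256
NUM_QA_PAIRS = 2700


def dataset_averages(num_videos, total_hours, num_qa_pairs):
    """Return (average minutes per video, QA pairs per video)."""
    avg_minutes = total_hours * 60 / num_videos
    qa_per_video = num_qa_pairs / num_videos
    return avg_minutes, qa_per_video


avg_minutes, qa_per_video = dataset_averages(NUM_VIDEOS, TOTAL_HOURS, NUM_QA_PAIRS)
print(f"{avg_minutes:.1f} minutes per video, {qa_per_video:.0f} QA pairs per video")
```

These are averages only. Because the benchmark emphasizes duration variety, individual videos may be much shorter or longer than the mean.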

videodb-python

VideoDB Python SDK allows you to interact with the VideoDB serverless database. Manage videos as intelligent data, not files. It's scalable, cost-efficient & optimized for AI applications and LLM integration. The SDK provides functionalities for uploading videos, viewing videos, streaming specific sections of videos, searching inside a video, searching inside multiple videos in a collection, adding subtitles to a video, generating thumbnails, and more. It also offers features like indexing videos by spoken words, semantic indexing, and future indexing options for scenes, faces, and specific domains like sports. The SDK aims to simplify video management and enhance AI applications with video data.

video-subtitle-remover

Video-subtitle-remover (VSR) is AI-based software that removes hard subtitles from videos. It provides the following functions:

- Lossless resolution: remove hard subtitles from videos and generate files with the subtitles removed
- Fill the region of the removed subtitles using a powerful AI algorithm model (non-adjacent pixel filling and mosaic removal)
- Support custom subtitle positions, removing only the subtitles in defined positions (input position)
- Support automatic removal of all text in the entire video (no input position required)
- Support batch removal of watermark text from multiple images

videokit

VideoKit is a full-featured user-generated content solution for Unity Engine, enabling video recording, camera streaming, microphone streaming, social sharing, and conversational interfaces. It is cross-platform, with C# source code available for inspection. Users can share media, save to camera roll, pick from camera roll, stream camera preview, record videos, remove background, caption audio, and convert text commands. VideoKit requires Unity 2022.3+ and supports Android, iOS, macOS, Windows, and WebGL platforms.

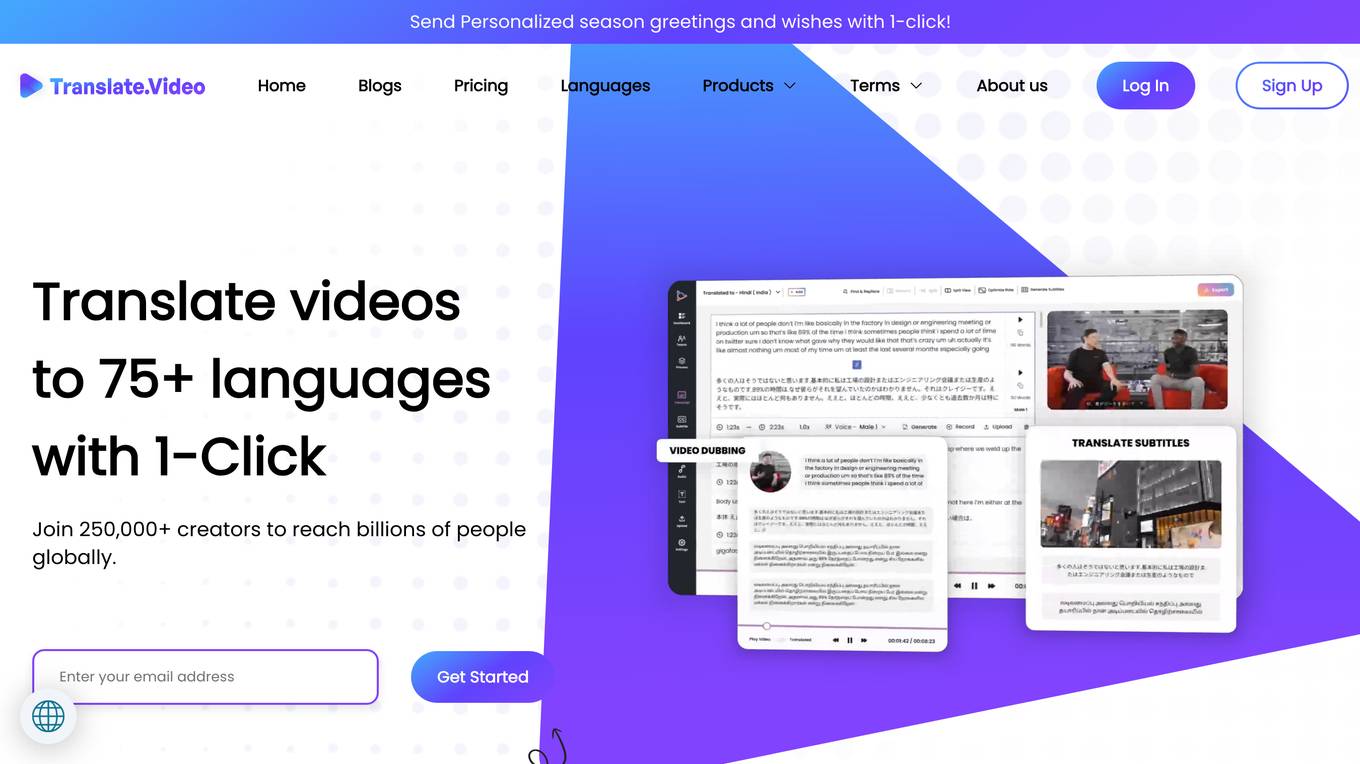

VideoLingo

VideoLingo is an all-in-one video translation, localization, and dubbing tool designed to generate Netflix-quality subtitles. It aims to eliminate stiff machine translation and multi-line subtitles, and it can also add high-quality dubbing, allowing knowledge from around the world to be shared across language barriers. Through an intuitive Streamlit web interface, the entire process from video link to embedded bilingual subtitles, and even dubbing, can be completed in two clicks. Key features include:

- Downloading videos from YouTube links with yt-dlp
- Word-level subtitle recognition and timing with WhisperX
- Subtitle segmentation based on sentence meaning, using NLP and GPT
- A GPT-summarized terminology knowledge base for context-aware translation
- A three-step translation process (direct translation, reflection, free translation) to eliminate awkward machine translation
- Checks on single-line subtitle length and translation quality according to Netflix standards
- High-quality aligned dubbing with GPT-SoVITS
- An integrated package for one-click startup and one-click output in Streamlit
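The single-line subtitle length check mentioned above can be illustrated with a minimal sketch. The 42-character limit is an assumption based on the commonly cited Netflix guideline for many Latin-script languages; the function name and sample text are hypothetical, and this is not VideoLingo's own code.

```python
MAX_CHARS_PER_LINE = 42  # assumed limit; not taken from VideoLingo


def find_overlong_lines(subtitles, max_chars=MAX_CHARS_PER_LINE):
    """Return (index, length) for every single-line subtitle longer than max_chars."""
    return [
        (i, len(text))
        for i, text in enumerate(subtitles)
        if len(text) > max_chars
    ]


# Hypothetical sample: the second line is too long and would need re-splitting.
sample = [
    "Welcome back to the channel.",
    "Today we are going to look at how subtitles are split by meaning.",
]
print(find_overlong_lines(sample))
```

A real pipeline would likely feed flagged lines back into the segmentation step rather than truncating them, so that splits still follow sentence meaning.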

VideoCaptioner

VideoCaptioner is a video subtitle processing assistant based on a large language model (LLM). It supports speech recognition, subtitle segmentation, optimization, and translation, and handles the full process end to end. It is user-friendly and does not require high-end hardware, supporting both network calls and local offline (GPU-enabled) speech recognition. It uses an LLM for intelligent subtitle segmentation, correction, and translation, producing polished subtitles for videos. Features include accurate subtitle generation without a GPU, LLM-based intelligent segmentation and sentence splitting, AI subtitle optimization and translation, batch video subtitle synthesis, an intuitive subtitle editing interface with real-time preview and quick editing, and low model token consumption, with a basic built-in LLM for easy use.

Video-Super-Resolution-Library

Intel® Library for Video Super Resolution (Intel® Library for VSR) is a project that offers a variety of algorithms, including machine learning and deep learning implementations, to convert low-resolution videos to high resolution. It enhances the RAISR algorithm to provide better visual quality and real-time performance for upscaling on Intel® Xeon® platforms and Intel® GPUs. The project is developed in C++ and utilizes Intel® AVX-512 on Intel® Xeon® Scalable Processor family and OpenCL support on Intel® GPUs. It includes an FFmpeg plugin inside a Docker container for ease of testing and deployment.

VideoChat

VideoChat is a real-time voice-interaction digital human tool that supports both an end-to-end voice solution (GLM-4-Voice - THG) and a cascade solution (ASR-LLM-TTS-THG). Users can customize the digital human's appearance and voice, and the tool supports voice cloning and achieves a low first-packet delay of 3s. It offers modules such as ASR, LLM, MLLM, TTS, and THG for different functions. Local deployment requires specific hardware and software configurations, and the project provides options for downloading weights and customizing the digital human's appearance and voice. The tool also addresses known issues related to resource availability, video streaming optimization, and model loading.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

AIO-Video-Downloader

AIO Video Downloader is an open-source Android application built on the robust yt-dlp backend with the help of youtubedl-android. It aims to be the most powerful download manager available, offering a clean and efficient interface while unlocking advanced downloading capabilities with minimal setup. With support for 1000+ sites and virtually any downloadable content across the web, AIO delivers a seamless yet powerful experience that balances speed, flexibility, and simplicity.