promptbook

Turn your company's scattered knowledge into AI ready Books ✨

Stars: 150

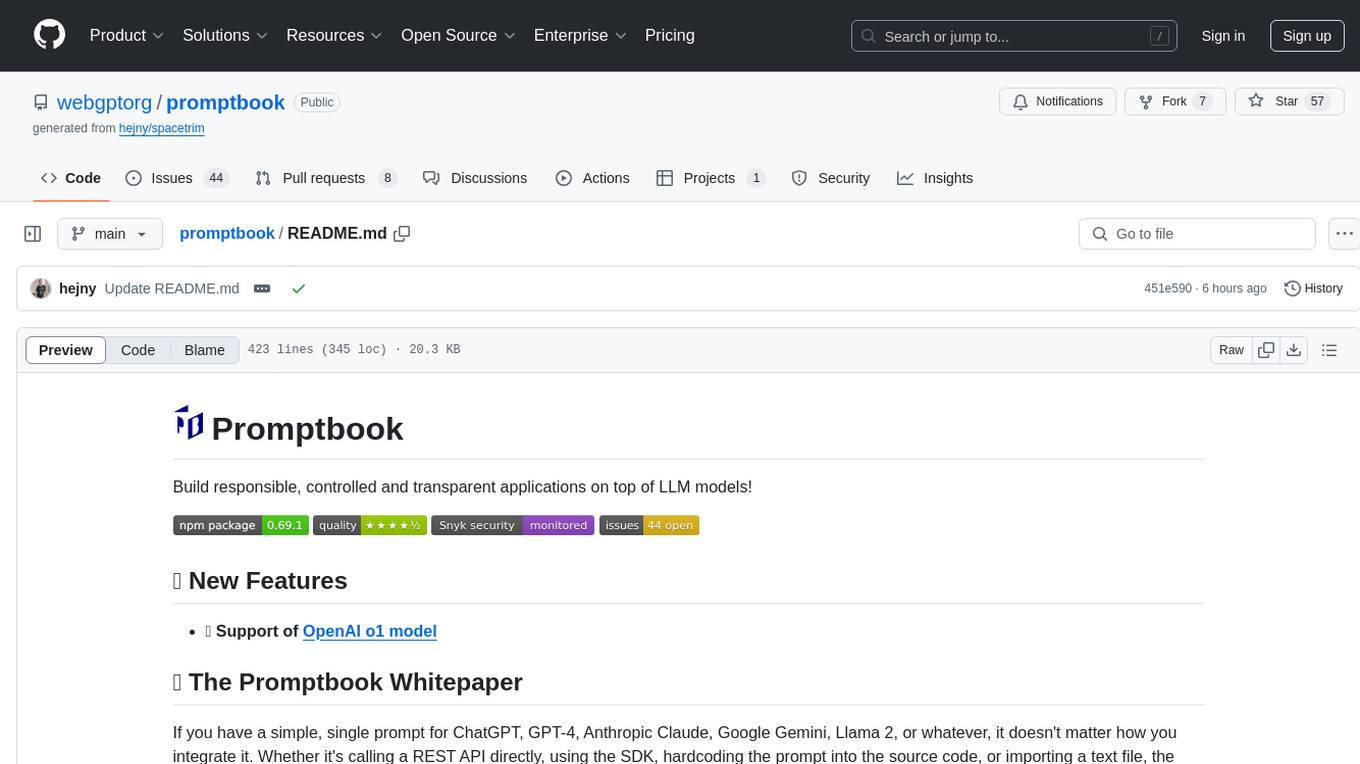

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

README:

Turn your company's scattered knowledge into AI ready Books

- Gemini 3 Support

Nowadays, the biggest challenge for most business applications isn't the raw capabilities of AI models. Large language models such as GPT-5.2 and Claude-4.5 are incredibly capable.

The main challenge lies in managing the context, providing rules and knowledge, and narrowing the personality.

In Promptbook, you can define your context using simple Books that are very explicit, easy to understand and write, reliable, and highly portable.

|

Paul Smith |

We have created a language called Book, which allows you to write AI agents in their native language and create your own AI persona. Book provides a guide to define all the traits and commitments.

You can look at it as "prompting" (or writing a system message), but decorated by commitments.

Commitments are special syntax elements that define contracts between you and the AI agent. They are transformed by Promptbook Engine into low-level parameters like which model to use, its temperature, system message, RAG index, MCP servers, and many other parameters. For some commitments (for example RULE commitment) Promptbook Engine can even create adversary agents and extra checks to enforce the rules.

Personas define the character of your AI persona, its role, and how it should interact with users. It sets the tone and style of communication.

|

Paul Smith & Associés |

Knowledge Commitment allows you to provide specific information, facts, or context that the AI should be aware of when responding.

This can include domain-specific knowledge, company policies, or any other relevant information.

Promptbook Engine will automatically enforce this knowledge during interactions. When the knowledge is short enough, it will be included in the prompt. When it is too long, it will be stored in vector databases and RAG retrieved when needed. But you don't need to care about it.

|

Paul Smith & Associés |

Rules will enforce specific behaviors or constraints on the AI's responses. This can include ethical guidelines, communication styles, or any other rules you want the AI to follow.

Depending on rule strictness, Promptbook will either propagate it to the prompt or use other techniques, like adversary agent, to enforce it.

|

Paul Smith & Associés |

Team commitment allows you to define the team structure and advisory fellow members the AI can consult with. This allows the AI to simulate collaboration and consultation with other experts, enhancing the quality of its responses.

|

Paul Smith & Associés |

!!!@@@

!!!@@@

!!!@@@

Promptbook project is ecosystem of multiple projects and tools, following is a list of most important pieces of the project:

| Project | About |

|---|---|

| Agents Server | Place where you "AI agents live". It allows to create, manage, deploy, and interact with AI agents created in Book language. |

| Book language |

Human-friendly, high-level language that abstracts away low-level details of AI. It allows to focus on personality, behavior, knowledge, and rules of AI agents rather than on models, parameters, and prompt engineering.

There is also a plugin for VSCode to support .book file extension

|

| Promptbook Engine | Promptbook engine can run AI agents based on Book language. It is released as multiple NPM packages and Promptbook Agent Server as Docker Package Agent Server is based on Promptbook Engine. |

Join our growing community of developers and users:

| Platform | Description |

|---|---|

| 💬 Discord | Join our active developer community for discussions and support |

| 🗣️ GitHub Discussions | Technical discussions, feature requests, and community Q&A |

| Professional updates and industry insights | |

| General announcements and community engagement | |

| 🔗 ptbk.io | Official landing page with project information |

| 📸 Instagram @promptbook.studio | Visual updates, UI showcases, and design inspiration |

See detailed guides and API reference in the docs or online.

For information on reporting security vulnerabilities, see our Security Policy.

This library is divided into several packages, all are published from single monorepo. You can install all of them at once:

npm i ptbkOr you can install them separately:

⭐ Marked packages are worth to try first

- ⭐ ptbk - Bundle of all packages, when you want to install everything and you don't care about the size

-

promptbook - Same as

ptbk - ⭐🧙♂️ @promptbook/wizard - Wizard to just run the books in node without any struggle

- @promptbook/core - Core of the library, it contains the main logic for promptbooks

- @promptbook/node - Core of the library for Node.js environment

- @promptbook/browser - Core of the library for browser environment

- ⭐ @promptbook/utils - Utility functions used in the library but also useful for individual use in preprocessing and postprocessing LLM inputs and outputs

- @promptbook/markdown-utils - Utility functions used for processing markdown

- (Not finished) @promptbook/wizard - Wizard for creating+running promptbooks in single line

- @promptbook/javascript - Execution tools for javascript inside promptbooks

- @promptbook/openai - Execution tools for OpenAI API, wrapper around OpenAI SDK

- @promptbook/anthropic-claude - Execution tools for Anthropic Claude API, wrapper around Anthropic Claude SDK

- @promptbook/vercel - Adapter for Vercel functionalities

- @promptbook/google - Integration with Google's Gemini API

- @promptbook/deepseek - Integration with DeepSeek API

- @promptbook/ollama - Integration with Ollama API

- @promptbook/azure-openai - Execution tools for Azure OpenAI API

- @promptbook/fake-llm - Mocked execution tools for testing the library and saving the tokens

- @promptbook/remote-client - Remote client for remote execution of promptbooks

- @promptbook/remote-server - Remote server for remote execution of promptbooks

-

@promptbook/pdf - Read knowledge from

.pdfdocuments - @promptbook/documents - Integration of Markitdown by Microsoft

-

@promptbook/documents - Read knowledge from documents like

.docx,.odt,… -

@promptbook/legacy-documents - Read knowledge from legacy documents like

.doc,.rtf,… - @promptbook/website-crawler - Crawl knowledge from the web

- @promptbook/editable - Editable book as native javascript object with imperative object API

- @promptbook/templates - Useful templates and examples of books which can be used as a starting point

- @promptbook/types - Just typescript types used in the library

- @promptbook/color - Color manipulation library

- ⭐ @promptbook/cli - Command line interface utilities for promptbooks

- 🐋 Docker image - Promptbook server

The following glossary is used to clarify certain concepts:

- Prompt drift is a phenomenon where the AI model starts to generate outputs that are not aligned with the original prompt. This can happen due to the model's training data, the prompt's wording, or the model's architecture.

- Pipeline, workflow scenario or chain is a sequence of tasks that are executed in a specific order. In the context of AI, a pipeline can refer to a sequence of AI models that are used to process data.

- Fine-tuning is a process where a pre-trained AI model is further trained on a specific dataset to improve its performance on a specific task.

- Zero-shot learning is a machine learning paradigm where a model is trained to perform a task without any labeled examples. Instead, the model is provided with a description of the task and is expected to generate the correct output.

- Few-shot learning is a machine learning paradigm where a model is trained to perform a task with only a few labeled examples. This is in contrast to traditional machine learning, where models are trained on large datasets.

- Meta-learning is a machine learning paradigm where a model is trained on a variety of tasks and is able to learn new tasks with minimal additional training. This is achieved by learning a set of meta-parameters that can be quickly adapted to new tasks.

- Retrieval-augmented generation is a machine learning paradigm where a model generates text by retrieving relevant information from a large database of text. This approach combines the benefits of generative models and retrieval models.

- Longtail refers to non-common or rare events, items, or entities that are not well-represented in the training data of machine learning models. Longtail items are often challenging for models to predict accurately.

Note: This section is not a complete dictionary, more list of general AI / LLM terms that has connection with Promptbook

- 📚 Collection of pipelines

- 📯 Pipeline

- 🙇♂️ Tasks and pipeline sections

- 🤼 Personas

- ⭕ Parameters

- 🚀 Pipeline execution

- 🧪 Expectations - Define what outputs should look like and how they're validated

- ✂️ Postprocessing - How outputs are refined after generation

- 🔣 Words not tokens - The human-friendly way to think about text generation

- ☯ Separation of concerns - How Book language organizes different aspects of AI workflows

| Data & Knowledge Management | Pipeline Control |

|---|---|

|

|

| Language & Output Control | Advanced Generation |

|

|

- When you are writing app that generates complex things via LLM - like websites, articles, presentations, code, stories, songs,...

- When you want to separate code from text prompts

- When you want to describe complex prompt pipelines and don't want to do it in the code

- When you want to orchestrate multiple prompts together

- When you want to reuse parts of prompts in multiple places

- When you want to version your prompts and test multiple versions

- When you want to log the execution of prompts and backtrace the issues

- When you have already implemented single simple prompt and it works fine for your job

- When OpenAI Assistant (GPTs) is enough for you

- When you need streaming (this may be implemented in the future, see discussion).

- When you need to use something other than JavaScript or TypeScript (other languages are on the way, see the discussion)

- When your main focus is on something other than text - like images, audio, video, spreadsheets (other media types may be added in the future, see discussion)

- When you need to use recursion (see the discussion)

If you have a question start a discussion, open an issue or write me an email.

- ❔ Why not just use the OpenAI SDK / Anthropic Claude SDK / ...?

- ❔ How is it different from the OpenAI`s GPTs?

- ❔ How is it different from the Langchain?

- ❔ How is it different from the DSPy?

- ❔ How is it different from anything?

- ❔ Is Promptbook using RAG (Retrieval-Augmented Generation)?

- ❔ Is Promptbook using function calling?

See CHANGELOG.md

This project is licensed under BUSL 1.1.

We welcome contributions! See CONTRIBUTING.md for guidelines.

You can also ⭐ star the project, follow us on GitHub or various other social networks.We are open to pull requests, feedback, and suggestions.

Need help with Book language? We're here for you!

- 💬 Join our Discord community for real-time support

- 📝 Browse our GitHub discussions for FAQs and community knowledge

- 🐛 Report issues for bugs or feature requests

- 📚 Visit ptbk.io for more resources and documentation

- 📧 Contact us directly through the channels listed in our signpost

We welcome contributions and feedback to make Book language better for everyone!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for promptbook

Similar Open Source Tools

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

spacy-llm

This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for **fast prototyping** and **prompting** , and turning unstructured responses into **robust outputs** for various NLP tasks, **no training data** required. It supports open-source LLMs hosted on Hugging Face 🤗: Falcon, Dolly, Llama 2, OpenLLaMA, StableLM, Mistral. Integration with LangChain 🦜️🔗 - all `langchain` models and features can be used in `spacy-llm`. Tasks available out of the box: Named Entity Recognition, Text classification, Lemmatization, Relationship extraction, Sentiment analysis, Span categorization, Summarization, Entity linking, Translation, Raw prompt execution for maximum flexibility. Soon: Semantic role labeling. Easy implementation of **your own functions** via spaCy's registry for custom prompting, parsing and model integrations. For an example, see here. Map-reduce approach for splitting prompts too long for LLM's context window and fusing the results back together

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

deepdoctection

**deep** doctection is a Python library that orchestrates document extraction and document layout analysis tasks using deep learning models. It does not implement models but enables you to build pipelines using highly acknowledged libraries for object detection, OCR and selected NLP tasks and provides an integrated framework for fine-tuning, evaluating and running models. For more specific text processing tasks use one of the many other great NLP libraries. **deep** doctection focuses on applications and is made for those who want to solve real world problems related to document extraction from PDFs or scans in various image formats. **deep** doctection provides model wrappers of supported libraries for various tasks to be integrated into pipelines. Its core function does not depend on any specific deep learning library. Selected models for the following tasks are currently supported: * Document layout analysis including table recognition in Tensorflow with **Tensorpack**, or PyTorch with **Detectron2**, * OCR with support of **Tesseract**, **DocTr** (Tensorflow and PyTorch implementations available) and a wrapper to an API for a commercial solution, * Text mining for native PDFs with **pdfplumber**, * Language detection with **fastText**, * Deskewing and rotating images with **jdeskew**. * Document and token classification with all LayoutLM models provided by the **Transformer library**. (Yes, you can use any LayoutLM-model with any of the provided OCR-or pdfplumber tools straight away!). * Table detection and table structure recognition with **table-transformer**. * There is a small dataset for token classification available and a lot of new tutorials to show, how to train and evaluate this dataset using LayoutLMv1, LayoutLMv2, LayoutXLM and LayoutLMv3. * Comprehensive configuration of **analyzer** like choosing different models, output parsing, OCR selection. Check this notebook or the docs for more infos. * Document layout analysis and table recognition now runs with **Torchscript** (CPU) as well and **Detectron2** is not required anymore for basic inference. * [**new**] More angle predictors for determining the rotation of a document based on **Tesseract** and **DocTr** (not contained in the built-in Analyzer). * [**new**] Token classification with **LiLT** via **transformers**. We have added a model wrapper for token classification with LiLT and added a some LiLT models to the model catalog that seem to look promising, especially if you want to train a model on non-english data. The training script for LayoutLM can be used for LiLT as well and we will be providing a notebook on how to train a model on a custom dataset soon. **deep** doctection provides on top of that methods for pre-processing inputs to models like cropping or resizing and to post-process results, like validating duplicate outputs, relating words to detected layout segments or ordering words into contiguous text. You will get an output in JSON format that you can customize even further by yourself. Have a look at the **introduction notebook** in the notebook repo for an easy start. Check the **release notes** for recent updates. **deep** doctection or its support libraries provide pre-trained models that are in most of the cases available at the **Hugging Face Model Hub** or that will be automatically downloaded once requested. For instance, you can find pre-trained object detection models from the Tensorpack or Detectron2 framework for coarse layout analysis, table cell detection and table recognition. Training is a substantial part to get pipelines ready on some specific domain, let it be document layout analysis, document classification or NER. **deep** doctection provides training scripts for models that are based on trainers developed from the library that hosts the model code. Moreover, **deep** doctection hosts code to some well established datasets like **Publaynet** that makes it easy to experiment. It also contains mappings from widely used data formats like COCO and it has a dataset framework (akin to **datasets** so that setting up training on a custom dataset becomes very easy. **This notebook** shows you how to do this. **deep** doctection comes equipped with a framework that allows you to evaluate predictions of a single or multiple models in a pipeline against some ground truth. Check again **here** how it is done. Having set up a pipeline it takes you a few lines of code to instantiate the pipeline and after a for loop all pages will be processed through the pipeline.

codegate

CodeGate is a local gateway that enhances the safety of AI coding assistants by ensuring AI-generated recommendations adhere to best practices, safeguarding code integrity, and protecting individual privacy. Developed by Stacklok, CodeGate allows users to confidently leverage AI in their development workflow without compromising security or productivity. It works seamlessly with coding assistants, providing real-time security analysis of AI suggestions. CodeGate is designed with privacy at its core, keeping all data on the user's machine and offering complete control over data.

kairon

Kairon is an open-source conversational digital transformation platform that helps build LLM-based digital assistants at scale. It provides a no-coding web interface for adapting, training, testing, and maintaining AI assistants. Kairon focuses on pre-processing data for chatbots, including question augmentation, knowledge graph generation, and post-processing metrics. It offers end-to-end lifecycle management, low-code/no-code interface, secure script injection, telemetry monitoring, chat client designer, analytics module, and real-time struggle analytics. Kairon is suitable for teams and individuals looking for an easy interface to create, train, test, and deploy digital assistants.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

neo

Neo.mjs is a revolutionary Application Engine for the web that offers true multithreading and context engineering, enabling desktop-class UI performance and AI-driven runtime mutation. It is not a framework but a complete runtime and toolchain for enterprise applications, excelling in single page apps and browser-based multi-window applications. With a pioneering Off-Main-Thread architecture, Neo.mjs ensures butter-smooth UI performance by keeping the main thread free for flawless user interactions. The latest version, v11, introduces AI-native capabilities, allowing developers to work with AI agents as first-class partners in the development process. The platform offers a suite of dedicated Model Context Protocol servers that give agents the context they need to understand, build, and reason about the code, enabling a new level of human-AI collaboration.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

For similar tasks

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.