bit

AI-powered development workspaces with reusable components, architectural clarity and zero overhead.

Stars: 18235

Bit is a build system that organizes source code into composable components, enabling the creation of reliable, scalable, and consistent applications. It supports the creation of reusable UI components, standard building blocks, shell applications, and atomic deployments. Bit is compatible with various tools in the JavaScript ecosystem and offers official dev environments for popular frameworks. It can be used in different codebase structures like monorepos or polyrepos, and even without repositories. Users can install Bit, create shell applications, compose components, release and deploy components, and modernize existing projects using Bit Cloud or self-hosted scopes.

README:

Website | Docs | Community | Bit Cloud

Bit is the build system to connect components and apps from development to CI in the AI era. Bit organizes source code into composable components, empowering to build reliable, scalable and consistent applications. It enables AI agents to intelligenly create and reuse components via MCP preventing duplication and accelerating development.

⚡ Features

- Reusable components. Create reusable UI components and modules to reuse across your software.

- Standard building blocks. Define the blueprints templates for creating components for devs and AI as one.

- Shell applications. Compose reusable components and features into application shells.

- Atmoic and safe deployments. Ensure simple, safe and optimized deployments of apps and services for testing and production.

Bit supports all tooling in the JS ecosystem and comes out of the box with official dev environments for NodeJS, React, Angular, Vue, React Native, NextJS and far more. All are native to TypeScript and ESM and equipped with the best dev tooling.

Bit is a fit to every codebase structure. You can use Bit components in a monorepo, polyrepo, or even without repositories at all.

Use the Bit installer to install Bit to be available on your PATH.

npx @teambit/bvm installInitialize Bit on a new folder or in an existing project by running the following command:

bit init --default-scope my-org.my-projectMake sure to create your scope on the Bit platform and use the right org and project name. After running the command, Bit is initialized on the chosen directory, and ready to be used via Bit commands, AI agent, your editor or the Bit UI!

Create the application shell to run, compose and deploy your application:

bit create react-app corporate-websiteRun the platform:

bit run corporate-website

Head to http://localhost:3000 to view your application shell. You can start composing the application layout and specific pages to build your application. Learn more on building shell applications.

Create the components to compose into the feature. Run the following command to create a new React UI component for the application login route:

bit create react pages/login

Find simple guides for creating NodeJS modules, UI components and apps, backend services and more on the Create Component docs.

Compose the component into the application shell:

import { Login } from '@my-org/users.pages.login';

import { Routes, Route } from 'react-router-dom';

export function CorporateWebsite() {

return (

<AcmeTheme>

<NavigationProvider>

<Routes>

<Route path="/" element={<div>Hello world</div>} />

<Route path="/login" element={<Login />} />

</Routes>

</NavigationProvider>

</AcmeTheme>

);

}Head to http://localhost:3000/login to view your new login page. You can use bit templates to list official templates or find guides for creating React hooks, backend services, NodeJS modules, UI components and more on our create components docs. Optionally, use bit start to run the Bit UI to preview components in isolation.

You can either use hosted scopes on Bit Cloud or by hosting scopes on your own. Use the following command to create your Bit Cloud account and your first scope.

bit loginUse semantic versioning to version your components:

bit tag --message "my first release" --majorBy default, Bit uses Ripple CI to build components. You can use the --build flag to build the components on the local machine. To tag and export from your CI of choice to automate the release process or use our official CI scripts.

After versioning, you can proceed to release your components:

bit exportHead over to your bit.cloud account to see your components build progress. Once the build process is completed, the components will be available for use using standard package managers:

npm install @my-org/users.pages.loginBit is entirely built with Bit and you can find all its components on Bit Cloud.

Your contribution, no matter how big or small, is much appreciated. Before contributing, please read the code of conduct.

See Contributing.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for bit

Similar Open Source Tools

bit

Bit is a build system that organizes source code into composable components, enabling the creation of reliable, scalable, and consistent applications. It supports the creation of reusable UI components, standard building blocks, shell applications, and atomic deployments. Bit is compatible with various tools in the JavaScript ecosystem and offers official dev environments for popular frameworks. It can be used in different codebase structures like monorepos or polyrepos, and even without repositories. Users can install Bit, create shell applications, compose components, release and deploy components, and modernize existing projects using Bit Cloud or self-hosted scopes.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

slidev-ai

Slidev AI is a web app that leverages LLM (Large Language Model) technology to make creating Slidev-based online presentations elegant and effortless. It is designed to help engineers and academics quickly produce content-focused, minimalist PPTs that are easily shareable online. This project serves as a reference implementation for OpenMCP agent development, a production-ready presentation generation solution, and a template for creating domain-specific AI agents.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

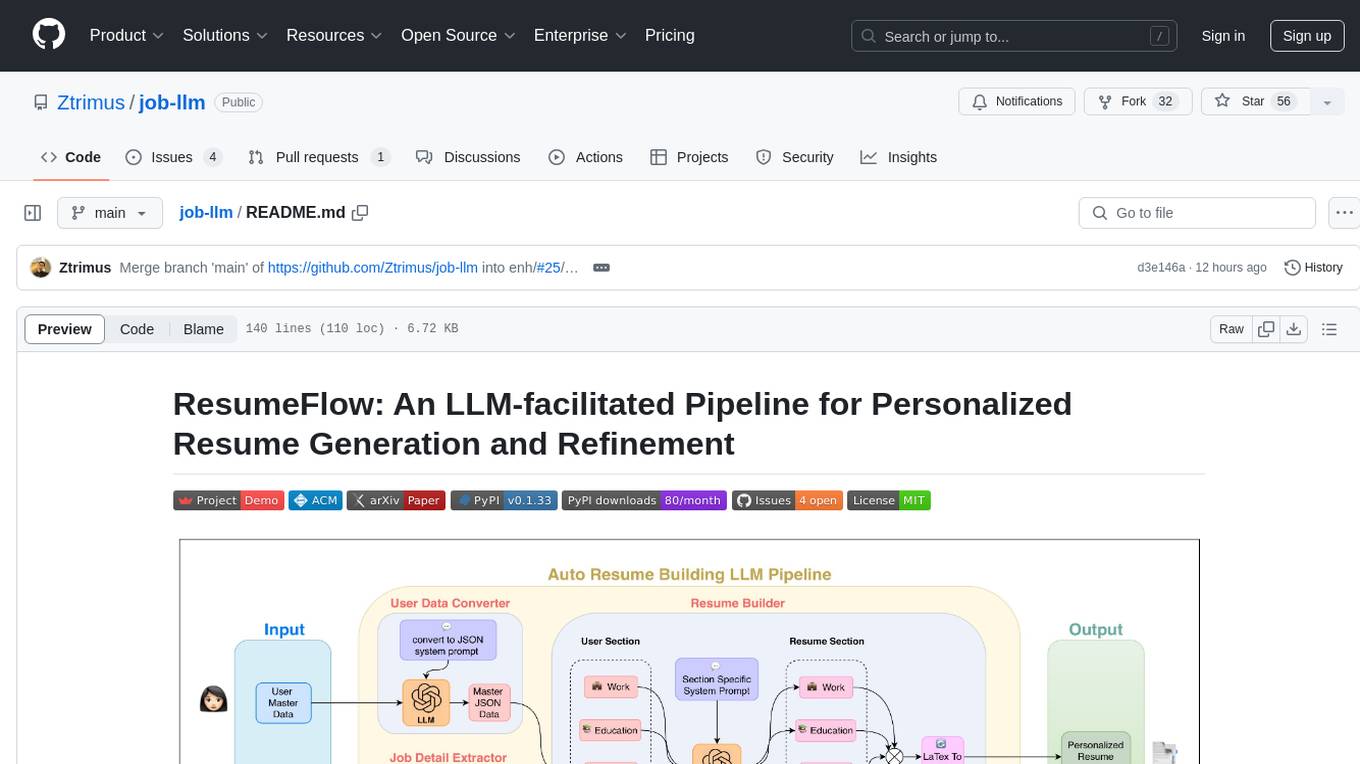

job-llm

ResumeFlow is an automated system utilizing Large Language Models (LLMs) to streamline the job application process. It aims to reduce human effort in various steps of job hunting by integrating LLM technology. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code. The project focuses on leveraging LLMs to automate tasks such as resume generation and refinement, making job applications smoother and more efficient.

air-light

Air-light is a minimalist WordPress starter theme designed to be an ultra minimal starting point for a WordPress project. It is built to be very straightforward, backwards compatible, front-end developer friendly and modular by its structure. Air-light is free of weird "app-like" folder structures or odd syntaxes that nobody else uses. It loves WordPress as it was and as it is.

gpt-engineer

GPT-Engineer is a tool that allows you to specify a software in natural language, sit back and watch as an AI writes and executes the code, and ask the AI to implement improvements.

fal-js

The fal.ai JS client is a robust and user-friendly library for seamless integration of fal serverless functions in Web, Node.js, and React Native applications. Developed in TypeScript, it provides developers with type safety right from the start. The client library is crafted as a lightweight layer atop platform standards like `fetch`, ensuring hassle-free integration into existing codebases and flawless operation across various JavaScript runtimes. The client proxy feature allows secure handling of credentials by using a server proxy for serverless APIs. The repository also includes example Next.js applications for demonstration and integration.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

opencode

Opencode is an AI coding agent designed for the terminal. It is a tool that allows users to interact with AI models for coding tasks in a terminal-based environment. Opencode is open source, provider-agnostic, and focuses on a terminal user interface (TUI) for coding. It offers features such as client/server architecture, support for various AI models, and a strong emphasis on community contributions and feedback.

stable-diffusion-discord-bot

A discord bot built to interface with the InvokeAI fork of stable-diffusion. It is a work in progress for a major rewrite of the arty project, compatible with `invokeai 5.1.1`. The bot supports various functionalities like building node graphs from job requests, refreshing renders using png metadata, removing backgrounds, job progress tracking, and LLM integration. Users can install custom invokeai nodes for advanced functionality and launch the bot natively or with docker. Patches and pull requests are welcomed.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

obs-cleanstream

CleanStream is an OBS plugin that utilizes real-time local AI to clean live audio streams by removing unwanted words and utterances, such as 'uh' and 'um', and configurable words like profanity. It employs a neural network (OpenAI Whisper) to predict speech in real-time and eliminate undesired words. The plugin runs efficiently using the Whisper.cpp project from ggerganov. CleanStream offers users the ability to adjust settings and add the plugin to any audio-generating source in OBS, providing a seamless experience for content creators looking to enhance the quality of their live audio streams.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT and can be contacted via email at [email protected].

For similar tasks

bit

Bit is a build system that organizes source code into composable components, enabling the creation of reliable, scalable, and consistent applications. It supports the creation of reusable UI components, standard building blocks, shell applications, and atomic deployments. Bit is compatible with various tools in the JavaScript ecosystem and offers official dev environments for popular frameworks. It can be used in different codebase structures like monorepos or polyrepos, and even without repositories. Users can install Bit, create shell applications, compose components, release and deploy components, and modernize existing projects using Bit Cloud or self-hosted scopes.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

cheat-sheet-pdf

The Cheat-Sheet Collection for DevOps, Engineers, IT professionals, and more is a curated list of cheat sheets for various tools and technologies commonly used in the software development and IT industry. It includes cheat sheets for Nginx, Docker, Ansible, Python, Go (Golang), Git, Regular Expressions (Regex), PowerShell, VIM, Jenkins, CI/CD, Kubernetes, Linux, Redis, Slack, Puppet, Google Cloud Developer, AI, Neural Networks, Machine Learning, Deep Learning & Data Science, PostgreSQL, Ajax, AWS, Infrastructure as Code (IaC), System Design, and Cyber Security.

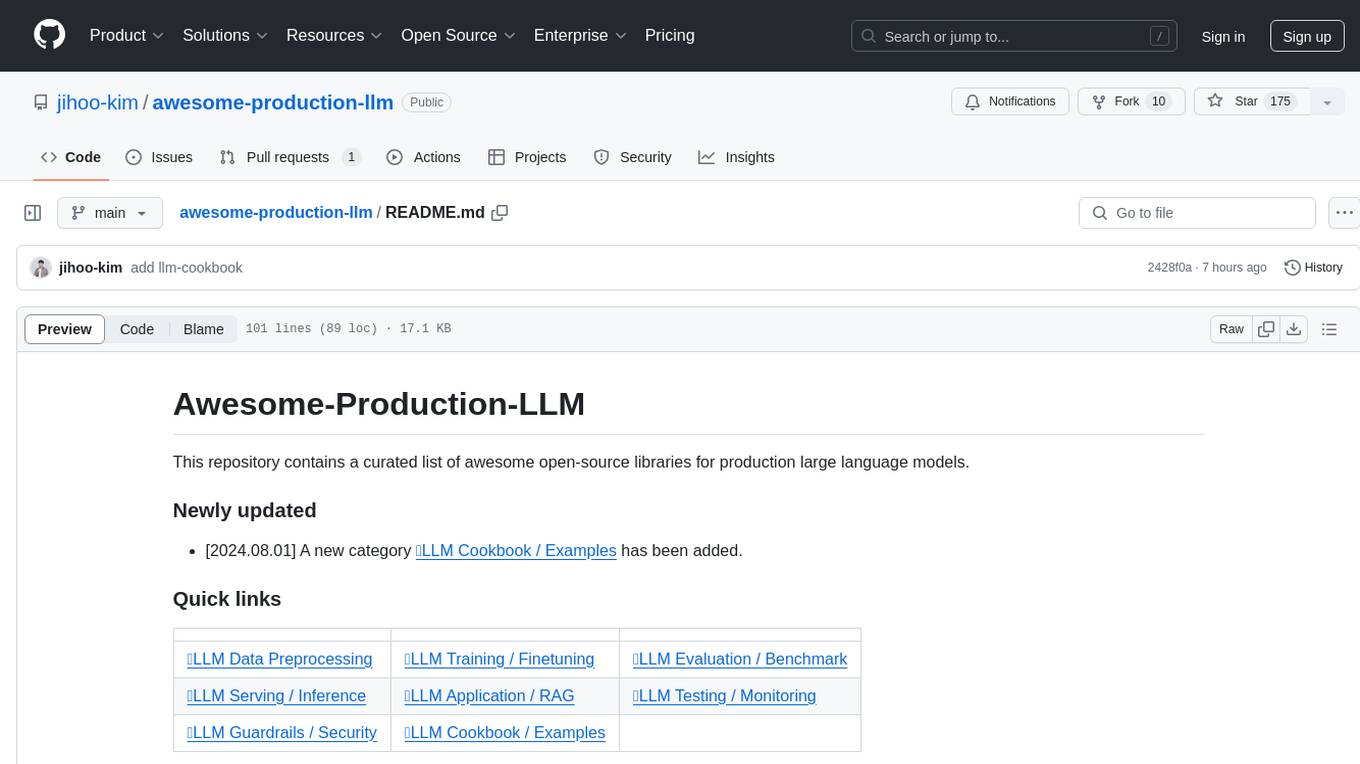

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

generative-ai-on-aws

Generative AI on AWS by O'Reilly Media provides a comprehensive guide on leveraging generative AI models on the AWS platform. The book covers various topics such as generative AI use cases, prompt engineering, large-language models, fine-tuning techniques, optimization, deployment, and more. Authors Chris Fregly, Antje Barth, and Shelbee Eigenbrode offer insights into cutting-edge AI technologies and practical applications in the field. The book is a valuable resource for data scientists, AI enthusiasts, and professionals looking to explore generative AI capabilities on AWS.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.