emacs-aio

async/await for Emacs Lisp

Stars: 242

The 'emacs-aio' repository provides an async/await library for Emacs Lisp, similar to 'asyncio' in Python. It lets functions pause while waiting on asynchronous events without blocking any thread. Users define async functions with 'aio-defun' or 'aio-lambda' and pause execution with 'aio-await'. The package offers utility macros and functions for synchronous waiting, cancellation, and async evaluation. It also includes awaitable functions such as 'aio-sleep' and 'aio-url-retrieve', a select()-like API for waiting on multiple promises, and a semaphore API for coordinating asynchronous functions. Together these let callback-based Emacs APIs be wrapped as awaitable, async-friendly functions.

README:

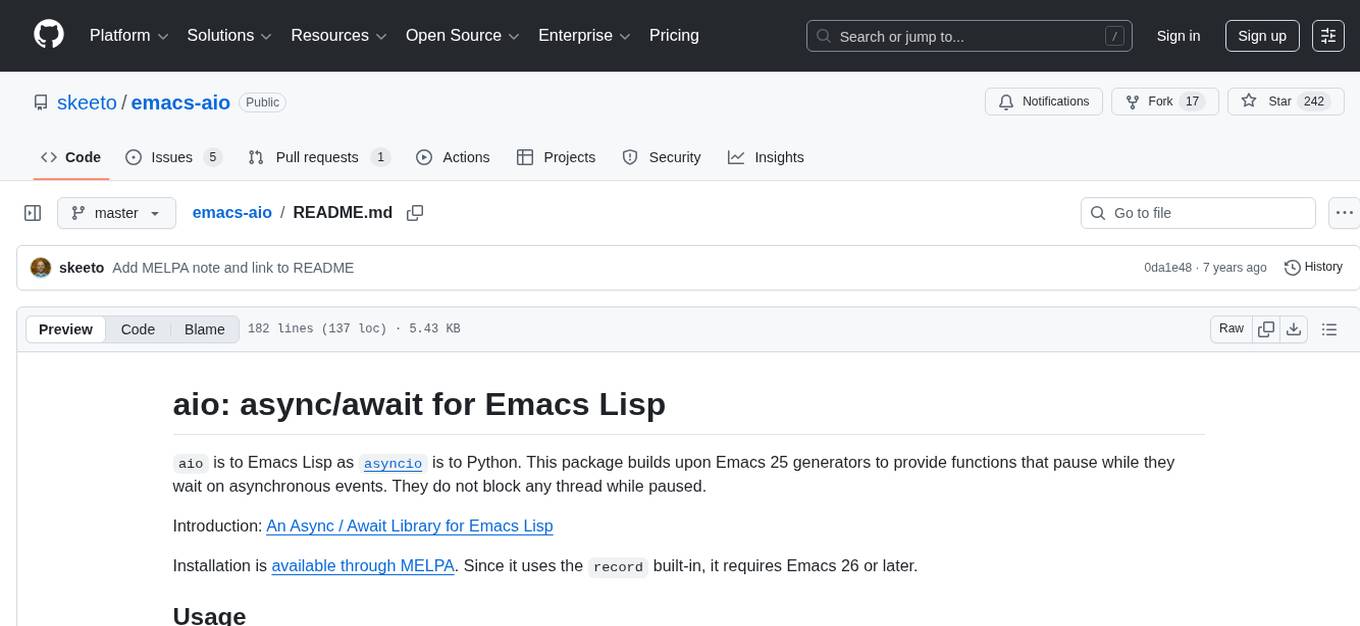

aio is to Emacs Lisp as asyncio is to Python. This package builds upon Emacs 25 generators to provide functions that pause while they wait on asynchronous events. They do not block any thread while paused.

Introduction: An Async / Await Library for Emacs Lisp

Installation is available through MELPA. Since it uses the record built-in, it requires Emacs 26 or later.
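For a conventional package.el setup, the following init-file snippet is one way to install the package from MELPA. It is a sketch that assumes the MELPA package is named `aio`, matching the `aio` feature loaded with `require`; adapt it if you use a different package manager.

```elisp
;; Make MELPA available to package.el.
(require 'package)
(add-to-list 'package-archives '("melpa" . "https://melpa.org/packages/") t)
(package-initialize)

;; Install aio once (assumed package name: `aio'), then load it.
(unless (package-installed-p 'aio)
  (package-refresh-contents)
  (package-install 'aio))
(require 'aio)
```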

An async function is defined using aio-defun or aio-lambda. The body of such functions can use aio-await to pause the function and wait on a given promise. The function continues with the promise's resolved value when it's ready. The package provides a number of functions that return promises, and every async function returns a promise representing its future return value.

For example:

```elisp
(aio-defun foo (url)
  (aio-await (aio-sleep 3))
  (message "Done sleeping. Now fetching %s" url)
  (let* ((result (aio-await (aio-url-retrieve url)))
         (contents (with-current-buffer (cdr result)
                     (prog1 (buffer-string)
                       (kill-buffer)))))
    (message "Result: %s" contents)))
```

If an uncaught signal terminates an asynchronous function, that signal is captured by its return value promise and propagated into any function that awaits on that function.

```elisp
(aio-defun divide (a b)
  (aio-await (aio-sleep 1))
  (/ a b))

(aio-defun divide-safe (a b)
  (condition-case error
      (aio-await (divide a b))
    (arith-error :arith-error)))

(aio-wait-for (divide-safe 1.0 2.0))
;; => 0.5

(aio-wait-for (divide-safe 0 0))
;; => :arith-error
```

To convert a callback-based function into an awaitable, async-friendly function, create a new promise object with aio-promise, then aio-resolve that promise in the callback. The helper function aio-make-callback makes this easy.
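As a minimal sketch of the promise-wrapping pattern just described, the hypothetical helper `my-timer-promise` below turns the callback-based `run-at-time` into an awaitable. It assumes, as in aio.el's own `aio-sleep`, that `aio-resolve` takes a zero-argument function returning the value rather than the value itself. The file needs `lexical-binding` so the timer callback can close over `promise`.

```elisp
;;; -*- lexical-binding: t; -*-
(require 'aio)

(defun my-timer-promise (seconds value)
  "Return a promise resolved with VALUE after SECONDS.
Hypothetical helper wrapping the callback-based `run-at-time'."
  (let ((promise (aio-promise)))
    (run-at-time seconds nil
                 (lambda ()
                   ;; Assumed API shape: `aio-resolve' takes a thunk that
                   ;; returns the result (or re-signals an error).
                   (aio-resolve promise (lambda () value))))
    promise))

;; Await it from an anonymous async function created with `aio-lambda'.
(aio-wait-for
 (funcall (aio-lambda ()
            (list 'got (aio-await (my-timer-promise 0.1 'done))))))
;; => (got done)
```

In practice `aio-sleep` already covers this case; the point is the shape of the wrapper, which carries over to any API that reports completion through a callback.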

```elisp
(aio-wait-for promise)
;; Synchronously wait for PROMISE, blocking the current thread.

(aio-cancel promise)
;; Attempt to cancel PROMISE, returning non-nil if successful.

(aio-with-promise promise &rest body) [macro]
;; Evaluate BODY and resolve PROMISE with the result.

(aio-with-async &rest body) [macro]
;; Evaluate BODY asynchronously as if it was inside `aio-lambda'.

(aio-make-callback &key tag once)
;; Return a new callback function and its first promise.

(aio-chain expr) [macro]
;; `aio-await' on EXPR and replace place EXPR with the next promise.
```

The aio-make-callback function is especially useful for callbacks that are invoked repeatedly, such as process filters and sentinels. The aio-chain macro works in conjunction with it.
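The following sketch shows aio-make-callback and aio-chain working together on a callback that fires repeatedly. A repeating `run-at-time` timer stands in for a process filter, and `my-count-ticks` is a hypothetical name. It assumes, from our reading of aio.el, that aio-make-callback returns a cons `(CALLBACK . PROMISE)` and that each resolved value carries the next promise in its car, which is what aio-chain relies on.

```elisp
;;; -*- lexical-binding: t; -*-
(require 'aio)
(require 'cl-lib)

(aio-defun my-count-ticks (n)
  "Wait for N firings of a repeating timer, then cancel it.
Hypothetical example; the timer stands in for a process filter."
  ;; Assumed return shape: (CALLBACK . FIRST-PROMISE).
  (cl-destructuring-bind (callback . promise) (aio-make-callback)
    (let ((timer (run-at-time 0.05 0.05 callback))
          (ticks 0))
      (unwind-protect
          (dotimes (_ n)
            ;; Await the current promise, then setf PROMISE to the
            ;; next one in the chain so the following tick is caught.
            (aio-chain promise)
            (cl-incf ticks))
        ;; Always stop the timer, even if the loop signals.
        (cancel-timer timer))
      ticks)))

(aio-wait-for (my-count-ticks 3))
;; => 3
```

With a real process filter, we would expect the list returned by each `aio-chain` call to hold the filter's arguments (the process and the output string), since the timer here calls the callback with no arguments and that list is simply empty.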

Here are some useful promise-returning (i.e. awaitable) functions defined by this package.

```elisp
(aio-sleep seconds &optional result)
;; Return a promise that is resolved after SECONDS with RESULT.

(aio-idle seconds &optional result)
;; Return a promise that is resolved after idle SECONDS with RESULT.

(aio-url-retrieve url &optional silent inhibit-cookies)
;; Wraps `url-retrieve' in a promise.

(aio-all promises)
;; Return a promise that resolves when all PROMISES are resolved.
```

This package includes a select()-like, level-triggered API for waiting on multiple promises at once. Create a "select" object, add promises to it, and await on it. Resolved and returned promises are automatically removed, and the "select" object can be reused.

```elisp
(aio-make-select &optional promises)
;; Create a new `aio-select' object for waiting on multiple promises.

(aio-select-add select promise)
;; Add PROMISE to the set of promises in SELECT.

(aio-select-remove select promise)
;; Remove PROMISE from the set of promises in SELECT.

(aio-select-promises select)
;; Return a list of promises in SELECT.

(aio-select select)
;; Return a promise that resolves when any promise in SELECT resolves.
```

For example, here's an implementation of sleep sort:

```elisp
(aio-defun sleep-sort (values)
  (let* ((promises (mapcar (lambda (v) (aio-sleep v v)) values))
         (select (aio-make-select promises)))
    (cl-loop repeat (length promises)
             for next = (aio-await (aio-select select))
             collect (aio-await next))))
```

Semaphores work just as they would as a thread synchronization primitive. There's an internal counter that cannot drop below zero, and aio-sem-wait is an awaitable function that may block the asynchronous function until another asynchronous function calls aio-sem-post. Blocked functions wait in a FIFO queue and are awoken in the same order that they awaited.

```elisp
(aio-sem init)
;; Create a new semaphore with initial value INIT.

(aio-sem-post sem)
;; Increment the value of SEM.

(aio-sem-wait sem)
;; Decrement the value of SEM.
```

This can be used to create a work queue. For example, here's a configurable download queue for url-retrieve:

```elisp
;; Requires lexical-binding so each async body captures SEM, URL and CALLBACK.
(defun fetch (url-list max-parallel callback)
  (let ((sem (aio-sem max-parallel)))
    (dolist (url url-list)
      (aio-with-async
        (aio-await (aio-sem-wait sem))
        ;; The retrieval status is unused, hence the underscore.
        (cl-destructuring-bind (_status . buffer)
            (aio-await (aio-url-retrieve url))
          (aio-sem-post sem)
          (funcall callback
                   (with-current-buffer buffer
                     (prog1 (buffer-string)
                       (kill-buffer)))))))))
```
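To check the throttling behaviour of such a queue without touching the network, here is a sketch that substitutes aio-sleep for aio-url-retrieve and uses aio-all to wait for every task. The names `my-throttled-sleep` and `my-run-throttled`, and the `[RUNNING PEAK]` bookkeeping vector, are hypothetical and exist only to make the concurrency limit observable.

```elisp
;;; -*- lexical-binding: t; -*-
(require 'aio)
(require 'cl-lib)

(aio-defun my-throttled-sleep (sem delay stats)
  "Sleep DELAY seconds while holding one unit of SEM.
STATS is a vector [RUNNING PEAK] shared by all tasks (hypothetical)."
  (aio-await (aio-sem-wait sem))        ; may block until a slot frees up
  (cl-incf (aref stats 0))
  (setf (aref stats 1) (max (aref stats 1) (aref stats 0)))
  (aio-await (aio-sleep delay))         ; stand-in for real async work
  (cl-decf (aref stats 0))
  (aio-sem-post sem)                    ; release the slot
  delay)

(defun my-run-throttled (delays max-parallel)
  "Run one task per element of DELAYS, at most MAX-PARALLEL at once.
Return a promise for the peak number of tasks seen running together."
  (let* ((sem (aio-sem max-parallel))
         (stats (vector 0 0))
         (tasks (mapcar (lambda (delay) (my-throttled-sleep sem delay stats))
                        delays)))
    (aio-with-async
      (aio-await (aio-all tasks))
      (aref stats 1))))

;; The reported peak should never exceed MAX-PARALLEL (here 2).
(aio-wait-for (my-run-throttled '(0.2 0.1 0.3 0.1 0.2) 2))
```

Because each task calls aio-sem-post as soon as its work is done, a blocked task can start the moment a slot frees, which is the same ordering the download queue relies on.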

Alternative AI tools for emacs-aio

Similar Open Source Tools

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly in the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its TypeScript support and pre-built npm package, Wllama is easy to integrate into React and TypeScript projects.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

volga

Volga is a general purpose real-time data processing engine in Python for modern AI/ML systems. It aims to be a Python-native alternative to Flink/Spark Streaming with extended functionality for real-time AI/ML workloads. It provides a hybrid push+pull architecture, Entity API for defining data entities and feature pipelines, DataStream API for general data processing, and customizable data connectors. Volga can run on a laptop or a distributed cluster, making it suitable for building custom real-time AI/ML feature platforms or general data pipelines without relying on third-party platforms.

LlmTornado

LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to easily switch between different LLM providers with just a change in argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

next-token-prediction

Next-Token Prediction is a language model tool that allows users to create high-quality predictions for the next word, phrase, or pixel based on a body of text. It can be used as an alternative to well-known decoder-only models like GPT and Mistral. The tool provides options for simple usage with built-in data bootstrap or advanced customization by providing training data or creating it from .txt files. It aims to simplify methodologies, provide autocomplete, autocorrect, spell checking, search/lookup functionalities, and create pixel and audio transformers for various prediction formats.

StepWise

StepWise is a code-first, event-driven workflow framework for .NET designed to help users build complex workflows in a simple and efficient way. It allows users to define workflows using C# code, visualize and execute workflows from a browser, execute steps in parallel, and resolve dependencies automatically. StepWise also features an AI assistant called `Geeno` in its WebUI to help users run and analyze workflows with ease.

java-genai

Java idiomatic SDK for the Gemini Developer APIs and Vertex AI APIs. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the 2 backends without code rewriting. It supports features like generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

litserve

LitServe is a high-throughput serving engine for deploying AI models at scale. It generates an API endpoint for a model, handles batching, streaming, autoscaling across CPU/GPUs, and more. Built for enterprise scale, it supports every framework like PyTorch, JAX, TensorFlow, and more. LitServe is designed to let users focus on model performance, not the serving boilerplate. It is like PyTorch Lightning for model serving but with broader framework support and scalability.

uzu-swift

Swift package for uzu, a high-performance inference engine for AI models on Apple Silicon. Deploy AI directly in your app with zero latency, full data privacy, and no inference costs. Key features include a simple, high-level API, specialized configurations for performance boosts, broad model support, and an observable model manager. Easily set up projects, obtain an API key, choose a model, and run it with corresponding identifiers. Examples include chat, speedup with speculative decoding, chat with dynamic context, chat with static context, summarization, classification, cloud, and structured output. Troubleshooting available via Discord or email. Licensed under MIT.

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.