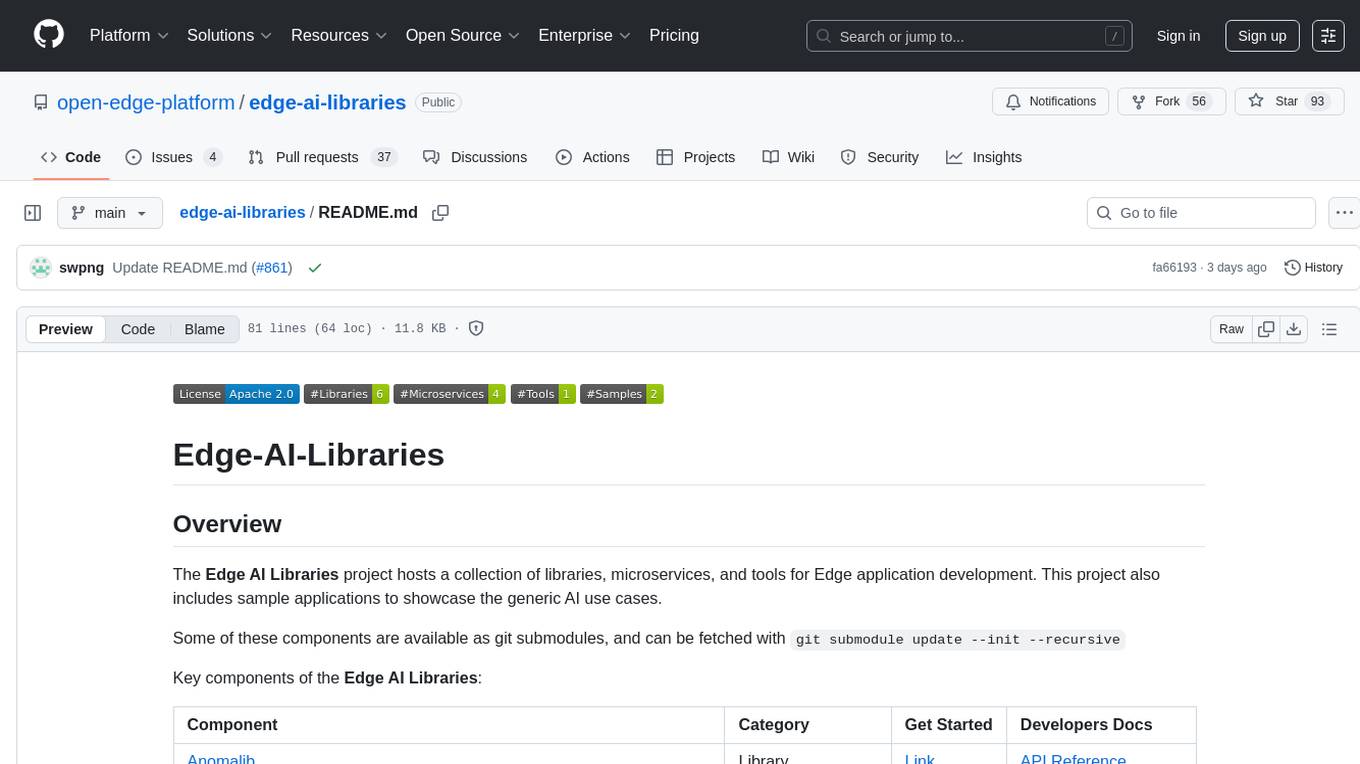

edge-ai-libraries

Performance optimized libraries, microservices, and tools to support the development of Edge AI applications.

Stars: 118

The Edge AI Libraries project is a collection of libraries, microservices, and tools for Edge application development. It includes sample applications showcasing generic AI use cases. Key components include Anomalib, Dataset Management Framework, Deep Learning Streamer, ECAT EnableKit, EtherCAT Masterstack, FLANN, OpenVINO toolkit, Audio Analyzer, ORB Extractor, PCL, PLCopen Servo, Real-time Data Agent, RTmotion, Audio Intelligence, Deep Learning Streamer Pipeline Server, Document Ingestion, Model Registry, Multimodal Embedding Serving, Time Series Analytics, Vector Retriever, Visual-Data Preparation, VLM Inference Serving, Intel Geti, Intel SceneScape, Visual Pipeline and Platform Evaluation Tool, Chat Question and Answer, Document Summarization, PLCopen Benchmark, PLCopen Databus, Video Search and Summarization, Isolation Forest Classifier, Random Forest Microservices. Visit sub-directories for instructions and guides.

README:

Welcome to Edge AI Libraries - highly optimized libraries, microservices, and tools designed for building and deploying real-time AI solutions on edge devices.

If you are an AI developer, data scientist, or system integrator, these assets help you organize data, train models, run efficient inference, and deliver robust, industry-grade automation systems for computer vision, multimedia, and industrial use cases.

These flagship components represent the most advanced, widely adopted, and impactful tools in the repository:

- Deep Learning Streamer: Build efficient streaming AI pipelines for audio/video media analytics, using GStreamer for optimized media operations and OpenVINO for optimized inference.

- Intel SceneScape: Create 3D/4D dynamic digital twins from multimodal sensor data for advanced spatial analytics.

- Intel Geti: Build computer vision AI models enabling rapid dataset management, model training, and deployment to the edge.

- Anomalib: Deploy visual anomaly detection with this state-of-the-art library, offering algorithms for segmentation, classification, and reconstruction, plus features like experiment management and hyperparameter optimization.

- OpenVINO toolkit: Optimize, run, and deploy AI models with this industry-standard toolkit, accelerating inference on Intel CPUs, GPUs, and NPUs. Supports a broad range of AI solutions including vision-based applications, generative AI, and vision-language models.

This group provides core AI libraries and tools focused on computer vision model building, training, optimization, and deployment for Intel hardware. They address challenges such as dataset curation, model lifecycle management, and high-performance inference on edge devices.

- Intel Geti: Build computer vision AI models enabling rapid dataset management, model training, and deployment to the edge.

- Anomalib: Deploy visual anomaly detection with this state-of-the-art library, offering algorithms for segmentation, classification, and reconstruction, plus features like experiment management and hyperparameter optimization.

- Software for efficient model training and deployment.

- OpenVINO toolkit: Toolkit for optimizing and deploying AI inference, offering a performance boost on Intel CPU, GPU, and NPU devices.

- OpenVINO Training Extensions & Model API: A set of advanced algorithms for model training and conversion.

- Dataset Management Framework (Datumaro): Dataset management framework to curate and convert vision datasets.

- Create, tailor, and implement custom AI models directly on edge platforms.

Handling large-scale media analytics workloads, these components support real-time audio and video AI pipeline processing, transcription, and multimodal embedding generation, addressing common use cases like surveillance, content indexing, and audio analysis.

- Deep Learning Streamer & Deep Learning Streamer Pipeline Server: Streaming AI pipeline builder with a scalable server for media inferencing.

- Microservices providing real-time audio transcription and intelligence extraction.

- VLM Inference Serving & Multimodal Embedding: Services handling vision-language models and embedding generation for multimodal search.

- Intel SceneScape: Software for creating dynamic 3D/4D digital twins for spatial analytics.

- Time Series Analytics Microservice: Real-time analytics microservice designed for anomaly detection and forecasting on sensor time-series data.

- Multi-level Video Understanding Microservice: Microservices providing a multi-level, temporal-enhanced approach to generating high-quality summaries for video files, especially long videos.
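The Time Series Analytics Microservice entry above mentions anomaly detection on sensor time-series data. The source does not say which algorithms the microservice uses, so the following is only a minimal sketch of one common technique, a rolling z-score, in plain Python. The function name `rolling_zscore_anomalies` and the `window` and `threshold` parameters are hypothetical and chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window.

    Hypothetical helper for illustration; not part of any Edge AI Libraries API.
    """
    history = deque(maxlen=window)  # holds the most recent `window` readings
    anomalies = []
    for index, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip the z-score when the window is flat to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(index)
        history.append(value)
    return anomalies


# Made-up temperature readings with one obvious spike.
readings = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0, 20.2]
print(rolling_zscore_anomalies(readings))
```

Note that in this sketch a detected spike still enters the window and inflates the spread for the next few readings; a more careful version might exclude flagged values from the history.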

Efficient data management and retrieval are crucial for AI performance and scalability. This group offers components for dataset curation, vector search, and document ingestion across multimodal data.

- Vector Retriever (Milvus) & Visual Data Preparation (Milvus and VDMS): High-performance vector similarity search and visual data indexing.

- Model Registry & Document Ingestion: Tools for managing AI model versions and preparing documents for AI workflows.

- Splits video streams into chunks, supporting batch and pipeline-based analytics.
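To make the idea of vector similarity search from the list above concrete, here is a small brute-force sketch in plain Python that ranks stored embeddings by cosine similarity to a query. It does not use the Vector Retriever or Milvus APIs; the names `cosine_similarity`, `top_k`, and the toy `index` dictionary are assumptions made for illustration. A production vector database uses index structures to avoid scanning every vector, so this version only shows the ranking idea.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (item_id, score) pairs most similar to the query."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real embeddings have far more dimensions.
index = {
    "frame_001": [1.0, 0.0, 0.0],
    "frame_002": [0.9, 0.1, 0.0],
    "frame_003": [0.0, 1.0, 0.0],
}
results = top_k([1.0, 0.0, 0.0], index, k=2)
print([item_id for item_id, _ in results])
```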

These support tools provide visual pipeline evaluation and performance benchmarking to help analyze AI workloads and industrial environments effectively.

- Visual Pipeline and Platform Evaluation Tool: Benchmark and analyze AI pipeline performance on various edge platforms.

- PLCopen Benchmark: Compliance and performance testing toolkit for motion control.

- Showcase, monitor, and optimize the scalability and performance of AI workloads on Intel edge hardware. Configure models, choose performance modes, and visualize resource metrics in real time.

- Command-line interface (CLI) tool that provides a collection of test suites to qualify an Edge AI system. It enables users to perform targeted tests, supporting a wide range of use cases from system evaluation to data extraction and reporting.

Focused on real-time industrial automation, motion control, and fieldbus communication, these components provide reliable, standards-compliant building blocks for manufacturing and factory automation applications.

- ECAT EnableKit & EtherCAT Masterstack: EtherCAT communication protocol stack and development tools.

- PLCopen Servo & RTmotion: Libraries implementing motion control standards for servo drives and real-time trajectory management.

- PLCopen Databus: Tools for data communication in automation networks.

- Real-time Data Agent: Microservice for collecting, processing, and distributing real-time sensor and industrial device data; supports both edge analytics and integration with operational systems.

Optimized libraries for robotic perception, localization, mapping, and 3D point cloud analytics. These tools are designed to maximize performance on heterogeneous Intel hardware using oneAPI DPC++, enabling advanced robotic workloads at the edge.

- FLANN optimized with oneAPI DPC++: High-speed nearest neighbor library, optimized for Intel architectures; supports scalable feature matching, search, and clustering in robotic vision and SLAM.

- ORB Extractor: Efficient ORB feature and descriptor extraction for visual SLAM, mapping, and tracking; designed for multicamera and GPU acceleration scenarios.

- Point Cloud Library Optimized with Intel oneAPI DPC++: Accelerated modules from PCL for real-time 2D/3D point cloud processing; supports object detection, mapping, segmentation, and scene understanding in automation and robotics.

- Unified interface library bridging motion control commands between AI modules and industrial/robotic devices; simplifies real-time control integration and system interoperability in mixed hardware environments.
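The feature matching mentioned in the list above can be illustrated with a short sketch. ORB descriptors are binary strings, so they are usually compared by Hamming distance (the number of differing bits). The code below is an exhaustive matcher in plain Python, not the FLANN or ORB Extractor API; the function names, the `max_distance` parameter, and the 8-bit toy descriptors are assumptions for illustration. An optimized library would replace the inner loop with an accelerated or approximate search.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two descriptors stored as integers."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, max_distance=2):
    """For each query descriptor, find the closest train descriptor.

    Returns (query_index, train_index, distance) tuples, keeping only matches
    whose distance is at most `max_distance`. Assumes `train` is non-empty.
    """
    matches = []
    for qi, q in enumerate(query):
        best_ti, best_dist = min(
            ((ti, hamming_distance(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        if best_dist <= max_distance:
            matches.append((qi, best_ti, best_dist))
    return matches


# Toy 8-bit descriptors; real ORB descriptors are typically 256 bits long.
query = [0b10110010, 0b00001111]
train = [0b00001110, 0b10110011, 0b11110000]
print(match_descriptors(query, train))
```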

Ready-to-use example applications demonstrating real-world AI use cases to help users get started quickly and understand integration patterns:

- Chat Question and Answer: Conversational AI application integrating retrieval-augmented generation for question answering.

- Conversational AI application integrating retrieval-augmented generation for question answering. Optimized for Intel® Core.

- Document Summarization: AI pipeline for automated summarization of textual documents.

- Video Search and Summarization: Application combining video content analysis with search and summarization capabilities.

- Edge Developer Kit Reference Scripts: Automate the setup of edge AI development environments using these proven reference scripts. Quickly install required drivers, configure hardware, and validate platform readiness for Intel-based edge devices.

Visit the Edge AI Suites repository for a broader set of sample applications targeted at specific industry segments.

Specialized microservices delivering machine learning-powered analytics optimized for edge deployment. These microservices support scalable anomaly detection, classification, and predictive analytics on structured and time-series data.

- An efficient Isolation Forest microservice for unsupervised anomaly detection supporting high-performance training and inference on tabular and streaming data.

- High-speed Random Forest microservice for supervised classification tasks, optimized for edge and industrial use cases with rapid training and low-latency inference.
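For readers unfamiliar with Isolation Forest, the core idea is that anomalies are separated from the rest of the data by fewer random splits than normal points. The sketch below illustrates that idea on one-dimensional data in plain Python. It is a simplification written for this explanation, not the microservice's implementation: a full Isolation Forest also picks random features and trains each tree on a subsample, and the names `path_length` and `average_path_length` are hypothetical.

```python
import random


def path_length(point, data, rng, depth=0, max_depth=10):
    """Depth at which `point` is isolated by random splits of 1-D `data`."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    lo, hi = min(data), max(data)
    if lo == hi:
        return depth  # all remaining values are identical; cannot split further
    split = rng.uniform(lo, hi)
    # Keep only the side of the split that contains the point being scored.
    if point < split:
        side = [v for v in data if v < split]
    else:
        side = [v for v in data if v >= split]
    return path_length(point, side, rng, depth + 1, max_depth)


def average_path_length(point, data, trees=200, seed=0):
    """Average isolation depth over many random trees; lower means more anomalous."""
    rng = random.Random(seed)
    return sum(path_length(point, data, rng) for _ in range(trees)) / trees


# Twenty clustered values (0.0 to 1.9) plus one obvious outlier.
data = [i / 10 for i in range(20)] + [100.0]
outlier_depth = average_path_length(100.0, data)
inlier_depth = average_path_length(1.0, data)
print(outlier_depth < inlier_depth)
```

The outlier is usually cut off by the very first split, while a point in the middle of the cluster needs several splits before it stands alone, which is why the averaged depth works as an anomaly score.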

A comprehensive framework providing hardware abstraction, device management, and deployment tools for edge AI applications. Simplifies cross-platform development and enables consistent deployment across diverse edge hardware architectures.

- Edge-device Enablement Framework: Framework for Intel® platform enablement and streamlining edge AI application deployment across heterogeneous device platforms.

To learn how to contribute to the project, see CONTRIBUTING.md.

If you need help, want to suggest a new feature, or report a bug, please use the following channels:

- Questions & Discussions: Join the conversation in GitHub Discussions to ask questions, share ideas, or get help from the community.

- Bug Reports & Feature Requests: Submit issues via GitHub Issues for bugs or feature requests.

The Edge AI Libraries project is licensed under the Apache 2.0 license, except for the following components:

| Component | License |
|---|---|
| Dataset Management Framework (Datumaro) | MIT License |
| Intel® Geti™ | Limited Edge Software Distribution License |
| Deep Learning Streamer | MIT License |


Alternative AI tools for edge-ai-libraries

Similar Open Source Tools


awesome-mlops

Awesome MLOps is a curated list of tools related to Machine Learning Operations, covering areas such as AutoML, CI/CD for Machine Learning, Data Cataloging, Data Enrichment, Data Exploration, Data Management, Data Processing, Data Validation, Data Visualization, Drift Detection, Feature Engineering, Feature Store, Hyperparameter Tuning, Knowledge Sharing, Machine Learning Platforms, Model Fairness and Privacy, Model Interpretability, Model Lifecycle, Model Serving, Model Testing & Validation, Optimization Tools, Simplification Tools, Visual Analysis and Debugging, and Workflow Tools. The repository provides a comprehensive collection of tools and resources for individuals and teams working in the field of MLOps.

awesome-alt-clouds

Awesome Alt Clouds is a curated list of non-hyperscale cloud providers offering specialized infrastructure and services catering to specific workloads, compliance requirements, and developer needs. The list includes various categories such as Infrastructure Clouds, Sovereign Clouds, Unikernels & WebAssembly, Data Clouds, Workflow and Operations Clouds, Network, Connectivity and Security Clouds, Vibe Clouds, Developer Happiness Clouds, Authorization, Identity, Fraud and Abuse Clouds, Monetization, Finance and Legal Clouds, Customer, Marketing and eCommerce Clouds, IoT, Communications, and Media Clouds, Blockchain Clouds, Source Code Control, Cloud Adjacent, and Future Clouds.

awesome-openvino

Awesome OpenVINO is a curated list of AI projects based on the OpenVINO toolkit, offering a rich assortment of projects, libraries, and tutorials covering various topics like model optimization, deployment, and real-world applications across industries. It serves as a valuable resource continuously updated to maximize the potential of OpenVINO in projects, featuring projects like Stable Diffusion web UI, Visioncom, FastSD CPU, OpenVINO AI Plugins for GIMP, and more.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

knavigator

Knavigator is a project designed to analyze, optimize, and compare scheduling systems, with a focus on AI/ML workloads. It addresses various needs, including testing, troubleshooting, benchmarking, chaos engineering, performance analysis, and optimization. Knavigator interfaces with Kubernetes clusters to manage tasks such as manipulating Kubernetes objects, evaluating PromQL queries, and executing specific operations. It can operate both outside and inside a Kubernetes cluster, leveraging the Kubernetes API for task management. To facilitate large-scale experiments without the overhead of running actual user workloads, Knavigator utilizes KWOK for creating virtual nodes in extensive clusters.

edgeai

Embedded inference of deep learning models is challenging due to high compute requirements. TI’s Edge AI software product helps optimize and accelerate inference on TI’s embedded devices. It supports heterogeneous execution of DNNs across Cortex-A based MPUs, TI’s latest generation C7x DSP, and DNN accelerator (MMA). The solution simplifies the product life cycle of DNN development and deployment by providing a rich set of tools and optimized libraries.

AimRT

AimRT is a basic runtime framework for modern robotics, developed in modern C++, lightweight and easy to deploy. It integrates research and development for robot applications in various deployment scenarios, providing debugging tools and observability support. AimRT offers a plug-in development interface compatible with ROS2, HTTP, gRPC, and other ecosystems for progressive system upgrades.

public

This public repository contains API, tools, and packages for Datagrok, a web-based data analytics platform. It offers support for scientific domains, applications, connectors to web services, visualizations, file importing, scientific methods in R, Python, or Julia, file metadata extractors, custom predictive models, platform enhancements, and more. The open-source packages are free to use, with restrictions on server computational capacities for the public environment. Academic institutions can use Datagrok for research and education, benefiting from reproducible and scalable computations and data augmentation capabilities. Developers can contribute by creating visualizations, scientific methods, file editors, connectors to web services, and more.

apo

AutoPilot Observability (APO) is an out-of-the-box observability platform that provides one-click installation and ready-to-use capabilities. APO's OneAgent supports one-click configuration-free installation of Tracing probes, collects application fault scene logs, infrastructure metrics, network metrics of applications and downstream dependencies, and Kubernetes events. It supports collecting causality metrics based on eBPF implementation. APO integrates OpenTelemetry probes, otel-collector, Jaeger, ClickHouse, and VictoriaMetrics, reducing user configuration work. APO innovatively integrates eBPF technology with the OpenTelemetry ecosystem, significantly reducing data storage volume. It offers guided troubleshooting using eBPF technology to assist users in pinpointing fault causes on a single page.

akeru

Akeru.ai is an open-source AI platform leveraging the power of decentralization. It offers transparent, safe, and highly available AI capabilities. The platform aims to give developers access to open-source and transparent AI resources through its decentralized nature hosted on an edge network. Akeru API introduces features like retrieval, function calling, conversation management, custom instructions, data input optimization, user privacy, testing and iteration, and comprehensive documentation. It is ideal for creating AI agents and enhancing web and mobile applications with advanced AI capabilities. The platform runs on a Bittensor Subnet design that aims to democratize AI technology and promote an equitable AI future. Akeru.ai embraces decentralization challenges to ensure a decentralized and equitable AI ecosystem with security features like watermarking and network pings. The API architecture integrates with technologies like Bun, Redis, and Elysia for a robust, scalable solution.

psychic

Finic is an open source python-based integration platform designed to simplify integration workflows for both business users and developers. It offers a drag-and-drop UI, a dedicated Python environment for each workflow, and generative AI features to streamline transformation tasks. With a focus on decoupling integration from product code, Finic aims to provide faster and more flexible integrations by supporting custom connectors. The tool is open source and allows deployment to users' own cloud environments with minimal legal friction.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

Fast-dLLM

Fast-dLLM is a diffusion-based Large Language Model (LLM) inference acceleration framework that supports efficient inference for models like Dream and LLaDA. It offers fast inference support, multiple optimization strategies, code generation, evaluation capabilities, and an interactive chat interface. Key features include Key-Value Cache for Block-Wise Decoding, Confidence-Aware Parallel Decoding, and overall performance improvements. The project structure includes directories for Dream and LLaDA model-related code, with installation and usage instructions provided for using the LLaDA and Dream models.

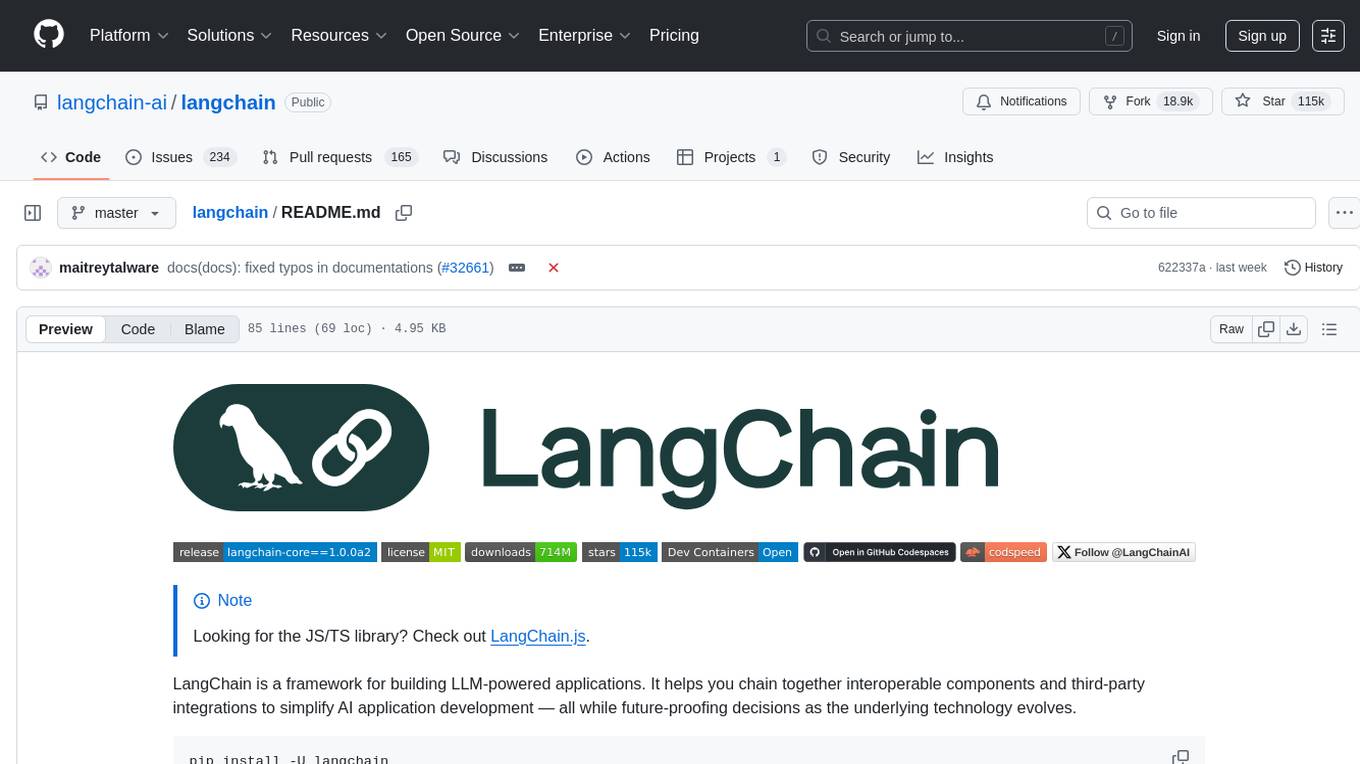

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions.
- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl.
- The Data SRE team maintains a WTMO Developer Guide (behind SSO).

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

openvino

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference. It provides a common API to deliver inference solutions on various platforms, including CPU, GPU, NPU, and heterogeneous devices. OpenVINO™ supports pre-trained models from Open Model Zoo and popular frameworks like TensorFlow, PyTorch, and ONNX. Key components of OpenVINO™ include the OpenVINO™ Runtime, plugins for different hardware devices, frontends for reading models from native framework formats, and the OpenVINO Model Converter (OVC) for adjusting models for optimal execution on target devices.

peft

PEFT (Parameter-Efficient Fine-Tuning) is a collection of state-of-the-art methods that enable efficient adaptation of large pretrained models to various downstream applications. By only fine-tuning a small number of extra model parameters instead of all the model's parameters, PEFT significantly decreases the computational and storage costs while achieving performance comparable to fully fine-tuned models.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

emgucv

Emgu CV is a cross-platform .NET wrapper for the OpenCV image-processing library. It allows OpenCV functions to be called from .NET compatible languages. The wrapper can be compiled by Visual Studio, Unity, and the "dotnet" command, and it can run on Windows, Mac OS, Linux, iOS, and Android.

MMStar

MMStar is an elite vision-indispensable multi-modal benchmark comprising 1,500 challenge samples meticulously selected by humans. It addresses two key issues in current LLM evaluation: the unnecessary use of visual content in many samples and the existence of unintentional data leakage in LLM and LVLM training. MMStar evaluates 6 core capabilities across 18 detailed axes, ensuring a balanced distribution of samples across all dimensions.

VLMEvalKit

VLMEvalKit is an open-source evaluation toolkit of large vision-language models (LVLMs). It enables one-command evaluation of LVLMs on various benchmarks, without the heavy workload of data preparation under multiple repositories. In VLMEvalKit, we adopt generation-based evaluation for all LVLMs, and provide the evaluation results obtained with both exact matching and LLM-based answer extraction.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.