marimo

A reactive notebook for Python — run reproducible experiments, query with SQL, execute as a script, deploy as an app, and version with git. Stored as pure Python. All in a modern, AI-native editor.

Stars: 19186

Marimo is a reactive Python notebook that ensures code and outputs consistency by automatically running dependent cells or marking them as stale. It replaces various tools like Jupyter, streamlit, and more, offering an interactive environment with features like binding UI elements to Python, reproducibility, executability as scripts or apps, shareability, and designed for data tasks. It is git-friendly, offers a modern editor with AI assistants, and comes with built-in package management. Marimo provides deterministic execution order, dynamic markdown and SQL capabilities, and a performant runtime. It is easy to get started with and suitable for both beginners and power users.

README:

A reactive Python notebook that's reproducible, git-friendly, and deployable as scripts or apps.

Docs · Discord · Examples · Gallery · YouTube

English | 繁體中文 | 简体中文 | 日本語 | Español

marimo is a reactive Python notebook: run a cell or interact with a UI element, and marimo automatically runs dependent cells (or marks them as stale), keeping code and outputs consistent. marimo notebooks are stored as pure Python (with first-class SQL support), executable as scripts, and deployable as apps.

Highlights.

- 🚀 batteries-included: replaces

jupyter,streamlit,jupytext,ipywidgets,papermill, and more - ⚡️ reactive: run a cell, and marimo reactively runs all dependent cells or marks them as stale

- 🖐️ interactive: bind sliders, tables, plots, and more to Python — no callbacks required

- 🐍 git-friendly: stored as

.pyfiles - 🛢️ designed for data: query dataframes, databases, warehouses, or lakehouses with SQL, filter and search dataframes

- 🤖 AI-native: generate cells with AI tailored for data work

- 🔬 reproducible: no hidden state, deterministic execution, built-in package management

- 🏃 executable: execute as a Python script, parameterized by CLI args

- 🛜 shareable: deploy as an interactive web app or slides, run in the browser via WASM

- 🧩 reusable: import functions and classes from one notebook to another

- 🧪 testable: run pytest on notebooks

- ⌨️ a modern editor: GitHub Copilot, AI assistants, vim keybindings, variable explorer, and more

- 🧑💻 use your favorite editor: run in VS Code or Cursor, or edit in neovim, Zed, or any other text editor

pip install marimo && marimo tutorial introGet started instantly with molab, our free online notebook. Or jump to the quickstart for a primer on our CLI.

marimo guarantees your notebook code, outputs, and program state are consistent. This solves many problems associated with traditional notebooks like Jupyter.

A reactive programming environment. Run a cell and marimo reacts by automatically running the cells that reference its variables, eliminating the error-prone task of manually re-running cells. Delete a cell and marimo scrubs its variables from program memory, eliminating hidden state.

Compatible with expensive notebooks. marimo lets you configure the runtime to be lazy, marking affected cells as stale instead of automatically running them. This gives you guarantees on program state while preventing accidental execution of expensive cells.

Synchronized UI elements. Interact with UI elements like sliders, dropdowns, dataframe transformers, and chat interfaces, and the cells that use them are automatically re-run with their latest values.

Interactive dataframes. Page through, search, filter, and sort millions of rows blazingly fast, no code required.

Generate cells with data-aware AI. Generate code with an AI assistant that is highly specialized for working with data, with context about your variables in memory; zero-shot entire notebooks. Customize the system prompt, bring your own API keys, or use local models.

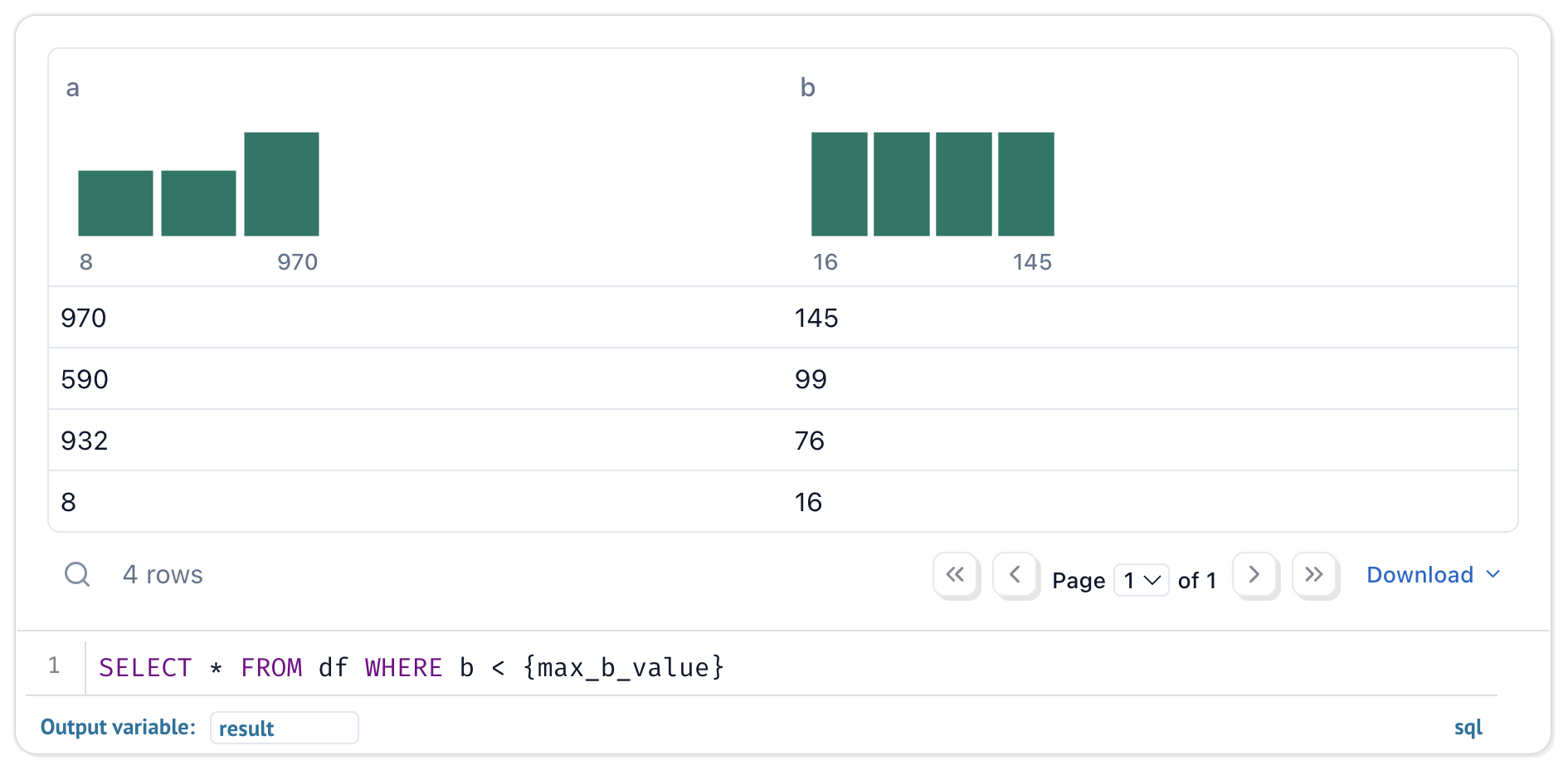

Query data with SQL. Build SQL queries that depend on Python values and execute them against dataframes, databases, lakehouses, CSVs, Google Sheets, or anything else using our built-in SQL engine, which returns the result as a Python dataframe.

Your notebooks are still pure Python, even if they use SQL.

Dynamic markdown. Use markdown parametrized by Python variables to tell dynamic stories that depend on Python data.

Built-in package management. marimo has built-in support for all major package managers, letting you install packages on import. marimo can even serialize package requirements in notebook files, and auto install them in isolated venv sandboxes.

Deterministic execution order. Notebooks are executed in a deterministic order, based on variable references instead of cells' positions on the page. Organize your notebooks to best fit the stories you'd like to tell.

Performant runtime. marimo runs only those cells that need to be run by statically analyzing your code.

Batteries-included. marimo comes with GitHub Copilot, AI assistants, Ruff code formatting, HTML export, fast code completion, a VS Code extension, an interactive dataframe viewer, and many more quality-of-life features.

The marimo concepts playlist on our YouTube channel gives an overview of many features.

Installation. In a terminal, run

pip install marimo # or conda install -c conda-forge marimo

marimo tutorial introTo install with additional dependencies that unlock SQL cells, AI completion, and more, run

pip install "marimo[recommended]"Create notebooks.

Create or edit notebooks with

marimo editRun apps. Run your notebook as a web app, with Python code hidden and uneditable:

marimo run your_notebook.pyExecute as scripts. Execute a notebook as a script at the command line:

python your_notebook.pyAutomatically convert Jupyter notebooks. Automatically convert Jupyter notebooks to marimo notebooks with the CLI

marimo convert your_notebook.ipynb > your_notebook.pyor use our web interface.

Tutorials. List all tutorials:

marimo tutorial --helpShare cloud-based notebooks. Use molab, a cloud-based marimo notebook service similar to Google Colab, to create and share notebook links.

See the FAQ at our docs.

marimo is easy to get started with, with lots of room for power users. For example, here's an embedding visualizer made in marimo (try the notebook live on molab!):

Check out our docs, usage examples, and our gallery to learn more.

|

|

|

|

| Tutorial | Inputs | Plots | Layout |

|

|

|

|

|

We appreciate all contributions! You don't need to be an expert to help out. Please see CONTRIBUTING.md for more details on how to get started.

Questions? Reach out to us on Discord.

We're building a community. Come hang out with us!

- 🌟 Star us on GitHub

- 💬 Chat with us on Discord

- 📧 Subscribe to our Newsletter

- ☁️ Join our Cloud Waitlist

- ✏️ Start a GitHub Discussion

- 🦋 Follow us on Bluesky

- 🐦 Follow us on Twitter

- 🎥 Subscribe on YouTube

- 🤖 Follow us on Reddit

- 🕴️ Follow us on LinkedIn

A NumFOCUS affiliated project. marimo is a core part of the broader Python ecosystem and is a member of the NumFOCUS community, which includes projects such as NumPy, SciPy, and Matplotlib.

marimo is a reinvention of the Python notebook as a reproducible, interactive, and shareable Python program, instead of an error-prone JSON scratchpad.

We believe that the tools we use shape the way we think — better tools, for better minds. With marimo, we hope to provide the Python community with a better programming environment to do research and communicate it; to experiment with code and share it; to learn computational science and teach it.

Our inspiration comes from many places and projects, especially Pluto.jl, ObservableHQ, and Bret Victor's essays. marimo is part of a greater movement toward reactive dataflow programming. From IPyflow, streamlit, TensorFlow, PyTorch, JAX, and React, the ideas of functional, declarative, and reactive programming are transforming a broad range of tools for the better.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for marimo

Similar Open Source Tools

marimo

Marimo is a reactive Python notebook that ensures code and outputs consistency by automatically running dependent cells or marking them as stale. It replaces various tools like Jupyter, streamlit, and more, offering an interactive environment with features like binding UI elements to Python, reproducibility, executability as scripts or apps, shareability, and designed for data tasks. It is git-friendly, offers a modern editor with AI assistants, and comes with built-in package management. Marimo provides deterministic execution order, dynamic markdown and SQL capabilities, and a performant runtime. It is easy to get started with and suitable for both beginners and power users.

deepnote

Deepnote is a data notebook tool designed for the AI era, used by over 500,000 data professionals at companies like Estée Lauder, SoundCloud, Statsig, and Gusto. It offers a human-readable format, block-based architecture, reactive notebook execution, and effortless conversion between .ipynb and .deepnote formats. Deepnote extends Jupyter with features like native AI agent, Git integration, cloud compute, and native database & API connections. The repository contains reusable packages and libraries for Deepnote's notebook, runtime, and collaboration features.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

kestra

Kestra is an open-source event-driven orchestration platform that simplifies building scheduled and event-driven workflows. It offers Infrastructure as Code best practices for data, process, and microservice orchestration, allowing users to create reliable workflows using YAML configuration. Key features include everything as code with Git integration, event-driven and scheduled workflows, rich plugin ecosystem for data extraction and script running, intuitive UI with syntax highlighting, scalability for millions of workflows, version control friendly, and various features for structure and resilience. Kestra ensures declarative orchestration logic management even when workflows are modified via UI, API calls, or other methods.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

neptune-client

Neptune is a scalable experiment tracker for teams training foundation models. Log millions of runs, effortlessly monitor and visualize model training, and deploy on your infrastructure. Track 100% of metadata to accelerate AI breakthroughs. Log and display any framework and metadata type from any ML pipeline. Organize experiments with nested structures and custom dashboards. Compare results, visualize training, and optimize models quicker. Version models, review stages, and access production-ready models. Share results, manage users, and projects. Integrate with 25+ frameworks. Trusted by great companies to improve workflow.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload Lloras, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT-models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

trigger.dev

Trigger.dev is an open source platform and SDK for creating long-running background jobs. It provides features like JavaScript and TypeScript SDK, no timeouts, retries, queues, schedules, observability, React hooks, Realtime API, custom alerts, elastic scaling, and works with existing tech stack. Users can create tasks in their codebase, deploy tasks using the SDK, manage tasks in different environments, and have full visibility of job runs. The platform offers a trace view of every task run for detailed monitoring. Getting started is easy with account creation, project setup, and onboarding instructions. Self-hosting and development guides are available for users interested in contributing or hosting Trigger.dev.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

RWKV_APP

RWKV App is an experimental application that enables users to run Large Language Models (LLMs) offline on their edge devices. It offers a privacy-first, on-device LLM experience for everyday devices. Users can engage in multi-turn conversations, text-to-speech, visual understanding, and more, all without requiring an internet connection. The app supports switching between different models, running locally without internet, and exploring various AI tasks such as chat, speech generation, and visual understanding. It is built using Flutter and Dart FFI for cross-platform compatibility and efficient communication with the C++ inference engine. The roadmap includes integrating features into the RWKV Chat app, supporting more model weights, hardware, operating systems, and devices.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.