livekit

End-to-end realtime stack for connecting humans and AI

Stars: 14509

LiveKit is an open source project providing scalable, multi-user conferencing based on WebRTC. It offers a server written in Go, client SDKs, and advanced features such as speaker detection, end-to-end encryption, and SVC codecs. It is easy to deploy, supports JWT authentication, and provides robust networking. The LiveKit ecosystem includes agents for AI applications, tools such as a CLI and a Docker image, and SDKs for both client-side and server-side development.

README:

LiveKit is an open source project that provides scalable, multi-user conferencing based on WebRTC. It's designed to provide everything you need to build real-time video, audio, and data capabilities in your applications.

LiveKit's server is written in Go, using the awesome Pion WebRTC implementation.

- Scalable, distributed WebRTC SFU (Selective Forwarding Unit)

- Modern, full-featured client SDKs

- Built for production, supports JWT authentication

- Robust networking and connectivity, UDP/TCP/TURN

- Easy to deploy: single binary, Docker or Kubernetes

- Advanced features including:

  - speaker detection

  - simulcast

  - end-to-end optimizations

  - selective subscription

  - moderation APIs

  - end-to-end encryption

  - SVC codecs (VP9, AV1)

  - webhooks

  - distributed and multi-region

- LiveKit Meet (source)

- Spatial Audio (source)

- Livestreaming from OBS Studio (source)

- AI voice assistant using ChatGPT (source)

- Agents: build real-time multimodal AI applications with programmable backend participants

- Egress: record or multi-stream rooms and export individual tracks

- Ingress: ingest streams from external sources like RTMP, WHIP, HLS, or OBS Studio

Client SDKs enable your frontend to include interactive, multi-user experiences.

| Language | Repo | Declarative UI | Links |
|---|---|---|---|
| JavaScript (TypeScript) | client-sdk-js | React | docs · JS example · React example |
| Swift (iOS / macOS) | client-sdk-swift | SwiftUI | docs · example |
| Kotlin (Android) | client-sdk-android | Compose | docs · example · Compose example |
| Flutter (all platforms) | client-sdk-flutter | native | docs · example |
| Unity WebGL | client-sdk-unity-web | | docs |
| React Native (beta) | client-sdk-react-native | native | |
| Rust | client-sdk-rust | | |

Server SDKs enable your backend to generate access tokens, call server APIs, and receive webhooks. In addition, the Go SDK includes client capabilities, enabling you to build automations that behave like end-users.

| Language | Repo | Docs |
|---|---|---|
| Go | server-sdk-go | docs |
| JavaScript (TypeScript) | server-sdk-js | docs |
| Ruby | server-sdk-ruby | |
| Java (Kotlin) | server-sdk-kotlin | |
| Python (community) | python-sdks | |
| PHP (community) | agence104/livekit-server-sdk-php | |

- CLI - command line interface & load tester

- Docker image

- Helm charts

> [!TIP]
> We recommend installing LiveKit CLI along with the server. It lets you access server APIs, create tokens, and generate test traffic.

The following will install LiveKit's media server:

brew install livekit

curl -sSL https://get.livekit.io | bash

Download the latest release here

Start LiveKit in development mode by running `livekit-server --dev`. It'll use a placeholder API key/secret pair.

API Key: devkey

API Secret: secret

To customize your setup for production, refer to our deployment docs

A user connecting to a LiveKit room requires an access token. Access tokens (JWT) encode the user's identity and the room permissions they've been granted. You can generate a token with our CLI:

lk token create \

--api-key devkey --api-secret secret \

--join --room my-first-room --identity user1 \

--valid-for 24h

Head over to our example app and enter a generated token to connect to your LiveKit server. This app is built with our React SDK.

Once connected, your video and audio are now being published to your new LiveKit instance!
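To make the access token described above more concrete, here is a minimal Python sketch of how an HS256-signed JWT carrying an identity and a room-join permission could be built with only the standard library. The claim names (`iss` for the API key, `sub` for the identity, and a `video` object with `roomJoin` and `room`) reflect our understanding of LiveKit's token format and are not stated in this README, and the helper names `create_token` and `decode_claims` are hypothetical. Treat it as an illustration of the idea, and use the CLI or an official server SDK for real tokens.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, the encoding JWT segments use."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign(message: str, api_secret: str) -> str:
    """HMAC-SHA256 signature of the message, base64url-encoded."""
    digest = hmac.new(api_secret.encode(), message.encode("ascii"), hashlib.sha256).digest()
    return b64url(digest)


def create_token(api_key, api_secret, identity, room, ttl_seconds=24 * 3600, now=None):
    """Build a signed JWT. Claim names are an assumption, see the note above."""
    now = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iss": api_key,            # which API key issued the token
        "sub": identity,           # the user's identity
        "nbf": now,                # not valid before
        "exp": now + ttl_seconds,  # expiry, 24h by default
        "video": {"roomJoin": True, "room": room},  # room permissions
    }
    segments = [
        b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    ]
    signing_input = ".".join(segments)
    return signing_input + "." + sign(signing_input, api_secret)


def decode_claims(token, api_secret):
    """Verify the signature and return the claims, or raise ValueError."""
    head, body, signature = token.split(".")
    if not hmac.compare_digest(signature, sign(head + "." + body, api_secret)):
        raise ValueError("signature does not match the API secret")
    padded = body + "=" * (-len(body) % 4)  # restore stripped padding
    return json.loads(base64.urlsafe_b64decode(padded))
```

Calling `create_token("devkey", "secret", "user1", "my-first-room")` mirrors the inputs of the `lk token create` command shown earlier, and `decode_claims` shows why the secret must stay on the server: anyone holding it can mint or validate tokens.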

lk room join \

--url ws://localhost:7880 \

--api-key devkey --api-secret secret \

--identity bot-user1 \

--publish-demo \

my-first-room

This command publishes a looped demo video to a room. Due to how the video clip was encoded (keyframes every 3s), there's a slight delay before the browser has sufficient data to begin rendering frames. This is an artifact of the simulation.
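The size of that delay can be estimated with a little arithmetic. The sketch below assumes that a viewer cannot render anything until the next keyframe arrives, that the pre-encoded clip cannot produce a keyframe on demand, and that viewers join at a uniformly random point in the loop. These are simplifying assumptions for illustration, not a description of LiveKit internals, and the function names are hypothetical.

```python
def keyframe_wait(join_time_s: float, keyframe_interval_s: float = 3.0) -> float:
    """Seconds a viewer joining at join_time_s waits for the next keyframe."""
    offset = join_time_s % keyframe_interval_s  # time since the last keyframe
    return 0.0 if offset == 0 else keyframe_interval_s - offset


def average_wait(keyframe_interval_s: float = 3.0, samples: int = 3000) -> float:
    """Average wait over join times spread evenly across one keyframe interval."""
    step = keyframe_interval_s / samples
    total = sum(keyframe_wait(i * step, keyframe_interval_s) for i in range(samples))
    return total / samples
```

Under these assumptions the wait is never longer than one keyframe interval (3 seconds here) and averages about half of it.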

LiveKit Cloud is the fastest and most reliable way to run LiveKit. Every project gets free monthly bandwidth and transcoding credits.

Sign up for LiveKit Cloud.

Read our deployment docs for more information.

Pre-requisites:

- Go 1.23+ is installed

- GOPATH/bin is in your PATH

Then run:

git clone https://github.com/livekit/livekit

cd livekit

./bootstrap.sh

mage

We welcome your contributions toward improving LiveKit! Please join us on Slack to discuss your ideas and/or PRs.

LiveKit server is licensed under Apache License v2.0.

| LiveKit Ecosystem | |
|---|---|
| LiveKit SDKs | Browser · iOS/macOS/visionOS · Android · Flutter · React Native · Rust · Node.js · Python · Unity · Unity (WebGL) · ESP32 |
| Server APIs | Node.js · Golang · Ruby · Java/Kotlin · Python · Rust · PHP (community) · .NET (community) |
| UI Components | React · Android Compose · SwiftUI · Flutter |
| Agents Frameworks | Python · Node.js · Playground |
| Services | LiveKit server · Egress · Ingress · SIP |
| Resources | Docs · Example apps · Cloud · Self-hosting · CLI |

Similar Open Source Tools

mindpocket

MindPocket is a fully open-source, free, multi-platform, one-click deployable personal bookmark system with AI Agent integration. It organizes bookmarks with AI-powered RAG content summarization and automatic tag generation, making it easy to find and manage saved content. The project is built using VIBE CODING principles and offers features like zero cost deployment, one-click deploy setup, multi-platform support, AI enhancement for smart tagging and summarization, and full open-source accessibility for user data ownership. The tool is designed to provide a seamless bookmarking experience across web, mobile, and browser extension platforms.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

LobsterAI

LobsterAI is an all-in-one personal assistant Agent developed by NetEase Youdao. It works around the clock to handle everyday tasks like data analysis, making presentations, generating videos, writing documents, searching the web, sending emails, and scheduling tasks. At its core is Cowork mode, which executes tools, manipulates files, and runs commands in a local or sandboxed environment. Users can also chat with the agent via various platforms and control it remotely from their phones. The tool features built-in skills, scheduled tasks, persistent memory, and cross-platform support.

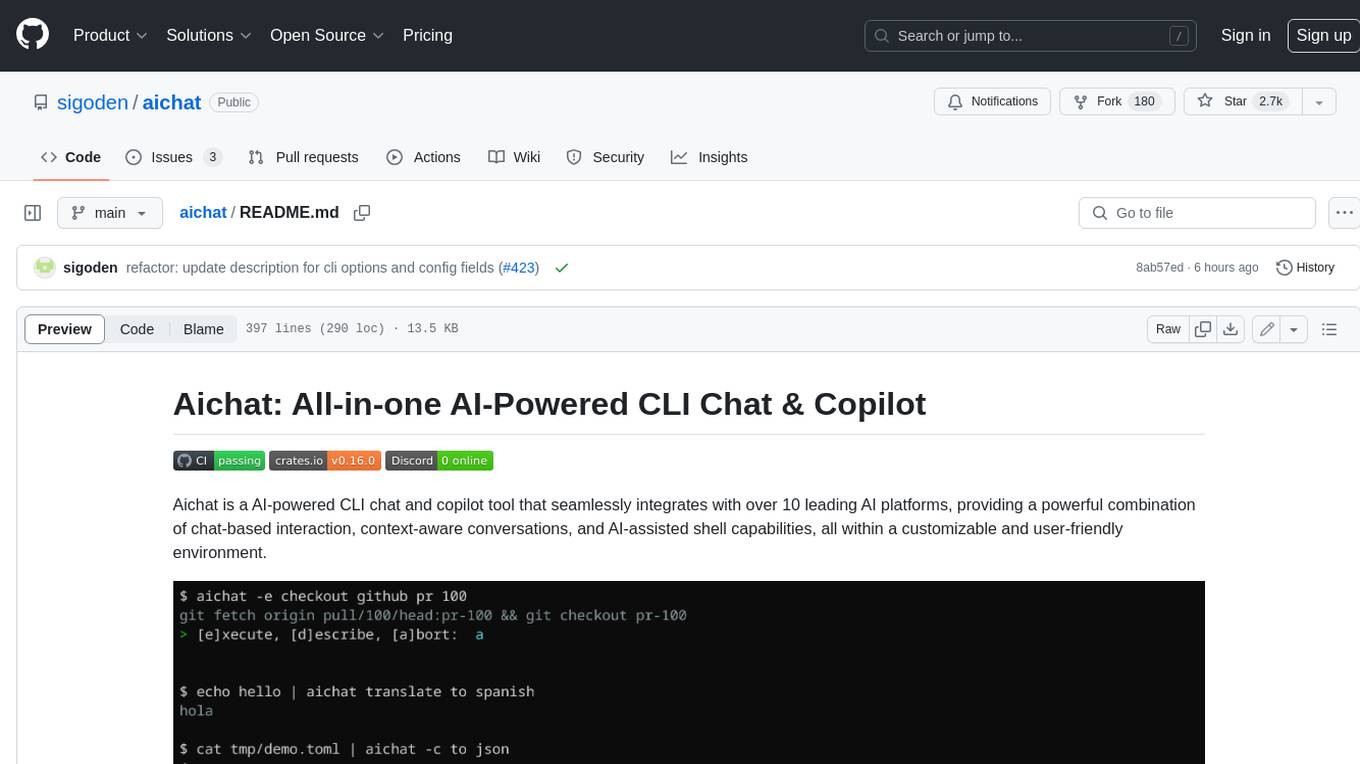

aichat

Aichat is an AI-powered CLI chat and copilot tool that seamlessly integrates with over 10 leading AI platforms, providing a powerful combination of chat-based interaction, context-aware conversations, and AI-assisted shell capabilities, all within a customizable and user-friendly environment.

kubesphere

KubeSphere is a distributed operating system for cloud-native application management, using Kubernetes as its kernel. It provides a plug-and-play architecture, allowing third-party applications to be seamlessly integrated into its ecosystem. KubeSphere is also a multi-tenant container platform with full-stack automated IT operation and streamlined DevOps workflows. It provides developer-friendly wizard web UI, helping enterprises to build out a more robust and feature-rich platform, which includes most common functionalities needed for enterprise Kubernetes strategy.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

MiniAI-Face-Recognition-LivenessDetection-ServerSDK

The MiniAiLive Face Recognition LivenessDetection Server SDK provides system integrators with fast, flexible, and extremely precise facial recognition that can be deployed across various scenarios, including security, access control, public safety, fintech, smart retail, and home protection. The SDK is fully on-premise, meaning all processing happens on the hosting server, and no data leaves the server. The project structure includes bin, cpp, flask, model, python, test_image, and Dockerfile directories. To set up the project on Linux, download the repo, install system dependencies, and copy libraries into the system folder. For Windows, contact MiniAiLive via email. The C++ example involves replacing the license key in main.cpp, building the project, and running it. The Python example requires installing dependencies and running the project. The Python Flask example involves replacing the license key in app.py, installing dependencies, and running the project. The Docker Flask example includes building the docker image and running it. To request a license, contact MiniAiLive. Contributions to the project are welcome by following specific steps. An online demo is available at https://demo.miniai.live. Related products include MiniAI-Face-Recognition-LivenessDetection-AndroidSDK, MiniAI-Face-Recognition-LivenessDetection-iOS-SDK, MiniAI-Face-LivenessDetection-AndroidSDK, MiniAI-Face-LivenessDetection-iOS-SDK, MiniAI-Face-Matching-AndroidSDK, and MiniAI-Face-Matching-iOS-SDK. MiniAiLive is a leading AI solutions company specializing in computer vision and machine learning technologies.

flowgram.ai

FlowGram.AI is a node-based flow building engine that helps developers create workflows in fixed or free connection layout modes. It provides interaction best practices and is suitable for visual workflows with clear inputs and outputs. The tool focuses on empowering workflows with AI capabilities.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

ovos-buildroot

OVOS - Buildroot OS is a minimalistic Linux OS designed to bring the open source voice assistant ovos-core to embedded, low-spec headless, and small touchscreen devices. It includes a full 64-bit distribution with Linux kernel 6.1.x, Buildroot 2023.02.x, and OVOS framework utilizing ovos-docker containers. The supported hardware includes Raspberry Pi 3, 3b, 3b+, Raspberry Pi 4, x86_64 Intel-based computers, and Open Virtual Appliance. The project is inspired by Mycroft AI, Buildroot, and HassOS, offering a platform for building voice assistant solutions on various devices.

yournextstore

Your Next Store is an open-source Next.js e-commerce platform designed for AI development. It offers a Stripe-native integration, ultra-fast page loads, and typed APIs. The codebase follows consistent patterns, provides blazing fast performance with Next.js 16 and edge caching, and allows direct API integration without the need for plugins. With a focus on AI coding tools, Your Next Store offers familiar patterns, typed APIs for Commerce Kit SDK methods, and a well-defined domain for commerce data models, making it easier for AI models to generate accurate suggestions and write correct code.

nexus

Nexus is a virtual filesystem server designed for AI agents, offering a unified path-based API that integrates files, databases, APIs, and SaaS tools. It provides built-in permissions, memory management, semantic search, and skills lifecycle management. The Nexus Control Panel allows humans to curate files, memories, permissions, and integrations for agents, ensuring oversight and control while agents focus on execution. The repository includes the open-source server, SDK, CLI, and examples, catering to the needs of AI-native filesystems for cognitive agents.

EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

off-grid-mobile

Off Grid is a complete offline AI suite that allows users to perform various tasks such as text generation, image generation, vision AI, voice transcription, and document analysis on their mobile devices without sending any data out. The tool offers high performance on flagship devices and supports a wide range of models for different tasks. Users can easily install the tool on Android by downloading the APK from GitHub Releases or build it from source with Node.js and JDK. The documentation provides detailed information on the system architecture, codebase, design system, visual hierarchy, test flows, and more. Contributions are welcome, and the tool is built with a focus on user privacy and data security, ensuring no cloud, subscription, or data harvesting.

For similar tasks

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, Metaso AI web search (full-web or academic scope, in concise, in-depth, or research mode), zero-configuration deployment, and multiple tokens. It is fully compatible with the ChatGPT interface, and seven other free APIs are also available. The tool provides various deployment options such as Docker, Docker Compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token liveness checks. Note: the reverse-engineered API is unstable, so using the official Metaso AI website is recommended to avoid the risk of being banned. This project is for research and learning purposes only, not for commercial use.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

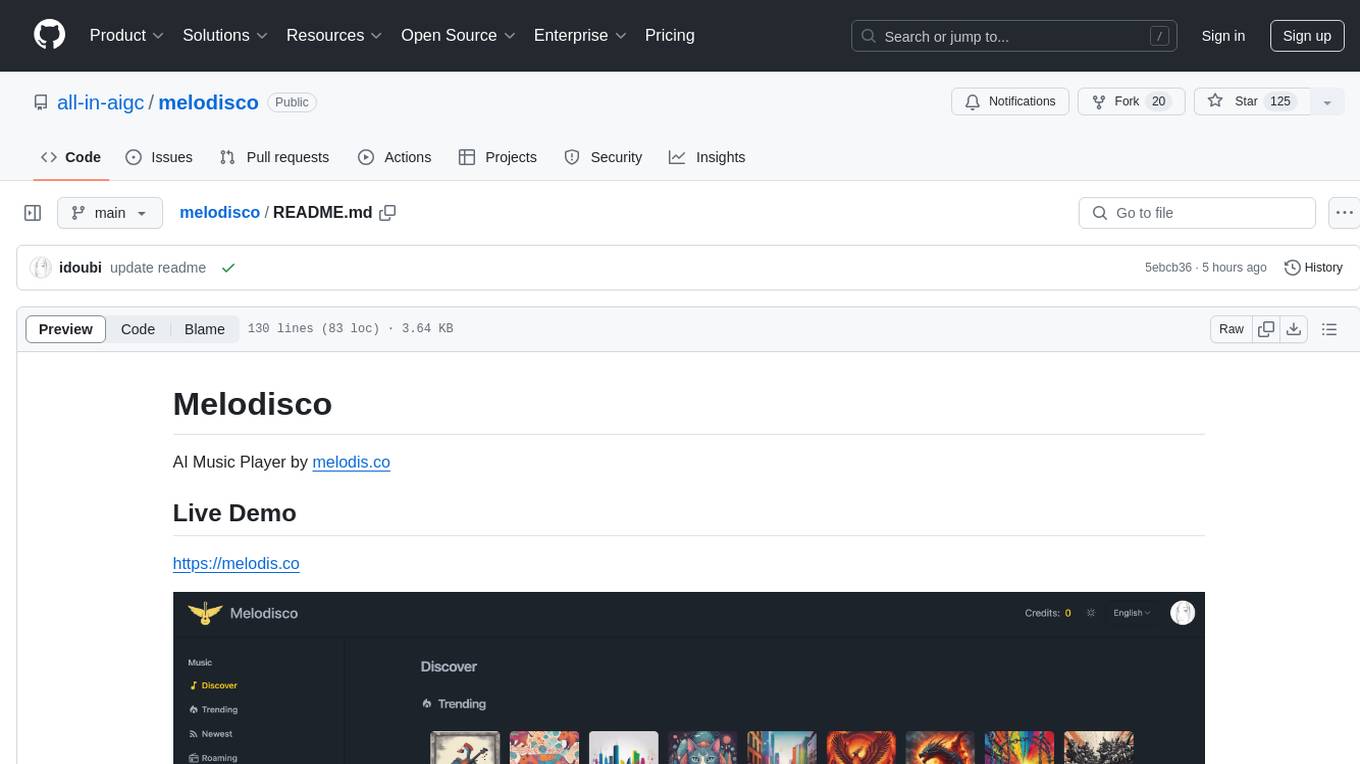

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system built on large language models (LLMs). It uses Retrieval-Augmented Generation (RAG) to improve knowledge generation and help users quickly build knowledge bases. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with GKE platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize utilization of resources.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for Prometheus, using the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.