binglish

简单的AI桌面英语程序。点亮屏幕,欣赏美景,邂逅知识,聚沙成塔。

Stars: 67

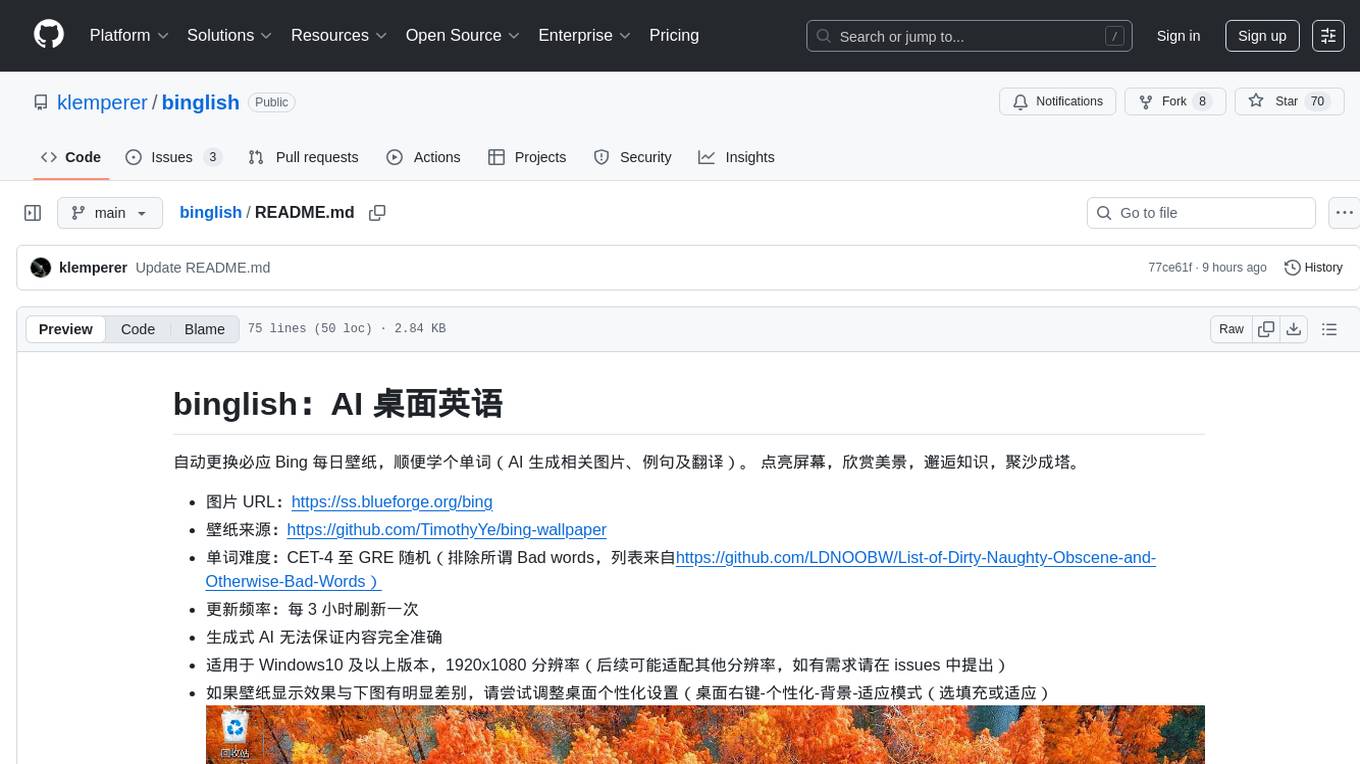

binglish is a desktop English learning tool that automatically changes the Bing daily wallpaper while helping users learn new words through AI-generated images, example sentences, and translations. Users can enjoy beautiful scenery, acquire knowledge, and build vocabulary towers. The tool excludes bad words and offers words ranging from CET-4 to GRE difficulty levels. It refreshes every 3 hours and is designed for Windows 10 and above with a resolution of 1920x1080. The AI-generated content may not be completely accurate.

README:

自动更换必应 Bing 每日壁纸,顺便学个单词(AI 生成相关图片、例句及翻译)。 点亮屏幕,欣赏美景,邂逅知识,聚沙成塔。

- 图片 URL:https://ss.blueforge.org/bing

- 壁纸来源:https://github.com/TimothyYe/bing-wallpaper

- 单词难度:CET-4 至 GRE 随机(排除所谓 Bad words,列表来自https://github.com/LDNOOBW/List-of-Dirty-Naughty-Obscene-and-Otherwise-Bad-Words)

- 更新频率:每 3 小时刷新一次

- 生成式 AI 无法保证内容完全准确

- 适用于 Windows10 及以上版本,1920x1080 分辨率(后续可能适配其他分辨率,如有需求请在 issues 中提出)

- 如果壁纸显示效果与下图有明显差别,请尝试调整桌面个性化设置(桌面右键-个性化-背景-适应模式(选填充或适应)

pip install -r requirements.txtgit clone https://github.com/klemperer/binglish/

cd binglish

pip install pyinstaller

bundle.bat打包后在dist目录下找到binglish.exe,或在项目releases中下载已打包的最新版本,双击运行。程序运行后将最小化至右侧任务栏中,可在右键菜单中选择开机自动运行。

也可以在命令行中执行以下命令以运行(不推荐,“检查更新”功能不可用):

python binglish.py在ipad“快捷指令”程序中新建快捷指令(参考https://www.icloud.com/shortcuts/f309786b43b0420f96c59602b8a0361f ,在ipad safari浏览器中打开),在“快捷指令-自动化”中设置特定时间运行上述快捷指令。该功能未充分测试,可能存在部分ipad机型无法显示墙纸全部内容等现象。

如果遇到如下问题:

D:\Repository\binglish>python binglish.py

Traceback (most recent call last):

File "D:\Repository\binglish\binglish.py", line 11, in <module>

import tkinter as tk

ModuleNotFoundError: No module named 'tkinter'

通常情况下是由于没有正确安装 tkinter 导致的。当您安装完整的 Python 解释器时,tkinter 会随之一起安装。但是,如果您使用的是自定义的 Python 版本,或者在安装时选择了最小化安装选项,那么 tkinter 可能没有被包含。 要解决这个问题,您需要单独安装 tkinter。您可以使用以下命令来安装 tkinter:

pip install tk如果您使用的是 Windows,并且已经安装了 Python,那么您可能需要以管理员身份运行命令提示符,然后使用以下命令来安装 tkinter:

python -m pip install tk安装完成后,您应该能够正常运行 binglish.py 脚本了。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for binglish

Similar Open Source Tools

binglish

binglish is a desktop English learning tool that automatically changes the Bing daily wallpaper while helping users learn new words through AI-generated images, example sentences, and translations. Users can enjoy beautiful scenery, acquire knowledge, and build vocabulary towers. The tool excludes bad words and offers words ranging from CET-4 to GRE difficulty levels. It refreshes every 3 hours and is designed for Windows 10 and above with a resolution of 1920x1080. The AI-generated content may not be completely accurate.

yoyak

Yoyak is a small CLI tool powered by LLM for summarizing and translating web pages. It provides shell completion scripts for bash, fish, and zsh. Users can set the model they want to use and summarize web pages with the 'yoyak summary' command. Additionally, translation to other languages is supported using the '-l' option with ISO 639-1 language codes. Yoyak supports various models for summarization and translation tasks.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

CosyVoice

CosyVoice is a tool designed for speech synthesis, offering pretrained models for zero-shot, sft, instruct inference. It provides a web demo for easy usage and supports advanced users with train and inference scripts. The tool can be deployed using grpc for service deployment. Users can download pretrained models and resources for immediate use or train their own models from scratch. CosyVoice is suitable for researchers, developers, linguists, AI engineers, and speech technology enthusiasts.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

auto-subs

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within Davinci Resolve. It works on Mac, Linux, and Windows, supporting both Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

org-ai

org-ai is a minor mode for Emacs org-mode that provides access to generative AI models, including OpenAI API (ChatGPT, DALL-E, other text models) and Stable Diffusion. Users can use ChatGPT to generate text, have speech input and output interactions with AI, generate images and image variations using Stable Diffusion or DALL-E, and use various commands outside org-mode for prompting using selected text or multiple files. The tool supports syntax highlighting in AI blocks, auto-fill paragraphs on insertion, and offers block options for ChatGPT, DALL-E, and other text models. Users can also generate image variations, use global commands, and benefit from Noweb support for named source blocks.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

QA-Pilot

QA-Pilot is an interactive chat project that leverages online/local LLM for rapid understanding and navigation of GitHub code repository. It allows users to chat with GitHub public repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM models such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

AI-Video-Boilerplate-Simple

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

E2B

E2B Sandbox is a secure sandboxed cloud environment made for AI agents and AI apps. Sandboxes allow AI agents and apps to have long running cloud secure environments. In these environments, large language models can use the same tools as humans do. For example: * Cloud browsers * GitHub repositories and CLIs * Coding tools like linters, autocomplete, "go-to defintion" * Running LLM generated code * Audio & video editing The E2B sandbox can be connected to any LLM and any AI agent or app.

Discord-AI-Chatbot

Discord AI Chatbot is a versatile tool that seamlessly integrates into your Discord server, offering a wide range of capabilities to enhance your communication and engagement. With its advanced language model, the bot excels at imaginative generation, providing endless possibilities for creative expression. Additionally, it offers secure credential management, ensuring the privacy of your data. The bot's hybrid command system combines the best of slash and normal commands, providing flexibility and ease of use. It also features mention recognition, ensuring prompt responses whenever you mention it or use its name. The bot's message handling capabilities prevent confusion by recognizing when you're replying to others. You can customize the bot's behavior by selecting from a range of pre-existing personalities or creating your own. The bot's web access feature unlocks a new level of convenience, allowing you to interact with it from anywhere. With its open-source nature, you have the freedom to modify and adapt the bot to your specific needs.

openedai-speech

OpenedAI Speech is a free, private text-to-speech server compatible with the OpenAI audio/speech API. It offers custom voice cloning and supports various models like tts-1 and tts-1-hd. Users can map their own piper voices and create custom cloned voices. The server provides multilingual support with XTTS voices and allows fixing incorrect sounds with regex. Recent changes include bug fixes, improved error handling, and updates for multilingual support. Installation can be done via Docker or manual setup, with usage instructions provided. Custom voices can be created using Piper or Coqui XTTS v2, with guidelines for preparing audio files. The tool is suitable for tasks like generating speech from text, creating custom voices, and multilingual text-to-speech applications.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, MacOS, and Termux for a responsive experience.

For similar tasks

binglish

binglish is a desktop English learning tool that automatically changes the Bing daily wallpaper while helping users learn new words through AI-generated images, example sentences, and translations. Users can enjoy beautiful scenery, acquire knowledge, and build vocabulary towers. The tool excludes bad words and offers words ranging from CET-4 to GRE difficulty levels. It refreshes every 3 hours and is designed for Windows 10 and above with a resolution of 1920x1080. The AI-generated content may not be completely accurate.

vocabulary-book-by-deepseek

Vocabulary Book by DeepSeek is a manual for CET-4, postgraduate entrance examination, and TOEFL vocabulary, providing word meanings, roots, example sentences, mnemonic aids, and mnemonic images. The project uses Cline + DeepSeek-R1-16b for over 80% of the code to automatically encode the vocabulary manual. The generated manual includes vocabulary from A to Z for CET-4, CET-6, postgraduate entrance examination, and TOEFL, along with features to generate Anki cards and PDFs. The tool also allows for the creation of mnemonic images for each word and articles.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.