AI-on-the-edge-device-docs

Github for hosting the documentation for the project: https://github.com/jomjol/AI-on-the-edge-device

Stars: 79

This repository contains documentation for the AI on the Edge Device Project. Users can edit Markdown documents in the 'docs' folder, create Pull Requests to merge changes, and Github Actions will regenerate the documentation on the 'gh-pages' branch. The documentation includes parameter documentation, template generation for new parameters, formatting options like boxes using the admonition extension, and local testing instructions using MkDocs.

README:

Go to https://jomjol.github.io/AI-on-the-edge-device-docs to use it.

This repo contains the documentation for the AI-on-the-Edge-Device Project.

- You can edit any

*.mddocument in the docs folder. - Then create a Pull Request for it to merge it into the

mainbranch (or edit it directly in themainbranch if you have the required rights). - When it got merged, the Github Actions will re-generate the documentation and place it in the

gh-pagesbranch. This branch automatically gets populated to the public Documentation Site

Each page has a link on its top-right corner Edit on GitHub which brings you directly to the Github editor.

- Add a new

*.mddocument in the docs folder. - Add the filename to the docs/nav.yml at the wished position in the Links section.

Each parameter in the main project repo is documented in a separate file, see https://github.com/jomjol/AI-on-the-edge-device/tree/rolling/param-docs. The script in param-docs/concat-parameter-pages.py collects them and compiles it into the documentation as provided in https://jomjol.github.io/AI-on-the-edge-device-docs/Parameters.

The script should be run whenever one of the pages changed.

This happens automatically daily in the Github action.

if you run it manually, make sure to clone the main repo first, eg. using:

cd param-docs

git clone https://github.com/jomjol/AI-on-the-edge-device.git

python concat-parameter-pages.pyThe script generate-template-param-doc-pages.py should be run whenever a new parameter gets added to the config file.

It then checks if there is already page for each of the parameters.

- If no page exists yet, a templated page gets generated.

- Existing pages do not get modified.

If the parameter is listed in expert-params.txt, an Expert warning will be shown.

If the parameter is listed in hidden-in-ui.txt, a Note will be shown.

Boxes can be shown using the admonition extension.

!!! Note

I am a note

Make sure to have 4-whitespace Intents!

Possible types: attention, caution, danger, error, hint, important, note, tip, and warning

See https://python-markdown.github.io/extensions/admonition/

To test it locally:

-

Clone this repo

-

Install the required tools (See also .github/workflows/build-docs.yaml):

pip install --upgrade pip pip install mkdocs mkdocs-gen-files mkdocs-awesome-pages-plugin mkdocs-material pymdown-extensions mkdocs-enumerate-headings-plugin -

In the main folder of the repo, call

mkdocs serve(and keep it running). This will locally generate the documentation. You can access it under http://127.0.0.1:8000/AI-on-the-edge-device-docs/Any change to the files will automatically be applied.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-on-the-edge-device-docs

Similar Open Source Tools

AI-on-the-edge-device-docs

This repository contains documentation for the AI on the Edge Device Project. Users can edit Markdown documents in the 'docs' folder, create Pull Requests to merge changes, and Github Actions will regenerate the documentation on the 'gh-pages' branch. The documentation includes parameter documentation, template generation for new parameters, formatting options like boxes using the admonition extension, and local testing instructions using MkDocs.

reai-ghidra

The RevEng.AI Ghidra Plugin by RevEng.ai allows users to interact with their API within Ghidra for Binary Code Similarity analysis to aid in Reverse Engineering stripped binaries. Users can upload binaries, rename functions above a confidence threshold, and view similar functions for a selected function.

aider-composer

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.

latex2ai

LaTeX2AI is a plugin for Adobe Illustrator that allows users to use editable text labels typeset in LaTeX inside an Illustrator document. It provides a seamless integration of LaTeX functionality within the Illustrator environment, enabling users to create and edit LaTeX labels, manage item scaling behavior, set global options, and save documents as PDF with included LaTeX labels. The tool simplifies the process of including LaTeX-generated content in Illustrator designs, ensuring accurate scaling and alignment with other elements in the document.

langgraph-studio

LangGraph Studio is a specialized agent IDE that enables visualization, interaction, and debugging of complex agentic applications. It offers visual graphs and state editing to better understand agent workflows and iterate faster. Users can collaborate with teammates using LangSmith to debug failure modes. The tool integrates with LangSmith and requires Docker installed. Users can create and edit threads, configure graph runs, add interrupts, and support human-in-the-loop workflows. LangGraph Studio allows interactive modification of project config and graph code, with live sync to the interactive graph for easier iteration on long-running agents.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

vault-ai

OP Vault is a tool that leverages the OP Stack (OpenAI + Pinecone Vector Database) to allow users to upload custom knowledgebase files and ask questions about their contents. It provides a user-friendly Golang server and React frontend for querying human-readable content like books and documents, making it valuable for knowledge extraction and question-answering. Users can upload entire libraries, receive specific answers with file and section references, and explore the power of the OP Stack in a practical interface.

verifAI

VerifAI is a document-based question-answering system that addresses hallucinations in generative large language models and search engines. It retrieves relevant documents, generates answers with references, and verifies answers for accuracy. The engine uses generative search technology and a verification model to ensure no misinformation. VerifAI supports various document formats and offers user registration with a React.js interface. It is open-source and designed to be user-friendly, making it accessible for anyone to use.

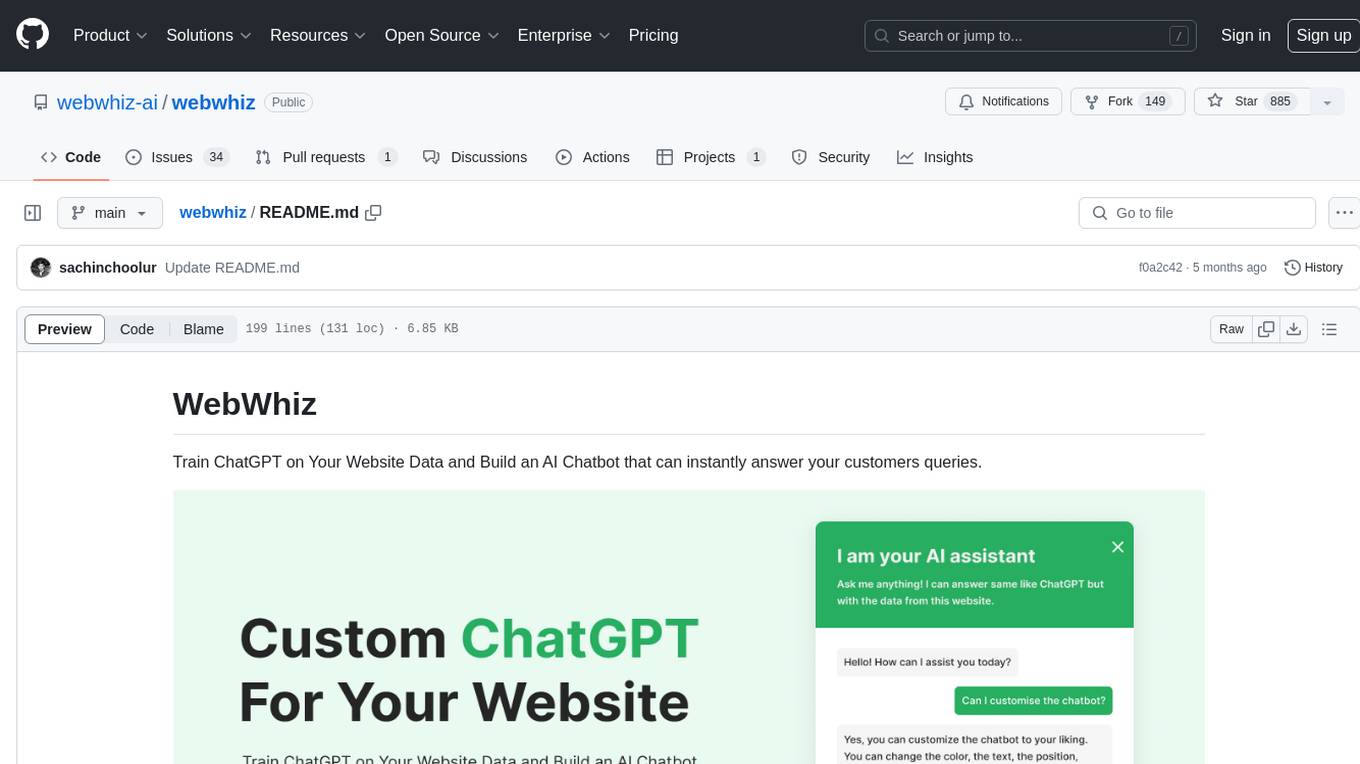

webwhiz

WebWhiz is an open-source tool that allows users to train ChatGPT on website data to build AI chatbots for customer queries. It offers easy integration, data-specific responses, regular data updates, no-code builder, chatbot customization, fine-tuning, and offline messaging. Users can create and train chatbots in a few simple steps by entering their website URL, automatically fetching and preparing training data, training ChatGPT, and embedding the chatbot on their website. WebWhiz can crawl websites monthly, collect text data and metadata, and process text data using tokens. Users can train custom data, but bringing custom open AI keys is not yet supported. The tool has no limitations on context size but may limit the number of pages based on the chosen plan. WebWhiz SDK is available on NPM, CDNs, and GitHub, and users can self-host it using Docker or manual setup involving MongoDB, Redis, Node, Python, and environment variables setup. For any issues, users can contact [email protected].

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

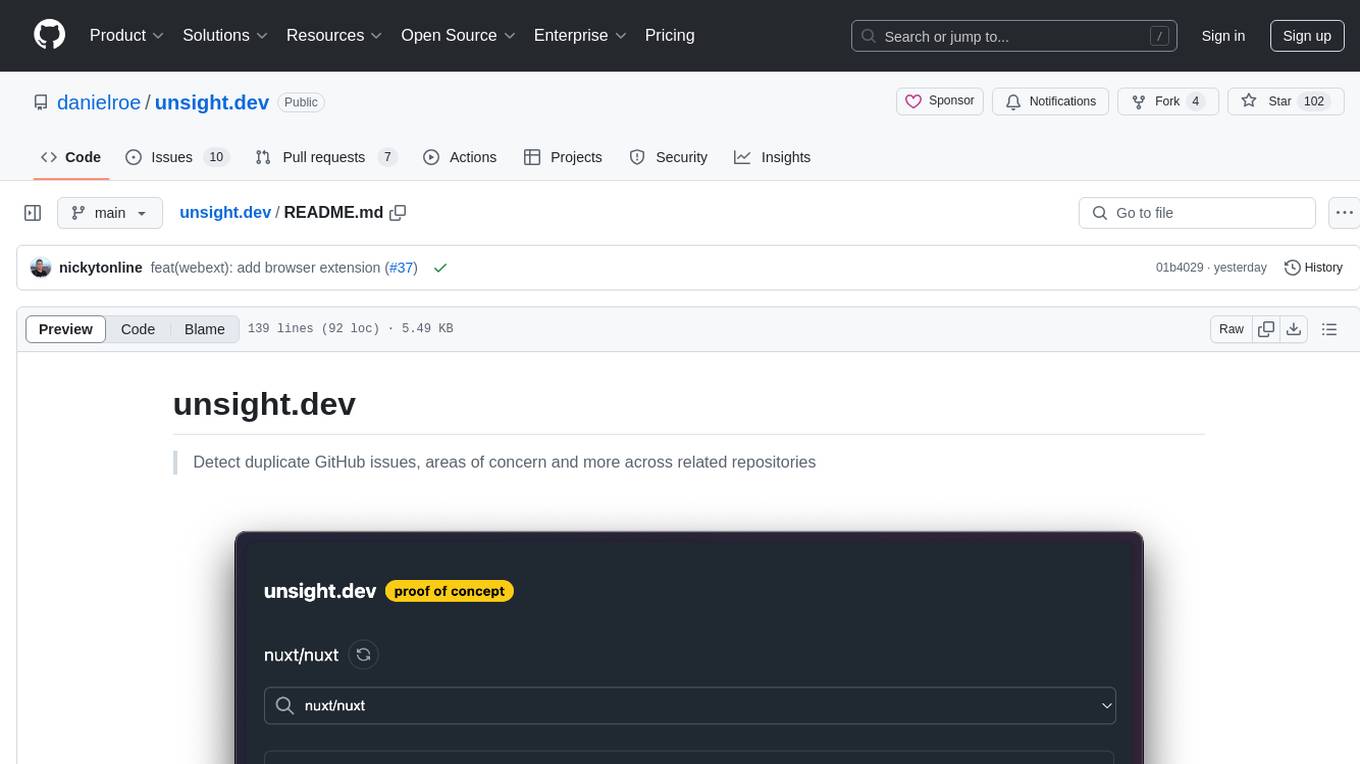

unsight.dev

unsight.dev is a tool built on Nuxt that helps detect duplicate GitHub issues and areas of concern across related repositories. It utilizes Nitro server API routes, GitHub API, and a GitHub App, along with UnoCSS. The tool is deployed on Cloudflare with NuxtHub, using Workers AI, Workers KV, and Vectorize. It also offers a browser extension soon to be released. Users can try the app locally for tweaking the UI and setting up a full development environment as a GitHub App.

open-source-slack-ai

This repository provides a ready-to-run basic Slack AI solution that allows users to summarize threads and channels using OpenAI. Users can generate thread summaries, channel overviews, channel summaries since a specific time, and full channel summaries. The tool is powered by GPT-3.5-Turbo and an ensemble of NLP models. It requires Python 3.8 or higher, an OpenAI API key, Slack App with associated API tokens, Poetry package manager, and ngrok for local development. Users can customize channel and thread summaries, run tests with coverage using pytest, and contribute to the project for future enhancements.

For similar tasks

AI-on-the-edge-device-docs

This repository contains documentation for the AI on the Edge Device Project. Users can edit Markdown documents in the 'docs' folder, create Pull Requests to merge changes, and Github Actions will regenerate the documentation on the 'gh-pages' branch. The documentation includes parameter documentation, template generation for new parameters, formatting options like boxes using the admonition extension, and local testing instructions using MkDocs.

documentation

Vespa documentation is served using GitHub Project pages with Jekyll. To edit documentation, check out and work off the master branch in this repository. Documentation is written in HTML or Markdown. Use a single Jekyll template _layouts/default.html to add header, footer and layout. Install bundler, then $ bundle install $ bundle exec jekyll serve --incremental --drafts --trace to set up a local server at localhost:4000 to see the pages as they will look when served. If you get strange errors on bundle install try $ export PATH=“/usr/local/opt/[email protected]/bin:$PATH” $ export LDFLAGS=“-L/usr/local/opt/[email protected]/lib” $ export CPPFLAGS=“-I/usr/local/opt/[email protected]/include” $ export PKG_CONFIG_PATH=“/usr/local/opt/[email protected]/lib/pkgconfig” The output will highlight rendering/other problems when starting serving. Alternatively, use the docker image `jekyll/jekyll` to run the local server on Mac $ docker run -ti --rm --name doc \ --publish 4000:4000 -e JEKYLL_UID=$UID -v $(pwd):/srv/jekyll \ jekyll/jekyll jekyll serve or RHEL 8 $ podman run -it --rm --name doc -p 4000:4000 -e JEKYLL_ROOTLESS=true \ -v "$PWD":/srv/jekyll:Z docker.io/jekyll/jekyll jekyll serve The layout is written in denali.design, see _layouts/default.html for usage. Please do not add custom style sheets, as it is harder to maintain.

Software

This repository contains the main software and firmware for controlling a fleet of autonomous soccer-playing robots competing in the RoboCup Small Size League. It includes guides for setting up the software, software architecture and design documentation, and resources for learning more about the RoboCup SSL.

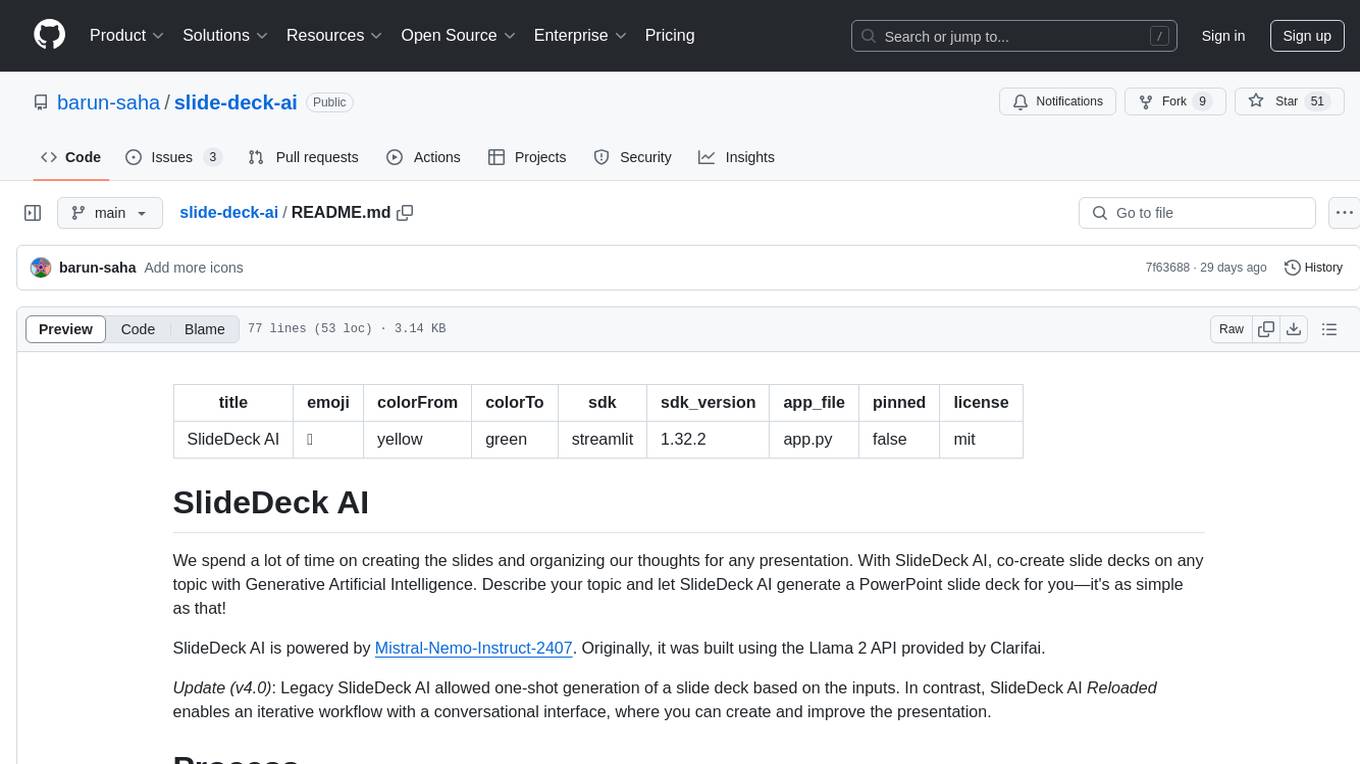

slide-deck-ai

SlideDeck AI is a tool that leverages Generative Artificial Intelligence to co-create slide decks on any topic. Users can describe their topic and let SlideDeck AI generate a PowerPoint slide deck, streamlining the presentation creation process. The tool offers an iterative workflow with a conversational interface for creating and improving presentations. It uses Mistral Nemo Instruct to generate initial slide content, searches and downloads images based on keywords, and allows users to refine content through additional instructions. SlideDeck AI provides pre-defined presentation templates and a history of instructions for users to enhance their presentations.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.